FORECASTS PREDICTIONS

Top executives share their industry and technology forecasts for 2022 and ahead.


The last two years were the warm-up years for digital transformation. 2022 looks all set to be the kick-off year, and enterprises need to get it right, this time without the overarching headache of pandemic disruptions. However, as every year, wherever there is a silver lining for the IT industry, there are also challenges.

The continuous attacks on IT infrastructure, and the failure of enterprise software to protect its integrity, have finally made a dent in enterprise sentiment. Alongside climate change, the 2021 Edelman Trust Barometer shows that trust in the technology sector is declining.

Through a supply chain exploit, rather than attacking 100 or 1,000 separate organisations, threat actors can successfully compromise a complete ecosystem through a single company.

The reputation and integrity of enterprise software vendors have dropped to such lows that Lior Div, CEO and Co-founder of Cybereason, categorically stated that organisations depending on Microsoft for security will make headlines in 2022.

According to Div, Microsoft will continue to be the primary focus for cyberattacks in 2022, and defenders need to understand the risk of relying on Microsoft to protect them. Microsoft has a dominant role across operating systems, cloud platforms and applications, which makes it almost ubiquitous.

Div adds that what we need to be aware of as we go into 2022 is the increasing cooperation and collaboration between threat actors.

According to Ettiene van der Watt at Axis Communications, companies must pay closer attention to their processes from end to end. Authenticity is becoming the next big hurdle in the age of data manipulation.

With some businesses facing more than 200,000 threats per year, it is impossible for humans alone to manage them, points out Gregg Petersen at Cohesity. Ransomware continues to be a powerful and potentially devastating type of cyberattack, and Ransomware as a Service (RaaS) continued to evolve during 2021.

A distributed workforce means that protecting a corporate network like a walled garden is no longer appropriate. The way forward will be provided by automation and artificial intelligence, and CISOs need to be aware of the potential of AI in their armoury, stresses Petersen.

The arrival of low-code platforms, data analytics and real-time insights will make 2022 an important year for data science. No-code and low-code tools will simplify and democratise AI. Alan Jacobson and David Sweenor from Alteryx point out that 2022 will be the year of the Chief Transformation Officer.

There is a skills gap between data scientists as practitioners and data scientists as teachers. Organisations will shift their mindsets towards sharing rather than hoarding data. With the continued democratisation of analytics, data scientists need to evolve from problem solvers to teachers.

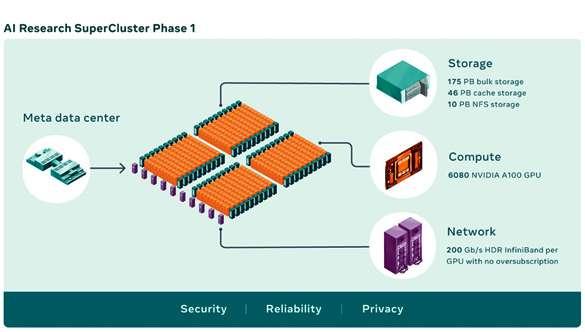

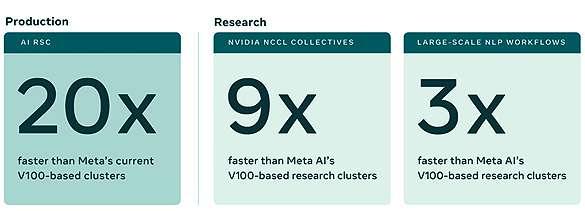

Turn these pages to learn more about forecasts and predictions for 2022 and ahead. Also, read about the launch and build-up of Meta’s Research SuperCluster.

Wishing you a super busy, ongoing events quarter and positive returns in business.

MANAGING DIRECTOR

TUSHAR SAHOO

TUSHAR@GECMEDIAGROUP.COM

EDITOR

ARUN SHANKAR

ARUN@GECMEDIAGROUP.COM

CEO

RONAK SAMANTARAY

RONAK@GECMEDIAGROUP.COM

GLOBAL HEAD, CONTENT AND STRATEGIC ALLIANCES

ANUSHREE DIXIT

ANUSHREE@GECMEDIAGROUP.COM

GROUP SALES HEAD

RICHA S RICHA@GECMEDIAGROUP.COM

EVENTS EXECUTIVE GURLEEN ROOPRAI GURLEEN@GECMEDIAGROUP.COM

JENNEFER LORRAINE MENDOZA JENNEFER@GECMDIAGROUP.COM

SALES AND ADVERTISING

RONAK SAMANTARAY

RONAK@GECMEDIAGROUP.COM

PH: + 971 555 120 490

DIGITAL TEAM

IT MANAGER

VIJAY BAKSHI

DIGITAL CONTENT LEAD DEEPIKA CHAUHAN

SEO & DIGITAL MARKETING ANALYST HEMANT BISHT

PRODUCTION, CIRCULATION, SUBSCRIPTIONS INFO@GECMEDIAGROUP.COM

CREATIVE LEAD AJAY ARYA

GRAPHIC DESIGNER RAHUL ARYA

DESIGNED BY

SUBSCRIPTIONS

INFO@GECMEDIAGROUP.COM

PRINTED BY

Al Ghurair Printing & Publishing LLC.

Masafi Compound, Satwa, P.O. Box 5613, Dubai, UAE

#203, 2nd Floor, G2 Circular Building, Dubai Production City (IMPZ)

Phone : +971 4 564 8684

31 FOXTAIL LAN, MONMOUTH JUNCTION, NJ - 08852 UNITED STATES OF AMERICA PHONE NO: + 1 732 794 5918

A PUBLICATION LICENSED BY International Media Production Zone, Dubai, UAE. © Copyright 2013 Accent Infomedia. All rights reserved. While the publishers have made every effort to ensure the accuracy of all information in this magazine, they will not be held responsible for any errors therein.

Authenticity is becoming next big hurdle

COVER STORY / 2022 and ahead: Forecasts and predictions

- ALAN JACOBSON, DAVID SWEENOR: 2022 will be the year of the Chief Transformation Officer
- AMEL GARDNER: Information and insights are required just in time
- ASHRAF YEHIA: Challenge for datacentres is not efficiency but sustainability
- DAVID BROWN: Attack surface management an important area in 2022
- DAVID HUGHES: Consumers are placing value on experiences over things
- DAVID NOËL: Appetite for digital services unlikely to reverse itself
- ETTIENE VAN DER WATT: Trust in the technology sector is declining globally
- FADI KANAFANI: In 2022 AI starts to permeate all industries
- FIRAS JADALLA: Spatial and video analytics to progress in 2022
- GREGG PETERSEN: CISOs need to be aware of potential of AI in their armoury
- ISSAM LACHGAR: Digital technologies can help meet 2030 and 2050 targets
- LIOR DIV: Organisations depending on Microsoft for security will make headlines
- MOUSSALAM DALATI: Retailers will have to create experiential retail
- OSAMA AL-ZOUBI: Data has ownership, sovereignty, privacy, compliance challenges
- RAJESH GANESAN: Hybrid work making network-based security obsolete
- SHERIFA HADY: Opportunities for channel in reducing network complexity
- SID BHATIA: Enterprise AI will be supplanted by everyday AI
- WOJTEK ŻYCZYŃSKI: Channel marketplaces driving away product catalogues
- And others

Here are some forward-looking forecasts about the role played by 5G, the cost of device connectivity, low earth orbit satellites and factory area networks.

There is so much happening in the Internet of Things world. With these predictions, we explain why demand for device connectivity is about to take off.

You will see a steady increase in IoT-connected device projects that require more than one method of connectivity, but costs are high and standards are lacking. One way to accelerate this would be for LPWAN providers to adhere to some standardisation, making it easier for IoT platform providers to support multiple vendors. However, this is not an attractive choice for the LPWAN providers, for the obvious reason that cheaper competitors could replace them.

What will make it happen is simply falling prices, which will make it possible to add multiple connectivity standards to a device cheaply. IoT platforms will be expected to abstract and normalise them, acting as the do-it-all Swiss Army knife.
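To make the "abstract and normalise" role more concrete, here is a minimal Python sketch of how an IoT platform might map messages arriving over different connectivity types onto one common record. The payload layouts, the field names (`dev_eui`, `payload_hex`, `imei`, `temp`) and the `Reading` type are hypothetical, invented for illustration; real LPWAN and cellular payload formats vary by vendor and network.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Reading:
    """Common record that downstream applications consume, whatever the transport."""
    device_id: str
    metric: str
    value: float
    transport: str


def from_lpwan(msg: dict) -> Reading:
    # Hypothetical LPWAN uplink: hex payload whose first two bytes are a signed,
    # big-endian temperature in tenths of a degree Celsius.
    raw = bytes.fromhex(msg["payload_hex"])
    tenths = int.from_bytes(raw[0:2], "big", signed=True)
    return Reading(msg["dev_eui"], "temperature_c", tenths / 10, "lpwan")


def from_cellular(msg: dict) -> Reading:
    # Hypothetical cellular device that already reports a decimal temperature.
    return Reading(msg["imei"], "temperature_c", float(msg["temp"]), "cellular")


# One adapter per connectivity type; supporting a new type means adding an entry.
ADAPTERS: Dict[str, Callable[[dict], Reading]] = {
    "lpwan": from_lpwan,
    "cellular": from_cellular,
}


def normalise(transport: str, msg: dict) -> Reading:
    """Dispatch a raw message to the adapter registered for its transport."""
    adapter = ADAPTERS.get(transport)
    if adapter is None:
        raise ValueError(f"no adapter registered for transport {transport!r}")
    return adapter(msg)
```

In this design, adding another connectivity standard means registering one more adapter, while applications that consume `Reading` stay unchanged.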

There will be huge hype around low earth orbit satellites for connectivity, but it may be short-lived. A low earth orbit satellite is an object that orbits the Earth at lower altitudes than geosynchronous satellites, normally between 160 km and 1,000 km above the Earth. They are commonly used for communications, military reconnaissance, spying and other imaging applications.

In 2022 we will see many more launches, where companies are aiming for complete worldwide connectivity at fast speeds and with low latency.

Although there will be huge hype around it in 2022, we predict that it might not stick. Why?

The use cases are based on unlocking access to remote locations, and 95% of them are in agriculture. While satellite connectivity technology for devices is relatively cheap, the lack of variety in use cases raises the question of how to achieve full-scale adoption in the long run.

Once the hype settles, it will find a niche in other areas where cellular coverage is difficult, for example in Australia or Brazil. As such, it will at most be a complementary technology.

In 2022, uptake of private 5G for IIoT will be slower than the market expected. One of the reasons is that, while 5G adoption has been slow, other technologies, such as the new Wi-Fi 6 standard, which offers up to four times the throughput of Wi-Fi 5, are catching up.

Therefore, the question is whether 5G’s most-hyped use case, factory area networks (FANs), is really going to take off. Sure, very large areas such as airports and harbours will benefit from 5G, but factories might be tempted to stick with Wi-Fi coverage. Most assets might already have Wi-Fi connectivity rather than 5G connectivity, so the business case will likely not be strong enough to upgrade a brownfield site.

IoT platforms will be expected to abstract and normalise them, acting as the do-it-all Swiss Army knife

BART SCHOUW, Chief Evangelist in CTO Office, Software AG.

Drawing a line to represent your organisation’s digital perimeter is problematic since such perimeters are not expanding outward like a balloon filling up with air.

JAAFARAWI, Managing Director, Middle East, Qualys.

A recent VMware report showed that 80% of security professionals had experienced increased attack levels in their organisation because of remote work. The pandemic did not cause a spike in cyber-incidents. It caused a spike in digital transformation, which expanded the opportunities for attackers.

As we start to consider our new normal as just normal, there are a few challenges we still must overcome.

Drawing a line, even in an abstract sense, to represent your organisation’s digital perimeter is deeply problematic. Such perimeters are not simply expanding outward like a balloon filling up with air. Entirely new balloons, such as third-party networks and the private homes of employees, are joining the environment, as are new factory- and field-based devices that make up the rapidly expanding Internet of Things.

Remote work, for example, is a necessary component of today’s world. Hybrid environments will remain, so CISOs must form a plan of action for managing them that retains the flexibility they have added while diluting the risk they pose.

Finally, security leaders must justify their budget spending. They must target areas of improvement, balancing cost with value added. They must weigh issues such as talent shortages against the pressing concerns of discovering, auditing and securing new digital assets, from field and factory machinery and traditional endpoints to cloud environments and containerised apps.

Automation is the standout quick win for today’s embattled CISO. Assuming regional technology stakeholders have been able to assemble a security team of any size, that team is likely to be overworked in the post-Cloud Rush era. Overwhelmed by false positives and preoccupied with firefighting, these professionals, recruited for their ability to add value, are instead succumbing to alert fatigue.

Automation can be applied to several areas that are traditionally labor-intensive. It can sift through telemetry in a fraction of the time it would take a human agent to do so. And it can be put to work in asset discovery, compiling a rich and accurate inventory that gives security teams a baseline from which to understand their new environment. Next, automation can get to work on auditing discovered assets and triaging them for action, whether that is further investigation by a human resource or immediate patching of a known vulnerability.
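The discover, audit and triage sequence described above can be sketched in a few lines of Python. This is an illustrative sketch only: the `Asset` and `Vulnerability` records, the placeholder identifiers and the triage rules themselves (unknown assets and unpatchable flaws go to a human, patchable flaws go straight to patching) are assumptions made for the example, not a description of any vendor's product.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Vulnerability:
    vuln_id: str           # placeholder identifier, not a real CVE
    patch_available: bool  # True if a vendor fix is known to exist


@dataclass
class Asset:
    name: str
    in_baseline: bool  # False for assets newly found by automated discovery
    vulnerabilities: List[Vulnerability] = field(default_factory=list)


def triage(asset: Asset) -> str:
    """Return 'investigate', 'patch' or 'monitor' under the illustrative rules."""
    if not asset.in_baseline:
        # Unknown asset: a human should confirm what it is before anything else.
        return "investigate"
    if any(v.patch_available for v in asset.vulnerabilities):
        # Known vulnerability with a fix: queue for immediate patching.
        return "patch"
    if asset.vulnerabilities:
        # Vulnerable but no fix yet: needs a human to plan mitigation.
        return "investigate"
    return "monitor"


def build_queues(assets: List[Asset]) -> Dict[str, List[str]]:
    """Group asset names by the action the triage rules assign them."""
    queues: Dict[str, List[str]] = {"patch": [], "investigate": [], "monitor": []}
    for asset in assets:
        queues[triage(asset)].append(asset.name)
    return queues
```

Only the "investigate" queue needs analyst time here, which is the point the article makes about freeing overworked teams from routine work.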

Visibility alone, gained by automated asset discovery, is priceless. Remote devices, cloud workloads, the activity within containers — all this and more should be transparent to CISOs and their teams. Zero Trust network architectures are also becoming popular. Other challenges, such as how to match the speed and ferocity of the attack landscape, can be met through advanced AI. Technologies like machine learning have proved themselves capable of drastically shrinking response times. They comb through lakes of data and flag threats in real time, reducing the number of false positives and further making the case for automation.

Tools are improving. Security vendors are starkly aware of the growing need for forward-looking digital strategies among their customers and have responded by raising their game once again to outwit bad actors. Cloud-oriented, container-sensitive security platforms are now capable of advanced prevention, detection, and response, including automatic asset discovery and inventory management, machine-controlled patching, and more streamlined compliance management.

Formjacking is appealing to cybercriminals because it is a way to extract data and stands to get a boost from hacking-as-a-service and affiliate models.

Much of the discussion about the threat landscape in 2021 has been about either ransomware or high-consequence vulnerabilities in enterprise software. Amidst all this excitement, it has been easy to overlook the risk to e-commerce from formjacking attacks such as Magecart.

In brief, formjacking is an attack type where threat actors inject a malicious script into an e-commerce site, which then captures and extracts credit card information during the payment process.

While attackers have been using this technique against a progressively broader range of targets than strictly e-commerce sites, including public utilities and professional organisations, e-commerce is still the prime target because that is where the easiest money is. In 2020, more than half of retail data breaches in the US were attributable to formjacking attacks.

One significant factor that makes these attacks so popular is the potential to inject a script once into an outsourced payment platform provider and have it served to all of the target’s customers, harvesting their credentials. This extracts a huge amount of value for little work, a variation on the supply chain attack approach that has caused so many headaches in the last few years.

Formjacking is appealing to cybercriminals because it is a way to quickly extract valuable and easily saleable data. Now, however, it stands to get an additional boost from the growing trend of hacking-as-a-service and affiliate models. Most of the attention on this model is currently focused on Ransomware-as-a-service. This approach lets ransomware developers focus on development and outsource every other part of the attack to affiliates, who often rent the malware. And this trend is not limited to ransomware.

A growing number of intelligence sources indicate that specialisation and division of labor in the attacker community is becoming more common and more intensive, meaning that there are now separate experts focusing on specific areas such as gaining an initial foothold, establishing persistence, evading detection, and so on, whose services are all for sale.

This is particularly important for formjacking because the greatest degree of variation between Magecart variants lies in the methods of initial entry and detection evasion. So far, each formjacking threat actor has needed to figure out every aspect of their own attack vector.

This means that attack chains that are powerful at one stage might be hamstrung by limitations at another stage. For example, clever injection techniques might be undone by noisy extraction of information or an unsuccessful attempt to masquerade as a Google Analytics or reCAPTCHA script.

As specialisation and division of labor accelerate in the attacker community, there is potential for each attack to feature the best practices in each phase of the attack chain.

Formjacking is not the single most prevalent attack technique around, nor is it the most devastating. However, in terms of a coherent pattern between attacker, technique, and target, it is one of the most clear and focused. Attackers prefer payment cards to other kinds of stolen data because they are easily monetised.

We probably cannot collectively reduce the risk of formjacking to zero, but with some situational awareness and a few extra controls, you can at least determine what happens on your own e-commerce site, which is, of course, a fine place to start.
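As one example of the kind of extra control that could help here (our assumption, not a method the article names), a site owner could periodically inventory the external scripts served on a checkout page and flag any loaded from a host that is not on an approved list. The minimal Python sketch below uses only the standard library; the allowlist and the example hosts are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Set
from urllib.parse import urlparse


class ScriptSrcCollector(HTMLParser):
    """Records the src attribute of every <script> tag fed to the parser."""

    def __init__(self) -> None:
        super().__init__()
        self.sources: List[str] = []

    def handle_starttag(self, tag: str, attrs: List[tuple]) -> None:
        if tag == "script":
            src: Optional[str] = dict(attrs).get("src")
            if src:
                self.sources.append(src)


def unexpected_scripts(html: str, allowed_hosts: Set[str]) -> List[str]:
    """Return external script URLs whose host is not on the allowlist.

    Relative URLs have no host and are treated as first-party. Hosts must match
    exactly, so a lookalike such as 'google-analytics.com.example.net' is flagged.
    """
    collector = ScriptSrcCollector()
    collector.feed(html)
    collector.close()
    flagged = []
    for src in collector.sources:
        host = urlparse(src).hostname
        if host is not None and host not in allowed_hosts:
            flagged.append(src)
    return flagged
```

This only covers externally hosted scripts. Inline code injected into the page, or a malicious change to a script on an allowed host, would not be caught, which is why browser mechanisms such as Content Security Policy and Subresource Integrity are commonly layered on top of an inventory like this.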

Intelligence sources indicate specialisation and division of labor in the attacker community is becoming common

OPSWAT, ondeso and EMT, in association with the Global CIO Forum, organised a virtual summit, Protect Your Critical Infrastructure, on 26th January 2022. The conference focused on critical infrastructure: systems that are vital to a company or country and whose incapacity would have a disastrous impact.

In the IT world, critical infrastructure is commonly associated with operational technology (OT), the practice of using hardware and software to control industrial equipment.

For the past couple of years, threat actors have targeted organisations in energy, oil and gas and utility sectors. Cyberattacks on critical infrastructure have become increasingly complex and disruptive, causing systems to shut down.

ICS environments can also serve as a gateway into enterprise and government networks, which frequently hold sensitive data as well as classified security information. Simply put, it is because of such high stakes that critical infrastructure organisations need an abundance of qualified, highly skilled cybersecurity professionals to help identify, mitigate and remediate threats of all types.

Critical infrastructure security is highly important in protecting systems and services that are essential to society and the economy.

Nandini Sapru, Vice President of Sales, EMT, welcomed all the speakers and attendees. “It is what makes a company’s data or network so critical, that in case of a calamity a lot of people and a lot of data and a lot of situations would be affected.”

Fawad Laiq, Senior Technical Manager, emt Distribution, outlined the challenges faced in OT networks and said, “Many of the OT Networks have a lot of legacy systems. Their updates, patching and management is not that easy because of the restrictions including physical and even the logical restriction which we have in a place.”

Oren Dvoskin, Vice-President of OT and Industry Marketing, OPSWAT, talked about 2020-2021 OT/ICS security trends, OPSWAT OT and industrial cybersecurity, OPSWAT Netwall, and unidirectional file transfers.

Vincent Turmel, Senior Director of OT Product Sales Engineering, OPSWAT, highlighted a few essential points, including unidirectional file transfers, OT/ICS data replication, data centre security, a case study on electricity distribution, and OPC UA replication.

Peter Lukesch, COO, ondeso, highlighted the hidden potential of strategic IT management in industrial environments for avoiding vulnerabilities and improving reliability and quality, and discussed major errors in operational technology and information technology solutions.

Arun Shankar, Editor, GEC Media Group, conducted a question-and-answer session with Oren Dvoskin, Vincent Turmel, Nandini Sapru, and Peter Lukesch.

The event concluded with a quiz for the attendees, and the winner, Megha Arora, won an iPhone 13.

Proven Robotics announced the launch of its first robotics and technical service centre in Riyadh, Saudi Arabia. The new service centre will help customers enhance strategic sales and achieve their technical goals, while benefiting from end-to-end local support and expertise in robotics and advanced technologies.

The new facility builds on Proven Robotics’ reputation for delivering efficient and innovative robotics solutions. It will offer a wide range of services, including original spare parts, onsite troubleshooting, in-house maintenance from qualified and technically certified teams, and the installation and configuration of robots.

The newly launched facility will enable shorter response times for customers and empower them to meet digital transformation goals, while deepening advanced technology expertise within the Kingdom

As a first-of-its-kind service centre for Proven Robotics, the new facility strengthens the company’s operations within the region at a time when demand for advanced technologies is on the rise worldwide.

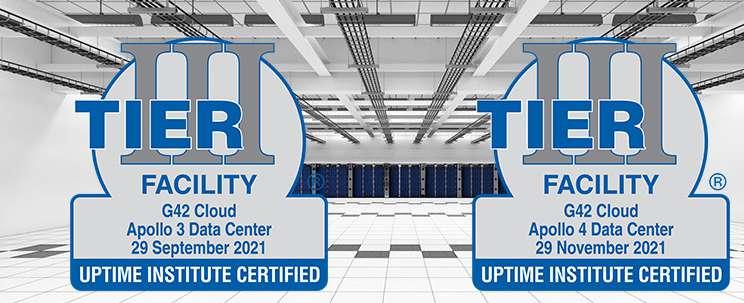

Khazna Datacentres, the UAE’s largest data centre provider, achieved the Uptime Institute’s Tier III certification of Constructed Facility for its Apollo 3 and Apollo 4 datacentres.

In meeting today’s data centre demands, innovative requirements come in the form of speed, higher density, modularity, energy efficiency, sustainability and scalability, while remaining secure and highly available. The wholesale model adopted by Khazna Datacentres addresses enterprise data centre requirements by offering highly secure, ultra-modern wholesale datacentres that are fully equipped with the latest technologies. These can be customised and scaled as customers grow, allowing for faster time to market.

With the increased digitalisation of processes, along with a growing demand for operational readiness, agility and availability of IT systems and infrastructure, datacentres are becoming more critical in accommodating these developments. The datacentres, which form an integral part of the 108MW data centre, focus on improving design efficiencies and eliminating anomalies, ensuring adherence to best practices in data centre built environments and the implementation of effective controls that protect critical infrastructure from environmental hazards and human errors.

Khazna Datacentres is setting up efficient design and delivery at a quicker pace, halving the time it takes to get a data centre operational compared with traditional construction methods. Khazna Datacentres continues to empower customers and partners to accelerate their digital transformation journeys, re-imagine new ways of working, and optimise operations.

Uptime Institute’s Tier III accreditation was achieved after a diligent assessment and evaluation by expert teams from Uptime Institute. This certification assesses data centre reliability, availability, maintainability, and overall performance needed to provide continuous and efficient operations.

StarLink announced a distribution partnership with Anomali, the leader in intelligence-driven extended detection and response cybersecurity solutions. With Anomali added to StarLink’s portfolio, joint customers’ security teams will gain in-depth visibility over all threats, automate threat blocking and achieve faster response times.

This new partnership will provide organisations across MEA with access to the award-winning Anomali portfolio, an innovative suite of products that leverages global intelligence to empower security teams with the precision attack detection and optimised response needed to stop immediate and future breaches and attackers.

Included in the offering are Anomali ThreatStream, a leading threat management platform; Lens, a Natural Language Processing (NLP) extension that identifies all threats in any web content to operationalise it across security infrastructures; and Match, an advanced XDR attack detection and response solution that quickly identifies and responds to threats in real time by automatically correlating all security telemetry data against active threat intelligence.

StarLink, with its unique GTM strategy, regional on-ground and technical expertise, and extensive partner network, will help build a robust market expansion plan for Anomali in the region to meet customers’ growing cybersecurity requirements as well as enhance Anomali’s market presence.

Enova announced its expansion into Turkey with the signing of its latest contract with Munzur Su. The expansion will see Enova securing new contracts and opening a new office as part of its growing regional operations.

Enova’s expansion follows a successful year, with new EPC, solar and FM contracts, including a long-term commitment with Dubai Metro aligned with Roads and Transport Authority (RTA) and public health regulations. Enova has also signed with TECOM Group, a member of Dubai Holding, and with Tarshid in Saudi Arabia, alongside existing contract renewals. Enova’s regional growth has been accelerated by the increased adoption of the energy services company model in the GCC and beyond, with companies financing their energy management strategy through the savings that they achieve.

Enova specialises in providing its clients with comprehensive and performance-based energy and facilities management solutions, paving the way to achieve financial, operational, and environmental targets. The company functions as an O&M provider, acting as a key enabler of energy efficiency in the industrial sector.

Quantum is partnering with value-added distributor Mobius to deliver its security and video surveillance infrastructure portfolio across the Middle East and Africa regions.

The partnership allows Mobius, a distributor within the video surveillance market in MEA, to now offer customers Quantum’s entire security infrastructure portfolio, from high-performance NVRs, to hyperconverged infrastructure, to the largest shared storage and archive solutions, and analytics processing. Customers looking to build or upgrade their CCTV and physical security systems across the region can now access Quantum’s flagship video surveillance offerings, including:

- VS-NVR Series Network Video Recording Servers
- VS-HCI Series Surveillance Recording Appliances
- VS1110-A Application Server
- VS2108-A Analytics Server

Equinix announced its expansion into Africa through its intended acquisition of MainOne, a leading West African data centre and connectivity solutions provider, with presence in Nigeria, Ghana and Côte d’Ivoire. The acquisition is expected to close in Q1 2022, subject to the satisfaction of customary closing conditions, including the requisite regulatory approvals.

The transaction has an enterprise value of $320M and is expected to be AFFO accretive upon close, excluding integration costs, marking the first step in Equinix’s long-term strategy to become a leading African carrier-neutral digital infrastructure company. With more than 200 million people, Nigeria is Africa’s largest economy and, along with Ghana, has become an established data centre hub. This makes the acquisition a pivotal entry point for Equinix into the continent.

Equinix believes MainOne to be one of the most exciting technology businesses to emerge from Africa. Founded by Funke Opeke in 2010, the company has enabled connectivity for the business community of Nigeria and now has digital infrastructure assets including three operational datacentres, with an additional facility under construction expected to open in Q1 2022. Upon closing, these facilities will add more than 64,000 gross square feet of space to Platform Equinix, with 570,000 square feet of land for future expansions.

MainOne owns and operates a subsea network from Nigeria to Portugal, as well as 1,200 kilometers of reliable terrestrial fiber network across southern Nigeria. These are all improving connectivity to and from Europe, West African countries and the major business communities in Nigeria. When completed, this acquisition will extend Platform Equinix into West Africa, giving organisations based inside and outside of Africa access to one of the world’s fastest growing markets.

Tata Consultancy Services launched a pioneering All-Women Innovation Lab in Riyadh to collaborate with startups and universities and provide a platform for students to explore and innovate with new technologies. The new innovation lab promotes digital skill development and supports the Kingdom of Saudi Arabia’s Vision 2030.

The inaugural ceremony was chaired by Dr Ahmed Althnayyan, Deputy Minister for Future Jobs and Digital Entrepreneurship, Ministry of Communication and Information Technology, Saudi Arabia, and attended by dignitaries from Saudi ministries, semi-government agencies, as well as CXOs and directors from top Saudi banking and telecom companies.

The TCS All-Women Centre is a unique business model developed to serve customer needs and help local communities.

It harnesses the best of TCS’ expertise to train and develop professional capabilities in Saudi women to support them in pursuing fulfilling careers and realising their potential in the Kingdom.

The centre provides long-term career opportunities in the fields of finance and accounting, human resource operations, supply chain management, and IT and digital-related services, and helps customers across the globe. Recently, it won the Bronze Trophy at the King Khalid Sustainability Awards 2021 for ensuring global sustainability standards and practices.

Siemon hosted an African channel and service provider partner event at the stunning Southern Palm Beach Resort in Mombasa, Kenya. The three-day event took place in early December and provided an opportunity for Siemon and its longstanding distribution partner, Mart Networks Group, to reconnect with all of their partner companies and thank them for their continual hard work during these challenging past two years.

Representatives from partners across the continent accepted the invitation to beautiful Diani Beach at the edge of the Indian Ocean to meet in a relaxed environment, strengthen business relations and learn more about Siemon’s innovative IT infrastructure portfolio.

The meeting agenda included a conference, an excursion to Kisite Marine Park and Wasini Island, where there were plenty of opportunities to watch dolphins and snorkel in the pristine waters, and a Kenyan barbecue dinner.

At the evening conference, Siemon’s global intelligent building solutions specialist Bob Allan shared Siemon’s knowledge on the latest technology trends impacting intelligent building infrastructure and the company’s solutions portfolio for smart buildings. He was followed by Gary Bernstein, Siemon’s global data centre solutions specialist, who discussed key infrastructure considerations, including single-mode and multimode fibre cabling options, as enterprise data centres look to adopt next-generation speeds.

The event also celebrated the successes that Siemon and its long-standing channel partners are enjoying in Africa. As the continent continues to experience broad investment in the data centre sector, Siemon has firmly established itself as a leading provider of quality data centre design services and cutting-edge IT infrastructure solutions within the finance, education and colocation data centre markets. The company is set to grow its footprint in the region in 2022, as growth in internet penetration and rising demand for cloud-based services will result in further data centre development.

CyberKnight announced its financial results for FY2021. In its second year of operation, CyberKnight processed more than $29 million in orders, realising a revenue increase of more than 200% over the previous year.

This notable achievement and the rapid growth are the result of substantial investment into high-caliber business development and technical local teams across the Middle East, a keen focus on expanding the breadth of the channel partner ecosystem, successfully delivering extensive high-ROI marketing activities, maintaining strategic customer relationships, and effectively building out a market-leading Zero Trust Security focused portfolio offering.

A10 Networks announced significant ongoing success achieved by its channel programme in 2021, with 23 new strategic partners signed up in the last year and plans to further develop channel initiatives in 2022.

At the start of 2021, A10 Networks refocused its five key strategic channel pillars encompassing building ecosystems, channel enablement, lead generation activities, deal registration and working with distribution. At the end of 2021, A10 Networks signed up 23 new partners as a result of this focus on its channel, which now comprises over 80 partners and 30 distributors.

Furthermore, A10 Networks continues to work with strategic alliance partners, Dell and Ericsson. These alliances will be a key focus in 2022 driving combined technology solutions that deliver better business outcomes for customers. New business development initiatives are underway within the distribution community with joint-funded resources assigned in territories such as the Middle East, UK&I, Benelux, DACH, Africa and Scandinavia working with distributors such as Exertis, Ingram, Netex, V-Valley, 2SB, MUK and others.

A10 Networks also launched its new Affinity Technical Ambassador programme in EMEA in 2021. The programme is gaining strong traction, with technical collaborations underway with key partners to deepen and enrich partners’ knowledge. The company has also focused on taking partners on a progressive journey, and its Elevate to Elite initiative has been successfully enabling partners to make the transition to Elite partner status.

CHRIS MARTIN, Channel Sales Leader for EMEA and APAC at A10 Networks.


Liferay announced that Link Development, a global provider of technology solutions and Liferay EMEA partner for close to two years now, has achieved multi-country Platinum Partner status, Liferay’s highest partnership level.

The distinction highlights Link Development’s know-how in consulting, partnering and delivering digital experiences for enterprises, which also earned it the Liferay Platinum Partner Award for outstanding performance. Joining this exclusive list is further proof of its expertise in the digital experience market. The strategic alliance has exceeded the objectives of both organisations across Egypt, the United Arab Emirates, Saudi Arabia, Bahrain, Kuwait and Oman, as well as Canada and the United States.

Liferay’s DXP enables a unified and optimised experience across several platforms and scales up on the cloud for increased agility and flexibility, driving digital transformation across verticals including government, telecommunications, insurance, banking and retail. It also empowers organisations to deliver seamless, creative customer experiences across various touchpoints and plays a crucial role in preventing data breaches and securing customers’ data.

On this occasion, Ahmed Saad, Alliance Manager at Liferay Middle East, expressed his delight in elevating the cooperation between Liferay and Link Development to a new level, highlighting mutual trust and paving the way for future collaborative accomplishments.

Finesse has entered into an agreement with Barracuda to provide its customers with easy-to-deploy cloud security solutions. Through this agreement, Finesse’s expertise in digital transformation will be complemented by the power and simplicity of Barracuda’s cybersecurity solutions portfolio, enabling Finesse customers to reduce cybersecurity risk on their digital journeys.

Finesse delivers digital transformation solutions to more than 350 customers in industries such as BFSI, healthcare, energy, education and government. Barracuda offers enterprise-grade security solutions that protect employees, customers, and their data and applications in the cloud from a wide range of threats, through easy, comprehensive and affordable solutions for email protection, application and cloud security, network security and data protection. Through Barracuda’s portfolio of products, Finesse will offer regional customers the ability to visualise, segment and protect their cloud security architecture, as well as to respond seamlessly to zero-day threats by integrating Barracuda solutions into their existing security ecosystem.

Dubai Internet City announced a deal with Khazna Datacentres, one of the largest wholesale data centre providers in the Middle East and North Africa, to establish two state-of-the-art facilities to further support enterprises of all sizes by enabling the integration of technology across all business functions.

The government’s ambition of transforming the UAE into a smart country necessitates the deployment of secure cloud infrastructure and data storage across all industries, from government and residential services to healthcare and manufacturing. Combined with many businesses adopting hybrid work models on the heels of the global pandemic, the volume of digital data has increased significantly in recent years.

National strategies that encourage the development and deployment of IoT, AI and cloud computing are also increasing the demand for reliable storage. The growing reliance on the internet has made data security a global priority, especially for sensitive data generated by the healthcare and financial sectors. Wholesale storage providers such as Khazna will play a critical role in handling the recording, movement and security of increasingly large amounts of information. As digital adoption sweeps through the wider MENA region, the opening of data privacy and storage centres can enhance business activity.

The two new data centres will be strategically located in Dubai and will feature Khazna’s distinctive data centre pod design, which allows operations to scale rapidly when required. This offers partners long-term growth benefits, while businesses involved in 5G, smart city projects and cloud computing can also leverage Khazna’s technology.

Khazna Datacentres will operate a total of fourteen data centres, making it the UAE’s largest data centre provider.

Tata Communications and Zain KSA have announced a strategic engagement to fuel the digital transformation journeys of enterprises and government organisations in Saudi Arabia. Through this collaboration, the combined ecosystems will deliver solutions and platforms to remodel cities with smart street lighting, smart waste management, connected workplaces, healthcare and connected cars.

In their flagship project, Tata Communications and Zain KSA are working together to bring a smart street lighting solution to one of the key cities in KSA.

Tata Communications’ IoT ecosystem will serve as a one-stop shop providing the hardware, platform, application and insights, while Zain KSA will expand the footprint with its business-to-business (B2B) offerings through joint projects related to software-defined wide area networks (SD-WAN) and global contact centres, as well as smart transport and Internet of Things (IoT) solutions enabling smart waste handling, smart metering and other smart city use cases.

The Tata Communications and Zain KSA strategic engagement will serve Saudi enterprises and government institutions with advanced technologies such as IoT, 5G, Long Range Wide Area Network (LoRaWAN), Managed Security Services, SD-WAN and many others. It will also support environmental sustainability measures and the digital transformation of the region.


Top executives indicate switching to a burner may not be sufficient, and it is equally important to avoid accessing personal and organisational accounts.

The FBI has advised Olympic athletes to leave their personal cell phones at home and carry a temporary burner phone to the Beijing Winter Olympics, citing the potential for malicious cyber activities. According to the FBI, no country presents a broader threat to US ideas, innovation and economic security than China. US intelligence officials have warned that officials and members of business and academia who travel to China risk having their personal devices hacked. While US athletes are allowed to compete, the Biden administration is not sending government officials to the Games.

The National Olympic Committees in some Western countries are also advising their athletes to leave personal devices at home or use temporary phones due to cybersecurity concerns at the Games.

Industry executives share their views on this advisory, noting that simply switching to a burner may not be sufficient. It is equally important to avoid accessing personal and organisational credentials and accounts, even when using the burner phone. Transferring data from the burner phone back to a primary device is also best done through an intermediate account and device.

Read on for a deep dive on this subject.

Using a burner phone limits the exposure of the device to the trip itself. Assuming the burner is activated for the trip and discarded or even destroyed either before leaving China or on arrival in the USA, the opportunity for significant compromise is severely reduced.

There may be opportunity to monitor calls, texts and internet activity while the phone is in use within China, but this activity can be limited through good education on the risks for those travelling. Using a long-term device may result in the compromise remaining in place when the person returns to the USA, a far more serious concern and almost certainly the basis for the FBI statement.

The real objective of any compromise of a device would likely be to establish a method of access that remains available once the device leaves the country. Likely malware or spyware would be an unobtrusive monitoring and/or remote access tool that could give attackers access to the device wherever it travels. The biggest benefit for malicious actors in this scenario is full control over the infrastructure from which the attack originates.

There is no need to compromise telcos, masts or Wi-Fi, as these are all within their sphere of control. This should make eavesdropping, while in country, relatively easy but also provide far more opportunity to probe for vulnerabilities without triggering infrastructure monitoring that exists outside of the country borders. That alone could provide opportunity to discover vulnerabilities that could be exploited long after the person leaves the country, avoiding checks on devices when the individuals arrive back in the USA.

With successful athletes potentially being invited into secure spaces, for example the White House, those devices might offer a beachhead for a continued attack. Beyond that, being able to track athletes’ movements, conversations and messages, and potentially even compromise their encrypted social media feeds over the long term, could offer opportunities to coerce individuals. High-profile individuals will always represent a richer target for attackers.

Our smartphones and tablets are full of sensitive personal data that we would not want anyone to have their eyes on or potentially steal. In the case of Olympic athletes, they might have photos of sensitive health documents or passports saved as a backup on their mobile devices.

That being said, regardless of whether athletes and press are using burner phones or not, they should be incredibly wary of any individual, app, or message that encourages them to share login credentials because the risk of being phished on mobile exists regardless of the type of device or operating system.

Furthermore, apps could easily be running malware in the background, especially if they are not being downloaded from a trusted source like the App Store or Play Store.

There are also concerns about the official Olympics application. Lookout researchers examined the app and found that it requires users to enter personally identifiable information (PII) such as demographic information, passport information, and travel and medical history. There also appears to be a list of forbidden words for censorship purposes.

The app also has a chat feature as well as file transfer capabilities between users. Considering the likelihood that the Chinese government could be monitoring all of this data, users should not use the app for anything more than the bare minimum. By the same token, they should enter as little information as they’re required to.

There is an identity angle here as well. It is all well and good using burner phones, but if these are used to sign into accounts, then device compromise can lead to identity compromise. A device these days can be temporary and easily replaced; however, a user’s digital identity is far more permanent.

As such, it is as important, if not more so, to protect users’ identities and access, because it is not the mobile device that magically grants access to data but the identities and the access they allow.


As with many high-profile international events, the Olympic Games generate a spike in economic activity and press coverage in the host nation, which we have seen attract the attention of cyber threat actors in the past.

With the Winter Olympics just around the corner, it is worth noting that Mandiant has historically seen the Games attract the attention of cyber threat actors. With the Games taking place in China this year, there are a few additional things to consider, whether you are attending or part of an organisation with ties to the event.

Based on our understanding of threat activity surrounding previous Olympics, this activity could take the form of nation-state actors and information operations campaigns using the media attention to embarrass rivals through hack-and-leak campaigns, website defacements or other disinformation.

We have also seen financial criminal actors capitalise on events like this to exploit increased tourism and local spending or use Olympic-themed subject lures in their malware campaigns targeting the public.

With the event in China this year, a country known to be one of the big four nations when it comes to cyber activity, we could also see reconnaissance activities on devices brought into the country by visitors. It is important to be aware that cyber activity could target athletes, officials and visitors, but also the different businesses that support the Olympics, whether in hospitality, telecoms or sponsorship.

Leave your personal devices at home and take burner devices if you need to: ones that you will only use while visiting and replace afterwards. Secure these devices, and the accounts you will access, with strong passwords.

Use a VPN at all times and enable multi-factor authentication wherever possible. Avoid accessing social media and banking if at all possible; pick up the phone and make a call for anything that requires credentials.

Remember your connections. It is not just about protecting yourself, but also organisations you’re linked with.

CRISTIANA KITTNER, Principal Analyst, Mandiant Threat Intelligence

DAVID BROWN, Security Operations Director, Axon Technologies

In the past, where I come from, there was always a pressing need to carry secondary devices when travelling to certain countries that do not offer the same protections for personal devices or that have institutionalised censorship. However, carrying a second device also means that it must come with a secondary account.

At the end of the trip, the device and the accounts used are scrubbed. Even then, at no point should these devices or accounts be used to access any sensitive data or systems such as banking or primary account servers like email or storage.

To retrieve data such as photos once home, it is recommended to use the second account to log into its web storage and download the data to an intermediate system for scanning before uploading it to the primary account.

It is not about malware or spyware. Where institutionalised censorship is the law, authorities have lawful interception: they can see, record and alter inline any data you send or receive. There is no need to push malware when they have the right to access anything on the device and everything in transmission. This interception extends to legitimate apps.

Furthermore, since they run gateway proxies, both transparent proxies and the Chinese firewall, they can also control the encryption of transactions passing through them, so again, there is no need to push anything. By the time you leave and return home, they could already have access to your accounts and services, to use as needed. At that point, they could push backdoors and infostealers to ensure access is maintained.

There is no reason why China would overly target average US sports citizens over any other global sports citizens; this is fearmongering. The truth is that targeting will be widespread for average citizens, most likely via natural language processing (NLP) keywords, a standard practice already in use. There will then be a list of high-priority targets, people of interest spanning all global sports citizens, for whom real-time or near-real-time targeting with NLP is most likely.

Everyone should control their speech; you do not have that freedom there. Mind what you say and always assume someone can hear everything. On top of that, we all know that there are hot-button topics in China, so it is best to avoid discussing them. As the old saying goes, if you have nothing nice to say, say nothing at all.


• With successful athletes potentially being invited into secure spaces, for example the White House, those devices might offer a beachhead for a continued attack.
• High-profile individuals will always represent a richer target for attackers.
• Considering the likelihood that the Chinese government could be monitoring this data, users should not use the app for anything more than the bare minimum.
• Regardless of whether athletes are using burner phones or not, they should be incredibly wary of any message that encourages them to share login credentials.
• It is not just about protecting yourself, but also organisations you’re linked with.
• Avoid accessing social media and banking if at all possible.
• Pick up the phone and make a call for anything that requires credentials. Remember your connections.
• There is no reason why China would overly target average US sports citizens over any other global sports citizens; this is fearmongering.
• The truth is that targeting will be widespread for average citizens, most likely via natural language processing.
• There will then be a list of high-priority targets, people of interest spanning all global sports citizens.
• Consider, if you will, how many people will be in close proximity at different times of the Games.
• With the current global tensions, you have to consider what the data on each device is worth.
• You also need to consider what that individual athlete or delegation representative is worth.
• A device these days can be temporary and easily replaced; however, a user’s digital identity is far more permanent.
• When travelling to many countries, not just China, you should consider the same practice.
• At most border crossings, authorities have the right to check your electronic devices and possibly clone them.
• While China is top of mind, you should always consider how other countries’ laws could result in the same compromise and risks.
• A very sophisticated culture of surveillance and censorship exists in China.
• Within China, authorities can request access to, and access, any data being transmitted.
• The official Olympic Games application has been shown to have significant vulnerabilities.

Any event, such as the Olympics, draws high volumes of people which inherently means more opportunity for cyber adversaries. At the most basic level, using a cheap burner phone means that if the phone is lost or stolen, the impact to the owner is reduced.

Taking it a step further, one trend that we have sadly seen grow in recent years is bad actors using ransomware to analyse the data on a device and use it either for profit or to blackmail the victim. It is unlikely that athletes would have state secrets of value on their phones, but they may well have personal information they would be embarrassed for others to know, which, given their high profile, could make them susceptible to coercion.

If a nation state is serious about compromising devices, it is likely to use zero-day attacks, threats that are not detected by common security tools. Today, most people do not see their mobile phones or tablets as a risk, so many have very weak security: easy-to-guess passwords and no anti-threat controls, and they are likely to click on anything that pops up.

If hackers attempt to compromise the mobile phones or tablets used by athletes or the travelling delegation from any nation in Beijing, there is a high likelihood they will try to install spyware and remote access trojans, software that allows the device to be controlled by third parties.

Consider, if you will, how many people will be in close proximity at different times of the Games: country team meetings, key ceremonies. If hackers can compromise one device, they could use its communications capabilities, such as Bluetooth or other local peer-to-peer features, to analyse and compromise many other devices at the same time.

I do not anticipate US athletes being the only ones targeted. In fact, all nations are at risk. However, with the current global tensions, you have to consider what the data on each device is worth, which is loosely linked to the net worth of the individual. More importantly, you also need to consider what that individual athlete or delegation representative is worth, and that is where the nationality of the device owner is key.

It really depends on what risks your personal cell phone could expose. So before deciding to use a burner phone, you should really understand what risks you are trying to reduce. If your cell phone is an extension of everything you do, your complete digital life, from health data, personal information and financial information to election voting and business data, then you should really consider what the impact would be to you personally and to your company if your phone were fully compromised.

It is also important that people understand that a burner phone does not simply mean a second phone. It means a phone that you can easily wipe clean, that cannot be personally traced to you, and from which you do not access any sensitive data; you would simply use a temporary encrypted messaging service for that period of time.

Honestly, when travelling to many countries, not just China, you should consider the same practice, as at most border crossings authorities have the right to check your electronic devices and possibly clone them. So yes, while China is top of mind right now, you should always consider how other countries’ laws could result in the same compromise and risks.

When inside a controlled network, anything you do online is already filtered, and the site you reach might not be the official website you think it is. So it is very easy to insert malware that could steal credentials and passwords, exfiltrate sensitive data, steal identities, embed backdoor agents and much more.

It is not only a risk for US sports citizens but for citizens from around the world, who should be vigilant and cautious when bringing devices that contain sensitive data or that could later be used by attackers to gain persistent access long after the Olympics are over. The Olympics is the perfect venue for infecting as many people as possible.

A very sophisticated culture of surveillance and censorship exists in China, and it is worth noting that Chinese laws differ markedly from Western ones. Within China, authorities can request access to, and access, any data transmitted within its geographical territory.

The official Olympic games application, which all athletes and officials are required to use, has been shown to have significant vulnerabilities that if exploited could lead to data on the handset being compromised.

Chinese authorities are deeply concerned about protecting China’s image both at home and overseas which has led them to become the world’s leading advanced digital authoritarian state. The Olympic Games application has been shown to contain censorship capabilities which are designed to safeguard the official State narrative and ensure China is perceived in a positive light.

Upon arrival, athletes should expect their phones’ voice and data traffic to be intercepted. A number of national security laws compel all communications companies to provide information to the state’s intelligence and security services upon request.



Executives from Alteryx discuss trends across democratisation of data, skills and the great resignation, artificial intelligence and machine learning.

Digital Transformation 2.0 will usher in a culture of analytics across business units as larger enterprises provide the self-service technologies and training to ensure the average knowledge worker is set up for success and able to directly perform analytics.

2022 will be the year of the Chief Transformation Officer. We will see a title and focus shift from Chief Data Officer to Chief Analytic Officer to Chief Transformation Officer, as the role of those leading the digital transformation journey focuses more on the results than the data or the analytic methods used.

As people move from company to company, we will see beloved technologies travel with them and become an established part of their new stack.

With the continued democratisation of analytics, data scientists need to evolve from problem solvers into teachers. Organisations are now looking to fill these roles with people who can articulate and explain, not just code, to encourage others to be creative and think critically. However, there is an existing skills gap between data scientists as practitioners and data scientists as teachers.

We will see data trusts rise and frameworks evolve, and organisations will shift their mindsets towards sharing rather than data hoarding. We will see increasing use of synthetic data, differential privacy and other techniques to ensure the security, privacy and legitimate use of data.
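Differential privacy, one of the techniques named above, can be illustrated with a short sketch. The example below is an illustrative assumption, not the approach of Alteryx or any other vendor: it applies the classic Laplace mechanism to a counting query, adding random noise with scale 1/epsilon so that any single record has only a bounded effect on the published result. The function names `laplace_noise` and `private_count` and the sample records are hypothetical.

```python
import math
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution by inverse-CDF sampling."""
    u = rng.random() - 0.5  # uniform on [-0.5, 0.5)
    while u == -0.5:  # avoid log(0) at the boundary
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1.0 - 2.0 * abs(u))


def private_count(
    records: Iterable[T],
    predicate: Callable[[T], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Return a differentially private count of records matching predicate.

    A counting query has sensitivity 1 (adding or removing one record changes
    the true count by at most 1), so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy for this single query.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    # Hypothetical records: (customer_id, spent_over_threshold); true count is 100
    records = [(i, i % 3 == 0) for i in range(300)]
    rng = random.Random(42)
    noisy = private_count(records, lambda r: r[1], epsilon=0.5, rng=rng)
    print(f"noisy count: {noisy:.1f}")  # varies with the noise draw
```

Smaller values of epsilon add more noise and give stronger privacy. A real deployment would also need to track the cumulative privacy budget across repeated queries, which this sketch deliberately leaves out.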

The digital world needs to ditch paper. Many organisations are still working from printed documents leaving pertinent data on the table that needs to be extracted. Getting the data out of paper has been difficult to date, but with computer vision and text analytics capabilities, organisations can extract insights from shipping invoices, paper records, receipts, etc.

The role of the citizen data scientist will evolve. Organisations will focus more on the relationship between people and AI, leading to increased spend on upskilling people as data literacy evolves into AI literacy.

We will move away from the term citizen data scientist and towards AI or analytics literate. Businesses will become more dependent on collective intelligence, the idea that better business decisions can be made by machines and humans working together.

More responsible AI will bridge the gap from design to innovation. While companies are starting to think about and discuss AI ethics, their actions are still nascent. Within the next year, we will see an event that forces companies to take AI ethics more seriously, and an increasing number of companies will put transparent explainability, governance and trustworthiness at the centre of their approach.

AI becomes demystified and more approachable for the everyday business user. No-code and low-code tools will simplify and democratise AI. Although data scientists will continue to focus on high-value problems, the number of people able to participate in advanced analytics using automation, computer vision, natural language processing and machine learning will increase. More companies will invest in AI-driven automated insights to complement their existing dashboards.


Historical data and representations are not enough for successful decision making and predictive intelligence needs to be blended into the process.

Even with heads of state coming to an agreement on sustainability requirements, it will largely fall on individual companies to enforce these standards upon themselves. While many organisations have already expressed, and even implemented, plans to reduce or eliminate carbon emissions, many have yet to adopt any strategy to make both immediate and long-term impacts.

Without a unified, standardised pact that holds both countries and companies accountable, minimal change will be made. Until such a standardisation exists, consumers and investors are the ones most likely to force companies to make the necessary shifts, as the younger and more environmentally conscious generations continue to grow into the largest global consumer base.

Delivering information just in time, instead of in traditional dashboard forms, which look in the rear-view mirror, will be critical in 2022. Historical data and representations are not enough for successful decision making. Predictive intelligence needs to be blended into the process.

Ultimately, these insights are needed at the point of decision and action, instead of in a separate operational location. Data fabric, business intelligence, AI, machine learning, and user experience all must come together in a single solution to be meaningful.

2021 has been a crazy year for supply chain professionals. People around the world who had no idea what a supply chain was at the beginning of the year now have a much better understanding of how the goods they purchase get from one place to another. The reality remains that many of the transport issues manufacturers faced in 2021 are not going away. Be ready for plenty of supply chain news in 2022, including these important trends.

As long as several global economies continue to thrive, the demand for goods and services will continue to hold transportation rates, particularly ocean, at record levels. However, with inflation rearing its head in various parts of the globe, higher prices on products may lead to a consumer slowdown, allowing manufacturers and their suppliers some breathing room to restock their supplies.

That said, the backlog of existing demand will keep ocean transport rates higher until bottlenecks drain out. As we see supply and demand begin to balance out in the second half of 2022, transportation rates should creep lower heading into 2023.

With supply shortages stretching from groceries to semiconductors, many organisations have been forced to examine ways to bring crucial components closer to the final production process to ensure history doesn’t repeat itself. With many global organisations looking to localise larger portions of their supplier base, supply chains will find themselves better equipped to handle large demand spikes as they occur.

With the global vaccination effort proceeding at a slower-than-anticipated pace, new variants of the COVID-19 virus will continue to drive caution and hesitation regarding travelling and fully reopening businesses. As a result, organisations will move away from just-in-time (JIT) inventory strategies and will bulk up inventory levels so they can avoid production disruptions.

This also allows organisations to use supply chain finance tools to extend payment terms to suppliers using innovative finance options with lenders. Organisations can build inventory strategies that are less susceptible to disruption while allowing their supply partners to maintain healthy capital levels.

As the business world continues to transition to remote-work environments, the definition of user experience continues to change. While voice access capabilities have been heavily hyped for some time in the enterprise arena, security controls will continue to tighten, and employees will need new ways of executing work away from traditional web screens.

In 2022, we expect that users will demand nearly full operational functionality through voice-enabled devices – with digital assistants that augment and automate tasks.

As ERP systems evolve into modern Enterprise Application Platforms (EAPs), look for expanded platform definitions that provide for composability not only in cloud environments but also across hybrid cloud and on-premises environments. Composability will be broken down further, to the business process level and not just the application level.

This means that enterprises will need a standard operating model and platform for consistent integration, workflow, data analysis, and extensibility. Users will want to build their own processes and experiences to match their exact needs, not simply take what’s out of the box.

No two businesses are the same. Users will demand easy and simple ways to define their business interactions in a flexible system. Therefore, expect the microservices discussion to accelerate, as companies strive to build and assemble their software systems, as if designing a floor plan for a new home.

Businesses will start deploying EAPs, through which business processes will not only be assembled to match needs but will also be self-sustaining and self-correcting, based on AI and intelligence baked into the framework.

The actual convergence of analytics, intelligence, and user experience will enable successful, real-time decision making.

Core and edge solutions are already connected, for the most part, and edge solutions no longer refer only to devices. This view acknowledges that some business operations still want to maintain local control on premises. Being able to navigate a true hybrid of cloud and on-premises business without impacting productivity will be key.

Customers will need cloud innovations in the form of machine learning, for example. At the same time, they need the ability to apply such technologies to their on-premises systems – not just to stereotypical edge devices.

AMEL GARDNER, Vice President and General Manager, Middle East and Africa, Infor.

Energy network operators will be stretched as they are asked to perform the magic trick of increasing supply while decommissioning fossil fuel plants.

For data and the datacentre industry, the pandemic disruption was also a major catalyst for accelerated digitalisation. Thankfully, most of the technology needed during the crisis was already in existence, supported by datacentre and telecoms infrastructure.

According to a PwC survey, 67% of Middle East consumers think they have become more digital, compared with 51% globally, with the highest percentage in Egypt at 72%. This may be linked to governments’ push towards smart cities.

The aforementioned crisis drove the rapid adoption of these technologies and sped up developments that were already underway. But what is most significant is that this change is likely to be irreversible: when you remove a catalyst, the reactions it caused do not reverse themselves. The increased reliance on datacentres, and by extension on the telecoms infrastructure that connects us to them, is here to stay.

However, this raises serious issues. A decades-long efficiency drive, which held datacentre energy demand steady while processing far more data, has run out of headroom.

Our economy and society have gone full throttle on data, exactly at the time when we need to put the brakes on energy consumption if we’re to combat climate change. There are no megabits without megawatts, and as we demand and produce more and more data, energy consumption levels will rise.

As the demand for electrical energy is set to soar, datacentre operators will face tough challenges in accessing scarce, new energy production.

The solution is to ramp up renewable energy production, not only to meet new demand, but to also displace current fossil-based production. So, it is not just the datacentre industry facing challenges. Energy network operators themselves will be stretched as they are asked to perform the magic trick of increasing supply while simultaneously decommissioning fossil fuel plants.

As such, the challenge for datacentres will no longer be one of efficiency, but one of sustainability. New metrics and new approaches to datacentre design and operations will come under greater scrutiny, as will the energy consumed by the overall telecom infrastructure, which has an energy requirement many times that of the datacentre industry.

We rely on data, data relies on power, and a significant gap between what we want and what we need will soon emerge. On one hand, this looks like a crisis. On the other, it is exactly the kind of gap that attracts serious investment and innovation. For the grid, this gap will enable new and existing private ventures to build out the renewable power we desperately need.

A seller’s market for power supply opens the door to new approaches and new models. For datacentres, it will solidify the economic case for a new relationship with power, not just as consumers but as sites which support the grid with energy services, storage and even power generation.

Data and power will realign, and in some cases that alignment will soon become physical proximity, too. With economics and policy beginning to align in this manner, there is a case for datacentres to offer not just frequency response but also direct flexible supply to the grid. Sector coupling, then, could become one of 2022’s major headlines for the datacentre sector.


Once you understand your attack surface, you can create a threat landscape and threat profiles linked to cyber threat intelligence services.

The cybersecurity industry saw some key trends emerge from defenders and attackers in 2021. The defence trends were, in almost all cases, a direct result of the threat trends; they were reactive, and for many organisations they came too late.

Attack surface management is an important area. We are predicting growth in this area, which supports the concepts of predictive defence. Once you understand your attack surface, you can create a threat landscape and threat profiles linked to cyber threat intelligence services with Priority Intelligence Requirements and Organisation Specific Intelligence Requirements.

These allow an organisation to shift from reactive defence, right of boom, to proactive defence, left of boom. Proactive defence is an area we will push into in 2022, and we hope others will too.

One of the growing threat trends we have seen over the last year is the targeting of managed services providers (MSPs) and cloud services providers (CSPs). This targeting allows an attacker to have a significant impact per attack, as a single compromise can span numerous victims.

MSPs and CSPs bring value but also risk. Running on someone else’s infrastructure means you lose control over whether, and how, that infrastructure is protected.

In response, defenders are paying growing attention to attack surface awareness, commonly referred to as digital footprinting. We see a slow but growing understanding of this need, as users of MSPs and CSPs must now understand their entire attack surface, not just what is left in-house.

It’s no surprise that ransomware is still the leading threat trend. The higher the value of cryptocurrency, the greater the incentive for cybercriminals. Every time a victim pays, it guarantees further attacks against others and, in many cases, repeated attacks against the victim itself.

In almost all cases of ransomware that we have investigated, unpatched remotely managed or cloud-hosted systems were the initial point of access. These systems loop back to the defence trend of attack surface awareness.

The world’s most fantastic AI threat prevention solution cannot save you if you leave the front door wide open, with a welcome mat out and no one to check the IDs of the people walking in or out of that door. The same is true for MSPs: they need to treat the security of their infrastructure as a critical service, offering complete vulnerability management and real-time monitoring and response within their managed infrastructure.

DAVID BROWN, Security Operations Director, Axon Technologies

The evident concern is corporate assets operating outside the controlled environment, and these need to be handled in a draconian manner. The best way to manage these devices is with a combination of application and access controls. This means deploying connection-aware host-based firewalls, remote gateway proxies and MFA VPN solutions.

On top of this level of access control, other requirements are software inventory management, agent-based policy auditing, vulnerability management, fully managed anti-malware, and host intrusion detection and prevention systems, all reporting to real-time monitoring and response. Put more simply, the more visibility you have, the greater your ability to protect, detect and respond.


There is a culture shift happening, organisations are less focused on devices and capex and more focused on business outcomes of technology investments.

Digital transformation is driving a proliferation of IoT devices, and machine-to-machine communications are growing rapidly. Connected devices already outnumber people 5:1, and over the next three years there will be 10 times more connected devices than people, making automated, secure IoT connectivity of paramount importance.

Without an automated way to onboard, provision, and secure these devices, organisations will be left vulnerable to security breaches, which are continually growing in sophistication.

As SASE deployments enter the early majority stage of the adoption lifecycle, the market will see a clear split in approaches. Small and medium-sized enterprises are likely to be attracted to all-in-one SASE offerings, where simplicity and one throat to choke take priority over advanced capabilities. Large enterprises, on the other hand, will remain unwilling to compromise on security, reliability, or the quality of user experience.

They will look to a dual-vendor approach, pairing a best-of-breed SD-WAN partner for on-prem security and WAN-facing capabilities with a fully fledged cloud-delivered security partner delivering secure web gateway (SWG), cloud access security broker (CASB) and zero trust network access (ZTNA) services.

While much of the attention has gone to 5G cellular, on the campus and inside the enterprise we are on the cusp of a fast transition to Wi-Fi 6E. Wi-Fi 6E delivers high capacity with an additional 1200 MHz of new spectrum, while retaining backwards compatibility.

Leading market intelligence firm 650 Group expects over 200% unit growth of Wi-Fi 6E enterprise APs in 2022, indicating that enterprise organisations recognise the potential of 6E, especially with the continued reliance on activities such as videoconferencing, telemedicine, and distance learning.

Even as the pandemic recedes, work-from-home is here to stay. This new normal will drive the emergence of the microbranch, or branch of one. In the early days of the pandemic, organisations scrambled to expand VPNs and deploy remote access points (RAPs) to connect their locked-down workforce and to implement pop-up testing kiosks.

In 2022, we will see enormous growth for purpose-built microbranch offerings that combine enterprise-class Wi-Fi access with sophisticated multi-path WAN connectivity and advanced AIOps for reliability and consistent user experience. These microbranch offerings will securely extend the enterprise to the branch of one.

DAVID HUGHES, Chief Product and Technology Officer, Aruba HPE

There is a culture shift happening right before our very eyes – the increased value consumers are placing on experiences over things and the decline in needing to own something has already touched our everyday lives. This same shift will begin to play out in the enterprise as well in the coming year, with organisations being less focused on devices and capex and more focused on the business outcomes of their technology investments.

Organisations want greater financial flexibility and cost predictability, while being able to increase IT efficiency and keep pace with innovation. A flexible infrastructure consumption model allows for all of this, and for organisations that are not fully ready to take the plunge, flexible consumption models provide the option to try before buying, so that enterprises can adopt the new model, or not, at their own pace. This will drive a big increase in demand for consumption-based services such as network as a service (NaaS) in 2022.

Brands will continue to be judged on their digital experiences and only the best tools and insights to meet customer expectations will prevail.

The habits of consumers in the UAE mirror the world around them. The surging appetite for digital services, while initially a knock-on effect of a global pandemic, is unlikely to reverse itself when the crisis is over. Brands will continue to be judged on their digital experiences. Only the ones with the best tools and insights to meet heightened customer expectations will prevail.

A recent AppDynamics report, "App Attention Index 2021: Who takes the rap for the app?", shows that 98% of UAE consumers (14% higher than the global average) believe digital services have had a positive impact on their lives during the pandemic.