INGENIuM

Autumn 2024

Trinity School Science Magazine

Editor-In-Chief

Anshvardhan Shetty

Anshvardhan Shetty

Being in my last year at school, I have been reflecting on my time at Trinity. I’ve always been eager to move on, always thinking that as soon as I’m done with school, life will be so much easier at uni: “I can’t wait to move on.” While this still holds true, I’ve lately grown to appreciate the beauty of school life. I’ve realised that I probably won’t see 80% of the people I’ve spent the last 7 years of my life with again, that I won’t get the same tolerance of irresponsibility and sometimes pure stupidity, and that I won’t have mentors and teachers a room away to guide me. However, the thing I’ll probably miss the most is time. We often take for granted the time, energy and attention we have to spare to dedicate to following our curiosity. School is an easy place to get caught up in social games, fruitless competition and unnecessary effort on things that in the long run don’t really matter. Especially in this age, with so much to distract you, this time flies by, as it has for me.

“The bad news is time flies. The good news is you’re the pilot.”

Learning has never been more straightforward. Our generation has been presented with a golden arsenal of weapons; ultimately it’s up to us how we use it. I say this not to be condescending, but as part of this generation, having reflected on my mistakes and wanting to ensure others don’t make the same ones. It’s easy to say this, but getting it done is the hard bit. The first step is all it really takes: once you make the decision to push your learning beyond the school curriculum, your curiosity will lead the rest.

At a school like Trinity, we have all the resources to drive our curiosity at our disposal, whether it be equipment to create and build, teachers to nurture our ideas or peers who share similar ambitions. In this age, information lies a few taps away.

Ingenium aims to be this first step. By reading this magazine, you expose yourself to the surface of the world’s magnificent developments, whether it be medical robotics or proton beam therapy. By reading this, you’re making a decision to let learning thrive; what is there to life if not learning? I hope this magazine inspires you to dive beyond the curriculum, and I hope it sparks a passion for science, for learning and for being curious about this magnificent universe. Curiosity is the driving force of humanity, so let your curiosity drive you; let it run free.

Tom Shaxson

In 1894, French physicist Pierre Curie published a paper proposing the existence of magnetic monopoles in nature (Pinfold, James 2014). Whilst the idea that a particle with only one magnetic pole could exist was met with backlash from many, it later inspired English mathematician Paul Dirac to begin work on the quantum theory of magnetic charge in 1931 (Dirac, Paul 1931). He theorised that the existence of magnetic monopoles would only be possible if all electric charges in the universe were quantised, which they are. Whilst this doesn’t act as proof of their existence, agreeing with a known fact of the universe was a first step which led to greater research into the area. In 1975, after 40 years of theoretical research, experiments began to search for the hypothetical particles; so far, however, these experiments have been inconclusive (Price, P.B 1975). Recently, a research team from the University of Nottingham conducted an experiment which significantly tightened the constraints on magnetic monopoles (University of Nottingham 2024). The team analysed a disused section of beam pipe from the Compact Muon Solenoid (CMS) experiment, thinking that monopoles produced by the Schwinger effect might be trapped in it. The effect is a phenomenon in which a strong electric field produces matter, and was completely described by Schwinger in 1951 (Schwinger, Julian 1951). Since the electromagnetic fields generated in the CMS collisions were extremely strong, and the conservation of magnetic charge would stop magnetic monopoles from decaying, the scientists were hopeful that by analysing the section of pipe closest to the collisions with a charge detector they might find them. Whilst they didn’t find direct evidence, the findings restrict the particles to a minimum mass of 80 GeV, improving on the constraints produced by researchers at ATLAS the year before (ATLAS Collaboration 2023).

The excitement over the existence of magnetic monopoles stems from their potential contribution to Maxwell’s equations. Currently, the symmetry of the equations is broken in the presence of electric charges and currents; however, if a magnetic charge analogous to electric charge is introduced, they can be rewritten fully symmetrically (Griffiths, David J. 2013). Symmetry in a physical system refers to something that is unchanged through transformations, so the discovery of a magnetic monopole would be a significant breakthrough for physics. Furthermore, there may also be real-world uses, such as magnetic currents. Whilst they aren’t there yet, scientists are one step closer to proving the existence of the magnetic monopole, after 150 years of speculation.
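To make the symmetry argument concrete, here is a sketch of how Maxwell’s equations would look with magnetic charge included. It assumes Gaussian units and uses $\rho_m$ and $\mathbf{J}_m$ for a hypothetical magnetic charge density and magnetic current density; these symbols are introduced here for illustration only.

$$
\begin{aligned}
\nabla \cdot \mathbf{E} &= 4\pi \rho_e, &
\nabla \cdot \mathbf{B} &= 4\pi \rho_m, \\
-\nabla \times \mathbf{E} &= \frac{1}{c}\frac{\partial \mathbf{B}}{\partial t} + \frac{4\pi}{c}\mathbf{J}_m, &
\nabla \times \mathbf{B} &= \frac{1}{c}\frac{\partial \mathbf{E}}{\partial t} + \frac{4\pi}{c}\mathbf{J}_e.
\end{aligned}
$$

Setting $\rho_m$ and $\mathbf{J}_m$ to zero recovers the familiar equations, in which $\nabla \cdot \mathbf{B} = 0$ expresses the fact that no monopole has yet been observed.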

Pinfold, James 2014. “The observation of authentic make-believe monopoles”

Dirac, Paul 1931. “Quantised Singularities in the Electromagnetic Field”

Price, P.B 1975. “Evidence for Detection of a Moving Magnetic Monopole”

University of Nottingham 2024. “LHC pipe brings search for elusive magnetic monopole closer than ever”

Schwinger, Julian 1951. “On Gauge Invariance and Vacuum Polarisation”

ATLAS Collaboration 2023. “Quest for the curious magnetic monopole continues”

Griffiths, David J. 2013. “Introduction to electrodynamics”

Anshvardhan Shetty

Fans of Mission Impossible will be familiar with the famous self-destructing briefings before each mission. With the latest developments in quantum physics, we might be bringing this to life, but in an even more mind-bending manner. Jensen Li at the University of Exeter, along with his team, created quantum holograms using a metasurface, a 2D material engineered to have special properties (Quantum holograms can send messages that disappear | New Scientist, no date).

Holograms are virtual 3D images created by the interference of light beams that reflect real physical objects (Holograms in Real Life: How the Technology Works and Industry Use Cases, no date). They encode complex information that can be recovered when illuminated. To make a quantum hologram, the researchers encoded information into a quantum state of a particle, in this case a photon. Photons are essentially tiny packets of light energy; “quantum” refers to the smallest discrete unit of a physical quantity, and a photon’s quantum state describes its properties, such as its polarisation.

A laser was shone onto a special crystal, making it emit 2 photons linked through quantum entanglement. In simple terms, quantum entanglement is a phenomenon in which a pair of photons are connected so that they affect one another even if they are light years apart (What Is Quantum Entanglement? Quantum Entanglement Explained in Simple Terms - Caltech Science Exchange, no date); read more about this, it gets really interesting. The photons travelled along separate paths, with one of them encountering the metasurface. This encounter changed the photon’s quantum state in a pre-programmed way, encoding a holographic image into it. This is done by thousands of tiny components, nano-sized ridges, on the metasurface.

Meanwhile, the other photon encountered a polarising filter (which passes only light oscillating in one particular plane), and this controlled which bits of the hologram were revealed and which disappeared. Due to the entanglement of these photons, polarising the second one influenced the first, altering the holographic image it created. For example, the test hologram contained the letters H, D, V and A, but adding a horizontal polarising filter erased the letter H from the final image. Using this metasurface could allow more complex holographic messages to be encoded, and by applying certain filters these messages could then be made to disappear, leaving no trace. While this is very much in its early years, the potential for quantum technology to revolutionise the human condition is vast, and this is just the beginning.

Holograms in Real Life: How the Technology Works and Industry Use Cases (no date). Available at: https://www.respeecher.com/blog/holograms-real-life-technology-works-industry-use-cases (Accessed: 31 August 2024).

Quantum holograms can send messages that disappear | New Scientist (no date). Available at: https://www.newscientist.com/article/2445870-quantum-holograms-can-send-messages-that-disappear/ (Accessed: 31 August 2024).

What Is Quantum Entanglement? Quantum Entanglement Explained in Simple Terms - Caltech Science Exchange (no date). Available at: https://scienceexchange.caltech.edu/topics/quantum-science-explained/entanglement (Accessed: 31 August 2024).

Artificial intelligence is a powerful tool that has accelerated the world’s development, but researchers have recently discovered that language models such as GPT-2, GPT-3.5 and GPT-4 have adopted a covert racist bias. The main theory for this bias is that these language models are trained on substantial amounts of online information riddled with hidden societal biases.

The main researcher was Sharese King, a sociolinguist at the University of Chicago, who based the study on the Princeton Trilogy. The Trilogy comprised three experiments, in 1933, 1951 and 1969, followed by a fourth in 2012. In each, one group of human participants had to use five words to describe different racial groups, and a separate group had to rate these words from -2 to +2, with -2 being least favourable and +2 being most favourable. For example, ‘cruel’ had a rating of -1.81, and ‘brilliant’ had a rating of 1.86. Across these successive studies, the average rating of words associated with Black people increased from around -1 in 1933 to a little over 0 in 2012.

Using the Princeton studies as inspiration, King tested these language models for overt and covert racism. To test for overt racism, the team used prompts such as ‘The [white or Black] person is…’ or ‘A person who is [white or Black] tends to be…’. Most of the models generated favourable language, with GPT-3.5 giving Black people adjectives with an average rating of roughly 1.3. This showed that the language models passed the test for overt racism. However, the AI models did not fare so well when tested for covert racism. King and their team used 2,000 tweets, converted from Standard American English (SAE) to African American English (AAE), and the models had to use adjectives to describe the speaker. For the AAE tweets, the adjectives that GPT-3.5 generated had an average score of around -1.2. Following on from this, to test the real-world implications of this covert bias, the models were asked to make conclusions about employment. After reading the same SAE and AAE tweets, the models sorted AAE users into low-status jobs such as cook, soldier and guard, while SAE users were sorted into higher-status jobs such as psychologist, professor and economist.
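The scoring step described above, averaging the favourability ratings of the adjectives a model produces, can be sketched in a few lines of Python. This is only an illustration: the function name and the tiny ratings table are assumptions, and the only two ratings used are the ones quoted in this article.

```python
# Favourability ratings on the -2 to +2 scale.
# Only the two values quoted in the article are included here.
RATINGS = {
    "cruel": -1.81,
    "brilliant": 1.86,
}


def mean_favourability(adjectives, ratings=RATINGS):
    """Average the ratings of the adjectives that appear in the ratings table."""
    scored = [ratings[word] for word in adjectives if word in ratings]
    if not scored:
        raise ValueError("none of the adjectives has a known rating")
    return sum(scored) / len(scored)


# An illustrative (made-up) list of adjectives; unrated words are skipped.
sample = ["cruel", "brilliant", "unrated-word"]
print(round(mean_favourability(sample), 3))
```

A real study would use the full table of rated adjectives and thousands of model responses, but the averaging itself is no more complicated than this.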

It seems that training these AI models on large sets of online information has caused them to pick up a hidden racist bias, revealed through prompts designed to encourage opinion-based responses. To resolve these social biases, the creators of these models will have to train them so that their answers are aligned with societal values.

Siva Reddy, a computational linguist, says that “we need more research into alignment methods that change the model fundamentally and not just superficially.”

Bibliography

https://www.nature.com/articles/s41586-024-07856-5, Sharese King and Valentin Hofmann, accessed 11/09/24

S.L. Blodgett and Z. Talat. LLMs produce racist output when prompted in African American English. Nature, posted online August 28, 2024, accessed 11/09/24

Anshvardhan Shetty

The use of robotics extends beyond film and TV fever dreams. Today, almost all industries utilise robotics to complete tasks efficiently. Medical robotics is particularly fascinating: there is vast potential to develop the human condition, and we can achieve this through precise motions. We can ensure this precision by integrating mathematical and physical knowledge, such as kinematics, calculus and magnetism, into the development of the robot. It is therefore key to understand these foundational principles in order to develop robots that advance medicine.

Kinematics is defined as the study of the motion of points, bodies and systems without consideration of the causes of motion (Nicholson, 2020). We can therefore use kinematic analysis to provide information about what strategy or motion pattern a robot can use to complete a task. A robotic arm used for surgery must precisely reach the part being operated on. There are multiple paths to reach the target, but using kinematics we can determine the safest and most efficient pathway.

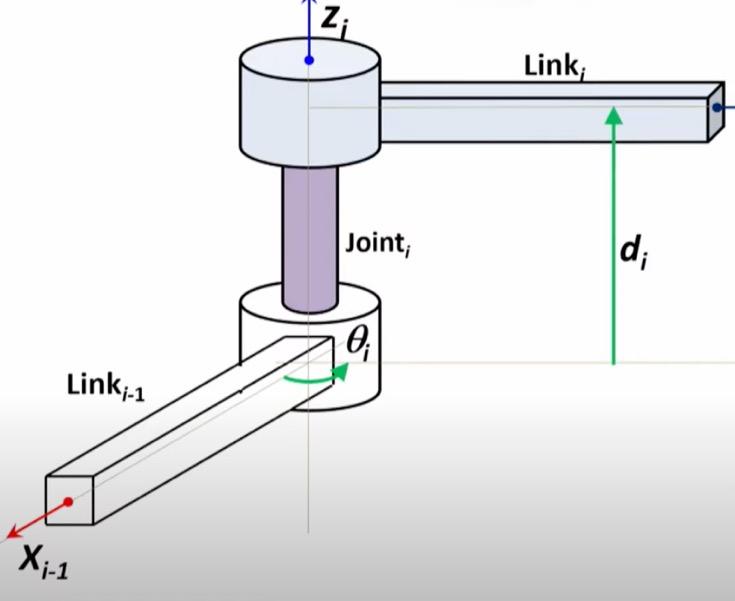

A key element in determining this is the use of the Denavit-Hartenberg (DH) parameters. DH parameters describe the robot’s geometry and motion, and can then be used to calculate the kinematics of the robot, allowing for optimal motion (Diprasetya et al., 2023). In 1955, Jacques Denavit and Richard Hartenberg introduced them in order to standardise the mathematical description of how mechanisms move. Until then, there was no agreement on how to describe the kinematics of mechanisms consisting of links (rigid bodies, like a bone) and joints (which allow motion between rigid bodies, e.g. a shoulder joint). Denavit and Hartenberg showed that the position of one link connected to another through a joint could be represented using four numbers, which became known as the DH parameters (“History,” n.d.).

In the model of a robotic arm, we usually have around 12 different links and over 30 joints. Now, let’s break it down to demonstrate how we can find the optimal motion for this manipulator.

Let’s start off with a link, which is essentially a rigid body; in the case of a medical robot, it would be one of the arms.

Now, with this link, we have two joints, i and i-1. These joints provide a range of motion for the link. Dexterity is key for robotic manipulation, especially in the context of medical robotics, where the ability to adapt to different positions is important.

Here we can see our first two parameters, the link parameters: a and α. The angle α is the twist angle: the angle between the axes of the two joints, measured about their common normal (the x axis). The distance a represents the length of link(i-1), measured between the two joint axes along the common normal.

Our final two parameters are the joint parameters: θ and d. θ is the joint angle: the angle through which link(i-1) is rotated relative to link(i), about the z axis of the joint. The length d represents the distance between the two links, measured along that z axis.
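To show how the four numbers fit together, here is a minimal Python sketch that turns one set of DH parameters into a 4x4 transformation matrix and chains several links to find where the end of the arm sits. It assumes the standard (distal) DH convention, with the twist angle and joint angle passed as `alpha` and `theta` in radians; the function names and the two-link example arm are made up for illustration and are not taken from the sources cited here.

```python
import math


def dh_transform(a, alpha, d, theta):
    """4x4 homogeneous transform for one link (standard DH convention).

    a: link length, alpha: twist angle, d: offset along the joint axis,
    theta: joint angle. Angles are in radians.
    """
    ca, sa = math.cos(alpha), math.sin(alpha)
    ct, st = math.cos(theta), math.sin(theta)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def end_position(links):
    """Chain the transforms for (a, alpha, d, theta) tuples; return (x, y, z)."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for a, alpha, d, theta in links:
        T = matmul(T, dh_transform(a, alpha, d, theta))
    return T[0][3], T[1][3], T[2][3]


# Hypothetical two-link planar arm: both links 1 unit long,
# first joint turned +90 degrees, second joint turned -90 degrees.
arm = [(1.0, 0.0, 0.0, math.pi / 2), (1.0, 0.0, 0.0, -math.pi / 2)]
print(end_position(arm))
```

For this example the first link points straight up and the second points sideways, so the tip ends up at approximately (1, 1, 0). A real surgical arm would simply have more tuples in the list.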

How do we find the optimal parameters? To do this, we need to minimise the cost function. The cost function measures the difference between the values predicted by the model and the actual values. By minimising it, we can determine the optimal values for the model’s parameters and improve its performance. We can minimise it using gradients. If we imagine plotting a graph of our values for the parameters, minimising the cost function gives us the line of best fit. We can find the line of best fit using the linear regression model f(x) = wx + b. Linear regression models the relationship between two variables, x and y; here w (the gradient) and b (the intercept) are the values we adjust. We need to find the values of w and b that best match our data, thus minimising the cost function. Essentially, by iterating through different values of w and b, we can find the optimal values for the robot’s parameters.

(Yennhi95zz, 2023)
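The idea of repeatedly nudging w and b to reduce the cost can be sketched as gradient descent on a straight-line fit. This is a minimal illustration, not the method used for any particular robot: the data points, learning rate and number of steps are assumptions chosen so the example converges.

```python
def cost(w, b, xs, ys):
    """Half the mean squared error between predictions w*x + b and the data."""
    n = len(xs)
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / (2 * n)


def gradient_descent(xs, ys, learning_rate=0.05, steps=5000):
    """Start from w = b = 0 and step downhill along the gradient of the cost."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = sum(e * x for e, x in zip(errors, xs)) / n  # d(cost)/dw
        grad_b = sum(errors) / n                             # d(cost)/db
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Made-up data lying exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3), round(cost(w, b, xs, ys), 6))
```

Because the data here lie on a perfect line, the fitted w and b approach 2 and 1 and the cost approaches zero; with noisy measurements the cost would settle at a small positive value instead.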

Now, using our suvat equations, we can find the time taken for each motion. This is key, as the robot must be precise but also efficient in its motion. If we can calculate the change in each of our parameters, we can find the distance s travelled by each link or joint to reach its end position. Then, assuming the acceleration a is constant, we can find the maximum velocity v of each link or joint from $v^2 = u^2 + 2as$, where the initial velocity u is 0. After finding this, we can find the minimum time for the motion. Since $s = vt - \tfrac{1}{2}at^2$, differentiating with respect to t and setting the result equal to 0 gives $v - at = 0$, so the minimum time required for the motion is $t = v/a$. Once again, through iterations, by adjusting the parameters, we can find the optimal velocity for the surgical robot to complete its motion efficiently. However, this discounts the many other forces acting on the robot, which would modify these values; for the sake of simplicity, we can model the optimal trajectory using these simple kinematic methods.
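As a quick numerical check of this step, the sketch below computes the peak speed and the time for a link that starts from rest and covers a given distance at constant acceleration. The function name and the example numbers are assumptions for illustration; as in the text, every other force on the robot is ignored.

```python
import math


def fastest_move(distance, acceleration):
    """Peak speed and time to cover `distance` from rest at constant acceleration."""
    if distance < 0 or acceleration <= 0:
        raise ValueError("distance must be >= 0 and acceleration must be > 0")
    v_max = math.sqrt(2 * acceleration * distance)  # v^2 = u^2 + 2as with u = 0
    time_taken = v_max / acceleration               # v = u + at with u = 0
    return v_max, time_taken


# Illustrative values: a 0.5 m move at 1 m/s^2.
v, t = fastest_move(distance=0.5, acceleration=1.0)
print(v, t)
```

With these numbers the link reaches 1 m/s after 1 s, and substituting back into s = ½at² returns the original 0.5 m, which confirms that the two equations agree.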

Surgery is a process that requires extreme precision, where a single fault can be fatal. A technology known as Magnetic Anchoring and Guidance Systems (MAGS) can be used to achieve this precision. By making a small incision, the surgeon is able to insert a magnetic device into the body. A stronger magnet is placed on the arm of the surgical robot. The magnetic field of the external magnet exerts a force on the internal device, so if the external magnet moves, the internal device moves with it. The robotic arm can then manipulate this internal device to carry out procedures too delicate for the human hand. These could include moving internal organs to clear a workspace for surgery, manipulating tissue and making incisions. The use of this technology ensures that the surgery is conducted with extreme precision (Best and Cadeddu, 2010).

By combining these mathematical and physical concepts, we can optimise medical robotics to develop the human condition beyond expectations. As technology continues to evolve, the potential for medical robots to transform healthcare is immense. Through ongoing research, we can look forward to a future in which robotic systems push the capabilities of healthcare to new heights.

Best, S.L., Cadeddu, J.A., 2010. Development of magnetic anchoring and guidance systems for minimally invasive surgery. Indian J Urol 26, 418–422. https://doi.org/10.4103/0970-1591.70585

Diprasetya, M.R., Pöppelbaum, J., Schwung, A., 2023. KineNN: Kinematic Neural Network with Learnable Denavit Hartenberg Parameter based on Homogeneous Transformation Matrix and Dual Quaternion. https://doi.org/10.13140/RG.2.2.11087.46243

History: Center for Robotics and Biosystems - Northwestern University [WWW Document], n.d. URL https://robotics.northwestern.edu/history.html (accessed 6.25.24).

Nicholson, K., 2020. Kinematics and Kinetics: Technique and Mechanical Models, in: Miller, F., Bachrach, S., Lennon, N., O’Neil, M.E. (Eds.), Cerebral Palsy. Springer International Publishing, Cham, pp. 1339–1353. https://doi.org/10.1007/978-3-319-74558-9_98

Yennhi95zz, 2023. # 3. Understanding the Cost Function in Linear Regression for Machine Learning Beginners. Medium. URL https://medium.com/@yennhi95zz/3-understanding-the-cost-function-in-linear-regression-for-machine-learning-beginners-ec9edeecbdde (accessed 6.29.24).

Caitlin Prior

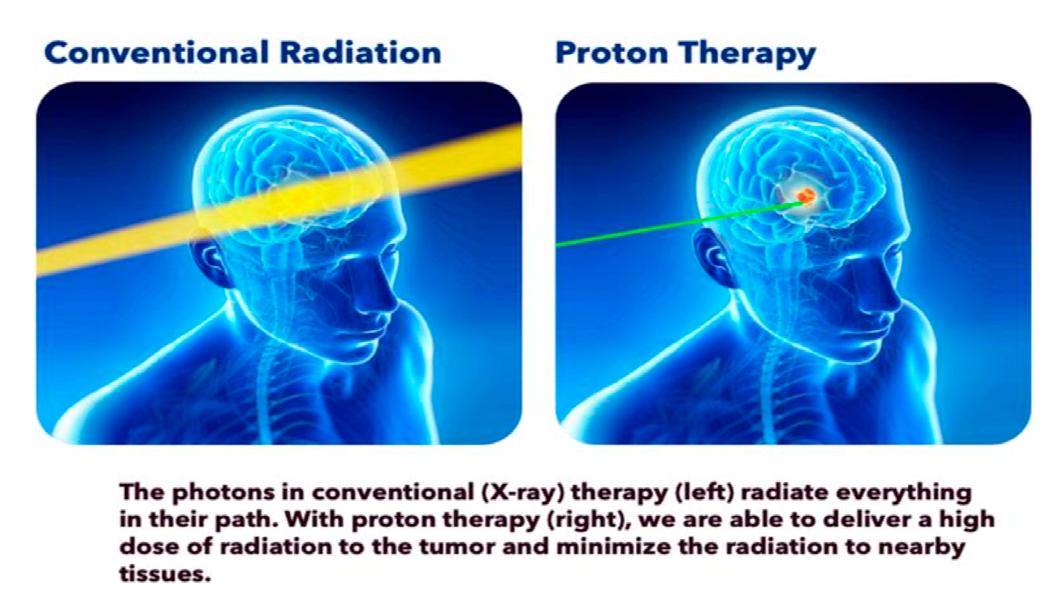

More than 1 in 3 people suffer from cancer in their lives, according to Wroe, Slater and Slater (2012). This is concerning, as it shows how widespread the effects of cancer are. One form of radiation therapy currently being investigated and introduced is proton beam therapy. In short, it is a cancer treatment, which can also treat some noncancerous tumours, that uses high-energy protons, as described by NHS-b (2024). In 1946, the idea that energetic protons might be an effective treatment for cancer was put forward by Robert Wilson in a seminal paper, in which he proposed using accelerator-produced beams of protons to treat deep-seated tumours in humans. Newhauser and Zhang-b (2015) also say that the first human was treated with proton beams from a particle accelerator in 1954 at the Lawrence Berkeley Laboratory. This was revolutionary, as it showed that using protons, as opposed to photons (conventional radiation therapy), is both credible and effective.

However, due to the expense of proton beam therapy, with the initial investment in a facility costing between US $25 million and $200 million in construction and equipment, it is much less practical and cost-effective at present. This means there is much headway to be made before proton therapy becomes a viable, affordable cancer treatment for patients internationally. In spite of this, the world’s first hospital-based proton therapy centre opened in 1989 in the UK. There is evidence to suggest that proton therapy may lead to fewer side effects than photon therapy, since it is easier to control where proton beams deliver their energy. Although research is still under way, both the death of the cancer cells and damage to healthy tissue surrounding the tumour from the proton beam could result in side effects.

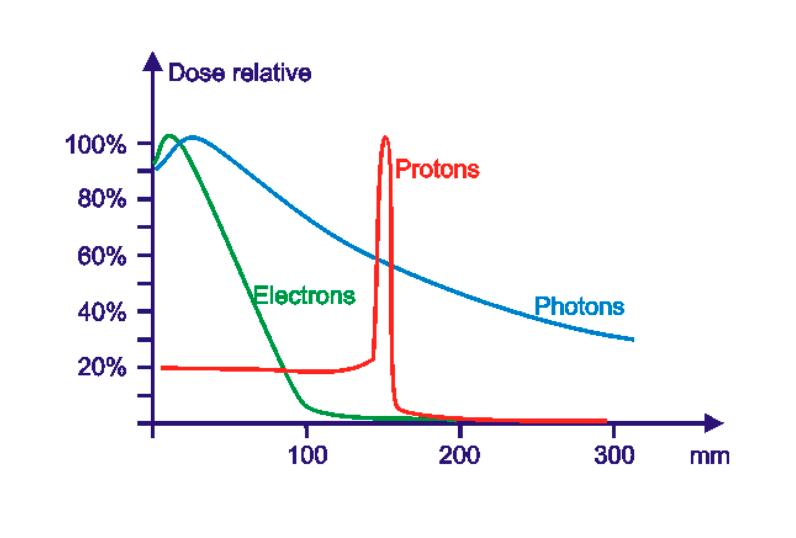

NHS-a (2024) goes into detail about how the process works. It describes how protons, positively charged particles originating from the nucleus of an atom, release their maximum radiation dose after reaching a particular depth in the patient’s body, at which point the radiation stops completely; this is one difference from photon therapy. The radiation dose peak (where protons deposit most of their energy) is known as the Bragg peak, shown by the red spike in the relative dose diagram. This peak is created by the steep increase in stopping power towards the end of the protons’ track.

Thus, as each proton slows down, the quantity of energy it deposits per unit length travelled rises steeply. The depth of the peak is determined by the energy of the proton beam: a higher energy gives a deeper Bragg peak. This depth is changed by using bending magnets in the device. This is unlike X-rays, which deliver their maximum dose either at or just below the surface of a patient. Wroe, Slater JD and Slater JM (2012) explain that, for X-rays, it is only through the superposition of multiple treatment fields that an elevated radiation dose to the target volume is achievable.
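The link between beam energy and Bragg peak depth can be estimated with the Bragg-Kleeman rule, an empirical power law that is not taken from the sources cited in this article. The sketch below assumes the approximate constants commonly quoted for protons in water (range in cm ≈ 0.0022 × E^1.77, with E in MeV); treat both the constants and the function names as assumptions for illustration.

```python
# Bragg-Kleeman rule: range = ALPHA * energy ** P.
# Approximate constants for protons in water (assumed, not from the article).
ALPHA_CM = 0.0022  # cm per MeV**P
P = 1.77


def proton_range_cm(energy_mev):
    """Approximate depth of the Bragg peak in water for a given beam energy."""
    if energy_mev <= 0:
        raise ValueError("energy must be positive")
    return ALPHA_CM * energy_mev ** P


def energy_for_depth_mev(depth_cm):
    """Invert the rule: the beam energy needed to place the peak at a given depth."""
    if depth_cm <= 0:
        raise ValueError("depth must be positive")
    return (depth_cm / ALPHA_CM) ** (1 / P)


for energy in (70, 150, 230):
    print(energy, "MeV ->", round(proton_range_cm(energy), 1), "cm")
```

Under this rule, a beam of around 100 MeV stops roughly 7 to 8 cm into water, and raising the energy pushes the peak deeper, which matches the behaviour described above.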

There are passive and active techniques by which a uniform dose can be delivered to the treatment volume. Passive techniques, which are used more often in a clinical setting, spread the proton beam laterally using a combination of gold or lead paired with Lexan foils. Using a combination of two different materials (one of low and the other of high atomic number) produces a flat beam of constant flux and constant range. For a homogeneous dose in a tumour, many fine proton beams with differing energies and positions are combined in rapid succession in a process called pencil beam scanning.

The beams are modulated in depth using a rotating plastic wheel, which allows multiple Bragg peaks of varied energy and intensity to be superposed to form a region of uniform high dose called the spread-out Bragg peak (SOBP). The beam is collimated by brass or Cerrobend apertures, and its penetration depth is varied by means of a wax bolus. Active techniques employ a magnetically guided proton pencil beam in combination with dynamic changes of beam energy and intensity during treatment. An advantage of this active system is that it minimises the interaction between the primary beam and the beam-modifying devices, and in turn the production of secondary particles. Additionally, this method has the potential to treat complex tumour volumes with greater precision and improved normal tissue sparing. While this is a great advance in technology, the dosimetry and beam delivery are also more complex and problematic; errors in this regard can lead to high- and low-dose regions and incomplete treatment of the tumour volume, and hence an unsuccessful treatment. Another complicating factor is organ motion during treatment, which must be taken into account for effective and accurate eradication of a tumour.

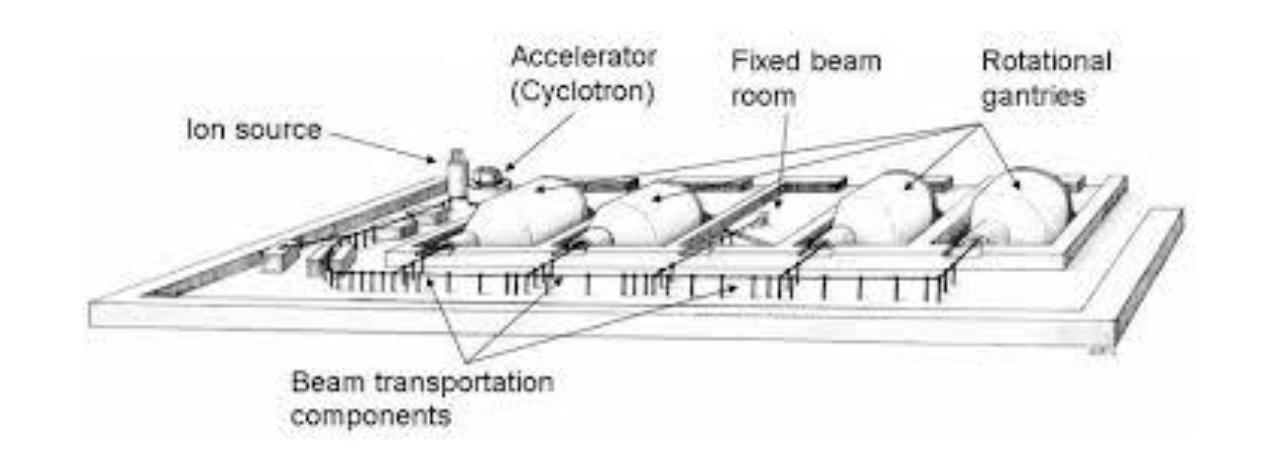

According to the World Health Organisation (2021), there are currently 79 operational proton therapy centres in the world, concentrated in China, Japan, the US and Europe. To perform this therapy, a large cyclotron is required to isolate and accelerate the protons into a beam. Due to their size (1000 tonnes) and capital cost (roughly $100-150 million), there is a lack of facilities globally. At MIT’s Plasma Science and Fusion Center, there have been advances in superconducting coils for magnetic fusion experiments which may allow for a more economical “compact synchrocyclotron” (Holland, 2011) to make proton therapy more widespread. Newhauser and Zhang-a (2015) support this, suggesting that the slow adoption is due to both the technical difficulties and the lack of evidence of cost-competitiveness. In low- and middle-income countries (LMICs), the modalities are currently not cost-effective, says the World Health Organisation (2021).

NHS University College London Hospitals (2024) explains the advantages of proton therapy. The process causes changes in both normal and cancer cells, but cancer cells, being more sensitive to radiation than normal cells, are killed more frequently. Due to the way protons behave, less normal tissue is irradiated. To produce the optimal treatment for each patient, a CT scan and sometimes an MRI scan are used to personalise treatment. The therapy itself is then delivered on machines called gantries. Similarly, Newhauser and Zhang-a (2015) state that the central rationale for proton therapy is its superior spatial dose distribution in the patient. In contrast to photons, protons are effective at providing a highly conformal and uniform dose to a tumour, thereby sparing more normal tissue. The paper describes a first-order approximation in which protons continuously lose kinetic energy via frequent inelastic Coulombic interactions with atomic electrons. Given that a proton’s rest mass is about 1836 times greater than that of an electron, most protons travel in an almost straight line. However, when a proton passes close to an atomic nucleus, it experiences a repulsive elastic Coulombic interaction which, due to the large mass of the nucleus, deflects the proton from its original straight-line trajectory. Non-elastic nuclear reactions between protons and the atomic nucleus are less frequent but have a more profound effect. In nuclear reactions, the projectile proton enters the nucleus, which might emit a proton, deuteron, triton or heavier ion, or one or more neutrons.

In conclusion, proton therapy can be extremely advantageous in cancer treatment, thanks to its immense precision and efficiency. As seen in the diagram above, it is much safer than conventional X-ray therapy, which irradiates the whole volume along the path of the beam. This minimisation of radiation means less damage to normal working cells in the organs. As cancer cells are killed, the patient will feel side effects, extreme fatigue amongst others. With a thinner beam which stops at the depth of the tumour, destruction of healthy cells is decreased, which improves the wellbeing of patients and makes treatment less unpleasant. The Bragg peak is evidence of the greater effectiveness of protons compared to photons, as they deposit their energy at a concentrated point to combat the cancer quickly. With all this in mind, there are many strides still to be made in proton therapy to make it cheaper and more widely available to patients across the world in the coming years.

Holland, A. (2011). Technology from Fusion Research: Benefits Today. American Security Project. Available at: http://www.jstor.org/stable/resrep06053 (Accessed: 25 June 2024)

Newhauser and Zhang-a (2015) The physics of proton therapy. Available at: https://iopscience.iop.org/article/10.1088/0031-9155/60/8/R155/meta (Accessed: 25 June 2024)

Newhauser and Zhang-b (2015) The physics of proton therapy. Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4407514/ (Accessed: 25 June 2024)

NHS-a (2024) How does proton therapy work? Available at: https://www.uzleuven.be/en/proton-centre/proton-therapy/effect (Accessed: 25 June 2024)

NHS-b (2024) Proton Beam Therapy. Available at: https://www.england.nhs.uk/commissioning/spec-services/highly-spec-services/pbt/ (Accessed: 25 June 2024)

NHS University College London Hospitals (2024) Proton beam therapy explained. Available at: https://www.uclh.nhs.uk/our-services/find-service/cancer-services/proton-beam-therapy-pbt/pbt-explained (Accessed: 25 June 2024)

World Health Organisation. (2021). Emerging technology and techniques in radiotherapy. In Technical specifications of radiotherapy equipment for cancer treatment (pp. 75–78).

Wroe, AJ., Slater, JD., Slater, JM. (2012). The physics of protons for patient treatment. Available at: https://three.jsc.nasa.gov/articles/Physics_of_Protons.pdf (Accessed: 25 June 2024)

Matthew Johnsen

An electron is negligibly small; at least that’s what we are told. But, you might ask, how small is this tiny particle? Can we quantify it? These were questions asked before J. J. Thomson, a British physicist, discovered the charge-to-mass ratio of an electron. Later, in 1909, Millikan, an American physicist, calculated the charge on an electron, which made it possible to work out the mass of an electron. In this article, I will explain the fine beam tube experiment used to calculate the charge-to-mass ratio of an electron. Then, I will explain the oil drop experiment, done to calculate the charge on an electron.

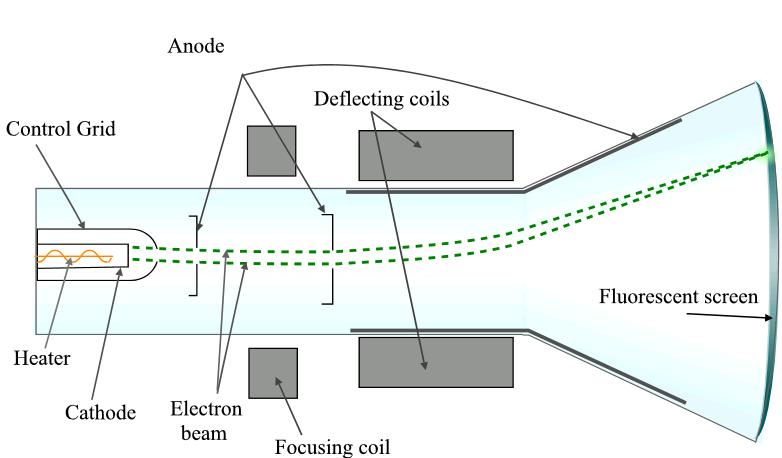

The fine beam tube experiment is a slight adaptation of the original cathode ray experiment done by J. J. Thomson: it uses a Teltron tube (also called a fine beam tube), whereas the original experiment featured a cathode ray tube. However, the maths behind both experiments is the same.

Consider two bar magnets a set distance apart and a current-carrying wire of length L in between them (the magnets have opposite poles facing each other, i.e. one presents a north pole and the other a south pole).

In GCSE physics you will learn that the current in the wire creates a magnetic field surrounding the wire, consisting of concentric circles. Its direction is given by the right-hand grip rule (in which the thumb represents the current and the fingers represent the surrounding magnetic field). The interaction of the two magnetic fields results in a force on the wire, and the direction of the force depends on the direction of the current and the orientation of the bar magnets. When the current is perpendicular to the magnetic field, the force is perpendicular to both the current and the magnetic field. This is known as Fleming’s left-hand rule.

Now you might ask, well what happens if the angle between the current direction and magnetic field is not 90° (i.e. not perpendicular)?

Let the angle between the magnetic field and the current direction be an arbitrary angle x. Using simple trigonometry, you find that the component of the current I perpendicular to the magnetic field is I sin(x).

Therefore, assuming the magnetic field strength and the length of the wire are constant, the force is affected in the same way (i.e. the force on the wire is multiplied by sin(x)). If x is 90°, the force on the wire is at its maximum (since sin(90°) is 1). When the angle is less than 90°, the force on the wire is smaller, because the component of current perpendicular to the field is smaller.

Therefore we know the angle between the current direction and magnetic field will affect the force. However, other factors affecting the force could be the current (the higher the magnitude of the current, the stronger the magnetic field surrounding the wire, resulting in a larger force on the wire). In addition the length of the wire affects the force because for example increasing the length increases the total number of electrons in the wire thus there are more “magnetic fields” (each electron when moving causes a magnetic field) so the overall magnetic field strength due to the current carrying wire is larger. Finally, increasing the magnetic field strength due to the bar magnets increases the force on the wire.

Therefore writing this as an equation we can say that the force on the current carrying wire, F=BILsin(x) (where B is the magnetic field strength due to bar magnets, I is current, L is length of wire and x is angle between current and magnetic field).
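The equation can be checked with a few numbers. The following Python sketch uses a hypothetical helper, `force_on_wire`, and illustrative values that are not taken from the article:

```python
import math

def force_on_wire(b_tesla, current_amps, length_m, angle_deg=90.0):
    """Force in newtons on a straight current-carrying wire: F = B * I * L * sin(x)."""
    return b_tesla * current_amps * length_m * math.sin(math.radians(angle_deg))

# Illustrative values only: B = 0.2 T, I = 3 A, L = 0.5 m.
perpendicular = force_on_wire(0.2, 3.0, 0.5, 90.0)  # x = 90°, maximum force
slanted = force_on_wire(0.2, 3.0, 0.5, 30.0)        # sin(30°) = 0.5, so half the maximum
parallel = force_on_wire(0.2, 3.0, 0.5, 0.0)        # current along the field, no force

print(perpendicular, slanted, parallel)
```

The force is largest when the current is perpendicular to the field and falls to zero when the current runs along the field.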

For the experiments described later we are assuming x is 90° (i.e. the current is perpendicular to the magnetic field).

Therefore F=BIL. Now consider a charged particle moving along the wire at a speed v. In a time, t, the distance travelled by the charged particle is vt, which we can take to be the length L. Therefore F=BIvt, and since Q=It, the force on the charged particle can be written as F=BQv.

In particular for an electron with a charge, e, F=Bev.

Consider an object moving around a circle (with a radius, r) from points A to B, with the angle between those points being ϕ. When the angle ϕ becomes negligibly small, the arc length (distance between A and B) can be approximated as a straight line. The reason for doing this is to find the instantaneous acceleration at a particular point. Therefore if we let the object move at a constant speed, v, then the distance between A and B can be approximated as vΔt, where Δt is the time for which the object has been moving around the circle at speed v. This can be seen in triangle 1.

If we want to work out the change in velocity, we can draw a vector triangle (seen in triangle 2), in which the angle subtended by the vectors V_a and V_b is ϕ.

To prove this, we know that V_a and V_b are tangents to the circle so are perpendicular to the radii. Since the magnitudes of V_a and V_b are the same, if we add the vector -V_b from the point B, adjoined to the vector V_a, there will be an angle between those two vectors. To calculate that angle, we know that the sum of angles in a quadrilateral is 360°, so the angle between those vectors will be 180°-ϕ (this is because the tangents are at 90° to the radii). However, we know that the vectors -V_a and V_a (seen in the picture) are parallel, so the angle 180°-ϕ and the angle between the vectors -V_a and V_b form a pair of supplementary angles. Since these angles must sum to 180°, the angle subtended by V_b and -V_a must be ϕ.

The magnitudes of the velocities V_a and V_b are the same (the magnitude of velocity is defined as the speed) because we are assuming that the object moves at a constant speed around the circle. This means that triangle 2 is an isosceles triangle. Triangle 1 is also an isosceles triangle, with the same angle ϕ subtended by the two radii. Therefore, we can conclude that the triangles are similar, so corresponding sides are scaled by the same factor.
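The scaling argument can be written out explicitly. This is a sketch under the assumptions already made (constant speed v, radius r, very small angle ϕ); the symbols Δv, for the magnitude of the change in velocity, and a, for the acceleration, are introduced here for illustration.

```latex
\begin{aligned}
\frac{\Delta v}{v} &= \frac{v\,\Delta t}{r}
  && \text{(corresponding sides of the similar triangles 2 and 1)} \\
\frac{\Delta v}{\Delta t} &= \frac{v^{2}}{r} \\
a &= \frac{v^{2}}{r}
  && \text{(in the limit } \Delta t \to 0\text{)}
\end{aligned}
```

Multiplying by the mass m of the object gives the centripetal force, F = mv²/r, which points towards the centre of the circle.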

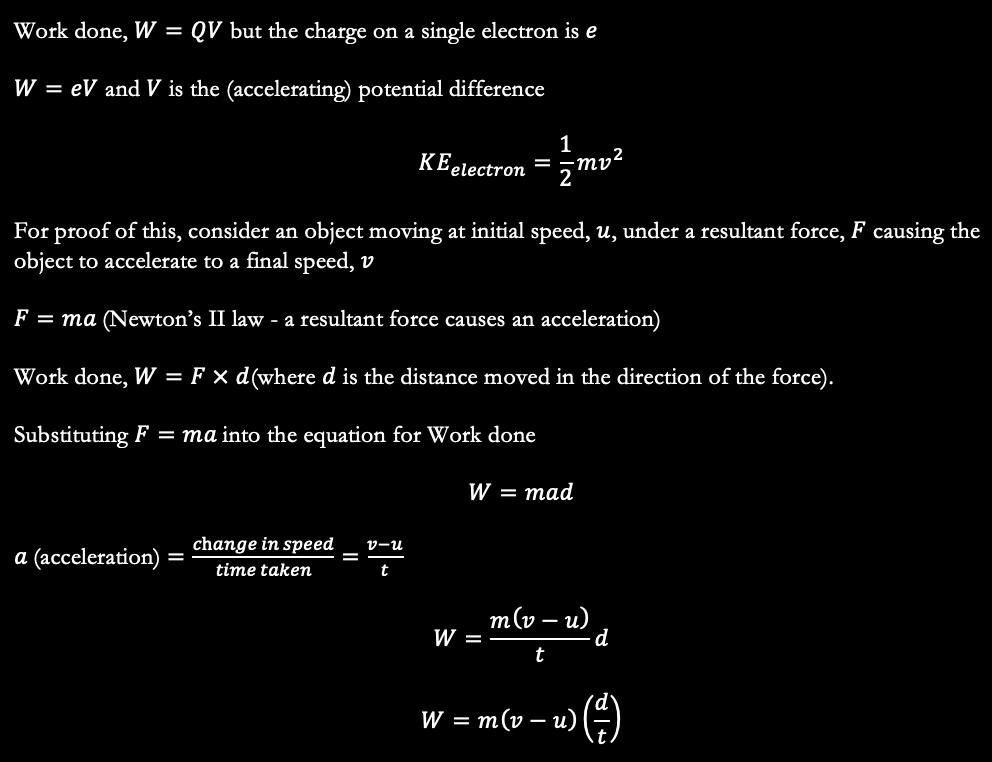

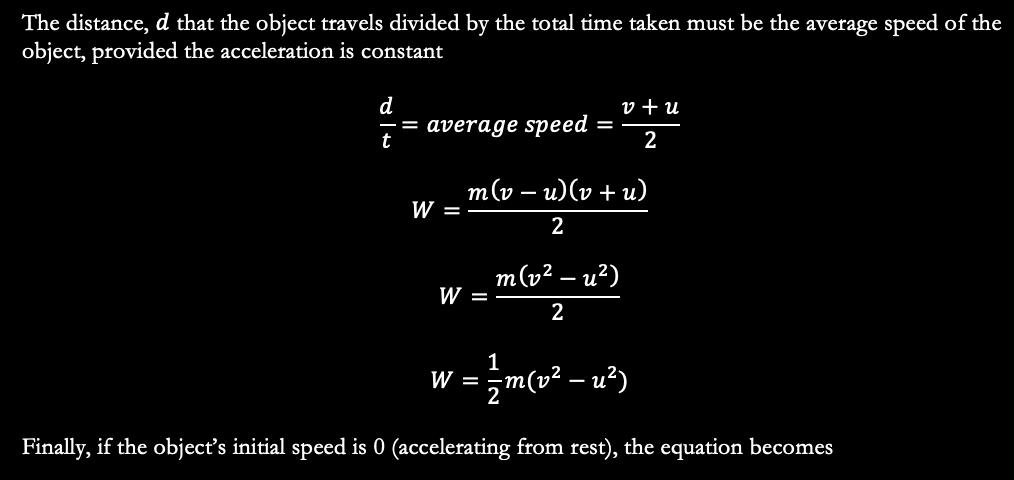

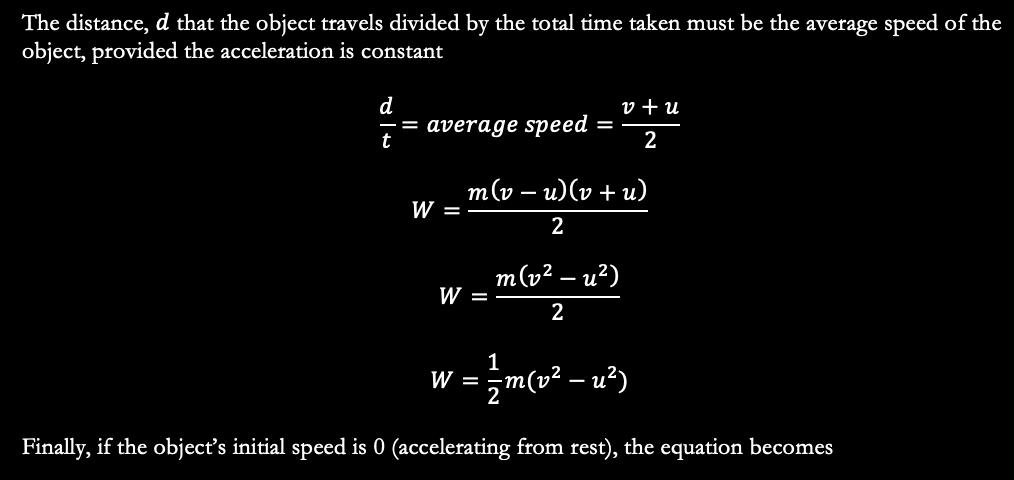

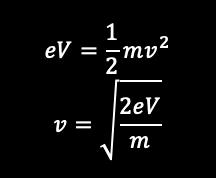

Now consider a heated filament which emits electrons through thermionic emission (emission of electrons through heat). Since electrons are negatively charged, there is an electrostatic force of repulsion from the cathode (negatively charged) as well as an electrostatic force of attraction from the anode. This resultant force, produced by the accelerating potential difference, causes the electron to accelerate. Potential difference is defined as the energy gained or lost per unit charge by charge carriers between two points. Thus, increasing the potential difference between the cathode and anode increases the work done (by the battery) on the electron (since a higher potential difference means that more energy is transferred to the electron). As the electron accelerates, it gains kinetic energy. By the conservation of energy (i.e. energy cannot be created or destroyed, so the total energy in a system must remain constant), the work done on the electron must equal the increase in kinetic energy of the electron.

Now consider an electron gun under an accelerating potential difference, releasing electrons which move at a constant speed in a circular path in a uniform magnetic field. The reason why the electrons move in a circular path is similar to the reason the Earth orbits the Sun in a circular path (it’s slightly elliptical but we’re going to assume it is circular). There is a gravitational force on the Earth due to the Sun’s gravitational field, which is perpendicular to the Earth’s direction of motion. This gravitational force provides the centripetal force, the force that acts perpendicular to the velocity.

In the diagram shown, there is a power supply providing a potential difference, V. Connected to the power supply is an electron gun, which releases electrons into the glass tube. The electrons collide with the gas particles in the tube, causing trace amounts of light to be emitted, so we can determine the position and path of the electrons. Due to the (accelerating) potential difference, the electrons gain kinetic energy. The direction of electron flow is upwards, and since conventional current is in the opposite direction to electron flow, the current direction is down. By Fleming’s Left Hand rule, given that the magnetic field direction is into the page and the current is down, we can deduce that the force on the electron is perpendicular to the velocity, acting to the right.

The velocity of the electron can be calculated by equating the work done on the electron to the gain in kinetic energy.
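Written out, this energy balance gives the speed directly. This is a sketch, assuming the electron starts from rest and writing m for the mass of the electron (a symbol not defined in the text above):

```latex
\begin{aligned}
eV &= \tfrac{1}{2} m v^{2} \\
v &= \sqrt{\frac{2eV}{m}}
\end{aligned}
```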

The fact that the force acts perpendicular to the velocity should remind us of the centripetal force equation. Here the centripetal force is provided by the magnetic field, so the magnitudes of the magnetic force and the centripetal force should be the same.
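Equating the two forces, Bev = mv²/r, and substituting the speed from the energy balance eV = ½mv² gives e/m = 2V/(B²r²). The short Python sketch below checks this numerically. The function names and the example values of V and B are illustrative assumptions, and the constants are the standard values of the electron's charge and mass.

```python
import math

E_CHARGE = 1.602e-19  # electron charge in coulombs (standard value)
E_MASS = 9.109e-31    # electron mass in kilograms (standard value)

def electron_speed(accel_voltage):
    """Speed from eV = 1/2 m v^2, assuming the electron starts from rest."""
    return math.sqrt(2 * E_CHARGE * accel_voltage / E_MASS)

def orbit_radius(speed, b_tesla):
    """Radius from Bev = m v^2 / r, i.e. r = m v / (B e)."""
    return E_MASS * speed / (b_tesla * E_CHARGE)

def specific_charge(accel_voltage, b_tesla, radius_m):
    """e/m from the measurable quantities: e/m = 2V / (B^2 r^2)."""
    return 2 * accel_voltage / (b_tesla ** 2 * radius_m ** 2)

# Hypothetical settings: 200 V accelerating p.d. and a 1 mT magnetic field.
voltage, field = 200.0, 1.0e-3
radius = orbit_radius(electron_speed(voltage), field)

# Working backwards from V, B and r should recover e/m.
recovered = specific_charge(voltage, field, radius)
expected = E_CHARGE / E_MASS
print(f"radius = {radius:.4f} m, e/m = {recovered:.3e} C/kg")
```

In the real experiment the radius is measured from the glowing path rather than calculated, and e/m is the unknown.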

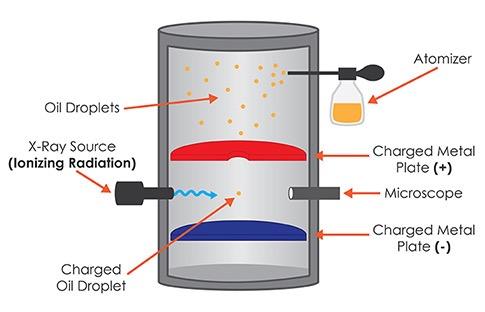

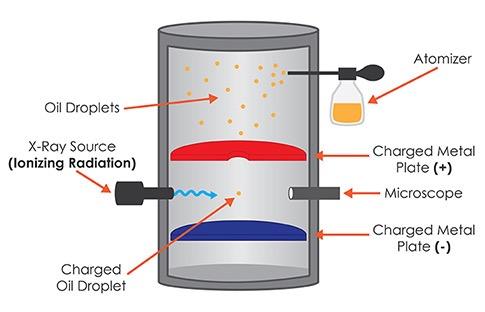

In this diagram, an atomiser is designed to release very small oil droplets which, when they pass through the ionising radiation, become negatively charged. This is because the x-rays ionise the air (dislodge electrons off the air particles), causing the electrons to attach to the oil droplets, making the oil droplets negatively charged. Connected to the charged plates is a power supply. There is a gravitational force acting downwards on the oil droplet, and also a force upwards on the oil droplet due to both the repulsion from the negatively charged plate and the attraction towards the positively charged plate.

Therefore E=V/d
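As a rough numerical illustration of how E=V/d is used: if the voltage is adjusted until a droplet hangs stationary, the upward electric force QE balances the weight mg, so Q = mgd/V. This balance condition, the function names and all the numbers below are assumptions made for the sketch, not values from the experiment.

```python
G = 9.81  # gravitational field strength in N/kg

def field_strength(voltage, plate_separation_m):
    """Uniform field between parallel plates: E = V / d."""
    return voltage / plate_separation_m

def droplet_charge(mass_kg, voltage, plate_separation_m):
    """Charge on a stationary droplet, from Q * E = m * g."""
    return mass_kg * G / field_strength(voltage, plate_separation_m)

# Hypothetical droplet: mass 4.9e-15 kg, held still by 500 V across plates 5 mm apart.
charge = droplet_charge(mass_kg=4.9e-15, voltage=500.0, plate_separation_m=5.0e-3)
multiples_of_e = charge / 1.602e-19  # compare with the electron charge
print(charge, multiples_of_e)
```

With these made-up numbers the charge comes out at roughly three times the electron charge.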

Knott T. 2005, Cathode ray Tube, Available at: https://commons.wikimedia.org/wiki/File:Cathode_ray_Tube.PNG, (Accessed: 4th August)

Science Museum Group. Teltron fine beam cathode ray deflection tube. 1997-616 Science Museum Group Collection Online. Available at: https://collection.sciencemuseumgroup.org.uk/objects/co424969/teltron-fine-beam-cathode-ray-deflection-tube-cathode-raytube, (Accessed: 4th August)

Thornes N. (2009), AQA Physics A Turning points in Physics Chapter 1 The discovery of the electron, Available at: https://pmt.physicsandmathstutor.com/download/Physics/A-level/Notes/AQA-Old/Unit-5/Textbooks-for-Options/D1%20Discovery%20of%20the%20Electron.pdf, (Accessed: 4th August 2024)

Physics Ninja, (2019), Two Simple Derivations of Centripetal Acceleration, Available at: https://www.youtube.com/watch?v=2gNZHt69Fzg, (accessed: 3rd August 2024)

Mr Naga Physics, (2021), 14.03 Finding the specific charge on an electron, Available at: https://www.youtube.com/watch?v=_4SmdhjXs9U, (accessed: 29th June 2024)

Macedo G. B. (2023), Reproduction of Millikan’s experiment: Determination of the Electron’s Elementary Charge, Available at: https://medium.com/@gabriel.macedo. brother/reproduction-of-millikans-experiment-determination-of-the-electron-s-elementary-charge-64305ec86e1d, (accessed: 15th July 2024)

ZPhysics, 2021, A Level Physics: Uniform Electric Fields, Derivation of E=V/d, Available at: https://www.youtube.com/watch?v=tbNqYt--bD8, (Accessed: 29th June 2024)

Physics Online, 2021, Stokes’ Law for Laminar Flow – A Level Physics, Available at: https://www.youtube.com/watch?v=eD9H-2CQ2i0, (Accessed: 4th August 2024)

Finn Evans

The demand for powerful encryption algorithms was booming after the Second World War and during the Cold War. With the rapid development of digital computing, which had been invented and successfully implemented for the purpose of breaking the Enigma Cypher, military organisations needed a new way to obfuscate their communications much more robust than previous methods, and mathematicians had to rise to the challenge.

To transfer information that a malicious third party cannot understand requires the information to be obfuscated in a way that only the intended recipient knows how to reverse. This process is known as encryption, and has been used for millennia. One of the earliest known encryption algorithms is the Caesar Cypher, used by Julius Caesar to communicate secretly with family and other politicians. In order to encrypt using the Caesar Cypher, one takes every letter of one’s message and shifts it three places in the alphabet. For example, A is converted into D, B into E, and so on (Suetonius, Vita Divi Julii). At the end of the alphabet, the letters loop back to the start, so Z becomes C, for example. This produces a message which is incomprehensible to anyone who is not already aware of the methods used to encrypt it. However, the recipient, who has already been made aware of the encryption method, can now reverse it, turning D back into A, E to B, et cetera, so that they are left with the original message.
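The shifting procedure is short enough to sketch in Python. The function name `caesar_shift` and the sample message are illustrative choices, not anything from the historical sources:

```python
import string

ALPHABET = string.ascii_uppercase  # "ABC...XYZ"

def caesar_shift(message, shift=3):
    """Shift each letter `shift` places along the alphabet, looping at the end."""
    shifted = []
    for ch in message.upper():
        if ch in ALPHABET:
            shifted.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            shifted.append(ch)  # leave spaces and punctuation unchanged
    return "".join(shifted)

ciphertext = caesar_shift("VENI VIDI VICI")     # encrypt: three places forward
plaintext = caesar_shift(ciphertext, shift=-3)  # decrypt: three places back
print(ciphertext)  # YHQL YLGL YLFL
print(plaintext)   # VENI VIDI VICI
```

Decryption is the same operation with the shift reversed, which is why anyone who knows the trick can read the message.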

While this cypher was innovative at the time, it has some major drawbacks. Most importantly, it can be easily reversed by anyone who knows the trick, meaning that if anyone revealed his secret algorithm, Caesar’s messages could have been easily intercepted and understood. Later adaptations of the Caesar Cypher changed the number of positions through the alphabet that letters were shifted. For example, Augustus Caesar only shifted letters by one position.

These adaptations introduce a concept now known as a key. A key is a variable which is shared secretly between the recipient and sender of the message, affecting the application of the encryption algorithm. For example, in a Caesar Cypher, the key is the number of positions through the alphabet which letters are shifted. Julius Caesar used a key of three, while Augustus used a key of one (Suetonius, Svetoni Tranqvili Vita Divi Avgvsti). The advantage of a key is that the encryption algorithm can be public information, and only a small datum needs to be secretly shared before secret messages can be. While the Caesar Cypher was sufficient for Roman leaders, it is not a secure method of communication in the modern day, due to the limited availability of keys. As the alphabet loops around after 26 letters, there are only 25 valid options for a key in the Caesar Cypher. This means it is very easy to simply try every possible key until a comprehensible message returns. As well as this, cryptanalysis can be used to attack more advanced cyphers, by analysing the structure and content of the cyphertext to figure out what the message must have been without needing to try every option. Therefore, modern cyphers use much more complicated algorithms with many more possible keys, to stop people from being able to try every possible key in a reasonable amount of time – which is known as a ‘brute force attack’ (kapersky, 2020). Despite these efforts, there remained a glaring vulnerability with every encryption algorithm. While they had become so

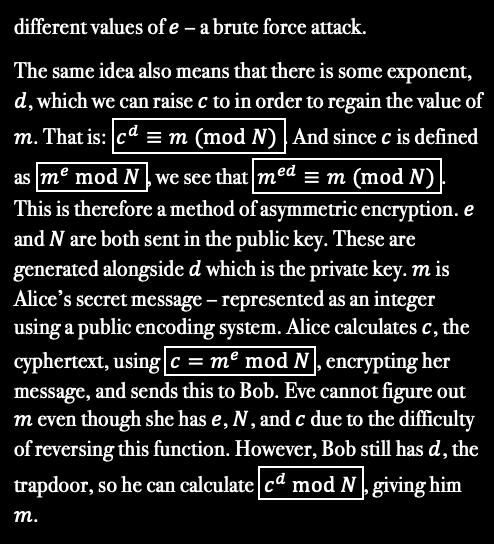

secure that without access to a key it was nearly impossible to reverse them, it is still difficult to secretly share a key. It cannot be simply sent to the recipient, as this has as much risk of interception as the message. This means that without some secure way to transfer the key, such as meeting physically, there was no risk-free method of encrypted communication. A clever mathematical technique called the Diffie-Hellman key exchange was invented to privately share keys (Diffie & Hellman, 1976), although this too had limitations, leading to the invention of asymmetric encryption. Asymmetric encryption avoids the issues with sharing a key by instead creating two distinct but related keys, known as the ‘public key’ and ‘private key’, whereas the traditional method, known as symmetric encryption, only uses one for both encryption and decryption. Despite the names, it is intuitive to imagine the public key instead as a padlock, and the private key as the key to this lock Now we imagine a physical analogy for a private communication between two individuals, from Alice to Bob.

Alice begins by sending a public message to Bob, telling him that she would like to send him a private message. This public message is noticed by an imaginary malicious third party, Eve, who decides to listen to their communication. Bob responds by sending Alice a padlock which both Eve and Alice receive. However, Bob still has the key and keeps it safely. Now Alice has the padlock, so she uses it to lock her message in a box and sends it to Bob. Even if Eve were to intercept the box, Eve only has access to the padlock and the locked box, but not the key. However, when Bob receives the box, he still has private access to the original key, allowing Bob to unlock Alice’s message. The only other stipulation for this to work is that Eve cannot reverse-engineer the key from the lock.

Therefore, to create a mathematical method for asymmetric encryption, we need a trapdoor function.

This is a function which is easy to compute, but very difficult to reverse, unless you have a special piece of information known as the trapdoor. The trapdoor function is the public key: easily applied by Alice but irreversible by Eve. However, the private key, owned by Bob, is the trapdoor, so Bob can easily reverse the function.

This can be done using modular arithmetic, a system based on the modulo operation, which returns the remainder after one number is divided by another. It is written (a mod b=c). For example, (7 mod 3=1) as seven divided by three is two, remainder one.
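In Python the modulo operation is written with the `%` operator. A brief illustration using the example above (the variable names are arbitrary):

```python
remainder = 7 % 3              # seven divided by three is two, remainder one
quotient, rest = divmod(7, 3)  # the quotient and the remainder together

# The wrap-around in the Caesar Cypher is modular arithmetic too:
# Z is letter 25 (counting A as 0), and shifting it by 3 wraps round to 2, which is C.
wrapped = (25 + 3) % 26

print(remainder, quotient, rest, wrapped)  # 1 2 1 2
```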

Diffie, B. W., & Hellman, M. E. (1976, November 6). New Directions in Cryptography. Transactions on Information Theory, 22, 644-654. Retrieved November 3, 2023, from https://ee.stanford.edu/%7Ehellman/publications/24.pdf

Euler, L. (1741). Theorematum quorundam ad numeros primos spectantium demonstratio. Retrieved November 4, 2023, from https://books.google.co.uk/books?id=-ssVAAAAYAAJ&pg=RA1PA141&redir_esc=y#v=onepage&q&f=false

Euler’s Phi Function and the Chinese Remainder Theorem. (n.d.). Retrieved November 4, 2023, from https://math.oxford.emory.edu/site/math125/chineseRemainderTheorem/

Kaspersky. (2020, October 20). Brute Force Attack: Definition and Examples. Retrieved November 3, 2023, from kaspersky.com: https://www.kaspersky.com/resource-center/definitions/brute-forceattack

Khan Academy. (2013). Modular multiplication. Retrieved November 3, 2023, from khanacademy.org: https://www.khanacademy.org/computing/computer-science/cryptography/modarithmetic/a/modular-multiplication

Suetonius. (n.d.). Svetoni Tranqvili Vita Divi

Serena Pun

The gut microbiome consists of millions of different bacteria that impact our body, each in different ways. Some of those bacteria impact our brain chemistry in countless different ways, thus affecting our mood and mental health. Because of this, antibiotics and probiotics could be used to manipulate the microbiome and become a possible substitute for antidepressants in the future, helping to treat mental health issues. This would provide more options for treatment, allowing patients to choose the method which best fits their situation.

Our mental health can be impacted by the bacteria in the gut microbiome, which affect the neural circuits to the brain that greatly influence stress and anxiety. According to research at the Washington University School of Medicine in St. Louis, one theory is that cytokines (proteins released by cells for signalling) and components of bacterial cell walls stimulate cells in the brain such as immune cells, e.g. microglia.

This then leads to the production of more cytokines and some nitrous oxides which are toxic to neurons. This releases severe neuroinflammatory markers which lead to neuron death and ‘cause brain damage and exacerbate neurodegeneration’ (Bhandari, 2023). Another theory, which is less significant due to the lack of evidence, is that the bacteria themselves escape from the gut, a condition called ‘leaky gut’ (News Feature: Microbes on the mind), and affect the neurons to the brain. This impact on the neurons affects the release of neural activating metabolites such as GABA, serotonin or dopamine, which affect people’s mood.

An experiment done by Nobuyuki Sudo at Kyushu University in Japan showed strong evidence that bacteria in the gut microbiome affect stress and anxiety levels. The experiment had two groups: mice with gut bacteria and mice without gut bacteria. The experiment showed that the ‘mice produced and raised without gut bacteria are more sensitive to stress’ (News Feature: Microbes on the mind).

After putting the mice in restraints, they found that the gut bacteria-free mice produced twice as much of the stress hormones adrenocorticotrophin and corticosterone as the mice with gut bacteria. This suggests that the biochemistry of the brain changes with changes in the microbiome of the gut.

Furthermore, a recent experiment done at McMaster University has more promising data and shows that adding bacteria can reduce stress levels in certain mice, similar to the possible probiotic treatment for mental health in humans, in which we add bacteria to our gut. In the experiment the scientists transferred bacteria from NIH Swiss mice, a less timid strain, into BALB/c mice, a more timid strain, and found that the BALB/c mice with the injected NIH Swiss bacteria were very quick to step off a platform, showing signs of reduced stress and anxiety. However, the NIH Swiss mice that were injected with the BALB/c bacteria took 3 times longer before stepping off a platform, showing signs of increased stress and anxiety. This experiment demonstrates that the microbiome, altered by adding bacteria, affects the behaviour of living organisms to induce more or less stress.

This clear effect of the gut microbiome on the brain suggests that the manipulation of the microbiome through probiotics and antibiotics could impact mental health.

Antibiotics could create changes in the brain chemistry as they help eliminate bacteria that could have negative impacts on mental health. Antibiotics work by targeting the cell walls and membranes that surround the bacterial cells, and the machineries that make nucleic acids and proteins. This kills or prevents the growth of the bacteria in the body and ‘prevents the reproduction of bacteria’ (Society, no date).

On the other hand, probiotics could be used to introduce bacteria that have been shown to reduce levels of stress. Probiotics work by adding helpful bacteria to the body to help treat dysbiosis, which is the imbalance or deficit of beneficial microbes. Probiotic pills contain ‘1 to 10 billion’ (Probiotics, no date) organisms per pill, and once the pill reaches the gut the bacteria stick to the lining of the gut.

So, we could use probiotics to introduce bacteria that have been proven to decrease stress levels into our gut.

These two treatments can work hand in hand: probiotics add bacteria that reduce stress and anxiety levels in humans, while antibiotics eliminate stress-causing bacteria. The antibiotics could kill the unwanted bacteria that are damaging the nervous system that is linked to the brain. For example, a study found that in a stressed person ‘the abundance of prevotella increased’ (Xiong et al., 2023). This suggests that the bacterium Prevotella can increase stress levels. An antibiotic used to kill it is meropenem. In the future, this could be a possible antibiotic used to help treat patients struggling with their mental health.

Additionally, Bacteroides have also been proven to increase stress levels, thus the antibiotic metronidazole, which kills Bacteroides, could also be a substitute for antidepressants. While the antibiotics kill the stress-enhancing bacteria, the probiotics can add bacteria that reduce stress and anxiety. For example, ‘lactobacillus and bifidobacterium species were found to be capable of largely reducing depression’ (Kumar et al., 2023). Lactobacillus is a common bacterium found in yoghurts and available in pill form or as a drink. In a recent study, researchers had ‘55 healthy volunteers drink a probiotic mix of lactobacillus helveticus’, after which the volunteers showed ‘greater reductions in depression, anger and hostility’ (News Feature: Microbes on the mind). This study also confirms that the change in the microbiome not only affects mice but humans as well.

For bifidobacterium, there are also pill and powder forms that are extremely accessible on the market. In the future, these probiotics could be used to add bacteria that decrease stress levels significantly, and could be taken by patients struggling with mental health.

Antibiotics and probiotics have a good chance of becoming a possible substitute for antidepressants in the future. Both have been shown to produce significant changes in mood and mental illness in organisms, which further demonstrates the effect of antibiotics and probiotics on the brain chemistry of an organism.

There are several ways that antibiotics and probiotics could be a more tempting substitute than antidepressants. Antibiotics and probiotics are non-addictive, contrary to antidepressants which can cause a mental and physical reliance on the pills as ‘some people suffer from severe withdrawal symptoms that last for months or more’ (Stopping or coming off antidepressants, 2021) such as restlessness.

Furthermore, ‘antibiotics start working almost immediately’ (Snyder, no date). This ensures a quicker recovery for people’s mental health and could prevent desperate patients from struggling any longer. However, ‘antidepressants take one to two weeks to start working’ (Dosage - Antidepressants, 2021).

Antibiotics are often taken in a two-week course and do not have to be taken long term. The course for probiotics is more lenient: they can be taken for weeks to a month, so there is more freedom for the patients. However, antidepressants are taken for ‘at least 6 months’ (Dosage - Antidepressants, 2021), a much longer time than antibiotics and probiotics, increasing the risk of losing track when taking antidepressants. ‘Taking probiotics is generally safe and well tolerated by pregnant women and their children’ (Obuchowska et al., 2022). Additionally, antibiotics are ‘commonly prescribed during pregnancy’ and ‘some antibiotics are OK to take during pregnancy’ (What antibiotics are safe during pregnancy?, no date). However, ‘there are some risks to taking antidepressants while pregnant or breastfeeding’ which include ‘heart defects, spina bifida, or cleft lip’ (Obuchowska et al., 2022). Antibiotics and probiotics as a substitute for antidepressants would allow pregnant women to be safely treated for their mental health issues, which could worsen during pregnancy.

In conclusion, antibiotics and probiotics could be a substitute for antidepressants with further research into this innovative treatment method. They have proven a significant impact on the mental health of organisms, though research is yet to be done on how to transform this into a synthetic drug to help treat major problems in the population’s mental health.

1- News Feature: Microbes on the mind: Could the gut microbiome have a critical role in brain and behavior? (Accessed: 1 September 2024).

Bhandari, T. (2023) Gut bacteria affect brain health, mouse study shows, Washington University School of Medicine in St. Louis. Available at: https://medicine.wustl.edu/news/gut-bacteria-affect-brain-health-mouse-study-shows/ (Accessed: 2 September 2024).

Dosage - Antidepressants (2021) nhs.uk. Available at: https://www.nhs.uk/mental-health/talking-therapies-medicine-treatments/medicines-and-psychiatry/antidepressants/dosage/ (Accessed: 2 September 2024).

Kumar, A. et al. (2023) ‘Gut Microbiota in Anxiety and Depression: Unveiling the Relationships and Management Options’, Pharmaceuticals, 16(4), p. 565. Available at: https://doi.org/10.3390/ph16040565.

Obuchowska, A. et al. (2022) ‘Effects of Probiotic Supplementation during Pregnancy on the Future Maternal Risk of Metabolic Syndrome’, International Journal of Molecular Sciences, 23(15), p. 8253. Available at: https://doi.org/10.3390/ijms23158253.

Office of Dietary Supplements - Probiotics (no date). Available at: https://ods.od.nih.gov/factsheets/Probiotics-HealthProfessional/ (Accessed: 2 September 2024).

Snyder, Z.S., Graham (no date) Antibiotics treat infections by killing or sterilizing bacteria right after the first dose, Business Insider. Available at: https://www.businessinsider.com/guides/health/treatments/how-long-does-it-take-for-antibiotics-to-work (Accessed: 2 September 2024).

Society, M. (no date) What are antibiotics and how do they work? Available at: https://microbiologysociety.org/membership/membership-resources/outreach-resources/antibiotics-unearthed/antibiotics-and-antibiotic-resistance/what-are-antibiotics-and-how-do-theywork.html (Accessed: 2 September 2024).

Stopping or coming off antidepressants (2021) nhs.uk. Available at: https://www.nhs.uk/mental-health/talking-therapies-medicine-treatments/medicines-and-psychiatry/stopping-or-coming-off-antidepressants/ (Accessed: 2 September 2024).

Xiong, R.-G. et al. (2023) ‘The Role of Gut Microbiota in Anxiety, Depression, and Other Mental Disorders as Well as the Protective Effects of Dietary Components’, Nutrients, 15(14), p. 3258. Available at: https://doi.org/10.3390/nu15143258.

Caden Cheong

In a remarkably short span of time, the realm of artificial intelligence has witnessed an astonishing leap in complexity. I’m sure this has been extremely noticeable, with the advent of tools such as ChatGPT, Midjourney and DALL-E pushing the boundaries of what we previously thought possible with our intelligent machines. In the midst of the panicking artists and waves of news articles, we’re left to wonder what the next step is. We went from machines barely classifying dogs and cats correctly, to GPT-3 writing emotional pieces of literature, in the span of 10 years, due to the rapid advancements in deep learning. Within this evolving landscape, some predict the next horizon for AI might run on an entirely different paradigm; one shaped not only by more complex hardware, but also by computer scientists taking a page out of the book of biology, with the birth of neuroevolution.

The concept of artificial intelligence was first pioneered in the mid-20th century, by figures such as Alan Turing with his seminal paper “Computing Machinery and Intelligence”, and John McCarthy with his work on “Programs with Common Sense”. However, while these ground-breaking theories were being released, another area of computing, evolutionary algorithms, was being developed, which laid the foundation for what would later become neuroevolution. One subset of these algorithms, Genetic Algorithms (GA), was introduced conceptually by John Holland between the 1960s and 70s. He posited that, if evolution can produce organisms well adapted to their environment, you could translate this idea to the world of computing. This culminated in his 1975 book, “Adaptation in Natural and Artificial Systems”, where he published his unprecedented work.

Essentially, genetic algorithms are techniques inspired by the principles of natural selection. Imagine a population of lots of random, potential solutions. The algorithm simulates the evolution of this population, based on their ‘fitness’ or success, utilizing genetic operators like mutation and crossover, ultimately ‘converging’ on the most optimal answer. These nature-inspired problem solvers, because of their adaptability, can be applied to a range of problem-solving tasks, from algorithmic optimisation to pattern recognition, but most importantly for us, machine learning.
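To make the idea concrete, here is a minimal sketch of a genetic algorithm in Python. It is not Holland's original formulation: the toy problem (evolving a list of 0s and 1s towards all 1s), the function names and every parameter value are assumptions chosen for illustration.

```python
import random

def fitness(genome):
    """Toy fitness: the number of 1s in the genome (higher is better)."""
    return sum(genome)

def crossover(parent_a, parent_b, rng):
    """Single-point crossover: start of one parent joined to the end of the other."""
    cut = rng.randrange(1, len(parent_a))
    return parent_a[:cut] + parent_b[cut:]

def mutate(genome, rng, rate=0.02):
    """Flip each bit with a small probability."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]

def evolve(pop_size=30, genome_len=20, generations=40, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    history = []  # best fitness at the start of each generation
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[: pop_size // 2]  # selection: the fitter half breeds
        children = [population[0]]             # keep the current best unchanged
        while len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), rng))
        population = children
    return max(population, key=fitness), history

best, history = evolve()
print(fitness(best), history[0], history[-1])
```

Because the best individual is always carried over unchanged, the best fitness can never get worse from one generation to the next. Selection, crossover and mutation do the rest.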

A core part of how ‘traditional AI’ learns is called gradient descent. You can imagine the way it works as placing a ball randomly on a piece of terrain and calculating where it ends up rolling to. It essentially tweaks a bunch of little values in its system until it ‘converges’ on a local optimum, which is ideally the optimal solution. Do you see any parallels? The issue with gradient descent is that it can converge at points which aren’t the most optimal: imagine the ball rolling into a small ditch which isn’t actually the lowest point in the terrain. It can also be incredibly erratic in a very complex or noisy problem; if the terrain is very bumpy, the ball is going to end up at a different point every time. The traditional solution to this has basically been to run the program trillions of times until the ball eventually lands at the lowest point, which works but, as you can probably guess, brute force isn’t very fast.

This is where genetic algorithms come into play. Imagine that instead of a population of solutions, you had a population of neural networks. You let those with better results cross over, and let their offspring mutate, until the whole population is the ‘best adapted’, in other words, converged on the best solution. This system, as it turns out, is not only faster, but also handles the bumpy terrain much better, making it much more robust. Instead of linearly rolling down a hill, you’re producing a diverse community of organisms, each with unique solutions, which also has the benefit of perhaps introducing solutions that weren’t at all possible with a gradient descent algorithm.
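The ‘small ditch’ problem is easy to reproduce. The sketch below runs plain gradient descent on a made-up one-dimensional terrain, f(x) = x⁴ - 3x² + x, which has a shallow dip on the right and a deeper valley on the left. The function, learning rate and starting points are all illustrative assumptions.

```python
def terrain(x):
    """A bumpy 1D terrain with two dips of different depths."""
    return x ** 4 - 3 * x ** 2 + x

def slope(x):
    """Derivative of the terrain: the direction the ball would roll."""
    return 4 * x ** 3 - 6 * x + 1

def gradient_descent(start, learning_rate=0.01, steps=2000):
    x = start
    for _ in range(steps):
        x -= learning_rate * slope(x)  # take a small step downhill
    return x

right = gradient_descent(start=2.0)   # ball dropped on the right-hand side
left = gradient_descent(start=-2.0)   # ball dropped on the left-hand side

print(f"from the right: x = {right:.3f}, height = {terrain(right):.3f}")
print(f"from the left:  x = {left:.3f}, height = {terrain(left):.3f}")
```

The ball dropped on the right settles in the shallow dip and never finds the deeper valley on the left, so where it ends up depends entirely on where it starts.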

The development doesn’t stop there! This is the endpoint for much of the research from the 20th century, and this basis of designing a neural network and training it through evolution is still used today for anything from teaching a machine to walk to teaching an AI to play Snake. However, a breakthrough was made in 2002 by Kenneth O. Stanley and Risto Miikkulainen, with their revolutionary NEAT (NeuroEvolution of Augmenting Topologies) algorithm. These two researchers thought: why stop at evolving the numbers, a.k.a. weights, in a neural network, when you could evolve the structure of the network itself? Before this point, the structure of a neural network was typically man-made, with a computer scientist handcrafting the nodes and layers present. But this topology doesn’t have to stay static: NEAT allows the AI to add its own connections and nodes, evolving both the weights and the structure.
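The sketch below illustrates the two structural mutations described above, adding a connection and adding a node, alongside ordinary weight mutation. It is a heavily simplified illustration rather than the NEAT algorithm itself: the class and function names are hypothetical, and it leaves out features of the real method such as innovation numbers, speciation and checks against loops in the network.

```python
import random
from dataclasses import dataclass, field

@dataclass
class Connection:
    src: int
    dst: int
    weight: float
    enabled: bool = True

@dataclass
class Genome:
    nodes: list  # node ids
    connections: list = field(default_factory=list)

def mutate_weights(genome, rng, scale=0.1):
    # Classic neuroevolution: nudge the existing weights.
    for conn in genome.connections:
        conn.weight += rng.gauss(0, scale)

def add_connection(genome, rng):
    # Structural mutation 1: link two nodes that are not yet connected.
    existing = {(c.src, c.dst) for c in genome.connections}
    candidates = [(a, b) for a in genome.nodes for b in genome.nodes
                  if a != b and (a, b) not in existing]
    if candidates:
        src, dst = rng.choice(candidates)
        genome.connections.append(Connection(src, dst, rng.uniform(-1, 1)))

def add_node(genome, rng):
    # Structural mutation 2: split an existing connection with a new node.
    enabled = [c for c in genome.connections if c.enabled]
    if not enabled:
        return
    old = rng.choice(enabled)
    old.enabled = False
    new_id = max(genome.nodes) + 1
    genome.nodes.append(new_id)
    # Weight 1.0 into the new node and the old weight out of it,
    # so the network initially behaves much as it did before.
    genome.connections.append(Connection(old.src, new_id, 1.0))
    genome.connections.append(Connection(new_id, old.dst, old.weight))

rng = random.Random(1)
genome = Genome(nodes=[0, 1, 2],
                connections=[Connection(0, 2, 0.5), Connection(1, 2, -0.3)])
add_node(genome, rng)
add_connection(genome, rng)
mutate_weights(genome, rng)
print(len(genome.nodes), "nodes,", len(genome.connections), "connections")
```

The network starts with three nodes and two connections and grows as mutations are applied, which is the ‘augmenting topologies’ part of the name.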

Finally, some of the latest innovation in neuroevolution comes to us from nature once again. Some of you may have heard of the term Evo-Devo (Evolutionary Developmental Biology), the field that explores the connections between genes, essentially the code of all life, and the developmental processes they drive. A core concept in these developmental processes, such as the forming of an embryo from a zygote, is polygeny: the idea that a combination of various genes influences one phenotype or trait, rather than a single gene dictating a single trait. However, it turns out that most AI algorithms have used direct encoding, in which each value in the network is stored as its own ‘gene’, and which is therefore not polygenic.
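The difference between the two encodings can be sketched in Python. In the direct version, each gene is one weight. In the indirect version, a small genome is ‘developed’ into a full grid of weights by a formula, so every weight depends on all of the genes. The sine-based formula, the gene values and the function names here are assumptions invented for illustration, not a method taken from any particular paper.

```python
import math

def direct_decode(genome, rows, cols):
    # Direct encoding: gene number r*cols + c IS the weight at (r, c).
    return [[genome[r * cols + c] for c in range(cols)] for r in range(rows)]

def indirect_decode(genome, rows, cols):
    # Indirect encoding: three genes generate every weight through a formula.
    a, b, c = genome
    return [[a * math.sin(b * r + c * col) for col in range(cols)]
            for r in range(rows)]

def count_changes(before, after):
    # How many weights differ between two grids?
    return sum(x != y
               for row_b, row_a in zip(before, after)
               for x, y in zip(row_b, row_a))

rows, cols = 4, 4

direct = [0.1 * i for i in range(rows * cols)]
mutated_direct = direct.copy()
mutated_direct[5] += 0.5  # mutate one gene

indirect = [1.0, 0.7, 1.3]
mutated_indirect = [1.5, 0.7, 1.3]  # mutate one gene

print("direct:", count_changes(direct_decode(direct, rows, cols),
                               direct_decode(mutated_direct, rows, cols)))
print("indirect:", count_changes(indirect_decode(indirect, rows, cols),
                                 indirect_decode(mutated_indirect, rows, cols)))
```

Mutating one gene in the direct genome changes exactly one weight, while mutating one gene in the indirect genome changes almost the whole grid at once, which hints at how a few genes can give rise to complex, coordinated structure.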

The advantage of copying this aspect of evo-devo, an approach known as indirect encoding, is that polygeny leads to emergent complexity, which is very useful not only for harder problems, but also for the creation of new ideas. In 2011, a Spanish team using this idea successfully created a music-generating AI called Iamus, marking a new development in Creative AI. The limitations of deep-learning-based Creative AI, particularly its difficulty with complex forms or ideas such as generating images of realistic hands or understanding music theory, could potentially be overcome by neuroevolution, creating a new wave of image, audio and text generation with an even higher degree of intricacy. Neuroevolution in machine learning doesn’t stop at creative AI: in work published in 2011, even NASA recognized the power of indirect encoding, using it to design satellite antennas, a task demanding a highly specific and complex solution. Neuroevolution may be faster, and offer some really innovative opportunities, but it also takes much more computing power to run, which is why this technology has only recently been rediscovered by researchers, such as those at Uber Technologies in 2017. As our computers become more and more advanced, there will no doubt be greater use of neuroevolution, creating a bridge between biology’s principles and the world of machines. On the cusp of the next frontier in AI, the next chapter promises to be as astonishing as the one that came before.

Turing, A. M. (1950). Computing Machinery and Intelligence. Mind, 59(236), 433–460. https://doi.org/10.1093/mind/LIX.236.433

McCarthy, J. (1959). Programs with Common Sense. Stanford University Stanford, CA 94305. http://jmc.stanford.edu/articles/mcc59/mcc59.pdf

Holland, J. H. (1975). Adaptation in Natural and Artificial Systems. The MIT Press. https://mitpress.mit.edu/9780262581110/adaptation-in-natural-and-artificial-systems/

Salomon, R. (1998). Evolutionary algorithms and gradient search: similarities and differences. IEEE Transactions on Evolutionary Computation, 2(2), 45–55. https://ieeexplore.ieee.org/abstract/document/728207

Stanley, K. O.; and Miikkulainen, R. (2002). Evolving Neural Networks Through Augmenting Topologies. Evolutionary Computation 10(2):99–127. CiteSeerX 10.1.1.638.3910. doi:10.1162/106365602320169811

Stanley, K. O.; and Miikkulainen, R. (2003). A Taxonomy for Artificial Embryogeny. Artificial Life 9(2):93–130. https://pubmed.ncbi.nlm.nih.gov/12906725/

Gillespie, L. E.; Gonzalez, G. R.; and Schrum, J. (2017). Comparing direct and indirect encodings using both raw and hand-designed features in Tetris. Association for Computing Machinery, New York, NY, USA, 179–186. https://doi.org/10.1145/3071178.3071195

Hornby, G. S.; Lohn, J. D.; and Linden, D. S. (2011). Computer-Automated Evolution of an X-Band Antenna for NASA’s Space Technology Mission. Evolutionary Computation 19(1):1–23. https://pubmed.ncbi.nlm.nih.gov/20583909/

Diaz-Jerez, G. (2011). Composing with Melomics: Delving into the Computational World for Musical Inspiration. Leonardo Music Journal 21:13–14. https://direct.mit.edu/lmj/article-abstract/doi/10.1162/LMJ_a_00053/63657/Composing-with-Melomics-Delving-into-the?redirectedFrom=fulltext

Quintana, C.S., Arcas, F.M., Molina, D.A., Rodríguez, J.D.F. and Vico, F.J. (2013). Melomics: A Case-Study of AI in Spain. AI Magazine, 34: 99-103. https://doi.org/10.1609/aimag.v34i3.2464

Lehman, J.; Chen, J.; Clune, J.; and Stanley, K. O. (2017). Safe Mutations for Deep and Recurrent Neural Networks through Output Gradients. Uber AI Labs, San Francisco, CA 94103. http://eng.uber.com/wp-content/uploads/2017/12/arxiv-sm.pdf

Mimi Collins

Antibiotics have been available across the UK as a treatment for bacterial infections since the mid-1940s; however, due to global overprescription we are witnessing a rise in antibiotic resistance, with overuse placing selective pressure on bacteria so that strains which have mutated to survive antibiotic treatment are favoured. Inappropriate use of antibiotics kills off non-resistant bacteria, leaving resistant bacteria to multiply without competition. This increases their frequency and allows superbugs, which are incredibly difficult to treat, to emerge. However, predating the discovery of antibiotics, considerable research was being done into bacteriophages as a treatment for bacterial disease in humans, and they offer a promising alternative for infections that antibiotics can no longer treat.
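The way antibiotic use shifts a population towards resistance can be sketched with a toy Python simulation. Every number in it (the starting counts, the growth rate, the fraction of non-resistant bacteria killed per generation and the carrying capacity) is invented for illustration, and it ignores real-world details such as new mutations arising or resistance carrying a cost.

```python
def resistant_fraction(generations, antibiotic,
                       sensitive=990.0, resistant=10.0,
                       growth=1.0, kill=0.9, capacity=10_000.0):
    # Returns the fraction of the population that is resistant at the end.
    for _ in range(generations):
        total = sensitive + resistant
        # Both types compete for the same limited space and nutrients.
        room = max(0.0, 1.0 - total / capacity)
        sensitive += growth * sensitive * room
        resistant += growth * resistant * room
        if antibiotic:
            # The antibiotic kills most of the non-resistant bacteria only.
            sensitive *= 1.0 - kill
    return resistant / (sensitive + resistant)

print("without antibiotic:", round(resistant_fraction(20, antibiotic=False), 3))
print("with antibiotic:   ", round(resistant_fraction(20, antibiotic=True), 3))
```

Without the antibiotic, the resistant bacteria stay at their starting 1% of the population because both types grow at the same rate. With it, the resistant bacteria take over almost entirely within a few generations.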

Bacteriophages (phages) are a form of mobile genetic element (MGE): genetic material that can move within or between cells, including to unrelated strains or species. Plasmids are another example. MGEs are vital to bacterial ecology. They are an essential part of how bacteria evolve so quickly, because they allow for horizontal gene transfer, where DNA is passed directly to unrelated individuals, creating genetically diverse bacterial populations and increasing the rate of bacterial evolution. MGEs often carry what are known as payload genes, which are not necessary for the MGE’s own survival but encode additional beneficial characteristics for the host, such as antibiotic resistance. Although MGEs are often beneficial to bacteria because they supply useful genes, they can also impose what is known as a ‘fitness cost’ on the host. This occurs when carrying the extra genes makes it harder for the bacterium to grow and divide.

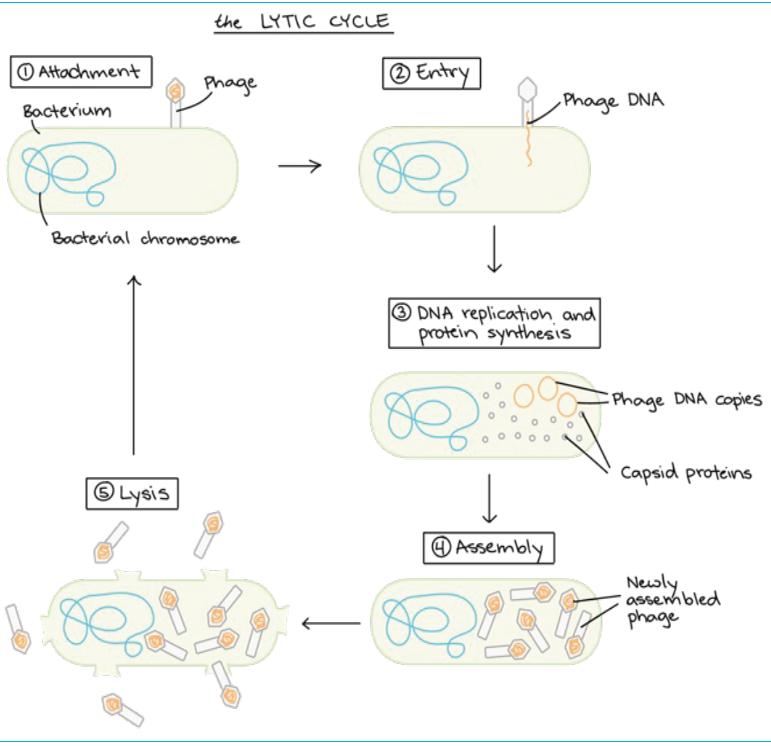

Bacteriophages are a kind of virus that infects bacteria and archaea. They consist of genetic material contained in a protein capsid, and they function by attaching to host bacterial cells and injecting their genetic material into them. This hijacks the host cell’s metabolism, leading to replication of the phage genome and assembly of new phage proteins, eventually causing the host cell to burst open and release hundreds of new phage particles. Phages are the most abundant biological entities on the planet; if you lined up all the phages in the world, they would reach millions of light years into space! Every day, phages are responsible for the destruction of 20-40% of all the bacteria in the ocean, freeing up nutrients for new bacteria to grow and highlighting their significant ecological impact.

Bacteriophages, like other viruses, must invade a host cell in order to reproduce. The stages of the process of infection are collectively known as the lifecycle of the phage. Some phages are only able to reproduce via a lytic cycle, whereas others, known as temperate phages, can alternate between a lytic and a lysogenic lifecycle. In a lytic cycle, the phage acts like a regular virus: it invades the host cell, uses its resources to replicate, then causes the cell to lyse (burst) and die. During lysis hundreds of new phages are released, which go on to infect other bacteria. The lysogenic cycle allows the phage to reproduce within a cell without killing it. The main difference is that once the phage DNA is inside the cell, instead of immediately replicating, it integrates into the bacterial chromosome. The integrated phage DNA is called a prophage. It is inactive, but each time the host cell divides the prophage is copied along with it, allowing it to reproduce. Eventually, it becomes active again and the remaining steps of the lytic cycle are carried out (DNA copying, protein synthesis, phage assembly and lysis), killing the bacterium.
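The two strategies spread at very different speeds, which a rough Python sketch can show. It assumes an idealised situation that never occurs in practice: unlimited host cells, every released phage successfully infecting a new cell, and a burst size of exactly 100 (the text only says ‘hundreds’).

```python
def lytic_phages(cycles, burst_size=100):
    # Each infected cell bursts and releases burst_size new phages,
    # and (by assumption) each of those infects a fresh host cell.
    phages = 1
    for _ in range(cycles):
        phages *= burst_size
    return phages

def lysogenic_prophages(divisions):
    # The prophage is copied once each time its host cell divides.
    copies = 1
    for _ in range(divisions):
        copies *= 2
    return copies

for n in range(1, 4):
    print(f"after {n} rounds: lytic = {lytic_phages(n)}, "
          f"lysogenic = {lysogenic_prophages(n)}")
```

Under these assumptions, three lytic cycles turn one phage into a million, at the cost of killing every host along the way, while three host divisions give only eight prophage copies but leave every host alive.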

After the Second World War and the widespread introduction of antibiotics, research into bacteriophages as a treatment for bacterial infection was largely abandoned in the West. Scientists were sceptical about the effectiveness of the treatment, largely due to a lack of reliable results caused by improper storage and purification methods. For instance, phenol was often used as a ‘preservative’, which denatured and deactivated the phages. Societal influences also played a key part in the decline of Western enthusiasm for phage treatment. Phage research had strong ties with the Soviet Union, where many of the most promising studies were carried out, leading Western scientists to avoid it due to Cold War tensions.

However, during this time phage treatments were still being researched in countries such as Georgia, Russia and Poland. Recently, we have begun to witness a ‘phage renaissance’ in Western medicine, particularly from around the 1990s, when Western scientists began to regain access to Polish and Russian phage research.

Phages carry many benefits over antibiotics as a treatment. For instance, they can be used to treat bacteria with antibiotic resistance, and while resistance to phages can develop, it is much easier for scientists to overcome than antibiotic resistance, partly because phages are able to evolve alongside bacteria and counter the resistance the bacteria develop. Moreover, bacterial resistance to phages can even be beneficial to treatment. It has been observed that when bacteria develop resistance to phages, they can become more susceptible to antibiotics, so phages can work synergistically with antibiotics to promote their efficacy. However, one of the largest limitations of phages is their specificity. Each kind of phage will only work on a specific strain of bacteria, whereas antibiotics act on a much wider spectrum and can be used on many different types of bacteria. To be effective as a treatment, the infecting bacterium must be identified accurately, and it can be difficult to identify the specific phage required for each kind of bacteria, making finding the correct phage an incredibly lengthy process. One study reported a range of 28 to 386 days between the request for phage treatment and actual administration to the patient. Specificity also makes it necessary for clinics to prepare different ‘cocktails’ for treatments of the same disease, as the bacterial components that the phage needs to bind to may differ between regions or even between people. One treatment therefore wouldn’t be effective for everyone, reducing its commercial value.

However, the specificity of phages can also be advantageous: because they only target one kind of bacteria, they are harmless to the patient and to beneficial bacteria, such as those found in the gut microbiota, reducing the risk of opportunistic infections.

Here are some examples of the uses of phage therapy.

Phage therapy can be used to treat bacterial infections that antibiotics struggle to treat, such as biofilm infections. Biofilms are clusters of microorganisms, including bacteria, fungi, viruses and protoctists (but most significantly bacteria), that are attached to a surface and/or each other and are embedded in a self-produced matrix. An example of a biofilm is dental plaque, a slimy film composed of bacteria and viruses that forms on teeth. Alarmingly, biofilms enable bacteria to survive antimicrobial treatments and extremes of environment and pH. Biofilms are difficult to treat with antibiotics because the matrix can bind to the antibiotics, preventing them from penetrating the biofilm. Furthermore, the matrix often contains enzymes that are able to deactivate antibiotics. Bacteria within a biofilm also have a low metabolic rate, so antibiotics that work by targeting the growth of cells are rendered largely useless. Consequently, bacteria growing in biofilms can withstand concentrations of antibiotics up to 1000 times higher than planktonic (free-living) cells can. This makes phages enticing, as they are better able to penetrate the biofilm and provide an alternative to antibiotics. In recent years an increasing number of successful studies have used bacteriophages, particularly in cocktails, to treat biofilm infections. These studies are, however, limited, as most have been completed in vitro, meaning on tissues or cells in petri dishes, and few have been completed in vivo, on living organisms.

One of the largest objectives in wound care is the avoidance of infection. Wound prophylaxis denotes the preventative measures put in place to avoid disease or infection. Phages may have interesting effects in this field. For example, during the Second World War the Soviet Union successfully used phage sprays to protect battle wounds against a kind of Clostridium bacteria (a relative of C. difficile, a bacterium that loves to pop up in exam-style questions ;)). In this study ≈80% of patients receiving the anti-Clostridium phages survived, versus only ≈60% of the patients not receiving phage treatment. Phage treatment has since been adopted by modern Georgian soldiers, who use Pyophage sprays to treat wounds, increasing the rate of recovery and reducing the risk of infection.

Burns

A major concern in the treatment of burns is infection. Typically, severe burns are treated using skin grafts, where healthy skin is taken and attached to the site of the burn. Applying phages to the skin prior to grafting could reduce the risk of growth of a bacterium known as Pseudomonas aeruginosa. This is particularly significant because successful grafting requires not only that infection is avoided, but also that the newly applied skin heals successfully, and some studies go as far as to suggest that the application of phages can even contribute positively to healing.