
Fluid Volume Measurement on a Microscope

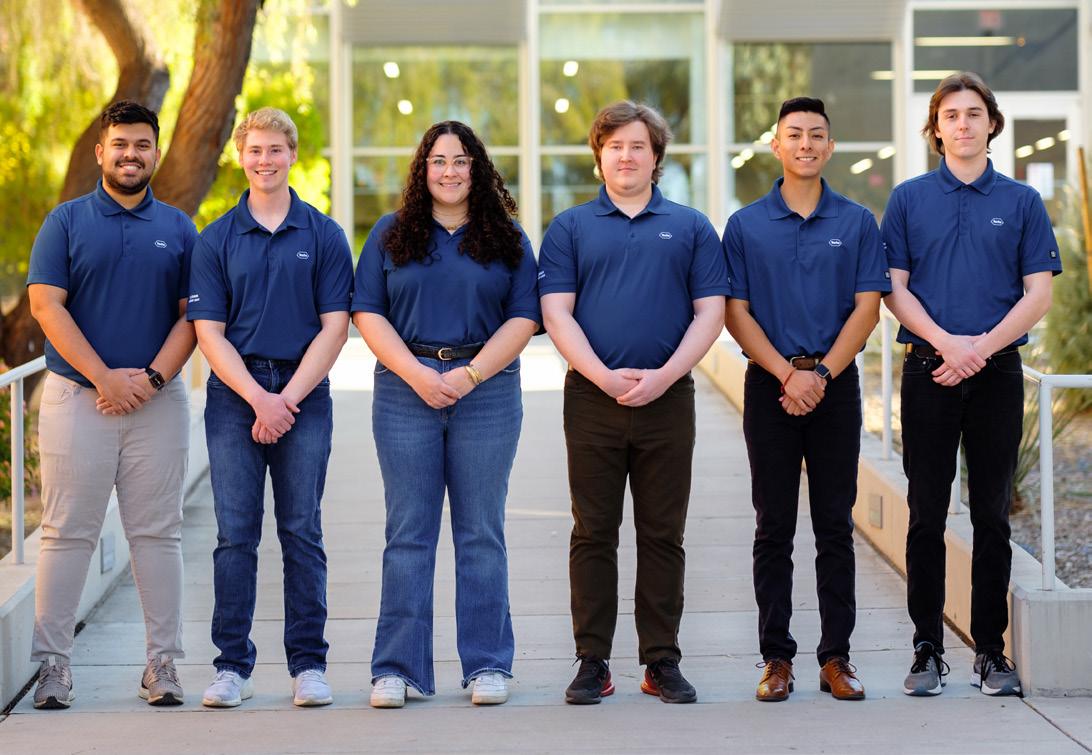

Team 23028


Project Goal

Design a system that measures the volume of residual fluid on a microscope slide with minimal user interaction.

Roche’s BenchMark ULTRA staining instrument has a variety of internal conditions, and it controls multiple chemical and physical parameters of the tissue staining process. The residual fluid volume on a microscope slide must be known and controlled. Currently, a technician measures the volume using a Kimwipe and an analytical balance. The team designed an optical approach to measure the volume of residual fluid on the microscope slide with less user interaction than the existing method.

The new system captures side-view images of fluid on a microscope slide and processes them to measure the height of the fluid at several points. A Raspberry Pi single-board computer runs the image processing. A trained machine learning model then estimates the fluid volume, which is shown on an LCD screen.
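The height-measurement step can be illustrated with a minimal sketch. It rests on several assumptions that the description above does not state:

- the side-view image is a grayscale 2D list;
- the pixel row of the slide surface is known from calibration;
- fluid pixels are darker than a fixed threshold;
- the millimeters-per-pixel scale is known.

The team's machine learning model is not described. A one-feature least-squares line (`fit_line`, a hypothetical helper) stands in for it purely for illustration, and the calibration numbers are made up.

```python
from statistics import mean
from typing import List, Sequence, Tuple


def fluid_heights_mm(image: Sequence[Sequence[int]], baseline_row: int,
                     columns: Sequence[int], threshold: int,
                     mm_per_px: float) -> List[float]:
    """Measure fluid height above the slide at selected image columns.

    image: grayscale rows, top to bottom.
    baseline_row: row index of the slide surface (assumed known from calibration).
    Pixels darker than `threshold` are assumed to be fluid.
    """
    heights = []
    for col in columns:
        row = baseline_row - 1
        # Walk upward from the slide surface while the pixel still looks like fluid.
        while row >= 0 and image[row][col] < threshold:
            row -= 1
        fluid_px = baseline_row - 1 - row
        heights.append(fluid_px * mm_per_px)
    return heights


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept (stand-in model)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


# Usage: a tiny synthetic image; the bottom row is the slide surface.
image = [
    [200, 200, 200, 200],
    [200,  50, 200, 200],
    [ 50,  50,  50, 200],
    [ 50,  50,  50,  50],
    [255, 255, 255, 255],  # slide surface
]
heights = fluid_heights_mm(image, baseline_row=4, columns=range(4),
                           threshold=100, mm_per_px=0.5)
# Made-up calibration pairs: mean fluid height (mm) -> measured volume (uL).
slope, intercept = fit_line([0.5, 1.0, 1.5], [10.0, 20.0, 30.0])
volume_ul = slope * mean(heights) + intercept
```

In practice, calibration pairs would come from slides whose residual volume had been measured independently, for example with the existing Kimwipe-and-balance method.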

The team designed and manufactured a plastic housing for the camera module that integrates directly onto the slide drawer of the staining instrument. They also manufactured an external housing for the Raspberry Pi and its associated components. Designed for simplicity, this system will greatly reduce the amount of time spent measuring residual fluid volumes.

Launch Vehicle Ground Support Equipment Frontend

Team 23029

TEAM MEMBERS

Kyle Ambrose Ambrose, Systems Engineering

Cody Truong Chi, Systems Engineering

Kevin Sean Grady, Electrical & Computer Engineering

Colin Herbert, Electrical & Computer Engineering

Braxton Montgomery Ulmer, Systems Engineering

COLLEGE MENTOR

Michael Madjerec

SPONSOR ADVISOR

Mark Hansen

Project Goal

Transition Northrop Grumman launch vehicle ground support equipment from a Java-based frontend to a web-based one. Apply a systems engineering approach to the design of the human-computer interface.

The defense industry typically uses software-based applications to test missiles and other types of flying projectiles. As technology advances, however, this software requires frequent updates. A web-based frontend shortens the system's development, testing and release cycles. It also allows multiple contributors to automate the integration of code changes and software updates.

Using a systems engineering approach, the team created a user-friendly interface that prioritizes safety, performance and user satisfaction. A single client-side application receives data from the launch vehicle sensors and from engineers. It sends data packets back to the sponsor's servers whenever a user interacts with the interface. Pairing the graphical user interface with a backend that handles data forwarding keeps the code maintainable and packaged as a single application. This design lays the foundation for the transition to web-based applications for the launch vehicles.
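The forwarding pattern described above can be sketched in a few lines. The class and method names, the JSON packet fields, the sensor and valve names, and the `send` transport (which might be a WebSocket in a real web frontend) are all hypothetical. The actual Northrop Grumman interface and protocol are not described here.

```python
import json
import time
from typing import Callable, Dict, List


class GroundSupportClient:
    """Hypothetical client: renders telemetry and forwards every user action."""

    def __init__(self, send: Callable[[str], None]) -> None:
        self._send = send                    # assumed transport to the sponsor's server
        self.latest: Dict[str, float] = {}   # newest value per sensor, shown in the GUI

    def on_telemetry(self, sensor: str, value: float) -> None:
        # Incoming sensor data only updates the display state.
        self.latest[sensor] = value

    def on_user_action(self, action: str, **params: object) -> Dict[str, object]:
        # Each interaction becomes a JSON packet that includes the telemetry the user saw.
        packet = {
            "type": "user_action",
            "action": action,
            "params": params,
            "timestamp": time.time(),
            "telemetry_snapshot": dict(self.latest),
        }
        self._send(json.dumps(packet))
        return packet


# Usage with a list standing in for the network transport.
outbox: List[str] = []
client = GroundSupportClient(outbox.append)
client.on_telemetry("tank_pressure_psi", 101.5)
client.on_user_action("open_valve", valve="V1")
```

Injecting the transport as a callable keeps the interface logic testable without a live server.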

Novel Inspiratory Muscle Strength Training (IMST) Device

Team 23030

Project Goal

Develop an innovative inspiratory muscle strength training device with enhanced user feedback, improving accessibility and impact for clinical and public applications.

Cardiovascular disease (CVD), the leading cause of death globally, results in over 19 million deaths annually, according to the American Heart Association. High blood pressure is directly linked to increased risk for CVD. While an abbreviated daily IMST regimen has been shown to significantly reduce systolic blood pressure, the exercises are difficult to perform effectively using current devices, which offer limited user feedback.

Our respiratory training system, ReTrain, consists of an ergonomic, handheld device containing a one-way breathing valve, a pressure transducer and an ESP32 microcontroller. The device senses the user's breathing pressure and transmits it over a Bluetooth Low Energy interface to a paired smartphone app, which monitors the pressure and provides live feedback. The device requires minimal user interaction, and the app's intuitive framework enhances the user experience. For standard training and calibration, the app displays a real-time waveform of the user's pressure alongside the current target level, with an accuracy of 3.5%. Additionally, data from each session is stored on the user's device and transmitted to a clinical program via Amazon Web Services.
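The device-to-app path could look like the sketch below. Several details are assumptions made for illustration, not ReTrain's actual BLE format or feedback rule:

- the payload layout (a little-endian 32-bit timestamp in milliseconds followed by a signed 16-bit pressure in tenths of cmH2O);
- the function names;
- the 10% tolerance band around the target.

```python
import struct
from typing import Tuple

# Hypothetical BLE notification layout: little-endian uint32 timestamp (ms)
# followed by int16 pressure in tenths of cmH2O. Not a documented ReTrain format.
PACKET_FORMAT = "<Ih"


def decode_packet(payload: bytes) -> Tuple[int, float]:
    """Return (timestamp_ms, pressure_cmH2O) from one notification payload."""
    timestamp_ms, raw_pressure = struct.unpack(PACKET_FORMAT, payload)
    return timestamp_ms, raw_pressure / 10.0


def feedback(pressure_cmh2o: float, target_cmh2o: float, tolerance: float = 0.10) -> str:
    """Classify one sample against the current target level (assumed +/-10% band)."""
    # Inspiration against resistance produces negative (sub-atmospheric) pressure,
    # so the magnitude is compared with the target.
    effort = abs(pressure_cmh2o)
    if effort < target_cmh2o * (1 - tolerance):
        return "below target"
    if effort > target_cmh2o * (1 + tolerance):
        return "above target"
    return "on target"


# Usage: simulate one notification the ESP32 might send.
t_ms, pressure = decode_packet(struct.pack(PACKET_FORMAT, 1500, -755))
status = feedback(pressure, target_cmh2o=75.0)
```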

Autonomous Mechanical Spider (AMS) Platform for Crop/Turf Management

Team 23031

Project Goal

Create a mid-sized autonomous utility robot with a simple, scalable and easily iterated-upon design that can be adapted to a variety of missions, such as data gathering in industrial agriculture.

The Fourth Industrial Revolution is the idea that efficiency will be revolutionized by mass-data analytics fueling decisions made by AI. Large-area industries, such as agriculture, are racing to design a versatile drone capable of gathering this data. In this effort, ground-based hexapod platforms have advantages over both flying drones and tracked or wheeled designs. These include the ability to mount heavier and more robust sensor packages, easy modification, longer loitering times, movement below and around foliage, and low impact on soil.

The AMS design uses off-the-shelf electronics, 3D-printed parts and open-source software for simplicity, modularity and ease of modification. It includes a Raspberry Pi running the Robot Operating System (ROS), whose abundant range of packages eases the integration of additional sensors, missions and parts. The current iteration uses lidar (light detection and ranging) and ultrasonic ranging devices, which provide robust obstacle detection and path planning. In addition, a GPS receiver defines a roaming area for the robot, so the platform is ready to be adapted to large-area data gathering, especially in the agricultural sector.
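Two of the sensing ideas above can be sketched briefly: converting an ultrasonic echo time to a distance, and checking whether a GPS fix lies inside a roaming area. The sketch makes these assumptions:

- the function names are hypothetical;
- the speed of sound is fixed at 343 m/s (dry air near 20 °C);
- the roaming area is a polygon of (latitude, longitude) vertices.

The geofence check is a standard ray-casting point-in-polygon test that treats latitude and longitude as planar coordinates, an approximation that holds over a single field. The team's actual ROS nodes are not described.

```python
from typing import List, Tuple

SPEED_OF_SOUND_M_S = 343.0  # assumed: dry air at about 20 degrees C


def echo_to_distance_m(echo_time_s: float) -> float:
    """Ultrasonic time of flight: the pulse travels to the obstacle and back."""
    return SPEED_OF_SOUND_M_S * echo_time_s / 2.0


def inside_geofence(lat: float, lon: float, fence: List[Tuple[float, float]]) -> bool:
    """Ray-casting point-in-polygon test on (lat, lon) vertices.

    Treats latitude/longitude as planar coordinates, adequate for a field-sized area.
    """
    inside = False
    n = len(fence)
    for i in range(n):
        lat1, lon1 = fence[i]
        lat2, lon2 = fence[(i + 1) % n]
        # Only edges that straddle the point's latitude can cross the ray.
        if (lat1 > lat) != (lat2 > lat):
            # Longitude where this edge crosses the point's latitude.
            cross_lon = lon1 + (lat - lat1) * (lon2 - lon1) / (lat2 - lat1)
            if lon < cross_lon:
                inside = not inside  # each crossing to the east toggles in/out
    return inside


# Usage with a made-up rectangular roaming area.
fence = [(32.2300, -110.9500), (32.2300, -110.9400),
         (32.2400, -110.9400), (32.2400, -110.9500)]
in_bounds = inside_geofence(32.2350, -110.9450, fence)
obstacle_m = echo_to_distance_m(0.01)
```

A controller could stop or turn the robot when `inside_geofence` returns `False` or when an echo distance falls below a safety margin.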

TEAM MEMBERS

Tommy M Carder, Systems Engineering

Dylan McGuire, Biomedical Engineering

Collin Alexander Preszler, Biomedical Engineering, Electrical & Computer Engineering

Nicholas Sherwood Quatraro, Biomedical Engineering

Saul Silva, Systems Engineering

COLLEGE MENTOR

Steve Larimore

SPONSOR ADVISORS

Elizabeth F Bailey, Philipp Gutruf

TEAM MEMBERS

Ali Alaqeel, Electrical & Computer Engineering

Eli J Bitzko, Mechanical Engineering

Tam Friedman, Biosystems Engineering

Kaleb Gabriel Lucero, Engineering Management

Shambhavi Singh, Electrical & Computer Engineering

Ismail Abdul-Aziz Zaki, Mechanical Engineering

COLLEGE MENTOR

Pat Caldwell

SPONSOR ADVISORS

Pedro Andrade Sanchez, Brian Little, Mark C Siemens

TEAM MEMBERS

Alan Loreto Cornídez, Electrical & Computer Engineering

Jack T Moffet, Engineering Management

Victor Ferreira Silva, Mechanical Engineering

Nick Tolmasoff, Mechanical Engineering

Viru Vilvanathan, Mechanical Engineering

COLLEGE MENTOR

Bob Messenger

SPONSOR ADVISOR

Joseph Mueller

Autonomous Maintenance Identification Vehicle

Team 23034

TEAM MEMBERS

Ahmad Ashraf Eladawy, Electrical & Computer Engineering

Milad Ghaemi, Optical Sciences & Engineering

Salman Mohammed Khashoggi, Industrial Engineering

Wilson Liao, Electrical & Computer Engineering

Rafael Lomeli-Navarro, Optical Sciences & Engineering

COLLEGE MENTOR

Bob Messenger

SPONSOR ADVISOR

Joseph Mueller

Project Goal

Design a system of sensors that automates the rapid detection of material handling equipment degradation or failure in high-usage warehouse facilities.

The equipment in Amazon fulfillment centers across the globe is susceptible to premature degradation and failure. These issues include bearing overheating and failure, conveyor belt misalignment and debris. To combat these problems, the team developed an autonomous, self-powered maintenance unit that can rapidly identify supply chain issues and streamline maintenance. The design will dramatically reduce the time and cost of critical repairs.

A maintenance worker might need up to eight hours to walk and inspect a single conveyor. The autonomous robot can do the same task in as little as 15 minutes and costs less than $600. The system uses a suite of low-cost, state-of-the-art commercial sensors to accurately identify equipment issues on the conveyor line. Its hardware and software quickly detect possible failures and provide near-real-time reporting to factory maintenance technicians and engineers. The system sends a user-friendly, intuitive report to the local team. It is fully customizable and modular, and it adheres to the safety protocols required to reduce hazards to personnel in these industrial settings.
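The kind of rule-based check such a system might run on each inspection pass is sketched below. The `Reading` fields, the 30 °C temperature-rise threshold and the 15 mm belt-offset threshold are hypothetical values chosen for illustration. The team's actual sensors, thresholds and report format are not given, and debris detection is omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Reading:
    position_m: float      # distance along the conveyor where the reading was taken
    bearing_temp_c: float  # temperature of the nearest bearing
    ambient_temp_c: float  # surrounding air temperature
    belt_offset_mm: float  # lateral belt offset from the centerline


# Hypothetical thresholds chosen for illustration only.
MAX_TEMP_RISE_C = 30.0
MAX_BELT_OFFSET_MM = 15.0


def inspect(readings: List[Reading]) -> List[str]:
    """Return one human-readable report line per detected issue."""
    issues = []
    for r in readings:
        # Compare against ambient so a warm building does not trigger false alarms.
        rise = r.bearing_temp_c - r.ambient_temp_c
        if rise > MAX_TEMP_RISE_C:
            issues.append(f"{r.position_m:.1f} m: bearing {rise:.1f} C above ambient")
        if abs(r.belt_offset_mm) > MAX_BELT_OFFSET_MM:
            issues.append(f"{r.position_m:.1f} m: belt offset {r.belt_offset_mm:+.1f} mm")
    return issues


# Usage with two made-up readings from one pass along a conveyor.
report = inspect([
    Reading(12.0, 65.0, 25.0, 3.0),
    Reading(30.5, 40.0, 25.0, -20.0),
])
```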

Virtual Subject Matter Experts (SME) Training, Critical Asset Control

Team 23035

Project Goal

Provide remote or in-person hands-on training to Amazon SMEs using virtual reality technology.

This project provides a VR training environment that simulates the conveyor belts that are critical to the operations of Amazon fulfillment centers. The system is designed to let technicians interact with these assets and gain experience maintaining and repairing them. The company needs highly trained technicians because damage to critical assets can cost the company millions of dollars if the issue is not fixed quickly. In-person training is expensive because of travel, instruction costs and the scarcity of experts for each specific asset. This project presents a more cost-effective method of training technicians.

Using Unity, the team developed a VR training platform in which the user can perform functions that are critical to understanding the operation, repair, maintenance and part breakdown of the asset. Technicians can measure distances on an accurate 3D model of the asset. They can also look up part breakdown information for critical components, such as manufacturer, part number, part description and identification, allowing them to quickly procure replacements. The program also provides examples of faulty parts, along with customizable procedures that make it easier for technicians to troubleshoot, repair, replace and maintain the physical asset.
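Two of the platform features, distance measurement on the 3D model and part lookup, can be sketched in a few lines. The team built the platform in Unity, whose scripts are typically written in C#; this Python version only illustrates the logic. It assumes Unity's usual convention of one world unit per meter. The `Part` fields, the `PartCatalog` class and the `EX-` part numbers are hypothetical placeholders.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]


def measure_mm(a: Point, b: Point, meters_per_unit: float = 1.0) -> float:
    """Straight-line distance between two picked points, in millimeters."""
    # Assumes 1 world unit = 1 m unless the model was imported at another scale.
    return math.dist(a, b) * meters_per_unit * 1000.0


@dataclass
class Part:
    part_number: str
    manufacturer: str
    description: str


class PartCatalog:
    """Hypothetical part-breakdown lookup for one asset."""

    def __init__(self, parts: List[Part]) -> None:
        self._by_number: Dict[str, Part] = {p.part_number: p for p in parts}

    def lookup(self, part_number: str) -> Part:
        return self._by_number[part_number]

    def search(self, keyword: str) -> List[Part]:
        # Case-insensitive match on the description, in catalog order.
        kw = keyword.lower()
        return [p for p in self._by_number.values() if kw in p.description.lower()]


# Usage with placeholder catalog entries.
catalog = PartCatalog([
    Part("EX-100", "ExampleCo", "Drive roller bearing"),
    Part("EX-200", "ExampleCo", "Belt tracking sensor"),
])
gap_mm = measure_mm((0.0, 0.0, 0.0), (0.3, 0.4, 0.0))
```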