DUJS

Dartmouth Undergraduate Journal of Science | Fall 2021 | Vol. XXIII | No. 3

VITAL

REMEMBERING THE IMPORTANCE OF SCIENTIFIC COMMUNICATION

Doctors Under the Microscope: An Informative Look at Coping with Death in Health Care

Pg. 5

Meta Learning: A Step Closer to Real Intelligence

Pg. 35

Climate Change and its Implications for Human Health

Pg. 80

Letter from the President

Dear Reader,

Many of us like to believe that science exists in a neutral bubble. However, it has become clear that in the modern day, scientific research is increasingly taking on deeply historic and political roles across many fields. Science, it seems, is not purely an intellectual or academic practice. Rather, scientists must report their findings in a way that persuades their audience, regardless of what they choose to study. The theme of this journal is Vital, reflecting our editors’ and writers’ belief that the task of scientific communication – one that so often goes ignored – has become more important than ever.

In the fall of 2021, 54 students wrote for DUJS, ultimately producing 11 individual articles and 5 team articles. You will find a variety of scientific and social-scientific approaches to various topics, including machine learning and traffic, diabetes and kidney disease, and the history and current state of virology.

Of particular note are the four members of the class of 2025 who undertook the difficult task of writing individual articles in their freshman fall. Ethan Liu provided extensive evidence suggesting that hyperphosphorylated tau may be a potential culprit for Alzheimer’s Disease. Jean Yuan explored the implications of radiation therapies in cancer treatment. Shawn Yoon explained why soil microbes must not be ignored when studying climate change. Ujvala Jupalli wrote about how lactose intolerance has become a “norm,” both in the US and globally. These students wrote thoughtful and well-researched articles without being able to draw on foundational STEM knowledge from previous college-level courses, an achievement that is certainly worthy of recognition and celebration.

I hope that the wide range of articles shows you the Journal’s deep commitment to producing good scholarship throughout various scientific disciplines. Perhaps more importantly, I hope that you notice that across the articles, you will not find just a laundry list of scientific facts. Rather, each student wrote a subtle yet impassioned argument about a topic that they care deeply about. I thank you for taking the time to read each article carefully and to think critically about the story that each writer is trying to tell, and I hope that within the 156 pages in this edition, you are inspired to research and write your own scientific tale.

Sincerely,
Anahita Kodali

The Dartmouth Undergraduate Journal of Science aims to increase scientific awareness within the Dartmouth community and beyond by providing an interdisciplinary forum for sharing undergraduate research and enriching scientific knowledge.

EXECUTIVE BOARD

President & Acting Editor-in-Chief: Anahita Kodali '23

Chief Copy Editors: Daniel Cho '22, Dina Rabadi '22, Kristal Wong '22, Maddie Brown '22

EDITORIAL BOARD

Managing Editors: Alex Gavitt '23, Andrew Sasser '23, Audrey Herrald '23, Carolina Guerrero '23, Eric Youth '23, Georgia Dawahare '23

Assistant Editors: Callie Moody '24, Caroline Conway '24, Grace Nguyen '24, Jennifer Chen '23, Matthew Lutchko '23, Miranda Yu '24, Owen Seiner '24

STAFF WRITERS

Abigail Fischer '23

Juliette Courtine '24

Andrea Cavanagh '24

Justin Chong '24

Anyoko Sewavi '23

Kate Singer '24

Ariela Feinblum '23

Kevin Staunton '24

Benjamin Barris '25

Lauren Ferridge '23

Brooklyn Schroeder '22

Lily Ding '24

Callie Moody '24

Lord Charite Igirimbabazi '24

Cameron Sabet '24

Matthew Lutchko '23

Camilla Lee '22

Miranda Yu '24

Carolina Guerrero '23

Nathan Thompson '25

Caroline Conway '24

Nishi Jain '21

Carson Peck '22

Owen Seiner '24

Daniela Armella '24

Rohan Menezes '23

David Vargas '23

Sabrina Barton '24

Declan O’Scannlain '23

Salifyanji Namwila '24

Dev Kapadia '23

Sarah Lamson '24

Elaine Pu '25

Shawn Yoon '25

Emily Barosin '25

Soyeon (Sophie) Cho '24

Ethan Litmans '24

Shuxuan (Elizabeth) Li '25

Ethan Liu '25

Sreekar Kasturi '24

Ethan Weber '24

Tanyawan Wongsri '25

Evan Bloch '24

Tyler Chen '24

Frank Carr '22

Ujvala Jupalli '25

Jake Twarog '24

Vaani Gupta '24

Jean Yuan '25

Vaishnavi Katragadda '24

John Zavras '24

Valentina Fernandez '24

Julian Franco Jr. '24

Zachary Ojakli '25

Zoe Chafouleas '24

SPECIAL THANKS

Dean of Faculty
Associate Dean of Sciences
Thayer School of Engineering
Office of the Provost
Office of the President
Undergraduate Admissions
R.C. Brayshaw & Company

DUJS
Hinman Box 6225
Dartmouth College
Hanover, NH 03755
(603) 646-8714
http://dujs.dartmouth.edu
dujs.dartmouth.science@gmail.com

Copyright © 2021 The Trustees of Dartmouth College

Table of Contents

Individual Articles

Doctors Under the Microscope: An Informative Look at Coping with Death in Health Care
Ariela Feinblum '23 & Dr. Yvon Bryan, Pg. 5

Potential Culprit of Alzheimer’s Disease - Hyperphosphorylated Tau
Ethan Liu '25, Pg. 12

Machine Learning in Freeway Ramp Metering
Jake Twarog '24, Pg. 19

Radiation Therapy and the Effects of This Type of Cancer Treatment
Jean Yuan '25, Pg. 24

The Effects of Climate Change on Plant-Pollinator Communication
Kate Singer '24, Pg. 30

Meta Learning: A Step Closer to Real Intelligence
Salifyanji Namwila '24, Pg. 35

Microbial Impacts from Climate Change
Shawn Yoon '25, Pg. 43

Ultrasound Mediated Delivery of Therapeutics
Soyeon (Sophie) Cho '24, Pg. 47

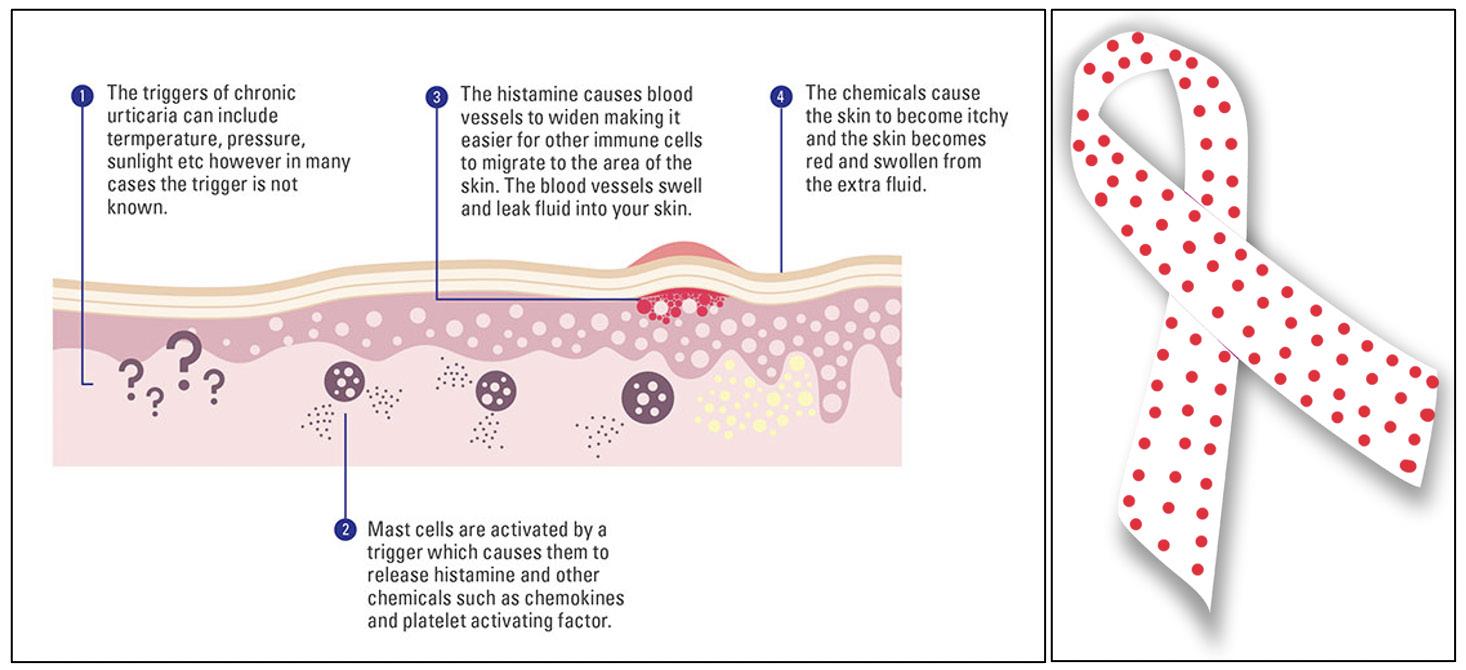

Pathophysiology, Diagnosis, and Treatment of Heat-Induced Hives: Cholinergic Urticaria
Tyler Chen '24, Pg. 55

Lactose Intolerance as the “Norm”
Ujvala Jupalli '25, Pg. 66

Understanding The Connection Between Diabetes and Kidney Disease: Are SGLT-2 Inhibitors the “Magic Bullet”?
Valentina Fernandez '24, Pg. 71

Team Articles

Climate Change and its Implications for Human Health, Pg. 80

History and Current State of Virology, Pg. 98

HIV/AIDS and Their Treatments, Pg. 120

The Psychedelic Renaissance, Pg. 136

Vegetarianism Debate, Pg. 148

Doctors Under the Microscope: An Informative Look at Coping with Death in Health Care

BY ARIELA FEINBLUM '23, DR. YVON BRYAN MD

Cover Image Source: Unsplash

Image 1: A distraught doctor. Image Source: Unsplash


Introduction

The COVID-19 pandemic has affected pre-med undergraduate students in many ways; though we often focus on the pandemic preventing students from shadowing doctors, it has also directed many undergraduates’ attention to the death that doctors encounter in their work. This has been an eye-opening experience for many, but doctors have had to deal with patients dying since long before the pandemic. This can be very upsetting for doctors, like the one portrayed in Image 1. As an undergraduate student and aspiring doctor myself, I believe it is essential to understand how doctors deal with this difficult aspect of their jobs.

Many undergraduate students do not think about how they will personally deal with the inevitable deaths of future patients. There are also many different types of patient deaths, and it is important for undergraduates to think about such factors before they become practicing medical professionals. Deaths caused by things like suicide and murder can be very troubling and tragic. Other deaths, such as those of patients who die with the assistance of euthanasia, can be seen as ending the patients’ pain and suffering. A patient’s death does not have to be violent for a doctor to have difficulty coping. Factors like the patient’s age can affect how hard it is for the doctor to process that patient’s passing (Rhodes-Kropf et al., 2005).

I used the field of anesthesiology as a lens into the greater field of medicine. To understand how difficult some cases are for doctors to deal with, I asked Dr. Yvon Bryan, a pediatric anesthesiologist at the Dartmouth-Hitchcock Medical Center, about his experience in dealing with patient deaths. Through my discussions with Dr. Bryan, as well as through a literature review, I came across some important factors influencing how doctors conceptualize and deal with death. First, it is important for undergraduate students to understand the physiology of how a patient dies. Many doctors intellectualize death by thinking about the biological processes that led to a patient’s ultimate death, compartmentalizing death’s physical properties from its philosophical implications. Next, pre-med students should know that different types of death tend to affect doctors differently. Factors like the patient’s age and cause of death can affect how doctors view and personally handle a specific patient’s death. There are even fields in medicine that revolve around death, such as palliative care or assisting with euthanasia. In this paper, I will discuss some of the issues affecting how doctors cope with patient deaths (Danticat, 2017).

Loss of Vital Signs: The Numerical Intellectualization of Death

There are key physical systems to focus on when it comes to understanding death from a biological perspective: the cardiovascular, pulmonary, endocrine, and neurological systems. To understand how these systems work together, we can compare the body to a car. In the cardiovascular system, the heart is like an engine, pumping blood and making the body work. The pulmonary system, which takes in air and expels carbon dioxide, would be the exhaust. The endocrine system works to maintain the body’s hormonal balance. Finally, the neurological system directs all other systems and collects sensory information to assess when functional adjustments are necessary (Dr. Bryan, Personal communication, October 2021).

Image 2: The cardiovascular system. Image Source: Unsplash

In medical school, students often spend their first year learning about human anatomy and their second year learning about potential bodily abnormalities. On a basic level, the main systems can malfunction in different ways. Learning how the brain works is different from understanding dementia. When the cardiovascular system malfunctions, myocardial ischemia often occurs; when the pulmonary system malfunctions, the lungs may suffer from bronchitis or emphysema. It is important to note that there is a difference between anatomy and/or physiology and the qualitative experience of a patient, and it may be troubling to separate the anatomy of the patient from their experience. In other words, a person’s lived experience is different from their biology. For example, a person’s brain is often viewed as a structure in quantitative form, while their mind is viewed as a qualitative entity of who they are as an individual. It is important to note, however, that many cognitive scientists and doctors believe that every aspect of one’s mind is a result of the workings of the physical brain. Yet many still challenge this view on religious grounds (Trivate et al., 2019).

Death may be viewed as the ultimate lack of function of one’s anatomy or physiology, but it is also important to recognize the ambiguity between dying cells and organs and the loss of the individual’s life. It may sometimes be difficult for students to understand the transition between life, dying, and death. One explanation is that dying is a lack of action. While one is living and healthy, one’s organs and necessary systems are active. However, life and death are not a completely binary phenomenon. There are many different examples that convey how these grey zones may present themselves in patients. First, when a patient has a traumatic brain injury (TBI), they have typically had relatively normal brain function before their injury. Depending on the part of the brain that is damaged, patients can have long- or short-term memory loss, trouble with attention, or even trouble recognizing objects (Goldstein, 2014). These types of injuries may not affect the main systems that the patient depends on to live, like the cardiovascular or endocrine system, but they can nonetheless affect the patient’s ability to function in daily life. For example, if patients can’t remember their way home or where they put things, TBIs can be extremely debilitating and sometimes even dangerous. These patients are alive but cannot live their lives the same way they did prior to their brain injury.

Another example demonstrating the nonbinary nature of life and death is abnormalities in one’s liver or kidneys. Abnormalities in the liver and kidney can present themselves in various ways, such as a change in personality or in the ability to function in one’s daily life. One example of how this grey area can present itself is in patients with either Type 1 or Type 2 diabetes. Those with diabetes have a slow deterioration of their anatomical and physiological systems. Diabetes can lead to the requirement for dialysis treatment or kidney transplants for some patients. People may begin to lose the physical ability to live without support as well as a sense of who they are and their qualitative experience of the world (Mertig, 2012).

Overall, when examining death from a physiological perspective, it is also important to distinguish between brain physiology and the functions or states that the brain’s physiology facilitates, as many anesthesiologists do in monitoring patients. This is an important topic when looking at patient death through the lens of anesthesiology. A patient can technically have their biological systems intact but have changes in brain function. Such changes include – but are not limited to – being fully unconscious through the use of anesthetic agents or being minimally sedated. In these cases, many doctors may not see the patient as awake in the same way they do a patient who can communicate with them. For some anesthesiologists, this sedated state puts patients in a gray area between life and death. This raises the question of how doctors view neurological conditions such as dementia, which may cause people to lose much of who they previously were, and of how physicians deal with, or internalize, the impact of their patients’ conditions on themselves. Overall, these questions pertain to doctors in all fields of medicine and are particularly common in the field of anesthesiology (Dr. Bryan, Personal communication, October 2021).

Cause of Death: Qualitative Impact on Physicians

Something I learned through speaking with Dr. Bryan is that physicians often have no way of knowing just how deeply a patient’s death is going to affect them until they experience that patient’s death. Different types of patient deaths can also make doctors question various aspects of their personal beliefs. For example, treating a pediatric cancer patient can cause a doctor to question the unfairness of life. These types of questions stem from seeing so much suffering in such young patients and their families. Children may die from many different things, such as cancer, infection, and accidents. Pediatric patient deaths can be especially hard for physicians due to the realization of the number of years the patient has lost. Specifically, when a child dies, there is lost potential, and one inevitably thinks about what type of person that child may have been. The child has lost their life, and with this comes the loss of the future years the child would have experienced (Granek et al., 2016; Dr. Bryan, personal communication, October 2021).

More generally, there are many different emotions and thoughts associated with doctors’ grief over a patient. Doctors are troubled by the loss of life. They may also feel guilty because they made a mistake, or because they wish they had done more to try and save the patient. There are also potential legal and financial ramifications that physicians may encounter in addition to the other difficult parts of a patient’s death. Guilt can arise regardless of a doctor’s actual ability to save a patient, which can lead to feelings of helplessness. For example, a child may come in with a severe trauma, and even though there was no way to save the child, the physician may still feel bad for not being able to save them. Even when a doctor does everything they can, they may still feel guilty about a patient’s death. Many doctors take patients’ deaths and try to learn something from them. Physicians often have a hard time knowing when to stop trying to save a patient. Additionally, especially during the time of COVID-19, many doctors have put their lives at risk in order to treat patients. Traditionally, one may not think of doctors as risking their lives to save others, but many do. Physicians are passionate about saving lives, even when it means risking their own. This makes it even more difficult to accept the death of a patient whom a physician worked hard to save (Kostka et al., 2021; Dr. Yvon Bryan, personal communication, October 2021).

Several factors play a part in how a patient’s death may affect their doctor. A primary factor is whether the death was unexpected. Unexpected deaths may take a larger emotional toll on many doctors than expected deaths do. If a patient suffering from terminal cancer dies, it may be sad, but less difficult because it was expected. If a patient comes in after an accident and dies, this is often harder for doctors to deal with. This is especially true for children who die suddenly. However, not all deaths are tragedies. Some patients may have lived full lives and died from an illness later in life. In these cases, it may be easier for doctors to deal with their deaths (Dr. Yvon Bryan, personal communication, October 2021; Jones & Finlay, 2014).


Case Studies

In this section, I will use several case studies to try and understand how various patient deaths affect doctors differently and the wisdom a doctor may gain from specific patients they lose. Dr. Bryan shared with me various cases he has encountered. He also explained what he learned from each case and how it may have changed how he thought about things in his own life. As a future physician, I am trying to learn about how the body works and understand the mechanistic aspects of the human body as well as its physiology. What I have learned is that the body is the sum of many parts. The cases below illustrate not just the loss of an organ system, but the loss of an entire life. Understanding the physiology of how someone dies and how one might try and save them is not the same as understanding the tragedy that surrounds a patient’s death (Dr. Bryan, Personal communication, October 2021).

When talking about anesthesia specifically, it is important to remember that anesthesiologists spend most of their time with patients who are unconscious. This means that the patient doesn’t necessarily show their humanity for the majority of the time that the anesthesiologist is with them. Dr. Bryan told me how he reflects on cases and the importance of seeing patients not just as having a type of issue but rather as human beings. All the patient deaths had a common theme: each death taught something about humanity (Dr. Bryan, Personal communication, October 2021).

The first case was his first patient death, in his third year of medical school. A doctor’s first patient death is always significant. In this case, the patient, a farmer, came into the emergency room and ultimately died later during hospitalization.

This was difficult for Dr. Bryan, as he spent time with the patient and saw their humanity. Initially, the patient was just a human being who came into the emergency room with a problem. Dr. Bryan’s later familiarity with the patient made the death harder to deal with on a personal level. When describing the case, he stated that this patient essentially gave him a tour of the hospital because of all the complications the patient experienced. This specific patient was in the hospital for about a month before he died from vascular disease and end organ failure. One of the main lessons I learned from this case was that the most difficult part of seeing a patient die is sometimes watching the patient’s humanity be lost (Dr. Bryan, Personal communication, October 2021).

Next, he told me about a case which involved a two-year-old boy who was attacked and bitten by a dog, unprovoked. The dog was the grandfather’s rottweiler. The boy came in with bite injuries on his thigh, face, and head. Before going into the operating room, the boy was not thought to be at high risk of dying. This element of the case made it more difficult, as the death was unexpected. During surgery, the doctors found that a bone chip had gone into the sagittal sinus in the boy’s brain. When the neurosurgeon tried to take it out, the child ended up bleeding to death in the operating room. This case was particularly difficult for Dr. Bryan for many reasons. The unexpected nature of the patient’s death meant that he had no time to reflect on the case before going into the operating room. This case was also very tragic and complicated, as it was the grandfather’s dog that was ultimately responsible for the boy’s death. The odds of dying from a dog bite are low, which made the death more surprising and therefore more difficult. Another more obvious factor in this case was the age of the patient, in addition to the cause of death. The boy was just two years old when he died. The boy also died after being bitten by a pet, something many of us interact with every day (Dr. Bryan, Personal communication, October 2021; Gazoni et al., 2008).

Image 3: An operating room. Image Source: Unsplash

Table 1: The impact of death and what we can learn from it based on my interviews with Dr. Bryan. Table Source: Created by Author

How Does the Student Learn from the Wisdom of Death?

Through my research and conversations with Dr. Bryan, I learned a lot about how doctors deal with patient deaths. The main thing I have learned is that thinking about how I, a future doctor, will deal with death is helpful but will never fully prepare me to deal with a patient’s death. Doctors cannot tell how a patient’s death will affect them until after it happens, and for this reason, thinking about how one will deal with it is important but insufficient to prepare for the real experience. Each doctor comes with their own personal baggage, opinions, and histories. Doctors can sometimes react irrationally to patient deaths because of these factors. For example, Dr. Bryan never allowed his children to go on trampolines after having seen a patient whose injury was caused by a trampoline accident. It may not have been rational to keep his children off trampolines because of a single patient, yet this was his reaction. Another example Dr. Bryan gave me was how he never allowed his children to have a dog. Many dogs are not dangerous; nonetheless, Dr. Bryan did not allow his children to have a dog after seeing a child die because of one. A doctor’s personal history will inevitably influence how that doctor handles the loss of each individual patient. Generally, not all deaths are the same, and some will affect doctors more or less than others (Gazoni et al., 2012).

Another important thing I learned from Dr. Bryan is that thinking about patient deaths involves understanding the humanity of people and not just seeing a patient as a set of numbers. Patient deaths can have qualitative, long-term effects on their loved ones as well as on the doctors who treated them. This is a very important point to recognize. Dr. Bryan taught me that patient deaths begin to change you, and that is completely normal. Patient deaths can have lasting psychological impacts on doctors, prompting them to think about what they really value in life. Doctors also learn from patient deaths. Doctors see the value in enjoying life firsthand because they watch patients lose the ability to do the things those patients enjoy. Death can happen quickly, even in just a minute, though the period leading to death can involve a slow decline with a drastic decline at the very end. Overall, this paper raises many questions and considerations for undergraduate students considering a career in medicine. It also aims to better prepare students to shadow doctors in the future (Zheng et al., 2018).

References

Danticat, E. (2017). The art of death: Writing the final story. Graywolf Press.

Gazoni, Amato, P. E., Malik, Z. M., & Durieux, M. E. (2012). The Impact of Perioperative Catastrophes on Anesthesiologists: Results of a National Survey. Anesthesia and Analgesia, 114(3), 596–603. https://doi.org/10.1213/ANE.0b013e318227524e

Gazoni, Durieux, M. E., & Wells, L. (2008). Life After Death: The Aftermath of Perioperative Catastrophes. Anesthesia and Analgesia, 107(2), 591–600. https://doi.org/10.1213/ane.0b013e31817a9c77

Goldstein, E. B. (2014). Sensation and perception. Wadsworth.

Granek, L., Barrera, M., Scheinemann, K., & Bartels, U. (2016). Pediatric oncologists’ coping strategies for dealing with patient death. Journal of Psychosocial Oncology, 34(1-2), 39–59. https://doi.org/10.1080/07347332.2015.1127306

Jones, R., & Finlay, F. (2014). Medical students’ experiences and perception of support following the death of a patient in the UK, and while overseas during their elective period. Postgraduate Medical Journal, 90(1060), 69–74. https://doi.org/10.1136/postgradmedj-2012-131474

Kostka, A. M., Borodzicz, A., & Krzemińska, S. A. (2021). Feelings and Emotions of Nurses Related to Dying and Death of Patients – A Pilot Study. Psychology Research and Behavior Management, 14, 705–717. https://doi.org/10.2147/PRBM.S311996

Mertig, R. G. (2012). Nurses’ guide to teaching diabetes self-management (2nd ed.). New York: Springer Pub.

Rhodes-Kropf, Carmody, S. S., Seltzer, D., Redinbaugh, E., Gadmer, N., Block, S. D., & Arnold, R. M. (2005). “This is just too awful; I just can’t believe I experienced that...”: medical students’ reactions to their “most memorable” patient death. Academic Medicine, 80(7), 634–640.

Trivate, T., Dennis, A. A., Sholl, S., & Wilkinson, T. (2019). Learning and coping through reflection: Exploring patient death experiences of medical students. BMC Medical Education, 19(1), 451. https://doi.org/10.1186/s12909-019-1871-9

Zheng, R., Lee, S. F., & Bloomer, M. J. (2018). How nurses cope with patient death: A systematic review and qualitative meta-synthesis. Journal of Clinical Nursing, 27(1-2), e39–e49. https://doi.org/10.1111/jocn.13975

Potential Culprit of Alzheimer’s Disease - Hyperphosphorylated Tau

BY ETHAN LIU '25

Cover Image: Neurofibrillary tangles in the hippocampus of an old person with Alzheimer-related pathology. Image Source: Wikimedia Commons

Abstract

Alzheimer’s disease (AD) is an age-related neurodegenerative disorder and the leading cause of dementia. Since the first report of this disease by Dr. Alois Alzheimer in 1906, extensive studies have revealed several pathological features, including extracellular neuritic plaques containing Aβ and intracellular neurofibrillary tangles mainly composed of hyperphosphorylated tau. According to the Aβ hypothesis, which has dominated the field in the last two decades, the primary cause of neurodegeneration in AD is the abnormal accumulation or disposal of Aβ. However, the successive failure of several clinical trials targeting Aβ-associated pathology has raised doubts about its role in neurodegeneration. It is beneficial to revisit the importance of another essential protein, tau, in the pathogenesis of AD to explore more strategies for treating this devastating disorder. This report will review the physiological and pathological functions of tau and its relation to neurodegeneration in AD. Additionally, recent evidence supporting the tau hypothesis will be addressed.

Introduction

Alzheimer’s disease (AD) is the most common neurodegenerative disorder associated with aging. It is clinically characterized by dementia followed by other cognitive impairments, such as aphasia (inability to understand or express speech), agnosia (inability to interpret sensations), apraxia (inability to perform purposeful movements), and behavioral disturbance. The disease affects approximately 10% of individuals older than 65 and about 33% of individuals 85 and older. Progressive neuronal loss caused by the disease is associated with the accumulation of insoluble fibrous materials within the brain, both intracellularly and extracellularly. The extracellular deposits consist of aggregated beta-amyloid protein (Aβ), which is derived from a precursor protein called β-amyloid precursor protein (APP) that undergoes sequential cleavages by proteinases (Hebert et al., 2003). Aβ is a short peptide, and, although it is initially nonfibrillar, it progressively aggregates into fibrils that form neuritic plaques. Intracellularly, cell bodies and apical dendrites of neurons are occupied by intraneuronal filamentous inclusions, which are called neurofibrillary tangles (NFTs). NFTs mainly consist of hyperphosphorylated forms of the microtubule-associated protein (MAP) tau (Serrano-Pozo et al., 2011).

Image 1: Diseased vs Healthy Neuron. Image Source: Wikimedia Commons

Although Alzheimer’s disease is pathologically characterized by the presence of both neuritic plaques and NFTs, recent evidence indicates that neurofibrillary tangles could have a more significant impact on the progression of the disease. First, the extent and topographical distribution of neurofibrillary lesions strongly correlate with the degree of dementia. The progression of NFTs in AD follows a typical spatiotemporal pattern, which can be characterized in six neuropathological stages. During stages I and II, the superficial cell layers of the transentorhinal and entorhinal regions are affected. Patients are still clinically unimpaired at these stages. In stages III and IV, severe NFTs develop extensively in the hippocampus and the amygdala with limited growth in the association cortex. In stages V and VI, significant neurofibrillary lesions appear in the isocortical association areas. The patient meets the criteria for the neuropathological diagnosis of AD during these two stages (Braak et al., 2014).

The development of neurofibrillary lesions contrasts with the development of Aβ deposits. Aβ deposits show a density and distribution pattern that varies significantly among individuals and is not a reliable indicator of Alzheimer’s disease. In contrast, the gradual and patterned development of neurofibrillary lesions allows scientists to gauge the progression of the disease (Serrano-Pozo et al., 2011). Furthermore, although neuritic plaques are exclusive to AD patients, Aβ deposition can be observed in healthy individuals. Neurofibrillary lesions are only observed in neurodegenerative disorders such as AD, amyotrophic lateral sclerosis (ALS), Down syndrome, and postencephalitic parkinsonism (Rodrigue et al., 2009). Therefore, it has been theorized that neurofibrillary lesions have a more significant impact on Alzheimer’s patients than neuritic plaques. This paper provides a summary of the physiological and pathological roles of tau in AD and discusses the advancement of the tau hypothesis in understanding AD progression.

Tau Protein and its Physiological Function

Tau is a phosphoprotein that belongs to the microtubule-associated protein (MAP) family. Microtubules are structures in the cell cytoskeleton that give neurons structural integrity and support cellular transport. Tau is an intrinsically disordered protein (lacking a fixed three-dimensional shape) and is naturally unfolded and soluble under normal conditions. Although it is primarily found in neurons in the central nervous system, a relatively high concentration of tau is present in the heart, kidneys, lungs, skeletal muscles, pancreas, and testis. Trace amounts can also be found in the adrenal gland, stomach, and liver (Gu et al., 1996). In the adult brain, there are six tau isoforms, all products of alternative mRNA splicing from a single gene, MAPT. The six isoforms, which range from 352 to 441 amino acid residues, differ in the number of N-terminal inserts (0N, 1N, or 2N) and in the presence of three or four microtubule-binding repeats (3R or 4R) near the C-terminal end. Each isoform has a different effect on microtubules, and the isoforms are not equally expressed in cells. Tau is developmentally regulated, since the abundance of the different isoforms changes as people age.

Tau plays an essential role in regulating microtubule dynamics and serves as a potent promoter of tubulin polymerization in vivo. The protein increases the rate of association and decreases the rate of dissociation of tubulin proteins at the growing ends of microtubules. It also reduces the dynamic instability of microtubules (the tendency for microtubules to change lengths rapidly) by preventing catastrophe, a period where microtubules rapidly shorten in response to stimuli (Barbier et al., 2019). These effects have been observed in living cells: when tau was injected into fibroblasts, the cells showed an increase in microtubule mass and greater microtubule resistance to depolymerizing agents (Drubin & Kirschner, 1986). Besides binding directly to microtubules, tau proteins also bind to other cytoskeletal components, such as spectrin and actin filaments. These interactions may allow microtubules to interconnect with other cytoskeletal components, such as neurofilaments, to change cell shape and flexibility (Mietelska-Porowska et al., 2014). Additionally, tau serves as a postsynaptic scaffold protein that modulates actin binding to substrates by regulating kinases that phosphorylate actin (Sharma et al., 2007). Overall, under normal physiological conditions, tau is involved in the regulation of microtubule dynamics, spatial arrangement, and cell stability, which affects a variety of cellular functions.
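The isoform naming described in this section can be made concrete with a short sketch. It assumes only what the text states: three possible N-terminal insert counts and two possible repeat counts, combined freely. The function and constant names are hypothetical and chosen for illustration.

```python
from itertools import product

# Options named in the text: number of N-terminal inserts and
# number of microtubule-binding repeats.
N_TERMINAL_INSERTS = ("0N", "1N", "2N")
BINDING_REPEATS = ("3R", "4R")


def tau_isoform_labels():
    """Return one label per combination of insert count and repeat count."""
    return [n + r for n, r in product(N_TERMINAL_INSERTS, BINDING_REPEATS)]


if __name__ == "__main__":
    labels = tau_isoform_labels()
    # 3 insert options x 2 repeat options = 6 isoforms
    print(len(labels), labels)
```

Three insert options multiplied by two repeat options gives the six isoforms, which is why each is conventionally labeled by a pair such as 0N3R or 2N4R.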

Tau Phosphorylation and Aggregation

The phosphorylation of tau is regulated by kinase and phosphatase activity, although the specific kinases (which phosphorylate proteins) and phosphatases (which dephosphorylate proteins) involved are still under investigation. Levels of phosphorylated tau are high in fetuses and decrease with age due to phosphatase activation. Glycogen synthase kinase 3 (GSK3), cyclin-dependent kinase 5 (CDK5), and microtubule-affinity-regulating kinase (MARK) are promising candidates currently under study as regulators of tau phosphorylation. Previous studies showed that inhibiting GSK3 with lithium chloride decreased tau phosphorylation and lowered levels of aggregated and insoluble tau in vivo, suggesting that GSK3 could be a drug target for treating AD (Noble et al., 2005). In contrast to kinases, phosphatases reduce the amount of phosphorylated tau. One study found that when protein phosphatase-2A (PP2A) activity is increased following downregulation of its endogenous inhibitors, I1PP2A and I2PP2A, the level of phosphorylated tau decreases (Chen et al., 2008). Therefore, disturbances

Image 2: MAP2 and tau in healthy neurons. MAP2 is stained green, tau is stained red, and DNA is stained blue. Yellow regions show where the red tau stain overlaps the green MAP2 stain. Image Source: Wikimedia Commons


Image 3: Brain sections showing tau protein (brown spots). From left to right, 1) the brain of a healthy 65-year-old, 2) the brain of a former NFL linebacker who suffered eight concussions and died at age 45, John Grimsley, 3) the brain of a 73-year-old boxer who suffered from extreme dementia pugilistica. Image Source: Flickr

in the balance between kinase and phosphatase activities are suspected to contribute to the hyperphosphorylated status of tau protein and its toxicity to neurons. In the pathological state, hyperphosphorylated tau dissociates from microtubules and becomes unbound tau in the cytoplasm. It has been speculated that this increase in hyperphosphorylated cytosolic tau promotes a conformational change that favors tau misfolding and aggregation. NFTs are mainly made up of paired helical filaments (PHFs), which are composed of tau in an abnormally phosphorylated state. All six isoforms have been detected in neurofibrillary lesions at varying levels. With the help of cryo-electron microscopy, a precise microscopy technique that involves cooling the sample to cryogenic temperatures, it was discovered that the 3R tau isoforms assembled into twisted, paired, helical-like filaments, while the 4R tau isoforms assembled into straight filaments (Fitzpatrick et al., 2017). Although the stepwise process of tau aggregation is not well understood, one hypothesis for a nucleation-dependent assembly mechanism suggests that tau dissociates from the microtubules and undergoes a conformational change that makes it more prone to misfolding. Early deposits of tau, which promote the formation of pretangles, are thought to lack β-sheet structures, since they cannot be detected by histochemistry, which involves staining the protein with dyes that detect specific structures. Later deposits of tau, however, can be detected by histochemistry and therefore contain β-sheets, suggesting a structural transition. Recent evidence suggests that this structural change may be caused by tubulin dimers and oligomers, conglomerations of microtubule subunits. After efficient filament addition and elongation, the mature PHFs are eventually formed (Meraz-Rios et al., 2010). More work is still required to understand the factors promoting tau hyperphosphorylation and the nucleating steps.

Tau Hypothesis in the Pathogenesis of AD and Its Recent Updates

The tau hypothesis is one of the two major hypotheses proposed to explain the pathogenesis and progression of AD (the other being the Aβ hypothesis). The tau hypothesis states that tau phosphorylation and aggregation are the primary cause of neurodegeneration in AD. First, the topographical distribution of NFTs can characterize the progression of AD. Clinicopathological studies have demonstrated that the amount and distribution of NFTs correlate with the severity and duration of dementia, while the distribution of Aβ does not (Kametani & Hasegawa, 2018). Second, it has been demonstrated that tau-related pathology occurs before the onset of Aβ accumulation. Dr. Braak and Dr. Del Tredici, who outlined the original six stages of AD (previously described in this paper), have revised the staging system to include pretangles that form in subcortical and transentorhinal regions of the brain before NFT stage I. During these stages, tau lesions are not accompanied by Aβ deposits (Braak & Del Tredici, 2014). Third, animal studies indicate that tau mediates Aβ-induced neurodegeneration. Knocking out the tau gene in APP/PS1 (amyloid precursor protein/presenilin 1) mice conferred protection not only against memory impairment, but also against synaptic loss and premature death. APP/PS1 mice that lacked tau had lower plaque burdens than age-matched APP/PS1 mice that expressed tau (Leroy et al., 2012). Fourth, tau can elicit neuronal toxicity independent of Aβ. The pathological accumulation of tau within different cell types is responsible for a heterogeneous class of neurodegenerative diseases, which are termed tauopathies. Tau-mediated toxicity is extensively supported by findings from familial frontotemporal dementia (a condition formerly known as frontotemporal dementia with parkinsonism-17) (Goedert et al., 2017). With the advancement of AD studies through genetic animal models, the Tau P301S mouse model demonstrated synaptic deficits in the hippocampus before the formation of NFTs. Another tau mouse model (rTg4510), which conditionally expresses P301L mutant tau, showed cell loss without the presence of NFTs, suggesting that early pathological species other than NFTs mediate cellular toxicity.

The puzzle of how NFTs are formed is gradually being solved. One step toward solving it is identifying an intermediate species between tau monomers and NFTs, called tau oligomers. With the help of atomic force microscopy, granular oligomers were identified in Braak stage 0 brain samples. These oligomeric species were suggested to increase as the pre-symptomatic stages advance, possibly explaining the synaptic degeneration that precedes NFTs. Oligomers were also detected in the P301L transgenic mice and rTg4510 mice (Meraz-Rios et al., 2010). While the results are still inconclusive, these oligomeric species could be the cause of NFT formation.

Another recent update of the tau hypothesis emphasizes the propagation of tau lesions through a prion-like mechanism, or “seeding” concept. Prions are misfolded proteins that convert normal proteins into their misfolded shapes. Although there is no evidence that tau is an infectious entity, its capacity to transform healthy tau species into aggregation-prone species resembles the cell-to-cell transmission of prions in vivo. Guo and Lee (2011) demonstrated that minute quantities of misfolded preformed fibrils could rapidly yield large amounts of filamentous inclusions when introduced into tau-expressing cells. The resulting aggregates have highly ordered β-pleated sheet structures and resemble the NFTs found in AD patients according to several analyses, including immunofluorescence, amyloid dye binding, immuno-EM, and biochemical analysis. These results indicate that preformed fibrils can potentially act like a prion to transform healthy tau species into NFTs (Guo & Lee, 2011). The hypothesis of tau-induced seeding is supported by numerous studies conducted in both cell and animal models. In these studies, tau seeds – derived from brain

Image 4: Model mouse brain with neurofibrillary tangles (blue). Neurons are stained green, while blood vessels are red. Image Source: Flickr


homogenates of tauopathy patients, from symptomatic tau transgenic mice, or from recombinant tau in vitro – induced tau aggregates in transfected cells. In all instances, tau aggregates were competent for seeding, though to different degrees (Goedert et al., 1989). To demonstrate that tau aggregates engage in prion-like behaviour, more evidence is required to elucidate the mechanisms of cellular uptake, templated seeding, and subsequent intercellular transfer that induce similar aggregation in recipient cells.

Conclusion

Tau is a natively unfolded protein, and its ability to oligomerize and aggregate is regulated by post-translational modifications, with hyperphosphorylation being the most detrimental change. The pathological tau species confer their neurotoxicity through both gain-of-function and loss-of-function mechanisms, which have been the foundations of the tau hypothesis in AD. A growing body of data further identifies tau oligomers as the conformers mediating neurodegeneration. Anti-amyloid (Aβ) therapy has been the focus of the field for the past 25 years, but due to a lack of clinical efficacy, scientists have recently turned their attention to anti-tau treatments (Vaz & Silvestre, 2020). Currently, there are studies targeting kinases involved in tau phosphorylation, such as glycogen synthase kinase-3 beta (GSK-3β) (Lauretti et al., 2020). Several drugs, including lithium, a treatment for bipolar disorder, have been considered for their ability to inhibit GSK-3β (Carmassi et al., 2016). The prevention of tau aggregation is also being researched using Methylthioninium Blue (also called Methylene Blue) or its derivatives, which have been shown to prevent aggregation in vitro (Wischik et al., 2015). There are also studies on tau clearance, which removes abnormal tau through immunotherapy. This approach is still early in the clinical process and requires more time to be tested (Bittar et al., 2020). However, all these therapies are still in phase II or III clinical trials and remain in development. Hopefully, some of them will be successful and bring us closer to understanding and defeating this mysterious disease.

References
Barbier, P., Zejneli, O., Martinho, M., Lasorsa, A., Belle, V., Smet-Nocca, C., Tsvetkov, P. O., Devred, F., & Landrieu, I. (2019). Role of Tau as a Microtubule-Associated Protein: Structural and Functional Aspects. Frontiers in Aging Neuroscience, 11, 204. https://doi.org/10.3389/fnagi.2019.00204
Bittar, A., Bhatt, N., & Kayed, R. (2020). Advances and considerations in AD tau-targeted immunotherapy. Neurobiology of Disease, 134, 104707. https://doi.org/10.1016/j.nbd.2019.104707
Braak, H., & Braak, E. (1995). Staging of Alzheimer's disease-related neurofibrillary changes. Neurobiol Aging, 16(3), 271-278; discussion 278-284.
Braak, H., & Del Tredici, K. (2014). Are cases with tau pathology occurring in the absence of Abeta deposits part of the AD-related pathological process? Acta Neuropathol, 128(6), 767-772. https://doi.org/10.1007/s00401-014-1356-1
Carmassi, C., Del Grande, C., Gesi, C., Musetti, L., & Dell'Osso, L. (2016). A new look at an old drug: Neuroprotective effects and therapeutic potentials of lithium salts. Neuropsychiatric Disease and Treatment, 12, 1687-1703. https://doi.org/10.2147/NDT.S106479
Chen, S., Li, B., Grundke-Iqbal, I., & Iqbal, K. (2008). I1PP2A affects tau phosphorylation via association with the catalytic subunit of protein phosphatase 2A. J Biol Chem, 283(16), 10513-10521. https://doi.org/10.1074/jbc.M709852200
Drubin, D. G., & Kirschner, M. W. (1986). Tau protein function in living cells. J Cell Biol, 103(6 Pt 2), 2739-2746. https://doi.org/10.1083/jcb.103.6.2739
Fitzpatrick, A., Falcon, B., He, S., Murzin, A. G., Murshudov, G., Garringer, H. J., Crowther, R. A., Ghetti, B., Goedert, M., & Scheres, S. (2017). Cryo-EM structures of tau filaments from Alzheimer's disease. Nature, 547(7662), 185–190. https://doi.org/10.1038/nature23002
Goedert, M., Eisenberg, D. S., & Crowther, R. A. (2017). Propagation of Tau Aggregates and Neurodegeneration. Annu Rev Neurosci, 40, 189-210. https://doi.org/10.1146/annurev-neuro-072116-031153
Goedert, M., Spillantini, M. G., Jakes, R., Rutherford, D., & Crowther, R. A. (1989). Multiple isoforms of human microtubule-associated protein tau: sequences and localization in neurofibrillary tangles of Alzheimer's disease. Neuron, 3(4), 519-526. https://doi.org/10.1016/0896-6273(89)90210-9
Goedert, M., Spillantini, M. G., Potier, M. C., Ulrich, J., & Crowther, R. A. (1989). Cloning and sequencing of the cDNA encoding an isoform of microtubule-associated protein tau containing four tandem repeats: differential expression of tau protein mRNAs in human brain. EMBO J, 8(2), 393-399.
Gu, Y., Oyama, F., & Ihara, Y. (1996). Tau is widely expressed in rat tissues. J Neurochem, 67(3), 1235-1244. doi: 10.1046/j.1471-4159.1996.67031235.
Guo, J. L., & Lee, V. M. (2011). Seeding of normal Tau by pathological Tau conformers drives pathogenesis of Alzheimer-like tangles. J Biol Chem, 286(17), 15317-15331. https://doi.org/10.1074/jbc.M110.209296
Harada, A., Oguchi, K., Okabe, S., et al. (1994). Altered microtubule organization in small-calibre axons of mice lacking tau protein. Nature, 369, 488–491. https://doi.org/10.1038/369488a0
Hebert, L. E., Scherr, P. A., Bienias, J. L., Bennett, D. A., & Evans, D. A. (2003). Alzheimer disease in the US population: prevalence estimates using the 2000 census. Arch Neurol, 60(8), 1119-1122. https://doi.org/10.1001/archneur.60.8.1119
Hutton, M., Lendon, C. L., Rizzu, P., Baker, M., Froelich, S., Houlden, H., Pickering-Brown, S., Chakraverty, S., Isaacs, A., Grover, A., Hackett, J., Adamson, J., Lincoln, S., Dickson, D., Davies, P., Petersen, R. C., Stevens, M., de Graaff, E., Wauters, E., van Baren, J., … Heutink, P. (1998). Association of missense and 5'-splice-site mutations in tau with the inherited dementia FTDP-17. Nature, 393(6686), 702–705. https://doi.org/10.1038/31508
Hyman, B. T., Phelps, C. H., Beach, T. G., Bigio, E. H., Cairns, N. J., Carrillo, M. C., . . . Montine, T. J. (2012). National Institute on Aging-Alzheimer's Association guidelines for the neuropathologic assessment of Alzheimer's disease. Alzheimers Dement, 8(1), 1-13. https://doi.org/10.1016/j.jalz.2011.10.007
Kametani, F., & Hasegawa, M. (2018). Reconsideration of amyloid hypothesis and tau hypothesis in Alzheimer's disease. Front Neurosci, 12, 25. https://doi.org/10.3389/fnins.2018.00025
Lauretti, E., Dincer, O., & Praticò, D. (2020). Glycogen synthase kinase-3 signaling in Alzheimer's disease. Biochimica et Biophysica Acta. Molecular Cell Research, 1867(5), 118664. https://doi.org/10.1016/j.bbamcr.2020.118664
Leroy, K., Ando, K., Laporte, V., Dedecker, R., Suain, V., Authelet, M., Héraud, C., Pierrot, N., Yilmaz, Z., Octave, J. N., & Brion, J. P. (2012). Lack of tau proteins rescues neuronal cell death and decreases amyloidogenic processing of APP in APP/PS1 mice. The American Journal of Pathology, 181(6), 1928–1940. https://doi.org/10.1016/j.ajpath.2012.08.012
Meraz-Rios, M. A., Lira-De Leon, K. I., Campos-Pena, V., De Anda-Hernandez, M. A., & Mena-Lopez, R. (2010). Tau oligomers and aggregation in Alzheimer's disease. J Neurochem, 112(6), 1353-1367. doi: 10.1111/j.1471-4159.2009.06511.
Mietelska-Porowska, A., Wasik, U., Goras, M., Filipek, A., & Niewiadomska, G. (2014). Tau protein modifications and interactions: their role in function and dysfunction. Int J Mol Sci, 15(3), 4671-4713. https://doi.org/10.3390/ijms15034671
Noble, W., Planel, E., Zehr, C., Olm, V., Meyerson, J., Suleman, F., Gaynor, K., Wang, L., LaFrancois, J., Feinstein, B., Burns, M., Krishnamurthy, P., Wen, Y., Bhat, R., Lewis, J., Dickson, D., & Duff, K. (2005). Inhibition of glycogen synthase kinase-3 by lithium correlates with reduced tauopathy and degeneration in vivo. Proceedings of the National Academy of Sciences of the United States of America, 102(19), 6990–6995. https://doi.org/10.1073/pnas.0500466102
Rodrigue, K. M., Kennedy, K. M., & Park, D. C. (2009). Beta-amyloid deposition and the aging brain. Neuropsychol Rev, 19(4), 436-450. https://doi.org/10.1007/s11065-009-9118-x
Serrano-Pozo, A., Frosch, M. P., Masliah, E., & Hyman, B. T. (2011). Neuropathological alterations in Alzheimer disease. Cold Spring Harb Perspect Med, 1(1), a006189. https://doi.org/10.1101/cshperspect.a006189
Sharma, V. M., Litersky, J. M., Bhaskar, K., & Lee, G. (2007). Tau impacts on growth-factor-stimulated actin remodeling. J Cell Sci, 120(Pt 5), 748-757. https://doi.org/10.1242/jcs.03378
Vaz, M., & Silvestre, S. (2020). Alzheimer's disease: Recent treatment strategies. European Journal of Pharmacology, 887, 173554. https://doi.org/10.1016/j.ejphar.2020.173554
Wischik, C. M., Staff, R. T., Wischik, D. J., Bentham, P., Murray, A. D., Storey, J. M., Kook, K. A., & Harrington, C. R. (2015). Tau aggregation inhibitor therapy: an exploratory phase 2 study in mild or moderate Alzheimer's disease. Journal of Alzheimer's Disease, 44(2), 705–720. https://doi.org/10.3233/JAD-142874


Machine Learning in Freeway Ramp Metering

BY JAKE TWAROG '24

Cover Image: A ramp meter on the Sylvan westbound entrance to I-26 in Portland, Oregon. There is another meter not pictured on the other side. "STOP HERE ON RED" is illuminated when the ramp meter is active. Image Source: Wikimedia Commons

Introduction

Despite their purpose as a means of mass transportation, freeways are known for the antithesis of that goal: traffic. They are susceptible to interruption and delay, which has a cascading effect and can cause congestion to persist long after its initial source has been resolved. Traffic is difficult to predict, even with new, modernized equipment for measuring network flow, such as dedicated probe vehicles and smartphones (Zhang, 2015). In addition, once traffic becomes dense, it is hard to return flow to stable levels. Traffic generated by highway entrances, called "on-ramps," can be especially problematic at peak hours. On-ramps, particularly when their access is controlled by a traffic light, send large groups of cars known as "platoons" onto the highway. These platoons interfere with the ongoing flow of the freeway, causing significant jams.

One technique that has been used to combat this is called ramp metering. Ramp metering was introduced in the United States in 1963 on I-290 in Chicago and has since spread to other major urban areas (Yang, 2019). Despite being similar in appearance to traffic lights, ramp meters function quite differently; they often lack yellow lights, and when there is more than one lane, each lane gets its own light. Although ramp metering is often frustrating for drivers because it adds another light to a freeway commute, it has been shown to reduce on-ramp traffic by breaking up platoons. When freeway flow is reduced or a bottleneck forms, limiting the number of vehicles that can enter the freeway at once significantly lessens the impact of the disruption (Haboian, 1995). This paper discusses the algorithms used to implement ramp metering and how newer algorithms can improve its effectiveness.

Fixed Parameter Algorithms

To maximize the efficiency of on-ramp flow, the use of ramp meters must be tightly optimized to avoid delays when traffic on the freeway is light. Many different types of algorithms have been used to determine both when a ramp meter should be active and what its light timings should be. Two existing strategies for ramp metering are known as the RWS strategy and the ALINEA strategy. The RWS strategy is a simple strategy that sets the number of vehicles allowed to enter the freeway as a function of the time step k, written r(k). Metering systems collect data about traffic flow through induction loops integrated into the freeway and the on-ramps themselves, and both strategies can use these data. If the critical capacity has not yet been exceeded, the allowed flow is the difference between the downstream critical capacity and the last measured upstream freeway flow. A certain minimum ramp flow is specified so that the on-ramp is never completely halted. The downside to this approach is that minor disturbances in traffic, which are often hard to correct for, can heavily skew the data. For this reason, a smoothed version of the upstream flow is used instead of the raw values (Knoop, 2018).

Image 1: Ramp metering is used internationally as well as in the U.S. Here, an on-ramp in Auckland, New Zealand sports a two-lane ramp metering system. Image Source: Wikimedia Commons

The ALINEA strategy focuses more on downstream conditions than on upstream conditions (Knoop, 2018). It attempts to hold the downstream traffic state (in the standard formulation, the detector occupancy) at a target value, ô. Variations of this algorithm incorporate traffic density or other factors to avoid downstream bottlenecks (Knoop, 2018). ALINEA is one of the more effective methods of preventing freeway slowdowns but can have difficulty keeping on-ramp queues short (Ghanbartehrani, 2020).

Ultimately, both strategies fall somewhat short on their own because they rely on fixed parameters, which need to be set to a specific, distinct value for each on-ramp. It is difficult and labor-intensive for engineers to calculate the optimal values for each ramp, costing considerable money and time. Fixed parameters also do not correct for all edge cases. For these reasons, adaptive methods prove to be more effective in many scenarios.
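The two fixed-parameter strategies described in this section can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the implementation used by any agency: the RWS function follows the description above (spare downstream capacity, with a minimum ramp flow), while the ALINEA function uses the commonly published integral feedback form r(k) = r(k-1) + K(ô - o(k)), which the article itself does not spell out. The function names, the smoothing weight `alpha`, the gain of 70, and the flow limits are all hypothetical values chosen for illustration.

```python
def smooth_flow(previous_smoothed, measurement, alpha=0.3):
    """Exponentially smooth the upstream flow; alpha is an assumed weight."""
    return alpha * measurement + (1.0 - alpha) * previous_smoothed


def rws_rate(smoothed_upstream_flow, downstream_capacity, min_ramp_flow):
    """RWS-style rate: spare downstream capacity, never below the minimum."""
    spare_capacity = downstream_capacity - smoothed_upstream_flow
    return max(min_ramp_flow, spare_capacity)


def alinea_rate(previous_rate, measured_occupancy, target_occupancy,
                gain=70.0, min_ramp_flow=200.0, max_ramp_flow=1800.0):
    """ALINEA-style feedback: nudge the previous rate toward the target.

    The gain and the flow limits are the fixed parameters that must be
    tuned per ramp; the defaults here are illustrative assumptions.
    """
    proposed = previous_rate + gain * (target_occupancy - measured_occupancy)
    return min(max_ramp_flow, max(min_ramp_flow, proposed))


if __name__ == "__main__":
    # Flows in vehicles per hour, occupancies in percent (assumed units).
    upstream = smooth_flow(3600.0, 4000.0)
    print("RWS rate:", rws_rate(upstream, 4200.0, 200.0))
    print("ALINEA rate:", alinea_rate(900.0, 25.0, 20.0))
```

Every constant in the sketch (capacity, gain, target occupancy, minimum flow) would have to be calibrated separately for each on-ramp, which is the cost the section attributes to fixed-parameter methods.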


Machine Learning

An intelligent algorithm could address the drawbacks of fixed parameters. In addition, it would be far more cost-effective and applicable to a wide range of scenarios, without having to be finely tuned for the dramatically different conditions on various freeways. A team of researchers headed by Saeed Ghanbartehrani and Anahita Sanandaji of Ohio University proposed using real historical data to train an algorithm that accounts for unpredictable situations when setting ramp meter timings. Their methodology used four main machine learning modules: data refinement and selection; regression; clustering; and the ramp metering algorithm itself (Ghanbartehrani, 2020).

One section of the I-205 freeway, an auxiliary Interstate in Oregon, was used to train the algorithm. The team gathered the number of vehicles entering the ramp at five-minute intervals throughout the week. The data formed a pattern with two peaks on weekdays (the morning and evening rush hours) and one peak in the afternoon on weekends. In the regression stage, the team created a model to predict the volume of traffic, Vol(t*), from Time(t), Occupancy(t), Speed(t), and Vol(t). This model consistently mirrored the actual data with considerable accuracy, making it a good choice for the algorithm (Ghanbartehrani, 2020).

For the clustering step, two k-means clustering approaches were used. The first clustered the data by time and Δvol/Δt to identify the traffic phases. This helped the algorithm compare the data it detected with a baseline and adjust for anomalies, such as accidents. The second clustered the data by traffic type and the rate at which traffic was expected to change, so it was based only on the change in volume with respect to time. This allowed the algorithm to select the correct values and model for the specific state (Ghanbartehrani, 2020).
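The second clustering step, which groups observations only by the rate of change in volume, can be illustrated with a small sketch. This is not the researchers' code: it is a plain one-dimensional k-means (Lloyd's algorithm) written from scratch, and the vehicle counts, the choice of k = 3, and the function names are assumptions made for illustration.

```python
def volume_change_rates(volumes, interval_minutes=5):
    """Return Δvol/Δt between consecutive counts, in vehicles per minute."""
    return [(b - a) / interval_minutes for a, b in zip(volumes, volumes[1:])]


def kmeans_1d(values, k, iterations=50):
    """Cluster scalars with Lloyd's algorithm; returns (centroids, labels)."""
    ordered = sorted(values)
    # Deterministic start: k evenly spaced order statistics of the data.
    centroids = [ordered[(i * (len(ordered) - 1)) // max(k - 1, 1)]
                 for i in range(k)]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c]))
                  for v in values]
        # Update step: each centroid moves to the mean of its members.
        updated = []
        for c in range(k):
            members = [v for v, lab in zip(values, labels) if lab == c]
            updated.append(sum(members) / len(members) if members
                           else centroids[c])
        if updated == centroids:
            break
        centroids = updated
    return centroids, labels


if __name__ == "__main__":
    # Hypothetical five-minute ramp counts around a single peak.
    counts = [40, 42, 41, 60, 85, 110, 112, 111, 90, 65, 45, 44]
    rates = volume_change_rates(counts)
    centroids, labels = kmeans_1d(rates, k=3)
    print(centroids)
    print(labels)
```

With three clusters on these made-up counts, the groups correspond to falling, roughly steady, and rising volume, which is the kind of traffic-state label a metering algorithm could use to pick the matching model.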


A comparison with a standard ALINEA scenario found that ALINEA generated 8% more red lights than the proposed algorithm (Ghanbartehrani, 2020). The proposed algorithm also significantly reduced the maximum ramp queue length, although the average queue length was similar and the two approaches had comparable overall flow. These results were promising, as they showed that even relatively unsophisticated machine learning techniques can compete with ALINEA. The algorithm can also be deployed far more cheaply, as it requires only a few weeks of traffic data. A short data collection period is a large advantage when constructing ramp meters. Xiabo Ma et al. (2020) studied the challenges that transportation departments face in collecting data under standard conditions and argued that six to eight weeks of data are often needed. This is often infeasible for fixed ramp metering techniques due to budget or time constraints, which forces engineers to rely on less accurate data, decreasing ramp metering efficiency (Ma et al., 2020).

Other Adaptive Algorithms

Another adaptive technique was studied by Kwangho Kim and Michael J. Cassidy of UC Berkeley. They proposed a ramp metering strategy based on kinematic wave theory, which models traffic as a wave. They identified four effects that occur in sequence when platoons of vehicles enter the freeway and that a metering strategy can exploit to reduce overall congestion. The first of these is the "pinch effect," in which merging and diverging maneuvers near a ramp form a jam. This jam then propagates upstream like a wave, which can cause another, even more restrictive jam if it encounters an upstream interchange. This triggers the "catch effect," as the bottleneck becomes "caught" at the new location. The new jam continues to propagate upstream slowly, while the original jam lessens, leaving an expanding pocket of free flow between them. This pocket has positive consequences, dubbed the "driver memory" effect and the "pumping effect," which allow higher ramp inflow within the pocket as drivers adopt shorter headways (Kim & Cassidy, 2012).

To exploit these positive effects, the researchers tested an unconventional metering logic that intentionally allowed traffic to slow substantially, then maximized the recovery period created by the driver memory and pumping effects so that the pocket existed for as long as possible. The study showed a 3% gain in long-run discharge flow over the alternatives, which saved up to 300 vehicle-hours of travel for commuters within the study site alone. It also reduced on-ramp queues. The one downside compared with other adaptive algorithms is that it could inhibit access to upstream off-ramps, because it intentionally reduces upstream flow for a period of time (Kim & Cassidy, 2012).

Conclusion

Despite the positive effects of these new techniques, queue length consistently limits the effectiveness of ramp metering. Geometric restrictions imposed by geography and existing infrastructure present significant challenges, primarily because ramp meters are often installed on existing ramps that were rarely designed for them. Because these ramps are frequently not long enough, designers must sacrifice either queue space or post-meter acceleration distance (Yang, 2019). However, there are ways to mitigate this, such as introducing an area-wide system instead of isolated ramp meters (Perrine, 2015). In addition, freeways have significant adverse effects on nearby communities. Freeway development has historically displaced minorities and underrepresented groups, tearing through parts of cities that once housed marginalized communities. Living in proximity to urban freeways has been linked to reduced socioeconomic status and even adverse birth outcomes (Genereux, 2008). Ramp metering, being a primary strategy used to increase the number and density of cars on freeways, exacerbates their environmental and societal impacts. While increasing traffic flow is good for commuters who use a car, reducing the number of cars on the road through improved public transportation is even more effective, which itself requires disincentivizing car use (Wiersma, 2017). Overall, integrating modern techniques such as machine learning into ramp metering can increase the effectiveness of an already useful strategy. While not without drawbacks, mitigating traffic on freeways can help make them more efficient.

References

Genereux, M., Auger, N., Goneau, M., & Daniel, M. (2008). Neighbourhood socioeconomic status, maternal education and adverse birth outcomes among mothers living near highways. Journal of Epidemiology & Community Health, 62(8), 695-700. https://doi.org/10.1136/jech.2007.066167

Ghanbartehrani, S., Sanandaji, A., Mokhtari, Z., & Tajik, K. (2020). A novel ramp metering approach based on machine learning and historical data. Machine Learning and Knowledge Extraction, 2(4), 379-396. https://doi.org/10.3390/make2040021

Haboian, K. A. (1995). A Case for Freeway Mainline Metering. Transportation Research Record, (1494), 11-20. https://onlinepubs.trb.org/Onlinepubs/trr/1995/1494/1494-002.pdf

Kim, K., & Cassidy, M. J. (2012). A capacity-increasing mechanism in freeway traffic. Transportation Research Part B: Methodological, 46(9), 1260-1272. https://doi.org/10.1016/j.trb.2012.06.002

Knoop, V. L. (2018). Ramp Metering with Real-Time Estimation of Parameters. In 2018 IEEE Intelligent Transportation Systems Conference: November 4-7, Maui, Hawaii (pp. 3619-3626). IEEE. doi:10.1016/j.trb.2012.06.002

Ma, X., Karimpour, A., & Wu, Y.-J. (2020). Statistical evaluation of data requirement for ramp metering performance assessment. Transportation Research Part A: Policy and Practice, 141, 248-261. https://doi.org/10.1016/j.tra.2020.09.011

Perrine, K. A., Lao, Y., Wang, J., & Wang, Y. (2015). Area-Wide ramp metering for targeted incidents: The additive increase, multiplicative decrease method. Journal of Computing in Civil Engineering, 29(2), 04014038. https://doi.org/10.1061/(asce)cp.1943-5487.0000321

Wiersma, J., Bertolini, L., & Straatemeier, T. (2017). Adapting spatial conditions to reduce car dependency in mid-sized ‘post growth’ European city regions: The case of South Limburg, Netherlands. Transport Policy, 55, 62-69. https://doi.org/10.1016/j.tranpol.2016.12.004

Yang, G., Tian, Z., Wang, Z., Xu, H., & Yue, R. (2019). Impact of on-ramp traffic flow arrival profile on queue length at metered on-ramps. Journal of Transportation Engineering, Part A: Systems, 145(2), 04018087. https://doi.org/10.1061/jtepbs.0000211

Zhang, L., & Mao, X. (2015). Vehicle density estimation of freeway traffic with unknown boundary demand–supply: An interacting multiple model approach. IET Control Theory & Applications, 9(13), 1989-1995. https://doi.org/10.1049/iet-cta.2014.1251

Image 2: Los Angeles, Route 101. Los Angeles is known for its extensive network of freeways around and within the city. Unfortunately, the construction of freeways heavily negatively impacted minority communities in the city, much like most others in the United States. Image Source: Wikimedia Commons


Radiation Therapy and the Effects of This Type of Cancer Treatment
BY JEAN YUAN '25

Cover Image: This specific machine uses external beam radiation therapy in which the radiation oncologist can target a certain area of the body with radiation and damage the cells in that area. Image Source: Flickr

What is Radiation Therapy?

Radiation oncology is the field of medicine in which doctors study and treat cancer with various methods of radiation therapy. Cancer cells are abnormal cells that no longer respond to the body signals that control cellular growth and death; they divide without control, leading to the invasion of nearby tissues (O’Connor & Adams, 2010). Radiation therapy targets these cancer cells with regulated doses of high-energy radiation, with the goal of killing them while minimizing the radiation dose to normal cells, thereby preserving the organs and other healthy tissues. The therapy damages the DNA of the cancer cells by ionization, leading to loss of the cells’ ability to reproduce and eventually to cell death (Baskar et al., 2012). Ionization occurs when radiation deposits enough energy in tissue to remove electrons from atoms and molecules, ultimately causing damage to cells in that area. However, this kind of therapy also affects the division of cells in normal tissues. Damage to normal cells can cause unwanted side effects, such as radiation sickness (nausea, vomiting, diarrhea, etc.) and secondary cancers or malignancies. A radiation oncologist is responsible for finding the right balance between destroying cancer cells and minimizing damage to normal cells. Radiation therapy does not kill cancer cells immediately. A treatment session takes only ten to fifteen minutes, but it can take up to eight weeks to see results. Cancer cells continue to die even months after treatment (Baskar et al., 2012; National Cancer Institute, n.d.).

Types of Radiation Therapy

There are two types of radiation therapy: external beam radiation therapy and internal radiation therapy (Baskar et al., 2012; National Cancer Institute, n.d.). The type that is administered depends on several factors: the type of cancer, the location of the cancerous tumor, the health and medical history of the patient, the patient’s previous treatments, and the patient’s age (National Cancer Institute, n.d.). In external beam radiation therapy, a machine aims radiation at the affected area from various directions in order to target the cancerous tumor. This type of radiation therapy is a local treatment: it treats only a specific part of the body (Washington & Leaver, 2015). It is commonly used on cancers of the breast, lung, prostate, colon, head, and neck. There are many types of external beam radiation therapy (Schulz-Ertner & Jäkel, 2006), each of which relies on a computer to analyze the tumor in order to create an accurate treatment program. They include 3-D conformal radiation therapy (3DCRT), intensity-modulated radiation therapy (IMRT), image-guided radiation therapy (IGRT), and stereotactic body radiation therapy. 3DCRT shapes the radiation beams to match the shape of the tumor, which allows for accurate targeting and leaves less room for error. IMRT uses linear accelerators, which produce high-energy rays with pinpoint accuracy, to distribute radiation precisely across the cancerous tumor while trying to prevent damage to healthy tissue (Baskar et al., 2012). IGRT is used when the area that needs to be treated is in close proximity to a critical structure, such as a moving organ. It takes images and scans of the tumor to help doctors position the radiation beams in the correct spot. Lastly, stereotactic radiation therapy uses 3-D imaging to focus high-dose radiation beams on the cancerous tumor in order to try to preserve as much healthy tissue as possible. Because of the high dose, the tissue immediately around the tumor will be damaged, but the overall amount of healthy tissue damaged is reduced. This type has shown positive results in treating early-stage lung cancer in patients unfit for surgery (Baskar et al., 2012; Sadeghi et al., 2010; Xing et al., 2005; National Cancer Institute, n.d.).

Internal radiation therapy, more commonly known as brachytherapy, is more invasive in that an oncologist inserts radioactive material at the site of the cancer. Because the radioactive implant is placed in or close to the tumor, it emits a high dose of radiation directly at the tumor while keeping the nearby tissue relatively safe. The benefit of brachytherapy is the ability to deliver high doses of radiation to the tumor while limiting damage to the surrounding healthy tissues. This method harms as few healthy cells as possible (Washington & Leaver, 2015; National Cancer Institute, n.d.). The type of implant used depends on the type of cancer. If a permanent implant is administered, its radiation fades over time. Examples of the cancers brachytherapy is used to treat include prostate, gynecological, and breast cancers (Sadeghi et al., 2010; National Cancer Institute, n.d.).

Dosage

Since normal cells are also exposed to radiation during treatment of the cancerous cells, determining the maximum dose that can safely be administered is very important. Multiple smaller doses of radiation are less damaging to normal cells than a single dose equal to the total (Xing et al., 2005; National Cancer Institute, n.d.). The concept of the therapeutic ratio, which compares the blood concentration at which a drug becomes toxic with the concentration at which the drug is effective, becomes an important parameter when trying to balance an acceptable probability of complications in healthy tissue against the probability of controlling the tumor (Tamarago et al., 2015). A key factor in the development, safety, and optimization of a treatment is ensuring a balance between the toxic and effective concentrations, which is why the therapeutic ratio is an integral part of radiation therapy. There must be a balance between the dose prescribed, the tumor volume, and the organ-at-risk (OAR) tolerance levels, an approach called isotoxic dose prescription (IDP) (Zindler et al., 2018). IDP allows the prescribed radiation dose to be a predefined balance between the probability of normal tissue complications (estimated from the tolerance of specific organs and tissues to the dose) and the probability of controlling the tumor (Washington & Leaver, 2015; Zindler et al., 2018). The goal of the radiation therapy plays a large role in determining what kind of radiation therapy is needed. When only a small dose is needed, usually to ease cancer symptoms, the treatment is considered palliative care, in which the goal is to prevent or treat symptoms and side effects of cancer as early as possible in order to improve quality of life and reduce pain (National Cancer Institute, n.d.). Too much radiation can cause unwanted side effects because radiation can kill nearby healthy cells.
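One common way to quantify why several smaller doses spare normal tissue is the linear-quadratic model, which this article does not discuss and which is used here only as an illustrative assumption. Under that model, the biologically effective dose (BED) of n fractions of d gray each is n × d × (1 + d / (α/β)). The α/β value of 3 Gy for normal tissue and the 20 Gy total below are assumed, textbook-style numbers, not figures from the sources cited above.

```python
def biologically_effective_dose(dose_per_fraction_gy, n_fractions, alpha_beta_gy):
    """BED under the linear-quadratic model: n * d * (1 + d / (alpha/beta))."""
    total_dose_gy = n_fractions * dose_per_fraction_gy
    return total_dose_gy * (1.0 + dose_per_fraction_gy / alpha_beta_gy)


# Assumed alpha/beta ratio for late-responding normal tissue (illustrative).
ALPHA_BETA_NORMAL_GY = 3.0

# The same 20 Gy physical dose, delivered in two hypothetical ways.
single_dose_bed = biologically_effective_dose(20.0, 1, ALPHA_BETA_NORMAL_GY)
fractionated_bed = biologically_effective_dose(2.0, 10, ALPHA_BETA_NORMAL_GY)

print(f"One 20 Gy dose:       BED = {single_dose_bed:.1f} Gy")
print(f"Ten 2 Gy fractions:   BED = {fractionated_bed:.1f} Gy")
```

Under these assumptions, the fractionated schedule yields a much lower effective dose to normal tissue than the single large dose, even though the total physical dose is the same.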
There is a limit to how much radiation a body can receive: the lifetime limit for one person is usually about 400 millisieverts, and the annual limit is about 20 millisieverts (National Cancer Institute, n.d.; Hall et al., 2006). Any more than the recommended amount of radiation can lead to long-term problems, such as a secondary cancer, which is either a cancer that originated from the first and has metastasized to another location in the body, or a second type of cancer caused by the treatment of the first (which is more common with radiation therapy). Extreme amounts of radiation can even lead to death. The quantity of radiation is determined by the concentration of radiation photons and the energy of the individual photons. If one area of the body has received the limit of radiation, another part of the body can only be treated with radiation therapy if it is far enough from the previous area (Sadeghi et al., 2010; National Cancer Institute, n.d.).
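The statement that the quantity of radiation depends on both the concentration of photons and the energy of each photon can be written as a simple product, often called energy fluence. The following is a minimal sketch under assumed values: the function name, the photon count, and the 6 MeV energy are hypothetical, and energy fluence is not the same thing as the dose absorbed by tissue.

```python
# Exact SI conversion factor: 1 MeV expressed in joules.
MEV_TO_JOULE = 1.602176634e-13


def energy_fluence_j_per_cm2(photons_per_cm2, photon_energy_mev):
    """Energy carried through a unit area: photon count times energy per photon."""
    return photons_per_cm2 * photon_energy_mev * MEV_TO_JOULE


# Hypothetical beam: 1e10 photons per square centimetre at 6 MeV each.
baseline = energy_fluence_j_per_cm2(1e10, 6.0)
more_photons = energy_fluence_j_per_cm2(2e10, 6.0)  # double the concentration
more_energy = energy_fluence_j_per_cm2(1e10, 12.0)  # double the photon energy

print(baseline, more_photons, more_energy)
```

Doubling either the photon concentration or the energy per photon doubles the energy delivered through the same area.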

Dosage is more of a concern in internal radiation therapy. There are two types of treatment: high-dose rate brachytherapy and low-dose rate brachytherapy (Xing et al., 2005; National Cancer Institute, n.d.). In high-dose rate (HDR) brachytherapy, the patient is treated for several minutes at a time with a powerful radiation source that is inserted in the body. The source is removed after ten to twenty minutes, and the treatment can be repeated a few times per day or once a day over the course of a few weeks, depending on the type of cancer and the type of treatment needed. In low-dose rate brachytherapy, an implant is left in for one to a few days, during which it emits lower doses of radiation over a longer period of time, and is then removed. Usually, patients stay in the hospital while being treated. If permanent implants are used, they become harmless once the radiation is no longer present, and there is no need to take them out (Sadeghi et al., 2010; National Cancer Institute, n.d.).