A lot can happen in 24 hours with the current British Government, so it is with caution that I say ‘at the time of writing’ things are looking up for a potential renewal of connections between UK and European science teams working on joint research.

European Commission President Ursula von der Leyen declared that talks on the UK joining Horizon, the EU's science research programme, can begin ‘the moment’ the so-called Windsor Framework for Northern Ireland is approved.

The mechanics of post-Brexit trade in Northern Ireland have been a sticking point and an ‘elephant in the room’ for the UK and Europe since their somewhat acrimonious break-up.

The reality is that Britain did very well out of the near hundred-billion-euro Horizon 2020 coffers, receiving seven billion euros as one of the leading beneficiaries.

For science, Brexit was enormously frustrating: British universities lost not only important research funds but equally important collaborations with European researchers.

As a seasoned editor and journalist, Richard Forsyth has been reporting on numerous aspects of European scientific research for over 10 years. He has written for many titles including ERCIM’s publication, CSP Today, Sustainable Development magazine, eStrategies magazine and remains a regular contributor to the UK business press. He also works in Public Relations for businesses to help them communicate their services effectively to industry and consumers.

What’s more, the Campaign for Science and Engineering (CASE) group indicated earlier that over one and a half billion pounds the Conservative Party was supposed to set aside for UK research had been returned to the Treasury.

All this aside, for the sake of science in general, I have great hope that Britain and Europe can re-establish a strong research relationship and tackle some of the most important scientific challenges together, rather than apart.

Hope you enjoy the issue.

Richard Forsyth Editor

Research News

EU Research takes a closer look at the latest news and technical breakthroughs from across the European research landscape.

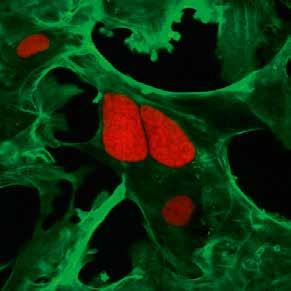

Diversity and compartmentalisation of monocytes & macrophages and immuneparesis in patients with cirrhosis

The project ‘Diversity and compartmentalisation of monocytes & macrophages and immuneparesis in patients with cirrhosis’ aims to understand the immune response in cirrhosis patients, as Dr. Christine Bernsmeier explains.

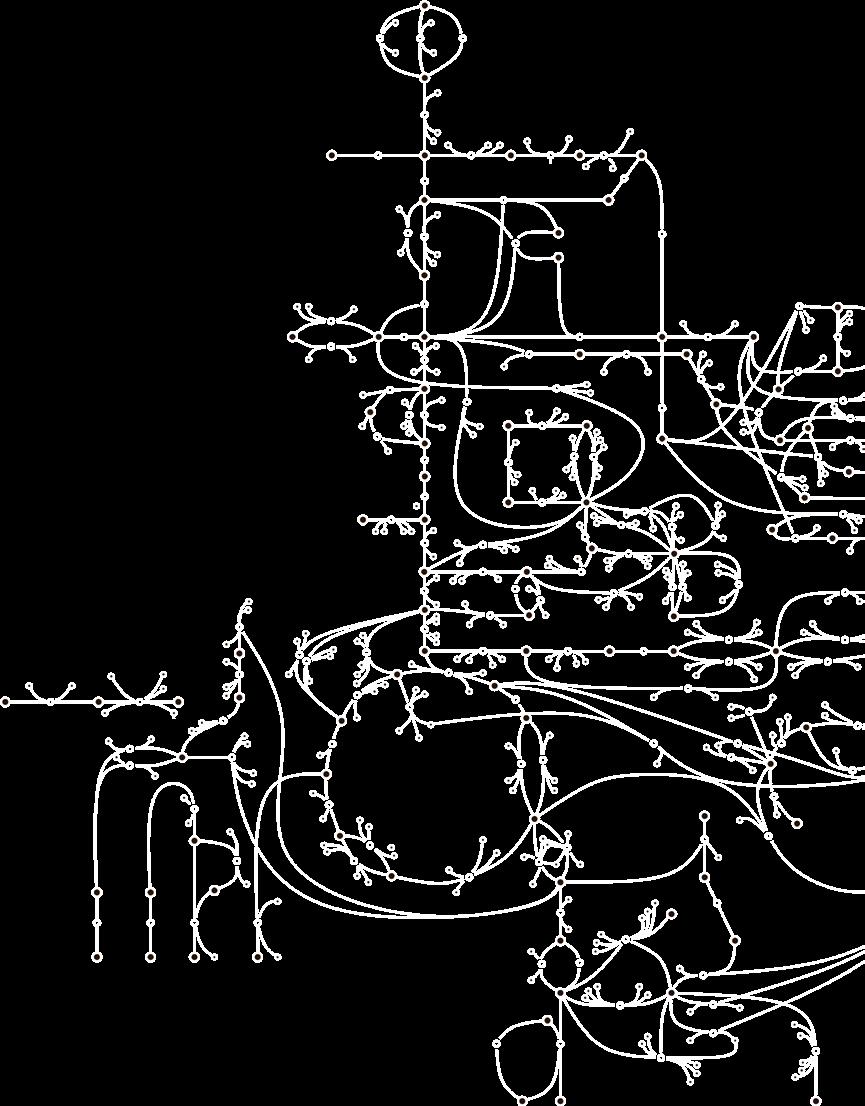

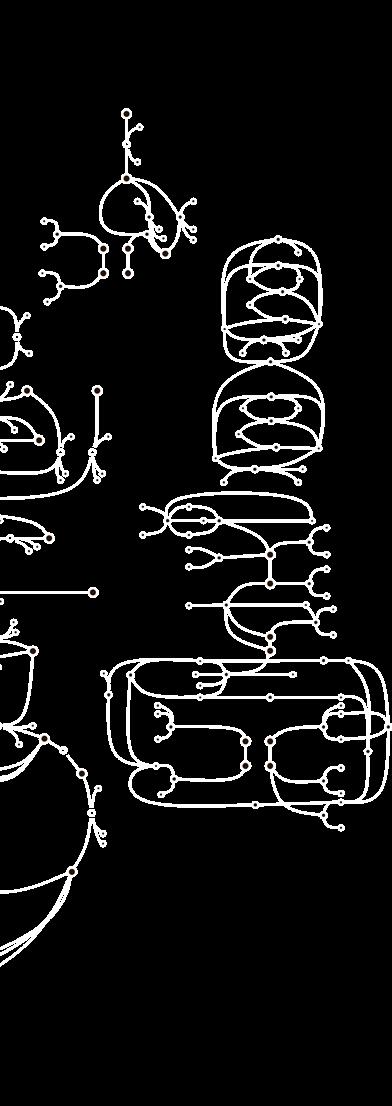

PATHOGEN HOST CELL INTERACTION

We spoke to Dr. Volker Heussler, who researches the development of the Plasmodium parasite inside hepatocytes and the factors that influence its survival or elimination.

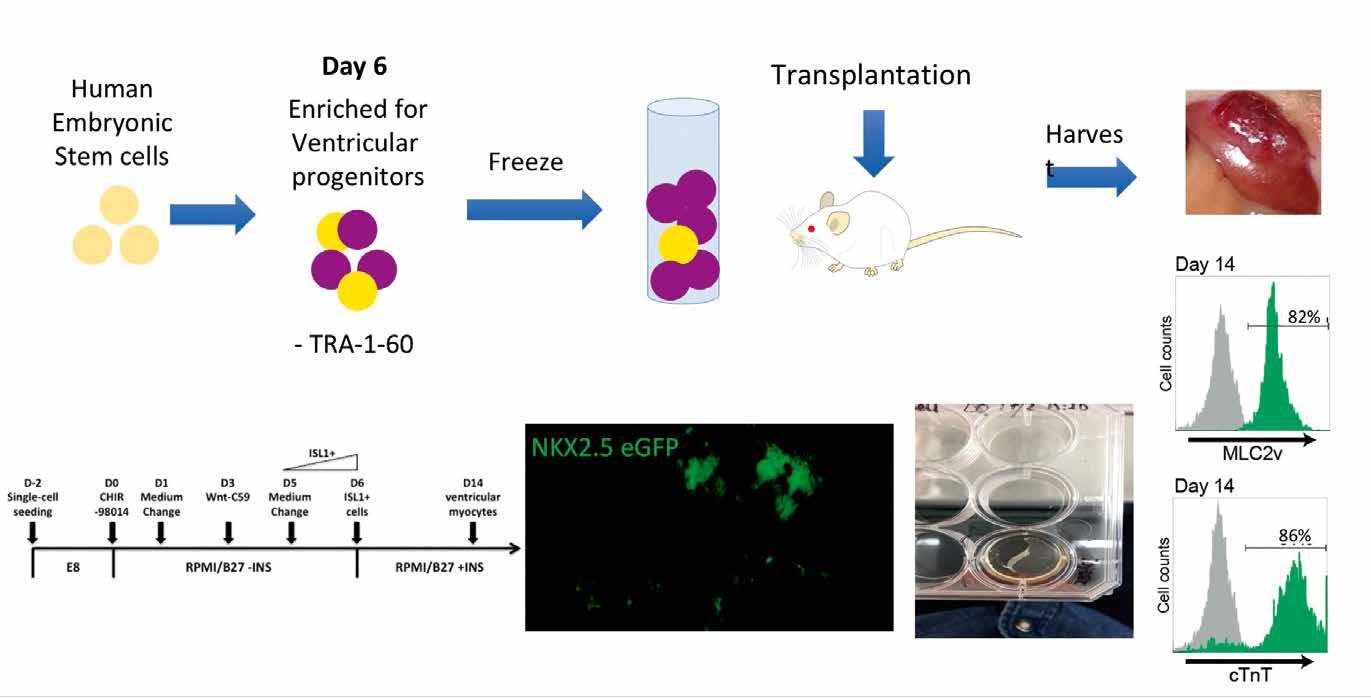

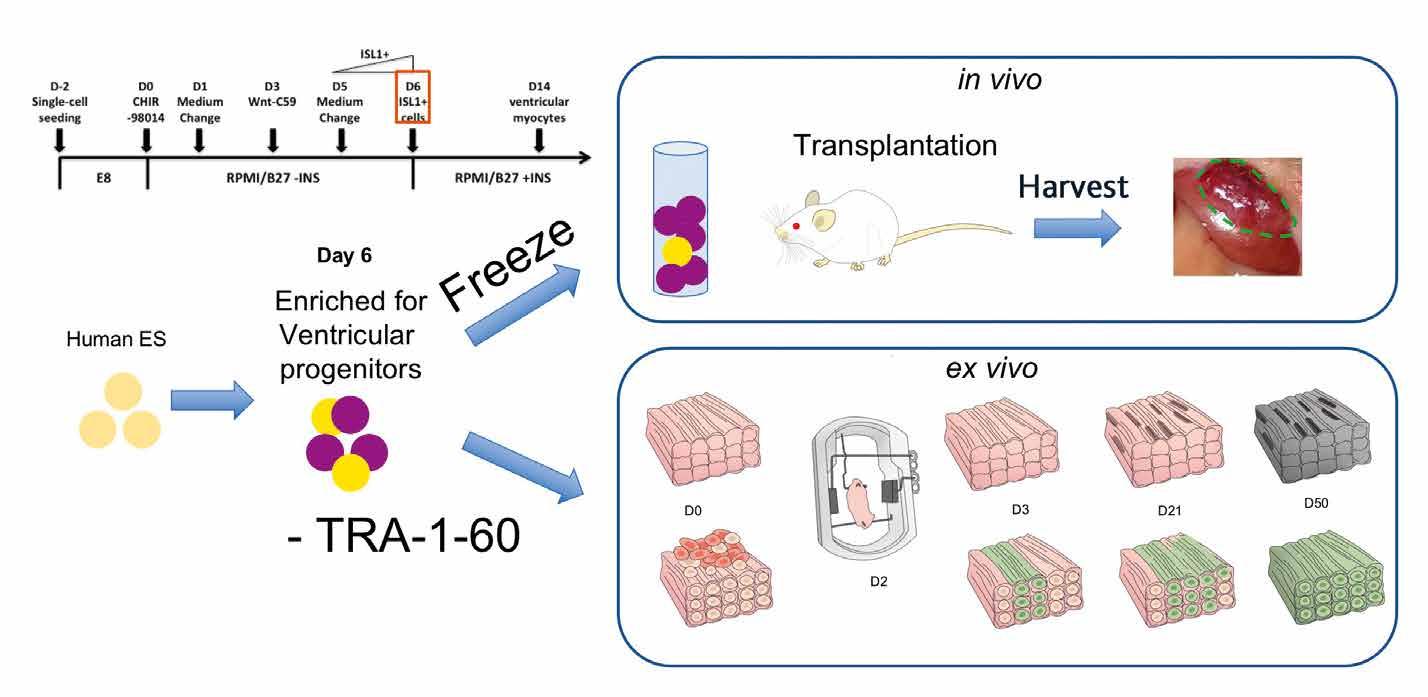

5D HEART PATCH

The 5D Heart Patch Project has identified human ventricular progenitor (HVP) cells that can create self-assembling heart grafts in vivo, offering hope to people suffering from heart failure.

CSI-FUN

Researchers in the ERC-funded CSI-FUN project are investigating the molecular mechanisms underlying CSI and how it spreads through the body, as Erwin Wagner explains.

It’s essential to ensure that implants are safe, a topic at the heart of the work of Dr. Anna Igual Munoz and Dr Stefano Mischler in collaboration with Prof. Dr Brigitte Jolles-Haeberli.

SAHR

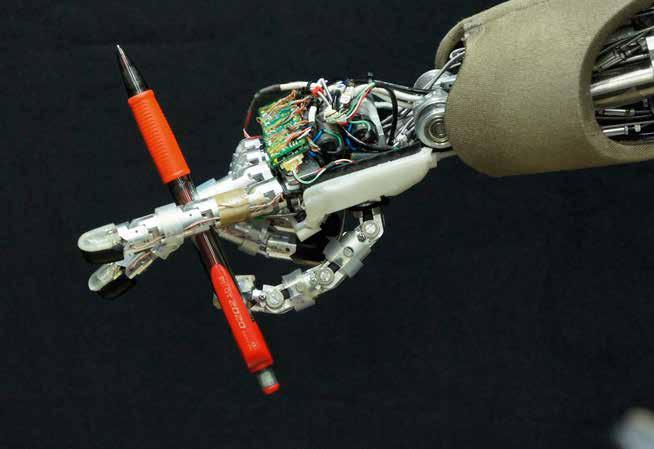

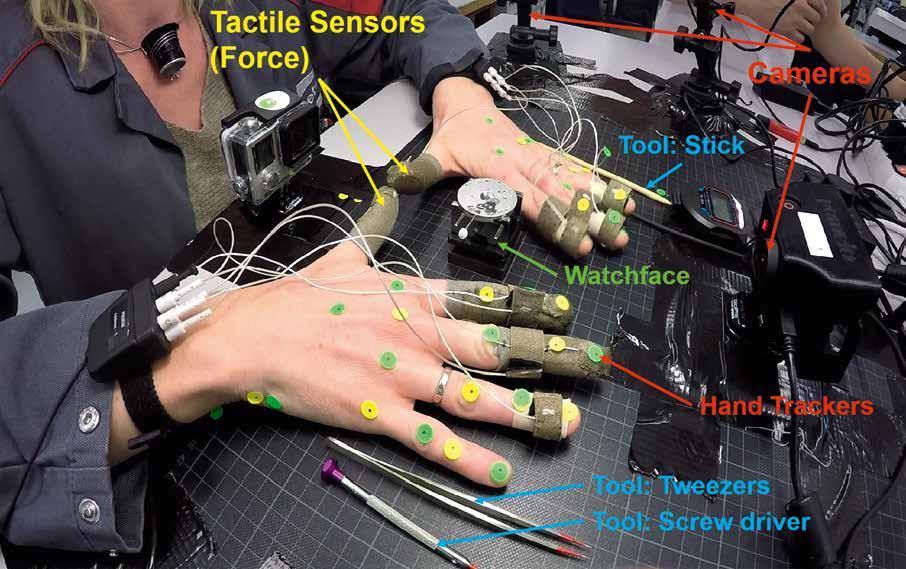

Researchers in the SAHR project are studying how humans acquire fine motor skills and looking to develop new learning and control algorithms for robots, as Professor Aude Billard explains.

Innovation can make a difference in earthquake-prone areas, for prediction, resistance and search and rescue. Here are some ways science can mitigate the horrors that can occur in earthquakes. By Richard Forsyth.

Researchers in the REALM project are looking again at earth system modelling practice as they work to develop a new kind of vegetation model, as Professor Colin Prentice explains.

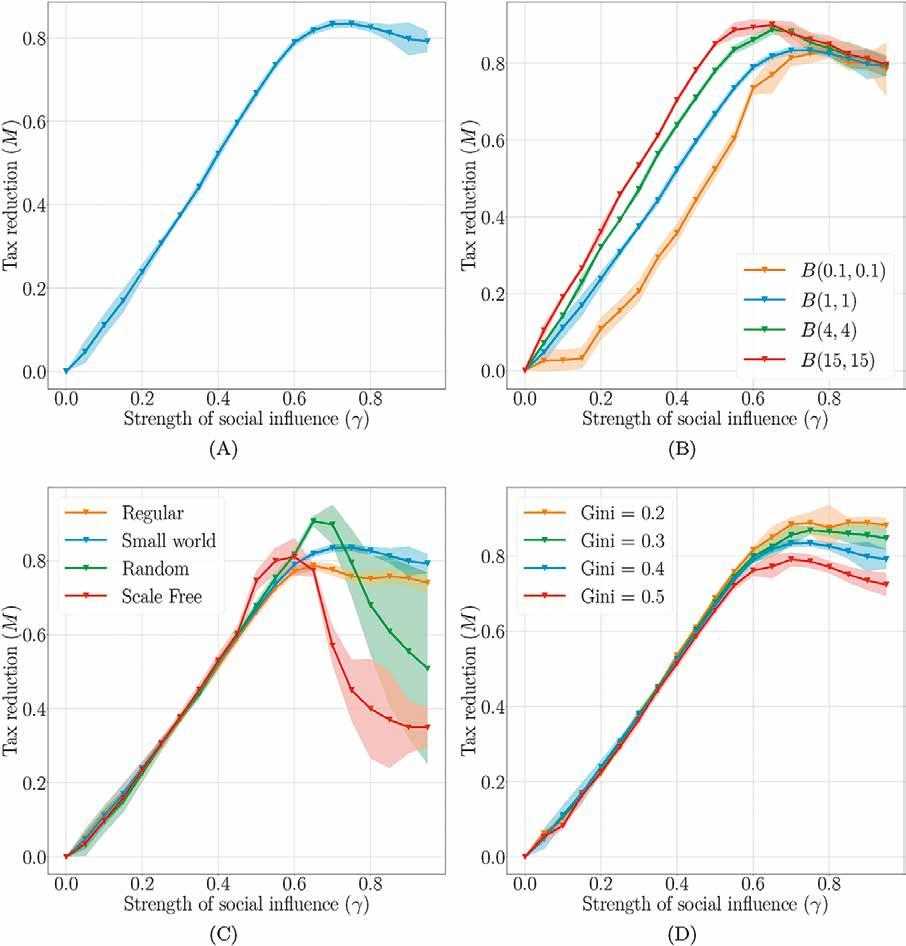

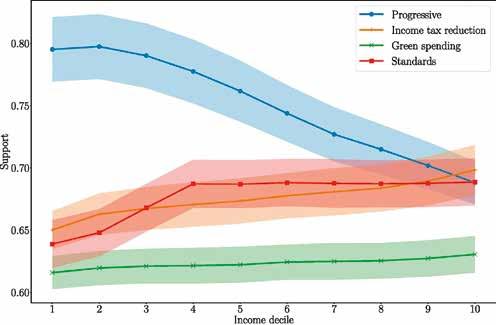

New assessment models will provide a more solid basis for comparison of instruments like carbon pricing, regulation and information provision, as Professor Jeroen van den Bergh explains.

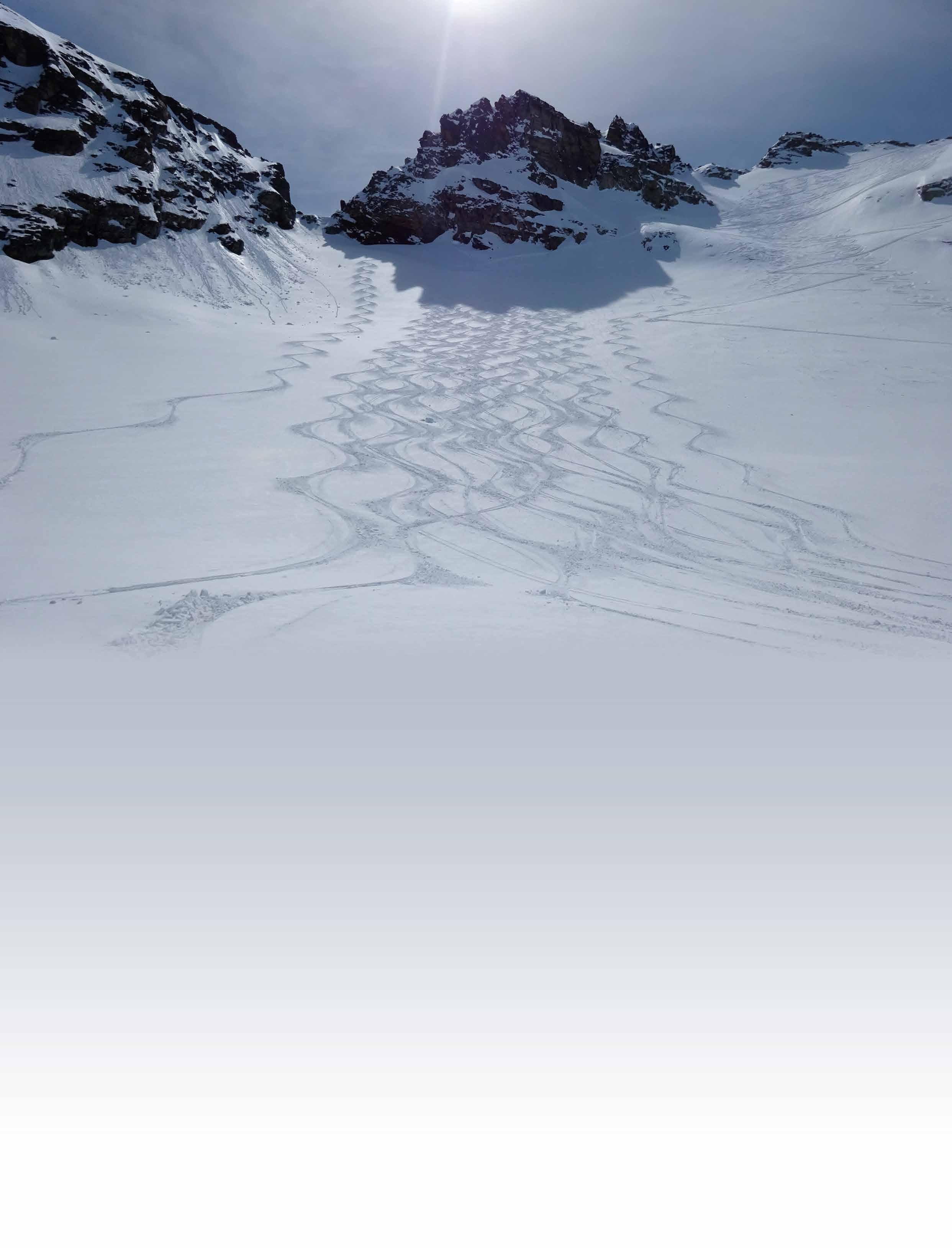

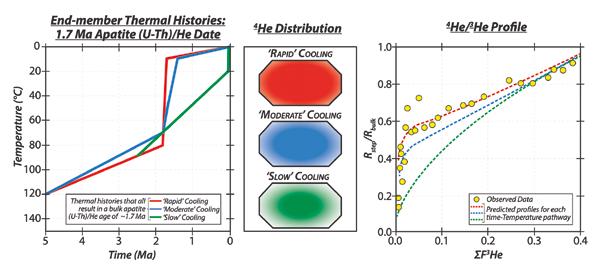

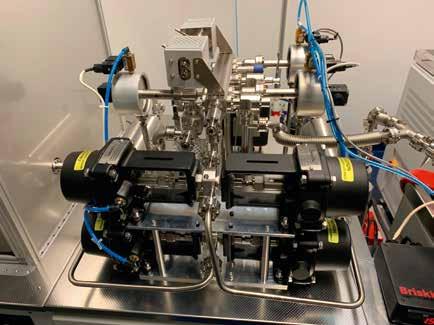

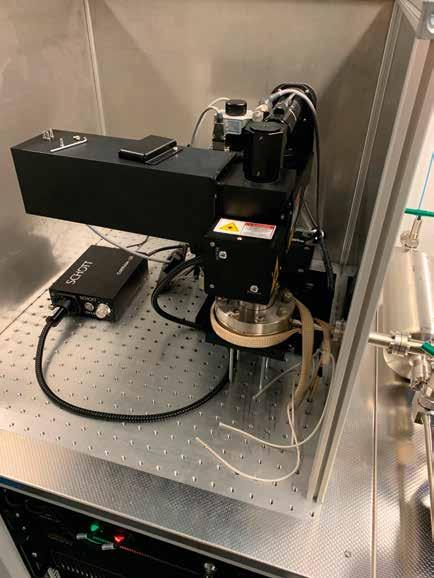

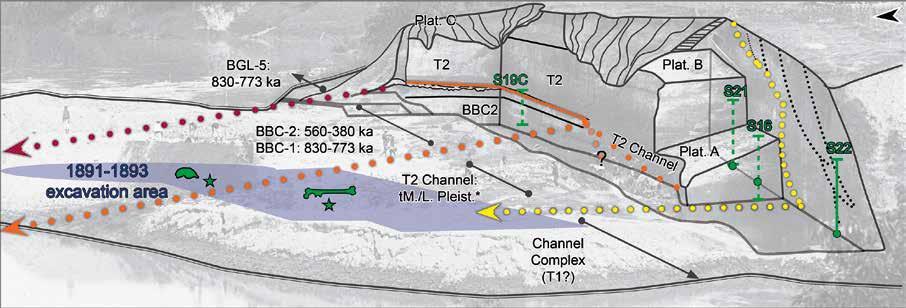

Professor Peter van der Beek and his colleagues in the COOLER project are investigating the transformation of fluvial landscapes into glacial landscapes.

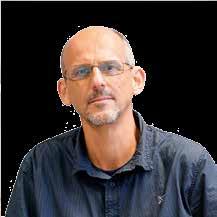

In their research, Dr Axel Franzen and Fabienne Woehner are investigating how digitalizing the labour market could help transform the mobility sector.

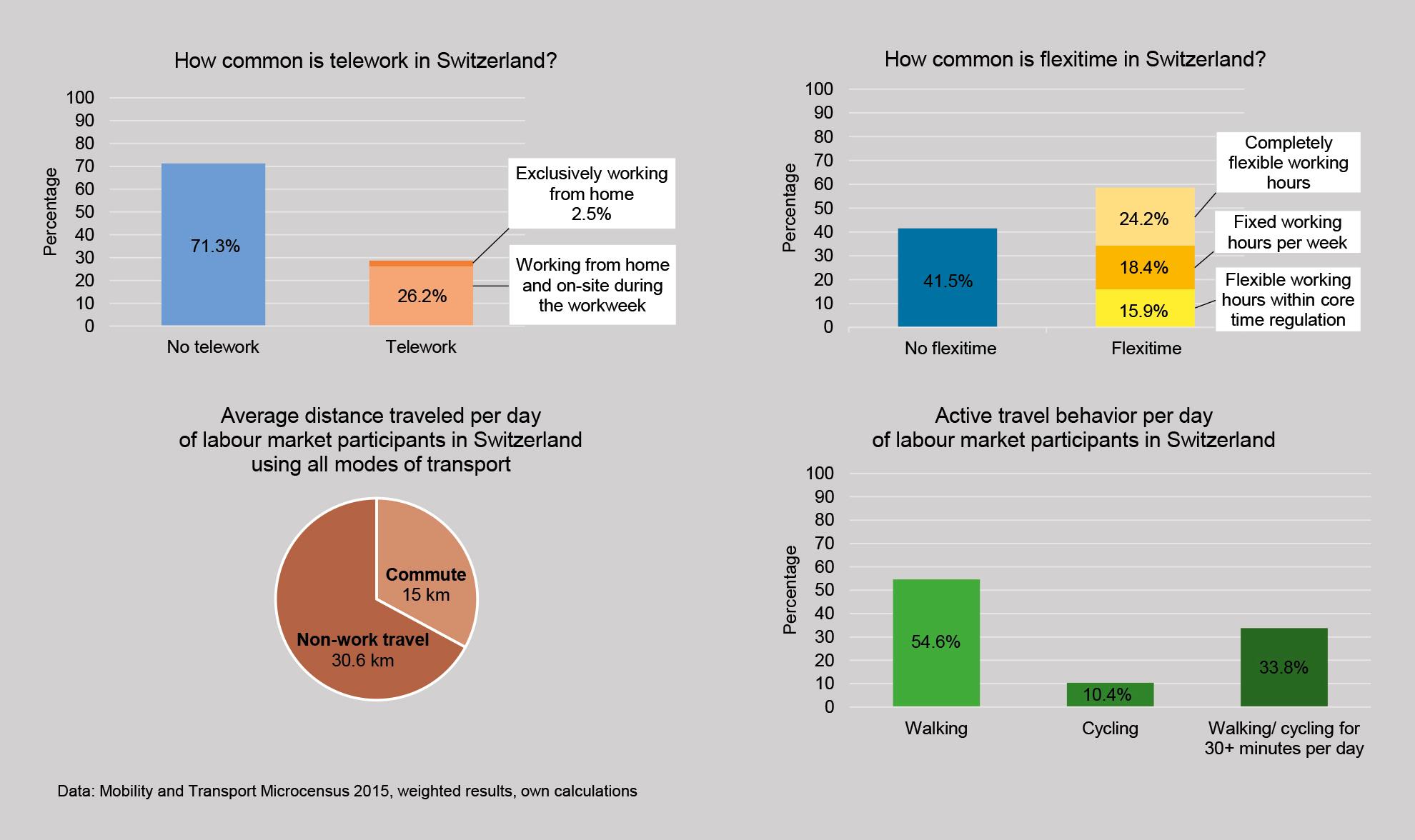

Researchers in the Circular Flooring project are using the CreaSolv® Process to recover PVC from post-consumer waste flooring, enabling its eventual re-use, as Thomas Diefenhardt and Dr. Martin Schlummer explain.

Why do companies choose to enter moral markets? Dr Panikos Georgallis says it’s not just about resources, but also a firm’s identity and the social context.

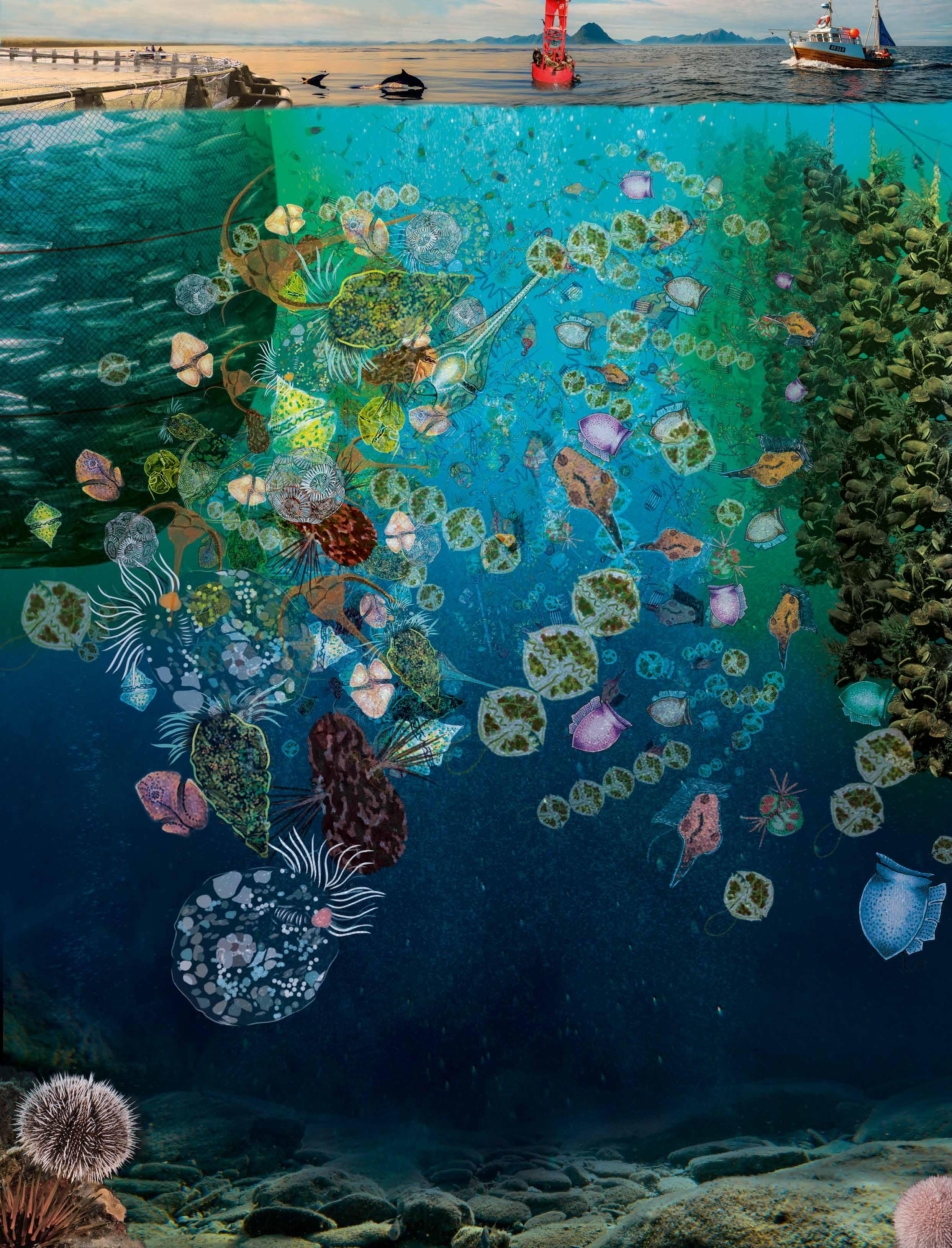

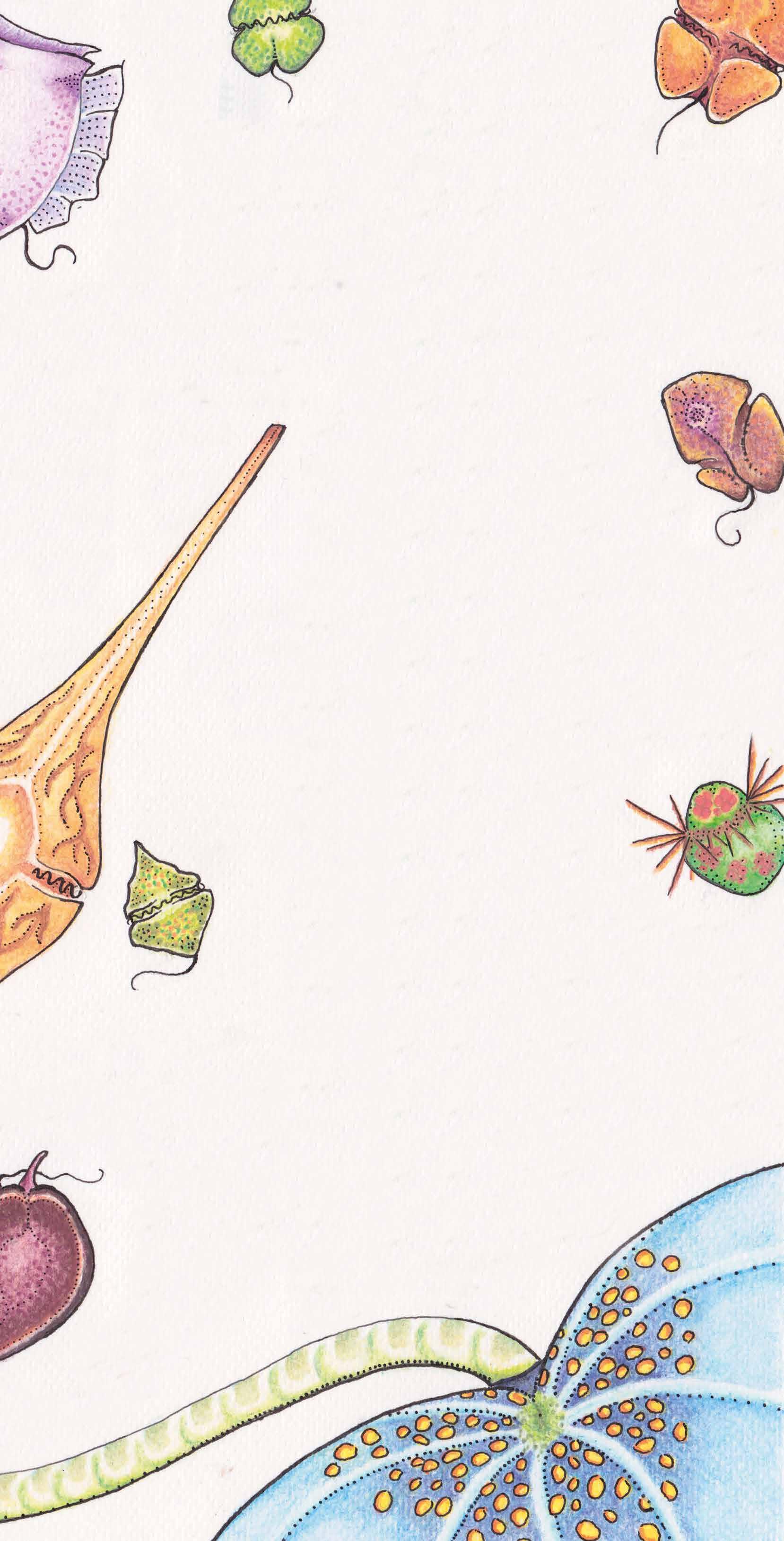

MixITiN

Dr Aditee Mitra and Dr Xabier Irigoien tell us how the MixITiN project has been bringing marine ecology into the 21st century, work which holds important implications for ocean health and policies.

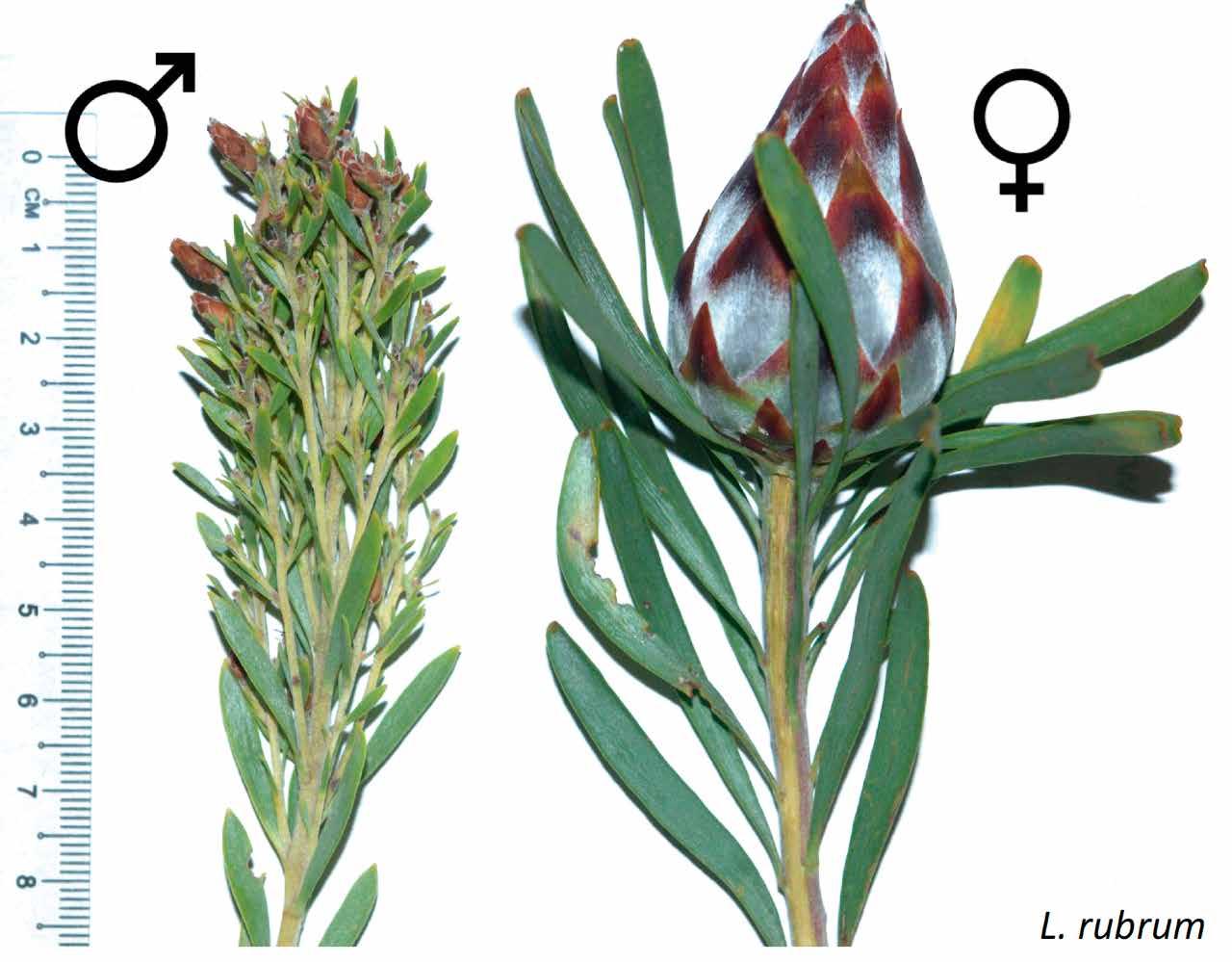

Why is it that one plant species has separate sexes, while its sister species in the phylogeny remains hermaphroditic? This question lies at the core of Professor John Pannell’s research.

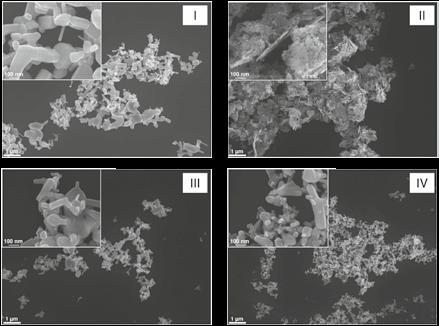

NanoInformaTIX

We spoke to Lisa Bregoli about the NanoInformaTIX project’s work in developing a framework and web-based platform to predict the behaviour of nanomaterials, which will support their safe-by-design development.

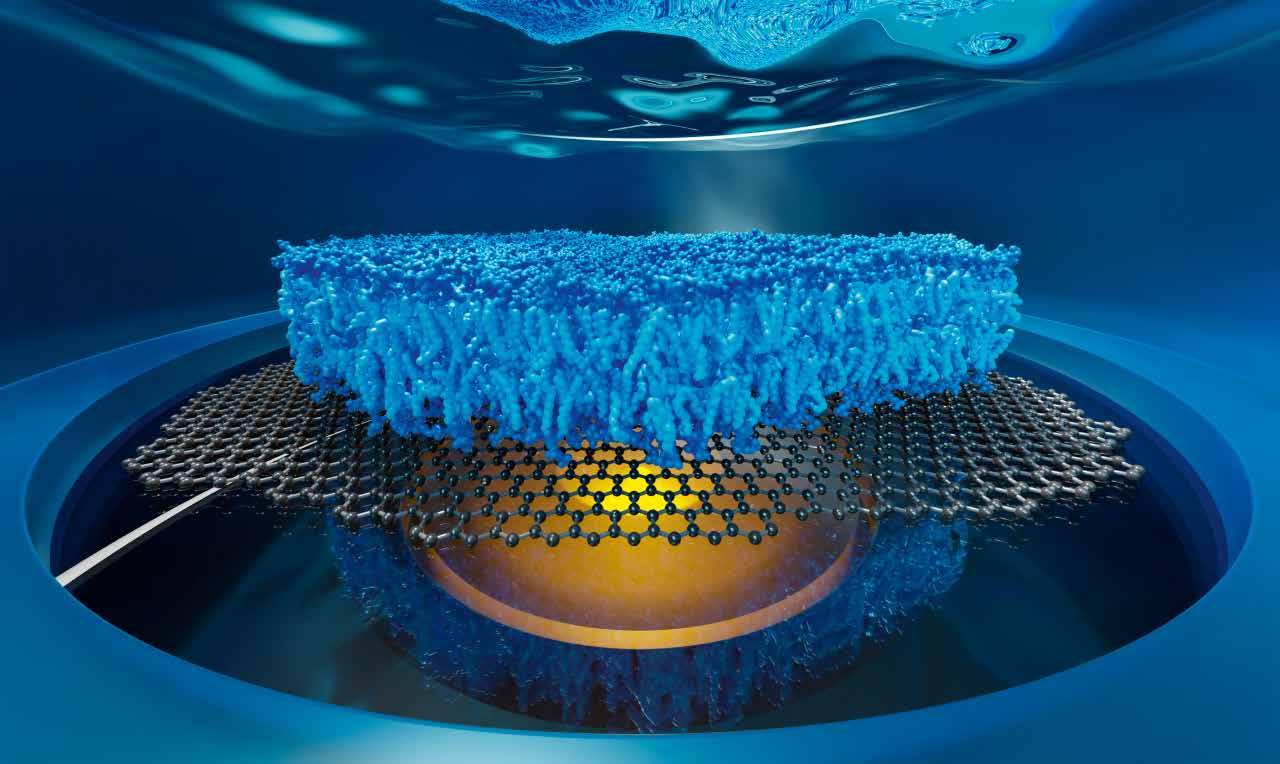

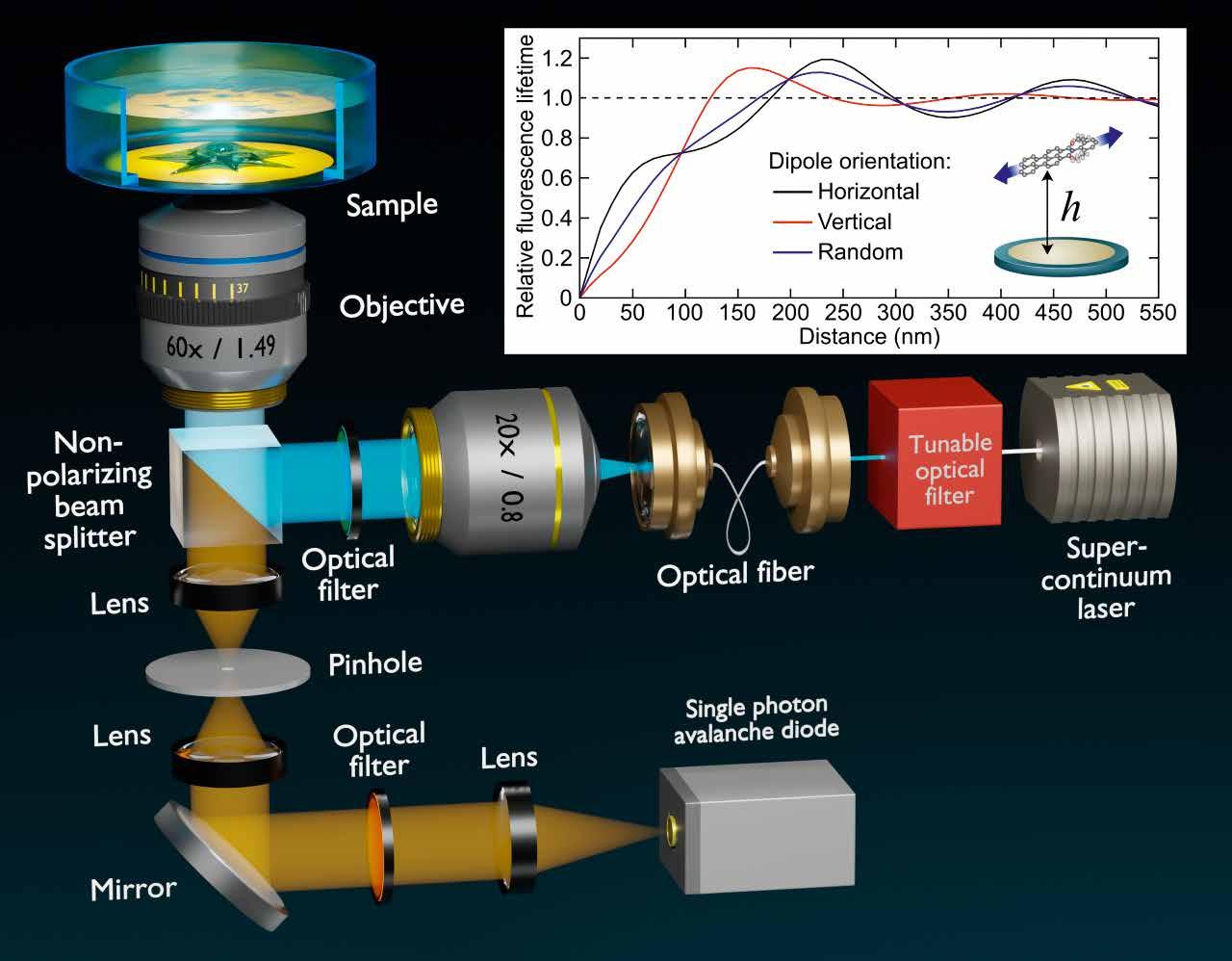

smMIET

We spoke to Dr. Jörg Enderlein about his project Single-Molecule Metal-Induced Energy Transfer, a new method of three-dimensional fluorescence microscopy with molecular-scale resolution.

ION4RAW

The ION4RAW project aims to develop new, more sustainable and environmentally friendly methods to recover critical raw materials and metals from mining sites, as Maria Tripiana explains.

ALPHA

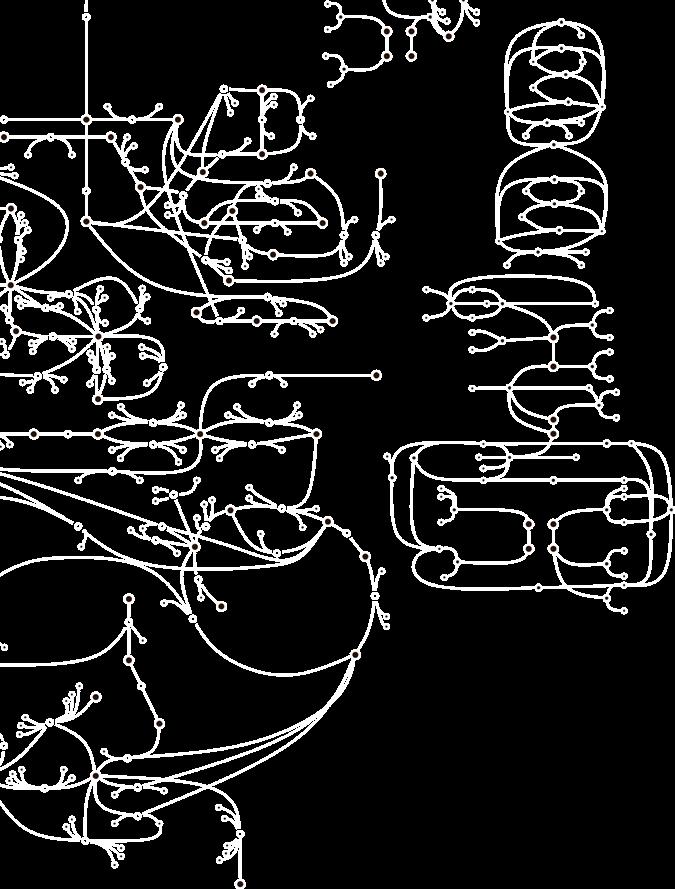

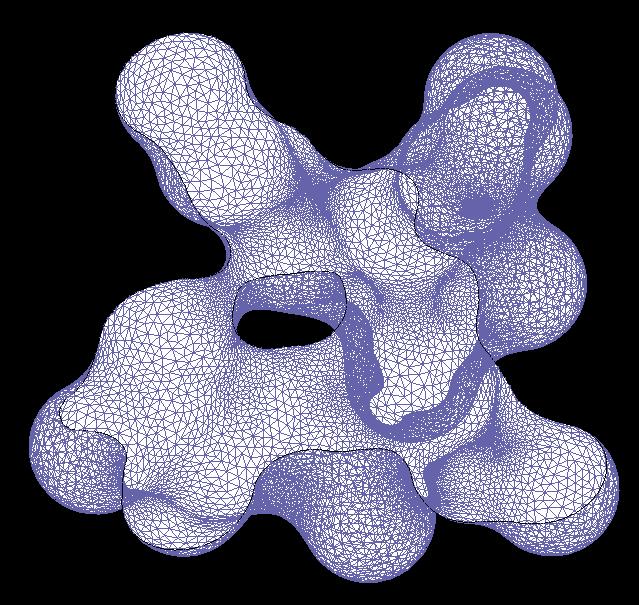

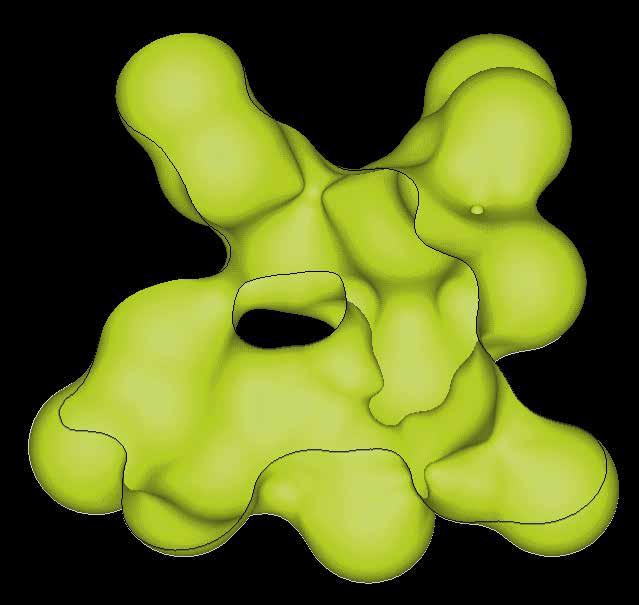

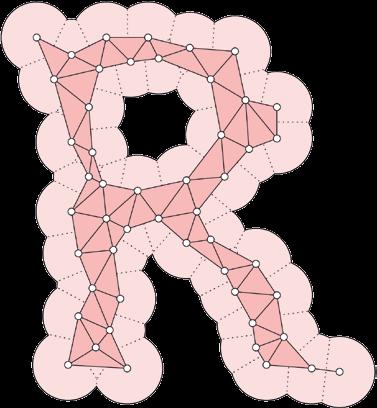

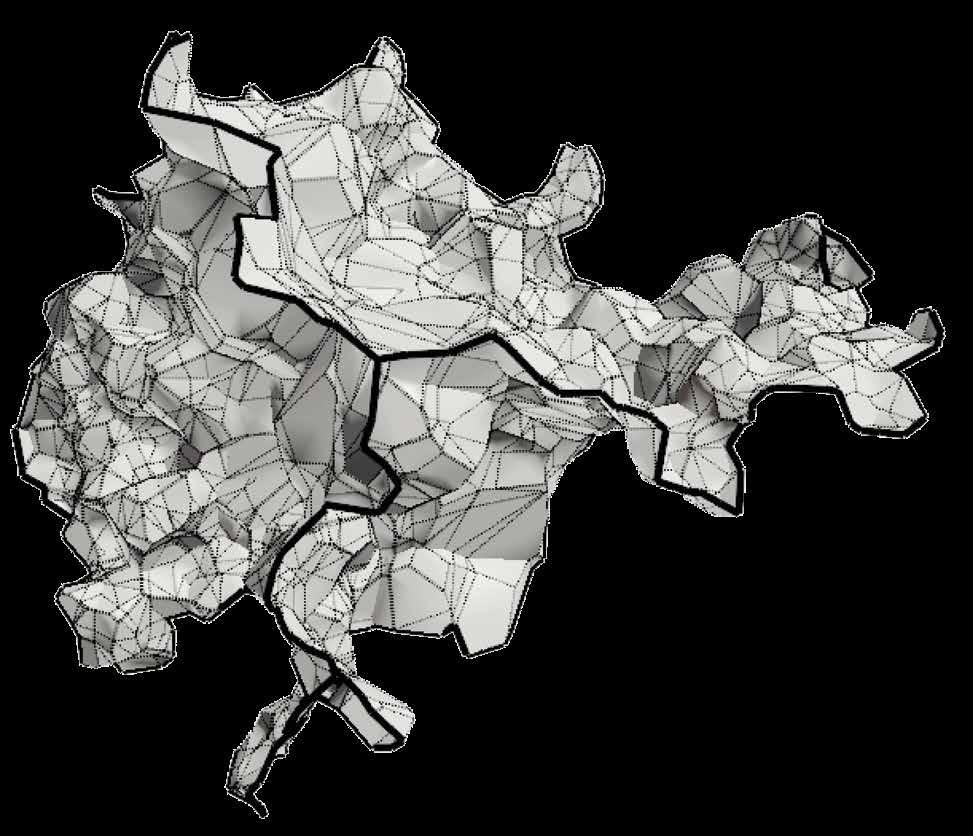

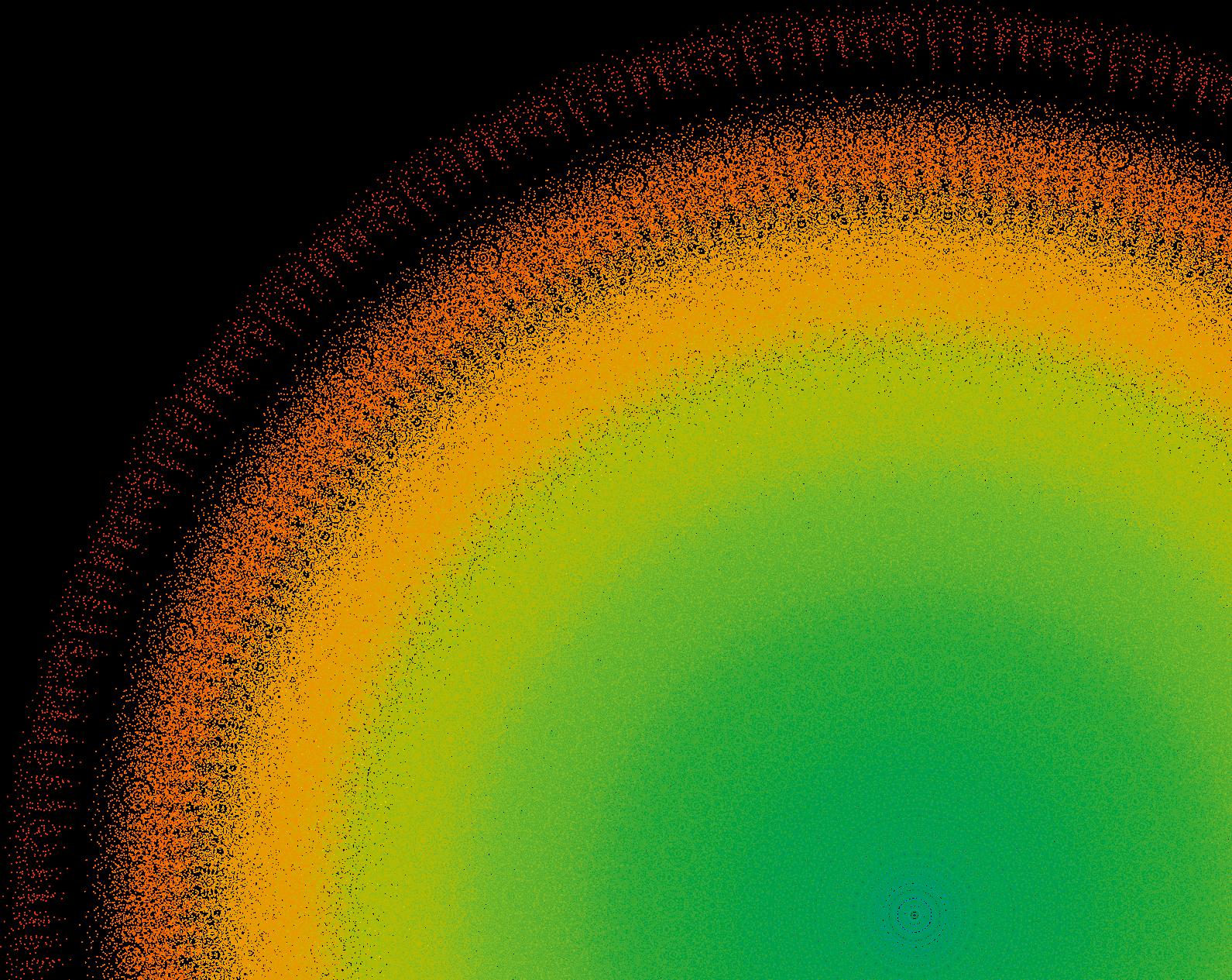

Herbert Edelsbrunner, a pioneer of alpha shapes and persistent homology, is using these ground-breaking computational geometry ideas to empower new applications such as cancer detection and modelling.

ChatGPT has arrived and demonstrated that AI can present research articles, write creatively, program code, do your child’s homework accurately and write poems instantaneously. Where is language AI going and is it a good place? By Richard Forsyth.

BALIHT

We spoke to Marta Pérez and Thomas Hoole about the work of the BALIHT project in developing and testing a redox flow battery capable of working at high temperatures.

SHeLL

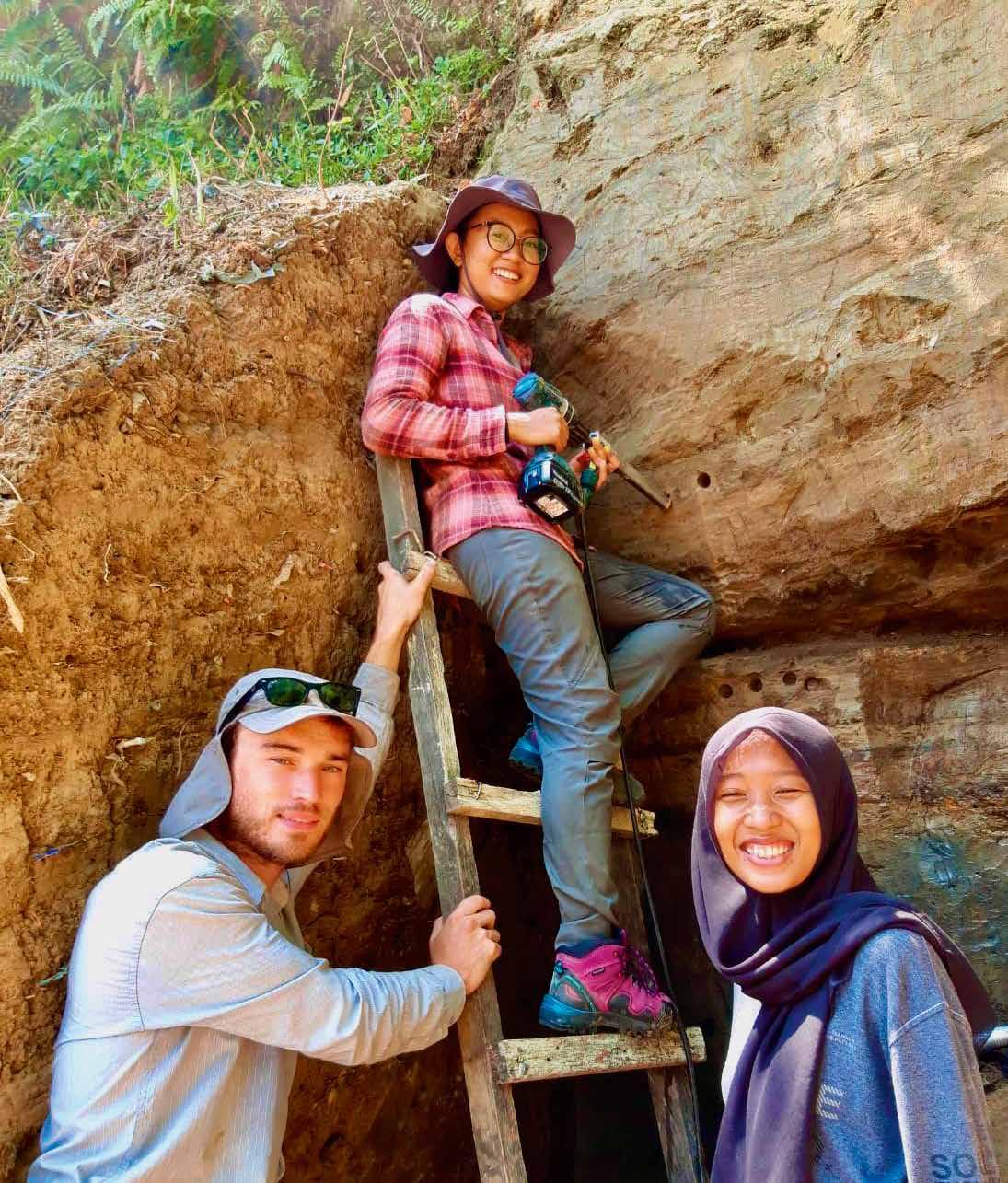

Prof. Josephine Joordens shares revelations unearthed in the project Studying Homo erectus Lifestyle and Location (SHeLL), in which she is reassessing a dig site in Trinil (Indonesia) through a geoarchaeological re-excavation.

PASSIM

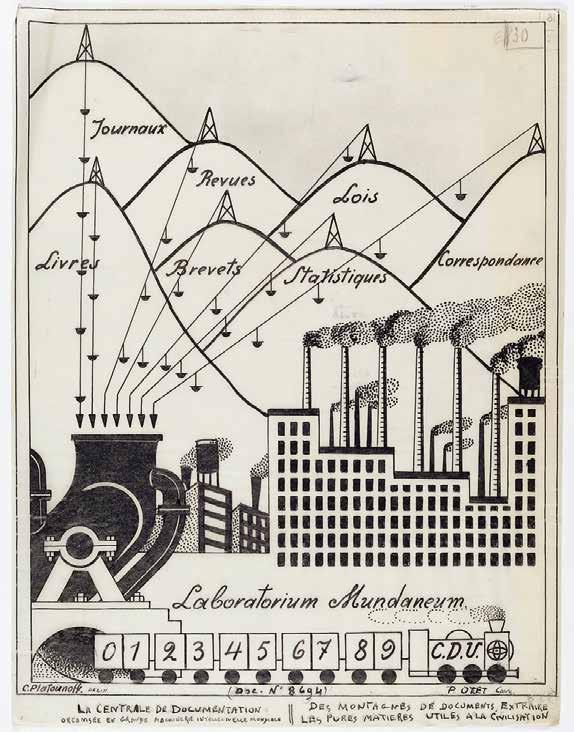

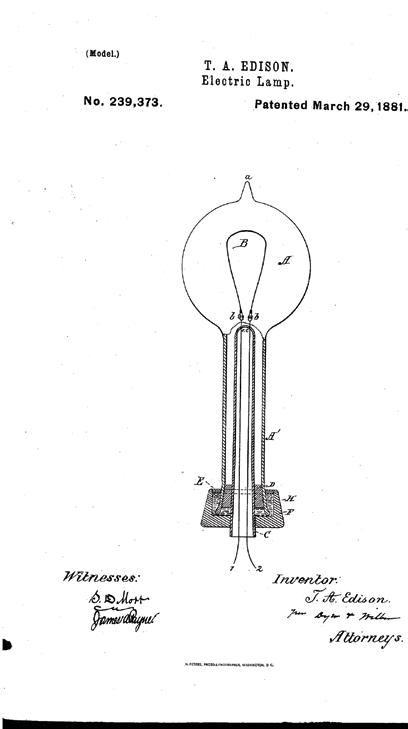

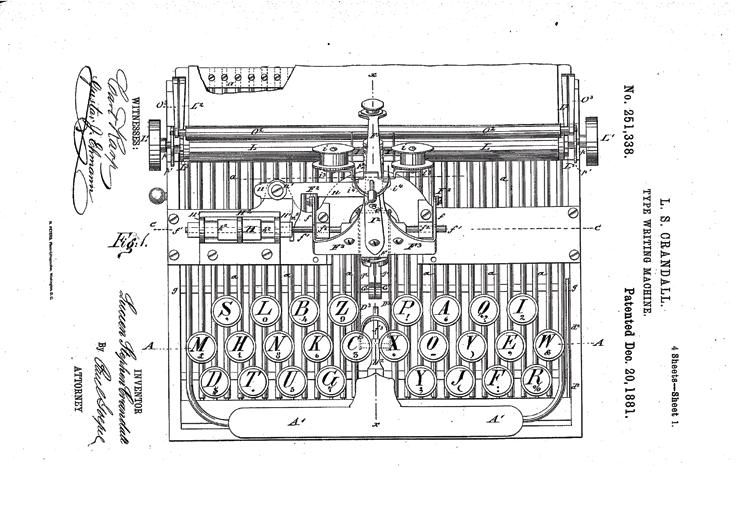

We spoke to Professor Eva Hemmungs Wirtén about her work in investigating the patent system and its role in creating the information infrastructure that shapes our lives today.

PHILAND

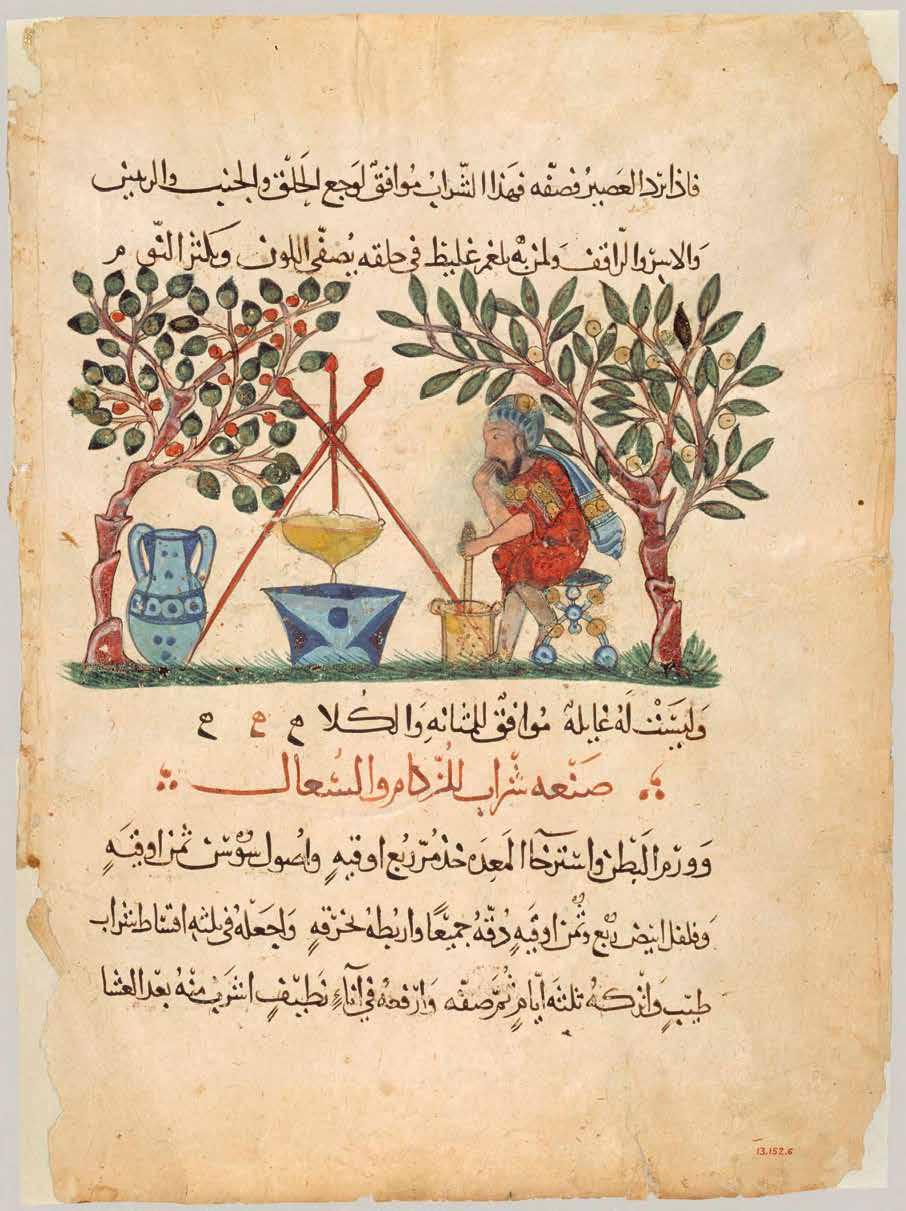

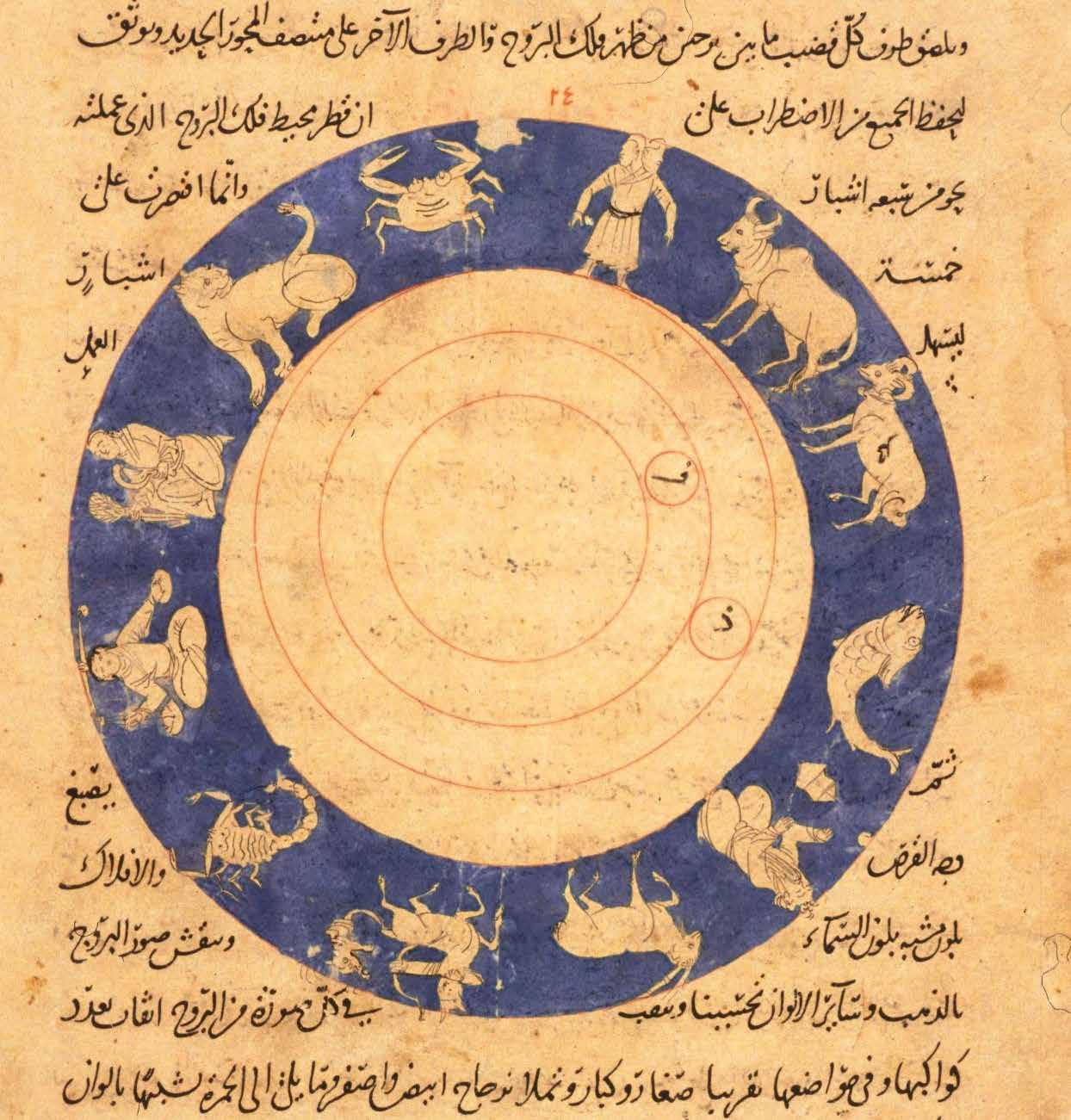

We spoke to Professor Godefroid de Callataÿ, Sébastien Moureau and Liana Saif about their work investigating the origins of philosophy in al-Andalus, and its importance to the history of ideas.

CROSS-POP

How do right-wing populists adapt their discourse in cross-border regions characterised by strong economic interdependences? Dr Christian Lamour and Professor Oscar Mazzoleni are investigating this question.

eQG

Professor Hermann Nicolai and his colleagues in the eQG Group are working to develop a new theory of quantum gravity, bringing together general relativity and quantum mechanics.

EDITORIAL

Managing Editor Richard Forsyth info@euresearcher.com

Deputy Editor Patrick Truss patrick@euresearcher.com

Science Writer Holly Cave www.hollycave.co.uk

Science Writer Nevena Nikolova nikolovan31@gmail.com

Science Writer Ruth Sullivan editor@euresearcher.com

PRODUCTION

Production Manager Jenny O’Neill jenny@euresearcher.com

Production Assistant Tim Smith info@euresearcher.com

Art Director Daniel Hall design@euresearcher.com

Design Manager David Patten design@euresearcher.com

Illustrator Martin Carr mary@twocatsintheyard.co.uk

PUBLISHING

Managing Director Edward Taberner ed@euresearcher.com

Scientific Director Dr Peter Taberner info@euresearcher.com

Office Manager Janis Beazley info@euresearcher.com

Finance Manager Adrian Hawthorne finance@euresearcher.com

Senior Account Manager Louise King louise@euresearcher.com

EU Research Blazon Publishing and Media Ltd 131 Lydney Road, Bristol, BS10 5JR, United Kingdom

T: +44 (0)207 193 9820

F: +44 (0)117 9244 022

E: info@euresearcher.com www.euresearcher.com

© Blazon Publishing June 2010

ISSN 2752-4736

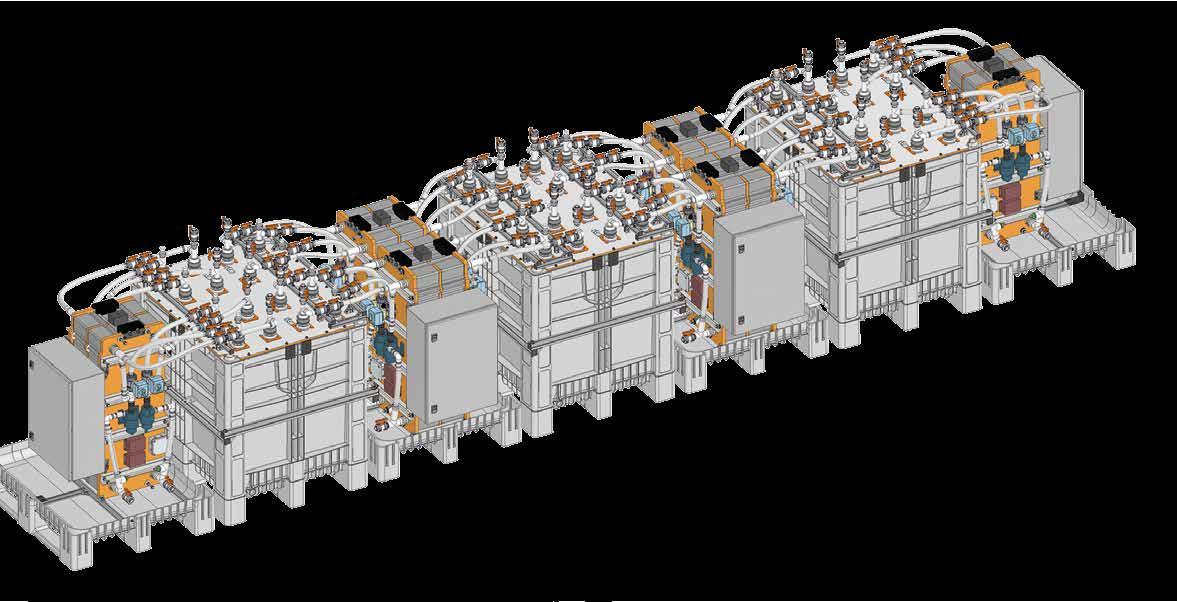

The Clean Hydrogen Partnership, under the Horizon Europe Programme, has announced it is investing €105.4 million ($114.45 million) to fund nine hydrogen valleys across Europe. Negotiations for the grant agreements for these projects have begun and are expected to conclude before summer 2023. The hydrogen valley projects will focus on producing clean hydrogen and address various applications in the energy, transport and industry sectors. They are expected to mobilise investment of at least five times the funding provided by the European Union, or more than €500 million ($541.17 million).

Additionally, the European Commission allocated an extra €200 million ($216.47 million) to the Clean Hydrogen Partnership via REPowerEU to further benefit the hydrogen valleys. The Clean Hydrogen Partnership is a public-private partnership supporting research and innovation activities in hydrogen (H2) technologies in Europe under the Horizon Europe Programme. Its members include the European Commission, Hydrogen Europe (representing hydrogen industries) and Hydrogen Europe Research (representing the research community).

The Clean Hydrogen Partnership has already started the grant process for two flagship hydrogen valleys. The first will be spread across the North Adriatic region, comprising Slovenia, Croatia and the Autonomous Region of Friuli Venezia Giulia in Italy. The second aims to build a hydrogen corridor across Estonia, South Finland and other Baltic Sea countries. Beyond these, seven smaller-scale H2 valley projects are planned for parts of Europe where hydrogen valleys are absent or scarce, such as regions in Ireland, Luxembourg, Turkey, Italy, Greece and Bulgaria.

Mariya Gabriel, Commissioner for Innovation, Research, Culture, Education and Youth, said: “Hydrogen Valleys are key for the creation of a European research and innovation area for hydrogen. They prove that European cooperation can catalyse innovation, create jobs and opportunities while tackling the great energy challenges of our times. And we will rapidly hit the target of doubling the number of operational Hydrogen Valleys by 2025.”

Years of support to research and innovation on hydrogen have put the EU in the global lead for key hydrogen technologies, notably electrolysers, hydrogen refuelling stations and megawatt-scale fuel cells. Horizon Europe supports the Clean Hydrogen Joint Undertaking (CHJU) with €1 billion, matched by the same amount from industry and research partners. As part of REPowerEU, the Commission has allocated an additional €200 million to the CHJU to accelerate the rollout of Hydrogen Valleys. The Commission also recently granted approximately €4 million under Erasmus+ for a long-term partnership between industry and education to develop advanced skills for the hydrogen economy.

Other EU programmes also offer opportunities for investment in Hydrogen Valleys such as the Recovery and Resilience Facility, the Cohesion policy funds under the relevant smart specialisation priorities, and the Connecting Europe Facility.

Scientific leaders have urged the UK government to re-join the EU’s €95.5bn Horizon research programme as soon as possible, after prime minister Rishi Sunak questioned whether membership represented value for money. “The UK will find it extremely difficult to be an effective research power if it is . . . not part of the European research network,” Sir Paul Nurse, head of the Francis Crick Institute in London, said on Tuesday at the launch of a government-commissioned report into Britain’s scientific research base. “Frankly, alternative arrangements that are being discussed elsewhere will be utterly inadequate in comparison,” he added. The FT reported last week that Sunak was looking at other options in place of Horizon membership, including the UK’s “plan B” global research plan. “Of course we should extend connections to the rest of the world, but first we have to do association with Europe, our closest neighbours, where we have networks already working,” Nurse said.

Tom Grinyer, chief executive of the UK’s Institute of Physics, agreed. “The government’s continued hesitation on Horizon puts the government’s tech ambitions — and the UK’s future as a science superpower — at risk,” he warned. The country’s science leaders have become increasingly alarmed that Sunak might decide not to re-join Horizon. On Monday, the UK’s science and technology secretary Michelle Donelan underlined the government’s stance on membership: “It would have to be on acceptable and favourable terms,” she said. “It would have to be value for money for the taxpayer.”

Last week Ursula von der Leyen, European Commission president, said work would begin on Britain’s associate membership of Horizon after a breakthrough on a new post-Brexit deal on Northern Ireland trade, known as the Windsor framework. Brussels had blocked British scientists from joining the scheme last year because of the dispute over Northern Ireland. But negotiations on re-joining are expected to take between six and nine months, as London and Brussels work out financial arrangements and agree on the extent of UK participation in Horizon. The top priority for British scientists is regaining access to the European Research Council, which funds the highest-quality scientific projects.

The UK had originally allocated as much as £15bn for participation over the seven-year Horizon programme that runs to 2027, but with just three to four years left that figure will fall significantly. The cross-party UK trade and business commission wrote to British Prime Minister Rishi Sunak on Tuesday evening, calling on him to “urgently recommit” to joining the EU programme. “The longer the UK delays on Horizon membership, the longer the UK will be unnecessarily excluded from significant funding opportunities, and from the economic benefits that international scientific collaboration can bring,” said the commission, whose joint leaders are the Labour MP Hilary Benn and Peter Norris, Virgin Group chair. The government responded: “We will continue to discuss how we can work constructively with the EU in a range of areas, including future collaboration on research and innovation.”

The UK’s new science and technology minister said Britain is willing to “go it alone” if an agreement cannot be reached.

Scientists call for UK to re-join EU’s Horizon

The European Research Council (ERC) is set to introduce lump sum funding to its Advanced grants for experienced researchers starting in 2024. The decision was made by the ERC Scientific Council on Friday, with two provisos: the move must not affect the autonomy of the principal investigator in managing their grants, and there will be no explicit “deliverables” or milestones to be reached that could undermine scientific excellence by preventing researchers from acting upon unexpected findings.

ERC is set to give out almost €16 billion to researchers under Horizon Europe. Like most EU research funding, its grants are given based on the real cost of a project, but the introduction of lump sums will lift the burden of reporting and will instead mean researchers are paid on the basis of activities carried out. Laura Keustermans, senior policy officer at the League of European Research Universities (LERU), says universities in the LERU network welcome the pilot and are particularly happy the Scientific Council acknowledged the autonomy of principal investigators and the unpredictable nature of fundamental research. “We do see advantages of lump sum funding as long as the ERC is not losing what makes it so special,” said Keustermans.

Thomas Estermann, director for governance, funding and public policy development at the European University Association (EUA), is more sceptical about the move. While he says there’s nothing wrong with lump sum funding if it’s done right, the ERC should not rush it. Instead, beneficiaries should be given a choice on how they want to be reimbursed. “The easiest way would be to provide an option for the applicants,” he said.

Whether lump sums work will depend on the implementation of the approach, the details of which remain to be seen. Estermann urges caution, warning “the devil is always in the detail and implementation.” He adds that a well-designed lump sum system must be transparent, support the principles of financial sustainability and the autonomy of research, and not add another administrative burden to the beneficiary in terms of reporting.

Keustermans says it’s important to continue monitoring the situation and to talk to applicants, successful and not, “to try to get an idea on how much additional work there is with the lump sum approach.” The ERC promises the flexibility will remain unchanged. The ERC notes the decision to adopt lump sum funding is tentative and the final decision is expected “in accordance with the timeline set out for the adoption of the ERC work programme 2024.”

Nearly 200 countries have agreed to a legally binding “high seas treaty” to protect marine life in international waters, which cover around half of the planet’s surface but have long been essentially lawless. The agreement was signed on Saturday evening after two weeks of negotiations at the United Nations headquarters in New York ended in a mammoth final session of more than 36 hours, but it has been two decades in the making.

The treaty provides legal tools to establish and manage marine protected areas – sanctuaries to protect the ocean’s biodiversity. It also covers environmental assessments to evaluate the potential damage of commercial activities, such as deep sea mining, before they start and a pledge by signatories to share ocean resources. “This is a historic day for conservation and a sign that in a divided world, protecting nature and people can triumph over geopolitics,” Laura Meller, Oceans Campaigner at Greenpeace Nordic, said in a statement.

The high seas are sometimes called the world’s last true wilderness. This huge stretch of water – everything that lies 200 nautical miles beyond countries’ territorial waters – makes up more than 60% of the world’s oceans by surface area. These waters provide the habitat for a wealth of unique species and ecosystems, support global fisheries on which billions of people rely and are a crucial buffer against the climate crisis – the ocean has absorbed more than 90% of the world’s excess heat over the last decades.

Yet they are also highly vulnerable. Climate change is causing ocean temperatures to rise, and increasingly acidic waters threaten marine life. Human activity on the ocean is adding pressure, including industrial fishing, shipping, the nascent deep sea mining industry and the race to harness the ocean’s “genetic resources” (material from marine plants and animals for use in industries such as pharmaceuticals).

“Currently, there are no comprehensive regulations for the protections of marine life in this area,” says Liz Karan, oceans project director at the Pew Charitable Trusts. Rules that do exist are piecemeal, fragmented and weakly enforced, meaning activities on the high seas are often unregulated and insufficiently monitored, leaving them vulnerable to exploitation. Only 1.2% of international waters are protected, and only 0.8% are identified as “highly protected.”

Douglas McCauley, professor of ocean science at the University of California Santa Barbara, said: “There are huge unmanaged gaps of habitat between the puzzle pieces. It is truly that bad out there.” Countries now have to formally adopt and ratify the treaty. Then the work will start to implement the marine sanctuaries and to try to meet the target of protecting 30% of global oceans by 2030. “We have half a decade left, and we can’t be complacent,” Meller said.

“If we want the high seas to be healthy for the next century we have to modernize this system – now. And this is our one, and potentially only, chance to do that. And time is urgent. Climate change is about to rain down hellfire on our ocean,” McCauley said.

The council says yes to no-strings-attached funding for experienced researchers. The move is meant to reduce the administrative burden.

After almost 20 years of talks, UN member states agree on legal

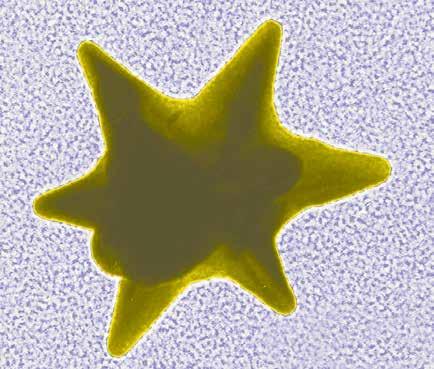

If Professor Ranga Dias of the University of Rochester, New York, and his team have observed room-temperature (294 K), near-ambient pressure superconductivity, their discovery could rank among the greatest scientific advances of the 21st century. Such a breakthrough would mark a significant step toward a future where room-temperature superconductors transform the power grid, computer processors, and diagnostic tools in medicine.

But for the past three years, the Rochester team—and Dias in particular—has been shrouded in allegations of scientific misconduct after other researchers raised questions about their 2020 claim of room-temperature superconductivity. In September, the Nature paper reporting that result was retracted. Further misconduct allegations against Dias have recently emerged, with researchers alleging that Dias plagiarized substantial portions of someone else’s doctoral thesis when writing his own and that he misrepresented his thesis data in a 2021 paper in Physical Review Letters (PRL). Jessica Thomas, Executive Editor of the Physical Review journals, confirmed that PRL has launched an investigation into that accusation. “This is a pretty serious allegation,” she says. “We are not taking it lightly.”

Scientists have been working on identifying new superconductors, materials that can transmit electricity without friction-like resistance, for decades. However, previously discovered superconductors only work at super-cold temperatures and, in some cases, under incredibly high pressures. The newly reported superconductor, a nitrogen-doped lutetium hydride, could be much more useful than those which must be kept super-cold in applications such as the strong magnets used in MRI scanners, magnetically levitating trains and even nuclear fusion.

Dirk van der Marel, a University of Geneva physicist who wasn’t involved in the new research or Dr. Dias’s other work, was among those who raised issues about the 2020 data. Dr. Dias said the retracted paper has been resubmitted to Nature after he and his colleagues collected new data in front of other scientists at the Argonne and Brookhaven National Laboratories, in Illinois and New York, respectively. He added that his group made all their data around “reddmatter” available during the peer-review process for the new paper. Although Dr. van der Marel said the new study appeared to properly demonstrate the effect in “reddmatter,” he said he feels “extremely uncomfortable about the whole thing.”

For his part, Dr. Dias said his group is already looking to tweak their “reddmatter” recipe to try to achieve superconductivity at even warmer temperatures and lower pressures. One idea is adding other rare-earth elements similar to lutetium to the mix, though these rare elements are expensive, Dr. Dias said. He hopes to try a different approach, perhaps aluminum with a dash of something else, which is cheaper to make and can mimic lutetium’s effects.

The group will start using machine learning to select their next superconductor recipes. They are training algorithms with data from this new work and previous experiments to help the AI better predict which combinations of hydrogen and other elements may yield superconducting materials. “It is remarkable that Mother Nature allows us to use different pathways to get to these remarkable superconducting states,” said Dr. Salamat, who added that dropping the pressure down to zero is the group’s next goal. Dr. Dias said he’s confident that achievement is coming: “It is just a matter of time.”

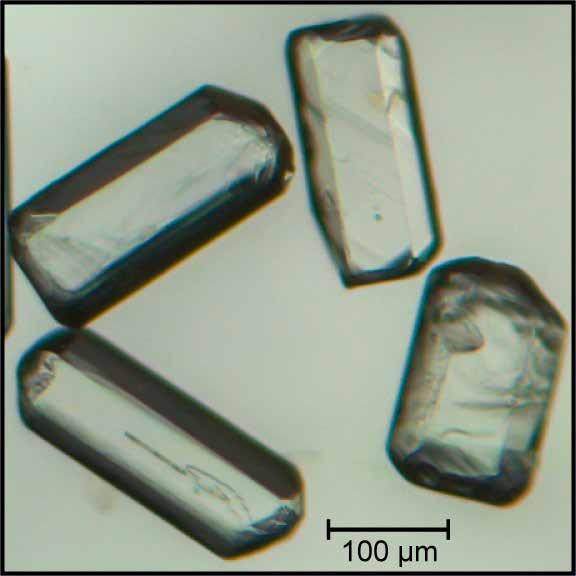

Despite a superconductor breakthrough, some scientists remain sceptical, citing concerns about data manipulation and plagiarism.

A 1-mm-wide sample of the nitrogen-doped lutetium hydride created by Dias et al. | Photo Credit: University of Rochester/J. Adam Fenster

As the war passes its one-year anniversary, the future of Ukraine’s research community remains uncertain despite EU support.

A year ago, Russia’s full-scale invasion of Ukraine redefined geopolitics in a shockwave that is still reverberating through the science world. The EU research community was quick to cut ties with Russia and lend Ukraine a helping hand, but now it is grappling with the resulting instability and uncertainty as the war enters its second year. Lucian Brujan, programme director for international relations and science diplomacy at the German National Academy of Sciences Leopoldina, says it’s too early to say what the long-term impact will be on research and innovation, and urges patience.

“I think many in the community are waiting to see how the political problems will be solved and how this war will end; and after that, we’ll need to have a discussion,” Brujan says. “We have to be honest with ourselves in the scientific community. We are dealing with political and security uncertainty.” But what is already clear is the shift in discourse on international cooperation. While the effect is hard to judge in concrete terms, the conversation has moved away from blanket arguments in favour of openness towards a more careful attitude, observes Thomas Jørgensen, director for policy coordination and foresight at the European University Association.

As global tensions intensify, eyes turn to China, which in recent weeks has been deliberating over sending weapons to Russia. That the EU has a complex relationship with China isn’t new, but the tense geopolitical situation and China’s equivocation on the war in Ukraine have added a new dimension to it, says Lidia Borrell-Damian, secretary general of Science Europe.

The complexity isn’t just about big politics but “comes from different legislative approaches in the EU and China regarding open access, open science, treatment of data, the outcomes of research. The difficulties of research collaboration with China have been there for years now,” adds Borrell-Damian. The EU cut off all research ties with Russia as the war broke out, and now the big question is whether it was a one-off extreme measure, or a realisation about the complexity of the world we live in that will lead to reappraisal of research ties with others, including China.

Overall, Brujan notes, “The war has shown one clear trend: scientific organisations and even scientists are way more careful than they have been before. Various aspects are being reconsidered, from security to fundamentals to practicalities.” This is a normal reaction to navigating an unstable environment, he adds. “We need to see how this prudence is going to affect scientific cooperation globally. It doesn’t mean we have to give up our way of doing: my message is to have patience and observe sharply what’s going on.”

The important thing now is to keep discussions going at all levels. Talks under the European Research Area (ERA) framework have been fruitful, but “what we don’t know, and where there can be a mismatch, is between high-level diplomatic geopolitics discussions and research policy discussions,” said Jørgensen. For this discussion to happen, scientists and policymakers will need to learn to speak each other’s languages and take a more practical approach, Brujan says.

With $200 million to invest in start-ups and scale-ups, Taiwan’s Central and Eastern Europe Investment Fund is becoming a significant force in the region.

A $200 million venture capital fund set up by Taiwan’s National Development Fund is filling a gap for start-up funding in central and eastern Europe and building connections with Taiwan’s industry and research base. Following its formation in March 2022, the fund has made three investments to date, and is shaping up to be a significant player in a region where local venture capital is scarce. “From the $200 million fund, we are looking to invest in 20-25 companies,” said Mitch Yang, managing partner of the Central and Eastern Europe (CEE) Investment Fund at venture capital firm Taiwania Capital. “We have just started, and we are going to speed up, so this year our target is to invest in six to eight companies.”

The fund has a broad range of interests, taking in semiconductors, laser optics, biotechnology, aerospace, fintech, electric vehicles, smart manufacturing, artificial intelligence and smart cities. Its first investments are in Litilit, an industrial femtosecond laser start-up based in Lithuania; Photoneo Brightpick, a computer vision and robotics business in Slovakia; and Oxipit, an AI medical imaging start-up in Lithuania. The fund is open to all countries in the region, but prioritises investments in companies from Lithuania, Slovakia, and the Czech Republic. This focus reflects where the fund feels its investments can have the most impact and find the best fit with Taiwan’s industrial strengths. But it is also influenced by broader developments in the relationship between Taiwan and the region.

The origin of the fund lies in the COVID-19 pandemic, which helped forge new connections between Taiwan and countries in central and eastern Europe. In the early months of the pandemic, Taiwan sent masks and medical supplies to the region, with countries such as Lithuania, the Czech Republic, Slovakia, and Poland returning the favour with vaccines when the virus spread in Taiwan. This mutual aid resulted in a government delegation from Taiwan to the region in October 2021, and discussions about how cooperation could be extended. The fund is one result of these discussions.

Looking to the future, Yang sees the fund as the first step in sustained cooperation between Taiwan and the region, which will be driven by current events such as the war in Ukraine. “Once the war is over, there will be reconstruction and something like a Marshall Plan,” he said. “That will be focused on Ukraine, of course, but it will affect the whole region. Ukraine, Poland, Slovakia, the Czech Republic, and the Baltics are going to play important roles.” While the US is likely to drive that reconstruction effort, it will need to be a collaboration. “I want Taiwan to play a part, in our strengths, our values, and our convictions,” Yang said. “We want to be a player, and to invest based on our values.”

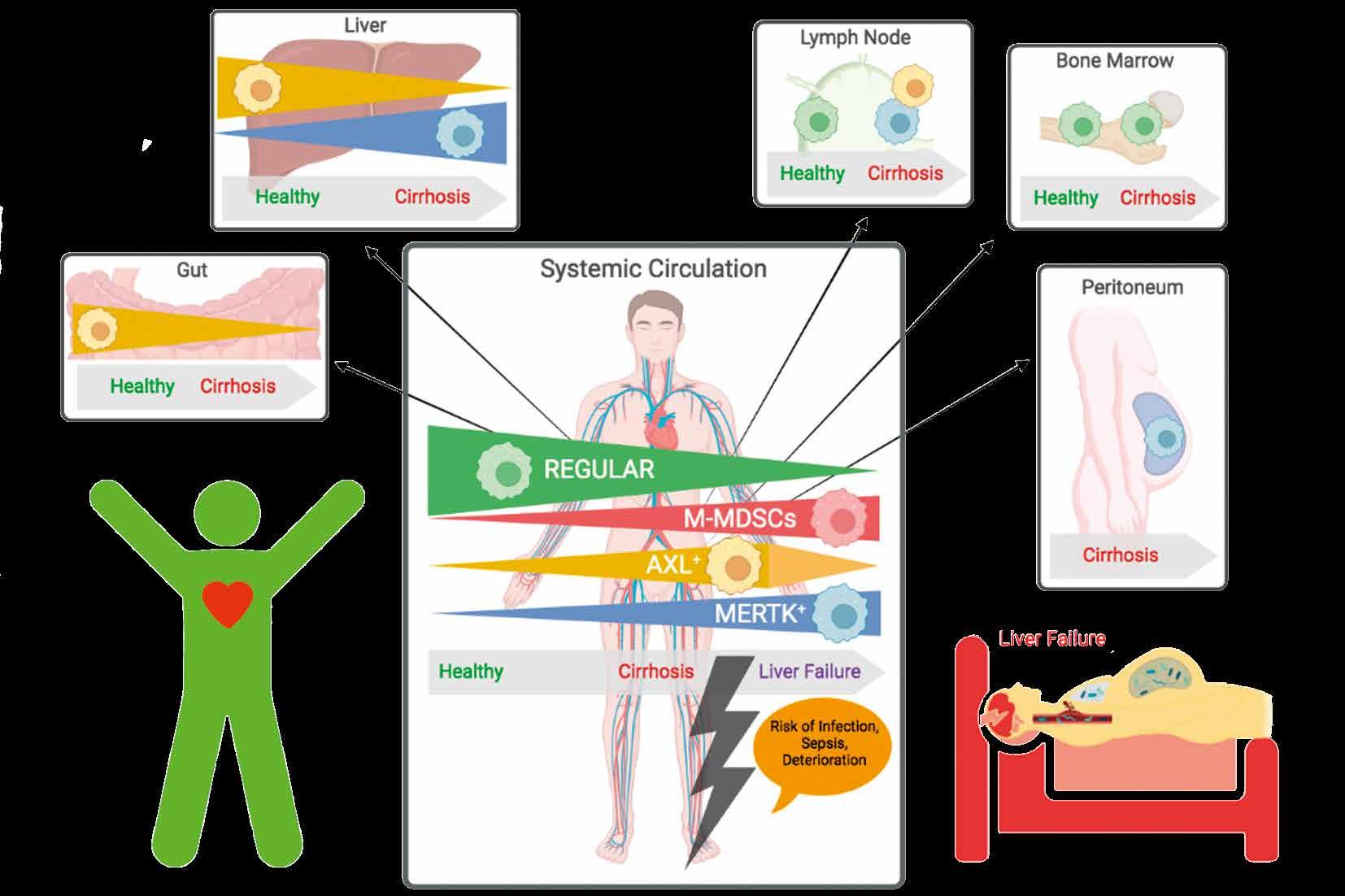

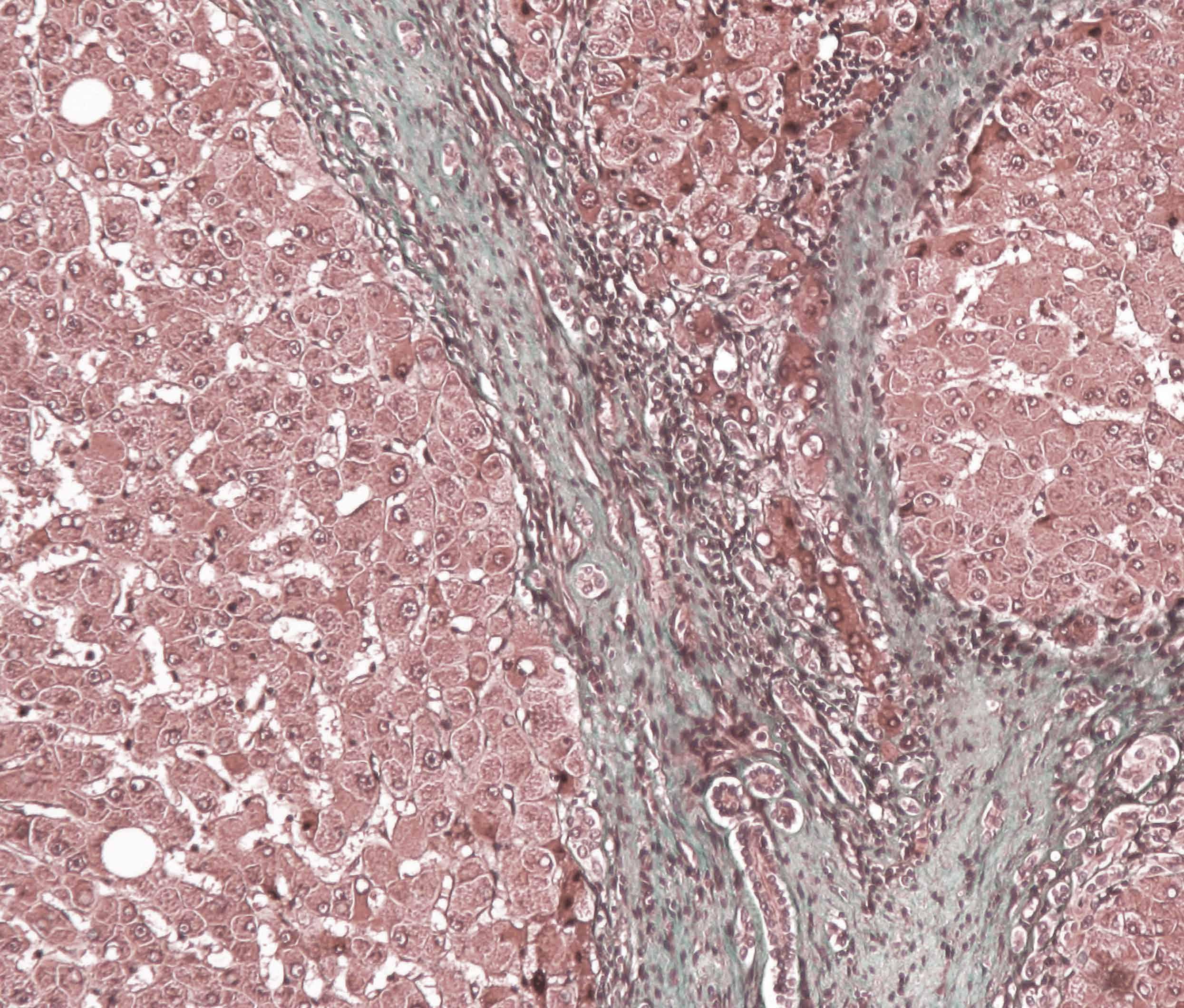

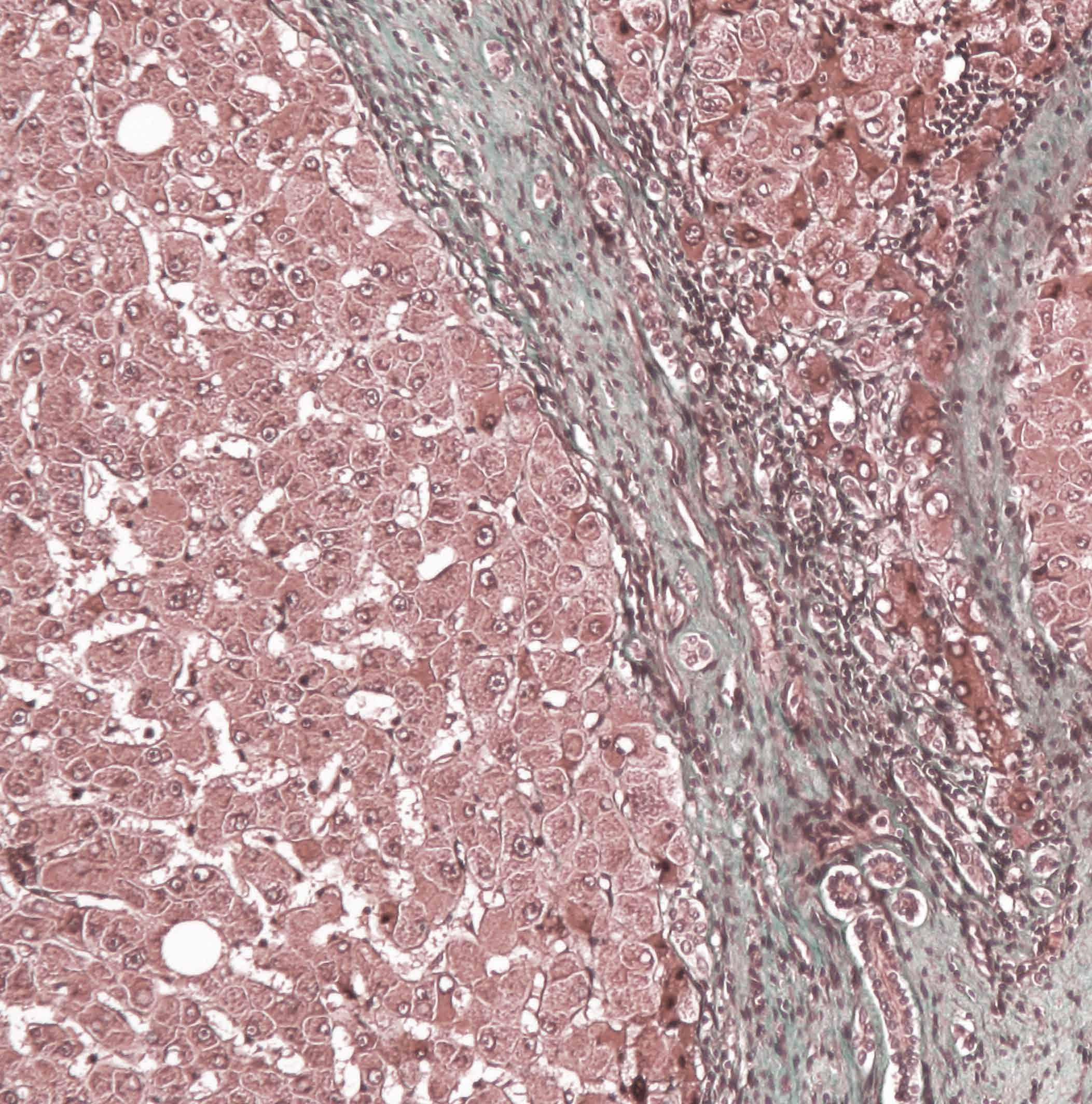

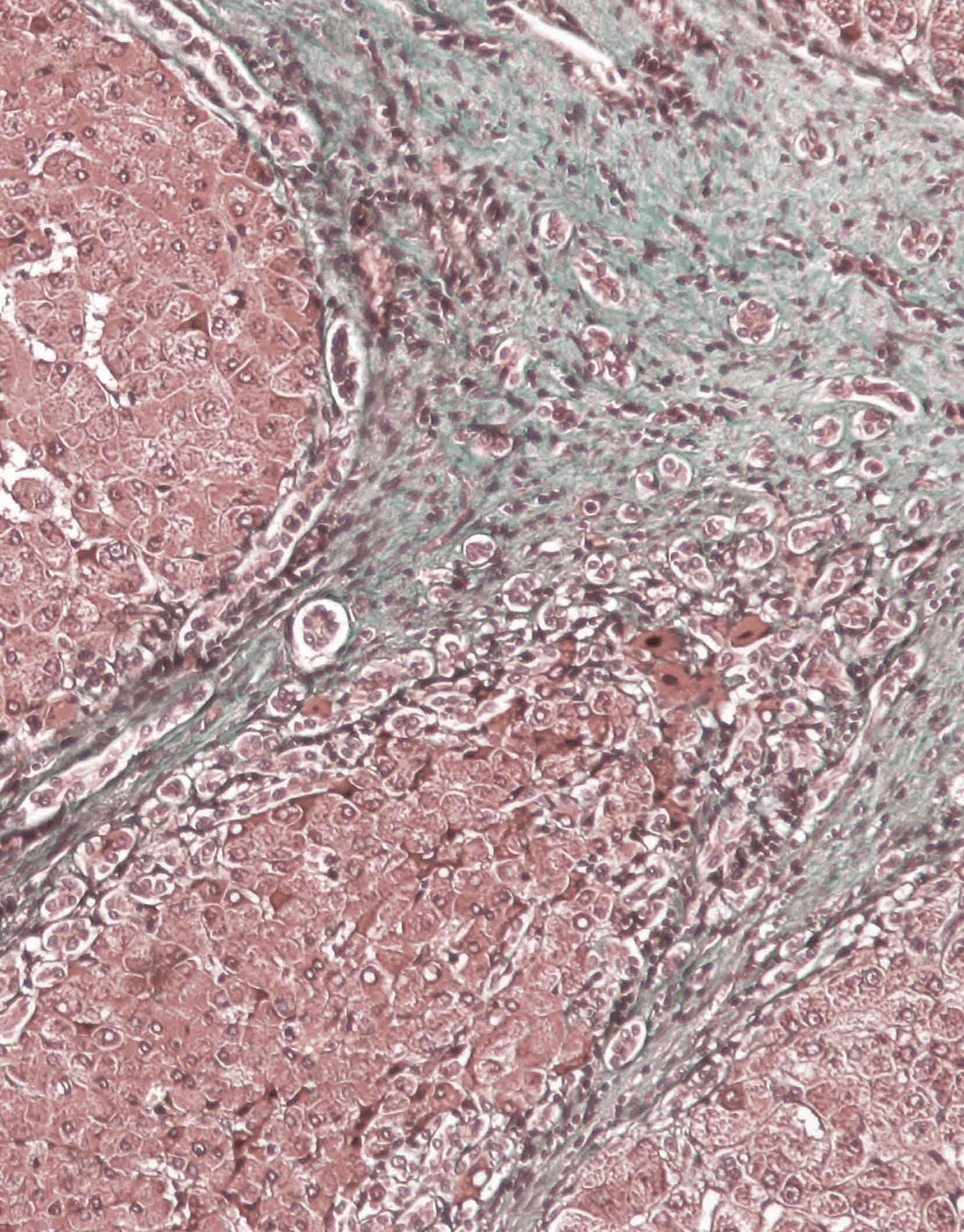

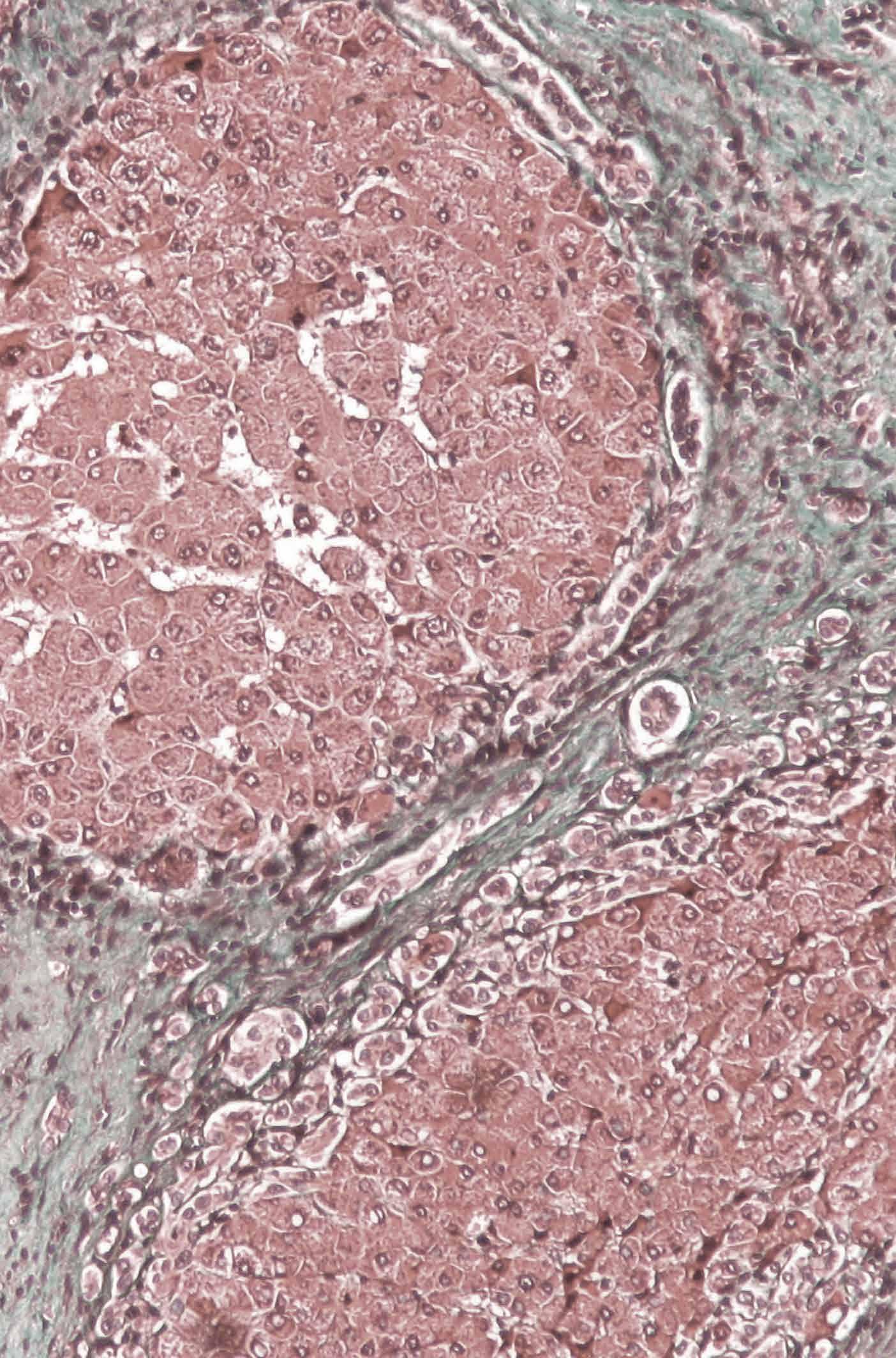

Liver cirrhosis is scarring (fibrosis) of the liver that develops after long-term liver damage and impairs, or eventually stops, the organ’s function. It can go unnoticed or unchecked until significant damage is done, or until it is too late to save the organ, and often the life of the patient.

Liver cirrhosis is the eleventh leading cause of death worldwide, and there is no cure for decompensated liver failure, meaning a transplant is the only option open to those with failing livers. With such a destructive, widespread disease and no cure available, finding effective ways of assessing the condition of a damaged liver is important. Infections are a major cause of death in cirrhosis patients, and about a quarter of hospitalised cirrhosis patients are admitted because of infections. Understanding the way the immune system fails at a cellular level could help pave the way to new therapies specifically aimed at thwarting infection.

“Cirrhosis can occur in any person who has chronic liver disease. If cirrhosis occurs, it can be a bad prognosis but it is not always related to liver failure only, as most of these people die from infections. The underlying mechanisms are not well understood, and this is why we are working on this,” confirmed Dr. Bernsmeier. “It is easy to assume that in the person with the diseased liver, the liver gets worse until it fails, and they die. In reality, infection is a critical step because if infection occurs, the liver function gets worse. A downward spiral occurs which leads to death from sepsis, and this is what we are trying to understand because in many cases it is not the liver disease specifically that is fatal, it’s the infections from a failed immune response.”

This infection susceptibility, due to a failing immune system (immuneparesis), appears to accelerate cirrhosis. The project team are tasked with investigating the mechanisms of this process.

In our innate immune system, monocytes and macrophages are crucial white blood cells that fight infection. In patients with liver disease, these cells change in different ways during the stages of cirrhosis, making them ultimately ineffective against harmful bacteria. Finding out how they change in each stage could create a gauge, allowing healthcare professionals to understand how critical the condition of the liver is.

“With this project, we are trying to more systematically see which kinds of subsets or states of monocytes are differentiating,

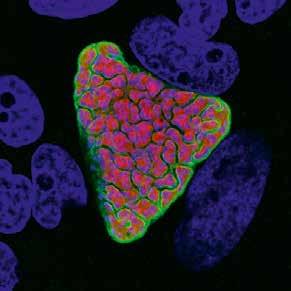

The project: ‘Diversity and compartmentalisation of monocytes & macrophages and immuneparesis in patients with cirrhosis’ explores new ways to understand the failing immune response in cirrhosis patients, as Research Group Leader, Dr. Christine Bernsmeier, explains.Created

in that kind of disease, and so far, we have identified different subsets but we are convinced that there are more states that can be found.”

The subsets identified in the circulation of patients with cirrhosis were monocytic myeloid-derived suppressor cells (M-MDSCs) and immune-regulatory CD14+DR+AXL+ and CD14+MERTK+ cells. In advanced stages of cirrhosis, these dysfunctional subsets were more prevalent than the typical monocytes found in healthy individuals, and were less able to fight infections.

“With MDSCs for example, these cells have also been found in tissues surrounding cancers so there is something similar, where monocytes and macrophages are somehow immobilised so they could not defend against cancer or infection. It would be interesting to see what happened if we could rebirth these cells or attack them or eliminate them.”

Thanks to funding by the SNF (Swiss National Science Foundation) the research team involving Dr. Bernsmeier’s group as

The research has been conducted on biological materials in the laboratory environment and there have been no interventions on patients to date, as much research is still needed, especially to locate more desired targets.

“We have identified these subsets but the problem is that we now have realised that in every compartment of the body, like the liver, the abdominal cave, the blood circulation and so on, these targets are differently expressed. I think if we want to have an immunomodulatory approach, that needs to be not only stage specific but also compartment specific. This is the next step we are working on and wish to investigate,” said Bernsmeier.

It is the main objective of the project to decipher the diversity of monocyte and macrophage differentiation alluding to different compartments as well as different disease stages in patients with cirrhosis. This approach has many complex challenges but the pay-off could potentially save many lives.

Diversity and compartmentalisation of monocytes & macrophages and immuneparesis in patients with cirrhosis

Project Objectives

Given patients with liver cirrhosis most frequently die of sepsis rather than liver failure, the objective is to delineate the immune (dys-)function of their monocytes & macrophages in the blood and in tissues. Properties of these immune cells are assessed ex vivo using single cell transcriptomics, immunohistochemistry and flow cytometry-based techniques. Ultimately, the results may translate to therapies restoring the immune function and preventing sepsis and death in cirrhosis patients.

Project Funding

Swiss National Science Foundation (SNF)

- Projects Nr 159984 (2015-2019); https:// data. snf.ch/grants/grant/159984 and 189072 (20202023); https:// data.snf.ch/grants/grant/189072

Project Partners

• Prof. Burkhard Ludewig, Institute of Immunobiology, Medical Research Centre, Cantonal Hospital St. Gallen • Prof. Mark Thursz, Hepatology and Gastroenterology, Faculty of Medicine, Imperial College London • Prof. Julia Wendon, Liver Intensive Therapy Unit, King’s College Hospital, King’s College London

• Dr. Christopher Weston, Centre for Liver & Gastro Research, College of Medical and Dental Sciences, University of Birmingham

This project is financed by the Swiss National Science Foundation (SNSF)

Contact Details

Principal Investigator

Prof. Dr. Dr. Christine Bernsmeier, MD, PhD Research Group Leader Translational Hepatology, Department of Biomedicine and Department of Clinical Research

University of Basel

Consultant Hepatologist

University Center for Gastrointestinal and Liver Diseases

University Hospital Basel CH-4031 Basel, Switzerland

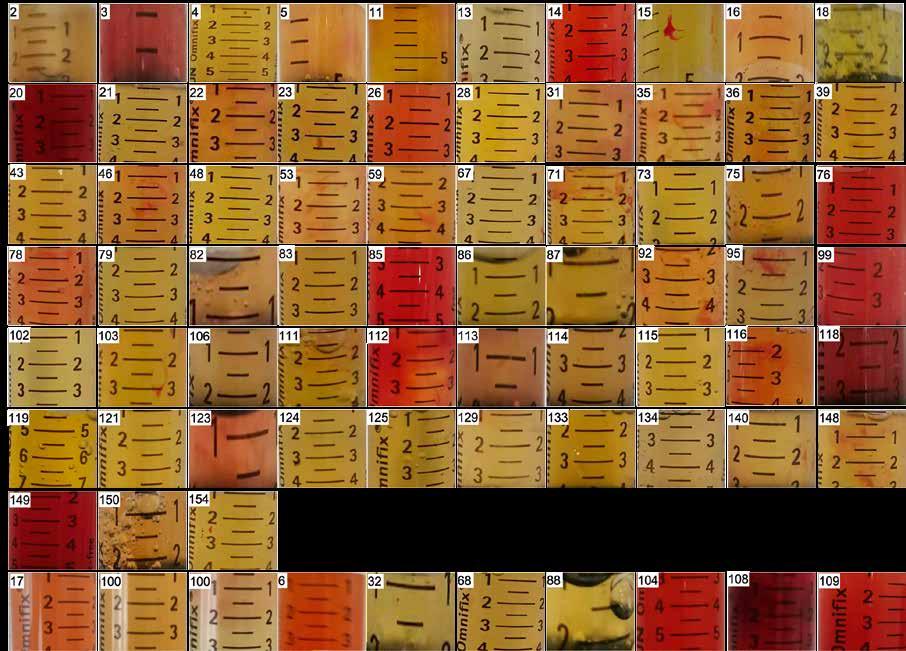

well as their important project partners are examining biological samples from cirrhosis patients at various stages of deterioration, to systematically identify which cells have changed and become ineffective. They examine blood or ascites, which is fluid from the abdominal cave, or use liver biopsies.

“We can identify in the blood, the monocytes and then we can investigate those and characterise them. We can do that as a systematic approach. We did that with single-cell RNA sequencing which is a relatively new approach to looking at the transcriptome of every single cell that we isolate. We also use classic approaches such as flow cytometry, where we can look at the specific targets that we want to stain and see their expression, and we also look at the function of these cells to see whether they still function in the sense they would defend against infection. We are trying to look at a variety of patients at different stages, which may be advanced or not advanced and we try to find out the difference and also the cut-off point, where the system will collapse.”

“There are not many groups working on this on a molecular basis. Other groups are looking at slowing down or reversing fibrosis to prevent cirrhosis from occurring or persisting, but we are working on the potential to intervene in the very last stages, and I think that is new and a different approach. We prefer not to work on animal models because this is a multi-systemic human disease, which affects the entire body with all its compartments, and this cannot be mimicked in an animal. It makes it difficult for us to foresee everything at a glance; for example, we cannot take out a spleen and have a look at its macrophages, so these are obstacles that we have to find ways to overcome.”

The long-term aim of this research is to offer hope for patients, especially those in the direst stages of liver cirrhosis, beyond the desperate solution of a liver transplant. The team also hopes the research can be a stepping stone toward restoring the immune system’s functionality, enabling patients to fight infection and, in turn, giving physicians more time and space to treat and reverse the underlying liver disease.

T: +41 61 7777400

E: c.bernsmeier@unibas.ch

W: https://forschdb2.unibas.ch/inf2/rm_projects/object_view.php?r=4514612

W: https://biomedizin.unibas.ch/en/research/research-groups/bernsmeier-lab/

Christine Bernsmeier is a clinician scientist holding an MD and a PhD in cell biology. She specialised in hepatology and pursued a postdoc with Prof. Julia Wendon at the Institute of Liver Studies/Liver Intensive Care at King’s College London. She leads the Translational Hepatology Lab at the Department of Biomedicine, and was appointed Adjunct Professor for Hepatology at the University of Basel in 2022.


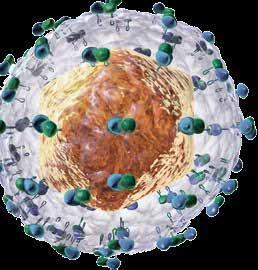

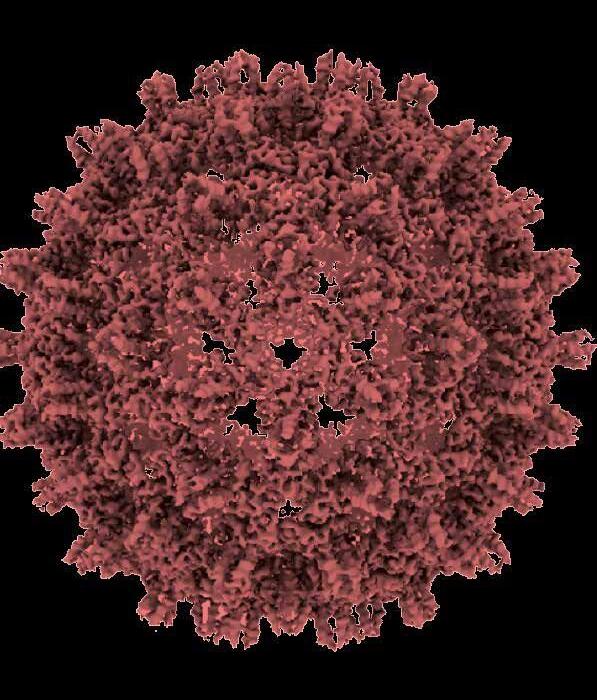

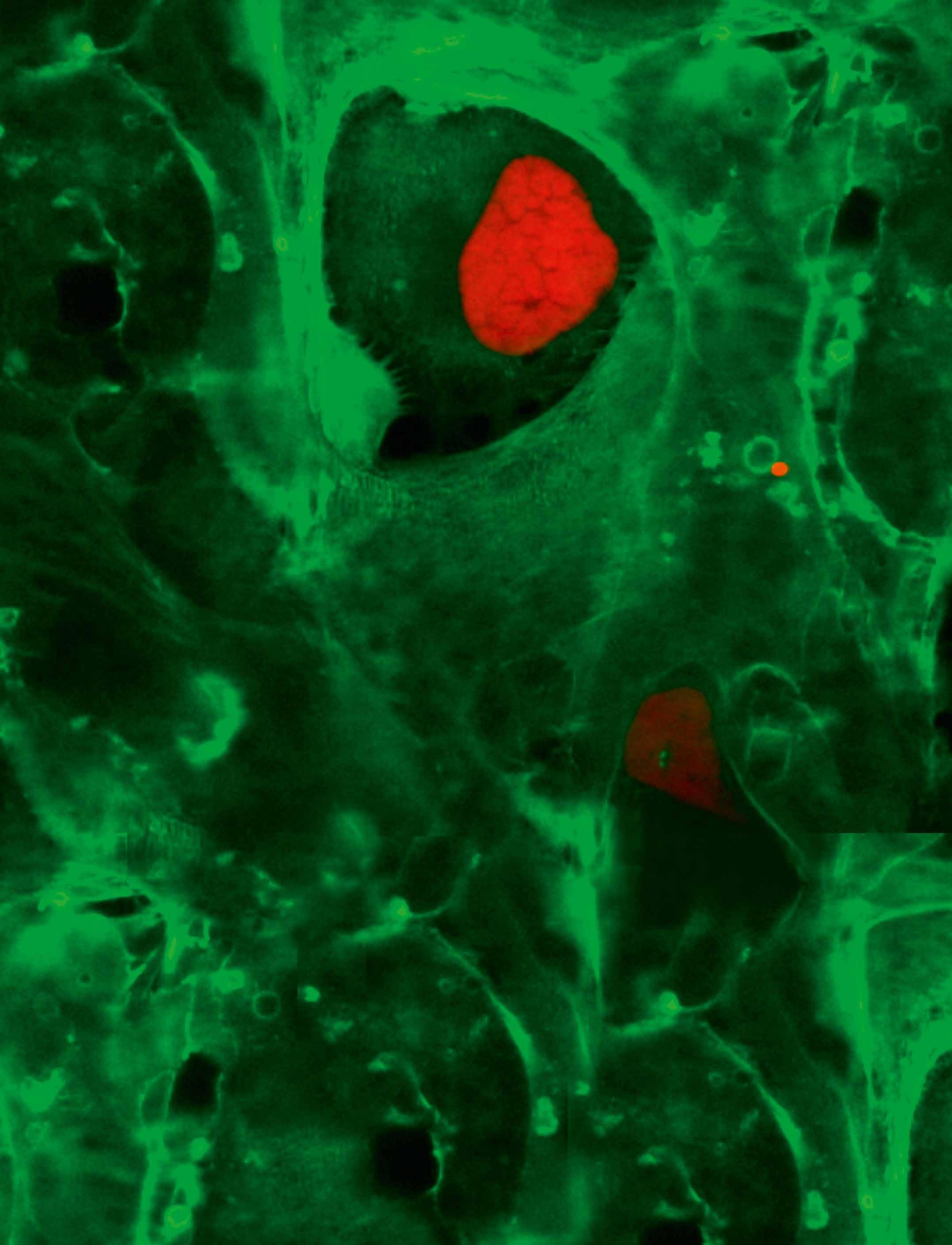

Many microorganisms have managed to escape from host immune responses by hiding inside cells. However, cells have evolved various intracellular, immune response-like mechanisms to eliminate pathogens. We spoke to Dr. Volker Heussler, who researches the development of the malaria parasite inside liver cells (hepatocytes), and the factors that influence its survival or elimination.

Malaria is a dangerous, potentially fatal disease caused by parasites of the genus Plasmodium. Malaria was responsible for around 600,000 deaths in 2022, and every year there are over 200 million cases worldwide. This devastating disease is transmitted through the bite of an infected female Anopheles mosquito. Plasmodium parasites pass through many life cycle stages in both the mammalian host and the mosquito. Sporozoites are the highly motile, crescent-shaped form of the parasite that the mosquito injects into the skin of the mammalian host during a blood meal. The sporozoites glide through the skin until they find a blood vessel, enter it, and are then carried passively by the bloodstream to the liver. Inside liver cells, also called hepatocytes, the sporozoites transform and begin dividing their nuclei, ultimately producing thousands of merozoites. The merozoites are released from the liver cell and then repeatedly infect and destroy red blood cells. The destruction of red blood cells is one of the causes of the symptoms of malaria. Some merozoites develop into gametocytes, which can be ingested by a mosquito. Within the mosquito, the gametocytes give rise to new sporozoites, and then the cycle starts again.

The liver stage of development is a crucial part of the Plasmodium life cycle, and revealing the complexities behind its outcome is the core of Dr. Heussler’s project. Dr. Heussler’s past research has shown that a large percentage of the sporozoites that invade hepatocytes, the main parenchymal cells of the liver, fail to form infectious merozoites. “There appears to be a delicate balance between parasite survival and elimination, and we are now starting to understand why this is so. It all depends on the fitness of the parasite and, of course, on the state of the host cell,” explains Dr. Heussler. “When the parasites are in the liver, they transmigrate through a number of hepatocytes before they finally settle in one. This happens in a very special way: the parasite invaginates the host cell plasma membrane and forms a parasitophorous vacuole, which is surrounded by a host cell membrane. So, the parasite lives in a special compartment in the hepatocyte which separates it from the host cell, but it is still completely dependent on the host cell for nutrients. Therefore, the parasite must ensure that the host cell survives and does not undergo self-destruction (apoptosis),” continues Dr. Heussler.

His team has found that a type of intracellular immune response is responsible for the selective elimination of intracellular pathogens such as Plasmodium. This mechanism is called autophagy. Autophagy is a cellular process for the natural elimination and degradation of unnecessary or dysfunctional cell components. It was originally considered to be a primordial degradation pathway that protects cells against starvation: starved cells can digest part of their cytoplasm to provide enough nutrients for the most essential metabolic pathways so that the cell can survive. However, autophagy has since been shown to have many other roles, one of which is the elimination of intracellular parasites, viruses and bacteria.

“We call this elimination of intracellular parasites selective autophagy because it’s not approaching the host cell generally but very selectively the pathogen invader. Selective autophagy was originally described for the elimination of damaged organelles. For example, when a cell contains damaged mitochondria, it is not the whole canonical autophagy that is switched on, but just the selective autophagy. The damaged mitochondria are then surrounded by a membrane and this finally leads to elimination,” explains Dr. Heussler. Even more importantly, selective autophagy is also used to eliminate intracellular pathogens.

Selective autophagy is triggered while the parasite resides inside the parasitophorous vacuole, which is surrounded by a membrane known as the PVM (parasitophorous vacuole membrane). “Autophagy has a lot to do with membranes. The PVM is completely covered with autophagy molecules and this results in the recruitment of lysosomes, the digestive organelles of a cell. We have shown that this is deleterious for about 50% of invading parasites. However, the interesting part is that the surviving parasites are also covered with these autophagy molecules but they still survive. The reason for that is that they can control the number of these autophagy molecules on the PVM by inducing a very high turnover of the PVM. They shed the PVM all the time and with it, they shed these autophagy molecules. The interesting part comes when we knocked out the host cell molecules which are responsible for the autophagy reaction, and we found that the number of surviving parasites is reduced. We were completely puzzled by this since we thought that stopping the autophagy reaction would provide an advantage for the parasite. And that is obviously not the case. This means that the parasite partially depends on this reaction,” explains Dr. Heussler.

The researchers went further and managed to find the reason behind this paradoxical response. “By moderate recruitment of autophagy molecules to the PVM, the parasite attracts and activates host cell signaling molecules. This in turn activates a transcription factor that regulates the expression of survival factors. In the end, it makes sense that the parasite needs to somehow influence the survival pathways of the host cell, because if the host cell cannot eliminate the invader, it could simply commit suicide, which we call apoptosis. To avoid host cell apoptosis, the parasite induces the survival pathways of the host by controlling its autophagy machinery.” This is what the researchers found in the first part of the project, with the help of super-resolution microscopy and many other cell biological techniques.

The second part of the project is focused entirely on the parasite side. The researchers have completed a high-throughput knockout screen of parasite genes in collaboration with colleagues at the Sanger Institute in Hinxton, UK. The research group in Bern has established the entire life cycle of Plasmodium berghei, a model Plasmodium species that infects rodents but not humans. “We introduced many barcoded knockout constructs at the same time into blood-stage parasites, then extracted the DNA at all the different life cycle stages and sent it for sequencing to the Sanger Institute. Sequencing of the barcodes told us exactly where these knockout parasites were eliminated at any given life cycle stage. A very simple but powerful system to follow these parasites. In this way we knocked out more than 1300 parasite genes and identified about 180 genes that are essential during the liver stage, which is the most interesting stage for us,” says Dr. Heussler. One potential outcome of this knockout screen is the generation of genetically attenuated parasites, which could then be used as live vaccine strains. The screen also revealed essential metabolic pathways that could be targeted by new antimalarial drugs.

Pathogen-host cell interactions during the liver stage of Plasmodium parasites

Project Objectives

The project aims to decipher the mechanisms underlying the liver stage in the life cycle of the Plasmodium parasite, the causal agent of malaria. Dr. Heussler and his team are interested in the factors that influence the potential survival or elimination of the parasite during its development in the hepatocytes, focusing on autophagy, a newly discovered intracellular immune response.

Project Funding

This project is funded by the Swiss National Science Foundation (SNSF), Grant number 182465.

https://data.snf.ch/grants/grant/182465

Project Partners

• Prof Oliver Billker, Umeå University, Sweden, formerly at the Wellcome Trust Sanger Institute, UK

• Prof. Dominique Soldati, University of Geneva

• Prof. Chris Janse, Leiden University Medical Center, The Netherlands

• Prof. Vassily Hatzimanikatis, EPFL, Lausanne

Contact Details

Project Coordinator, Prof. Volker Heussler

Director

Institute of Cell Biology

University of Bern

Baltzerstr. 4

3012 Bern

T: +41 31 68 44650 (office)

E: volker.heussler@unibe.ch

W: https://www.izb.unibe.ch/research/prof_dr_volker_heussler/index_eng.html#pane427241

From a more basic research point of view, the researchers hope that with this genome-wide knockout approach, they can identify the genes that are essential for parasite survival during the liver stage of development. “Our main interest is to understand what the function of these genes is. Once we have knocked out a gene, we then really look very carefully for the phenotype of the corresponding parasites. Ideally, we would then finally combine the two big projects - the autophagy project and the parasite project, with the hope that the screen will reveal the parasite molecules that are responsible for attracting autophagy molecules to the PVM, and basically, understand the respective mechanism and the bigger picture behind it,” concludes Dr. Heussler.

Dr. Volker Heussler is a Professor in Molecular Parasitology and Cell Biology at the Institute of Cell Biology, University of Bern. He is an acting director of the Institute of Cell Biology. His research is focused on the liver stage of the Plasmodium parasite, malaria’s causal agent.


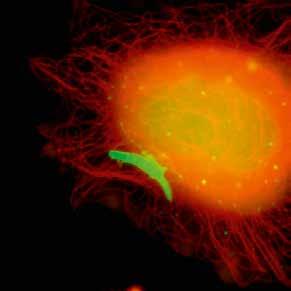

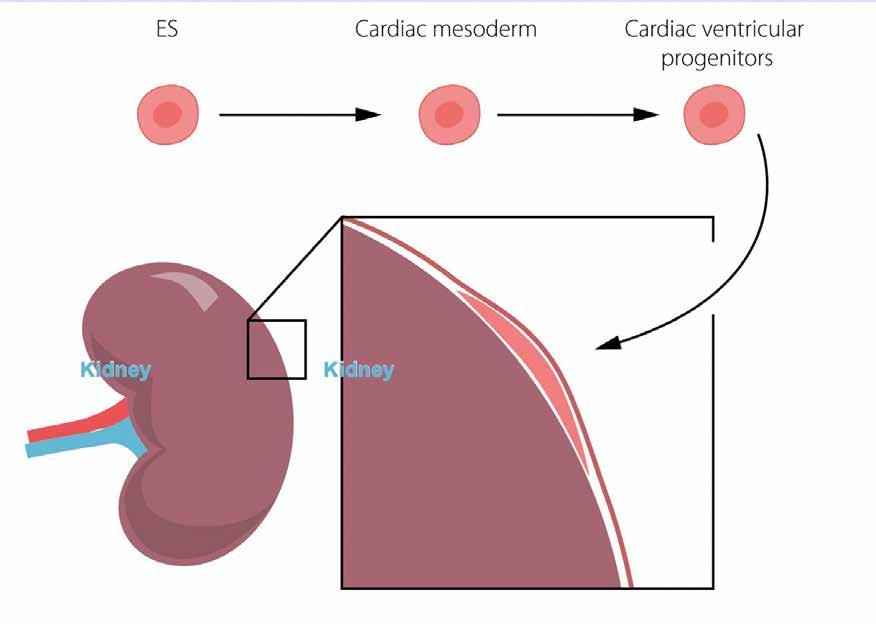

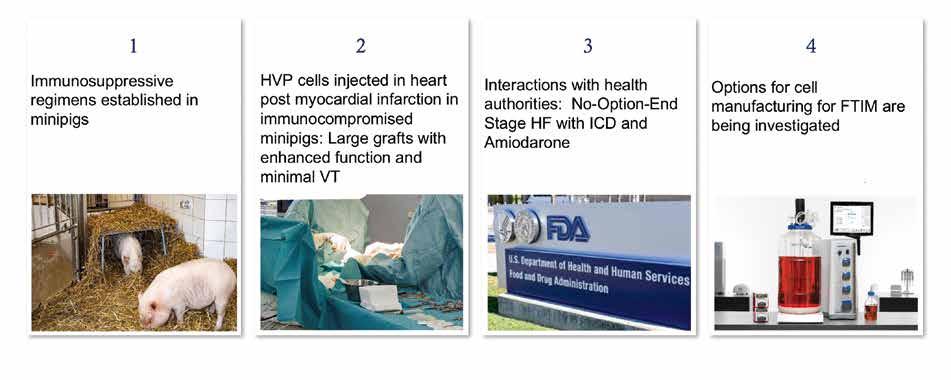

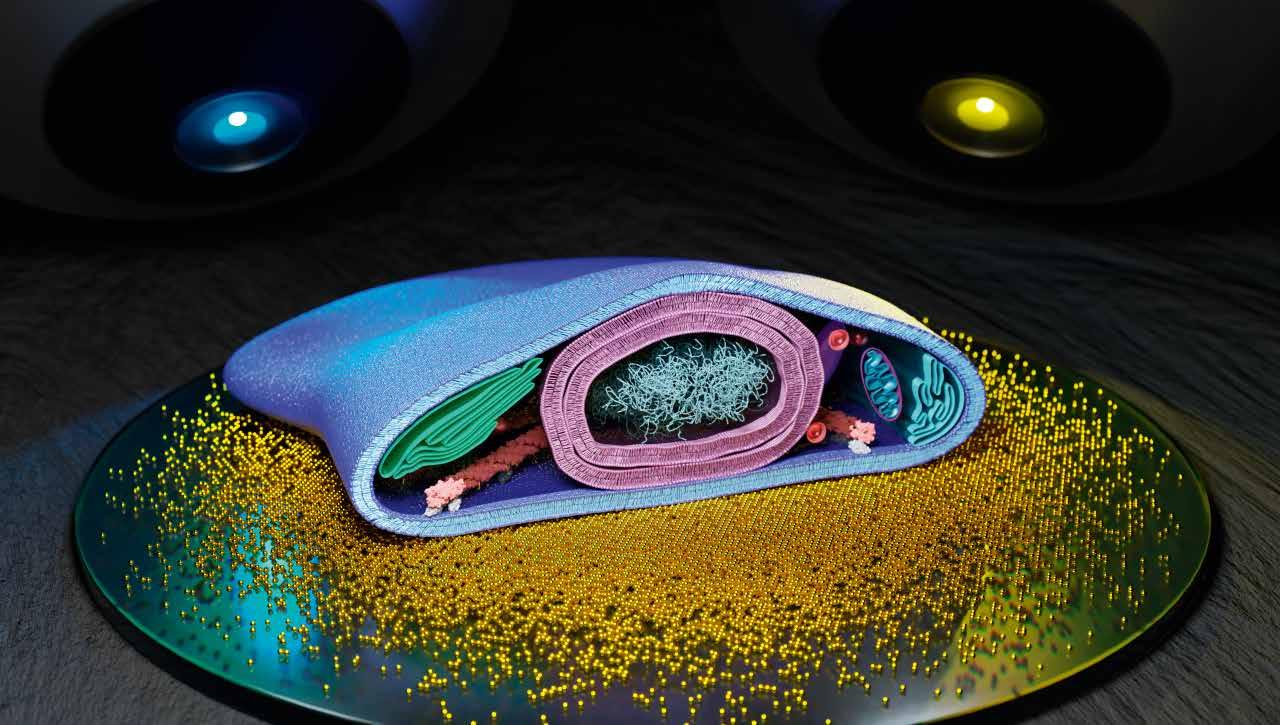

The 5D Heart Patch Project, led by Prof Kenneth Chien, has identified human ventricular progenitor (HVP) cells that can create self-assembling heart grafts in vivo. The research has the potential to offer hope to millions of people suffering from heart failure.

The 5D Heart Patch Project forms a vital step in a scientific journey that could be on the cusp of something extraordinary, with the goal of generating a fully functioning heart graft patch in vivo, in humans. Backed by an ERC grant and with the support and involvement of the Anglo-Swedish pharmaceutical company AstraZeneca and the biotech SmartCella, the ground-breaking project has profound implications, as it could provide a viable treatment for the millions of people who suffer from weakened hearts, heart failure, or hearts damaged by heart attacks.

The World Health Organisation (WHO) has stated that cardiovascular diseases are the leading cause of death in the world, responsible for the death of an estimated 17.9 million people every year, which equates to around 32% of all deaths worldwide.

“Heart failure is a progressive, chronic disease. There are drugs that work, primarily symptomatically, but nothing that stops the fundamental process of heart failure,” said Kenneth Chien, Professor of Cardiovascular Research at Karolinska Institutet and co-founder of Moderna.

“It’s the number one cause of morbidity and mortality as a single entity and is growing exponentially. The drivers are diabetes and hypertension, and it’s a disease of the elderly, although it can affect anyone of any age, including neonates if they have a genetic abnormality. This is one of the single largest unmet clinical needs in all of medicine. For end-stage heart failure, there is no cure other than heart transplantation, and there is a limitation in the number of available donors. Finding ways to rebuild heart muscle in heart failure is one of the holy grails in regenerative medicine, specifically regenerative cardiology, and in medicine in general.”

The project is the latest step after twenty years of diligent searching for a specific type of progenitor cell for the heart.

“We want contracting muscle cells, and it has to be a specific type because there are many different types of heart muscle cells and each of the heart muscle cells has a different electrical signature. All the heart cells need to beat in synchrony, to beat ‘to a single drummer’ if you will – that’s your pacemaker. What if you have a rogue cell and it doesn’t listen to your pacemaker? You’re going to have electrical confusion and you are going to have an arrhythmia that could be life-threatening,” explained Professor Chien.

Arguably, the first milestones in this scientific quest came from mouse studies between 2005 and 2009, in which a marker of master heart progenitors, which could make any type of heart cell, was identified. Subsequently, a progenitor cell was identified in the mouse heart that would make only ventricular muscle, the main type of muscle responsible for propelling blood into the circulation. After a search spanning more than a decade, the Chien lab at Karolinska Institutet managed to identify a similar cell derived from human embryonic stem cells that would only make the right type of contracting muscle cell and nothing else. The search for human ventricular progenitors, or HVPs, was over, but the work toward developing human cell therapies for regenerating healthy heart muscle was just beginning.

“What we have done is identify a cell, a kind of ‘master ventricular progenitor’ that makes only one type of cardiac muscle, but also can make its own matrix, trigger the formation of blood vessels following transplantation, migrate to the site of heart injury, prevent fibrosis, and then go on to expand to form huge grafts of functioning cardiac muscle. When you put this cell anywhere; in a dish, in a mouse heart, in a pig heart – and this has all been done – you make a chunk of human ventricular muscle, and it does it all on its own, sort of a ‘self-assembly’ process that is a natural program of the cell. When we saw this, initially in a mouse, I thought, this is a cell, after twenty years of searching, that is worthy of trying to put into the clinic.”

Such a heart patch can repair a damaged heart in just six weeks, and the cells carry out most of the repair autonomously. The procedure is less invasive than open-heart surgery, requiring only an injection of the cells into the heart. The HVPs appear to be remarkably adaptive and focused on carrying out their ‘pre-programmed’ task.

The research team were encouraged by the cells’ ability to generate contracting ventricular heart tissue in so many circumstances, and by the finding that a single cell could give rise to billions more. In one experiment, when the cells were introduced into a mouse kidney, they formed a beating heart muscle patch on the kidney, despite it being a different organ. The result was the same when human heart progenitors derived from embryonic stem cells were placed on slices of a dead monkey’s heart in a petri dish: the human progenitors took over to build a human patch on top of the dead monkey’s heart tissue, and the tissue started beating.

The HVPs apparently navigate many of the challenges that cell therapy for the heart presents. A major problem arises when implanted heart cells are already beating, because the heart has to maintain a consistent rhythm, and a clash of rhythms between beating cells can be dangerous or fatal.

Project Objectives

The discovery and development of human ventricular progenitors that form large, functional heart-graft patches in injured pig hearts could lead to clinical studies within a few years, offering new hope to patients waiting for a heart transplant.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under ERC AdG grant agreement No 743225.

Swedish Research Council Distinguished Professor Grant Dnr 541-2013-8351, AstraZeneca research collaboration agreement Dnr 4-2539/2021.

Contact Details

Kenneth R. Chien MD PhD

Distinguished Professor of Swedish Research Council

Department of Cell and Molecular Biology

Karolinska Institutet

T: +46 70-676 6365

E: kenneth.chien@ki.se

W: ki.se

W: https://ki.se/en/cmb/kenneth-r-chiens-group

LinkedIn: linkedin.com/in/kenneth-chien

“The beauty of these progenitors is that they are not beating when we put them in and what we found before was that most arrhythmias occur when you put the cells in, so if the cells are already beating, they are beating to their own ‘drummer’, but when we put our cells in there, they are not beating and they have a chance to get ‘trained’.”

A team led by Karl-Ludwig Laugwitz, Professor of Cardiology at the Technical University of Munich (TUM), and Regina Fritsche Danielson, SVP and Head of Early CVRM, Biopharmaceuticals R&D, AstraZeneca, demonstrated that HVPs could form functional grafts in pig hearts following a heart attack. In addition, the team demonstrated in the laboratory how the HVP cells migrated to actively seek out damaged regions of the heart to repair. When a heart attack occurs, as many as a billion heart muscle cells, known as cardiomyocytes, can die from the reduced blood supply, causing scar tissue that further impairs heart function. Normally, this would mean an irreversible decline in heart function and health.

Chien said: “They migrate to the area of injury because they move, as they are progenitors, whereas cardiac muscle cells just want to beat. It was also discovered that they break up scar tissue, which was very surprising.”

To keep his laboratory available for purely academic research, Professor Chien created a ‘bridge’ company, called SmartCella, to work with the industry partner, AstraZeneca, on the path to developing a new heart regeneration therapy, with Chien as a senior advisor.

“I started the company that makes these cells, for toxicology studies and for the pig studies, because you don’t want your lab turned into a company – I am against that. What you want to do is enable your lab to lead to the evolution of a company. And you can work interactively with the company if it is allowed, which at KI it is. And you let people who know how to make drugs do that and you help them. It’s early days still.”

Dr Kenneth Chien is a Distinguished Professor of the Swedish Research Council in the Department of Cell and Molecular Biology at the Karolinska Institutet and the Co-Founder of Moderna, and is a global leader in cardiovascular biology, medicine, and biotechnology.

For hearts damaged by heart attacks, this could be game-changing in terms of recovery. This kind of self-repair treatment has not been possible before.

“Conveniently, they don’t keep dividing forever,” Chien continues. “They divide, and they stop growing at the right time because that is how a heart is built, so they’re programmed for true self-assembly. We are still trying to understand everything they can do.”

“We have discovered that these cells exist and the amazing properties they have. We show that it improves function after a heart attack in a pig, which is an important step. Now what we have to do is to identify an appropriate dose, decrease the risk for arrhythmias and devise an initial clinical study. At this stage, I don’t want to over-promise and I don’t want to say this is guaranteed to work. What I think is, this is worthy of further testing.”

The project has now moved into industry, and a lot of work remains to be done. Despite the tests and trials ahead, there is a tangible sense of anticipation among those involved that a novel therapy could emerge with the potential to address one of the biggest health challenges of our time.

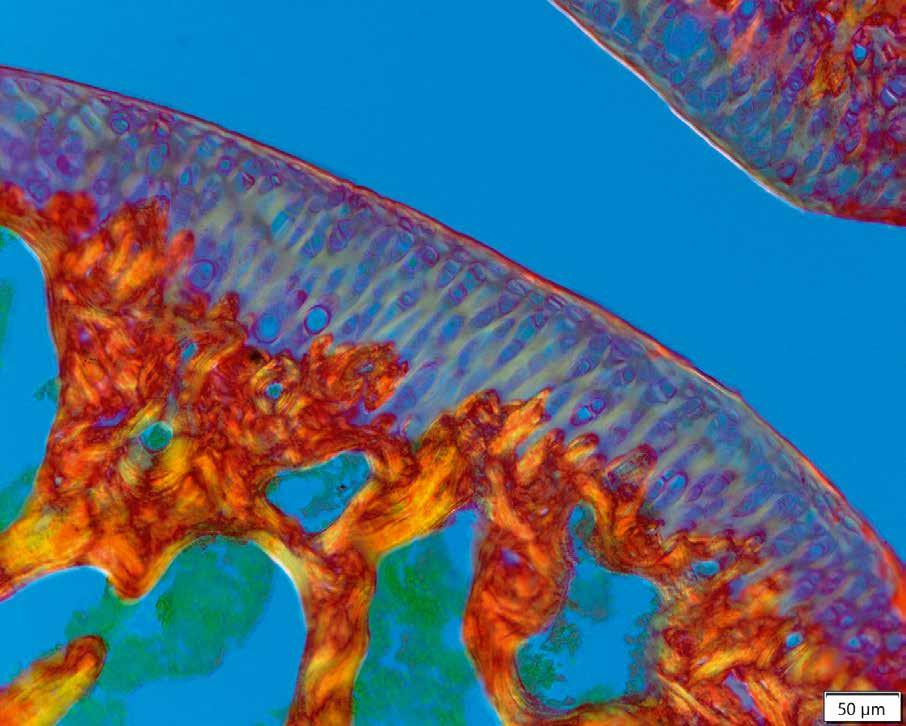

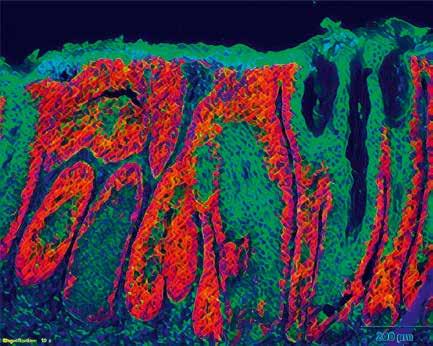

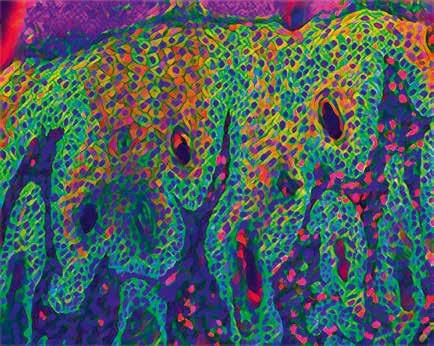

Chronic systemic inflammation (CSI) is associated with the progression of different diseases, including psoriasis, atopic dermatitis and cancer. Researchers in the ERC-funded CSI-FUN project are investigating the underlying molecular mechanisms behind CSI and how it spreads through the body, as explained by Erwin Wagner, the principal investigator of the study.

An inflammatory response may originate in a given organ like the skin, but it can then spread more widely and affect the entire body, causing serious health problems. Based at the Medical University of Vienna (MUV), Erwin Wagner and his group are investigating the underlying mechanisms behind CSI. “We are trying to find the mechanisms by which the original signal – which elicits the inflammatory response – spreads through the body in a systemic way,” he outlines. This research relies to some extent on mouse models for two common inflammatory skin diseases, psoriasis and atopic dermatitis, in which CSI plays a major role in progression. “We aim to describe how inflammation from the skin spreads through the body and affects different sites and organs,” says Erwin Wagner. “Around a third of psoriatic patients develop psoriatic arthritis, a form of joint disease, which often emerges many years after patients are diagnosed with psoriasis.”

The aim of the study is to build a broader picture of the communication between the skin and the joints, and to identify which molecules, signalling pathways and cells are involved. Researchers in this project are also looking at CSI in the context of cancer-associated cachexia (CAC), a metabolic syndrome which can develop in the late stages of cancer and is associated with weight loss. “Many tumours are highly inflamed, and this inflammation can then spread throughout the body, leading to weight loss,” explains Wagner. Rather than looking at the tumour itself, the Wagner group focuses on the communication between the tumour and the whole organism. “We’re looking at the cross-talk, the communication that takes place between a tumour and the organism. We’re investigating what happens when the tumour sends out signals to start inflammation,” he says. “In each of these cases we’re looking systemically. For instance, in cachexia we look across the different organs in the body, keeping an open mind about what we might find.”

Researchers in the Wagner group ultimately aim to identify the factors behind the initiation of CSI, which then kicks off a cascade leading to the progression of disease. One important event in the development of CSI is the release of inflammatory mediators, including inflammatory cytokines like IL-6 and IL-17, which activate pro-inflammatory signalling pathways. “These cytokines are important therapeutic targets for both skin diseases and CAC,” outlines Wagner. In his research, Wagner is using mouse models to investigate the molecular mechanisms behind CSI, while he also has access to samples from human patients. “My lab is well-known for generating innovative mouse models of human diseases, but it was clear from the outset of this project that we would need to have both mouse mechanistic data and human patient samples, in parallel,” he stresses. “One of the attractions of moving to the MUV was to be close to clinicians, so I can now work with them on samples they collect from patients with psoriasis, atopic dermatitis and CAC.”

The work with mouse models within the project involves modulating certain genetic events, which affect the severity of a given pathology. For example, in the context of psoriasis, specific proteins are removed from the skin of these mice, and within two weeks this genetic alteration causes a lesion resembling the human disease. “We can then essentially follow the fate of this lesion over time,” outlines Wagner. The development of the lesion can be followed over extended periods, an important consideration in the project given that the systemic manifestations of CSI will not typically be apparent within two weeks. “We can modulate the severity of the lesion, to look at both severe and mild examples. The mice are raised in pretty much standard conditions, so factors like diet and the light/dark cycle aren’t particularly influential. We essentially rely on the genetic manipulation leading either to the induction or removal of a gene, or genes, to cause certain pathologies,” continues Wagner.

This research has yielded some important insights. On the basis of their work with mouse models, Wagner and his colleagues have published a paper on CAC demonstrating that after a tumour becomes inflammatory, the patient first loses fat. “The fat burns away, then the muscle becomes affected, and then the other organs,” he explains. A patient who loses a certain amount of weight within six months is categorised as pre-cachexic, which is an important window for treatment, before their condition worsens. “A lot of research focuses on this pre-cachexic stage, where we can study what signals might be important in terms of kicking off the cascade,” says Wagner. “In the pre-cachexic stage the patient has not yet suffered from any major weight loss, but if it progresses further then they enter cachexia, a deadly condition. New treatments should ideally be targeted at the early changes before the patient comes down with a metabolic impairment.”

Another important paper arising from the group’s research relates to the function of the S100 proteins, a family of molecules that are highly up-regulated when something goes wrong in the body, effectively acting as a danger signal. “We’ve seen that these S100 proteins, which are also called alarmins, are highly up-regulated in our mouse models and also in samples from human patients. In the mice, the up-regulation of these proteins is the first event that happens when disease is induced,” outlines Wagner. In his research, Wagner has explored the impact of removing these proteins on psoriasis and psoriatic arthritis. “Is the disease prevented? Or does the disease have a less severe impact, is it milder?” he asks. “We generated a mouse in which these proteins are absent in all cells, which we call a complete knock-out mouse. In this case the psoriatic disease is very mild. This is positive, but is this because of a systemic effect? Or is it because the S100 proteins have been removed from the skin?”

The next step is to remove the S100 proteins only in the skin, and then assess whether the same effect is observed. If it’s an organ-specific effect, then removing these proteins only in the skin should also lead to a milder disease, but Wagner says this is not what he and his colleagues observe. “We get more disease, so it’s actually worse. Therefore, it’s not the organ-specific effect but rather the systemic effect which needs to be treated,” he says. The researchers have demonstrated that the important factor is CSI, rather than cell-type-specific inflammation in the skin, and Wagner is also pursuing further research into the S100 proteins. “We are also trying to understand whether these proteins play a role in atopic dermatitis. These alarmins are also anti-microbial proteins, which can reduce the microbial load, and that might be a factor behind the phenotypes that we see,” he continues.

The CSI-Fun research team in 2023 with early stage researchers, post-docs and technical assistants from 7 nationalities. Photo credit: Andreas Ebner (MUV).

The role of inflammation in osteoarthritis is another interesting avenue of research. “It has long been thought that inflammation has nothing to do with osteoarthritis, but more and more evidence is now emerging that in fact it may play a role. We have good models for osteoarthritis and for psoriatic arthritis and we are studying and comparing the two diseases,” says Wagner.

Professor Wagner is also very interested in investigating the importance of inflammation in the context of skin cancer. A number of reports have suggested that fewer cases of specific skin cancers are found in patients with psoriasis than in the general population. This would suggest that CSI does not always promote cancer, but in some cases can in fact suppress certain types, a topic that the Wagner group is currently exploring. “We have established several mouse models for psoriasis in which we induced a mutation which normally leads to skin cancer development,” says Wagner. “On top of this mutation, we also induce CSI and then we ask: does this suppress or promote skin cancer development? These experiments are ongoing and we are excited to find out whether CSI is tumour-promoting or suppressive in this context. For this challenging project, we received additional EU support from H2020 (MSCA-ITN-CancerPrev-859860).”

Chronic Systemic Inflammation: Functional organ cross-talk in inflammatory disease and cancer

Project Objectives

The goal of CSI-Fun is to understand how Chronic Systemic Inflammation (CSI) is involved in Inflammatory Skin Diseases, Arthritis and Cancer. We focus on whole body physiology and perform experiments in model systems, from the petri dish to mice and samples donated by patients, to discover new biomarkers and better preventive and therapeutic strategies.

Project Funding

This project has received additional support from the Medical University of Vienna and the European Union’s Horizon 2020 research and innovation programme (MSCA-ITN-2019-859860: CANCERPREV)

Project Partners

This ERC advanced grant has been fully allocated to the host institution (Medical University of Vienna).

Contact Details

Principal Investigator, Univ.-Prof. Dipl.-Ing. Dr. Erwin Wagner, PhD

Medical University of Vienna

Department of Dermatology

Department of Laboratory Medicine

Anna Spiegel Research Building

Lazarettgasse 14, AKH BT25.2, Level 6, 1090 Vienna, Austria

T: +43 1 40400 77020

E: erwin.wagner@meduniwien.ac.at

W: https://www.meduniwien.ac.at/hp/dermatologie/wissenschaft-forschung/genes-and-disease-group-erwin-wagner/

Erwin Wagner has pioneered technologies for engineering the mouse genome, producing highly instructive mouse models that have revealed mechanisms of development, inflammation, metabolism and cancer. He made ground-breaking discoveries about the roles of AP-1 (Fos/Jun) transcription factors and recently provided key insights into the pathogenesis of cancer cachexia, pointing towards new therapeutic interventions.

Latifa Bakiri joined Erwin Wagner’s team in 2001 as a post-doc and later became a staff scientist; she focusses on optimising genetically engineered mouse models to study inflammation, metabolism and cancer.

Implants can enhance quality of life, but it’s essential to first ensure that they are safe and will function effectively in the body. We spoke to Dr. Anna Igual Munoz and Dr Stefano Mischler about their work with Prof. Dr Brigitte Jolles-Haeberli investigating electrochemical reactions at the interface between biomaterials and the surrounding biological environment, such as body fluids and tissue.

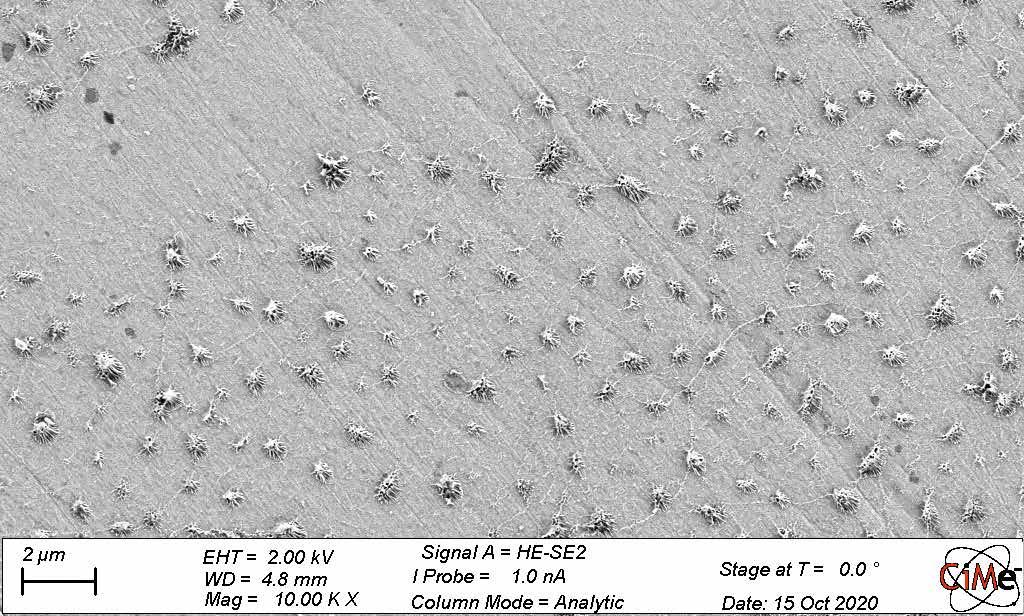

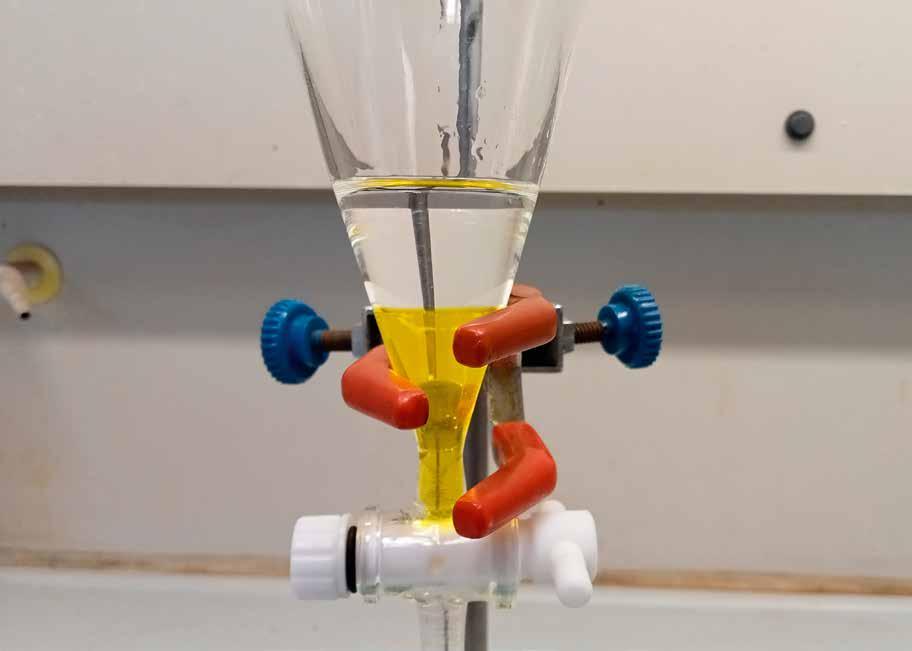

A high level of resistance to corrosion is very desirable in an implant material, as the release of metal ions can cause adverse tissue reactions and eventually lead to the body rejecting the implant. As Senior Scientist in the SCI STI SM Group at EPFL in Lausanne, Dr Stefano Mischler is investigating how metals like titanium react with synovial fluid, the liquid which effectively lubricates our joints. “We carry out electrochemical measurements and look at the reactions that take place on the metal,” he outlines. While most researchers have previously used simulated body fluids to investigate electrochemical reactions in the body, Dr Mischler and his colleagues, in particular PhD student Yueyue Bao, are using synovial fluid collected directly from patients during implant surgeries performed by Prof. Jolles-Haeberli. “We have brought our lab very close to the surgery room. Once the synovial fluid is recovered when it falls into the operative field during the procedure, it’s rapidly transferred to us and we can directly do the measurements,” explains Dr. Anna Igual Munoz, Scientific Consultant to the SCI STI SM Group.

This approach could lead to deeper insights into the reactions which occur at the interface between biomedical materials and surrounding fluids, and ultimately help scientists develop improved and patient-tailored implants. The metal is exposed to the synovial fluid, and electrochemical measurements are then taken, from which researchers aim to build a fuller picture.

“With our knowledge of corrosion and electrochemistry, we can interpret the results and obtain information about not only the corrosion rate, but also the behaviour of the liquid,” says Dr Mischler. Researchers are using several electrochemical and surface analysis techniques, including Auger Electron Spectroscopy (AES), to determine surface changes of the metal after it has been in contact with the body fluid. “AES is a very effective technique; it’s surface sensitive and it has a high lateral resolution,” continues Dr Mischler. “With AES we look at the topography of the material, but we also have chemical information from the outermost surface, the first atomic monolayers.” Infrared spectroscopy is also being used in the project; it provides information about the different functional groups present and enables researchers to discriminate between them.

The evidence gathered so far suggests that the key factor determining the corrosion rate of implants is the interplay between the oxygen content and the amount of organic components (i.e. proteins) in the body fluid. “Titanium is passive and its corrosion rate is affected by the reduction of oxygen. Electrochemical measurements suggest that oxygen content varies between patients,” outlines Dr Mischler. Another important consideration is the amount of proteins or organic molecules in the synovial fluid, says Dr Mischler. “This affects the adsorption of proteins on the surface,” he explains. “The higher the oxygen concentration, the higher the corrosion rate of the metal. But the higher the protein concentration, the more adsorption and lower oxygen. A good patient has low oxygen, and a lot of proteins.”

This type of patient is less likely to react aggressively to the implant and reject it. While titanium implants work pretty effectively in general, some patients experience adverse reactions, which will affect durability. “In cases where patients react unfavourably, implants don’t last more than 10-15 years on average. This may be fine for an older individual, but not for a younger person,” says Dr. Igual Munoz. A major challenge now is to increase the durability of these implant materials, making them suitable for all patients, particularly with demand set to increase further as life expectancy rises. “Implants are intended to improve a patient’s quality of life, enabling them to keep doing the things they enjoy,” points out Dr. Igual Munoz. “We have an aging population, and many of these people want to stay active for longer.”

Human synovial fluid samples.

The ideal scenario is to give each patient the implant that is right for them individually, improving biocompatibility so that the implant is accepted by the body. The project’s research will make an important contribution in this respect, with Dr Mischler and Prof. Jolles-Haeberli aiming to predict more accurately how an individual patient will react to an implant material. “This is a really long-term-oriented project, and we can follow the release of the material following surgery over the course of years. We have a predictive tool, and are also interested in blood analysis,” he outlines. The project’s work could also help researchers anticipate how sensitive patients are to the wear of a joint, as well as point the way towards improved testing methods to assess the long-term performance of implant materials.

“Some companies are using hip joint simulators to test implants in vitro. These machines are used to test the materials in mechanically demanding circumstances over extended periods,” continues Dr Mischler. Historically, much attention has focused on the impact of patients’ weight and biomechanics on the material, while the chemistry of the synovial fluid has been relatively neglected. However, it has since been shown that the interactions of the metal with the synovial liquid are in fact highly important. “This importance is now more widely recognised, and people are thinking about how they can improve their simulator tests and make them much more predictive,” says Dr Mischler. This research could also inform work to develop and manufacture more effective implants which are less likely to be rejected. “There are some surface structures that are more prone to the adsorption of proteins, which is a favourable mechanism. We can promote this with certain materials,” says Dr Mischler. “There is interest in developing in vivo surfaces tailored to patients.”