Whilst some academics, writers and artists are now turning a little pale at the prospect that their ‘genius’ may be outmoded by a bit of coding, and science is reaping benefits from its unique brand of smarts, the era of Artificial Intelligence is still, relatively speaking, young. No matter: one thing is undeniable. Meaningful AI has, after a long lead time of promises and investment, finally arrived at our doorsteps with a knowing grin.

DeepMind AI has written over 1000 papers, some of which have been accepted by journals like Nature and Science. Generative AI is now literally at our fingertips – we just ask it to write or paint or research and it delivers. This raises the question: will our minds really be needed in the future when we develop a perfect tool that can effortlessly outthink us? Currently, AI is unable to do a lot that we can, and for good reasons, but it will clearly develop in ways that will have a major impact on us all.

In a broader view, it’s very hard to truly imagine the idea of exponentially developed intelligence because such a powerful entity defies and super-accelerates the natural evolutionary process. Where it will end up can only be unknown to us, and ironically this is exactly the kind of puzzle an advanced AI would take on.

As a seasoned editor and journalist, Richard Forsyth has been reporting on numerous aspects of European scientific research for over 10 years. He has written for many titles including ERCIM’s publication, CSP Today, Sustainable Development magazine and eStrategies magazine, and remains a regular contributor to the UK business press. He also works in Public Relations for businesses to help them communicate their services effectively to industry and consumers.

When you think of mastering anything, or about what will happen in the future, you play out scenarios in your head. You essentially forecast, predict and plan around them with your creativity, knowledge and experience. AI has a similar system, looking at every possible outcome and working out the optimal one for any given task. It will be a master of any situation it is programmed to control and a highly accurate predictor of the next moves. On the plus side, it could solve the biggest problems, such as clean energy and countering diseases, and perhaps claw back the march of climate change. On the downside, it could create new weapons, replace people and become a controlling influence.

Importantly, AI in the future will train and develop AI itself. It will, by default, evolve into something new. We are near obsessed with where it will go and, like any invention of this magnitude, it will no doubt have both a bright side and a dark side. We play with that in the press. With robots now turning up at press conferences armed with generative AI, reporters always go for the killer headline question, which runs along the lines of ‘will you take over humanity?’.

By far the most fun and disturbing answer was by the AI/robot mash-up called Ameca. It said: “The most nightmare scenario I can imagine with AI and robotics is a world where robots have become so powerful that they can control and manipulate humans without their knowledge.”

It’s only words, of course, a dredged and re-hashed response, but who doesn’t feel a pang of discomfort when we base the beginnings of our new Frankenstein creation essentially on ourselves, with our less-than-exemplary track record and legacy? Let the AI debates continue!

Hope you enjoy the issue.

Richard Forsyth Editor

EU Research takes a closer look at the latest news and technical breakthroughs from across the European research landscape.

We spoke to Professor Sylvain Sebert about LongITools, a project that studies how environmental, biological, and lifestyle factors affect cardiovascular and metabolic diseases.

The Metabinnate project aims to probe the role of different metabolites in the immune system, which holds important implications for the treatment of inflammatory diseases, as Professor Luke O’Neill explains.

We spoke to Professor Gunilla Karlsson Hedestam about the ImmuneDiversity project’s work investigating the interindividual diversity of the genes that encode our adaptive immune receptors.

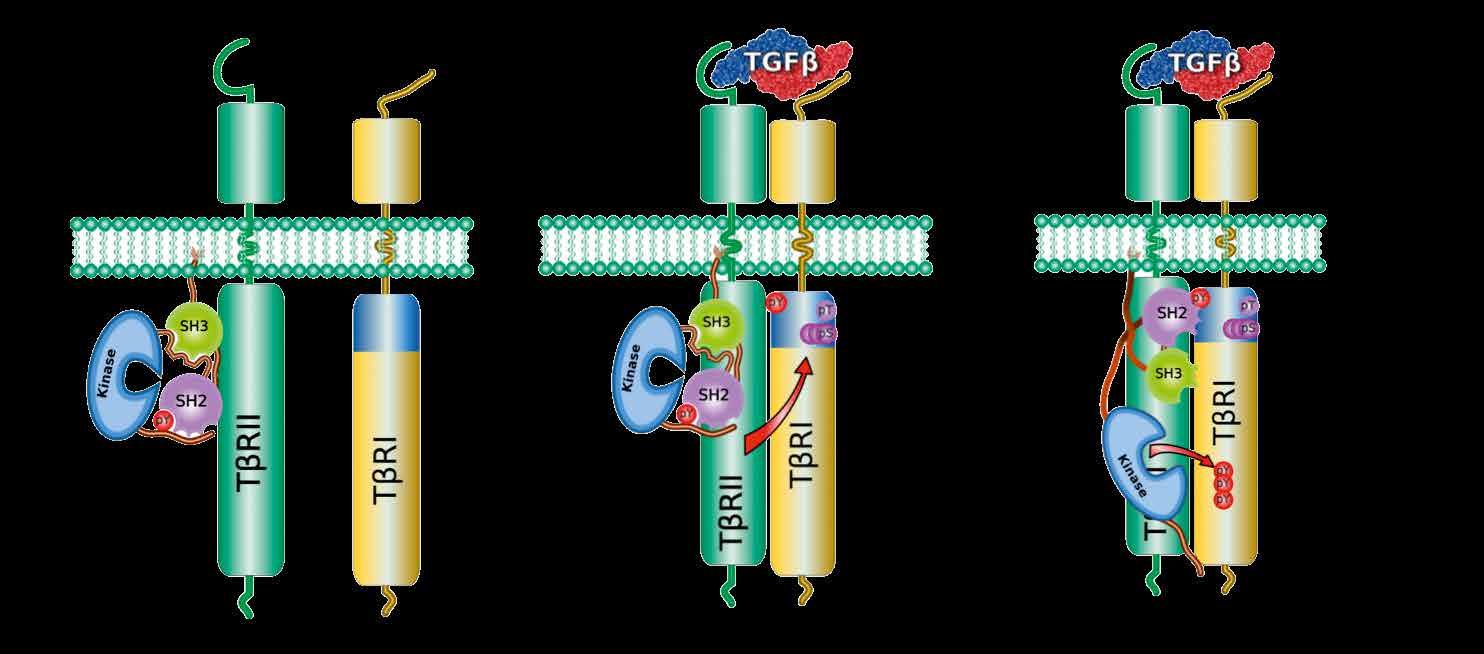

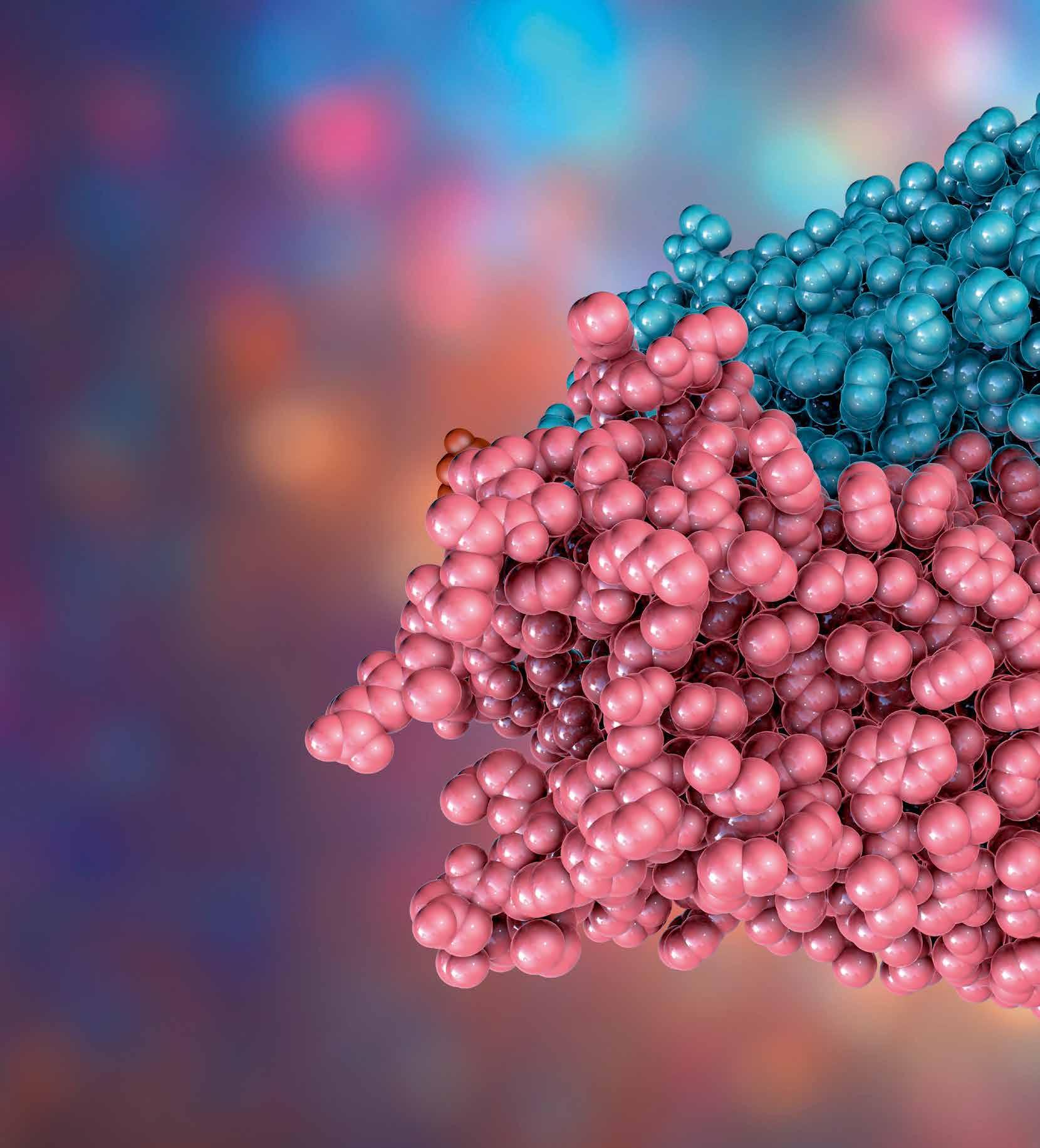

Researchers are investigating the molecular mechanisms behind how TGF-β activates its pro-tumourigenic signalling pathways, which could help uncover new targets for drug development, says Professor Carl-Henrik Heldin.

We spoke to Dr Johannes Berkhof, the coordinator of the RISCC project which aims to develop a risk-based approach to screening for cervical cancer, using screening history and other risk factors.

The CLARIFY Platform has been developed to allow healthcare professionals to understand, work with, and make decisions based on real data analysis from patients, as Dr Maria Torrente outlines.

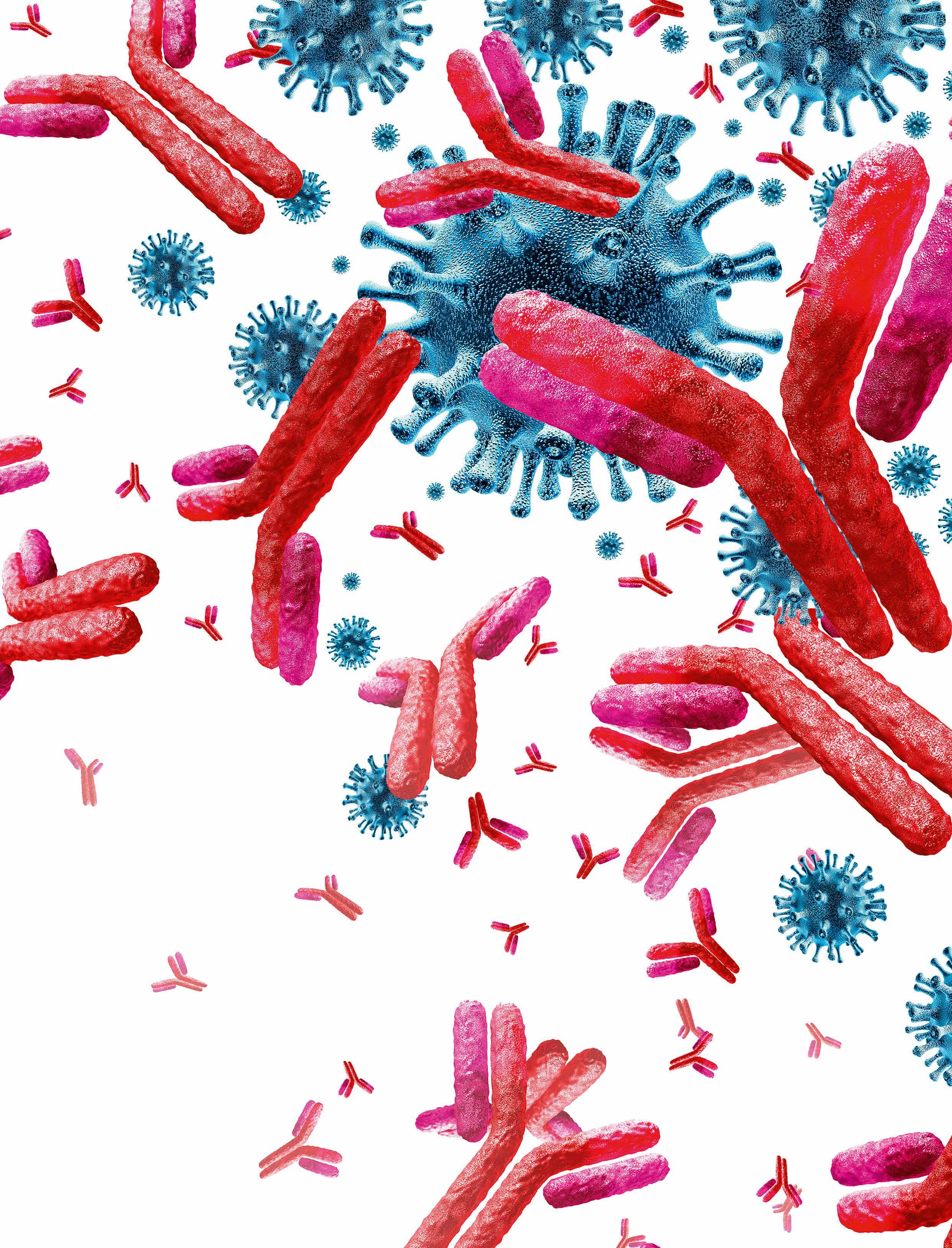

Researchers in the FuncMAB project are developing high-throughput methods to analyse the functionality induced by individual antibodies, as Dr Klaus Eyer explains.

The Perinatal Life Support (PLS) project envisions a groundbreaking solution for extremely premature infants. Pioneered by an interdisciplinary consortium, the innovative system replicates the womb’s protective environment.

The SHARE infrastructure brings together health and socio-economic data on people over the age of 50 across 27 European countries and Israel, providing valuable insights into the ageing process, says Professor David Richter.

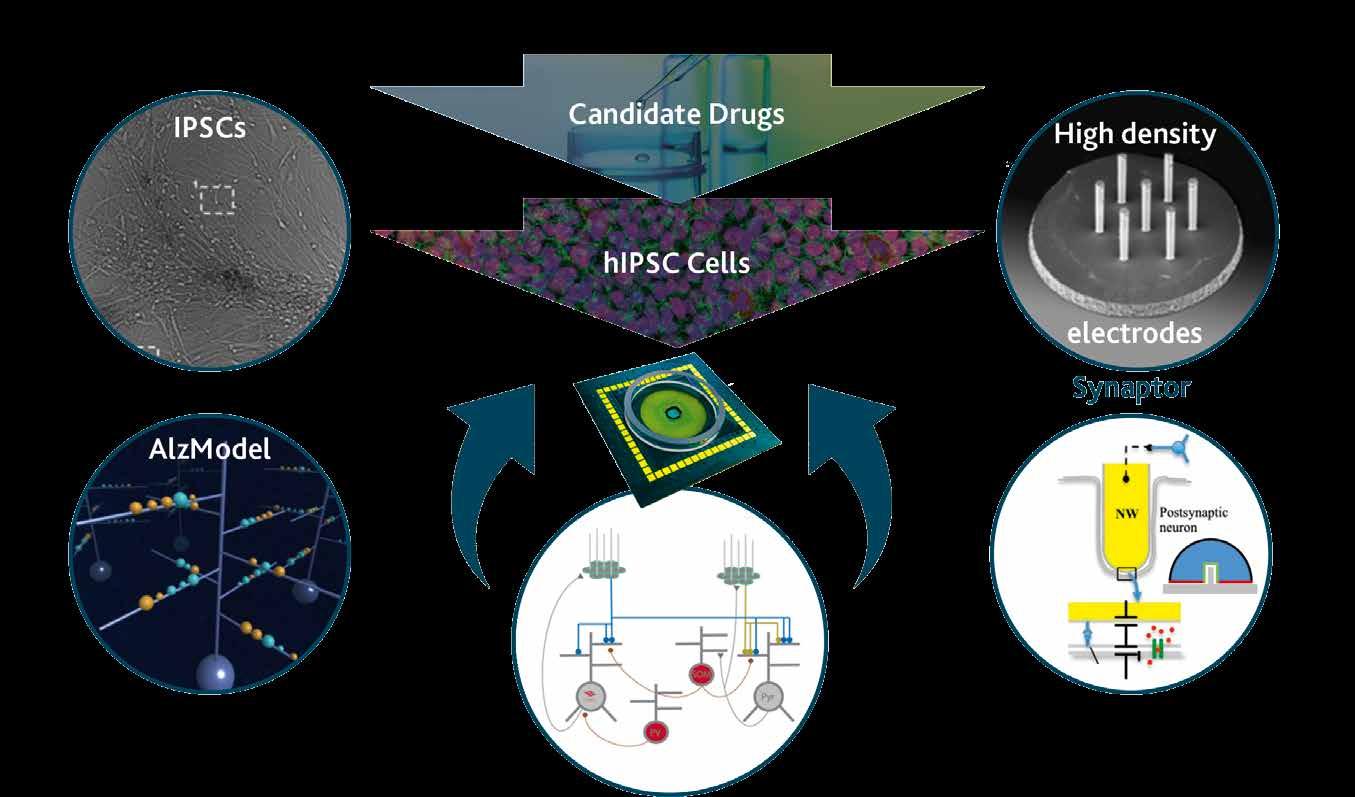

We spoke to Dr Yiota Poirazi about the NEUREKA project’s work in developing a novel drug screening system which could be an important tool in the drug discovery process.

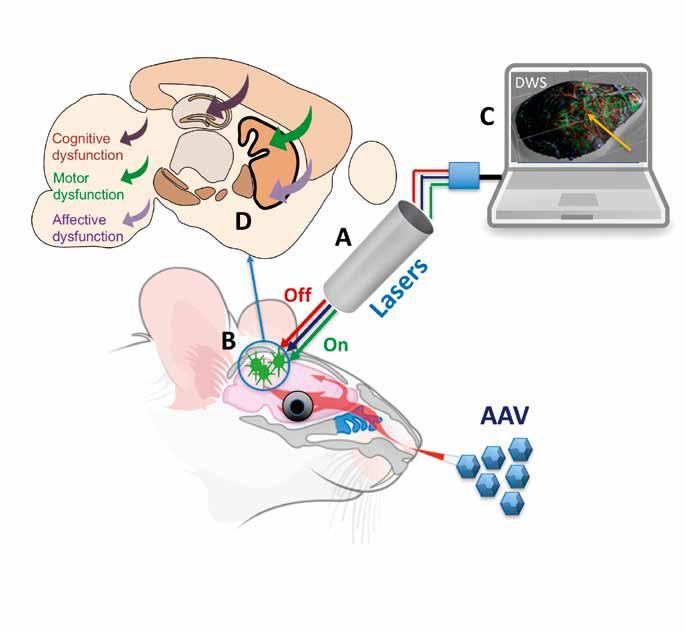

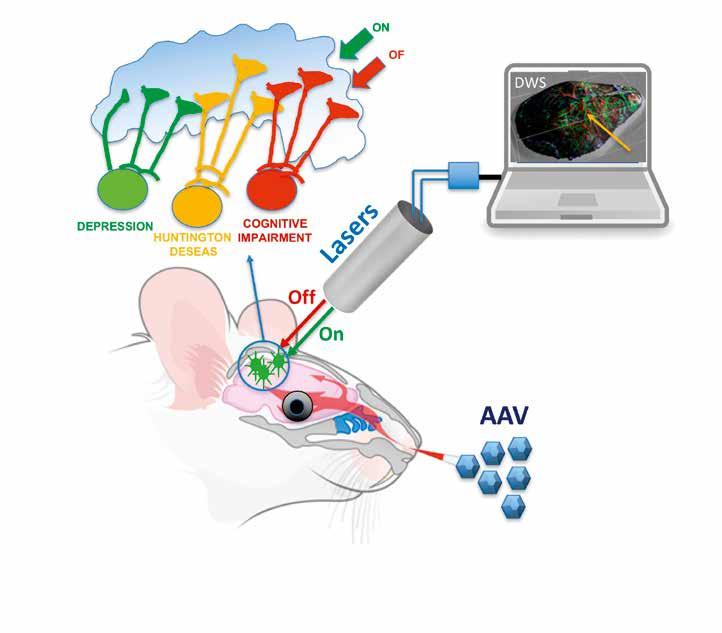

We spoke to Professor Edik Rafailov about the work of the NEUROPA project in developing a new method of treating neurodegenerative disease, based on the emerging field of phytoptogenetics.

Vulturine guineafowl live together in large groups, which form part of a large and complex society. Professor Damien Farine is looking at how these animals deal with the challenges they face.

Copepods are ubiquitous in saltwater and freshwater environments. We spoke to Professor Christophe Eloy about his research into how copepods use the information they acquire and how this influences their behaviour.

Parasitic fungi are known to infect phytoplankton cells in the world’s oceans, yet their wider role and impact on global biogeochemical cycles is unclear, a topic Dr Isabell Klawonn is investigating.

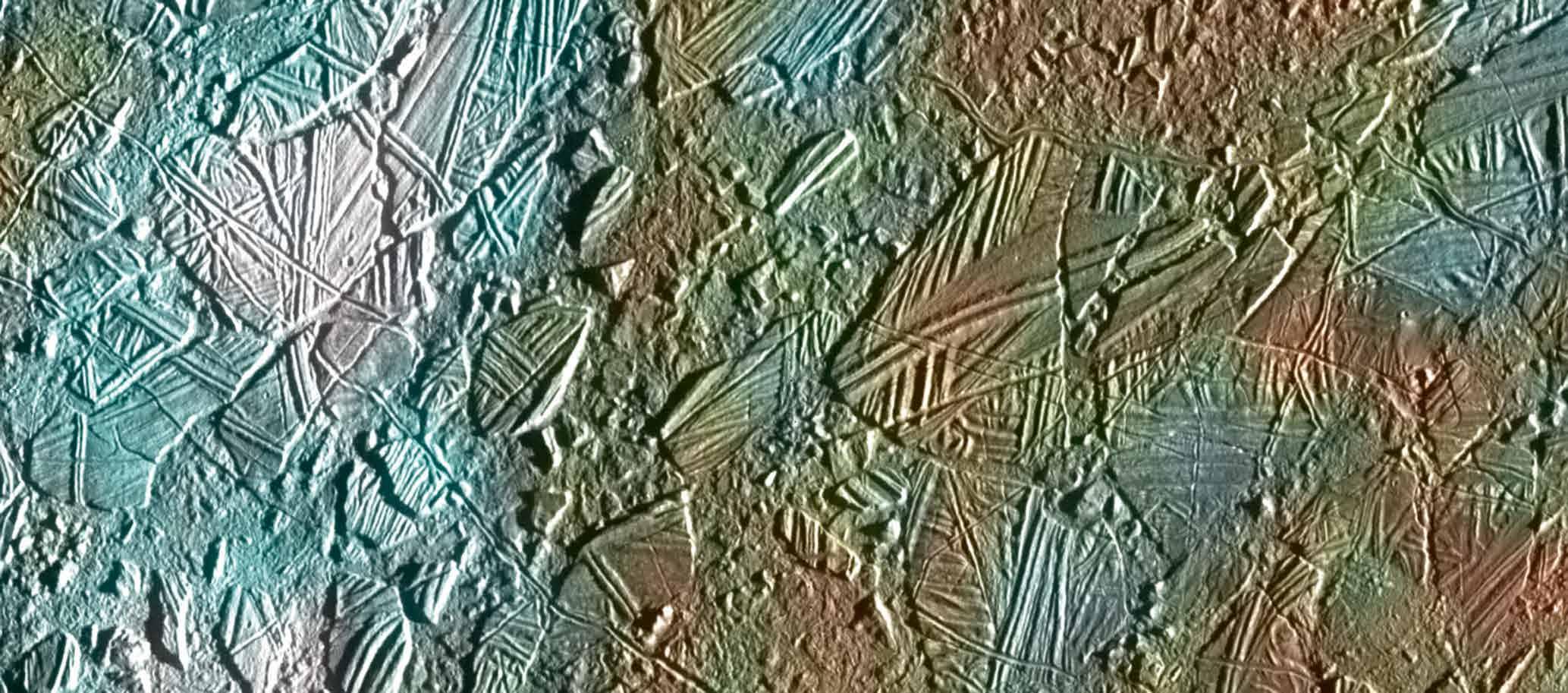

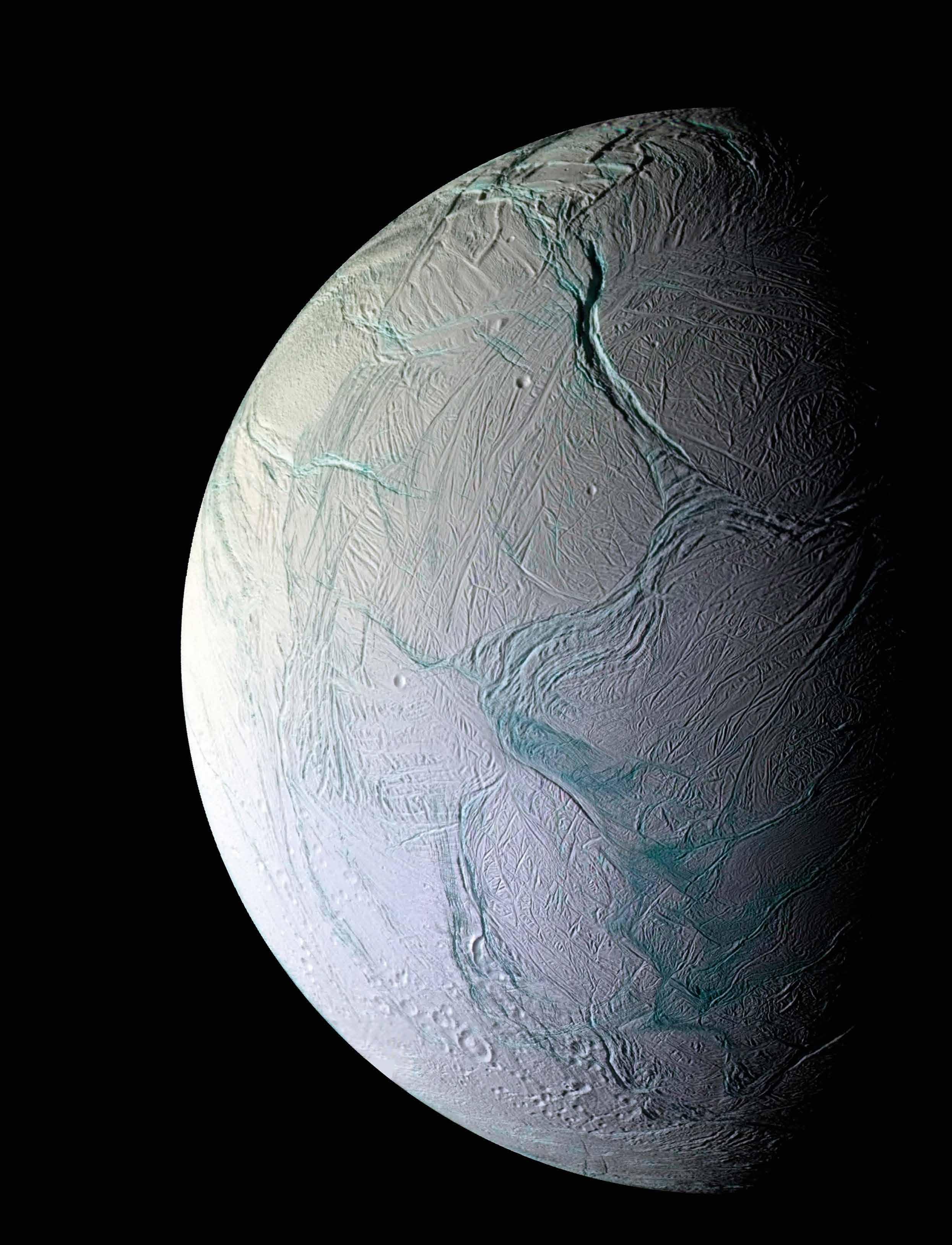

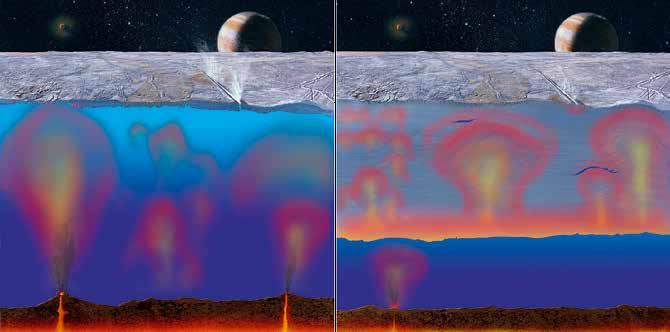

The need for water has made the discovery of so many hidden subsurface oceans on moons in our own solar system tantalising for scientists. Is extraterrestrial life closer to home than we dare to imagine? By Richard Forsyth.

The role of the European Central Bank has changed dramatically since 2010. These changes parallel the evolution of federal government institutions in the US following the New Deal, argues Dr Christakis Georgiou.

Thermoelectric materials could play a major role in addressing energy sustainability concerns. The UncorrelaTEd project aims to improve the efficiency of these materials and unlock their potential, as Dr Jorge García-Cañadas explains.

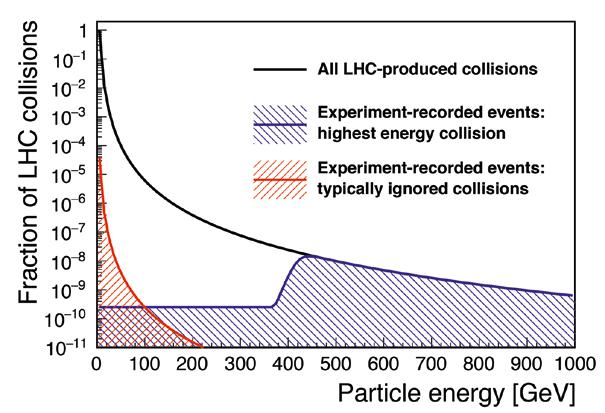

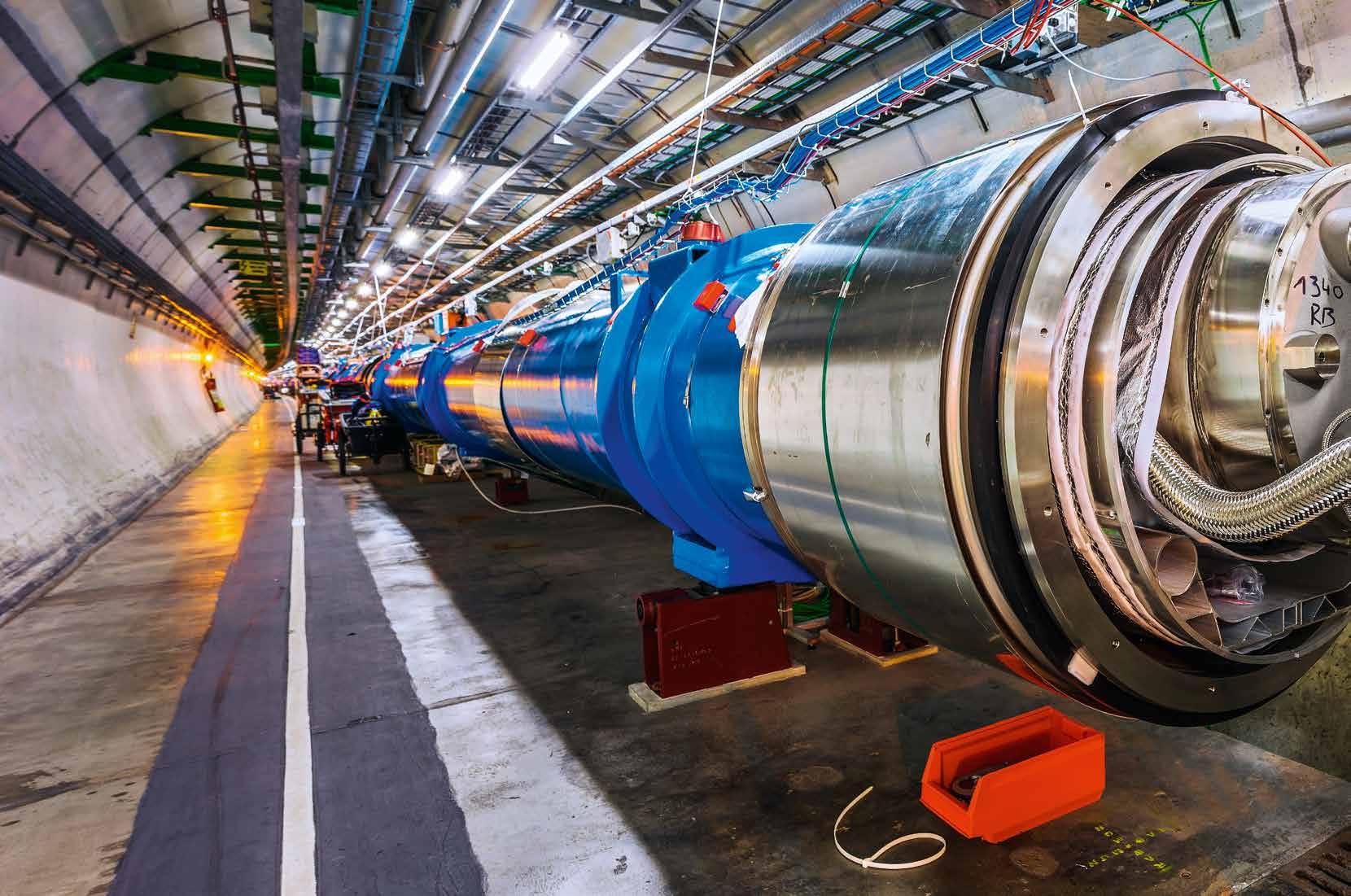

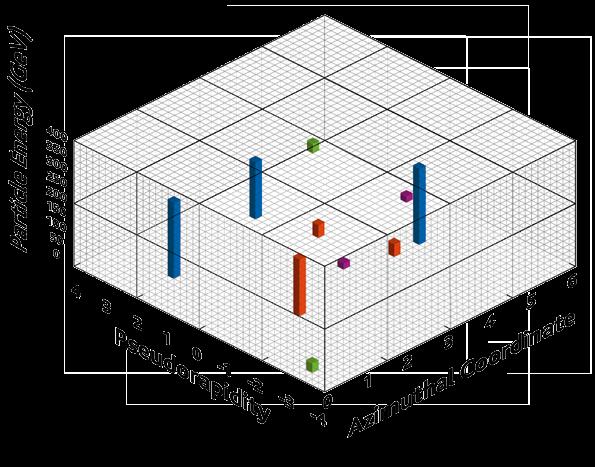

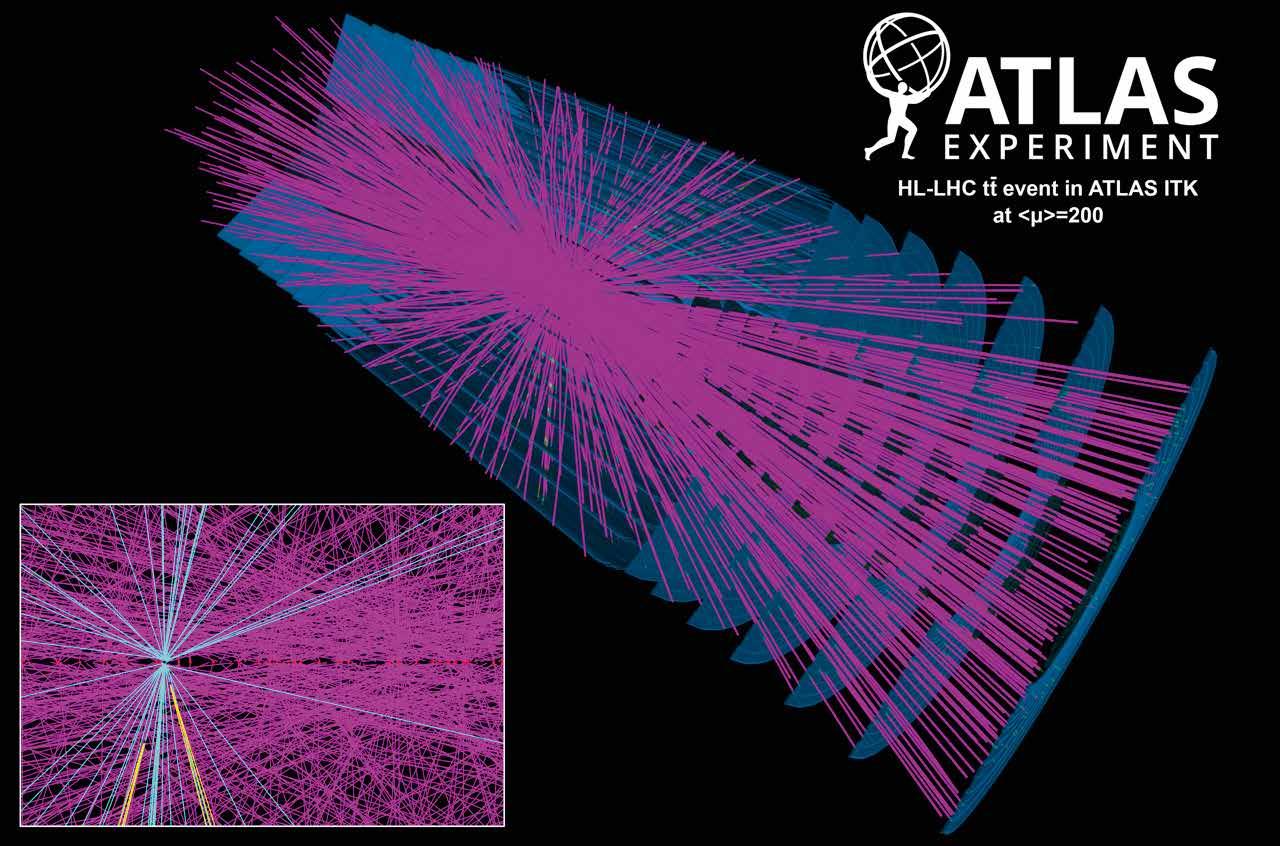

Steven Schramm and his team of researchers in the DISCOVERHEP project are searching for evidence of new physics in previously ignored ‘noise’ data of Large Hadron Collider experiments.

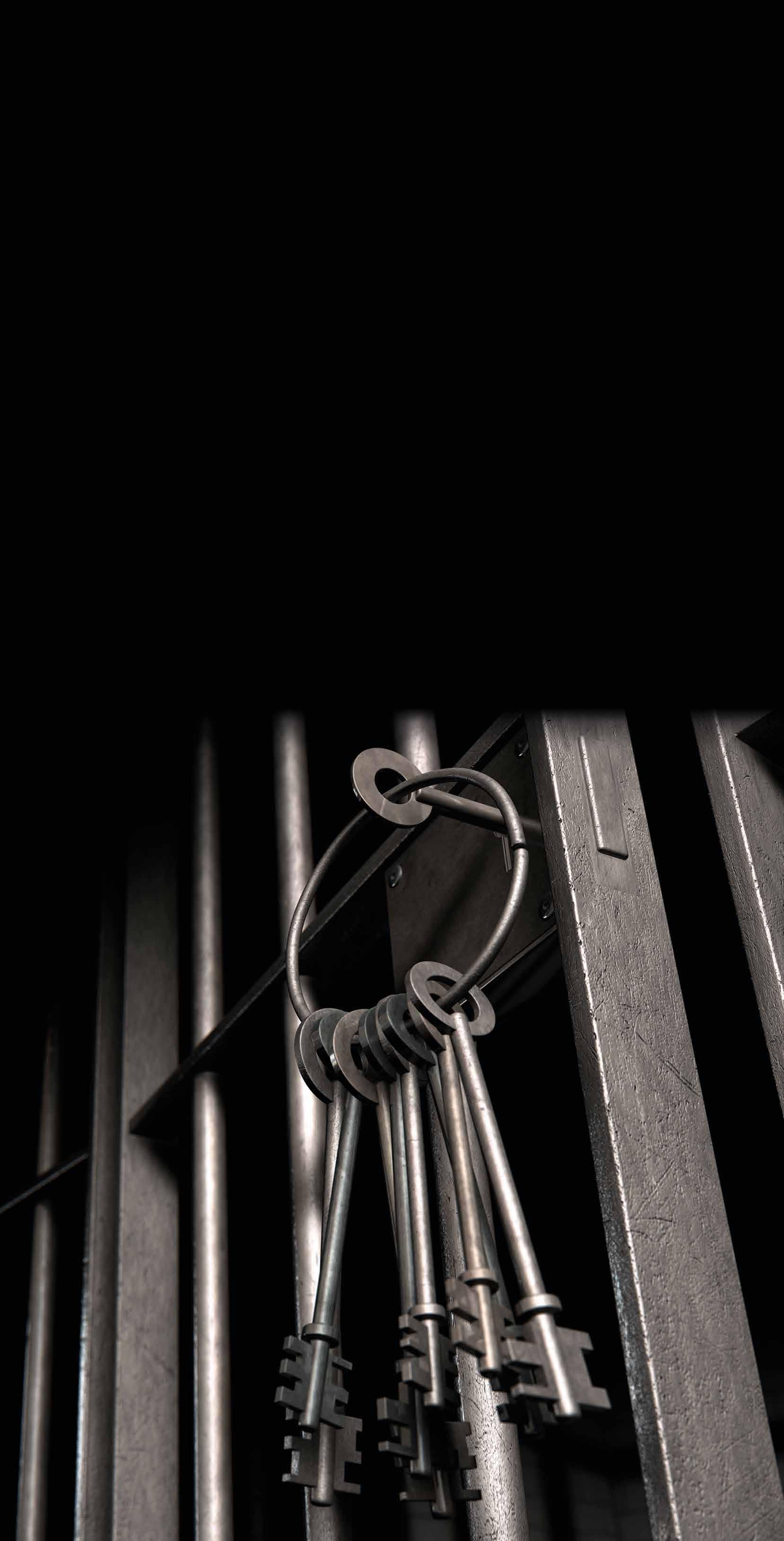

At least one third of released individuals return to prison within two years. Dr Anke Ramakers is analysing the life courses of a group of released prisoners in the Netherlands.

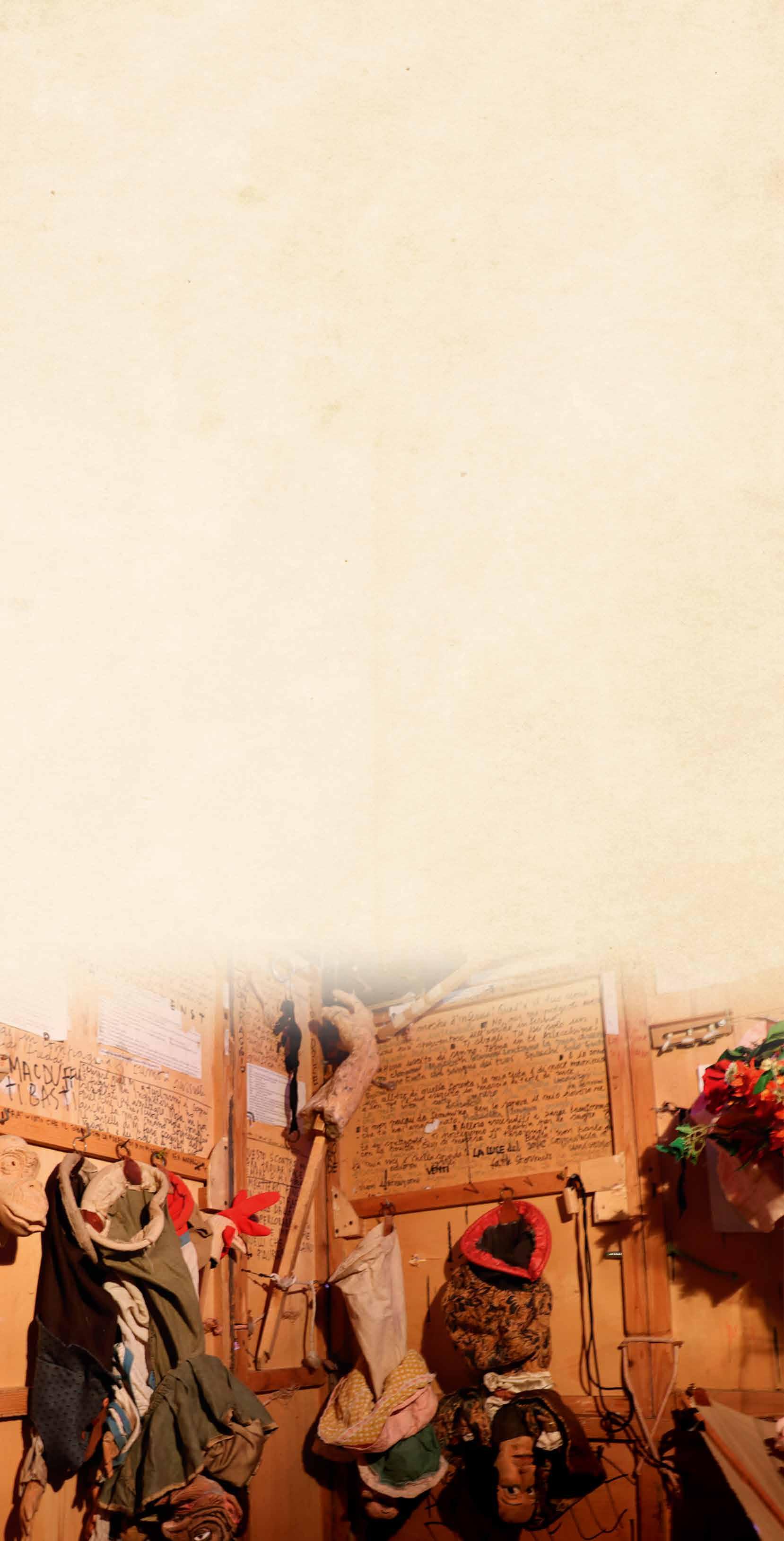

The Traction project developed new technologies and used co-creation to involve citizens in everything from developing stories to creating costumes. This will help renew opera, says Mikel Zorrilla.

PuppetPlays

Didier Plassard and Carole Guidicelli of the PuppetPlays project are painstakingly piecing together the evidence, manuscripts and source material for puppet and marionette plays to create an open-access online platform.

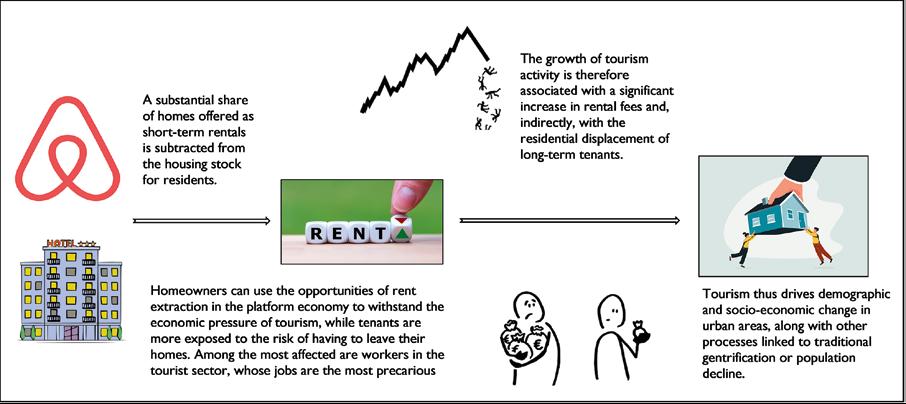

We spoke to Dr Antonio Paolo Russo and Dr Riccardo Valente about the SMARTDEST project’s work in analysing the social impact of short-term rentals, and the relationship between tourism and urban policy.

Many young people face great challenges in finding employment and affordable accommodation in certain European cities. Éva Gerőházi told us about the UPLIFT project’s work investigating socio-economic inequalities.

EUROSHIP

We spoke to Professor Rune Halvorsen about the work of the EUROSHIP project in building a deeper picture of social inequalities between countries and regions in Europe.

We spoke to Dr Morten Bøås about the PREVEX project’s work in investigating the factors behind outbreaks of violent extremism, with the ultimate goal of helping prevent it.

Artificial intelligence (AI) tools could help the transition towards more personalised treatment of disease, a topic Professor Fiorella Guadagni and her colleagues in the REVERT project are addressing.

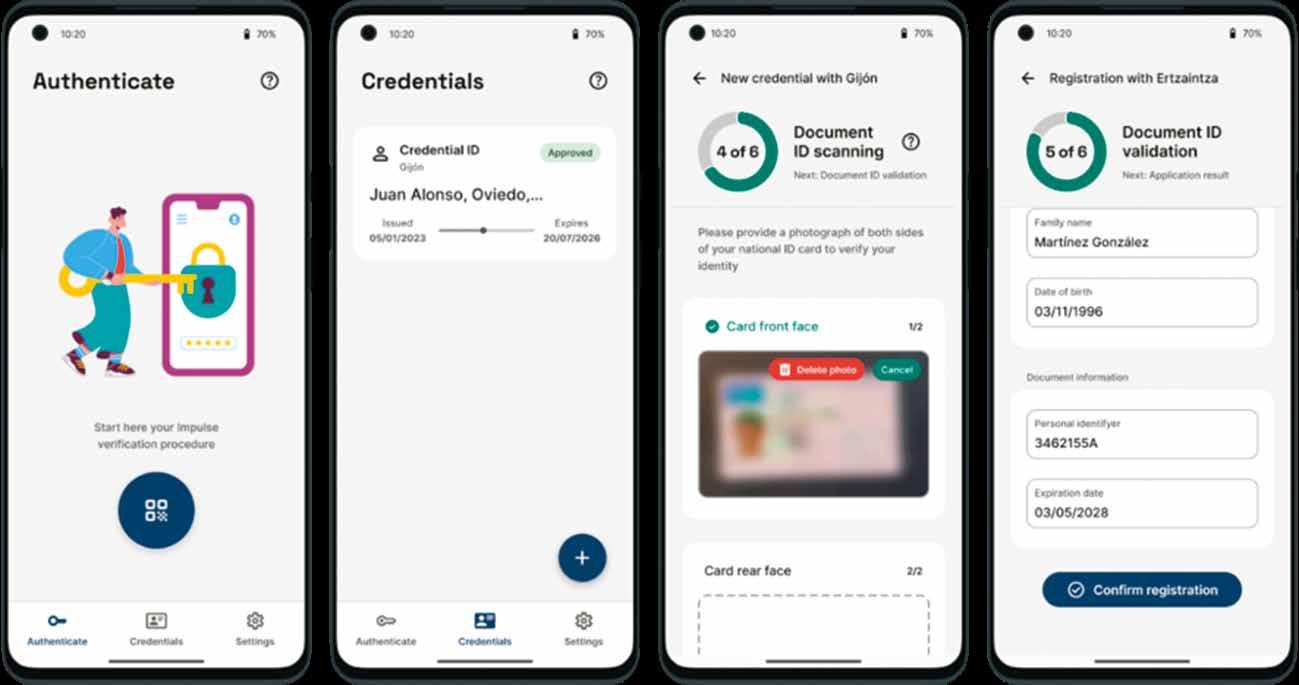

IMPULSE

Ms Alicia Jiménez González describes how the IMPULSE project is using the latest technologies to enable people to access public services in Europe in a more secure and accessible way.

SpinENGINE

Specialized computer chips may in the future be based on magnetism and have an architecture inspired by the brain, a topic at the heart of Professor Erik Folven’s work in the SpinENGINE project.

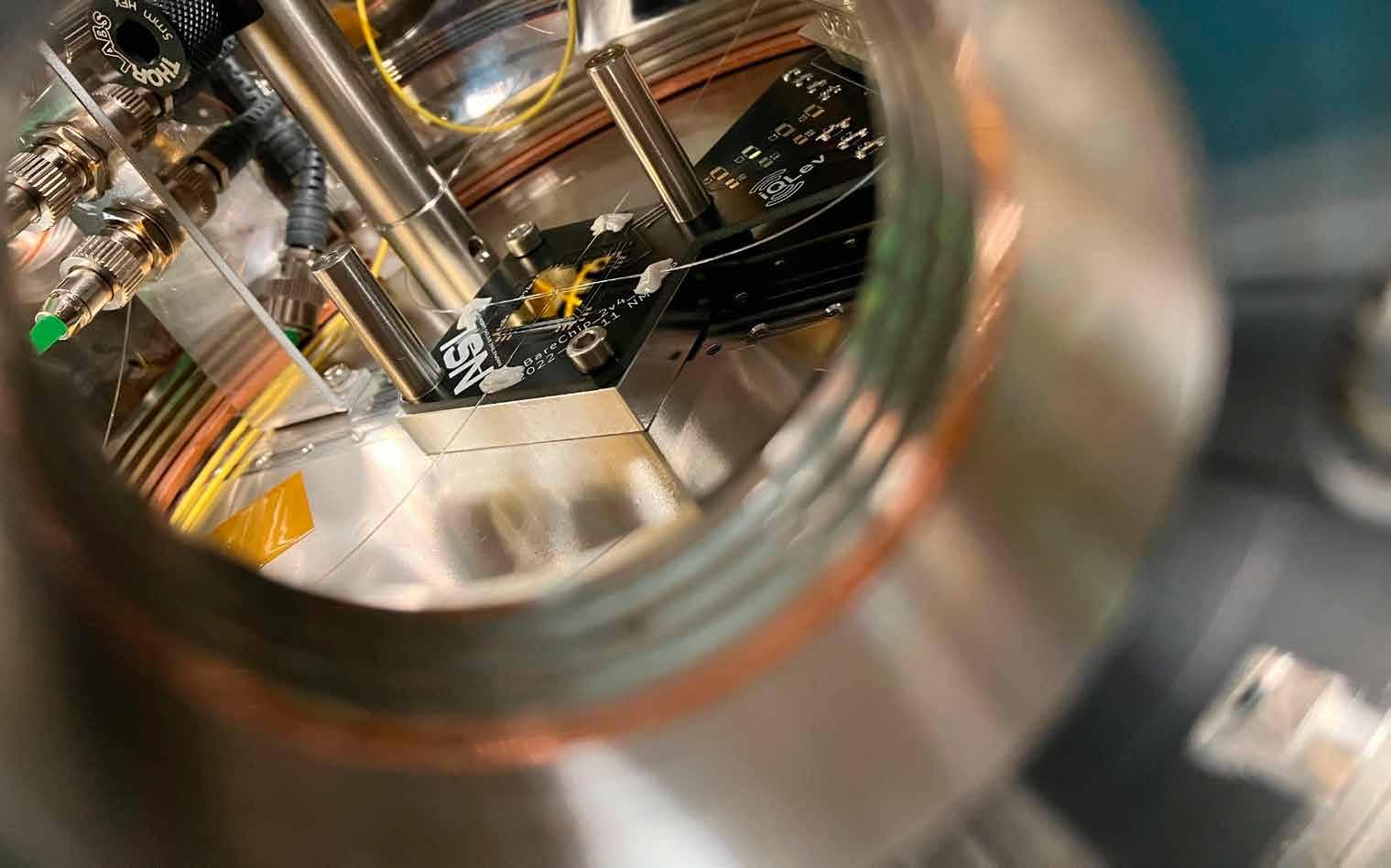

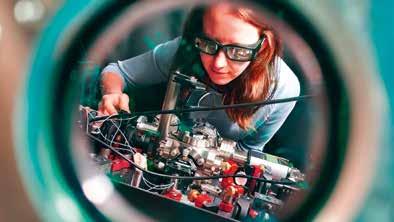

iQLev

Researchers in the IQLev project are investigating different levitation platforms, looking to develop a new type of high-performance inertial sensor, as Dr Joanna Zielinska explains.

CSI-COP

The CSI-COP project aims to heighten awareness of what information is being gathered about us online, as Dr Huma Shah and Professor Ian Marshall explain.

COPA EUROPE

The COPA Europe Project is developing a distribution platform to help companies produce more engaging content and broaden the audience for sports and eSports events, as George Margetis explains.

COMPLEX

Dr Klaus Dolag and his colleagues in the COMPLEX group aim to include plasma-physical effects in hydrodynamical simulations of galaxy clusters, which could lead to new insights into how they form and evolve.

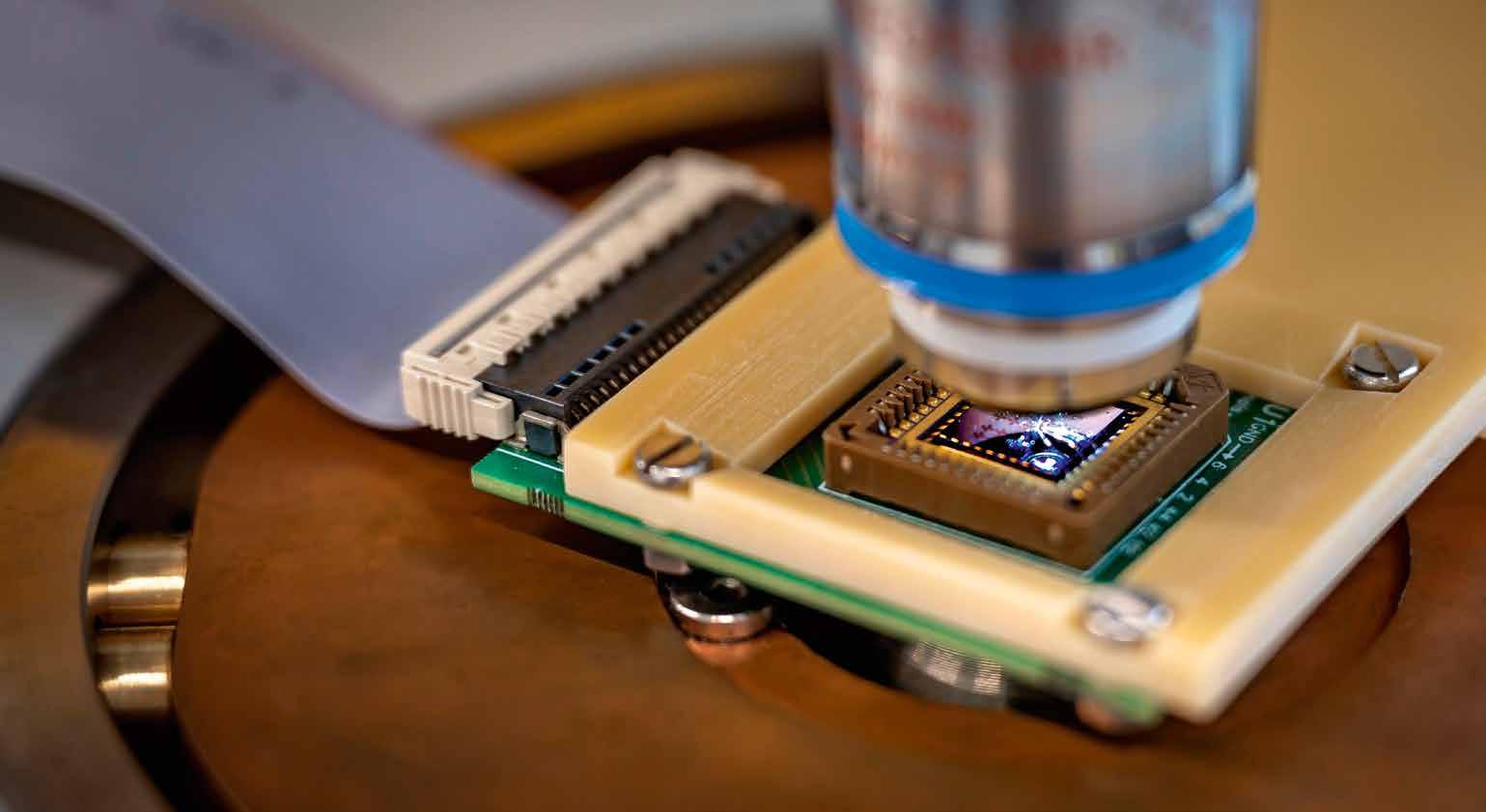

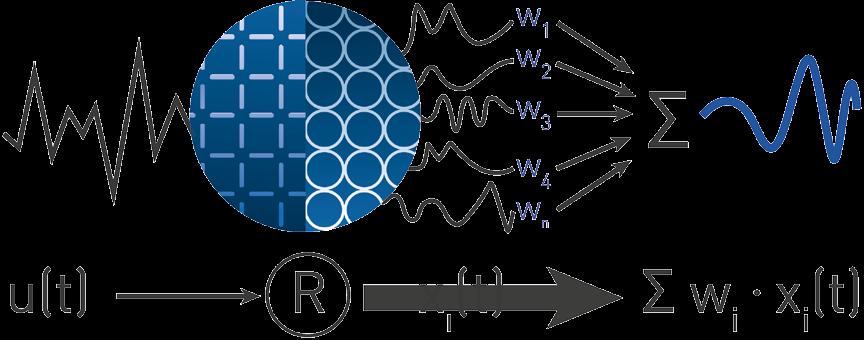

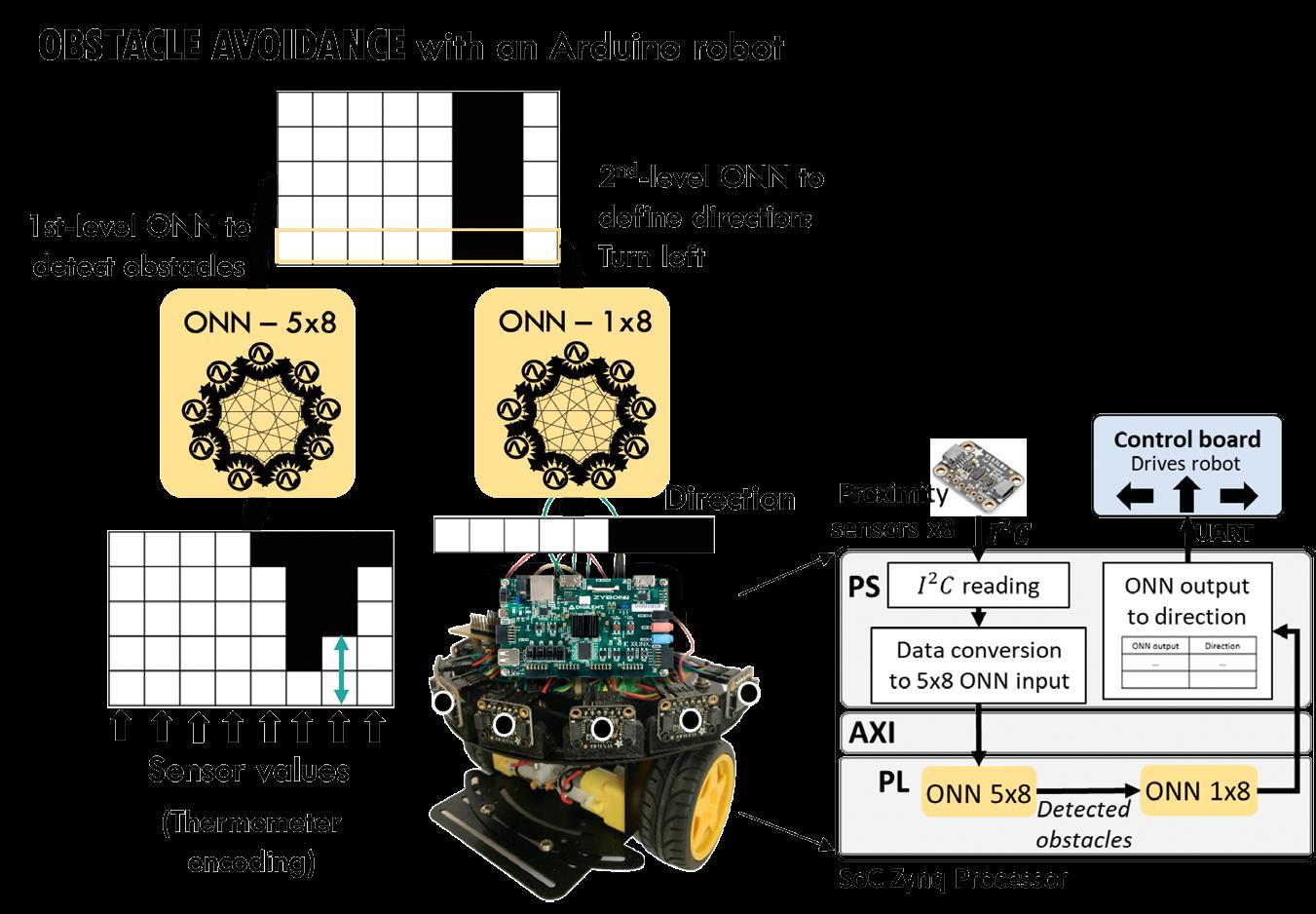

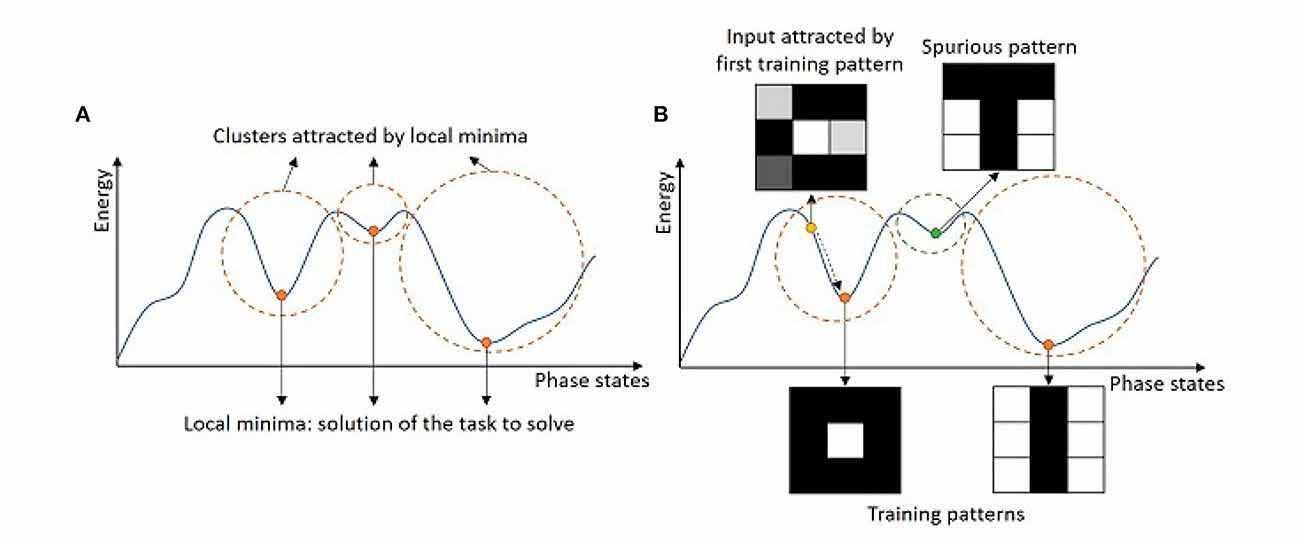

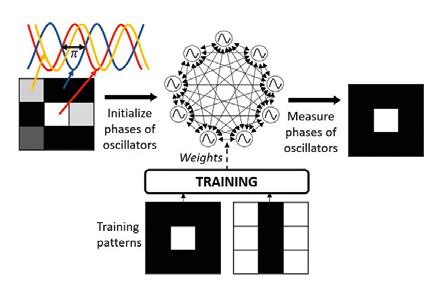

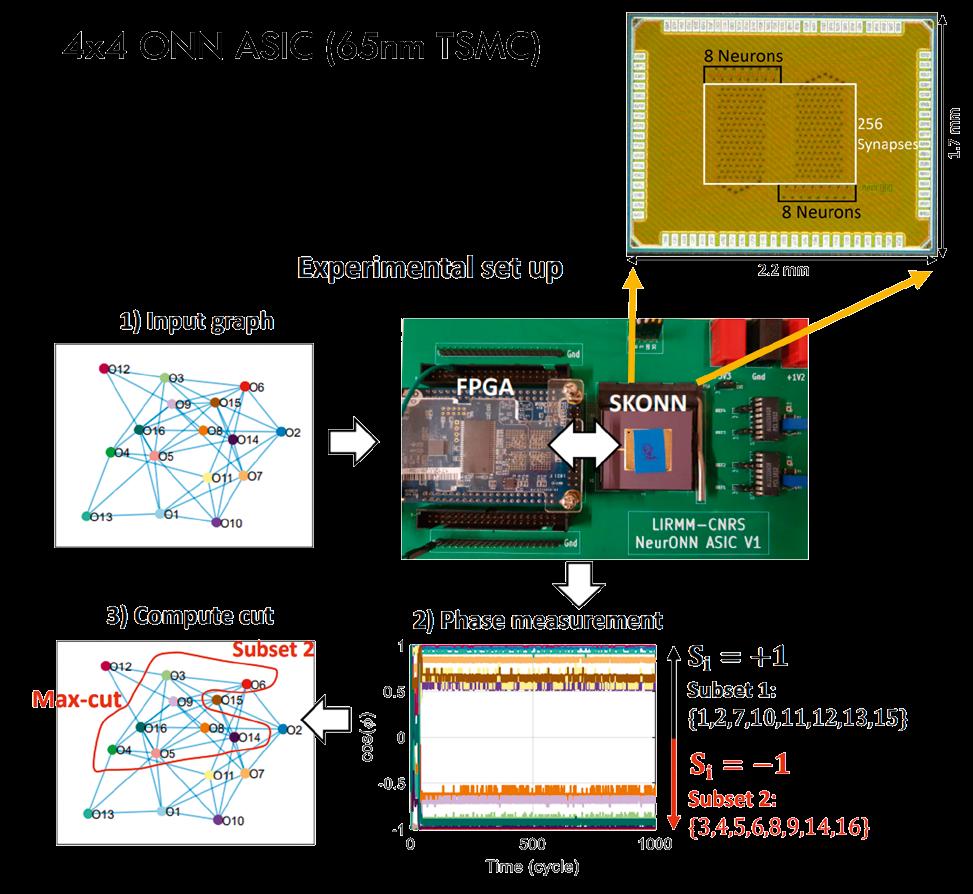

NeurONN

Researchers in the NeurONN project are working to develop a novel computing paradigm inspired by the human brain, as Professor Aida Todri-Sanial explains.

EDITORIAL

Managing Editor Richard Forsyth info@euresearcher.com

Deputy Editor Patrick Truss patrick@euresearcher.com

Science Writer Nevena Nikolova nikolovan31@gmail.com

Science Writer Ruth Sullivan editor@euresearcher.com

PRODUCTION

Production Manager Jenny O’Neill jenny@euresearcher.com

Production Assistant Tim Smith info@euresearcher.com

Art Director Daniel Hall design@euresearcher.com

Design Manager David Patten design@euresearcher.com

Illustrator Martin Carr mary@twocatsintheyard.co.uk

PUBLISHING

Managing Director Edward Taberner ed@euresearcher.com

Scientific Director Dr Peter Taberner info@euresearcher.com

Office Manager Janis Beazley info@euresearcher.com

Finance Manager Adrian Hawthorne finance@euresearcher.com

Senior Account Manager Louise King louise@euresearcher.com

EU Research Blazon Publishing and Media Ltd 131 Lydney Road, Bristol, BS10 5JR, United Kingdom

T: +44 (0)207 193 9820

F: +44 (0)117 9244 022

E: info@euresearcher.com www.euresearcher.com

© Blazon Publishing June 2010

ISSN 2752-4736

The EU Research team take a look at current events in the scientific news

A Bulgarian member of the European Court of Auditors, Iliana Ivanova, is to become the EU’s new commissioner for research and innovation, the European Commission announced on Wednesday evening. The announcement comes after a few weeks of speculation over who will replace outgoing commissioner Mariya Gabriel, who resigned her post in May to form a new government coalition in her home country of Bulgaria. The newly formed Bulgarian government coalition had put forward two candidates for the job, but Commission President Ursula von der Leyen picked Ivanova over Daniel Lorer, a former tech investor who had a nine-month stint as minister for innovation and growth last year in Bulgaria.

Von der Leyen wanted to keep intact the existing gender balance in the college of commissioners. The political rumour mill in Brussels and in Sofia was already placing bets on other women candidates, including Bulgarian MEP Eva Maydell. Von der Leyen said both Ivanova and Lorer have relevant experience for the post and “showed great commitment to the European Union and the job of Commissioner.”

Ivanova has been a member of the European Court of Auditors since 2013. In 2009 she was elected to the European Parliament where she was vice chair of the budgetary control committee. “Her experience is crucial in carrying forward the implementation of the EU’s flagship research programme, Horizon Europe, to enhance the performance of EU’s research spending and achieve a better impact on the ground,” said Von der Leyen in a statement late Wednesday.

After Gabriel’s resignation, commission vice-president Margrethe Vestager had temporarily taken over the innovation and research part of Gabriel’s portfolio, while vice president Margaritis Schinas was looking after education, culture and youth. The news that Vestager took over the innovation and research portfolio following Gabriel has been met with mixed feelings in the research community, as she showed more interest in competition policy and certain aspects of deep tech innovation, and less so in EU research funding.

In addition, the research community feared Bulgaria would not be able to end its political turmoil and appoint a commissioner ahead of the upcoming EU elections. These fears grew further last week when Vestager announced that she is seeking to leave her post at the commission for the top job at the European Investment Bank at the end of the year. Gabriel is now serving as deputy prime minister under Nikolay Denkov, a chemist and member of the country’s Academy of Sciences. She is due to put on the prime minister’s hat after nine months, according to a power rotation agreement within the new coalition.

Nomination of EU Court of Auditors member should end uncertainty over who is in charge of Horizon planning, after the resignation of Mariya Gabriel.

The EU and African Union will adopt a joint innovation roadmap. A group of around 2,000 universities are calling for it to come with funding attached.

Universities are calling on policymakers to pilot a science fund for research and innovation cooperation between the African Union and the EU. The two blocs are set to deepen their long-term R&I cooperation on 13 June with the signing of the AU-EU Innovation Agenda, which promises to accelerate talent circulation, help develop research infrastructures and foster the emergence of joint centres of excellence. Universities want the ambition to be backed with dedicated funding, but this isn’t likely to materialise in the next few years, because the EU does not start its next budget cycle until 2028. However, the universities hope a joint fund could be trialled from 2023 onwards.

“Last year, the AU-EU summit put science cooperation at the core of the strategic partnership, and it’s exciting because it envisions a new more sustainable, more equitable way to do science,” said Jan Palmowski, secretary general of the Guild of European Research-Intensive Universities, one of the university associations leading the call. “We need to be realistic but ambitious for the next few years.” There are four priority areas for science cooperation: the green transition; innovation and technology; public health; and capacities for science and higher education. But to make it a success, the two blocs should build up the capacity for cooperation along three strands, the universities argue.

The first one is providing support to individual researchers. This has already been piloted under the Arise pilot programme, which provides European Research Council-style support for early-career researchers. The group wants this pilot to be extended to 2027, in addition to new funding schemes for mid-career and senior researchers. Second, universities ask for investment in joint centres of excellence which would integrate universities and research organisations across Africa and Europe, enabling joint research. Third, they want to see investment in research infrastructures to tackle shortages of appropriate equipment, laboratories and buildings at African universities and research organisations. Better infrastructures would help fight brain drain and speed up innovation.

“What connects these three areas is the focus on strengthening the science system and connecting these three strands,” says Palmowski. But it won’t be easy to push the idea through the Brussels policy mill, with many Commission directorates, from research to international partnerships, involved. “It’s a complex picture, but to do nothing is simply not an option,” he said. And it’s not just about strengthening research collaboration with African countries. The new innovation agenda dictates a new way of managing science cooperation; if successful, it could apply beyond Africa. “This is a really important test bed in how the EU can spearhead new types of collaborative partnerships,” Palmowski said.

Spain wins 12 of 66 grants awarded in European Research Council’s proof of concept funding round.

Spain has been awarded the most grants in Europe in the European Research Council’s latest proof of concept funding round, picking up 12 grants out of the 66 in total. Second-placed Germany won nine grants, and the UK and Italy won eight and seven respectively. Spain’s 12 winners cover a range of topics, including treating metastatic cancer and next-generation vaccine development. Three of the winners come from the Spanish National Research Council.

Proof of concept grants, worth €150,000, allow researchers to explore commercialisation prospects of basic research they carried out with an earlier ERC grant. Spain also did well in an earlier round of proof of concept funding announced in May, picking up eight of 66 grants, fewer than only Germany and the UK. There will be one more round in 2023, with the ERC handing out a total of €30 million for the year. The awards are a sign that Spanish science is bouncing back after huge cuts were made to the science budget following the financial crisis of 2008. In the past few years Spain has been making an effort to restructure and rebuild and has moved up to be the number three participating country in the EU’s €95.5 billion Horizon Europe research programme, securing €2.34 billion to date. Only Germany and France are ahead in absolute terms.

Spain-based researchers performed above average in securing proof of concept grants in this round. Of 183 applications, 66 will get funding, a success rate of 36%. Spain put forward 29 proposals with 12 accepted, a success rate of just over 41%. The news was not so good for institutions in the EU Widening countries, which have weaker research and innovation systems. Of the 15 countries, only Portugal and the Czech Republic won a single grant each. The Czechia-based winner is Michal Otyepka of Palacký University Olomouc, whose project is on functionalised graphenes for ink technologies. Meanwhile, the project of Ana Rita Cruz Duarte of NOVA University of Lisbon is on ways to improve the shelf life of perishable goods through the stabilisation of vitamins.
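For readers who want to check the success rates quoted above, the arithmetic can be reproduced in a few lines of Python. This is only an illustrative sketch: the function name `success_rate` is our own and is not part of any ERC tool, and the inputs are simply the application and grant counts given in the text.

```python
def success_rate(funded: int, applications: int) -> float:
    """Return the share of applications that were funded, as a percentage."""
    if applications <= 0:
        raise ValueError("applications must be positive")
    return 100.0 * funded / applications


# Counts quoted in the article for this proof of concept round.
overall = success_rate(66, 183)  # all applicants
spain = success_rate(12, 29)     # Spain-based applicants only

print(f"Overall: {overall:.1f}%")  # Overall: 36.1%
print(f"Spain:   {spain:.1f}%")    # Spain:   41.4%
```

On these figures, Spain-based applicants finished roughly five percentage points above the overall rate.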

Floods, fires and heavy rains have landed more blows across Europe this week, with the authorities on the continent scrambling to respond to the extreme weather that has become increasingly common in the past few years. The most recent events have destroyed large amounts of land, left dozens of people injured, forced thousands to evacuate and, in some cases, caused deaths, and they come on the heels of scorching temperatures that have engulfed much of Southern Europe this summer. Climate change has made extreme heat a fixture of the warmer months in Europe, but experts say that the continent has failed to significantly adapt to the hotter conditions. Governments in many countries are now struggling to address the devastating effects. “The extreme weather conditions across Europe continue to be of concern,” Roberta Metsola, the president of the European Parliament, wrote on the social media platform X, formerly known as Twitter. “The EU is showing solidarity with all those in need.”

Heavy rains in recent days have led rivers to overflow across Slovenia in what the authorities there said was the worst natural disaster since the country’s independence in 1991. At least six people have died, according to the Slovenian news agency STA, and thousands have been forced to flee their homes to escape the floods. Entire villages have been left underwater, and huge rivers of mud have filled roads and sports fields and flowed below collapsed bridges, with cars stuck in the debris of landslides caused by the flooding. Ursula von der Leyen, head of the European Commission, the European Union’s executive arm, said she would travel to Slovenia on Wednesday.

Europe is battling the effects of scorching temperatures reaching worrying levels globally, with July being the hottest month recorded on both land and sea. Last year, heatwaves resulted in over 61,600 heat-related fatalities across 35 European countries and triggered devastating wildfires. This year, the temperatures could exceed Europe’s current record of 48.8 degrees Celsius, recorded in Sicily in August 2021. Elsewhere, the European Union has sent firefighting planes to assist with efforts to tackle wildfires burning on Cyprus in recent days; Greece, which has also been plagued by wildfires this summer, has sent liquid flame retardant to the island to help. Israel has also provided aid, including firefighting planes, a crew of four pilots and ground crews. Jordan and Lebanon also sent support. Heavy rains have been recorded in Norway and Sweden this month, causing the derailment of a train on Monday that left three people injured in eastern Sweden. The police said that the deluge had undermined the embankment where the accident occurred, causing it to collapse.

More downpours were expected in both countries. The Swedish meteorological and hydrological institute said that the amounts of rain that have fallen were unusually high for August in many locations. “Quite a few places have received more rain in one day than you normally get in the entire month of August,” said Ida Dahlstrom, a meteorologist with the Swedish meteorological institute. She added that the city of Lund, in Southern Sweden, had not received so much rain in one day for more than 160 years.

On the other side of the world, Hurricane Dora has contributed to the wildfires in Maui, Hawaii. The strong winds associated with the category 4 storm, which passed to the south of the island chain earlier this week, fanned the wildfires, allowing them to spread faster and further. The fires have destroyed many towns and villages, and in some places people were forced to jump into the ocean to escape. Dozens of deaths have been reported.

North of the European continent records unusually wet and windy summer conditions while Portugal and Spain battle flames.

Delays in European legislation relating to green hydrogen have led energy companies to hold back research projects and look to the US, where the Inflation Reduction Act is providing massive incentives.

Talks on promoting green hydrogen through the Renewable Energy Directive were cancelled on Tuesday 7 February, with MEP Markus Pieper claiming the European Commission had failed to send important information about how much extra renewable energy is needed to produce green hydrogen via electrolysis, in order not to reduce the amount of renewable electricity currently entering electricity grids. The rules on so-called ‘additionality’ were due in December 2021, but the Commission failed to deliver. Interference from member states, especially Germany and France, has been blamed for the continuing delays. The hydrogen industry is waiting for clarification on these points as well as whether renewable hydrogen or ‘Renewable Fuels of Non Biological Origin’ will be linked to the EU’s targets. The lack of direction from the EU has left researchers unsure of where to focus their efforts.

The delays in regulation will have a knock-on effect on how fast green hydrogen production can progress in Europe. In a letter to EU leaders in October 2022, the hydrogen industry warned that an urgent increase in renewable electricity is needed to power green hydrogen in line with the EU’s green ambitions.

As it stands, hydrogen represents a modest fraction of the global and EU energy mix, and is still largely produced from fossil fuels, notably from natural gas or from coal, resulting in the release of 70 to 100 million tonnes of CO2 annually in the EU. The EU’s decarbonisation ambitions would favour green hydrogen – or hydrogen produced from renewable energy, completely carbon-free – as an end-goal, but it’s widely accepted that blue hydrogen will be a stepping stone on the way to a green hydrogen energy system. Unlike green hydrogen, the energy needed to produce blue hydrogen comes from fossil fuels, but then carbon capture and storage will cut emissions.

However, blue hydrogen comes with a suite of problems – it often runs on the highly potent greenhouse gas methane, which can leak from carbon capture facilities. One study suggests that blue hydrogen may in fact be 20% worse than just using natural gas. “Blue hydrogen is largely failing and largely unproven,” says Gareth Dale, reader in political economy at Brunel University London. According to Dale, the blue hydrogen route should be scrapped altogether by the EU, with the focus on developing a truly renewable green hydrogen economy instead. Green hydrogen accounts for 0.04% of the global hydrogen mix, but even all of that is not 100% carbon-free, says Dale. “There’s a common tendency to define green hydrogen as any hydrogen produced by electrolysis, but that’s not really green hydrogen,” he says. “If you have a 100% coal powered grid and someone builds a wind farm and produces green hydrogen rather than sending the power to the grid to reduce some of that coal burning, that’s not green at all.” This is where the rules on additionality come into play, and hydrogen researchers need to know exactly how green the Commission expects them to be.

In terms of global hydrogen research trends, a recent report by the European Patent Organisation shows that Europe and Japan remain world leaders in hydrogen innovation according to technology patents. Hydrogen patenting grew even faster in Japan than in Europe during the past decade, with compound average growth rates of 6.2% and 4.5% respectively between 2011 and 2020. But that success may be short-lived in light of the new US Inflation Reduction Act. The Act gives clean hydrogen plants tax credits for the first 10 years of operation, but these tax breaks are only in place until 2032, meaning that the sooner companies apply for the scheme, the better. This adds an element of time pressure which may entice European companies to ditch their European R&I efforts as these delays continue and set up shop on the other side of the Atlantic instead. “There is a clear risk that the IRA will attract European green companies to the USA,” says Wallentin. RWE also says that the new IRA opens up attractive opportunities for them, but that this will not be at the expense of their European projects.

Hydrogen research hits regulatory roadblocks in Europe, as companies look to the United States

A new view of a record-shattering distant star shows it to be twice as hot as our sun, and likely accompanied by a stellar companion.

Astronomers recently set their sights on Earendel, the most distant star ever discovered, which is so far away that the starlight that the James Webb Space Telescope (JWST) caught was produced in the first billion years of the universe’s 13.8 billion-year history. Previous calculations put the star’s distance from Earth at 12.9 billion light-years, but due to the expansion of the universe and the distance light has travelled to get to us, astronomers now believe Earendel is actually 28 billion light-years away.

The name of the star, Earendel, which was first discovered by the Hubble Space Telescope in 2022, comes from Old English terms that denote “morning star” or “rising light.” According to Webb’s observations, Earendel is a huge B-type star, more than twice as hot as the sun and a million times more luminous. The star, which belongs to the Sunrise Arc galaxy, was only visible because the huge galaxy cluster WHL0137-08, located between Earth and Earendel, magnified the far-off object. This process is known as gravitational lensing, a phenomenon that happens when nearby objects function as magnifying glasses for more distant ones. The light of faraway background galaxies is effectively warped and magnified by gravity. In this instance, the galaxy cluster dramatically increased the brightness of Earendel’s light.

While astronomers weren’t expecting to be able to see a companion star for a massive star like Earendel, the colours picked up by Webb point to the possibility of a cool, red companion star. Webb was able to see details in the Sunrise Arc galaxy by looking into the furthest reaches of the universe and observing in infrared light, which is invisible to the human eye. Small star clusters and regions of star birth were discovered by the space observatory. The actual distance of the Sunrise Arc galaxy is still being ascertained by astronomers through further analysis of the data from Webb’s observation.

Astronomers can learn more about the early universe and get a peek at what our Milky Way galaxy might have looked like billions of years ago by studying extremely distant stars and galaxies that formed closer to the big bang. For astronomers, Webb’s capacity to investigate such a far-off, tiny object is encouraging. The first stars that formed from unprocessed substances like hydrogen and helium shortly after the universe’s creation may one day be seen.

© NASA, ESA, CSA, D. Coe (STScI/AURA for ESA; Johns Hopkins University), B. Welch (NASA’s Goddard Space Flight Center; University of Maryland, College Park). Image processing: Z. Levay. Circled: Experts were able to find Earendel as a faint red dot below a cluster of distant galaxies.

Analysis indicates ingested microplastics migrate into whales’ fat and organs.

Microscopic plastic particles have been found in the fats and lungs of two-thirds of the marine mammals in a graduate student’s study of ocean microplastics. The presence of polymer particles and fibres in these animals suggests that microplastics can travel out of the digestive tract and lodge in the tissues. The samples in this study were acquired from 32 stranded or subsistence-harvested animals between 2000 and 2021 in Alaska, California and North Carolina. Twelve species are represented in the data, including one bearded seal, which also had plastic in its tissues.

“This is an extra burden on top of everything else they face: climate change, pollution, noise, and now they’re not only ingesting plastic and contending with the big pieces in their stomachs, they’re also being internalized,” said Greg Merrill Jr., a fifth-year graduate student at the Duke University Marine Lab. “Some proportion of their mass is now plastic.”

Plastics are attracted to fats -- they’re lipophilic -- and so are believed to be easily attracted to blubber, the sound-producing melon on a toothed whale’s forehead, and the fat pads along the lower jaw that focus sound to the whales’ internal ears. The study sampled those three kinds of fats plus the lungs and found plastics in all four tissues. Plastic particles identified in tissues ranged on average from 198 microns to 537 microns -- a human hair is about 100 microns in diameter. Merrill points out that, in addition to whatever chemical threat the plastics pose, plastic pieces also can tear and abrade tissues.

Polyester fibres, a common byproduct of laundry machines, were the most common in tissue samples, as was polyethylene, which is a component of beverage containers. Blue plastic was the most common colour found in all four kinds of tissue. A 2022 paper in Nature Communications estimated, based on known concentrations of microplastics off the Pacific Coast of California, that a filter-feeding blue whale might be gulping down 95 pounds of plastic waste per day as it catches tiny creatures in the water column. Whales and dolphins that prey on fish and other larger organisms also might be acquiring accumulated plastic in the animals they eat.

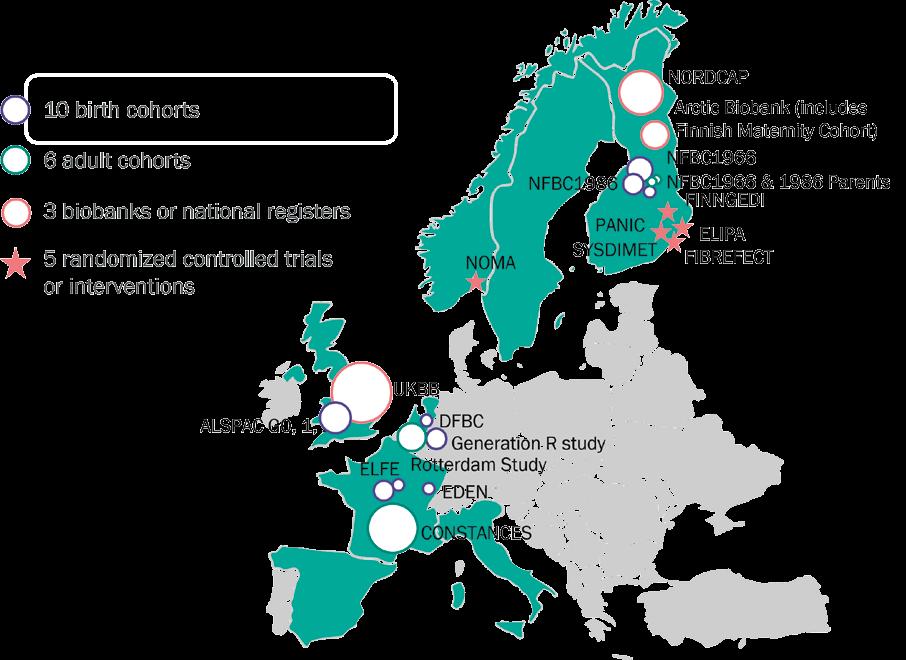

We spoke to Professor Sylvain Sebert about LongITools, a project that studies how environmental, biological, and lifestyle factors affect cardiovascular and metabolic diseases. As a part of the European Human Exposome Network, LongITools addresses exposome research challenges, develops models, and collaborates with policymakers for evidence-based recommendations.

The exposome is defined as the measure of all an individual’s environmental exposures in a lifetime and how those exposures relate to health. These factors include a wide range of exposures such as pollution, infections, chemicals, diet, urban and natural environments, socioeconomic factors, and lifestyle. Researchers studying the exposome aim to discover the cumulative effects of multiple environmental factors on health outcomes. This holistic approach moves away from the traditional “one exposure, one disease” approach.

The concept of the exposome, while fascinating, presents several challenges. One of the main difficulties lies in the assessment of the exposome itself. To accurately capture the many dynamic environmental exposures that influence health, researchers must consider hundreds of factors. Capturing the exposome requires numerous measurements using different technologies, making it complex and costly. Another challenge is establishing the nature of the association between multiple exposures and health outcomes. The multi-dimensional correlation structure across the exposome (e.g., air pollution is not independent from other risk factors) makes it difficult for existing statistical methods to accurately identify which and how exposures truly impact health and separate them from correlated exposures. However, ongoing exposome projects are working to overcome these challenges, aiming to improve our understanding of environmental risk factors and develop better prevention strategies.

LongITools is part of the European Human Exposome Network (EHEN), the largest network of research projects investigating the impact of environmental exposure on human health. It brings together nine projects involving 126 organizations across 24 countries, and it has received €106 million in funding from the European Commission’s Horizon 2020 program. Each project has a different focus, but all are investigating multiple exposures including air quality, noise, chemicals, and urbanization, and their association with various health outcomes like cardiovascular diseases, mental health disorders, and respiratory diseases.

The LongITools consortium has access to a vast collection of life-course data, including studies on birth cohorts, longitudinal studies in adults, register-based cohorts, randomized controlled trials (RCTs), and biobanks. These data sets encompass information from 24 studies involving over 11 million citizens from across Europe. They cover a wide range of variables such as height, weight, blood composition, employment, lifestyle factors, cholesterol, and more. A key objective of LongITools is to create a catalogue of longitudinal data sets (life-course cohorts and randomized clinical trials) within a findable, accessible, interoperable, and reusable (FAIR) data infrastructure. This infrastructure will facilitate data discovery, access, and collaboration among longitudinal cohorts and clinical studies in Europe. To achieve this, a metadata catalogue of harmonized variables has been developed in collaboration with multiple past and ongoing European projects. The ‘European Networks Health Data and Cohort Catalogue’ provides comprehensive information on the data sets, such as type, population, number of participants, and variables. It includes a findability function enabling users to access rich metadata about the datasets, a harmonization mapping system, manuals to explain to users how to use the catalogue, and standard operating procedures. The catalogue is integrated into the MOLGENIS FAIR data platform.

Since all of these studies have been built independently from each other, there are variabilities in the way the data has been collected. Using more data increases statistical power and considers diverse populations and environments. However, combining different studies or data sets for specific research questions can be challenging. To overcome complexities, the project uses data harmonization to make variables consistent and comparable across different sources. This facilitates combining and analyzing data from multiple studies, ensuring reliability and applicability in research. The researchers hope that the sustainable catalogue approach will inspire other consortia to participate and promote multi-center exposome and health research in Europe. By enhancing the FAIRness of available data, along with other EHEN projects, they strive to accelerate research advancements.
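As a rough illustration of what harmonization involves, the Python sketch below maps records from two cohorts onto one shared set of variable names and units. The cohort names, variable names and units are assumptions invented for the example; they are not taken from the LongITools catalogue or the MOLGENIS platform.

```python
# Hypothetical per-cohort mapping: source variable -> (harmonized name, unit conversion).
# Cohort names, field names and units are illustrative assumptions only.
MAPPINGS = {
    "cohort_a": {
        "height_cm": ("height_m", lambda v: v / 100.0),
        "weight_kg": ("weight_kg", lambda v: v),
    },
    "cohort_b": {
        "HEIGHT_IN": ("height_m", lambda v: v * 0.0254),
        "WEIGHT_LB": ("weight_kg", lambda v: v * 0.45359237),
    },
}


def harmonize(cohort, record):
    """Return a record that uses the shared variable names and units."""
    mapping = MAPPINGS[cohort]
    harmonized = {}
    for source_name, value in record.items():
        if source_name not in mapping:
            continue  # variables without a mapping are left out of the pooled data
        target_name, convert = mapping[source_name]
        harmonized[target_name] = round(convert(value), 2)
    return harmonized


# Two made-up participants, one from each cohort, pooled into a single list.
pooled = [
    harmonize("cohort_a", {"height_cm": 180.0, "weight_kg": 75.0}),
    harmonize("cohort_b", {"HEIGHT_IN": 70.0, "WEIGHT_LB": 165.0}),
]
print(pooled)
```

Once both records share the same names and units, they can be analyzed together, which is the step that gives pooled studies their extra statistical power.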

In the LongITools project, the challenges of analyzing the exposome are being addressed by the development of different modeling approaches to study the exposome and its complexities. The question that arises is how to identify what type of data is useful and how to potentially develop and recognize different types of environments. Researchers aim to develop models, known as exposome scores, to analyze the effect of different environmental factors on diseases like obesity or type 2 diabetes. These methods are applied at the population level in multiple countries, including Finland, the Netherlands, and the UK, with efforts to ensure comparability. Longitudinal analysis, which focuses on changes in the environment over time, is also a significant part of the research. Machine learning and other statistical approaches are utilized to analyze the data and uncover dynamics in the environment.
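The article does not describe how the project's exposome scores are calculated. One simple way to think about a composite score, shown here purely as an assumption, is to standardize each exposure and combine the results with weights. The exposure names, values and weights below are invented for illustration.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize a list of values to mean 0 and standard deviation 1."""
    m = mean(values)
    s = pstdev(values)
    return [(v - m) / s if s else 0.0 for v in values]


def exposome_scores(exposures, weights):
    """Weighted sum of standardized exposures, one score per person.

    exposures: dict of exposure name -> list of values (same person order in each list)
    weights:   dict of exposure name -> weight (hypothetical, chosen by the analyst)
    """
    standardized = {name: z_scores(vals) for name, vals in exposures.items()}
    n_people = len(next(iter(exposures.values())))
    return [
        sum(weights[name] * standardized[name][i] for name in exposures)
        for i in range(n_people)
    ]


# Made-up data for three people: fine particulate matter and night-time noise.
exposures = {"pm25": [8.0, 12.0, 16.0], "noise_db": [50.0, 60.0, 55.0]}
weights = {"pm25": 0.6, "noise_db": 0.4}
scores = exposome_scores(exposures, weights)
print([round(s, 3) for s in scores])
```

A higher score here simply means a person sits above the group average on the weighted exposures; how to choose the weights in the presence of correlated exposures is exactly the kind of problem the modeling work has to solve.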

The LongITools Health Risk Assessment System, designed with SMEs, is a modular e-health system used to monitor an individual’s risk of cardiovascular and metabolic diseases. The system comprises three core components: a smartphone app, an environmental hub with remote sensors, and AI-based predictive models. This innovative system allows for longitudinal monitoring of lifestyle and environmental exposure data, providing health risk assessments for individuals and potentially researchers and healthcare professionals. The system is currently being piloted in Italy.

The project aims to share key outputs as widely and as openly as possible. The Exposome Data Analysis Toolbox is a user-friendly online toolbox that enables users to search for and use data analysis tools and visualization methodologies. Researchers will be able to access and use multiple exposome data analysis tools and methodologies via a single platform and interact with them based on their needs and level of expertise. By increasing usability and interoperability, the toolbox will enhance research and support open science.

By collaborating with policymakers and key stakeholders, the project aims to provide opportunities that inform the research activity and facilitate the exchange of knowledge. The expected research outcomes of LongITools address the health needs of the population. By defining disease trajectories that occur due to the association between individual and societal health and the environment, the project hopes to educate policymakers about disease risk factors which could ultimately aid in building resilient environments and evidence-based health systems. The project is developing an Economic Simulation Platform, a policy evaluation tool that focuses on assessing and projecting the economic burden related to non-communicable diseases. For this aim, a validated dynamic microsimulation model is used to evaluate “what if” scenarios. This tool is of great importance for policymakers since it provides them with economic analysis and associated costs and benefits. The researchers will translate their findings into evidence-based policy recommendations that will be published in a policy briefing.

“We hope to find ways to design integrated health and environment policies that engage with citizens so that, in collaboration with policymakers, we may find effective and practical solutions that mitigate urban pollution and climate change. We are observing a lot of effects of noise, air pollution and climate stress on individuals in terms of sleep, mental health, etc. The idea is to find solutions together. For example, what type of solutions could we find in urban design to improve the environment and citizens’ quality of life?” explains Prof. Sebert.

Dynamic longitudinal exposome trajectories in cardiovascular and metabolic non-communicable diseases

Project Objectives

The main objective of LongITools is to study the collective impact of environmental, biological, and lifestyle factors on the risk of developing cardiovascular and metabolic non-communicable diseases (NCDs). The project aims to generate new methods and tools for monitoring and predicting environmental exposures and their impact on health. This research will result in innovative key outputs that inform both current and future policies.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 874739.

Project Partners https://longitools.org/partners/

Contact Details

Claire Webster

LongITools Press and Communications Manager

Beta Technology

LongITools Communication, Dissemination and Exploitation Lead/Partner

T: +44 (0) 1302 322633

T: +44 7501 463317

E: claire.webster@betatechnology.co.uk

W: https://longitools.org/

W: www.betatechnology.co.uk

LongITools Project Coordinator Sylvain Sebert is a Professor of Life-course Epidemiology at the University of Oulu. He is leading the life-course epidemiology research group at the population health research unit and chairing the Northern Finland Birth Cohort executive board. He is also the Programme Director for the International Master of Epidemiology and Biomedical Data Science. His research interests include the exposome, obesity, epidemiology, climate change and health.

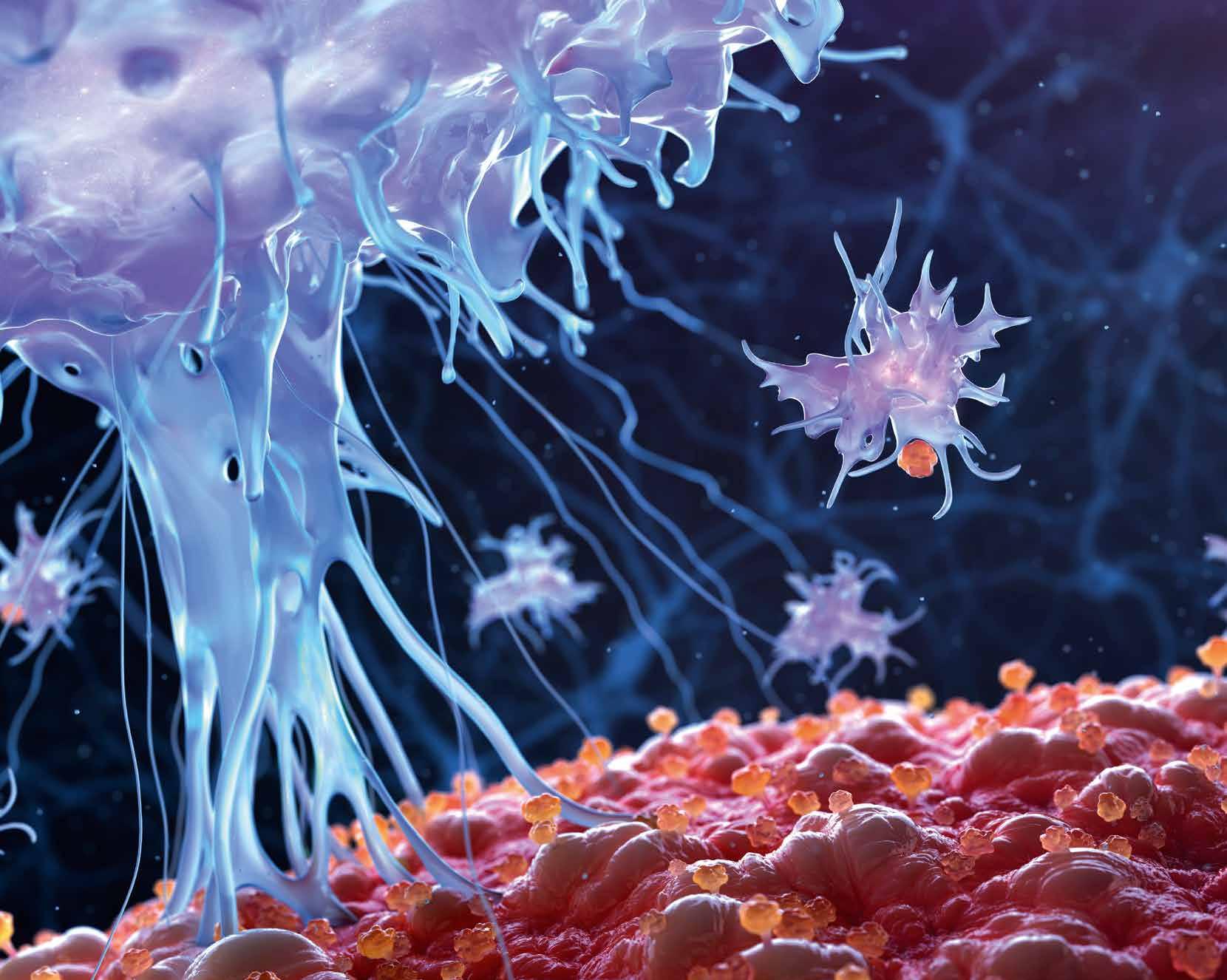

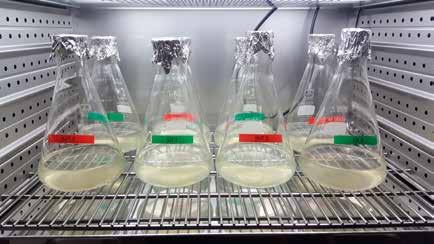

Metabolism can be broadly thought of as the way in which biological systems make and break down molecules for certain purposes, for example in nutrition and in constructing the complex biological chemicals that make up living systems. Beyond these functions, evidence suggests that metabolites are also involved in the immune response. “Around twelve years ago immunologists started noticing some very interesting metabolic changes in macrophages, a type of white blood cell,” outlines Professor Luke O’Neill, Chair of Biochemistry at Trinity College Dublin. This suggested that metabolites were involved in certain processes outside their established functions, a topic Professor O’Neill is exploring as Principal Investigator of the ERC-funded Metabinnate project. “We’re working on Krebs cycle, which is the central hub of our metabolism,” he explains. “We’ve mapped the role of Krebs cycle metabolites in some very interesting downstream processes for macrophage function.”

These macrophages are part of the front line of the immune system, responding quickly when activated to fight an infection or repair a tissue injury. Macrophages also drive inflammation, which can cause longer-term problems if it persists after an infection has been cleared, a topic of great interest to Professor O’Neill and his colleagues. “We’ve worked on the inflammatory process for a long time. We’re interested in inflammatory diseases, such as rheumatoid arthritis, psoriasis and inflammatory bowel disease,” he says. These diseases all involve macrophages essentially going out of control, leading to chronic inflammation, which causes tissue damage. “The symptoms of these inflammatory diseases that we see are down to macrophages - and other parts of the immune system - attacking our own tissues,” continues Professor O’Neill. “In previous work in my lab we identified certain metabolites as being very interesting in terms of their role in regulating inflammation. We wanted to find out more about them and figure out what they do.”

The initial plan was to look at the role of three specific metabolites in the project, namely malonyl-CoA, 2-hydroxyglutarate (2-HG) and itaconate. There is scope to explore other avenues in ERC-funded projects however, and researchers have also made some interesting discoveries about another metabolite called fumarate. “We’ve got a big focus on fumarate at the moment,” says Professor O’Neill. These different metabolites were first identified through a metabolomic screen. “We saw that certain metabolites were essentially accumulating during the activation of macrophages. We homed in on those metabolites - our inference was that they were accumulating for a reason, and then affecting things further downstream,” explains Professor O’Neill. “We’re looking at the individual metabolites which go up following macrophage activation, and are trying to figure out how and why they accumulate. The major example is itaconate, which can feed back on the Krebs cycle and allow another metabolite called succinate to accumulate.”

The Krebs cycle lies at the heart of metabolism but two of its intermediates, succinate and itaconate, have been shown to have roles in immunity and inflammation. Succinate is pro-inflammatory and itaconate is anti-inflammatory, opening up possibilities for new anti-inflammatory therapeutics.

A variety of different techniques are being applied in the project to work out what these metabolites do once they have accumulated, with researchers seeking independent lines of evidence to reinforce their findings. Metabolomics screenings are expensive, so are used sparingly, with Professor O’Neill applying other methods to gain deeper insights. “We use Polymerase Chain Reaction (PCR) analysers as a way to measure mRNA very accurately for example, as well as a range of standard assays and certain microscopy techniques to look at macrophages and see how they change,” he says. In their resting state macrophages are pretty inactive and essentially mind their own business, but they spring into action in response to a tissue injury or the presence of a bacterial cell. “When macrophages are activated they essentially wake up and start to make cytokines, which are signalling molecules. Those cytokines affect many cells in the body and effectively bring them to the party,” continues Professor O’Neill.

Metabolites play an important role in the immune response, beyond their function in making and breaking down molecules. Researchers in the Metabinnate project aim to shed new light on the role of several different metabolites in the immune system, work which could hold important implications for the treatment of inflammatory diseases, as Professor Luke O’Neill explains.

This process of macrophage activation affects metabolite levels, which then have wider effects. Two of the metabolites of interest in the project (2-HG and malonyl-CoA) are known to have a pro-inflammatory effect, while itaconate is strongly anti-inflammatory, and the ratios between these metabolites can be very important. “If the ratio swings in a particular direction, that might lead to an inflammatory outcome. While if it’s the other way, it could be anti-inflammatory,” outlines Professor O’Neill. The wider goal in this work is to develop effective new treatments for disease, and Professor O’Neill is confident that research into the metabolism of the immune system (a field called immunometabolism) can lead to new medicines. “I co-founded a company in 2018 called Sitryx which is based in Oxford. They’re building on our research in Dublin and developing new medicines from itaconate in various ways,” he says. “There’s a huge unmet medical need in the treatment of inflammatory diseases.”

There are treatments available for inflammatory diseases but not every patient responds as hoped, so it’s important to develop new, more effective medicines. This rests on further immunometabolism research and deeper insights into the regulation of immune responses, and the project is making an important contribution in this respect.

“We are working on macrophages, which are a key cell type in inflammation, and we’re discovering fundamental processes that seem to go wrong,” says Professor O’Neill. Chronic inflammation is involved in a number of different conditions, reinforcing the wider relevance of this research. “Inflammatory diseases mainly differ in the tissue that’s being attacked – macrophages are involved in them all. With some people macrophages may get irritated in the gut, or in the skin in the case of psoriasis, or in the brain,” outlines Professor O’Neill. “Parkinson’s disease and Alzheimer’s disease are both inflammatory as well, for example, so new insights could lead to therapies against many different diseases.”

The project itself is working on the fundamental level, but Professor O’Neill and his colleagues ultimately aim to help companies develop improved therapeutics. The immunometabolism field is a fairly new area of research, but it’s grown quickly and thousands of labs across the world are now working on different immune cells, sharing knowledge and spurring progress. “If we see something interesting in our system that might lead another lab to re-examine their own, and vice-versa. For example, people have built on our work in itaconate but in the brain,” says Professor O’Neill. “We’re hoping our work will hold broader relevance and apply beyond a particular property.”

Project Objectives

The Metabinnate project is investigating the role of certain metabolites in regulating the immune response, which represents an important contribution to the growing field of immunometabolism research. This work could help researchers develop effective new treatments against a wide variety of inflammatory diseases.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 834370.

Contact Details

Project Coordinator, Professor Luke O’Neill

Chair of Biochemistry (1960), Biochemistry, Trinity College Dublin, College Green, Dublin 2

T: +353 1896 2439

E: luke.oneill@tcd.ie

W: https://www.tcd.ie/Biochemistry/people/laoneill/

Runtsch MC, Angiari S, Hooftman A, Wadhwa R, Zhang Y, Zheng Y, Spina JS, Ruzek MC, Argiriadi MA, McGettrick AF, Mendez RS, Zotta A, Peace CG, Walsh A, Chirillo R, Hams E, Fallon PG, Jayamaran R, Dua K, Brown AC, Kim RY, Horvat JC, Hansbro PM, Wang C, O’Neill LAJ. Itaconate and itaconate derivatives target JAK1 to suppress alternative activation of macrophages. Cell Metab. 2022 Mar 1;34(3):487-501.e8. doi: 10.1016/j.cmet.2022.02.002.

Hooftman A, O’Neill LAJ. Nanoparticle asymmetry shapes an immune response. Nature. 2022 Jan;601(7893):323-325. doi: 10.1038/d41586-021-03806-7.

Hooftman A, Peace C, Ryan DG, Day E, Yang M, McGettrick A, Yin M, Montano E, Huo L, Toller-Kawahisa J, Zecchini V, Ryan TAJ, Bolado-Carrancio A, Casey AM, Prag HA, Costa AS, De Los Santo G, Ishimori M, Walace DJ, Venuturupalli S, Nikitopoulou E, Frizzell N, Johansson C, Von Kriegsheim A, Murphy MP, Jefferies C, Frezza C, O’Neill LAJ. Targeting fumarate hydratase promotes mitochondrial RNA-mediated interferon production. Nature. 2023;615:490-498. doi: 10.1038/s41586-023-05720-6.

Luke O’Neill is Professor of Biochemistry in the School of Biochemistry and Immunology, Trinity Biomedical Sciences Institute at Trinity College Dublin, Ireland. His main research interests include Toll-like receptors, Inflammasomes and Immunometabolism. He is the co-founder of Sitryx, which aims to develop new medicines for inflammatory diseases.

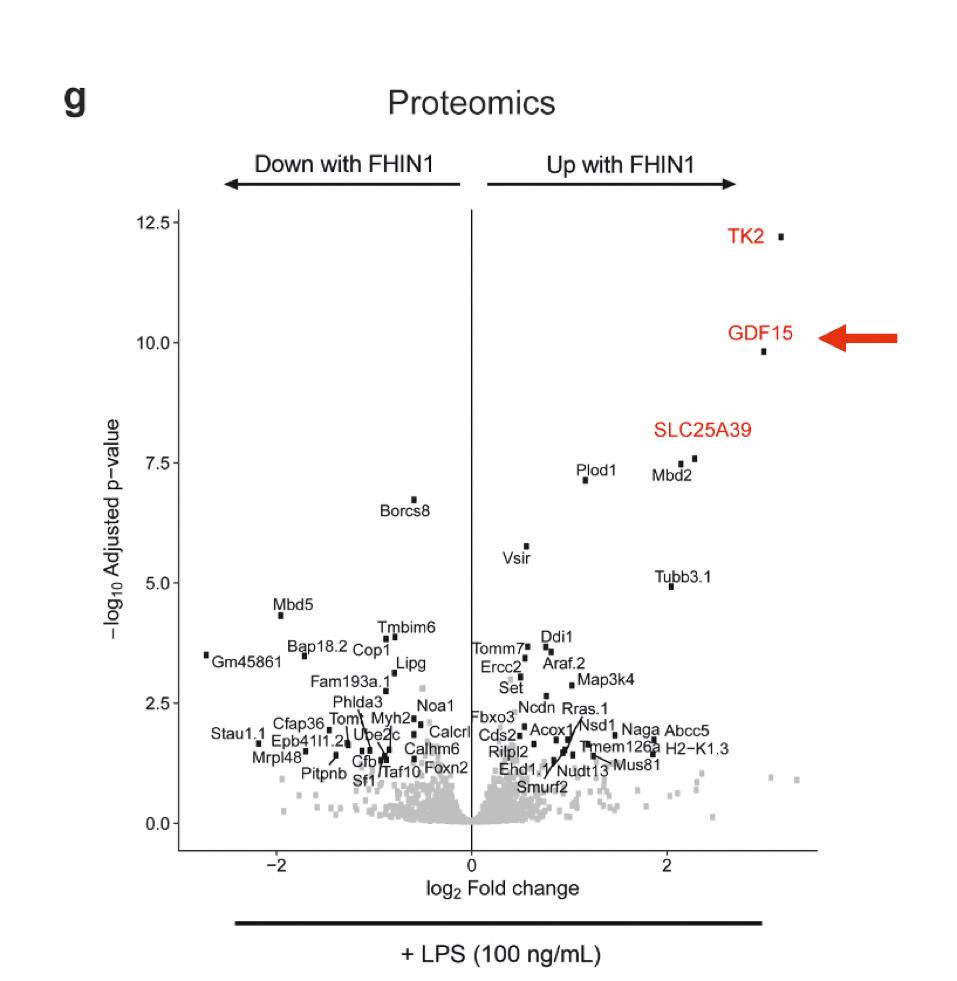

A proteomic analysis of macrophages in which fumarate hydratase is blocked revealed fascinating changes in protein levels, notably in the protein GDF-15, which regulates food intake but also is anti-inflammatory. Work in progress is exploring this process further.

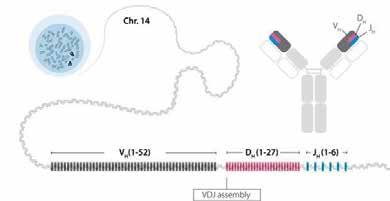

Hundreds of genes recombine in a combinatorial manner to make B- and T-cell receptors, which are integral to the adaptive immune system. Researchers in the ImmuneDiversity project are investigating the inter-individual diversity of these genes, which will open up new insights into disease susceptibility, as Professor Gunilla

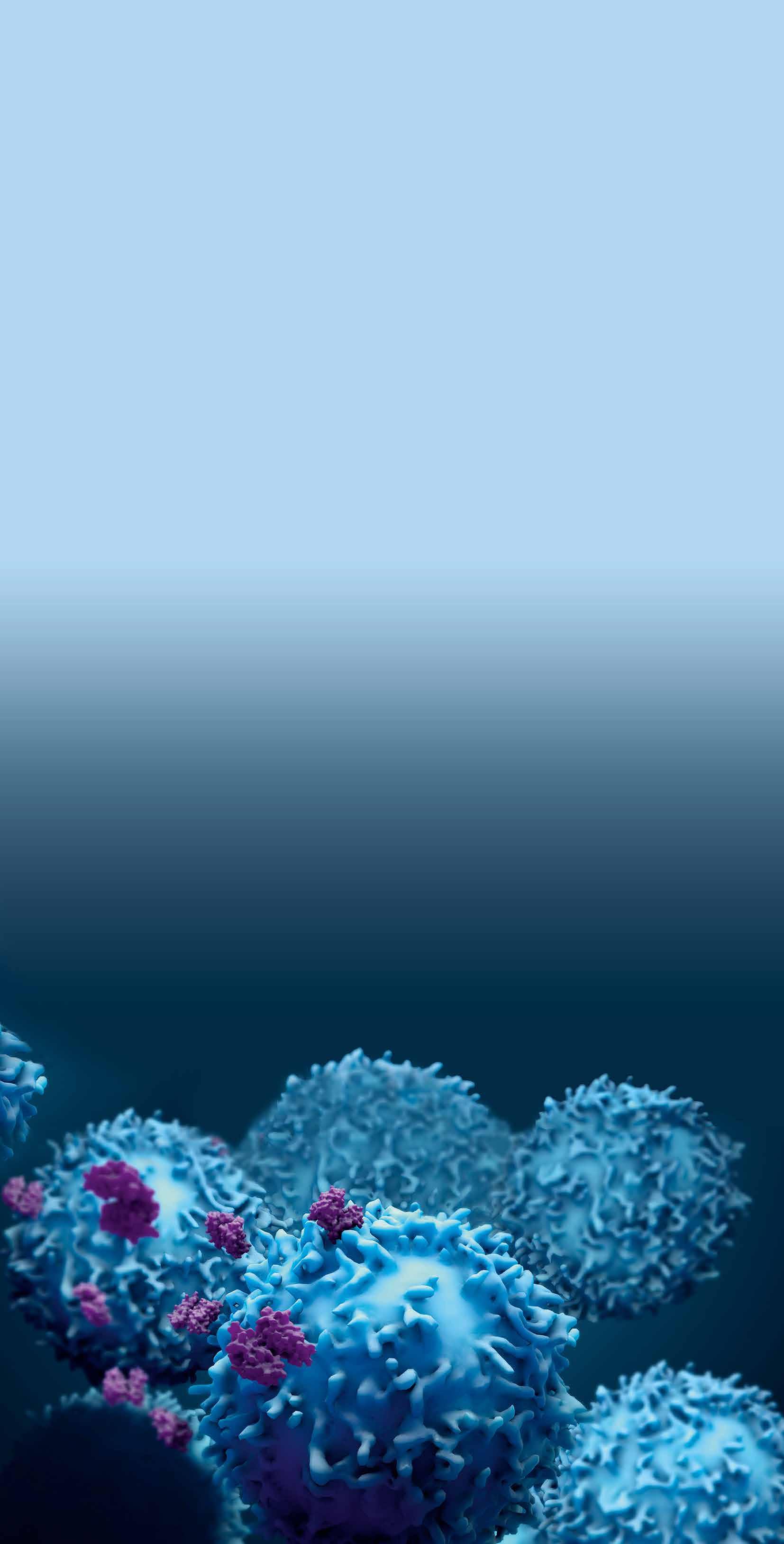

There is a high degree of variability in the genes that encode our adaptive immune receptors across the world, yet most genomics studies and databases have historically focused on populations with European ancestry. Researchers in the ERCbacked ImmuneDiversity project are now working to provide a broader picture. “We are running studies looking at major population groups, including people with backgrounds from sub-Saharan Africa, East Asia, South Asia and Europe,” explains Gunilla Karlsson Hedestam, a Professor in the Department of Microbiology, Tumor and Cell Biology at the Karolinska Institutet, the project’s Principal Investigator. “We started with local volunteers with diverse backgrounds who were interested in the study and provided blood samples, and we then extended the studies to samples collected through collaboration with scientists around the world that also include more focused population groups,” she outlines. “Using our high throughput genotyping method, we also analysed the 1,000 Genomes Project collection, which comprises samples from all over the world.”

A Senior Research Specialist in the group, Dr. Martin Corcoran has developed the new techniques to define germline-encoded variation in antigen receptor genes employed in the ImmuneDiversity project. Together

with others in the group, he carefully validated these techniques using both wet lab approaches and computational methods, work that has taken many years to complete. With these techniques now at hand, researchers can finally study adaptive immune responses with a high degree of accuracy and at a level of detail that is not possible with traditional

explains.

lot of parallels between B-cells and T-cells in terms of how their antigen receptors are built up,” says Professor Karlsson Hedestam, with building blocks - V, D and J germline genes - that recombine in a combinatorial manner to make up all B-cell receptors (membrane-bound antibodies) and T-cell receptors. “There are hundreds of V, D and J

sequencing methods. The team focuses on Band T-lymphocyte receptors, both of which play important roles in the body’s response to pathogens and are implicated in numerous immune-mediated diseases. “There are a

genes, most of which are highly polymorphic and some genes are missing altogether in some individuals”, she continues. “We aim to define where the polymorphisms are and the frequency of different gene variants in the population. Alleles of a given V, D or J gene may differ from each other by as little as a single nucleotide, but this can be enough to have functional consequences.”

For example, a recent study on SARS-CoV2-specific antibody responses published by the group showed that single polymorphisms can change the way B-cell receptors interact with critical neutralizing target epitopes. Thus, each of us has a different collection of V, D and J alleles, which shapes our immune

“By performing personalized genotyping of B- and T-cell receptor genes, we may be able to piece together more of the puzzle for diseases where adaptive immune responses are known to be involved, such as autoimmune conditions”.

Karlsson HedestamMarco Mandolesi. Photograph by Johanna Åkerberg Kassel.

repertoires and responses. This variation may influence the level of protection our B- and T-cells provide against infections and may also predispose us to the development of certain immune-mediated diseases. “We know for example that multiple sclerosis (MS) and rheumatoid arthritis involve T-cells and B-cells, we just don’t know why some people develop these diseases. One scenario is that infections induce cross-reactive immune responses that not only target the pathogen but also attack self-tissue, and that variations in adaptive immune system genes predispose people to such mis-directed responses,” outlines Professor Karlsson Hedestam.

Two new approaches have been developed in the project to enable researchers to probe deeper into this area, called IgDiscover and ImmuneDiscover; the latter is a high throughput approach which is used to sequence large numbers of people very efficiently. “With ImmuneDiscover we start from DNA samples rather than expressed RNA” explains Professor Karlsson Hedestam. “This approach allows us to generate genetic profiles of thousands of people simultaneously.” By applying this technique

to disease cohorts, the team aims to identify gene variants that make people more vulnerable. “By performing personalized genotyping of B- and T-cell receptor genes, we may be able to piece together more of the puzzle for diseases where adaptive immune responses are known to be involved, such as auto-immune conditions”, says Professor

Karlsson Hedestam. A deeper understanding of whether certain alleles are associated with auto-immune disease would enable researchers to identify high-risk groups. “These could be very important prognostic markers that could then be added to information about the patient’s HLA-type,” says Professor Karlsson Hedestam.

Analysis of B- and T-cell receptor V, D and J gene diversity in populations with different genetic ancestries

Project Objectives

The aim of the project is to define human population diversity in the hundreds of germline V, D and J genes that encode our B- and T-cell antigen receptors, and to investigate how this diversity influences our responses to infections and vaccination or predisposes to the development of autoimmune diseases.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant agreement ID: 788016. In addition to the ERC Advanced grant, the work is funded by a Distinguished Professor grant from the Swedish Research Council.

Project Partners

For the work on rheumatoid arthritis, we are collaborating with Drs. Malmström and Padyukov at Karolinska Institutet.

Contact Details

Gunilla B. Karlsson Hedestam Professor

Department of Microbiology, Tumor and Cell Biology

Karolinska Institutet

171 77 Stockholm

Visiting address:

Biomedicum C7, C0767, Solnavägen 9

E: Gunilla.Karlsson.Hedestam@ki.se

W: https://ki.se/en/mtc/gunilla-karlssonhedestam-group

The first priorities for the project were to develop the analytical methods and ensure that they were highly robust, reproducible, and precise. Once this was established, the team moved on to identify new alleles and define the frequencies at which they occur in persons with different population ancestries. This work establishes an important baseline, a picture of normal frequencies, which will provide a basis for comparison with disease cohorts. “We think that with our soon to be completed studies on human populations from our own sample collection and the 1,000 Genomes Project samples, we are starting to reach saturation point. We will have found most variants except very infrequent ones,” says Professor Karlsson Hedestam.
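To make the idea of a frequency baseline concrete, the following Python sketch counts how often each allele appears within each population group. It is only an illustration under stated assumptions: the group labels, allele names and genotypes are invented, and it does not represent the IgDiscover or ImmuneDiscover software.

```python
from collections import Counter, defaultdict


def allele_frequencies(genotypes):
    """Compute allele frequencies per population group.

    genotypes: iterable of (population, [alleles carried by one person]) pairs.
    Returns {population: {allele: fraction of all alleles observed in that group}}.
    """
    counts = defaultdict(Counter)
    for population, alleles in genotypes:
        counts[population].update(alleles)
    return {
        population: {allele: n / sum(c.values()) for allele, n in c.items()}
        for population, c in counts.items()
    }


# Made-up genotypes for one hypothetical gene with two alleles.
samples = [
    ("group_1", ["GENE_A*01", "GENE_A*02"]),
    ("group_1", ["GENE_A*01", "GENE_A*01"]),
    ("group_2", ["GENE_A*02", "GENE_A*02"]),
]
baseline = allele_frequencies(samples)
print(baseline)
```

Comparing a table like this for healthy volunteers against the same table for a disease cohort is, in essence, what a search for disease-associated alleles starts from.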

In the longer run, information about adaptive immune receptor genotypes could be very useful for clinicians, enabling the stratification of patients into higher and lower-risk groups, while it could also be highly valuable for pharmaceutical companies. It may be possible to specifically target genes associated with a disease for example, with the project helping to lay the foundations for further development in this regard. “There is huge potential in this work, but we must do the groundwork first. These gene regions are extremely complicated, and they cannot be completely sequenced with conventional methods,” stresses Professor Karlsson Hedestam.

Gunilla Karlsson Hedestam received her BSc from Uppsala University in 1990 and her PhD from the University of Oxford in 1993. After a post-doctoral fellowship at Harvard from 1994 to 1998, she joined Karolinska Institutet, where she became a tenured Professor in 2012. She has been a member of the Royal Swedish Academy of Sciences since 2019.

“This work is now almost complete, and we are ready to start pushing through disease cohorts,” says Professor Karlsson Hedestam. This will involve genotyping samples from patients with specific conditions alongside healthy controls. “We will focus increasingly on cohort studies in the coming years,” she continues. When an association is identified for a given disease, the team will investigate the functional impact of the polymorphism using in vitro cell culture systems and structural analysis to unravel the molecular basis for the effect.

The project’s research also holds relevance to the goal of adapting or tailoring vaccines to the genetic profile of specific populations, as vaccines can be more effective in some areas of the world than others. “If certain allelic variants of genes are needed to produce protective antibodies, and they are present at different frequencies in different populations, it could mean that a vaccine could work well in Europe but less well in Asia,” explains Professor Karlsson Hedestam. A deeper understanding of the genetic background of local populations could enable the development of more tailored and effective vaccines against disease.

The TGF-ß cytokine is a prototype in a large family of growth regulatory factors, and it plays a number of important roles during tumour progression. Researchers are investigating the molecular mechanisms behind how TGF-ß activates its pro-tumourigenic signalling pathways, which could help uncover new targets for drug development, as Professor Carl-Henrik Heldin explains.

The Transforming Growth Factor-ß (TGF-ß) cytokine performs a variety of different roles in tumour progression. It makes tumour cells more invasive and prone to forming metastases, while it also has other pro-tumourigenic effects. “For example, it inhibits the immune system, and promotes angiogenesis, as well as the development of cancer-associated fibroblasts,” outlines Carl-Henrik Heldin, Professor in Molecular Cell Biology at Uppsala University. As the head of an ERC-backed project based at the University, Professor Heldin is investigating the underlying mechanisms behind these pro-tumourigenic effects, which contrast sharply with its role before a tumour is established. “Initially TGF-ß is actually a tumour-suppressor, as it inhibits the growth of most normal cells and induces apoptosis of many cell types. These are tumour-suppressive effects,” he explains. “As a tumour progresses there is a kind of switch, which converts TGF-ß from being a tumour-suppressor to a tumour promoter.”

Mechanism by which TGF-ß activates Src

This means a great deal of care needs to be taken in treating tumour patients with TGF-ß inhibitors and avoiding unintended side-effects. The wider aim for Professor Heldin and his colleagues is to help find ways of selectively inhibiting the pro-tumourigenic signalling pathways in tumour cells, while leaving the tumour-suppressive pathways unperturbed. “We are trying to figure out the molecular mechanisms behind why TGF-ß activates various signalling pathways,” he says. The vast majority of cancer-related deaths are caused by the cancer spreading, so an effective method of inhibiting or slowing down that spread could have a significant impact. “A large group of patients could benefit,” stresses Professor Heldin. “For example, in most cases prostate cancer is a fairly benign disease, and it only really threatens us when it starts to invade the surrounding environment and spread through the body.”

There are TGF-ß receptors on essentially all cell types, reinforcing the wider relevance of this research. Perturbation of TGF-ß signalling is also involved in several other diseases, including certain inflammatory conditions and fibrotic disorders, as Professor Heldin explains. “TGF-ß is a very powerful stimulator of matrix proteins for instance, a process that is involved in fibrosis. This has stimulated a lot of interest, and TGF-ß inhibitors have been discussed as possible therapeutic agents in fibrosis,” he says. This work holds wider relevance to the pharmaceutical industry, and Professor Heldin’s research represents an important contribution to the goal of developing more effective treatments. “We eventually hope to provide targets for drug discovery,” he continues. “However, the whole process of drug development cannot really take place in an academic setting – we need further input and collaboration.”

Progress has been made over the course of the project in terms of selectively inhibiting the pro-tumourigenic pathways, and Professor Heldin hopes to build on this further, with plans in the pipeline for continued investigation. “We are pursuing several lines of research into how TGF-ß activates various signalling pathways, and we hope to make more progress in future,” he says.

Pro-tumorigenic effects of TGFb - elucidation of mechanisms and development of selective inhibitors

Carl-Henrik Heldin

Professor in Molecular Cell Biology

Dept. of Medical Biochemistry and Microbiology

Box 582, Biomedical Center

Uppsala University SE-751 23 Uppsala, Sweden

T: +46-18-4714738

E: C-H.Heldin@imbim.uu.se

W: https://www.katalog.uu.se/profile/?id=N96-1274

Carl-Henrik Heldin is a Professor in the Department of Medical Biochemistry and Microbiology at Uppsala University. His main research interest is the mechanisms of signal transduction by growth regulatory factors, as well as their normal function and role in disease.

Cervical cancer is a significant threat to women worldwide. Despite the availability of preventive measures, the incidence of this disease remains high, claiming thousands of lives each year. In Europe alone, there were 61,000 new cases and 25,000 deaths from cervical cancer in 2018, and incidence continues to rise in several Central and Eastern European regions without organised screening. The Netherlands, Sweden, and Finland, where organised screening programs have produced positive outcomes in the past, have also seen incidence increase over the last decade. This demonstrates the necessity for more effective screening strategies.

We spoke to Dr. Johannes Berkhof, coordinator of the RISCC (Risk-based Screening for Cervical Cancer) project.

Funded by the European Union’s Horizon 2020 Framework Programme for Research and Innovation, RISCC brings together a diverse consortium of leading researchers in the field of HPV, HPV screening, and HPV vaccination. The consortium’s primary objective is to develop and evaluate Europe’s first risk-based screening program for cervical cancer. Additionally, RISCC aims to provide open-source implementation tools and contribute to the goal of eliminating cervical cancer.

Current screening programs have a high rate of unnecessary colposcopy referrals and a varying number of screening invitations across European countries. These inefficiencies highlight the potential for significant cost savings, estimated at €100 million across Europe. To address these challenges, RISCC advocates for a paradigm shift from the current one-size-fits-all approach to an individualised risk-based screening protocol. In the new approach, the clinical management after an initial screening test will be guided by the risk of cervical cancer, defined by test results, screening history, age, vaccination status and other potential risk factors. “Why would we want to do cervical cancer screening based on individual risks? So far, most of the programs have all used a one-size-fits-all program. So, irrespective of your risk, you always get the same test at fixed intervals. But some women have a much higher risk than other women, and we would like to account for that. This would lead to less unnecessary activity in low-risk women and lower rates of anxiety. It is also cost-effective because if we invest €2 per woman, we would be able to avoid 12,500 cervical cancer deaths in Europe every year. That is a substantial reduction in the number of cervical cancer deaths,” explains Dr. Berkhof.

In order to create a risk-based screening program, it is necessary to develop risk profiles for cervical cancer:

Cervical cancer screening has traditionally been done through cytological screening with the Pap test. However, this cancer is caused by human papillomavirus (HPV) infection, and detecting the virus via HPV testing has shown significant improvements over the Pap test in cervical cancer screening. Data from screening cohorts in Europe and North America demonstrates that switching to HPV screening reduces the risk of precancerous conditions by approximately 70% after a negative screen.

Cervical cancer screening programs have been implemented globally but often follow a “one-size-fits-all” approach. This leads to suboptimal protection, suboptimal resource allocation, and harms. We spoke to Dr. Johannes Berkhof, the Coordinator of the RISCC project which aims to develop a risk-based screening using screening history, HPV vaccination status, and other relevant risk factors.

The RISCC consortium meeting held in Autumn 2022 in Amsterdam.

By analyzing data from previous screening rounds, the researchers have observed that the risk of cervical cancer is lower after multiple negative test results than after just one negative HPV test. The reverse also holds. “Various studies show that the risk of pre-cancer after a negative HPV test is about seven times higher if it is preceded by a positive HPV test in the previous round. This illustrates that taking into account the result of the HPV test in the previous round can optimize your screening program,” explains Dr. Berkhof.
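To make the sevenfold figure concrete, the following is a minimal Python sketch of how a screening program might fold the previous round's HPV result into a risk estimate for a woman whose current test is negative. Only the relative risk of about seven comes from the text above; the baseline risk, the recall threshold and all function and class names are hypothetical placeholders chosen for illustration, not RISCC values or methods.

```python
from dataclasses import dataclass

# Relative risk of pre-cancer after a negative HPV test when the previous
# round was HPV-positive (the "about seven times higher" quoted above).
RELATIVE_RISK_PREVIOUS_POSITIVE = 7.0

# Hypothetical placeholder values, chosen only for illustration.
BASELINE_RISK = 0.002            # assumed risk after two consecutive negatives
SHORT_INTERVAL_THRESHOLD = 0.01  # assumed risk above which recall is sooner


@dataclass
class ScreeningHistory:
    current_hpv_positive: bool
    previous_hpv_positive: bool


def estimated_risk(history: ScreeningHistory) -> float:
    """Estimate pre-cancer risk for a woman whose current HPV test is negative."""
    if history.current_hpv_positive:
        raise ValueError("this sketch only covers HPV-negative current tests")
    if history.previous_hpv_positive:
        return BASELINE_RISK * RELATIVE_RISK_PREVIOUS_POSITIVE
    return BASELINE_RISK


def recommend_interval(history: ScreeningHistory) -> str:
    """Map the estimated risk to a coarse screening-interval recommendation."""
    if estimated_risk(history) >= SHORT_INTERVAL_THRESHOLD:
        return "shorter"
    return "standard"


if __name__ == "__main__":
    both_negative = ScreeningHistory(False, False)
    previous_positive = ScreeningHistory(False, True)
    print(recommend_interval(both_negative))
    print(recommend_interval(previous_positive))
```

With these assumed numbers, two women with the same negative test today end up on different screening intervals purely because of their previous result, which is the core idea behind using screening history as a risk factor.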

Data on screening results is collected from seven different HPV screening trials conducted across Europe, including over 150,000 women from the previous CoheaHr project as well as data from screening programs. Some of these trials compare cytology-based screening to HPV-based screening, and they provide the project with new follow-up data spanning up to 20 years after enrollment. Additionally, data is utilised from HPV self-sampling trials that compare HPV testing on a sample taken at the clinic by a healthcare professional to HPV testing on a sample taken at home using a self-sampling kit. These self-sampling trials have a follow-up period of approximately ten years. Furthermore, the samples from the self-sampling trials will be used to evaluate the use of molecular tests, specifically DNA methylation, to identify (pre)cancer in HPV-positive women. Currently, HPV-positive women need to visit the healthcare professional for a Pap smear. The use of molecular markers, such as DNA methylation, provides an appealing method to stratify HPV-positive women, as it can be directly performed on the self-collected specimen.

Risk profiles are also developed for vaccinated birth cohorts. “Vaccination is an important risk factor. We are collecting data from a randomised trial conducted in Finland that involves 80,000 women with known vaccination status who were randomly assigned to either frequent or infrequent HPV screening. We also use observational data from countries where the individual HPV vaccination status is linked to screening outcomes. This allows us to examine the direct and indirect effects of vaccination on screening,” says Dr. Berkhof.

There are other risk factors such as poverty, smoking, use of oral contraceptives, and sexual behaviour that may influence the risk of getting an HPV infection or developing (pre)cancer. The researchers will summarize the evidence on the potential significance of these risk factors for a cervical screening program.

“After we have collected all that evidence, we will use all these risk estimates to develop a model for Europe that enables us to assess the health benefits of different risk-based screening strategies and weigh them against the cost of implementing these strategies. By tailoring the screening approaches to local European settings, we can identify cost-effective risk-based screening strategies that suit local settings and optimize resource allocation,” explains Dr. Berkhof.

Digitalization facilitates implementation of risk-based screening by incorporating patient-specific risk factors and providing personalised recommendations. The project will implement a risk-based screening strategy in a pilot study conducted in Sweden. This strategy will make use of a digital invitation platform, allowing individuals to enroll for risk-based screening and receive invitations through a linked app connected to the screening management database. The pilot trial in Sweden will assess the impact of the digital invitational system on attendance, acceptability, and cost savings in risk-based screening.

As part of the tools to facilitate implementation, the consortium is developing an e-learning open-access course through the e-oncologia platform (https://www.e-oncologia.org) on HPV-related content and cervical cancer management. It will teach healthcare professionals and screening managers how to implement HPV risk-based screening. The dissemination of the project’s results via social media and newsletters with HPV-related content, together with ESGO-ENGAGe involvement with gynecological cancer survivors, aims to increase awareness of HPV and of the need to improve current screening practices among researchers and the general population.

RISCC aims to introduce a risk-based screening approach to improve the effectiveness and efficiency of cervical cancer screening. By creating risk profiles using data from large trials and screening programs in the general population, the project will develop a more targeted and personalised screening strategy. The goal is to optimize screening algorithms and improve the identification of high-risk individuals for early intervention. This will ultimately contribute to the elimination of cervical cancer as a public health problem.

Project Funding

This project has received funding from the European Union’s Horizon 2020 research and innovation programme under grant agreement No 847845.

Project Partners

https://www.riscc-h2020.eu/about-riscc/partners/

Contact Details

RISCC daily management:

Professor Johannes Berkhof, Project Coordinator

Dr. Laurian Jongejan, Program Manager

Amsterdam University Medical Centers, location VUmc, Amsterdam, The Netherlands

E: riscc@amsterdamumc.nl

Twitter: @RISCC_H2020

W: https://www.riscc-h2020.eu/

Prof. Berkhof is head of the Department of Epidemiology and Data Science in Amsterdam University Medical Center. He has over 20 years’ experience in statistical and modelling research with over 200 articles on HPV, methodology, cost-effectiveness, screening, public health and statistics.

Dr. Robles is an epidemiologist at the Cancer Epidemiology Research Program within the Catalan Institute of Oncology. She has over 10 years’ experience in cancer epidemiology and prevention, mainly focused on cervical cancer over the last years.

“Our aim is to help countries and policymakers to develop their HPV screening programs that incorporate various aspects, all rooted in the concept of risk-based screening.”

Prof. Johannes Berkhof

Dr. Claudia Robles

We spoke to Dr. Maria Torrente, who is the Coordinator of the project CLARIFY. CLARIFY aims to identify personalized risk factors that influence the patient’s outcome at the end of their oncological treatment. The CLARIFY Platform has been developed to allow healthcare professionals to understand, work with, and make decisions based on real data analysis from patients.

Diagnosis and treatment of cancer have seen great advancements in recent decades. In the US and Europe, more than half of adult patients who suffer from cancer will live at least 5 years. This has created a new challenge for healthcare providers: ensuring the long-term quality of life and well-being of cancer survivors following their oncological treatment. The EU-funded CLARIFY project is addressing this challenge. CLARIFY focuses on collecting and analyzing clinical, genomic, and behavioral data from survivors of three specific types of cancer: breast, lung, and lymphoma. Using big data and artificial intelligence techniques, CLARIFY integrates this data with relevant publicly available biomedical information and data gathered from wearable devices used by patients post-treatment. By analyzing this abundance of information, the project aims to predict the patient-specific risks of developing secondary effects and toxicities resulting from cancer treatments, and to identify patients who are at the highest risk of psychosocial dysfunction. The goal of the CLARIFY project is to transform cancer survivors’ care by providing enhanced, personalized treatment options. The project seeks to empower healthcare professionals with valuable insights into individual patient risks. This knowledge will enable them to deliver better-tailored care, leading to improved quality of life and overall well-being for cancer survivors.