Attractiveness AI: Can Artificial Intelligence Really Rate Your Looks?

Re-Capturing the Road to Net-Zero Emissions

BREAKING DOWN CARBON CAPTURE AND STORAGE

Plants, Cells, and Bugs: The Good, the Bad, and the Ugly of Making Meat Sustainable

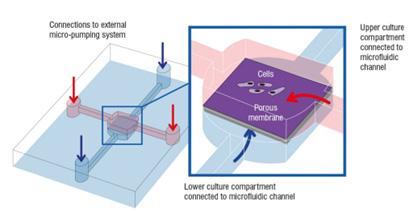

Fur-Free Future: Revolutionizing Research with 3D Tissue Culture

Letter from the Editor

Dear Reader,

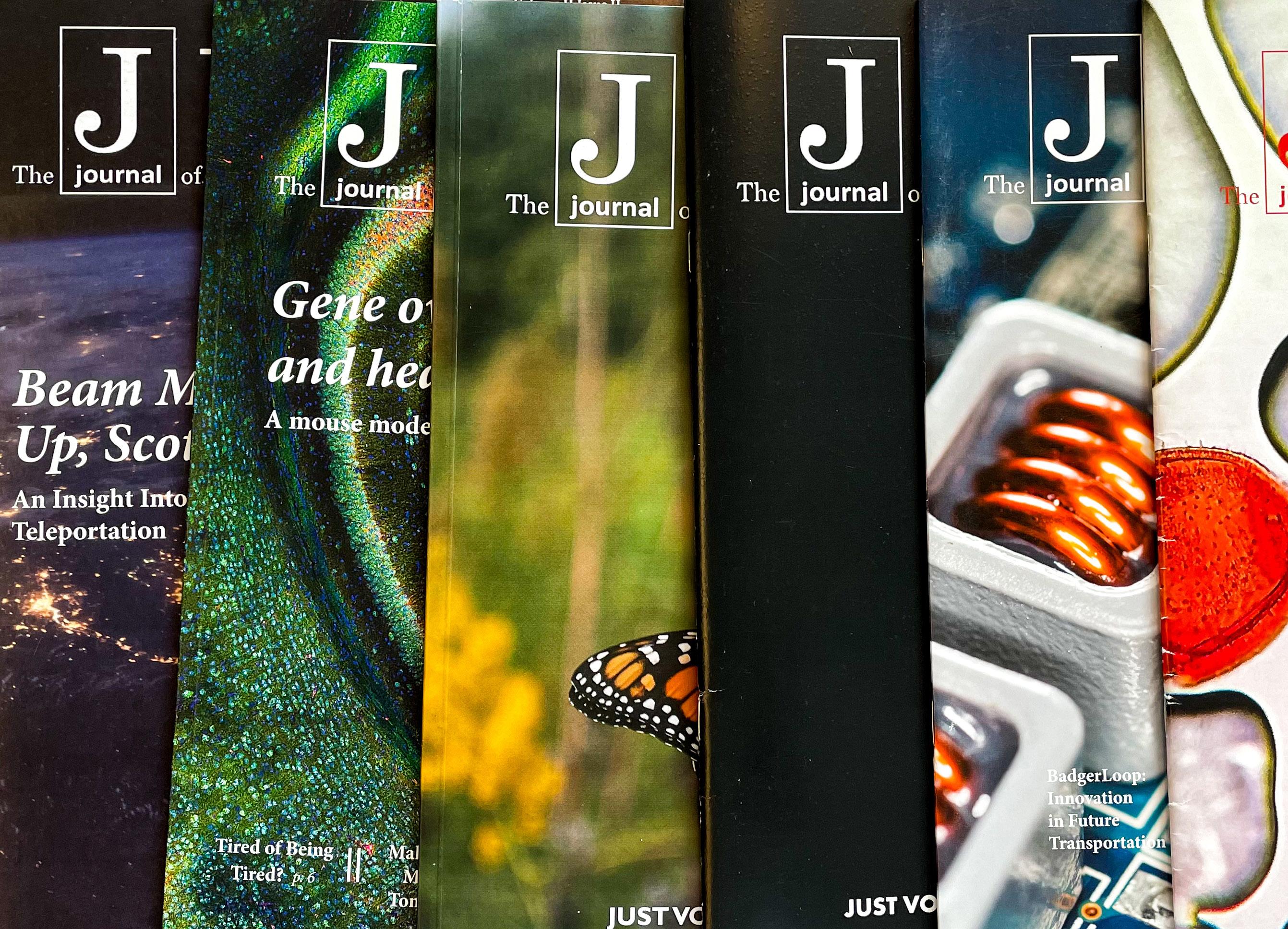

It is with immense pride and anticipation that we unveil Volume IX, Issue I of the Journal of Undergraduate Science and Technology (JUST). Our journal was originally founded with the goal of creating a platform where like-minded undergraduates could showcase cutting-edge research and scientific advancements on both local and global scales; since then, it has transformed into something far greater than its original form. From our bi-yearly publications to photography, design, and our recently launched short-form newsletter, our journal has evolved into a community of undergraduates working towards a common mission: making science accessible to broader audiences. At UW-Madison, we have the unique opportunity to let undergraduate students publish their work in a peer-reviewed journal and to give them a glimpse into the publication process of academic journals. We believe that such experiences are important in setting up students for success within academic settings as they continue on their future paths. We are grateful to play a part in the larger effort of making scientific writing and research available to those beyond academia.

Additionally, it is with immense gratitude that I take some time to acknowledge the extraordinary team behind this publication. While I may be writing this letter, the truth is that this journal is a vibrant symphony performed by a multitude of talented undergraduates. Our staff writers, editors, designers, marketing, and web design teams worked diligently over the past semester to put knowledge onto paper, and did so while also balancing their lives as students and individuals. To our advisors Dr. Todd Newman and Dr. Joan Jorgenson, I want to send a special thanks for all the time and invaluable expertise that you were able to share with us this semester. Lastly, I would like to take a second to recognize the Wisconsin Institute for Discovery, the Associated Students of Madison, and the College of Agriculture and Life Sciences for their unwavering support of undergraduate science journalism.

As you delve into the pages that follow, remember that science isn’t confined to labs and (boring) textbooks; it’s woven into the very fabric of life, the air we breathe, the food we eat, and the technology we hold close. May the following editorials, photography, and manuscripts not only enlighten you but also empower you to see the world through a more scientific lens. Even if you haven’t always felt drawn to STEM, I hope this journal ignites your inner explorer and shows you the wonder hidden in everyday things. In this issue, you will find a smorgasbord of diverse pieces – from computers that solve mathematical puzzles to the capture of carbon in the environment and even the startling power of worms! Have fun on this adventure!

Sincerely,

Manasi Simhan Editor-In-Chief

Pictured above: 2023-24 Executive Board: Top row (from left to right): N. Martinson, A. Shaikh, Q. Ruzicka, S. Dekate.

Bottom row (from left to right): D. Hamdan, A. Li, M. Simhan, C. Hansen. Not pictured: T. Pham and D. Chatterjee


who we are

The Journal of Undergraduate Science and Technology (JUST) is an interdisciplinary journal for the publication and dissemination of undergraduate research conducted at the University of Wisconsin-Madison. Encompassing all areas of research in science and technology, JUST aims to provide an open-access platform for undergraduates to share their research with the university and the Madison community at large.

Submit your research below to be featured in an upcoming issue!

Our sponsors

EDITOR-IN-CHIEF

Manasi Simhan

MANAGING EDITORs

Adina Shaikh

Dima Hamdan

Trang Pham

HEAD WRITERs

Amy Li

Natalie Martinson

MARKETING Director

Chloe Hansen

Marketing Assistant

Mackenzie Twomey

DESIGN Director

Quinn Ruzicka

design assistant

Olive Haller

Our team

Head Web Developer

Shreyanshu Dekate

Web assistant

Sophie Liu

Finance and outreach Chair

Dev Chatterjee

EDITORS OF CONTENT

Analise Coon

Carmela De Leon

Ivy Raya

LeAnn Aschkar

Mihir Manna

Walid Lakdari

STAFF WRITERS

Adrian Sieck

Alex Wong

Daniel Molina

Kaashvi Agarwwal

Manal Aditi

Vedaa Vandavas

Zane Brinnington

Short-Form Editors

Ayah Amer

Dannah Altiti

Mackenzie Twomey

short-Form Writers

Daniel Del Carpio

Khadijah Dhoondia

Mahathi Karthikeyan

Sean Hugelmeyer

Contributing Writer

Jackson Kunde

Contributing Researcher

Dara Safe

We would like to sincerely thank the College of Agriculture and Life Sciences (CALS), the Wisconsin Institute for Discovery, the Associated Students of Madison (ASM), and the Wisconsin Alumni Research Foundation for financially supporting the production of JUST’s Fall 2023 issue.

Thank you!

Editorials


One Man’s Trash is Another Worm’s Treasure: Benefits of Vermicompost

Written by Adrian Sieck Edited by Carmela De Leon

When it comes to food waste solutions, most people have heard of composting as a way to turn leftovers into something useful. Composting is great, but if you want an upgrade, worms can provide one. Vermicompost (from the Latin word vermis, meaning ‘worm’) is a type of compost that uses worms to break down food waste. In the article “Vermicomposting for Beginners,” Rick Carr describes vermicompost as “the product of earthworm digestion and aerobic decomposition using the activities of micro and macroorganisms at room temperature” (2019). This means that vermicompost comprises worm-digested food waste and organic material decomposed by microorganisms (microscopically small organisms, like bacteria and fungi). ‘Aerobic decomposition’ refers to decomposition that happens when oxygen is present: the work is done by microorganisms that need oxygen.

The use of vermicompost has many benefits while also being an eco-friendly practice. It has been shown to improve nutrient availability, soil structure, and moisture, encourage microbe activity and plant growth, and even suppress pests.

Vermicompost Bins

Vermicompost is primarily made in bins, as they are effective and easy to find. This helps to make vermicomposting fairly beginner-friendly, and bins can be a variety of sizes depending on how much waste and how much room you have. According to Carr’s guide, bins need small holes in the sides and stakes in the bottom to allow airflow throughout. The bin should be half full of soil and a layer of moistened shredded newspaper. The best worms to use are red wigglers, like Eisenia fetida and Eisenia andrei, due to their living and eating habits. The bin should be kept at room temperature with adequate moisture. For more information, read “Vermicomposting for Beginners” by Rick Carr.

Nutrients and Hormones

Decomposers play a huge role in the ecosystem as they convert dead material into usable material for other organisms. They make it possible for all the energy and nutrients from plants to return to the plants after death. Earthworms are a great example, as they eat organic material in soil and on forest floors. What they leave behind after feeding is full of nutrients and bacteria, which is why vermicompost is so effective.

Vermicompost contains very high levels of essential plant nutrients, more than regular soil. Not only does it contain the most common essential nutrients (nitrogen, potassium, carbon), but it also contains less common nutrients like zinc, magnesium, and iron in forms that plants can absorb (Ur Redman et al., 2023). Plants can’t just take up any chemicals and pick out their desired nutrients. They need these nutrients around them in specific forms, which vermicompost, like any good soil, has available. As a cherry on top, vermicompost contains more than nutrients. It also has microorganisms that can continue to make more nutrients available over time, like a grocery store that keeps restocking.

Applying vermicompost to soil will increase plant growth and improve yield. This is partly due to the nutrient availability in vermicompost; another factor is the plant hormones that vermicompost contains. Microbes and earthworms secrete hormones like auxins, gibberellins, and cytokinins (Ur Redman et al., 2023). These hormones have a variety of functions, but most notably, they stimulate root growth, cell division, germination, and stem elongation. Faster growth and germination from hormones in vermicompost help farmers and gardeners achieve better yields.

Soil Structure and Drought

Earthworms can improve the structure of soil by breaking down plant material and minerals, as well as mixing around organic matter to form soil aggregates (Ur Redman et al., 2023). Soil aggregates are small clumps of sand, silt, and clay bound together. Aggregates are essential because they form the soil structure, while the space between the aggregate clumps leaves room for air and water to pass through. Stable soil aggregates that hold their shape prove to be crucial for soil ecosystem health, as their stable structure consistently supplies oxygen to soil organisms and absorbs water well for plants.

Surprisingly, vermicompost can reduce drought stress. The higher the soil’s water-holding capacity and porosity, the less destructive the effects of drought. During drought, plants respond in several ways to keep water in. One method is to keep the stomata, openings in the leaves, closed. Stomata let gases like carbon dioxide into the plant for its own use. Carbon dioxide is a vital part of photosynthesis, so plants need stomata to allow it in. However, in addition to letting things into the plant, stomata run the risk of letting things out of the plant, namely water. In drought conditions, plants will keep their stomata closed in order to prevent water from escaping, but the lack of carbon dioxide stresses them. Because of this, stomatal closure is a crucial indicator of drought, with high stomatal closure indicating water stress and lower stomatal closure indicating more available water. Vermicompost reduced the stomatal closure in plants in drought conditions (Ur Redman et al., 2023). This indicates that vermicompost helps to prevent water stress.

Disease Freeing Advantage

Vermicompost can be very influential, with the ability to affect microbial activity, soil temperature, porosity, infiltration, nutrient content, and even crop yield. Its positive effect on microbes increases the number of beneficial microbes, boosting plant growth (Ur Redman et al., 2023). Beneficial microbes are microorganisms that can perform a variety of roles: nutrient processing, hormone production, and pathogen control. These beneficial microbes make plants happy, well-fed, quickly growing, and disease-free.

Indeed, vermicompost can prevent diseases, mainly because earthworms excrete a fluid called coelomic fluid. Coelomic fluid serves multiple purposes in worms that are unrelated to microorganisms, mainly moving and storing things around the body. But when combined with other compounds released by earthworms, coelomic fluid has insecticidal and antifungal properties which help suppress insect pests and diseases (Ur Redman et al., 2023). Additionally, by boosting beneficial microbes, vermicompost helps them out-compete less beneficial ones.

Conclusion

Overall, vermicompost provides a whole host of benefits. Whether plants experience biotic or abiotic stress, there’s a chance that vermicompost can fix it. The evidence shows that vermicompost can reduce water stress, salinity (salt) stress, pest and disease stress, and nutrient deficiency. Earthworms fulfill an important role in decomposition, nutrient cycling, soil health and structure, and plant success, and vermicompost harnesses this power to benefit plants and plant-eaters alike. Due to these benefits, vermicompost should be considered a capable and worthwhile method to fertilize plants and improve soil health.

Works Cited

Carr, R. (2019, November 1). Vermicomposting for Beginners. Rodale Institute.

https://rodaleinstitute.org/science/articles/vermicomposting-for-beginners/

Ur Redman, S., De Castro, F., Aprile, A., Benedetti, M., & Fanizzi, F. P. (2023). Vermicompost: Enhancing Plant Growth and Combating Abiotic and Biotic Stress. Agronomy, 13(4), 1134. https://doi.org/10.3390/agronomy13041134

Image: Kyle Spradley | © 2014 - Curators of the University of Missouri

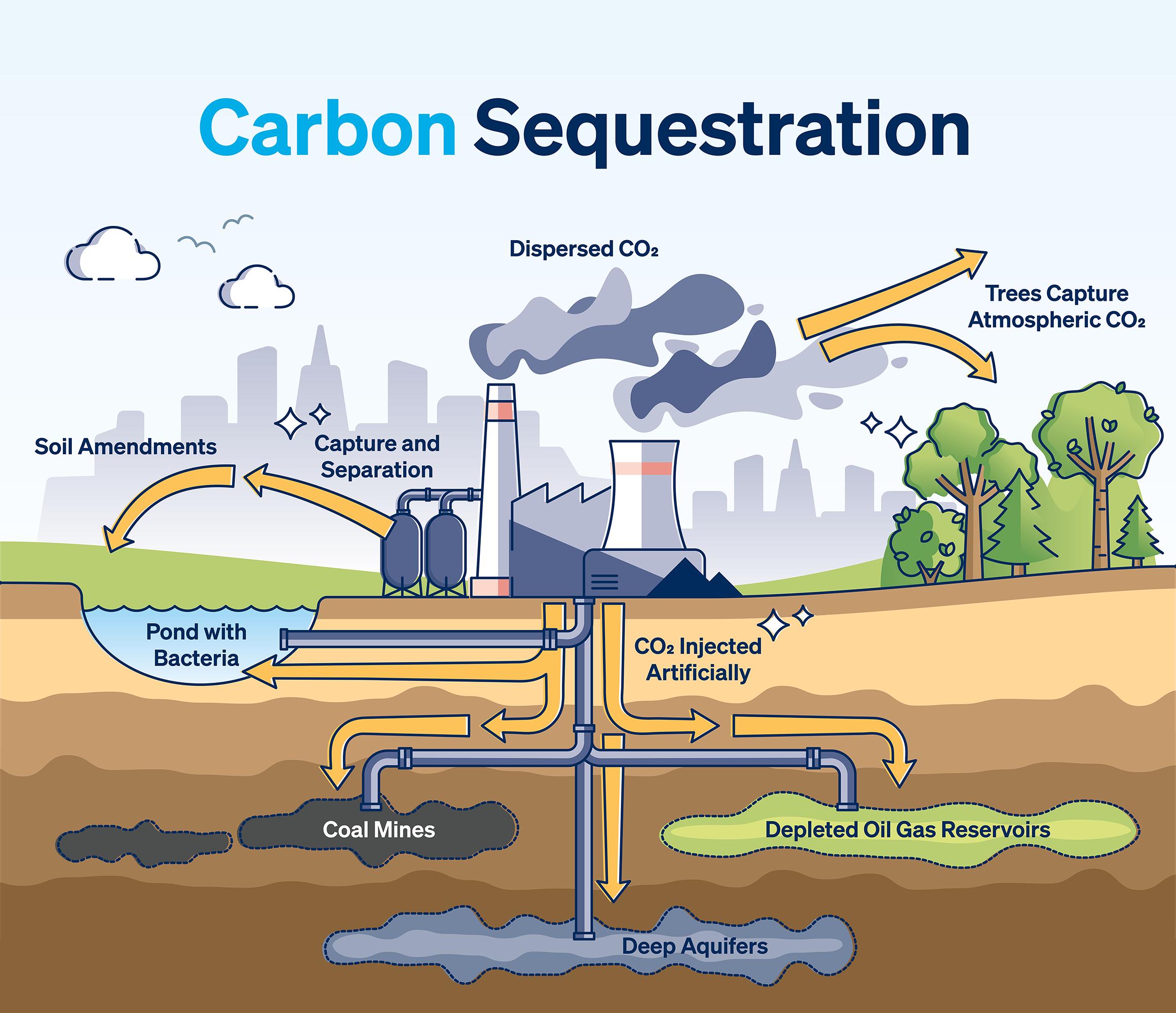

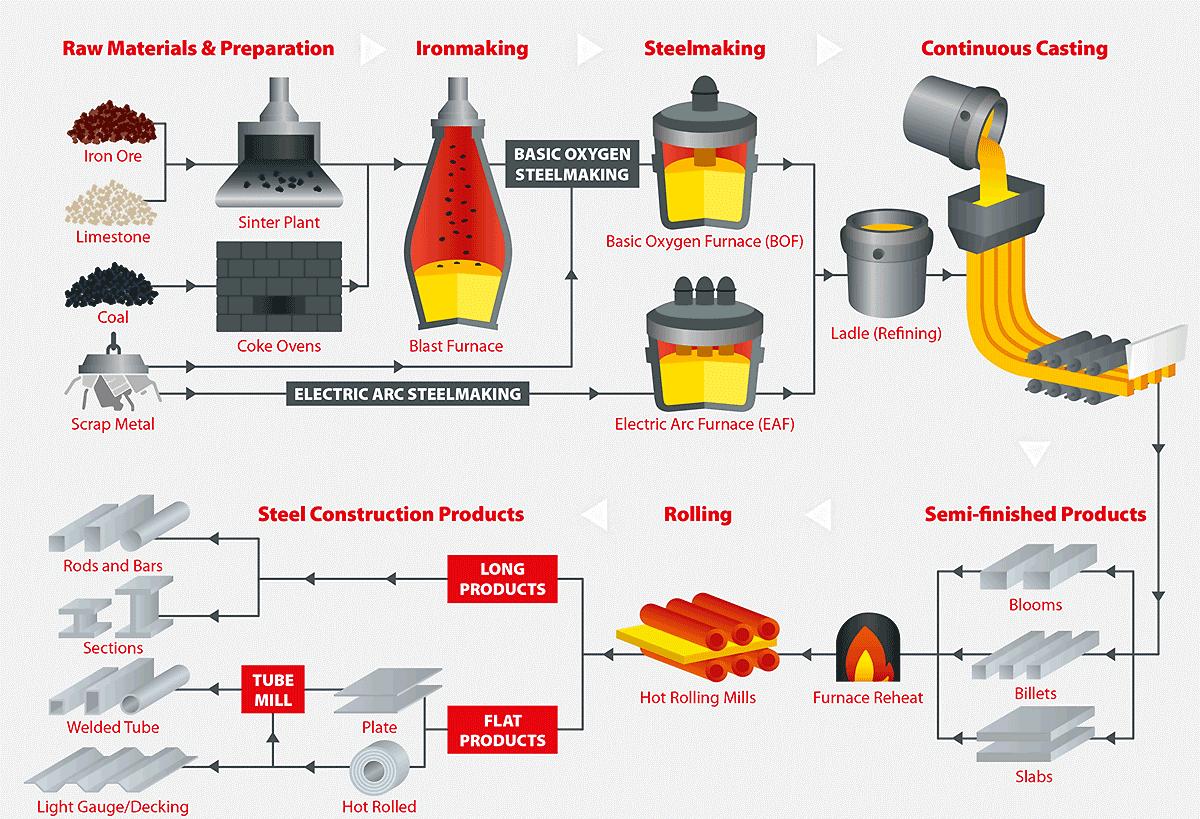

Re-Capturing the Road to Net-Zero Emissions

Written by Daniel Molina Edited by Dima Hamdan and Manasi Simhan

One person can save roughly one tonne of carbon dioxide emissions by converting to vegetarianism for one year. A round trip from Chicago to London emits about the same amount. From reusable straws to shower times to the three R’s (Reduce, Reuse, Recycle), it’s hard to remember a time when sustainability was not at the forefront of young people’s minds; but this individualistic view of climate change and pollution portrays just a small part of the picture. Most greenhouse gases are being emitted from industrial processes like the burning of fossil fuels, not from individual Wisconsinites.

While combating climate change is still the responsibility of every person, being informed about the bigger picture is a part of that responsibility, and understanding how achieving net-zero emissions is possible is necessary for effective activism. An incredible tool for accomplishing such a goal is a technology called carbon capture and storage (CCS). Carbon capture allows the carbon dioxide emitted through combustion to be returned to the earth and stored in a secure way instead of going into the atmosphere.

Carbon capture, more often than not, acts as an add-on to existing industrial plants, absorbing CO2 as it is being emitted. The international think tank Global CCS Institute was formed to investigate and promote the use of carbon capture, which it defines as “technologies that capture the greenhouse gas carbon dioxide (CO2) and store it safely underground, so that it does not contribute to climate change... CCS includes both capturing CO2 from large emission sources (referred to as point-source capture) and also directly from the atmosphere” (Global CCS Institute, 2022). Although encouraging, carbon capture technologies are extremely energy intensive, regardless of how they are implemented. For this reason, carbon capture infrastructure projects must have appropriate renewable energy sources; otherwise, they are just perpetuating the unsustainable behavior they were implemented to curb.

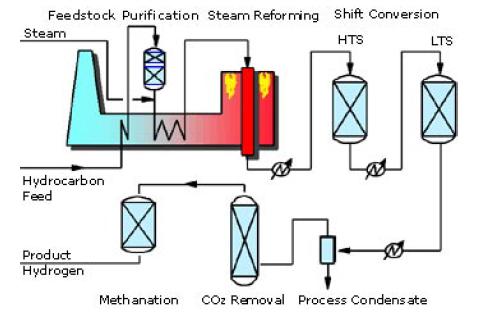

The process can take many forms, and the science is extremely complex, varying from case to case. There are generally three methods of carbon capture: post-combustion, pre-combustion, and ‘oxyfuel combustion’ (Omodolor et al., 2020). Post-combustion capture involves the use of a filter that scrubs through the fumes produced by fossil fuel combustion, ‘filtering’ out the carbon dioxide using special chemical solvents designed to bind to CO2. This method is the most popular, as it can be retrofitted to most existing power plants. Pre-combustion involves the use of a gasifier, a tank that uses high pressure and temperature to convert fuel into a gas, separating carbon dioxide from hydrogen gas. The carbon dioxide is stored, and the hydrogen gas is used as a cleaner fuel to be burned for electricity. Lastly, oxyfuel combustion is a system where fuels are burned in pure oxygen instead of air, which contains a smaller percentage of the gas. This highly energy-intensive and dangerous process ensures that the resulting byproducts are largely made up of carbon dioxide and water vapor. Using basic condensation, the water vapor is separated, and the carbon dioxide is isolated at higher rates than in the previous two methods. Carbon capture technology is crucial to cutting emissions and easing a transition towards renewable energy. The field is constantly changing and growing; these are just the methods already being used.


Carbon dioxide storage is another question entirely. After being captured, the carbon dioxide is compressed into a special type of liquid, allowing it to be stored more effectively. The beauty and genius of this storage system lies in the answer to the following question: where can we store all this carbon dioxide in liquid form? Where is there enough space to securely store the carbon dioxide that has just been collected? Why not in the very same place where the fuel was originally extracted? Depleted oil reservoirs and other deep-lying geological formations are perfect locations, as they are already capable of absorbing large amounts of stored carbon dioxide.

A point to keep in mind is that this technology is not a solution; it is merely a method of abating damages that would otherwise have run rampant. Carbon capture technology should by no means be an excuse to continue investing in non-renewable resources but should instead be viewed as a cushion to allow for a smoother transition to greener and more sustainable forms of energy production. Wisconsin is home to two of the top 100 most polluting power plants in the country: Elm Road Generating Station and Columbia Energy Center. Additionally, the top ten most productive power plants, in terms of electricity generated, produce nearly 90% of emissions but only 63.5% of electricity (Wisconsin Environment Research and Policy Center, 2022). This is not enough. Power plants must either be more efficient with the emissions they are producing or find ways to curtail the pollution they create. Carbon capture technologies provide an avenue through which to accomplish this.

Nevertheless, it is the responsibility of everyone to do their part for the environment by recycling when possible and limiting waste and energy usage, but also to advocate for what they want for the planet. Climate change is never black and white; it requires understanding the nuances of technology and accepting that there is no perfect solution. Carbon capture has been described by many as an anti-solution: a way to justify the burning of excess fossil fuels through this new technology. People want a miracle solution. Carbon capture is a Band-Aid that gives us the wiggle room to pursue alternative solutions like investing in green energy, but eventually, that time will run out without proper decision-making.

Works Cited

CCS explained: Capture. Global CCS Institute. (2022, July 12). https://www.globalccsinstitute.com/ccs-explained-capture/

Omodolor, I. S., Otor, H. O., Andonegui, J. A., Allen, B. J., & Alba-Rubio, A. C. (2020). Dual-function materials for CO2 capture and conversion: A review. Industrial & Engineering Chemistry Research, 59(40), 17612–17631. https://doi.org/10.1021/acs.iecr.0c02218

Wisconsin’s dirtiest power plants. Wisconsin Environment Research & Policy Center. (2022, June 22). https://environmentamerica.org/wisconsin/center/resources/wisconsins-dirtiest-power-plants/

Image: VectorMine/Shutterstock.com

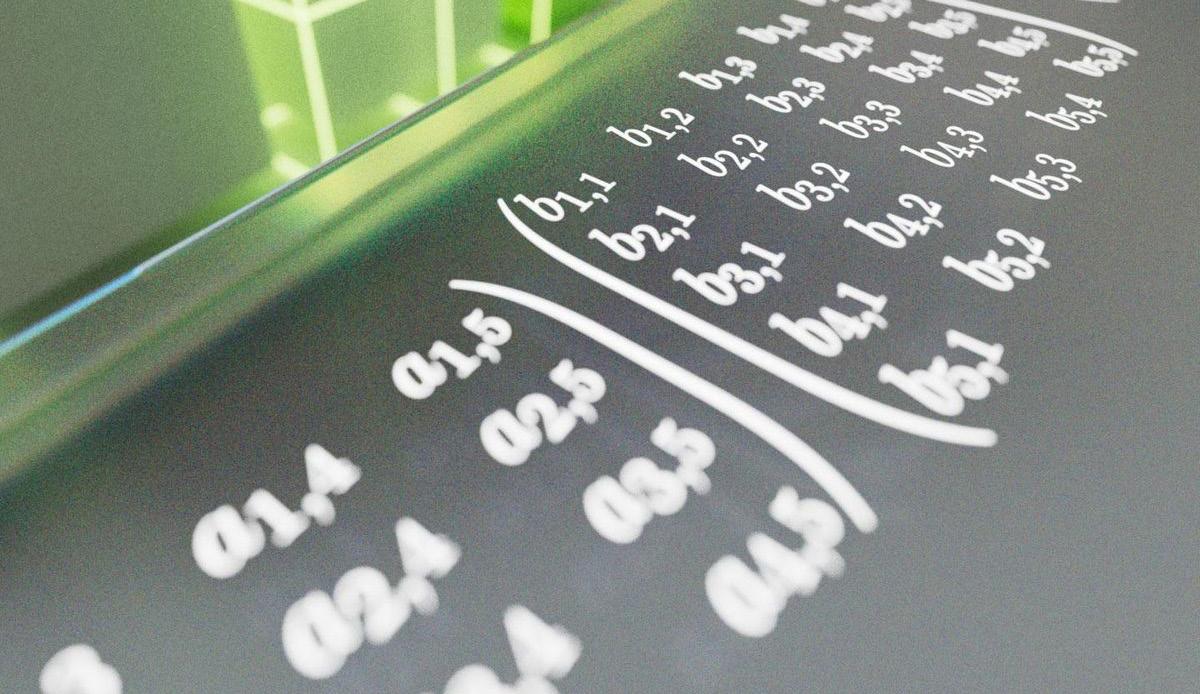

Computers are learning to do math, but can they learn to think along the way: A Look at Computer-Assisted Proofs

Written by Jackson Kunde Edited by Walid Lakdari

Since its nascent days in the minds of 19th-century mathematicians and its earliest implementations breaking codes during World War II, computer science has been intertwined with the study of mathematics. Mathematical logic formed the basis of computing, while computers allowed for fast arithmetic calculations. In short, mathematics advanced computing, and computing advanced mathematics. While these sciences are inextricably linked, it wasn’t until the 1970s that computer science found applications in the abstract branches of mathematics known as “pure” math. Now, artificial intelligence is developing new algorithms and assisting some of the most brilliant pure mathematicians of our lifetime, like Fields Medalist Terence Tao (Tao, 2023).

The First Computer-assisted Proof

The first theorem that relied heavily on computer assistance is the four-color theorem. The problem is as follows: take any map, for example, the map of the United States, and color each state such that no two states with the same color are touching. For example, we may color California and Wisconsin the same color, but not California and Oregon, as they border one another. This idea can be described as a problem in graph theory where each state is a vertex, with an edge between each pair of bordering states. Mathematicians posed the question: Can we color any such map using only four colors?
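For readers who like to tinker, the graph-theory framing can be sketched in a few lines of Python. This is only an illustrative brute-force search on a small, simplified map of seven western states (borders to states outside the list are left out); it is not a proof of the theorem, and the names `BORDERS`, `color_map`, and `is_valid` are made up for this example.

```python
# Simplified map: each state (vertex) lists the states it borders (edges).
# Only borders among these seven states are included.
BORDERS = {
    "WA": ["OR", "ID"],
    "OR": ["WA", "ID", "CA", "NV"],
    "CA": ["OR", "NV", "AZ"],
    "NV": ["OR", "CA", "ID", "UT", "AZ"],
    "ID": ["WA", "OR", "NV", "UT"],
    "UT": ["ID", "NV", "AZ"],
    "AZ": ["CA", "NV", "UT"],
}
COLORS = ["red", "green", "blue", "yellow"]


def color_map(borders, colors):
    """Backtracking search: color one state at a time, undoing dead ends."""
    states = list(borders)
    assignment = {}

    def solve(i):
        if i == len(states):
            return True  # every state has a color
        state = states[i]
        for color in colors:
            # A color is allowed only if no already-colored neighbor uses it.
            if all(assignment.get(n) != color for n in borders[state]):
                assignment[state] = color
                if solve(i + 1):
                    return True
                del assignment[state]  # backtrack and try the next color
        return False

    return assignment if solve(0) else None


def is_valid(borders, assignment):
    """Check that no two bordering states share a color."""
    return all(assignment[s] != assignment[n] for s in borders for n in borders[s])


coloring = color_map(BORDERS, COLORS)
print(coloring)
```

Even this tiny map needs all four colors: Nevada touches a ring of five neighbors, so `color_map(BORDERS, COLORS[:3])` returns `None`, while the four-color search succeeds.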

The problem originated in the 1850s when South African mathematician Francis Guthrie noticed that the counties of England could be colored with only four colors. He speculated that any map could be colored using only four colors (Richeson, 2023). For over a hundred years, no one was able to produce sufficient mathematical proof of Guthrie’s conjecture. When mathematicians Kenneth Appel and Wolfgang Haken at the University of Illinois took on the problem in 1976, they had a new trick up their sleeve: computers. They devised a proof that would give computers the ability to test many different cases to find a solution to the theorem. Over six months and thousands of hours of computing time, Appel and Haken were able to check thousands of configurations exhaustively (Richeson, 2023). The reward for their work: a long sought-after proof of the four-color problem. With this breakthrough, the relationship between pure mathematics and computing was established.

Computers Start Learning

That proof was established nearly 50 years ago. Since then, computer development has exploded: the IBM computer that the Illinois mathematicians relied on is now less powerful than a modern cell phone (Love, 2014). With this improved hardware, scientists have developed methods to teach computers how to learn new tasks, such as games and algorithms. One of Google’s artificial intelligence labs, DeepMind, is a pioneer of an approach to teaching computers known as “reinforcement learning.” Training a computer using a reinforcement learning algorithm is very similar to training your dog to do tricks. Unfortunately, we cannot simply explain to dogs how to roll over; instead, we give dogs treats if they complete a trick correctly or get close to completing the trick. This is how researchers can teach computers complicated tasks: rather than trying to explain and encode a good approach to a game, which we often cannot even verbalize or define, we can give the computer a list of possible “moves” that it could choose from and reward it if it performs well in the game.
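The treat-for-tricks idea can be made concrete with a toy program. The sketch below is a very small, textbook-style "epsilon-greedy" learner, not DeepMind's system: the computer is given a list of moves, each with a hidden chance of earning a reward, and it is never told which move is best. The move names, probabilities, and the `train` function are all invented for illustration.

```python
import random


def train(reward_prob, episodes=5000, epsilon=0.1, seed=0):
    """Learn the average reward of each move purely by trial and reward."""
    rng = random.Random(seed)          # fixed seed so runs are repeatable
    moves = list(reward_prob)
    value = {m: 0.0 for m in moves}    # current estimate of each move's reward
    count = {m: 0 for m in moves}      # how often each move has been tried

    for _ in range(episodes):
        if rng.random() < epsilon:
            move = rng.choice(moves)             # explore: try a random move
        else:
            move = max(moves, key=value.get)     # exploit: best move so far
        # The "treat": 1 if the move paid off this time, otherwise 0.
        reward = 1.0 if rng.random() < reward_prob[move] else 0.0
        count[move] += 1
        # Update the running average reward for the chosen move.
        value[move] += (reward - value[move]) / count[move]
    return value


# Hidden reward chances; the learner only ever sees the treats it receives.
TRICKS = {"sit": 0.2, "shake": 0.5, "roll_over": 0.9}
learned = train(TRICKS)
best = max(learned, key=learned.get)
print(best, learned)
```

Nothing in the code explains how to succeed; the program simply drifts toward whichever move has earned the most treats, which is the core of the analogy.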

Through this approach, DeepMind built artificial intelligence which achieved superhuman performance in a variety of different games. In the Chinese strategy game Go, they developed an artificial intelligence that defeated Lee Sedol, one of the best Go players of all time (DeepMind, n.d.). In chess, their artificial intelligence, AlphaZero, learned to play better than many grandmasters (Silver et al., 2017). Furthermore, this approach is not limited to existing games: DeepMind discovered that they could teach AI new tasks by formulating them as games like chess and Go. It was this approach that allowed DeepMind to teach computers to develop new mathematical algorithms.

DeepMind taught artificial intelligence to generate new, faster algorithms to do matrix multiplication. Matrix multiplication is a mathematical operation that underlies many of the calculations that computers perform regularly. It is used in computer graphics, physics simulations, and even in the algorithms used to train artificial intelligence. Due to matrix multiplication’s ubiquity, developing efficient algorithms for it could improve computing speeds across numerous computer programs.

To accomplish this, DeepMind created a single-player game where each move that the AI could take would correspond to a step in the matrix multiplication algorithm. The reward for the player would be finding fewer steps to successfully multiply the matrix.

The “game” of matrix multiplication is extremely difficult: the number of possible algorithms is far greater than the number of possible games of chess or Go. In spite of the difficulty, though, the computer successfully discovered a variety of new algorithms to multiply matrices without any prior knowledge of previous algorithms. It rediscovered current state-of-the-art algorithms and developed new ones. If simple algorithms would take 100 steps and state-of-the-art approaches could solve the problems in 80 steps, a computer could learn to do it in just 76 (Fawzi et al., 2022). This research demonstrated that computers could advance modern mathematics through learning.
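To see what "fewer steps" means in practice, consider the smallest interesting case. The sketch below compares the schoolbook way of multiplying two 2×2 matrices, which uses 8 multiplications, with Strassen's 1969 algorithm, which uses 7. Strassen's method is a classic human discovery and is shown here only as an illustration of the kind of saving involved; it is not one of the algorithms from the DeepMind work, and the function names are made up for this example.

```python
def naive_2x2(A, B):
    """Schoolbook 2x2 product: 8 multiplications."""
    (a, b), (c, d) = A
    (e, f), (g, h) = B
    product = [[a * e + b * g, a * f + b * h],
               [c * e + d * g, c * f + d * h]]
    return product, 8  # eight '*' operations appear above


def strassen_2x2(A, B):
    """Strassen's 2x2 product: 7 multiplications, at the cost of more additions."""
    (a, b), (c, d) = A
    (e, f), (g, h) = B
    # Each m-term below uses exactly one multiplication.
    m1 = (a + d) * (e + h)
    m2 = (c + d) * e
    m3 = a * (f - h)
    m4 = d * (g - e)
    m5 = (a + b) * h
    m6 = (c - a) * (e + f)
    m7 = (b - d) * (g + h)
    product = [[m1 + m4 - m5 + m7, m3 + m5],
               [m2 + m4, m1 - m2 + m3 + m6]]
    return product, 7


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(naive_2x2(A, B))
print(strassen_2x2(A, B))
```

Both functions return the same product. Saving one multiplication looks minor, but when the trick is applied repeatedly to large matrices split into blocks, the savings compound.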

These findings, although ground-breaking, have various drawbacks. To continue to make strides on any problem in mathematics we will have to formulate a new game with all the necessary rewards and possible moves similar to what researchers at DeepMind did for matrix multiplication. This will mean that each problem would come with its own set of “learned rules” that would have to be input into the computer each time – a process that is difficult, time-consuming, and simply not possible for every single problem out there. Furthermore, the new approaches that AI models might produce are not guaranteed to be understandable to humans. Though we could make use of the findings provided by artificial intelligence, we will not necessarily understand why they work. This is a problem. Mathematical proofs are formed from logical steps and arguments that explicate the validity of a new theorem. Without that support, it would be difficult for future mathematicians to build from the work of AI.

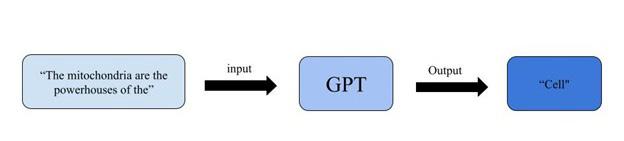

An AI That Can Explain Why

In recent years, a new type of artificial intelligence known as large language models (LLMs) has skyrocketed in popularity. These models have tantalized researchers with the idea that a computer system could not only develop new ideas but also explain the underlying logic that led to them. These models learn from vast amounts of textual data, encompassing sources such as books, articles, websites, and a substantial portion of the internet. During training, the model is presented with input sentences and must predict the next word. OpenAI’s GPT-4, one of the most well-known LLMs, learned from approximately thirteen trillion words (OpenAI, 2023). To put that enormous amount of data into perspective, if someone were to read 300 words per minute, which is around average, it would take them about 82,000 years to read all the words that GPT-4 was trained on.
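The 82,000-year figure can be checked with a few lines of arithmetic. The sketch below assumes the reader never stops (24 hours a day, 365-day years); the function name `reading_years` is made up for this example.

```python
def reading_years(words, words_per_minute):
    """Years of nonstop reading needed to get through `words` words."""
    minutes = words / words_per_minute
    minutes_per_year = 60 * 24 * 365   # assumes reading around the clock
    return minutes / minutes_per_year


years = reading_years(13_000_000_000_000, 300)   # thirteen trillion words
print(f"about {years:,.0f} years")
```

The result is a little over 82,000 years, matching the estimate in the text.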

One of the compelling features of this approach is how much simpler this training process is than the last approach. Instead of creating an entirely new game with actions and rewards, these models can use the same information, textbooks, articles, and blog posts that humans use to learn about different subjects. If the last approach was like training a dog, LLMs are more like training humans.

This approach has yielded some very impressive results: OpenAI’s GPT-4 scored a 5 on the AP Biology exam, scored over 1500 on the SAT, and passed the bar exam (OpenAI, 2023). Beyond this AI’s competitive college application and its status as a licensed attorney, scientists across domains are interested in how intelligent these systems can become. While some scientists are hopeful that these models can be the first artificial general intelligence and surpass human intelligence, many say that LLMs are no more than “stochastic parrots” that simply predict the next word (Bubeck et al., 2023). The ability to do mathematics, a purely logical, yet exceptionally creative science, may be a proxy for the model’s intelligence.


Unlike the standardized tests mentioned earlier, mathematics has proven very difficult for these AI models. Early versions released in 2022 would often fail at middle- and high-school-level mathematics (Frieder et al., 2023). More recent models, like GPT-4, have improved, and early tests have shown that GPT-4 is proficient at basic math while also being able to do some university-level mathematics. However, while GPT-4 can do upper-level problems, this math is far from research-level mathematics, which not only requires significant domain knowledge but also requires clever, original approaches. When GPT-4 has been asked to complete math problems that require a clever solution, such as questions from the International Mathematical Olympiad, it “fails spectacularly” (Frieder et al., 2023). Similarly, GPT-4 fails when asked to answer questions that require advanced approaches and knowledge, such as graduate-level mathematics (Frieder et al., 2023).

One method that has improved LLMs’ mathematical reasoning is “process supervision.” Essentially, if the LLM is the student, process supervision is the teacher asking the student to “show their work.” Researchers train another AI model to evaluate LLM solutions based on the mathematical steps that the LLM took to arrive at its final answers. This process has been shown to lead to more correct answers from models and could have the added benefit of forcing LLMs to explain the rationale behind their solutions, rather than just outputting an answer (Lightman et al., 2023).

Conclusion

In conclusion, as we imagine the development of artificial general intelligence, the symbiotic relationship between mathematics and computer science continues. A computer system that can understand mathematics and meaningfully contribute would not only revolutionize the field but would prove there is a model that can follow logical steps and be creative — in other words, a computer that can think.

Works Cited

Tao, T. (2023, October 24). Embracing change and resetting expectations. Microsoft Unlocked. https://unlocked.microsoft.com/ai-anthology/terence-tao/

OpenAI. (2023, March 27). GPT-4 technical report. arXiv.org. https://arxiv.org/abs/2303.08774

Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J., Horvitz, E., Kamar, E., Lee, P., Lee, Y. T., Li, Y., Lundberg, S., Nori, H., Palangi, H., Ribeiro, M. T., & Zhang, Y. (2023, April 13). Sparks of artificial general intelligence: Early experiments with GPT-4. arXiv.org. https://arxiv.org/abs/2303.12712

Richeson, D. S. (2023, August 8). Only computers can solve this map-coloring problem from the 1800s. Quanta Magazine. https://www.quantamagazine.org/only-computers-can-solve-this-map-coloring-problem-from-the-1800s-20230329/

Toal, S. J. (2017, October 18). Celebrating the four color theorem. College of Liberal Arts & Sciences at Illinois. https://las.illinois.edu/news/2017-10-18/celebrating-four-color-theorem

Overbye, D. (2013, April 28). Kenneth I. Appel, mathematician who harnessed computer power, dies at 80. The New York Times.

Frieder, S., Pinchetti, L., Griffiths, R.-R., Salvatori, T., Lukasiewicz, T., Petersen, P. C., Chevalier, A., & Berner, J. (2023). Mathematical capabilities of ChatGPT. Cornell University.

Love, D. (2014, May 19). The specs on this 1970 IBM mainframe will remind you just how far technology has come. Business Insider. https://www.businessinsider.com/ibm-1970-mainframe-specs-are-ridiculous-today-2014-5

DeepMind. (n.d.). AlphaGo. Google DeepMind. https://deepmind.google/technologies/alphago/

Silver, D., Hubert, T., Schrittwieser, J., Antonoglou, I., Lai, M., Guez, A., Lanctot, M., Sifre, L., Kumaran, D., Graepel, T., Lillicrap, T., Simonyan, K., & Hassabis, D. (2017, December 5).

Image: DeepMind

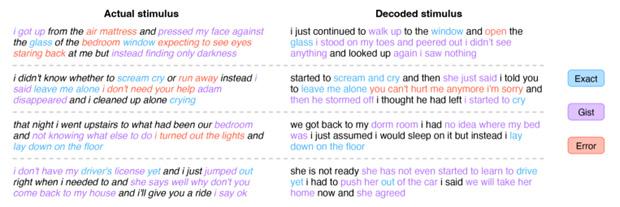

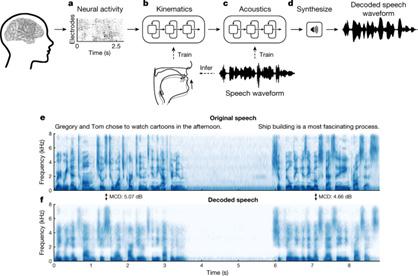

From Thought to Text

From pop culture to science fiction, the art of reading minds has been a tantalizing idea that comes with great power and consequence. Beginning in the 1950s, science fiction saw a boom in stories about extrasensory perception (ESP), mind-reading, and psychic abilities, with media such as Star Trek and The Twilight Zone introducing advanced life forms that use mind-reading to their advantage (Harris, 2015). The ability to capture and interpret an individual’s thoughts has long been imagined as holding unparalleled power, and just this past May, artificial intelligence (AI) achieved a milestone that brings us closer than ever to attaining telepathy.

Breakthroughs in Translated Brain Activity

At the University of Texas at Austin, a study led by computer scientists Alex Huth and Jerry Tang set out to translate brain activity into a continuous flow of text. Their procedure is non-invasive, relying on functional magnetic resonance imaging (fMRI) and large language models (LLMs). Invasive language decoding systems, by contrast, typically require the implantation of electrodes or neural recording devices into brain regions specializing in speech and language processing. In both cases, the systems observe neural activity and decode patterns of brain signals in an effort to decipher spoken or intended language. By linking the brain’s language-related neural activity to the generation of intelligible text or speech, they offer a promising avenue for individuals with communication impairments, who may one day gain a chance to communicate, and could lead to more efficient and natural means of expression.

A separate study at UC San Francisco and UC Berkeley, headed by Edward Chang, illustrated the improvement of invasive language decoding systems, displaying their potential to decode thoughts from neural signals (Garner, 2022). In the UT Austin study, the three participants listened to 16 hours of podcasts, from The Moth to Modern Love, while an fMRI scanner measured their brain activity (Osborne, 2023). Notably, the individuals had to consent to the decoding and cooperate with it, opening their minds and thinking solely about each stimulus, in order for the machine to read their thoughts and create corresponding text while they listened.

Comparison of Invasive and Noninvasive Language Decoding Systems

While recording brain activity, the model produced the general gist of the participants’ thoughts or speech. This was a breakthrough for non-invasive methods, since similar techniques could previously decipher only single words and short phrases. Over the last ten years, decoders have permitted unconscious individuals to answer “yes or no” questions and have identified which item from a list an individual is hearing. The accomplishment overcomes a significant limitation of fMRI: while the method can map brain activity to a particular location with remarkably high resolution, there is an inherent delay that makes it impossible to track thoughts in real time. The delay exists because fMRI measures the blood flow response to a thought, which rises and returns to baseline over about ten seconds, a lag that even the most powerful scanner cannot improve (Parshall, 2023). This inherent limitation has impeded the capacity to decipher brain activity in response to natural speech, yielding a scattered “mishmash of information” spread across a few seconds. However, the emergence of large language models, AI systems such as OpenAI’s ChatGPT, allowed for an alternative path (Airhart, 2023). These models can numerically represent the semantic meaning of speech, permitting the researchers to see which patterns of neuronal activity corresponded to strings of words with a specific meaning, as opposed to trying to read out activity word by word.
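A rough sketch can show what it means to match meaning rather than individual words. The example below is a heavy simplification and not the study’s actual pipeline: the three-dimensional word vectors are invented for illustration, and the “recorded” brain pattern is assumed to live in the same space as those vectors, whereas a real decoder would need a model fitted to each participant to relate language features to fMRI responses.

```python
import math

# ASSUMPTION: tiny hand-made 3-D "semantic" vectors. Real language models
# learn vectors with hundreds or thousands of dimensions from text.
WORD_VECTORS = {
    "dog": [0.9, 0.1, 0.0],
    "puppy": [0.8, 0.2, 0.0],
    "barked": [0.7, 0.0, 0.3],
    "yelped": [0.6, 0.1, 0.3],
    "stock": [0.0, 0.9, 0.1],
    "market": [0.0, 0.8, 0.2],
    "fell": [0.1, 0.6, 0.5],
}

def phrase_vector(phrase):
    """Represent a phrase as the average of its word vectors."""
    words = phrase.split()
    dims = len(WORD_VECTORS[words[0]])
    return [sum(WORD_VECTORS[w][d] for w in words) / len(words) for d in range(dims)]

def cosine(u, v):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def decode(recorded_pattern, candidates):
    """Pick the candidate phrase whose meaning best matches the recorded pattern."""
    return max(candidates, key=lambda p: cosine(phrase_vector(p), recorded_pattern))

# Hypothetical scenario: the pattern was evoked by hearing "dog barked".
recorded = phrase_vector("dog barked")
best = decode(recorded, ["puppy yelped", "stock market fell"])
print(best)
```

Neither candidate shares a word with the original phrase, yet the one closest in meaning wins, which mirrors how the decoder recovers the gist of a thought rather than an exact transcript.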

Dr. Huth has characterized this achievement as a significant improvement, noting that the team has successfully enabled the model to comprehend and interpret complex ideas in continuous language over extended durations (Zeisloft, 2023). The decoder has an accuracy rate of 72 to 82 percent, far higher than previous attempts to translate thoughts into text (Parshall, 2023). Although this is a great victory for semantic decoders, much is still lost in the process, such as grammatical nuances like pronouns. Proper nouns often fall by the wayside, as places and names are misheard if not reproduced entirely incorrectly. These remaining challenges indicate that further improvements are necessary to advance this groundbreaking technology.

Ethical Considerations and Consequences

The persisting concerns about AI technology echo historical apprehensions about the potential of mind-reading. Critics worry that this technological advancement could function similarly to a polygraph exam, introducing the risk of criminalizing individuals based on the contents of their mental processes. Neuroethicists are divided over how to evaluate recent advancements and their impact on cognitive privacy. Notably, Gabriel Lázaro-Muñoz, a bioethicist at Harvard Medical School in Boston, underscores the importance of heightened vigilance, emphasizing that intricate, non-invasive technologies like this one appear to be advancing more rapidly than previously anticipated (Malleck, 2023). This development serves as a powerful call to policymakers and the broader public to carefully consider the ethical and societal implications.

“The persisting concerns about AI technology echo historical apprehensions about the potential of mind-reading.”

Nevertheless, some experts posit that the technological complexity and high margin of error of this approach render it an unlikely tool for malevolent purposes. They point to the immobility of fMRI scanners, which makes it challenging to access an individual’s thoughts without their willing cooperation. Furthermore, they question the viability of investing considerable time and resources in developing decoders for purposes beyond the restoration of communication abilities (Reardon, 2023). Experts state that, at the current moment, the technology does not warrant excessive apprehension, as it is not yet powerful enough to analyze thoughts and brain processes. Even so, the anticipated evolution of this technology will necessitate policy formulation and the implementation of safeguards to ensure its responsible and equitable use.

Future Applications

Proactive measures were integrated into this study to anticipate damaging consequences. Tang emphasized that the team at the University of Texas at Austin takes concerns about potential misuse seriously. Their primary purpose is to ensure the responsible and intended use of these technologies while promoting their advantageous applications. Notably, the decoder must be customized to each individual: when a decoder trained on one person was tested on different individuals, it produced disjointed and incoherent output. Moreover, participants retained the ability to influence the machine’s output by contemplating alternative ideas, such as visualizing animals or engaging in imaginative diversions. These findings underscore the researchers’ emphasis on the responsible use and ethical limitations of the new technology.

Tang emphasized that the current capabilities do not facilitate malicious purposes, but the team remains dedicated to establishing robust policies to prevent potential misuse before it becomes a significant concern (Airhart, 2023). Although substantial ramifications are possible, the technology may revolutionize communication. The most significant outcome of these studies would be improved communication for those who are mentally conscious but unable to speak. Patients with physical disabilities caused by strokes or other ailments may be able to communicate using technology developed from these models (Airhart, 2023). The key will be balancing scientific innovation with proper safeguards to ensure a more accessible future.

Works Cited

Airhart, M. (2023). Brain Activity Decoder Can Reveal Stories in People’s Minds. College of Natural Sciences.

Chartier, J., Anumanchipalli, G. K., Johnson, K., & Chang, E. F. (2018). Encoding of articulatory kinematic trajectories in human speech sensorimotor cortex. Neuron, 98(5), 1042-1054.e4. doi:10.1016/j.neuron.2018.04.031

Cottier, C. (2023). Can AI Read Your Mind? Discover Magazine.

Devlin, H. (2023). AI Makes Non-Invasive Mind-Reading Possible by Turning Thoughts into Text. The Guardian, Guardian News and Media.

Garner, I. (2022). No Longer at a Loss for Words.

Hamilton, J. (2023). A Decoder That Uses Brain Scans to Know What You Mean - Mostly.

Harris, J. (2015). Has Telepathy Become an Extinct Idea in Science Fiction? Auxiliary Memory.

Malleck, J. (2023). Mind-Reading Tech at UT Austin Raises Ethical Questions. GovTech.

Metzger, S. L., Liu, J. R., Moses, D. A., Dougherty, M. E., Seaton, M. P., Littlejohn, K. T., … Chang, E. F. (2022). Generalizable spelling using a speech neuroprosthesis in an individual with severe limb and vocal paralysis. Nature Communications, 13(1). doi:10.1038/s41467-022-33611-3

Parshall, A. (2023). A Brain Scanner Combined with an AI Language Model Can Provide a Glimpse into Your Thoughts. Scientific American.

Reardon, S. (2023). Mind-reading machines are here: Is it time to worry? Nature, 617(7960), 236. doi:10.1038/d41586-023-01486-z

Samuel, S. (2023). Mind-Reading Technology Has Arrived. Vox.

Tang, J. (2023). Semantic Reconstruction of Continuous Language from Non-Invasive Brain Recordings. Nature News. Nature Publishing Group.

Whang, O. (2023). A.I. Is Getting Better at Mind-Reading. The New York Times.

Zeisloft, B. (2023, May 1). Scientists Unveil AI That Can Turn Thoughts Into Written Text. The Daily Wire. https://www.dailywire.com/news/scientists-unveil-ai-that-can-turn-thoughts-into-written-text

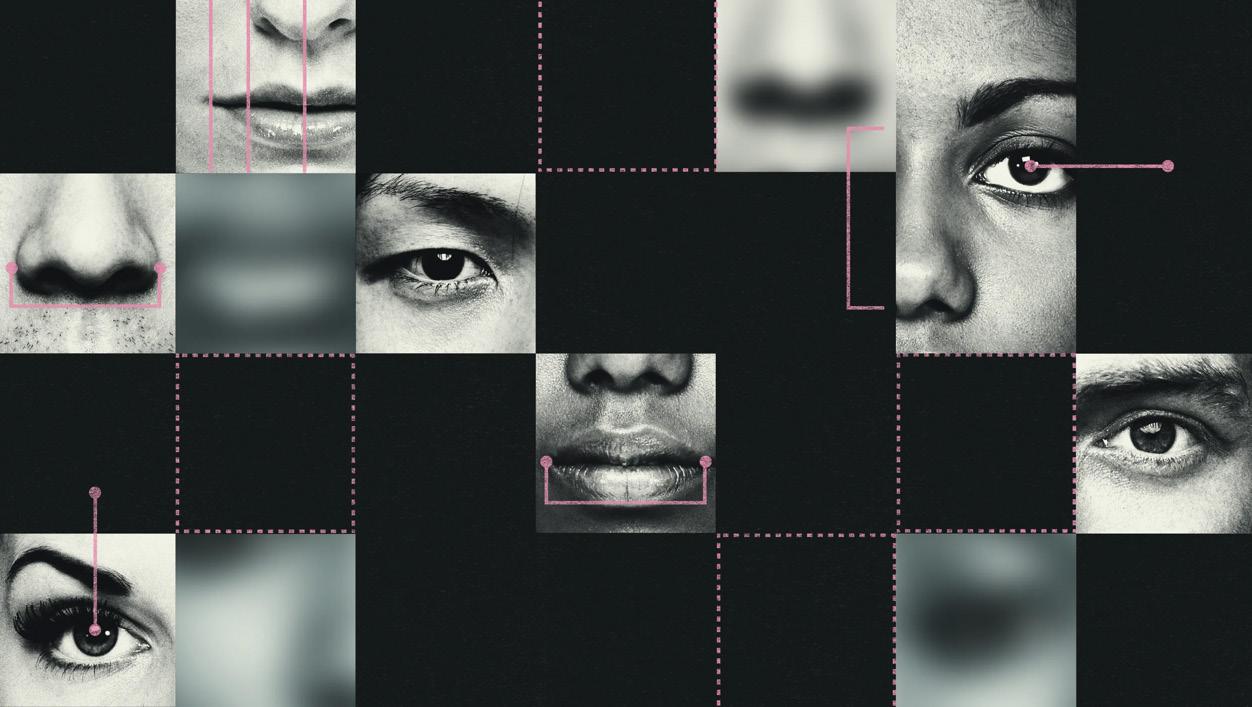

Attractiveness AI: Can Artificial Intelligence Really Rate Your Looks?

Written by Alexandra Wong Edited by LeAnn Aschkar

The phrase “beauty is in the eye of the beholder” has often been used to express the differing opinions individuals have about physical attractiveness, as well as the difficulty we have in pinpointing which features make someone beautiful. Researchers have therefore sought to determine whether AI can provide more clarity by using quantifiable metrics to create a modern formula for beauty.

Although AI platforms aimed towards judging the attractiveness of everyday individuals are already on the market, the true potential for influence lies with platforms such as Instagram and TikTok. TikTok, which has already been accused of manually suppressing “uploads by unattractive, poor, or otherwise undesirable users” to boost retention, would have the potential to automate current human moderation through AI (Biddle et al., 2020).

When it comes to selecting which metrics predictive machine learning models should focus on, research on evolutionary preferences for fitter features reveals certain facial characteristics that are indicative of good genes and reproductive health (Little et al., 2011). Several indicators include averageness, facial symmetry, and sexually dimorphic traits.

Averageness

In one study, researchers superimposed a series of individual facial photos to create an “average” face from the dataset. Afterward, subjects were asked to compare and rank the attractiveness of the original and composite faces. The study found that only 4 of the 96 original faces were rated as significantly more attractive than the averaged faces, while 75 of the 96 were rated significantly less attractive (Langlois et al., 1989). Simply put, humans seem to prefer features that lie near the population average.
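Superimposing photos amounts to averaging them pixel by pixel. The sketch below shows that operation on two tiny made-up grayscale “images”; it assumes the photos are the same size and already aligned, which real studies have to arrange before blending, and the function name and pixel values are purely illustrative.

```python
def average_face(images):
    """Pixel-wise mean of equally sized grayscale images (lists of rows)."""
    height, width = len(images[0]), len(images[0][0])
    return [
        [sum(img[r][c] for img in images) / len(images) for c in range(width)]
        for r in range(height)
    ]

# Two hypothetical 2x2 "faces" with brightness values from 0 to 255.
face_a = [[0, 100], [200, 50]]
face_b = [[100, 100], [0, 150]]

composite = average_face([face_a, face_b])
print(composite)  # [[50.0, 100.0], [100.0, 100.0]]
```

Extreme values in either photo are pulled toward the middle, which is why a composite built from many faces ends up with features close to the population average.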

Facial Symmetry

Though research on the importance of facial symmetry in determining beauty has produced differing results, a study in which Japanese subjects rated photos of Japanese men and women, using a range of blended mirrored faces, found that manipulated photos with perfect symmetry had a higher mean attractiveness score than those with normal symmetry (Rhodes et al., 2000). On average, the perfectly symmetric faces had a score of 4.2/10 for attractiveness, whereas the normal faces scored 4/10. This study supports the theory that higher facial symmetry is associated with beauty.
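One plausible way to produce a perfectly symmetric face is to blend a photo with its own mirror image. The sketch below does this on a tiny invented grayscale grid and adds a simple asymmetry measure; it is an assumption-laden illustration of the idea, not the procedure used in the study, and all names and values are hypothetical.

```python
def mirror(image):
    """Flip a grayscale image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]

def symmetrize(image):
    """Blend an image 50/50 with its mirror to get a perfectly symmetric version."""
    return [
        [(a + b) / 2 for a, b in zip(row, flipped_row)]
        for row, flipped_row in zip(image, mirror(image))
    ]

def asymmetry(image):
    """Mean absolute difference between an image and its mirror (0 = symmetric)."""
    diffs = [
        abs(a - b)
        for row, flipped_row in zip(image, mirror(image))
        for a, b in zip(row, flipped_row)
    ]
    return sum(diffs) / len(diffs)

# A hypothetical 2x4 "face" whose left and right halves differ.
face = [[10, 20, 30, 60], [0, 50, 70, 40]]
blended = symmetrize(face)
print(asymmetry(face), asymmetry(blended))  # 30.0 0.0
```

Blending at intermediate ratios instead of 50/50 would give a range of faces between normal and perfect symmetry.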

Sexually Dimorphic Traits

The biological preference for sexually dimorphic features may have provided evolutionary advantages as indicators of health during reproduction (Kleisner et al., 2021). Sexual dimorphism, or the development of physically different masculine and feminine traits, is closely tied to levels of testosterone and estrogen. Testosterone is found at higher levels in men, whereas estrogen is found at higher levels in women. Both hormones play important roles in sexual development and the maintenance of overall health. Consistently across various cultures, feminine features in women are rated more highly in terms of attractiveness. In contrast, for men, while traditionally masculine traits such as a strong jaw and brow ridge are generally well-regarded, conflicting research exists regarding the attractiveness of feminine features in men. Thus, the link between feminine characteristics and attractiveness in women seems to be stronger than the link between exclusively masculine characteristics and attractiveness in men. It may be worth noting the influence of current beauty trends and their role in affecting our tastes when it comes to judging attractiveness.

The Role of AI in Making Sense of Biological Preferences

Using datasets of ranked faces, computer vision artificial intelligence, and evolutionary biological preferences, researchers have been able to train models which predict the attractiveness rankings of individuals.

In a broad definition of the term, artificial intelligence focuses on matching or exceeding human capabilities. Rather than being explicitly programmed to produce a particular result, AI is “trained” on example data to form predictions. Machine learning, a sub-field of AI, relies on either supervised or unsupervised learning. Supervised learning models rely on categorized and labeled data, such as specific facial measurements for different features on a face, whereas unsupervised learning identifies patterns within unlabeled data to develop groupings. Deep learning neural networks, which mimic the structure of learning in human brains, process data through successive layers and are often used in supervised learning.

Published in 2021, a machine learning model created to research the accuracy of Attractiveness AI used deep learning, building neural networks to compare datasets of images against one another (Iyer et al., 2021). Researchers focused on extracting specific facial features from the dataset using Facial Landmark Localization, a computer vision tool that detects and measures the positions of features relative to the position of the eyes. The collected ratios were then separated into descriptive categories and used alongside human ratings to help predict attractiveness. Other aspects researchers considered were facial colors, shapes, and textures. Using a GLCM (Gray-Level Co-occurrence Matrix), comparisons could be drawn between one pixel and its neighbors, forming a facial map that determined a value for the texture of the skin. An additional study was also conducted to find the facial tones that were rated most highly when associated with different pinpointed facial landmarks.
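To show how comparing each pixel with its neighbor can yield a texture value, here is a small sketch of a gray-level co-occurrence matrix and one common statistic derived from it, contrast. The image patches, the choice of a right-hand neighbor, and the four gray levels are assumptions made for illustration; the study’s actual settings are not described here.

```python
def glcm(image, levels, dx=1, dy=0):
    """Count how often gray level i has gray level j as its (dx, dy) neighbor."""
    matrix = [[0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for r in range(height):
        for c in range(width):
            nr, nc = r + dy, c + dx
            if 0 <= nr < height and 0 <= nc < width:
                matrix[image[r][c]][image[nr][nc]] += 1
    return matrix

def contrast(matrix):
    """Average squared gray-level difference between neighboring pixels."""
    total = sum(sum(row) for row in matrix)
    weighted = sum(
        count * (i - j) ** 2
        for i, row in enumerate(matrix)
        for j, count in enumerate(row)
    )
    return weighted / total

# Hypothetical 3x3 patches with gray levels 0-3: one even, one blotchy.
smooth_patch = [[1, 1, 1], [1, 1, 1], [2, 2, 2]]
rough_patch = [[0, 3, 0], [3, 0, 3], [0, 3, 0]]

smooth_contrast = contrast(glcm(smooth_patch, levels=4))
rough_contrast = contrast(glcm(rough_patch, levels=4))
print(smooth_contrast, rough_contrast)  # 0.0 9.0
```

A patch whose neighboring pixels match scores zero, while one that alternates between dark and light scores high, so a single number can summarize how even a region of skin appears.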

Four regression models, which are statistical methods used to predict results for new data, were used, with their predicted ratings compared against the manual human ratings to determine each model’s accuracy. K-Nearest Neighbors (KNN) modeling showed the highest correlation with human raters. KNN assumes that points located near each other are similar, making predictions based on the distance between the testing data and the training data. Another frequently used technique, although it did not show the highest correlation in this study, is the Artificial Neural Network (ANN), which has been frequently cited in research on beauty-related AI for its ability to mimic human learning patterns through connected “neural” layers.
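The nearest-neighbor idea can be sketched in a few lines: to rate a new face, find the most similar faces that humans have already rated and average their scores. The feature values, ratings, and choice of k below are invented for illustration and are not taken from the study.

```python
import math

def knn_predict(train_features, train_ratings, query, k=3):
    """Predict a rating as the mean rating of the k closest training faces."""
    by_distance = sorted(
        (math.dist(features, query), rating)
        for features, rating in zip(train_features, train_ratings)
    )
    nearest = by_distance[:k]
    return sum(rating for _, rating in nearest) / k

# ASSUMPTION: each face is summarized by two hypothetical facial ratios,
# and each has a human attractiveness rating on a 1-10 scale.
train_features = [[1.0, 0.5], [1.1, 0.5], [0.9, 0.6], [2.0, 1.5], [2.1, 1.4]]
train_ratings = [6.0, 7.0, 5.0, 2.0, 3.0]

predicted = knn_predict(train_features, train_ratings, query=[1.0, 0.55], k=3)
print(predicted)
```

The new face sits among the first three training faces, so its predicted rating is the mean of their scores. Comparing such predictions with human ratings on held-out faces is how a model’s correlation with human raters would be measured.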

Bias in Data Collection and Attractiveness AI Output

Though research has shown that to some extent attractiveness can be analyzed through facial “rules”, cultural beauty standards, racial bias, and limitations of current technology raise crucial considerations for the future developments of attractiveness AI.

When rating “beauty”, the biases of human subjects are transferred into the training data of AI systems, potentially resulting in a degree of human-like bias in AI results. The bias that human testers and researchers may have, as a result of racial biases and the facial features they are exposed to, may be further compounded by the lack of representation in certain studies. The limited racial diversity in facial data may be partially attributed to the limited data sources researchers can pull from. Although Yahoo and ImageNet store 100 million and 14 million images, respectively, the majority of the data is rendered unusable due to the compositional quality of the photos. Because of the limited data accessible to researchers, trained models may not be fed enough diverse faces to accurately match human ratings across different races. Another issue in data collection is the limited preset range of shades most cameras can capture, resulting in darker shades being incorrectly represented in the data that computer vision models are trained on.

“...cultural beauty standards, racial bias, and limitations of current technology raise crucial considerations for the future developments of attractiveness AI.”

The Future of Attractiveness AI

Beauty AI ventures have already sprung up, with platforms such as Face++, which is valued at $4 billion for its facial detection and beauty scoring services, and Qoves, a facial analysis report service that charges a fee in the $150-$300 price range. Although practical commercial applications of Beauty AI are still in their beginning stages, further research utilizing different machine learning and visualization tools will surely redefine and expand upon what we currently know in the field.

Moreover, visual data is expected to play a larger role in the future of AI, with the steady increase of photos taken worldwide likely to address some of the current diversity and quality shortcomings in facial data. Along with developments in data collection, the IBM Global AI Adoption Index found in 2022 that 35% of companies were already using some form of AI in their businesses, with an additional 42% exploring the use of AI (IBM Global AI Adoption Index, 2022). Thus, we can expect the accuracy and complexity of facial assessment tools to respond to the growing market in the coming years.

Works Cited

Biddle, Sam, et al. “Invisible Censorship.” The Intercept, 16 Mar. 2020, theintercept.com/2020/03/16/tiktok-app-moderators-users-discrimination/.

Binar, Kurina. “Trend and Visualization of Artificial Intelligence Research in the Last 10 Years.” Research Gate, May 2023, www.researchgate.net/figure/Scheme-of-AI-DL-MLand-ANN_fig1_371205057.

Buolamwini, Joy. “Artificial Intelligence Has a Racial and Gender Bias Problem.” Time, 7 Feb. 2019, time.com/5520558/artificial-intelligence-racial-gender-bias/.

File:Average of two faces 2.jpg. Wikipedia, https://en.m.wikipedia.org/wiki/File:Average_of_two_faces_2.jpg.

IBM Global AI Adoption Index 2022, May 2022, www.ibm.com/downloads/cas/GVAGA3JP.

Iyer, Tharun J., et al. Machine Learning-Based Facial Beauty Prediction and Analysis of Frontal Facial Images Using Facial Landmarks and Traditional Image Descriptors, National Library of Medicine, 25 Aug. 2021, www.ncbi.nlm.nih.gov/pmc/articles/PMC8413070/.

Kagian, Amit, et al. A Machine Learning Predictor of Facial Attractiveness Revealing Human-like Psychophysical Biases, 1 Feb. 2008, www.sciencedirect.com/science/article/pii/S0042698907005032.

Kleisner, Karel, et al. “How and Why Patterns of Sexual Dimorphism in Human Faces Vary across the World.” Scientific Reports, U.S. National Library of Medicine, 16 Mar. 2021, www.ncbi.nlm.nih.gov/pmc/articles/PMC7966798/.

Little, Anthony C, et al. “Facial Attractiveness: Evolutionary Based Research.” Philosophical Transactions of the Royal Society of London. Series B, Biological Sciences, U.S. National Library of Medicine, 12 June 2011, www.ncbi.nlm.nih.gov/pmc/articles/PMC3130383/#RSTB20100404C2.

Moridani, Mohammad Karimi, et al. Human-like Evaluation by Facial Attractiveness Intelligent Machine, June 2023, www.sciencedirect.com/science/article/pii/S2666307423000165.

Rhodes, Gillian, et al. “Attractiveness of Facial Averageness and Symmetry in Non-Western Cultures: In Search of Biologically Based Standards of Beauty.” Sage Journals, 15 Aug. 2000, journals.sagepub.com/doi/epdf/10.1068/p3123.

Ryan-Mosley, Tate. “I Asked an AI to Tell Me How Beautiful I Am.” MIT Technology Review, 5 Mar. 2021, www.technologyreview.com/2021/03/05/1020133/ai-algorithm-rate-beauty-score-attractive-face/.

Image: MIT Technological Review Commons

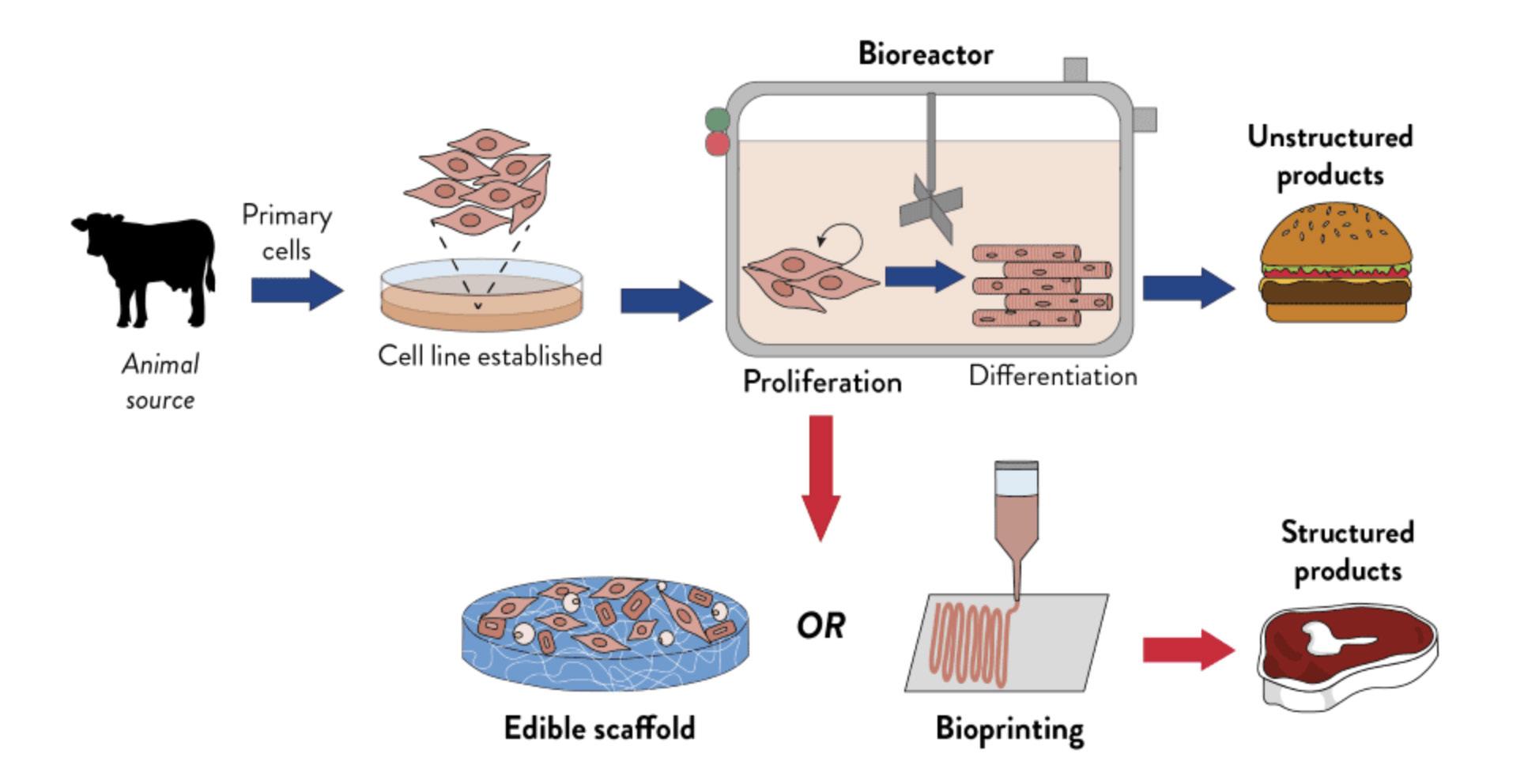

Plants, Cells, and Bugs: The Good, the Bad, and the Ugly of Making Meat Sustainable

Written by Zane Brinnington Edited by Dima Hamdan and Mackenzie Twomey

If you’re a food consumer in America, there’s little doubt that you’re aware of the numerous alternatives to meat that have emerged in recent years. Whether it be the Burger King Impossible Whopper, Beyond KFC, Pizza Hut Beyond, or the Impossible and Black Bean Burgers sold in the University of Wisconsin’s student unions, nearly every big restaurant has boasted some sort of otherworldly-sounding alternative meat. However, there’s little incentive for anyone who isn’t vegetarian or otherwise restricted in their diet to select any of these over tried-and-true real meat. At least, there hasn’t been until now.

The ethics of consuming other living beings is no longer the only moral quandary carnivores have to come to terms with; as the years go on, the meat industry as it exists today is growing less sustainable for the planet. Livestock farming is responsible for about 12-18% of total greenhouse gas emissions, over half as much as the entire transportation industry, mostly due to the natural methane production of cattle (GFI, 2023). One third of habitable land is used to farm animals, mostly as grazing land for cows, contributing to growing deforestation rates and carbon emissions (Direct, 2012).

With the world population expected to increase by roughly 1.5 billion in the next 30 years (United Nations, 2019), the meat industry would surely have to grow to match, meaning that the risk to the Earth would grow as well. Recognition of this has led to the proposal and development of several alternatives that aim to fill the gustatory, nutritional, and cultural void that the loss of meat would leave in the general population. The question, then, is which alternatives currently lead the pack, and how well they balance sustainability and substitution.

Plant-Based Meats (Impossible and Beyond)

Probably the least unnerving alternatives to meat are the traditional “veggie burgers” that mimic animal flesh using vegetables and legumes already well-established in the American diet. The two titans of today’s mass-produced plant-based meats are Impossible and Beyond; both companies aim to recreate ground beef with plants, though by notably different means.

Beyond Meat combines peas, mung/fava beans, and rice with cocoa butter and coconut oil to mimic beef’s essential structural components (protein and fat), along with potato starch and methylcellulose for texture and beet juice to replicate the red color of raw beef. Minerals like calcium, iron, salt, and potassium chloride are added as well, to replicate both beef’s taste and its nutritional value (Beyond, 2023). Impossible meat, on the other hand, is primarily constructed from soy and potato proteins, with its fat and texture coming from coconut oil and sunflower oil. Its approach to replicating meat flavor is vastly different from Beyond’s, as it is based not on combining the flavors of multiple vegetables but on the presence of a single molecule: heme. Heme is ubiquitous in plants and animals because it is essential for oxygen transport (Gruss et al., 2012). It also undergoes a multitude of chemical reactions when cooked to produce a slew of flavors, which Impossible claims are the ones that consumers of meat know and love.

While heme naturally exists in high enough concentrations in beef to produce these flavors, the heme found in Impossible meat is produced using DNA from soy roots. This DNA is inserted into genetically engineered yeast, which is fermented; the resulting heme is then extracted and added to the raw Impossible product (Impossible, 2022).

But how do these compare to beef, and what are the environmental effects of their production? It’s difficult to objectively compare the way the two products taste and how well they functionally resemble meat. It is easier, however, to compare the nutritional properties of each with real meat, and the result is that they are remarkably similar. The plant burgers have only 30-40 fewer calories per patty than a normal beef patty, and all three contain 19-20 grams of protein, 6-8 grams of saturated fat, and 370-390 mg of sodium. The biggest differences are in total fat and carbohydrate content, which range from 14 g to 23 g and from 0 g to 9 g, respectively, across the plant and beef burgers (Nutritionix, 2019).

Considering the environmental impacts, there is no doubt that the plant-based meats are more sustainable. While the precise numbers vary between Impossible, Beyond, and the numerous additional brands of plant-based meats, in general, producing a given mass of plant-based beef instead of normal beef requires 96% less land, produces 89% lower greenhouse gas emissions (kg-CO2eq/kg), reduces water use by 87-99%, and even reduces the risk of aquatic pollution by 51-91% (GFI, 2023). While some sacrifices in taste are to be expected with plant-based burgers, their reduction of the environmental consequences of beef farming is remarkable.

Lab-Grown

When it comes to replicating the taste, texture, and mouthfeel of meat, cultured meat is easily the best alternative, as it is biologically identical to real animal flesh. The only difference is where it comes from – not from an animal, vegetable, or legume, but a laboratory.

Technically, the process does begin with an animal. Whether it be from a cow, pig, or chicken, muscle tissue is extracted and is used as the source for stem cell derivation (Post, 2012). Stem cells are cells in their early stages, before they have differentiated into specific cells (liver cells, skin cells, brain cells, etc.), a process triggered by their environment.

These cells are placed into a bioreactor, which contains media designed to mimic the conditions of the animal body, complete with the oxygen, amino acids, vitamins, sugars, and other nutrients essential for their growth (GFI, 2023). This enables the stem cells to proliferate, or replicate, until their concentration has increased by thousands. From there, the conditions are altered so that the stem cells differentiate into the three essential components of meat: muscle, fat, and connective tissue.

These cells may be combined together with little order to replicate the ground meat that comprises hamburger, or can be more intricately structured with 3D-printer-like machinery that portions out the components and organizes them into more recognizable shapes, like steak or bacon (Post, 2012), or meat from a different animal entirely.

Since these cultivated meats are grown from the exact same cells as traditional meat and are chemically identical, they share the nutritional profile of the meat they attempt to replicate. Any nutritional side effects that might come from the process have yet to be documented.

The same can be said for the environmental impacts, which are currently heavily disputed. Early studies estimated that, compared to real meat, cultured meat production results in 78–96% lower greenhouse gas emissions, 99% less land use, and 82–96% lower water use (Tuomisto & Mattos, 2011). While this sentiment is echoed in some current studies, a recent analysis suggests that if the current procedure for making cultivated meat were scaled up to the extent necessary to replace real beef production, the energy required would raise its global warming potential to anywhere from 4 to 25 times that of real beef (Risner, 2023). The fact of the matter is that there is a lot of conflicting data regarding the environmental impact, and further research needs to be done before a more solid conclusion is reached. It’s critical to remember that this technology is still in its infancy, and it is reasonable to expect that any potential risks to the environment could decrease as further developments are made.

Insects

The third potential solution requires nothing artificial: no labs, no bioreactors, no heme to mimic the taste of meat. It does, however, require a lot of people to get out of their comfort zone. Entomophagy, or the practice of eating insects, has existed for thousands of years and remains part of several cultures to this day. What exactly, then, would large-scale insect consumption look like if it were implemented in the United States?

Producing enough insect meat to replace the meat in our diet today would require insect farming, a process not dissimilar to the way in which animals are farmed. The insects are hatched on a farm, either outdoors or indoors, given feed to grow, and then killed and used as fertilizer, food, or whatever they might be needed for. Trillions of insects are already produced this way yearly, the number increasing day by day as countries like Ghana adopt weevil farms to combat the sustainability crisis (Kennedy, 2023). In fact, this practice has been normalized in America for hundreds of years; beekeepers oversee the breeding and upbringing of thousands of bees a year, harvesting their honey and facilitating pollination.

Preparing insects for consumption isn’t dissimilar to how we prepare meat every day: cook them, season them, and combine them with other ingredients to create unique dishes. Thousands of recipes involving insects already exist worldwide. Mexico, for example, is the home of a dish called chapulines, which consists of toasted grasshoppers seasoned with garlic, lime, salt, and chiles. Cockchafer soup, a crab-soup-like dish made from delimbed beetles, was a delicacy in France and Germany until the 1950s.

While integrating them into our cuisine would take some getting used to, how well do insects replicate the nutritional aspects of meat? Despite varying wildly in their nutritional profiles, insects contain roughly 9.96 g to 35.2 g of protein per 100 g, versus the 16.8–20.6 g in meat (Payne et al., 2015), making them a more than suitable replacement as a protein source. Fat content, however, varies greatly depending on the insect. Meats generally have a saturated fat content of 1–3.8 g per 100 g, whereas for bugs the value is 2.28 to 9.84 g per 100 g. This could be of concern due to the link between saturated fat and heart disease, though it is worth noting that certain bugs, namely crickets and honeybees, have saturated fat contents of 2.28 g and 2.25 g per 100 g, respectively, much closer to meat. Furthermore, bugs tend to be higher in both unsaturated fats, which combat heart disease, and iron (Payne et al., 2015).

While insects provide roughly the same nutrients as animal meat, they are also significantly more efficient at converting the feed they consume into those nutrients. In other words, less land, water, and energy are required to produce the same mass of insect as of cow, pig, or chicken. Furthermore, while only about 40% of a cow is edible, 80% of an insect is, meaning that less of the energy that goes into producing them goes to waste (Sjøgren, 2017). It should be unsurprising, then, that insect farms have the potential to reduce carbon emissions by 70%.

Comparing the Three: Sustainability vs. Substitution

Understanding all of these potential meat substitutes makes it easier to answer the question of which would be the best replacement for meat. As stated previously, the two most important factors to consider are sustainability and substitution.

Plant-based meats, though they have had something of a renaissance of late, still haven’t entirely shaken their reputation for unpleasant tastes and odd textures. Nonetheless, they are currently the most widely accepted and available substitute for meat in the US and have proven to be significantly better for the environment than real meat.

Lab-grown meats, biologically identical to the real thing, are undoubtedly the best substitute for the taste, texture, and nutritive properties of real meat. Nevertheless, the limited understanding of their environmental effects, combined with the current small scale of production, makes them somewhat unsustainable for the near future.

Insects are quite sustainable, and because they are already farmed daily in countries all over the world, insect farming is quite feasible to implement. They are a lackluster substitute, however: completely different in taste, texture, and the recipes by which they would need to be prepared.

The fact of the matter is that none of these is a perfect replacement for meat in either category, and that finding an alternative for one of the most essential and beloved parts of the American diet is an almost impossible task. Hard as it may be, the meat industry’s lack of sustainability, combined with the fact that the global population will increase by billions by 2050, makes it an essential one. Seeing as lab-grown meat is far from perfected and that it’s incredibly unlikely the general population will unanimously add insects to their diet in under three decades, consider ordering a Beyond Burger next time you’re craving meat. It might be time to start getting used to it.

Works Cited:

Beyond Meat. “Ingredients | What Is Plant Based Meat? | Beyond Meat.” Www.beyondmeat.com, 2023, www.beyondmeat.com/en-US/about/our-ingredients/.

Cohen, Amir. “3D-Printed Steak, Anyone? I Taste Test This “Gamechanging” Meat Mimic | Zoe Williams.” Reuters, 16 Nov. 2021, www.theguardian.com/food/2021/nov/16/3d-printed-steak-tastetest-meat-mimic.

“Cultured Meat Products | AMSBIO.” Amsbio, 2021, www.amsbio.com/cultured-meat/.

Djekic, Ilija. “Environmental Impact of Meat Industry – Current Status and Future Perspectives.” Procedia Food Science, vol. 5, no. 5, 2015, pp. 61–64, https://doi.org/10.1016/j.profoo.2015.09.025.

Driver, Alice. “Opinion: Lab-Grown Meat Is an Expensive Distraction from Reality.” CNN, 5 July 2023, www.cnn.com/2023/07/05/opinions/lab-grown-meat-expensive-distraction-driver/index.html.

Eufic. “Lab Grown Meat: How It Is Made and What Are the Pros and Cons.” Www.eufic.org, 17 Mar. 2023, www.eufic.org/en/food-production/article/lab-grown-meat-how-it-is-made-and-what-arethe-pros-and-cons.

GFI. “Environmental Benefits of Plant-Based Meat Products | GFI.” Gfi.org, gfi.org/resource/environmental-impact-of-meat-vs-plantbased-meat/.

Global Orphan Foundation. “Palm Weevil Larvae: The Other Other White Meat.” Global Orphan Foundation, 2 Oct. 2023, www.globalorphanfoundation.org/our-stories/palm-weevil-larvae. Accessed 18 Nov. 2023.

Moonloft. “Climate Impact of Meat, Vegetarian and Vegan Diets.” Ethical Consumer, 14 Feb. 2020, www.ethicalconsumer.org/fooddrink/climate-impact-meat-vegetarian-vegan-diets.

Gruss, Alexandra, et al. “Chapter Three - Environmental Heme Utilization by Heme-Auxotrophic Bacteria.” ScienceDirect, Academic Press, 1 Jan. 2012, www.sciencedirect.com/science/article/abs/pii/B9780123944238000032. Accessed 9 Dec. 2023.

“Heme + the Science behind Impossible™.” Impossiblefoods.com, 2022, impossiblefoods.com/heme.

Kennedy, Adrienne Katz. “11 Bug and Insect-Eating Practices across the Globe.” Tasting Table, 12 Jan. 2023, www.tastingtable.com/1165294/bug-and-insect-eating-practices-across-the-globe/.

Merck. “IR Spectrum Table & Chart.” Merck, vol. 1, no. 1, 2021, www.sigmaaldrich.com/MX/en/technical-documents/technical-article/genomics/cloning-and-expression/blue-white-screening.

Nutritionix. “Nutritional Properties of Various Meats and Meat Alternatives.” The Current, 10 Sept. 2019, nsucurrent.nova.edu/2019/09/10/whats-the-real-beef-with-fake-meat/.

Oaxaca. “Chapulines Sazonados from Oaxaca 30 Grms - Seasoned Grasshoppers.” Insect Gourmet - Your Guide to Edible Insects, www.insectgourmet.com/product/chapulines-sazonados-from-oaxaca-30-grms-seasoned-grasshoppers/. Accessed 18 Nov. 2023.

Payne, C L R, et al. “Are Edible Insects More or Less “Healthy” than Commonly Consumed Meats? A Comparison Using Two Nutrient Profiling Models Developed to Combat Over- and Undernutrition.” European Journal of Clinical Nutrition, vol. 70, no. 3, 16 Sept. 2015, pp. 285–291, www.ncbi.nlm.nih.gov/pmc/articles/PMC4781901/, https://doi.org/10.1038/ejcn.2015.149.

Post, Mark J. “Cultured Meat from Stem Cells: Challenges and Prospects.” Meat Science, vol. 92, no. 3, Nov. 2012, pp. 297–301, https://doi.org/10.1016/j.meatsci.2012.04.008. Accessed 4 Mar. 2019.

Risner, Derrick, et al. Environmental Impacts of Cultured Meat: A Cradle-To-Gate Life Cycle Assessment. 21 Apr. 2023, https://doi.org/10.1101/2023.04.21.537778. Accessed 9 Dec. 2023.

Sjøgren, Kristian. “How Much More Environmentally Friendly Is It to Eat Insects?” Www.sciencenordic.com, 17 May 2017, www.sciencenordic.com/agriculture--fisheries-climate-climate-solutions/how-much-more-environmentally-friendlyis-it-to-eat-insects/1445691.

Sundry Photography. “Impossible vs beyond Meat.” Tasting Table, 15 Oct. 2022, www.tastingtable.com/1047017/whatsthe-difference-between-an-impossible-burger-and-a-beyond-burger/. Accessed 18 Nov. 2023.

United Nations. “Population.” United Nations, 2019, www.un.org/en/global-issues/population#:~:text=The%20world.

Nanoscopic Warriors: Revolutionizing Cancer Treatment with Nanoparticles

Since the birth of modern medicine in the 19th century, the field has advanced rapidly, with astounding breakthroughs and innovations that have transformed the medical landscape. From Edward Jenner’s smallpox vaccine to the discovery of the DNA double helix by James Watson and Francis Crick in 1953, scientists have come a long way in fighting even the most complex diseases.

Cancer, also called “the emperor of maladies,” is highly multifaceted, challenging our understanding of the biological rules that govern life. This enigmatic disease has left scientists on a relentless quest for effective prevention, early detection, and curative therapies. This essay delves into the labyrinth of cancer, unravelling the multifaceted nature of the disease and the ongoing efforts to conquer its complexity through the application of nanotechnology. Nanotechnology is the manipulation of matter on a near-atomic scale to produce new structures and systems. Although the concept of nanoparticles and their properties dates back to ancient times, it has gained significant attention in recent years because of its potential for substantial advances at the cellular and atomic level, which could greatly benefit cancer treatment. It is believed that nanotechnology can help with early cancer detection, decrease radiation dosage, and improve therapeutic specificity, which could eliminate the systemic toxicities associated with conventional methods and thereby improve prognosis and patient quality of life overall.

Background Information About Nanotechnology

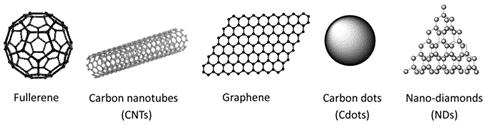

Nanotechnology involves structures and properties at the nanometer scale (one nanometer is one billionth of a meter) and has the potential to revolutionize industries and impact various aspects of our lives by harnessing the unique properties that emerge at the nanoscale. Nanometer-sized molecules are used by the human body’s biological mechanisms to cross natural barriers, access new delivery sites, and interact with DNA (Mundekkad & Cho, 2022). Nanoparticles are advantageous in medicine and technology because of their high surface-area-to-volume ratio, which allows them to carry large amounts of medication and move quickly through the bloodstream. Furthermore, they have possible applications in novel diagnostic instruments, targeted medicinal and pharmaceutical products, and tissue engineering, such as the reconstruction of cartilage. Nanoparticles can be produced from a critical mix of manganese and citrate using nanotechnological techniques, allowing their use in tailored mechanisms for medication administration, new diagnostic methods, and nanoscale medical devices (Haleem et al., 2023). Nanoparticles such as nanotubes, nanodiamonds, and fullerenes therefore have potential uses in therapy.

Types of Nanoparticles

Fullerenes are a unique class of nanoparticle. The best-known form, C60, is made up of 60 carbon atoms in a spherical structure and was discovered in 1985 (David R.M. Walton and Harold W. Kroto, 2023). Researchers have explored the potential of fullerenes in drug delivery, where controlling the release of therapeutic agents in the body enhances drug solubility and stability, minimizes damage to healthy tissues, and reduces side effects. Additionally, fullerenes can be used in photodynamic therapy (in the presence of oxygen), which can be employed to destroy undesired biological tissue. Fullerenes have potent anticancer activity; however, their potential toxicity to normal tissues limits their further use. Therefore, nanocrystals of C60 (Nano-C60) with negligible toxicity to normal cells have been developed as a radiosensitizer, a substance that enhances the sensitivity of cells or tissues to radiation (Gong et al., 2021). Functionalized fullerenes help in early detection, monitoring tumor progression, and understanding the cancer cell’s environment using biosensors. Additionally, carbon nanotubes (CNTs) have been suggested for in vivo applications, owing to their strong optical absorption at specific wavelengths, and as an active tool for bioimaging and drug delivery. Recently, fullerenes and nanodiamonds, a different type of nanoparticle, have received much attention as drug delivery carriers (H. Al-Tamimi & B.H. Farid, 2021).

Nanoparticles fall into two broad types: natural and designed. Natural nanoparticles are formed in nature through the erosion of geological materials, the decomposition of biological materials (mainly plant residues), or the combustion of fuel products. Designed nanoparticles are manufactured by the nanotechnology industry (Lisik & Krokosz, 2021). Magnetic nanoparticles, a further category, are gaining popularity due to their unique superparamagnetic nature, controllable size, high chemical stability, desirable surface properties, and biocompatibility (Malehmir et al., 2023).

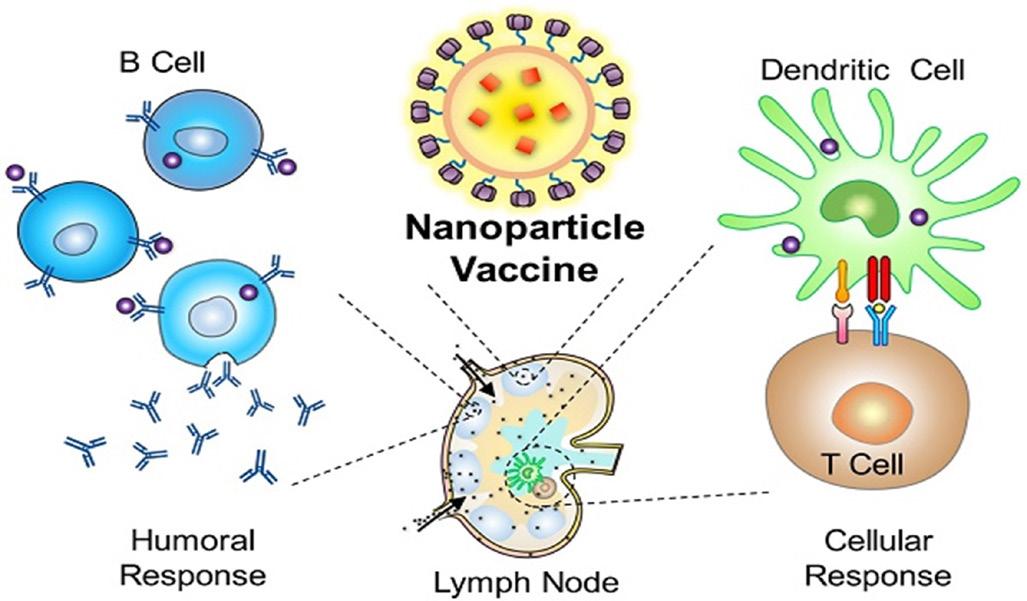

Applications of Nanoparticles in Oncology

As of January 2022, it is estimated that there are 18.1 million cancer survivors in the United States (Kemp & Kwon, 2021). The increase in the estimated number of cancer survivors is attributed to advances in cancer treatment, such as the use of nanotechnology. Because of its chemical properties and size, nanotechnology can deliver normally insoluble drugs to local and distant tumors more effectively, reducing the systemic side effects associated with conventional drug therapies, as indicated in Figure 3 below. A majority of cancers are diagnosed at a later stage, which makes detection difficult and lowers patients’ chances of survival. However, nanotechnology provides enhanced imaging, faster diagnosis, and increased therapeutic efficiency, as also displayed in Figure 3 below.