periodical for the Building Technologist

82. Data

featuring OMRT,

AiDapt

A(BouT) Building Technology

Eren Gozde Anil, Namrata Baruah, ETH Zurich, Saint Gobain, Superworld, CORE Studio, Link Arkitektur, Packhunt, Vasilka Espinosa,

Lab,Bout

Cover page

Distribution of access graphs of small-scale residential floor plans.

A floor plan can be represented in various ways, e.g., as an image. A lesser-known but noteworthy representation is the access graph: a topologically structured object in which nodes are rooms and edges are room connections, i.e., doors or passages. An access graph has the potential to directly inform us about properties or functions of a building or part of it. For example, access graphs provide information about the closeness of rooms, which might be needed for determining privacy (i.e., the shortest distance between two nodes in the graph), or about whether a room is central and suited to be a communal area (i.e., a node with many edges). Large-scale analyses of floor plan access remain, remarkably, a relatively unexplored territory. However, the analysis of access graphs has the potential to reveal common and uncommon compositional patterns, which could indirectly help the design of and research about buildings. The picture depicts the distribution of access graphs of 3000 randomly picked real-world floor plans of residential buildings in Asia from the RPLAN dataset. The number above each graph, as well as the shade of blue, indicates the graph's occurrence. Note that the position of a node is not necessarily the centroid of the corresponding room: the access graphs solely depict the topological relations between the rooms. Do you see any remarkable patterns?
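The two readings of an access graph mentioned above, closeness as shortest distance and centrality as the number of edges, can be sketched in a few lines of Python. This is only an illustration: the floor plan, the room names and the helper functions `shortest_distance` and `most_connected` are hypothetical and are not taken from the RPLAN dataset or from AiDAPT's analysis.

```python
from collections import deque

# Hypothetical floor plan: rooms are nodes, doors or passages are edges.
ACCESS_GRAPH = {
    "living room": {"kitchen", "hallway", "balcony"},
    "kitchen": {"living room"},
    "hallway": {"living room", "bedroom", "bathroom"},
    "bedroom": {"hallway"},
    "bathroom": {"hallway"},
    "balcony": {"living room"},
}


def shortest_distance(graph, start, goal):
    """Number of doors crossed on the shortest route (breadth-first search)."""
    if start == goal:
        return 0
    seen = {start}
    queue = deque([(start, 0)])
    while queue:
        room, dist = queue.popleft()
        for neighbour in graph[room]:
            if neighbour == goal:
                return dist + 1
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append((neighbour, dist + 1))
    return None  # the two rooms are not connected


def most_connected(graph):
    """Rooms with the highest number of connections (node degree)."""
    top = max(len(neighbours) for neighbours in graph.values())
    return sorted(room for room, n in graph.items() if len(n) == top)
```

In this made-up plan the bedroom is three doors away from the kitchen, which hints at privacy, while the living room and the hallway share the highest degree and are therefore candidates for communal or circulation space.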

@AiDAPT

https://www.tudelft.nl/ai/aidapt#c871580

RUMOER 82 - DATA

4th Quarter 2023

28th year of publication

Rumoer

Rumoer is the primary publication of the student and practice association for Building Technology ‘Praktijkvereniging BouT’ at the TU Delft Faculty of Architecture and the Built Environment. BouT is an organisation run by students and focused on bringing students in contact with the latest developments in the field of Building Technology and with related companies.

Every edition covers one topic related to Building Technology. Different perspectives are shown while focussing on academic and graduation topics, companies, projects and interviews.

With the topic 'Data', we are publishing our 82nd edition.

Printing

www.printerpro.nl

Circulation

The RUMOER appears 3 times a year, with more than 150 printed copies and digital copies made available to members through online distribution.

Membership

Amounts per academic year (subject to change):

€ 10,- Students

€ 30,- PhD Students and alumni

€ 30,- Academic Staff

Single copies

Available at Bouw Shop (BK) for:

€ 5,- Students

€ 10,- Academic Staff, PhD Students and alumni

Praktijkvereniging BouT Room 02.West.090

Faculty of Architecture, TU Delft

Julianalaan 134

2628 BL Delft

The Netherlands

www.praktijkverenigingbout.nl rumoer@praktijkverenigingbout.nl instagram: @bout_tud

Interested to join?

The Rumoer Committee is open to all students. Are you a creative student who wants to be among the first to learn about the latest achievements of TU Delft and the Building Technology industry? Come join us at our weekly meeting or email us at rumoer@praktijkverenigingbout.nl

Sponsors

Praktijkvereniging BouT is looking for sponsors. Sponsors make activities possible such as study trips, symposia, case studies, advertisements on Rumoer, lectures and much more.

For more info contact BouT: info@praktijkverenigingbout.nl

If you are interested in BouT’s sponsor packages, send an e-mail to: finances@praktijkverenigingBouT.nl

Disclaimer

The editors do not take any responsibility for the photos and texts that are displayed in the magazine. Images may not be used in other media without permission of the original owner. The editors reserve the right to shorten or refuse publication without prior notification.

CONTENT

Systemic Integration of Urban Farming into Urban Metabolisms
- Eren Gözde Anıl, TU Delft, Graduate Article

Data and AI in Core Studio
- Jeroen Janssen, Core Studio, Company Article

SageGlass
- Raimond Starmans, Saint Gobain, Project Article

Superworld - Architects of Conditions
- Thomas Krall and Maxime Cunin, Superworld, Company Article

AiDAPT Lab
- Dr Charalampos Andriotis, Dr Seyran Khademi, AiDAPT Lab, Academic Article

The Participatory Design Game for Social Housing in Manaus, Brazil
- Vasilka Espinosa, TU Delft, Graduate Article

Deep Learning Based Structural Bridge Design
- Sophia Kuhn, Dr. Michael Kraus, Prof. Dr. Walter Kaufmann, ETH Zurich, Academic Article

The Augmented Architect
- LINK Arkitektur, Company Article

Machine Learning for the Built Environment
- Raffaele Di Carlo, OMRT, Company Article

Integrated Bio-Inspired Design by AI
- Namrata Baruah, TU Delft, Graduate Article

AI in Design
- Patrick Janssen and Maarten Mathof, Packhunt, Company Article

Core Studio
- Adalberto De Paula and Patrick Ullmer, TU Delft, Academic Article

BouT Events and Trips
- Praktijkvereniging BouT, TU Delft

28th Board Signing Off
- Lotte Kat, Praktijkvereniging BouT, TU Delft

EDITORIAL

Dear Reader,

It is with great pleasure and the highest enthusiasm that I present my last edition of Rumoer as the editor-in-chief. I am honoured to have had the opportunity to work with an extremely talented editorial team. The team showed real faith in me through the past year and helped me improve the work we do at Rumoer. I would also like to express my gratitude to all the sponsors, contributors and companies who have collaborated with us in the past year. I hope we continue to expand our circle to learn more through the next editions.

I would like to congratulate Ramya Kumaraswamy and wish her the best of luck as she continues and improves, in her tenure, what the last 28 editors-in-chief have been working on. Having worked with Ramya over the past academic year, I believe she has the skills and the ability to make the Rumoer bigger and to further improve the quality of the editions to come.

The past year has been big on Artificial intelligence and we are thrilled to announce our upcoming issue on the topic of Data and its impact on the architecture and built environment industry. With the rapid advancement of technology, data-driven approaches, artificial intelligence, and machine learning are transforming the way we design, construct and operate buildings.

In this issue, we will explore the potential of data-driven architecture to optimize building performance, enhance sustainability, and improve user experience. We will examine how machine learning algorithms can help architects and designers to make informed decisions based on real-time data, enabling them to create more responsive and adaptable buildings. Decisions taken at any phase of the design can influence countless other aspects of the design, and designing consequently becomes a task of balancing the benefits and compromises of each decision.

I invite all the readers to join us on this exciting journey of exploration and discovery as we delve into the fascinating world of data-driven architecture, AI, and machine learning in the built environment.

Pranay Prakash Swati Khanchandani Editor-in-chief | Rumoer 2022-2023

Rumoer committee 2022-2023

Alina Aneesha Bryan Fieke

Editorial

Pavan Ramya Shreya Pranay

Food Production as an Attraction, © Eren Gözde Anıl

SYSTEMATIC INTEGRATION OF URBAN FARMING INTO URBAN METABOLISMS

Eren Gözde Anıl

This Building Technology (TU Delft) Master of Science thesis research investigates how to integrate urban farming into cities by utilising under-used spaces and existing waste sources with the help of a decision-making tool. The tool aims to make data-driven correlations between different urban farming systems, their inputs, outputs and system characteristics, and typical urban waste types, product types and their interrelations, in order to provide a Decision Support System.

Modern day cities are the consumers of natural resources, as well as primary producers of waste and greenhouse gases. However, the supply of resources and the handling of the city's wastes are not sustainable processes, and are vulnerable to fluctuations in the larger external and infrastructure services. Eren's student work is a response to this challenge.

[Figure 1 diagram: urban farming systems (vermiculture, mushroom, raised bed, aquaculture, hydroponic NFT, hydroponic water culture, hydroponic media bed, plant factory, aeroponics) mapped against space, waste, supplement, medium, growing technique, design characteristic, system type, main product and by-product. * Lime bath is used for pasteurization of substrate.]

Fig. 1: Urban Farming Systems (Input, System, Output) © Eren Gözde Anıl

Cities consume 60-80% of natural resources while producing 50% of waste and emitting 75% of greenhouse gases globally (Tsui et al., 2021). The demands of the city are supplied externally, resulting in a dependent system that is not resilient to fluctuations. Demands and waste flows of cities follow a linear process line resulting in high GHG emissions, making the 2050 goals (European Commission, 2020) unachievable if the design of urban metabolism is not changed. Integrating urban farming as part of urban metabolism has the potential to close the loop of some organic materials and resources within the urban environment; however, the vast number of waste types and urban farming systems complicates the decision-making process.

As a response to this challenge, this research investigates how to integrate urban farming into cities by utilising under-used spaces and existing waste sources with a decision-making tool. The design rules and the methodology are formed based on a literature review regarding different farming systems, varying waste flows and computational approaches. A prototype of the tool is generated and tested on case studies in order to showcase the potential of such an approach combining food production with waste management.

First of all, in order to formulate the decision-making rules, an extensive literature review is conducted, investigating 9 different urban farming systems, their inputs, outputs and system characteristics, in addition to 6 waste types, 4 main product types, and the interrelation between these aspects. A database of these inputs, systems and outputs is produced, and the findings are formulated into step-by-step decision-making rules (Figure 1).

Input - System - Output

The decision-making tool is designed to guide the designers towards the optimal options depending on a set of variables and the relationship between these variables. These variables are product types, byproducts, production method, production characteristics, inputs to the system, vacant spaces, their locations, load-bearing capacity of the supporting structures, solar exposure of the spaces, their distance to existing waste flows, their distance to vacant spaces and demands given by stakeholders.

Fig. 2: Inter-relation of Variables © Eren Gözde Anıl

As illustrated in Figure 2, the selection of urban farming systems is dependent on these variables and their interrelations. The selection may seem rather simple for one vacant space and a set of existing conditions; however, there might be multiple vacant spaces. The multitude of available spaces complicates the design problem, as it introduces the possibility of building a network of urban farming systems serving each other. Consequently, combinations of urban farming systems depend on a range of circumstances, including the distance between a waste output and a vacant space that can utilise the waste. These interrelations and circumstances are translated into design rules and used for step-by-step decision-making in later stages.

Data Flow

After the necessary data are collected, they should be represented in an accessible manner for the upcoming steps. For each urban farming system, data regarding the supplementary resources needed, the system’s weight, solar demand, inputs and outputs are stored in the following way:

Vermicompost:

{"UF1": {"type": "Supplementary", "weight": 3, "solar demand": 1, "inputs": ["food waste", "rainwater", "sawdust", "paper"], "supplement": None, "output": ["fish food", "fertiliser"]}}

Similarly, for each waste output source in the case study, its coordinates, the building it is located in, the quantity of waste and the type of waste are stored in the following format:

Waste Output Point 1:

{"WO1": {"location": (17, 28, 0), "tag": "WO1", "building": "BK", "size": 3000, "type": "sawdust", "node": "waste"}}

The last dataset needed for decision-making is vacant spaces. For every vacant space in the case study, its location, the building it is situated in, its size, its structural capacity and its solar exposure are used in the next stage.

Vacant Space 1:

{"V1": {"location": (15, 20, 0), "tag": "V1", "building": "BK", "size": 3, "structure": 3, "solar": 1, "node": "vacant"}}

It should be noted that for research purposes, waste quantities, the structural capacity of spaces, system weights, solar exposure and solar demands are divided into ranges starting from 1 (low) to 3 (high).
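The three record types above can also be mirrored as typed records. The following is a minimal Python sketch and not the thesis implementation: the class names, the field names and the `check_level` helper are assumptions made for illustration. Only the 1 (low) to 3 (high) scale and the example values are taken from the text.

```python
from dataclasses import dataclass


def check_level(name, value):
    """Reject values outside the article's 1 (low) to 3 (high) scale."""
    if value not in (1, 2, 3):
        raise ValueError(f"{name} must be 1, 2 or 3, got {value!r}")
    return value


@dataclass
class FarmingSystem:
    tag: str
    type: str
    weight: int
    solar_demand: int
    inputs: tuple
    output: tuple
    supplement: object = None

    def __post_init__(self):
        check_level("weight", self.weight)
        check_level("solar_demand", self.solar_demand)


@dataclass
class WasteOutput:
    tag: str
    location: tuple
    building: str
    size: int  # kept as given in the article's example (3000)
    type: str


@dataclass
class VacantSpace:
    tag: str
    location: tuple
    building: str
    size: int
    structure: int
    solar: int

    def __post_init__(self):
        check_level("structure", self.structure)
        check_level("solar", self.solar)


# Values copied from the article's three examples.
vermicompost = FarmingSystem(
    tag="UF1", type="Supplementary", weight=3, solar_demand=1,
    inputs=("food waste", "rainwater", "sawdust", "paper"),
    output=("fish food", "fertiliser"),
)
wo1 = WasteOutput(tag="WO1", location=(17, 28, 0), building="BK",
                  size=3000, type="sawdust")
v1 = VacantSpace(tag="V1", location=(15, 20, 0), building="BK",
                 size=3, structure=3, solar=1)
```

Validating the scale when a record is created means that a mistyped value, such as a structural capacity of 4, fails immediately instead of silently skewing later decisions.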

After the datasets are prepared, the information is processed and used for step-by-step decision-making (Figure 3), and the outputs are analysed and visualised.

Food Cycle

Even though the decision-making tool is composed of a set of technical reasonings, the interaction between the designer and the tool has significant value. The tool should be easy to operate and flexible for various project parameters. The methodology and the prototype created within the scope of this research can run in the background to make decisions. To make the decision-making clear and less intimidating, a representative user interface, called Foodcycle, is designed.

Fig. 3: Step-by-Step Decision Making © Eren Gözde Anıl

1. Collect data

2. Determine potential food-producing urban farming systems for each vacant space based on space characteristics

3. For each space and each potential system, look for available waste. If demands are fulfilled assign the system

4. Assign food-producing supplementary systems based on demand for the supplements and availability of resources

5. Assign supplementary systems based on demand for the supplements and availability of resources

6. If there are still vacant spaces available increase the search radius and repeat the previous steps

7. Visualise the results
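Steps 2, 3 and 6 of this procedure can be sketched as a small loop. The sketch below is a simplified illustration, not the Foodcycle code: the records, the matching rule in `candidates` (system weight and solar demand must not exceed the space's structural capacity and solar exposure) and all function names are assumptions. Steps 4 and 5 (supplementary systems) and step 7 (visualisation) are left out.

```python
import math

# Hypothetical records on the article's 1 (low) to 3 (high) scales.
SYSTEMS = {
    "raised bed": {"weight": 3, "solar_demand": 3, "inputs": {"food waste"}},
    "mushroom": {"weight": 1, "solar_demand": 1,
                 "inputs": {"coffee waste", "sawdust"}},
}
SPACES = {
    "V1": {"location": (15, 20, 0), "structure": 3, "solar": 1},
    "V2": {"location": (300, 40, 0), "structure": 3, "solar": 3},
}
WASTES = {
    "WO1": {"location": (17, 28, 0), "type": "sawdust"},
    "WO2": {"location": (40, 25, 0), "type": "coffee waste"},
    "WO3": {"location": (120, 40, 0), "type": "food waste"},
}


def candidates(space):
    """Step 2: systems the space can carry and light (assumed rule)."""
    return [name for name, s in SYSTEMS.items()
            if s["weight"] <= space["structure"]
            and s["solar_demand"] <= space["solar"]]


def wastes_within(space, radius):
    """Waste types available within `radius` metres of the space."""
    return {w["type"] for w in WASTES.values()
            if math.dist(space["location"], w["location"]) <= radius}


def assign(radii=(100, 200, 500)):
    """Steps 3 and 6: assign a system once all its inputs are within reach,
    widening the search radius for spaces that are still vacant."""
    assigned = {}
    for radius in radii:
        for tag, space in SPACES.items():
            if tag in assigned:
                continue
            available = wastes_within(space, radius)
            for name in candidates(space):
                if SYSTEMS[name]["inputs"] <= available:
                    assigned[tag] = (name, radius)
                    break
    return assigned
```

With these made-up records, `assign()` places a mushroom system in V1 at the 100 m radius and a raised bed in V2 only after the radius is widened to 200 m, which is the behaviour step 6 describes.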

The user interface is simply used to translate designer demands to design parameters. There are mainly 5 panels in the interface: Data Input, Design, Analysis, Customisation and Adaptability. The project-specific design rules are set by filling out a design questionnaire followed by interactive design panels (Figure 4). In the end, the suggestions made by Foodcycle can be altered by the user, and after all the decisions are set, a breakdown of quantifiable results is presented.

Case Study (TU Delft) Data & Findings

To test the prototype (Foodcycle), the TU Delft Campus is used as the primary case study. 145 vacant spaces and 86 waste sources on the campus are mapped, and the necessary calculations are made to determine the waste quantities and other essential aspects. For this case study, search radii (the maximum distance between a vacant space and a waste source) of 100 m, 200 m and 500 m are used.

Fig. 4: The Foodcycle Program Interface © Eren Gözde Anıl

The first 3 stages of decision-making differ only in their gradually increased search radius. During these stages, a system is assigned only if a minimum of one resource is found, with a maximum of 2 missing non-critical resources. Foodcycle is run 3 times while the search radius is increased step by step. It is observed that not all the spaces are occupied. Therefore, to illustrate the food production potential of the campus, stage 4 design rules are applied. In stage 4, Foodcycle first looks for at least one found resource. If there is a found item and the missing resources are not critical for that system’s productivity, the system is assigned to the vacant space. The decision-making tool then runs again to assign food-producing supplementary and supplementary systems. Lastly, as there were still unoccupied spaces left, the rules are eased even more and a system is assigned even if there are no found resources, based on the number of missing items. After this stage, the yields, system decisions and used waste quantities are calculated.
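The gradual easing of the rules can be expressed as a small predicate. This is one possible reading of the rules described above, written as a hedged Python sketch: the function names, the choice of which resources count as critical, and the assumption that a missing critical resource always blocks an assignment in stages 1 to 4 are illustrative and not confirmed by the thesis.

```python
def can_assign(required, found, critical, stage):
    """Stages 1 to 3: at least one required resource found, no critical
    resource missing and at most 2 resources missing.
    Stage 4: the limit on the number of missing resources is dropped."""
    found_here = required & found
    missing = required - found
    if not found_here or missing & critical:
        return False
    if stage <= 3:
        return len(missing) <= 2
    return True


def fallback_system(systems, found):
    """Final easing: pick the system with the fewest missing resources,
    even if none of its resources were found."""
    return min(systems, key=lambda name: len(systems[name] - found))


# Inputs of the vermicompost example; treating food waste as the
# critical resource is an assumption made for this sketch.
VERMICOMPOST = {"food waste", "rainwater", "sawdust", "paper"}
CRITICAL = {"food waste"}
```

For example, with only food waste found near a space, the vermicompost system is rejected in stages 1 to 3 (three resources are missing) but accepted in stage 4, because none of the missing resources is critical.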

At the end of all 4 stages, 125 vacant spaces are used for farming purposes, reaching a total of 22.18 hectares. The most assigned urban farming system is NFT, with 62 farming locations, while raised beds are second on the list with 40 locations, owing to the light weight of NFTs and the limited waste demands of raised beds. Other than these, 9 vermicomposting areas, 2 aquaculture spaces, 9 mushroom production areas and 1 plant factory are suggested (Figure 5).

By covering almost 22 hectares of the campus, enough vegetables for 88% of the Delft population can be produced at TU Delft Campus. These numbers are based on daily fruit and vegetable consumption recommendations by the government. Also, while producing food, more than 50% of waste of each waste type, except sawdust, can be used on campus as shown in Figure 6. Lastly, more productive greenery can be incorporated into the campus encouraging people’s engagement with food production.

Fig. 5: TU Delft Campus Stage 4 System Decisions © Eren Gözde Anıl

Conclusion

Foodcycle is designed to choose an urban farming system based on existing conditions such as waste flows and underused spaces, as well as to generate a network of farms. After testing the tool on different case studies, it is concluded that Foodcycle generates a more complex network with bigger data sets due to strict design rules and the availability of waste. If the tool is utilised for smaller data sets with fewer vacant spaces and fewer waste sources, then the design rules need to be eased. Another strength is that the reasoning behind each decision can be tracked. From a technical point of view, even if the tools used for Foodcycle, such as Python and Grasshopper, become outdated in the future, the methodology remains valid, and the same methodology can be used in the background of the user interface. Currently, the prototype gives 1 system option for each vacant space; however, with further development, it can be designed to give a set of options to let the designer make an informed decision.

Fig. 6: TU Delft Campus Stage 4 Breakdown of Used Waste © Eren Gözde Anıl

References:

European Commission. (2020). 2050 long-term strategy. https://ec.europa.eu/clima/eu-action/climate-strategies-targets/2050-long-term-strategy_en

Tsui, T., Peck, D., Geldermans, B., & van Timmeren, A. (2021). The Role of Urban Manufacturing for a Circular Economy in Cities. Sustainability, 13(1), 23. https://doi.org/10.3390/su13010023

Eren is a computational designer with a strong interest in urban farming and sustainability. After completing her bachelor’s degree in Architecture, she started the Building Technology master's at TU Delft’s Architecture faculty. During the first year of her studies, she focused on sustainability and climate design, while she discovered her fascination with computational design and coding during the second year of her degree. After graduation, as a continuation of the winning team of the Urban Greenhouse Challenge, she started working on Lettus Design along with her teammates. Currently, she is working at Packhunt as a customer success agent.

Eren Gözde Anıl @erengozdeanil

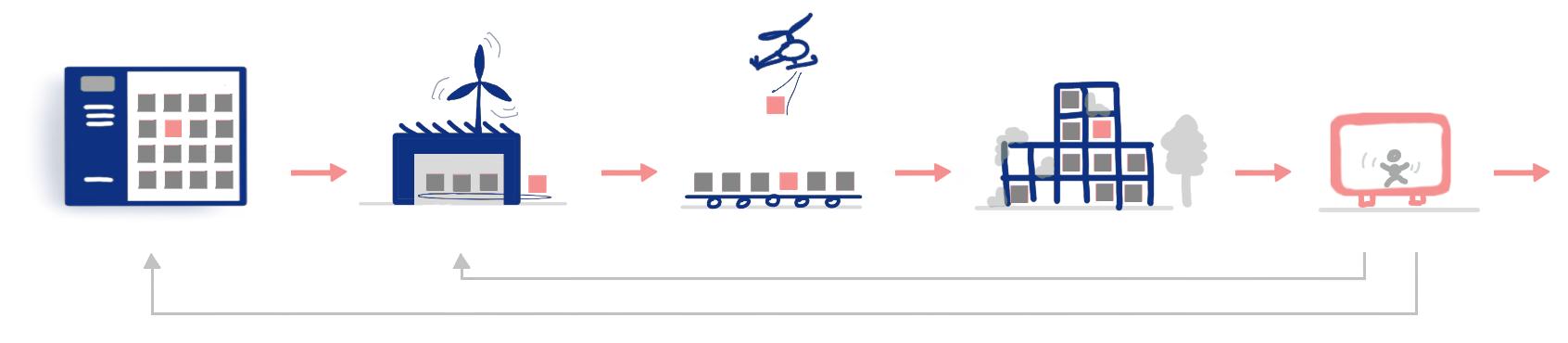

DATA AND AI AT CORE STUDIO

Jeroen Janssen

Data is all around us. It plays an important role in our day-to-day lives as designers and engineers, and it only seems to become more important as time moves along. With the advent of large language models (LLMs) and tools like ChatGPT, the world of data seems to be on fire, with everyone trying to have a piece of the cake. At CORE studio, the research and innovation team at Thornton Tomasetti, data has been at the centre of everything we do ever since the incorporation of the team in 2011: from visualising embedded metadata within large BIM models and developing applications to improve our workflows and data handling, all the way to AI and machine learning applied to our daily engineering tasks.

Fig. 1: BIM model loaded into Mirar, coloured by member section size. © CORE studio

Thornton Tomasetti has been designing buildings and structures for over 70 years and as such has accumulated a wealth of knowledge and expertise. What if we could make the entire firm’s knowledge, all our previous projects and all the expertise of our leadership accessible to every engineer in easy-to-use design applications? At CORE studio we believe this is now possible and can be achieved with the help of artificial intelligence.

Visualisation of metadata

Data can only be put to good use as long as we make it insightful to the designer. Therefore, for many years CORE studio has been developing tools for data visualisation and manipulation. Two examples of mature and widely used tools created by the team are Mirar and Thread. Both are web applications that easily communicate with metadata embedded in design models and directly connect to various desktop software packages.

Mirar is a lightweight 3D viewer that connects to Rhino or Revit for easy input and visualises complex 3D BIM models in the browser. It lets the user interrogate individual elements and their attributes and then use advanced filtering and colour-by visual aids to highlight the embedded metadata. 3D views with their camera position and preferred filtering options can be saved and shared with external parties such as the wider design team or clients, without the need to have any of the desktop clients installed on their machines.

Fig. 2: A custom dashboard in Thread highlighting various structural metadata. © CORE studio

Thread takes that a step further, by using those 3D models with metadata from Mirar, creating stunning and insightful data dashboards, allowing the user to really let the data tell the story. The user can arrange various graphs, filters and user interface controls on a set of custom dashboards and reports. The metadata within each individual element can then be interrogated by filtering and highlighting those elements, with colour-by properties showing up synchronised through all widgets on the page at the same time.

Machine learning in design

CORE.AI is the specialist team within CORE studio, dedicated to Artificial Intelligence (AI) and Machine Learning (ML), with the objective of finding new ways to apply these novel tools to our day-to-day engineering practice. Three equally important ingredients come into play when we think about automating and accelerating our structural engineering workflows with AI: Data, Machine Learning and MLOps.

The first ingredient on the journey is the Data feeding into any of our processes. Seven decades of engineering buildings and projects enable us to leverage and generate massive synthetic datasets exploring every corner of the design space. In a matter of days, CORE’s physics-based design engines can efficiently create millions of data points that would take humans decades to create, and even typical commercial software packages months. Critical here is the curation of that data, ensuring it can contribute meaningfully to the next steps in our AI journey.

The next crucial step is applying the correct machine learning architecture and training strategies to the right question. CORE.AI’s data scientists have been training large deep learning models on massive datasets, tuning them to the specific design task at hand and applying them in design engineering apps. CORE’s journey in machine learning started several years ago with an app called Asterisk. This web-based tool predicts an entire building’s structural frame in a matter of seconds. The user input required is limited to the massing of the building, the size of the core, and whether it is an office, residential or commercial building. Asterisk then utilises a trained ML model to generate the appropriate framing geometry and beam and column sizes for the entire building.

The app was a huge success: it has been used on many early-stage design options and was particularly well suited to super-tall tower design. Despite this early success, the design of real-life buildings required a more flexible approach than the one-stop shop that Asterisk proved to be. Over time CORE studio started to pick apart this one large model into smaller and more specialised apps, delicately tuned to specific sub-tasks such as a single timber bay design, steel-braced frames, and the like. These could then in turn be put to work in concert, creating compositions of larger, more intelligent models.

Creating those larger workflows then automatically leads us to the third ingredient: Machine Learning Operations (MLOps), or how we can create an infrastructure for easily turning our AI experiments into lightweight microservices that can power other applications. For this reason, CORE studio has developed Cortex. Cortex is a platform and infrastructure that allows the user, with only a few clicks, to turn their trained ML models into a powerful web API that can easily be consumed by other applications, whether that is a Python script, a web page, a Grasshopper definition, or a custom-made ShapeDiver app.

Fig. 3: Structural frame designed by Asterisk within seconds. © CORE studio

Cortex brings the power of machine learning experts and computational designers together, allowing their skill sets to complement one another. The ML expert can post their experiments on the platform, consisting of one or several ML models organised and trained with different versions of parameters, all within an intuitive user interface. When the ML expert is happy with a specific model and version, they can easily create an API endpoint with a specific schema of inputs. This endpoint can then be shared and used in a design application put together by the computational designer.

Expanding the earlier example of the timber bay design: the Grasshopper definition computes the span and width of a standard floor bay spanning between columns, defines the loading conditions, and then calls the API endpoint, which communicates with the trained ML model that lives on the Cortex platform. This API call returns a set of beam sizes for the main girders, secondary joists and slab thickness, which is then rendered to the correct size directly in the Rhino viewport. The app can subsequently be packaged and shared with every engineer in the firm, allowing them to quickly design a typical floor bay to a high level of detail in a matter of seconds, satisfying code standards for both strength and serviceability, including floor vibrations.

Fig. 4: Typical Timber Bay app deployed and shared on CORE studio’s Swarm platform. © CORE studio

The field of machine learning is moving fast. Generative ML is one of those fast-moving and exciting fields. CORE.AI has been experimenting with models such as DALL-E 2 and Stable Diffusion, creating an app that takes a simple text prompt and optionally a reference image as input, and generates 3D designs directly in Rhino. Furthermore, the team has been looking for this app to utilise architects’ proprietary image libraries and therefore produce entirely new design options, based on a specifically trained style and materiality. Another experiment the team has been working on is to see how a pre-trained LLM such as GPT-4 and Natural Language Processing (NLP) can be utilised and further enhanced with information from the metadata of all of Thornton Tomasetti’s previous projects. This suddenly brings the entire firm’s knowledge to all engineers’ fingertips with just a simple text prompt.

Fig. 5: The Cortex platform lets users control and deploy trained machine learning models via API endpoints © CORE studio

The future of AEC

CORE studio is very excited about the future. AI is a powerful tool for the AEC industry, but of course, with great power comes great responsibility. CORE studio’s team comprises architects and structural and machine learning engineers. In addition, CORE.AI’s efforts are constantly evaluated by seasoned engineers within the wider Thornton Tomasetti team. With those quality measures in place, combined with years of experience in developing applications for data visualisation, manipulation and machine learning, CORE studio sees huge potential for AI to enhance the AEC industry, while ensuring that this integration is carried out properly and is executed and constantly reviewed by experienced AEC professionals.

Jeroen Janssen

@tt_corestudio

Jeroen is an Associate Director at Thornton Tomasetti and is leading the CORE studio team in London. CORE is Thornton Tomasetti’s Research and Development incubator, enabling collaboration with project teams and industry colleagues to drive change and innovation. Jeroen particularly focuses on the design of microclimates for urban spaces

SAGEGLASS

Raimond Starmans, Country Director Germany and Benelux, SageGlass.

Founded in 1989, SageGlass is the pioneer of Smart Windows. SageGlass electrochromic glass automatically tints and clears according to daylight levels to control the natural light and heat entering buildings. Thanks to its intelligent daylight management, SageGlass does not require the installation of blinds or shades and therefore allows for more minimalist and modern building designs. Since 2012, SageGlass has been a wholly owned subsidiary of the Saint-Gobain Group, the world leader in light and sustainable construction.

SageGlass is a unique product resulting from more than 15 years of R&D work. To date, the company offers two fairly similar products: SageGlass Classic® and SageGlass Harmony®.

Fig. 1: SageGlass used in Raiffeisenbank, Switzerland © SageGlass

SageGlass Classic offers four tints: clear, light, medium and full. When the tinted states are activated, the entire pane is tinted to control luminosity. In addition to these four tints, Harmony has no fewer than four additional tints (eight in total). Harmony is the latest solution invented by the SageGlass team. This revolutionary Smart Window is the only product capable of tinting on a gradient, delivering targeted glare control and maximizing daylight with a more natural aesthetic.

When tinted, SageGlass products can block up to 96% of solar heat, helping to optimize energy efficiency and thermal comfort in the building. They also allow 60% of daylight to pass through in their clearest state and block up to 99% of daylight in their darkest state, optimizing light levels without visual discomfort while preserving the view to the outside.

But how does SageGlass work?

First of all, it is important to know that SageGlass electrochromic glass works thanks to three components: the insulating glass, the control material and the intelligence of the system.

- The insulating glass unit (IGU) consists of five layers of ceramic material that are coated onto a thin piece of glass. Applying a small electrical charge causes lithium ions to transfer between the layers, making the glass tint. Reversing the polarity causes the glass to clear.

- The second component is Controls Hardware. This is the communication hub. It has interfaces for customer

26 82 | Data

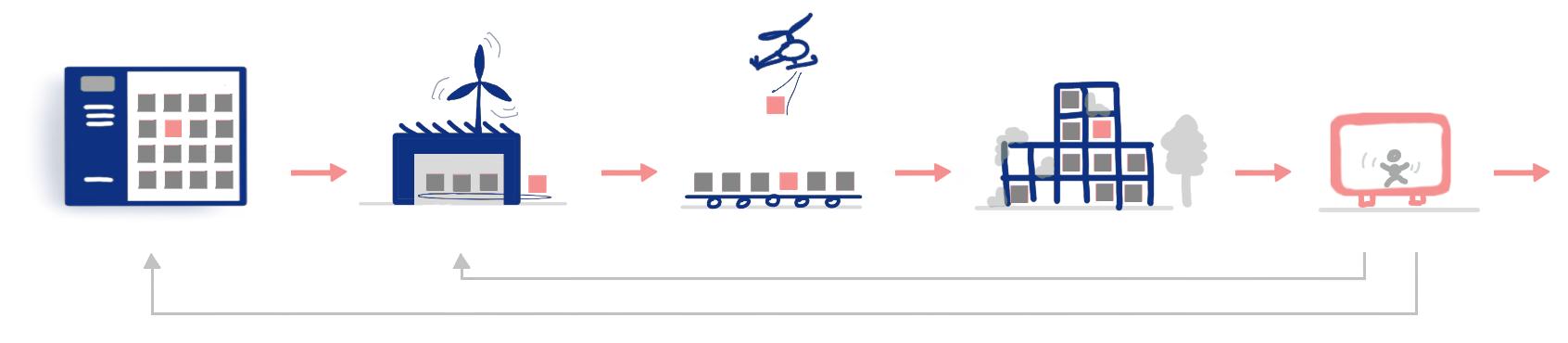

Fig. 2: System overview , © SageGlass

interactions and then transmits information across the building.

- The final component, the System Intelligence, takes in the outdoor and indoor factors such as the weather, the sun’s position, building orientation, location, occupancy, and time of day to create optimal comfort for every occupant.

Fig. 3: The glass tinting process, © SageGlass

Thus, to perform in the field, electrochromic glass needs software and controls to dictate when and how it should tint. Using a combination of predictive and real-time inputs, the software and controls manage daylighting, glare, energy use and color rendition throughout the day. At any point you can control the glass, manually adjusting the settings to your desired tinting preference from a wall touch panel, mobile app or BMS (Building Management System).
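As a rough illustration of this kind of control logic, the sketch below picks one of the four Classic tint states from a few sensor inputs and lets a manual setting take precedence. It is not SageGlass software: the `Conditions` fields, the `choose_tint` function and every threshold are hypothetical and chosen only to make the example concrete.

```python
from dataclasses import dataclass
from typing import Optional

# The four SageGlass Classic tint states named in the article.
TINTS = ("clear", "light", "medium", "full")


@dataclass
class Conditions:
    """Hypothetical inputs; the real system uses many more factors."""
    outdoor_lux: float    # daylight level measured at the facade
    sun_on_facade: bool   # True when direct sun hits this facade
    occupied: bool        # True when the zone is in use


def choose_tint(cond: Conditions, manual_override: Optional[str] = None) -> str:
    """Pick a tint state; a manual setting always wins over automation."""
    if manual_override is not None:
        if manual_override not in TINTS:
            raise ValueError(f"unknown tint: {manual_override}")
        return manual_override
    if not cond.occupied:
        # Illustrative energy rule: block solar gain in empty, sunlit zones.
        return "full" if cond.sun_on_facade else "clear"
    # Illustrative glare rules; the lux thresholds are placeholders.
    if cond.sun_on_facade and cond.outdoor_lux > 60_000:
        return "full"
    if cond.sun_on_facade and cond.outdoor_lux > 30_000:
        return "medium"
    if cond.outdoor_lux > 30_000:
        return "light"
    return "clear"
```

A wall panel or app would simply pass its selection as `manual_override`, while the automatic branch stands in for the predictive and real-time inputs mentioned above.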

SageGlass is therefore far from being traditional glass, and its appeal is no accident: installing this Smart Window, which tints automatically, brings many advantages. Its primary function is to control the light and heat entering the room. Traditional buildings use manual blinds, which are typically down 50%-70% of the day, blocking daylight and views. Smart windows solve that problem. They let natural light flow into the building and thus considerably improve the comfort and well-being of the occupants, who are no longer bothered by glare or heat.

Our latest study at Brownsville South Padre International Airport shows that when SageGlass is on, occupants are 3 times more satisfied with thermal comfort, 2.5 times more satisfied with light levels and 2.5 times more satisfied with glare control.

Electrochromic glass can significantly improve energy efficiency and help buildings achieve green building certifications. Smart glass responds to changes in the environment, limiting the heat or cold entering the space, and can reduce loads on HVAC systems. It saves an average of 20% of energy. In addition, thanks to dynamic glass, the occupants of a building continue to have a direct view of the outdoors, which clearly improves the occupant experience. Numerous studies have shown that a direct connection to nature and the provision of natural light are beneficial to the mental and physical health of building occupants. In addition, there is evidence of improved productivity in offices and classrooms, faster recovery in health care buildings, and more purchases in commercial buildings and airports.

Fig. 4: SageGlass at Schwarzsee Hostellerie in Schwarzsee. © SageGlass

Smart Windows also provide better exterior aesthetics. Architects can design buildings without worrying about the installation of blinds or shades: they can therefore design buildings that are fully glazed, comfortable and beneficial to the health of the occupants.

To date, SageGlass Smart Windows have been installed in many buildings around the world. These include Nestlé's headquarters in Vevey (Switzerland), Schneider Electric's new IntenCity building in Grenoble (France), and Powerhouse Telemark in Porsgrunn (Norway). SageGlass is not just for offices. It can also be installed in buildings in other sectors: for example, in airports (Nashville airport in the United States), in hospitality buildings (Marriott Hotel in Geneva, Schwarzsee Hostellerie in Schwarzsee), in educational buildings (Ruselokka Skole in Norway), and many others.

SageGlass continues to refine its dynamic glass: the teams are working to improve the comfort and aesthetics of the glass even further.

@sage_glass

Raimond Starmans has been the Country Director for Germany and the Netherlands for Saint-Gobain's SageGlass since the end of 2019. Based in the Euregio in the Netherlands, Raimond is a strong promoter of sustainable dynamic glass solutions, together with his sales and technical support team. He has more than 30 years of work experience in the architectural glass industry, collaborating with architects, consultants, main contractors, applicators and fabricators in major projects.


Raimond Starmans

Architects of Conditions SUPERWORLD

Thomas Krall and Maxime Cunin.


As architects, we often propose inspiring images of alternative futures. We imagine what our cities could look like and how our homes could feel. But we often fall short in providing the conditions for these futures to become reality. Designing the future is a question of creating both its image and, as a common goal, the conditions for it. As architects, we should therefore not stop at the image, but craft the necessary infrastructures to translate our visions into reality.

This is precisely where we at Superworld thrive: at the intersection of inspiring tangible futures and the intangible context and modified conditions that can foster them. The creation of the necessary protocols, tools, contracts, and their digital or analog materializations creates a new kind of architect: one who designs his or her context, rather than only responding to it. We are condition architects.

Fig. 1: Negotiating Energy- Designing the processes of retrofitting, © Superworld

Supported spatial mediation of the roofscape

Above Rotterdam, a landscape of rooftops slowly appears in our common consciousness. Some of these rooftops are already today being used for various purposes such as creating additional living spaces, setting up communal food gardens, and setting up temporary cafes. Such activities prove the vast potential for systematically intensifying urban areas, addressing some of the city's broader development needs such as promoting biodiversity, ensuring affordable housing, generating renewable energy, and mitigating flood risks.

Roofs are private spaces evolving in a tight relationship with their public environment. The question is how to interweave the very individual spatial agenda of each roof and its owner with the development of sustainable futures for the collective. RoofScape aims to build that bridge. It aggregates individual contributions to shift planning from a few large urban interventions to many small ones, and explores what it means to support that shift through policy making.

The project proposes a data-driven method that, on one side, evaluates the existing capacity of roofs and, on the other side, simulates scenarios of desired alternative futures. In its prototype stage, RoofScape is the first self-initiated collaboration between Superworld, MVRDV Next, and the Municipality of Rotterdam, a prototype of how to co-create experimental tools and push the boundaries of the current protocols in city-making.

Fig. 2: Evaluation of existing capacity of the rooftops using RoofScape, © Superworld / MVRDV

RoofScape’s capacity analysis is carried out via a computational model that systematically evaluates individual buildings and compares their properties with the requirements of different rooftop programs. It answers questions like: How high is a roof? How much solar radiation does it receive? Is it in proximity to an urban green corridor? This analysis is performed at the scale of the entire city, using multi-layered open-source data linked to the digital twin of Rotterdam, and lets users explore both the macro level and the micro level of individual rooftops. The result determines how well distinct functions, such as green roofs, solar panels, public program or housing, can be allocated to a single roof.
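To make the idea of comparing roof properties with program requirements concrete, here is a minimal sketch. It is not the RoofScape model: the `Roof` attributes, the `PROGRAMS` table and all thresholds are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class Roof:
    """Hypothetical per-building attributes, echoing the questions in the text."""
    height_m: float
    solar_kwh_m2_year: float
    near_green_corridor: bool
    area_m2: float


# Hypothetical requirements per rooftop program. Each entry maps a criterion
# name to a check on a roof; the thresholds are not RoofScape values.
PROGRAMS = {
    "solar panels": {
        "enough sun": lambda r: r.solar_kwh_m2_year >= 900,
        "enough area": lambda r: r.area_m2 >= 20,
    },
    "green roof": {
        "near corridor": lambda r: r.near_green_corridor,
        "enough area": lambda r: r.area_m2 >= 50,
    },
    "housing": {
        "low enough": lambda r: r.height_m <= 25,
        "enough area": lambda r: r.area_m2 >= 100,
    },
}


def capacity(roof: Roof) -> dict[str, float]:
    """Return, per program, the share of its requirements the roof meets (0 to 1)."""
    scores = {}
    for program, criteria in PROGRAMS.items():
        met = sum(1 for check in criteria.values() if check(roof))
        scores[program] = met / len(criteria)
    return scores
```

Running `capacity` over every building in a city dataset would yield one score per roof and program, which is the kind of layer a city-scale viewer could then display.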


Fig. 3: RoofScape's capacity analysis, © Superworld / MVRDV

Rather than coming up with a definitive proposal or a masterplan of the entire rooftop landscape, RoofScape envisions a playful way to generate scenarios through iterative negotiations between what is possible on each roof and what is needed for the city and neighborhood. Functional capacities can be linked to user-defined targets for individual programs. Algorithms weigh trade-offs between the capacities of the rooftops and the desires of the user, creating a variety of plausible rooftop scenarios. Each scenario is visualized in an interactive 3D city model, which allows for qualitative evaluation by the user in addition to the quantitative evaluation (such as housing units, kWh of energy production, or m³ of water retention) that is automatically provided by the tool.
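One simple way to picture such a trade-off is a greedy allocation, sketched below. The article does not describe RoofScape's actual algorithms, so this is a stand-in technique; the function name, the input shapes and the area-based targets are all assumptions.

```python
def generate_scenario(capacities, areas, targets):
    """Greedy sketch: assign each roof to the program that maximizes
    (unmet target) x (suitability of this roof for that program).

    capacities: {roof_id: {program: score between 0 and 1}}
    areas:      {roof_id: usable area in m2}
    targets:    {program: desired total area in m2}
    Returns the assignment and the targets still unmet.
    """
    remaining = dict(targets)
    assignment = {}
    # Visit large roofs first so big targets are met with few interventions.
    for roof_id in sorted(areas, key=areas.get, reverse=True):
        best = max(
            remaining,
            key=lambda p: remaining[p] * capacities[roof_id].get(p, 0.0),
        )
        if remaining[best] <= 0 or capacities[roof_id].get(best, 0.0) == 0.0:
            continue  # nothing useful left to place on this roof
        assignment[roof_id] = best
        remaining[best] = max(0.0, remaining[best] - areas[roof_id])
    return assignment, remaining
```

Changing the targets and rerunning the function produces a different scenario each time, which loosely mirrors the iterative negotiation between what each roof allows and what the city needs.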

If we truly aim to see private roof spaces participating in collective climate transitions, we need to critically integrate all the different stakeholders that make up the ownership fabric of a city. Given the wide range of ownership types in the city, from individuals to housing cooperatives, associations, and corporate and public buildings, a comprehensive strategy for rooftop activation needs to engage stakeholders more than anything else. It needs to present potential roofscape developers with an idea of the spatial qualities as well as the environmental and economic impacts of these activations.

The question then becomes one not only of data, but also of infrastructure. It is about crafting the conditions for institutional and citizen initiatives to not only co-exist but complement and amplify each other. It is about providing the capacities for each to become gardeners of building processes. Fundamentally, it is about creating tools for a participatory infrastructure in which citizens are at the heart of the process. And digitalization will play a crucial role in making this next step.

Augmented citizens negotiations of retrofit

The energy transition of the existing built environment is more than a technical issue; it is also a social challenge. It means coping with existing ownership situations, intrusive retrofit measures, slow decision-making processes and the uneven value distribution of eventual retrofits. Large-scale retrofitting is urgently needed to reach our climate targets, but the decision-making process in buildings with shared ownership models is challenging. In the Netherlands, over 1.5 million homes are part of associations of co-owners, called VvEs (Vereniging van Eigenaars), which makes decision-making towards more sustainable buildings both more complex and highly relevant.

Negotiating Energy is a research project prototyping new methods for a more inclusive energy retrofitting process within home co-owner associations. The project is putting in place the scaffolding for a scalable model of simulation-based retrofit measures through Augmented Negotiations. It uses a digital energy modelling system to support the human and intrinsically social experience of collective decision making.

The project develops this approach within the real conditions of two communities. The first is a self-organized living group with a collective governance model, where ongoing decision-making about energy as a resource is already common practice. The model is then tested in a more regular association of co-owners in the historic center of Amsterdam, exploring how negotiations of retrofit measures can be driven collectively. The selected cases are monumental, protected buildings. These are notoriously the most difficult to retrofit, adding another layer of complexity for homeowners, who need to deal with extra constraints and approval from the monument committee, and are therefore the hardest cases in which to see clear paths to upscaling.

Fig. 4: Augmented collective negotiations. © Superworld

Fig. 5: Negotiating energy retrofit possibilities - Facade and windows © Superworld

Through interviews and workshops, the researchers incorporate the community’s preferences into a parametric modelling process to guide them in their decision. Parametric design and energy modelling tools such as Grasshopper and Ladybug were used to create the multicriteria model and iterate through all possible solutions, rather than to perform a simple data assessment. The goal is to define a catalogue of realistic and best-performing collective energy measures (post-insulation, architectural interventions) that the group can negotiate and decide upon. In the playful form of a physical card game, complex digital modelling is translated into an inclusive medium, accessible not only to experts in retrofitting but to every citizen. It helps a community decide whether they wish to insulate the facade, replace the windows and/or change their boiler, based on information such as costs, carbon savings and energy bill reduction for each measure.
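The step from "all possible solutions" to a short catalogue can be sketched as an enumeration followed by a filter. The example below is an assumption-laden illustration, not the project's Grasshopper model: the figures in `MEASURES` are invented, savings are simply added up (real measures interact), and the Pareto filter is one possible reading of "best-performing".

```python
from itertools import combinations

# Hypothetical measures with made-up figures: (cost in EUR, CO2 saving in
# kg/year). Real values would come from the energy model.
MEASURES = {
    "insulate facade": (40_000, 3_000),
    "replace windows": (25_000, 1_800),
    "change boiler": (10_000, 1_500),
}


def all_packages(measures):
    """Enumerate every non-empty combination with summed cost and saving."""
    names = sorted(measures)
    packages = []
    for size in range(1, len(names) + 1):
        for combo in combinations(names, size):
            cost = sum(measures[m][0] for m in combo)
            saving = sum(measures[m][1] for m in combo)
            packages.append((combo, cost, saving))
    return packages


def pareto_front(packages):
    """Keep packages that no other package beats on both cost and saving."""
    front = []
    for combo, cost, saving in packages:
        dominated = any(
            c <= cost and s >= saving and (c < cost or s > saving)
            for _, c, s in packages
        )
        if not dominated:
            front.append((combo, cost, saving))
    return front
```

Each surviving package could then become one card in the game, carrying its cost and carbon saving so that the group negotiates over a manageable set of options.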

The energy transition is about defining new protocols in which societal missions are pursued and facilitated by better system design. At the intersection between strategic design, building technology and citizen science, the exploratory research Negotiating Energy aims to design a replicable and collective retrofit approach by reframing the cultural notion of energy. It brings together the architects from Superworld with knowledge institutions TU Delft and AMS Institute and combines data-driven and social science approaches to solve the decision bottleneck faced by VvEs regarding energy retrofitting.

The project amplifies the conventional negotiation procedure by integrating a data-driven assessment of possible retrofitting measures and a collectively accessible negotiation medium for non-experts into the process. It explores how to translate energy modelling into an enhanced social experience for constructive collective negotiation and decision-making.

Deepening citizens' understanding of their retrofitting possibilities, supported by institutional and local knowledge, aims to increase capacities within owner associations for more impactful energy refurbishments. Ultimately, the project seeks to facilitate the energy transition in the built environment by proposing an intrinsically social, yet data-driven framework for truly collective energy retrofitting.

Designing conditions

These two projects are some of Superworld’s recent probes on how, using data and tooling, we can create the conditions for sustainable futures. Both explore the design of the object and the medium, the support of conversations and negotiations, whether it is about space or resources. They emphasize the importance of imagining not only the inspiring tangible futures, but also the necessary organizational and social infrastructures to support that vision.


Today, if we, as architects, are willing to be conditions builders for the complex social, environmental, and economic challenges of our era, we must engage with the technologies, processes, and culture that can enable or prevent alternative inspiring futures. We need to adapt to emerging technologies and navigate complex social, political, and economic environments. We can design our own context, rather than being restricted to responding to it. We can be conditions architects.

@build.superworld

Superworld is an international practice operating at the interfaces of architecture, technology and strategic design. Our work focuses on system thinking as a framework for societal transition and social change, and is guided by discovery, design and implementation. Our approach is to explore and challenge the underlying operating systems governing the built environment and to prototype the physical embodiment of what sustainable futures could look like.


Thomas Krall

Maxime Cunin

AiDAPT LAB

AI lab for design, analysis and optimization in Architecture and Built Environment

AI FOR A SUSTAINABLE AND RESILIENT BUILT ENVIRONMENT

Empowering decision-making processes of architects and engineers through AI, across different scales and life-cycle design phases of the built environment, is a key lever for the necessary sustainability transitions in the age of data and digitalization.

Dr. Charalampos Andriotis, Dr. Seyran Khademi

Fig. 1: Exploiting topology © Casper van Engelenburg

At AiDAPT, computer vision, data science, and decision optimization methods come together through the development of deep learning, reinforcement learning, and uncertainty quantification frameworks that help us analyze and synthesize decisions for architectural and structural systems. This entails operation and reasoning in the complex spaces created by the confluence of diverse imagery data, noisy sensory measurements, virtual structural simulators, and uncertain numerical models. Application themes of interest range from automatic recognition of architectural drawings in large databases and generative design recommendations (initial design phase) to predictive intervention planning for life extension, structural risk mitigation, and multi-agent optimization of built systems (life-cycle optimization phase).

Bridging fundamental and applied AI, AiDAPT aims at creating new scientific paradigms towards a more reliable and sustainable built environment.

The AiDAPT Lab is part of the TU Delft AI Labs programme, a university-wide initiative. The 24 TU Delft AI Labs are committed to education, research and innovation in the field of AI, data and digitization. These labs embody the bridge between research 'in' and 'with' AI, data and digitization. They promote cross-fertilization between talent and expertise and work to increase the impact of AI in the fields of natural sciences, design techniques and society.

AiDAPT Lab is a BK lab connecting two departments, AE+T & Architecture, maintaining also strong connections in research and education with other departments and faculties, such as the Computer Science Department.

AiDAPT is co-directed by Seyran Khademi (Architecture) and Charalampos Andriotis (AE+T) and currently involves four core PhD students, who work on some of the grand challenges of the built environment at the interface of architectural design and structural engineering.


Fig. 2: AiDAPT logo. © AiDAPT

Deep Image Search of Architectural Drawings

Casper's research is about the development of self-learning algorithms that can effectively understand visual as well as compositional patterns from architectural image data, ultimately to equip software to become a more competent partner in design. He develops deep learning frameworks that learn low-dimensional, task-agnostic representations of architectural drawings, focusing specifically on deep image search, i.e., image retrieval algorithms. This research line builds a foundation for large-scale quantitative analysis of archival and linked visual data. Besides the theoretical work, his aim is to connect it to practice by enhancing architecture-specific search engines.
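The retrieval step of such a search engine can be illustrated in a few lines once each drawing has been reduced to a low-dimensional vector. The sketch below assumes those vectors already exist; the toy three-dimensional `index`, the drawing names and the choice of cosine similarity are illustrative assumptions, not details from the research.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query, index, k=3):
    """Return the k drawing ids whose embeddings are most similar to the query."""
    ranked = sorted(index, key=lambda name: cosine(query, index[name]), reverse=True)
    return ranked[:k]


# Toy embeddings standing in for the output of a trained network.
index = {
    "plan_a": [0.9, 0.1, 0.0],
    "plan_b": [0.0, 1.0, 0.2],
    "plan_c": [0.8, 0.3, 0.1],
}
```

In a real system the query vector would come from passing a query drawing through the same network, and the linear scan would typically be replaced by an approximate nearest-neighbour index for large archives.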

Visible Context in Architectural Environmental Design for AI-driven Building Design

To decarbonize by 2050, as agreed in the Paris Climate Accord, immediate action to lower the environmental impact of the building sector is needed. Fatemeh’s research, titled “Visible Context in Architectural Environmental Design”, aims to incorporate computer vision to help designers navigate environmental building design. Accordingly, the following projects are planned along her Ph.D. trajectory:

(1) a building context visualization pipeline based on big data from environmental simulation results (e.g. daylight, view to outdoors, and noise), (2) environmental spatial zoning design using computer vision, and (3) development of an intelligent environmental design tool to serve as an assistant to building performance analysts.

Structural Integrity Management of Large Scale Built Environment Networks

Structural systems must satisfy multiple performance and functionality requirements during their life cycle. However, they are subjected to different degradation mechanisms due to time-dependent stressors and hazards, affecting the aforementioned requirements and preventing the structural systems from functioning properly. In view of an aging and growing built environment, intervention and data-collection strategies need to be planned efficiently to monitor and maintain structural integrity so that total life-cycle costs and risks are minimized. To this end, Ziead aims to develop a Deep Reinforcement Learning (DRL)-based optimized decision-making framework that has the potential to tackle the structural integrity management problem considering different structural, functional and socioeconomic metrics.

Fig. 3: Compositional thinking. © Casper van Engelenburg

Value of Information and Multi-Agent Learning for Structural Life-Cycle Extension

Structures in the built environment deteriorate continuously, which makes inspection and maintenance an integral part of their life cycle. Within this realm, Prateek’s research centers on fast and efficient computation of optimal maintenance plans for prolonging the life of structures and harnessing the downstream socioeconomic benefits. To achieve this, he is interested in leveraging deep reinforcement learning, a machine learning paradigm designed to learn optimal plans via experience, to devise plans that respect constraints and tackle real-world challenges, whilst also being reliable and explainable.
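What an "optimal maintenance plan" means can be shown on a toy problem small enough to solve exactly. The sketch below uses plain tabular value iteration as a stand-in; it is not the deep reinforcement learning the researchers develop, and the deterioration model, costs and parameter names are all hypothetical.

```python
def plan_maintenance(n_states=4, p_worse=0.3, repair_cost=5.0,
                     failure_cost=50.0, gamma=0.95, sweeps=500):
    """Value iteration on a toy deterioration model.

    State 0 is as-new and state n_states - 1 is failed. Each step, 'wait'
    lets the structure worsen by one state with probability p_worse, while
    'repair' pays repair_cost and resets the structure to state 0. Every
    step spent in the failed state costs failure_cost. Returns the
    cost-minimizing action for each state.
    """
    failed = n_states - 1
    value = [0.0] * n_states  # expected discounted cost-to-go per state

    def q_values(s):
        running = failure_cost if s == failed else 0.0
        worse = min(s + 1, failed)
        wait = running + gamma * ((1 - p_worse) * value[s] + p_worse * value[worse])
        repair = running + repair_cost + gamma * value[0]
        return {"wait": wait, "repair": repair}

    # Repeated Bellman updates; each sweep reads the previous sweep's values.
    for _ in range(sweeps):
        value = [min(q_values(s).values()) for s in range(n_states)]

    policy = {}
    for s in range(n_states):
        q = q_values(s)
        policy[s] = min(q, key=q.get)
    return policy
```

Tabular methods like this stop scaling once many components, noisy inspections and budget constraints enter the picture, which is the gap that learning-based approaches aim to fill.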


Dr. Seyran Khademi

Dr. Charalampos Andriotis

Casper van Engelenburg

Prateek Bhustali

Fatemeh Mostafavi

Ziead Metwally


Fig. 1: Game Piece configurations.

A PARTICIPATORY DESIGN GAME FOR SOCIAL HOUSING IN MANAUS, BRAZIL

Vasilka Espinosa, TU Delft

Manaus is a city in the north of Brazil, marked by two periods of strong economic growth in successive centuries, which led to rapid population growth and high demand for homes. Unfortunately, the formal market did not meet the increasing housing demand, especially among lower-income families, who had to resort to slums, squats, and informal settlements. During this period, migrants from different backgrounds came to Manaus looking for job opportunities, among them indigenous people of different ethnicities, traditional peoples of the Amazon, and people from other states.

Mentors

Dr. Ir. Pirouz Nourian

Dr. Bruno Amaral de Andrade

Ir. Shervin Azadi

Problem assessment

The Government implemented housing policies with the goal of providing low-income residents with access to adequate and regular housing. For that reason, Manaus has the biggest social housing complex ever built in Brazil, with almost 9,000 housing units. Despite the initiative to build this complex, the residents did not participate in the design process, and just two housing configurations were built. Those houses could not fulfil the users’ requirements, since these differ between dwellers in both objective and subjective ways. Each family has a different understanding of the social logic of space and how space is configured. Some families who eventually lived in this complex struggled to adapt to the new housing, leading them to sublet and return to their previous houses, or to build illegal and inadequate extensions to fulfil their spatial needs.

Being born and raised in Manaus allowed me to follow the transformation and impacts that the city went through during the construction of this housing complex. This subject motivated me to think about and debate ways to allow inhabitants to custom design their homes, so that their social and cultural patterns of using space can prevail while keeping the construction cost down. Another question was how to facilitate the discussion and participation of multiple actors in the design process while keeping that process simple, without the obstacle of technical knowledge, and still ensuring a certain level of quality. Although I used my hometown as a case study, similar situations exist in other countries, and it would be relevant to create a game that can adapt to any context. Therefore, I developed a meta-game framework, highlighting what must be changeable to be suitable for any particular context.

Research Framework

The post-occupancy renovation process mentioned earlier highlights several gaps in mass housing production, such as unfulfilled user needs, minimal or no user participation during the design process, and the need to renovate the housing unit to adapt it to the user’s preferences and needs. Through the literature research, I could match adequate methods and concepts to these problems. For example, a participatory design method allows multiple actors to take part in the design process, and a serious game method can engage and empower people to express their requirements. Meanwhile, mass customization, modularity and generative design enable replicability and affordability to be achieved together with meeting the individual needs of diverse users.


Fig. 2: User-built housing renovation to existing social housing.

Other methods and concepts used to study the context and to facilitate the applicability of the game were (a) Space Syntax, which examines space independently of its form, shape, dimension, orientation, or location, concentrating instead on the topology of the space, which reflects and embodies a social pattern, incorporating the collective values and social structure of a society, (b) Cultural Values, focusing especially on the Social Values that make people create a memory of a space and feel attached to it, which in turn brings a sense of identity and belonging to a place, and (c) Open Building, a bottom-up design approach that introduces several levels of decision-making in the building process and allows building parts with different life cycles to be decoupled.

The literature research helped in developing design principles for the game. The result was a construction game that mixes a board game with Lego pieces. Each Game Piece is compatible with Lego and has different dimensions, giving players the freedom to choose the function it can represent, while the gameboard represents the plot on which the housing is built. In addition, cards are used as a play mechanism to engage the players. The game is divided into five stages, which order the design decision-making process from abstract to concrete.


Fig. 3: Game Piece variation.

Game stages

Planning is the first stage, where the end users express and specify their criteria, preferences, programme of requirements, spatial organization, and social logic of the space. This stage aims to reach spatial specification, spatial relation, and collective design goals. One of the outcomes will be a draft configuration made of Game Pieces that they will use as a guide for the next stage of the game.

The Zoning phase involves game mechanisms, whose role is to engage players, motivate them to work together, and induce them to discuss and make commitments. Activity Cards used during this stage of the game are adapted to the context, since they translate the possible needs and preferences of the user, based on Context Information from the previous stage.

Routing allows the end user to customize the interior of each space module through a modular system of furniture, allowing the visualization of hidden corridors and an understanding of the walking flow from one space to another. As part of this procedure, the end user must specify where the openings will be placed and of which type. The shape grammar and modular furniture can vary according to the Context.

The Evaluation phase checks the spatial quality and validity of the user-generated design. This stage aims to report whether a design is valid or not. If it is invalid, the game offers a recommendation to improve the design and returns the players to the Zoning stage. The criteria used to evaluate the design should be adjusted to the context in which the game is applied and should therefore follow the local Building Code.
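If this stage were automated, the check could look roughly like the sketch below, which returns a verdict together with recommendations. The two rules used here (minimum room areas and reachability from the entrance through the access graph) and the values in `MIN_AREA` are hypothetical examples, not criteria taken from the game or from any Building Code.

```python
from collections import deque

# Hypothetical minimum areas in m2; a real game would take these from the
# local Building Code.
MIN_AREA = {"bedroom": 8.0, "kitchen": 4.0, "bathroom": 2.5, "living": 10.0}


def evaluate(rooms, doors, entrance):
    """Check a design and return (is_valid, recommendations).

    rooms:    {room_name: (room_type, area_m2)}
    doors:    list of (room_a, room_b) connections
    entrance: name of the room reached from outside
    """
    recommendations = []
    for name, (kind, area) in rooms.items():
        if area < MIN_AREA.get(kind, 0.0):
            recommendations.append(f"enlarge {name} to at least {MIN_AREA[kind]} m2")

    # Breadth-first search over the access graph, starting at the entrance.
    neighbours = {name: set() for name in rooms}
    for a, b in doors:
        neighbours[a].add(b)
        neighbours[b].add(a)
    reached, queue = {entrance}, deque([entrance])
    while queue:
        for nxt in neighbours[queue.popleft()]:
            if nxt not in reached:
                reached.add(nxt)
                queue.append(nxt)
    for name in rooms:
        if name not in reached:
            recommendations.append(f"add an opening so {name} is reachable")

    return (not recommendations, recommendations)
```

An empty recommendation list means the design passes; otherwise the messages give players something concrete to act on when they return to the Zoning stage.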

Shaping is the last stage. It concerns the materialization of the building geometry, determining the aesthetics of the design and the concretization of the building. Different options will be available so the players can select the type, size, design and position of walls and openings from the wall modules. This stage must be tailored to the local architecture and style of the place where the building is being inserted.


Fig. 4: Example translation Game Pieces to floorplan.

Design Game Applicability

For future work and potential application, this game could be used as: (1) a learning tool that helps players gain knowledge about their spatial decisions and their effect on spatial quality and validity, or understand how quality and validity differ between contexts, (2) a research tool to identify social and cultural patterns of using space and their space syntax relations, (3) a computational tool that can automatically generate 2D and 3D drawings, and (4) a game to be used in any other housing development.

Reflection

The proposed game can only provide the right mechanism for a particular social and cultural context through (1) end-user participation, (2) context information and (3) adaptation of some of the game development processes, like Activity Cards, Evaluation, and Shape Grammar. The danger of proposing design solutions that reflect only our own views and preferences is the same as that of trying to fit one solution to a problem with plural solutions. Design is not neutral and is never a solitary act. Besides, design influences how people behave and live their lives. That is why it is important to understand that design is an ethical activity in its own right, and it is fundamental to consider the complexities of the users, such as their background, pattern of habitation, spatial values, and social logic of space.

Vasilka Espinosa graduated in Architecture and Urbanism from UFAM in Brazil, followed by a Master's in Building Technology from the TU Delft. Vasilka believes that design should have a societal relevance and that the technology we have access to today should provide a better life for everyone; independent of social, economic, cultural and geographic background, gender, race and ethnicity. Even though this is not a reality yet, she thinks we all have a responsibility to make it accessible to everyone.


Vasilka Espinosa

@vasilkaespinosa


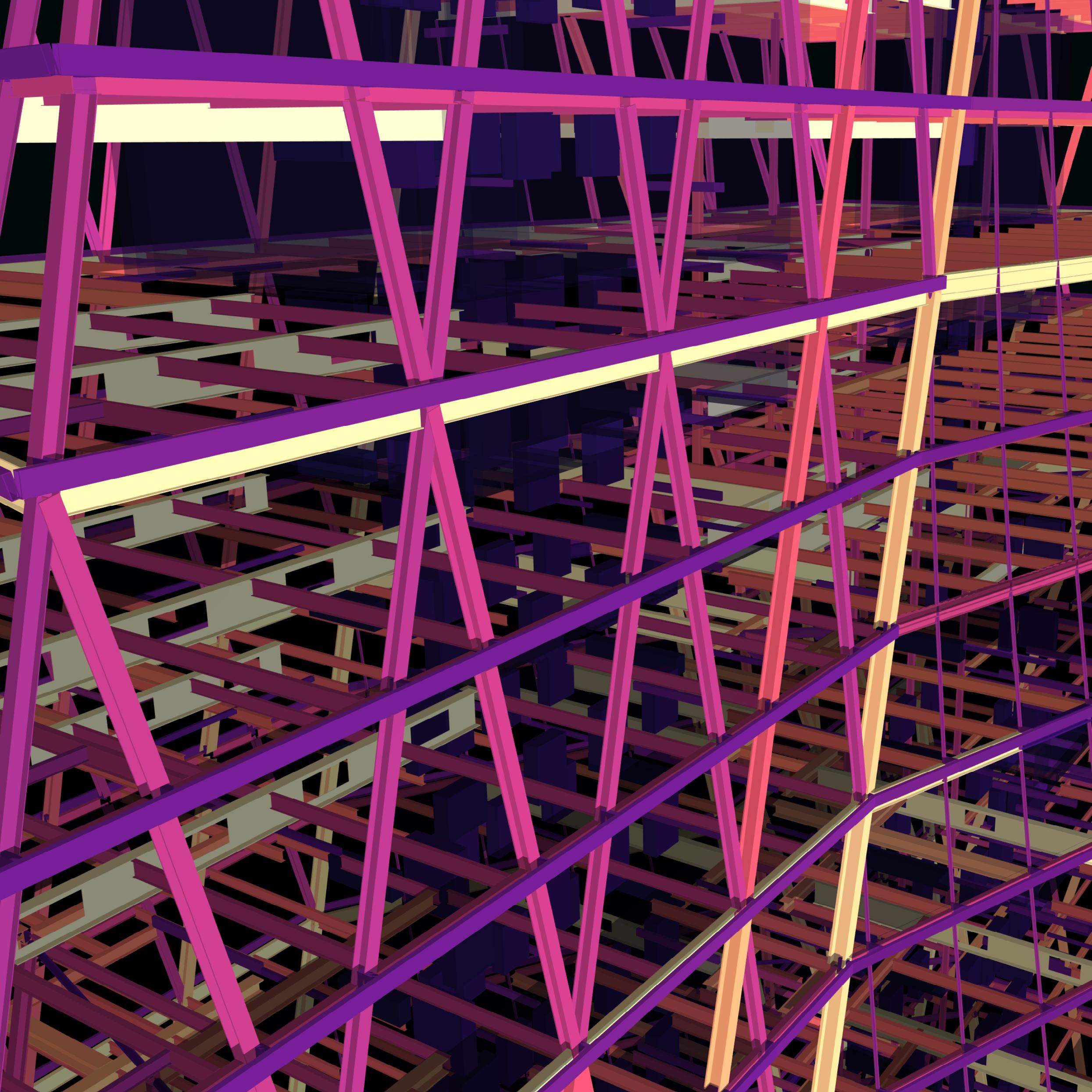

Fig. 1: Prototype user interface within Dynamo (Revit) developed for the project “Brücke über den Graben” in St. Gallen, Switzerland (Senn Resources AG) (Balmer, Kuhn et al., 2022)

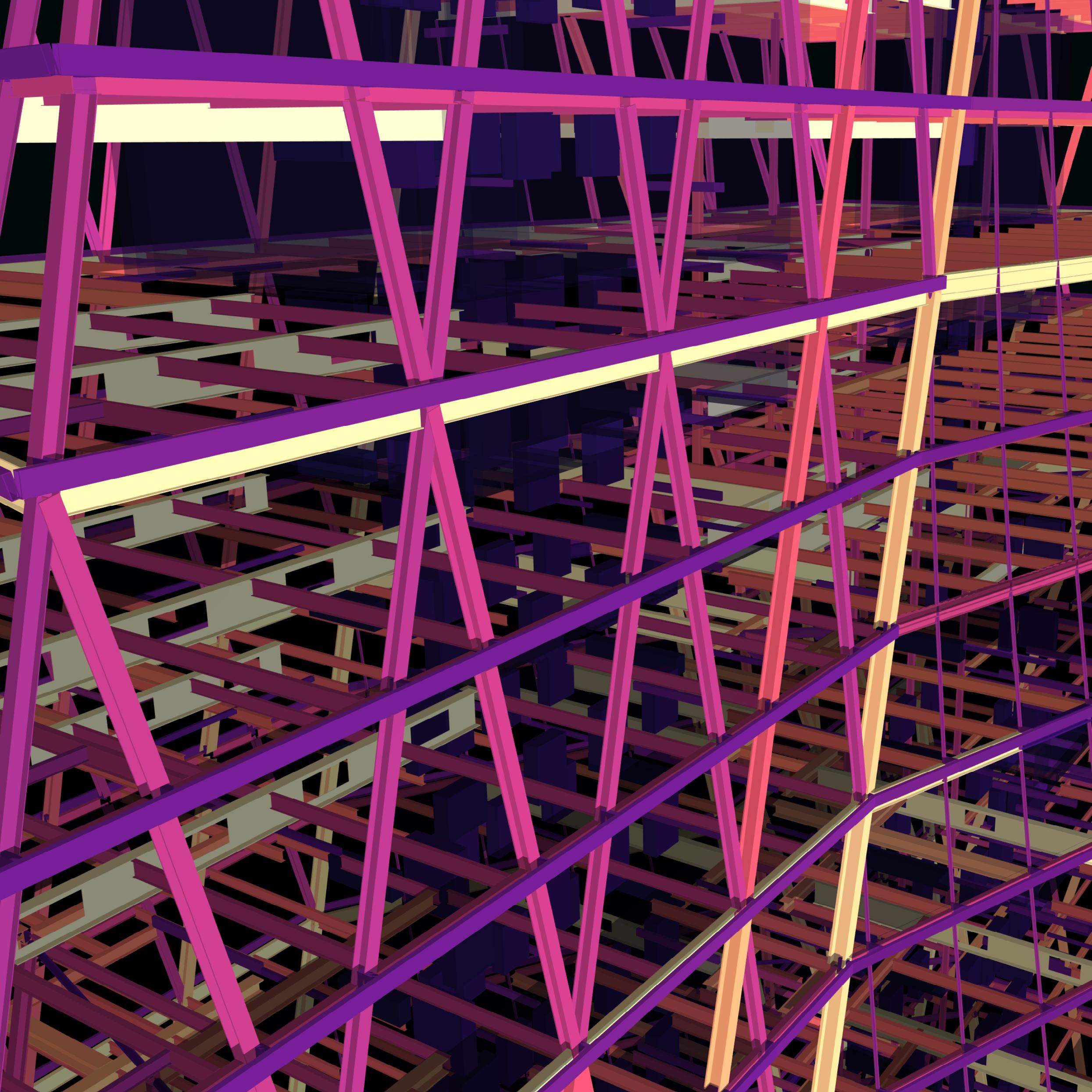

DEEP-LEARNING-BASED STRUCTURAL BRIDGE DESIGN

We investigate AI-augmented generative design, analysis and optimisation of concrete bridge structures. This research is conducted as an interdisciplinary collaboration between the Chair of Concrete Structures and Bridge Design at ETH Zurich (kfm research) and the Swiss Data Science Centre (SDSC). The project is associated with the Design++ research initiative at ETH Zurich, which focuses on advancing the field of design through interdisciplinary collaboration and innovation. The interdisciplinary project team involves: Sophia Kuhn, Rafael Bischof, Vera Balmer, Dr. Michael Kraus, Dr. Luis Salamanca, Prof. Dr. Walter Kaufmann and Prof. Dr. Fernando Perez-Cruz.

Sophia Kuhn, Dr. Michael Kraus, Prof. Dr. Walter Kaufmann

As our society faces increasing demand for sustainable infrastructure, the need for efficient and effective design tools is greater than ever. One promising approach is the use of artificial intelligence (AI) in the conceptual design stage of a construction project to allow for informed decisions based on data. Our research project focuses on using AI for the design, analysis and optimisation of concrete bridge structures.

The conceptual design stage of projects in the architecture, engineering and construction (AEC) industry commonly lacks performance evaluations and relies predominantly on the structural designer’s expertise. However, bridge design projects in particular involve great complexity due to the large number of interdependent design parameters, multiple and often conflicting objectives, and the many stakeholders involved. Consequently, current design practice leads to a time-consuming, iterative design process, often requiring expensive design alterations in later project phases. Emerging generative design methods facilitate design space exploration based on quantifiable performance metrics, yet remain time-consuming and computationally expensive. Pure optimisation methods, on the other hand, ignore non-quantifiable aspects (e.g. aesthetics or constructability). Recent breakthroughs in AI have transformed many industries and research fields and show great potential to improve this situation. However, AI has hardly been adopted in the AEC industry, mainly due to the lack of systematised, freely accessible data and the need to incorporate in-depth domain knowledge.

At its core, our approach is based on a variant of conditional variational autoencoders (cVAE) that we developed (see Figure 2). We generate synthetic data using parametric modelling and state-of-the-art simulation software, which we then use to train the cVAE. The advantage of the cVAE over traditional simulation software is that it can speed up the performance prediction based on a set of design parameters (Forward Design) while also being able to generate new designs conditioned on a set of performance objectives (Inverse Design). The latter remains impossible with state-of-the-art simulation software, such as finite element analysis software.

Fig. 2: Conditional Variational Autoencoder (cVAE) architecture implemented within the toolbox (Balmer, Kuhn et al, 2022). x: Design feature vector, y = P(x): Performance vector, ŷ: Predicted performance vector, x̂: Generated design feature vector conditioned on a chosen performance vector y, z: latent representation.
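The synthetic data generation mentioned above can be pictured with a small sketch. It is only an illustration under stated assumptions, not the project's pipeline: the parameter names and ranges are hypothetical, and `toy_simulation` is a closed-form stand-in (a simply supported rectangular beam under uniform load) for the parametric model and simulation software used in the research.

```python
import random

# Hypothetical design parameters and sampling ranges (illustrative only).
PARAMETER_RANGES = {
    "span_m": (10.0, 40.0),
    "deck_depth_m": (0.4, 1.5),
    "deck_width_m": (2.0, 5.0),
}


def sample_design(rng):
    """Draw one design feature vector x uniformly from the parameter ranges."""
    return {name: rng.uniform(low, high)
            for name, (low, high) in PARAMETER_RANGES.items()}


def toy_simulation(design, load_kn_per_m=20.0, density_kn_per_m3=25.0):
    """Stand-in for a structural simulation: returns a performance vector y = P(x)."""
    span = design["span_m"]
    depth = design["deck_depth_m"]
    width = design["deck_width_m"]
    self_weight = density_kn_per_m3 * depth * width   # kN/m
    total_load = load_kn_per_m + self_weight          # kN/m
    max_moment = total_load * span ** 2 / 8.0         # kNm, simply supported beam
    section_modulus = width * depth ** 2 / 6.0        # m^3, rectangular section
    return {
        "max_stress_kpa": max_moment / section_modulus,
        "concrete_volume_m3": span * depth * width,
    }


def generate_dataset(n_samples, seed=0):
    """Pair every sampled design x with its simulated performance y."""
    rng = random.Random(seed)
    dataset = []
    for _ in range(n_samples):
        x = sample_design(rng)
        dataset.append((x, toy_simulation(x)))
    return dataset


dataset = generate_dataset(1000)
```

Each entry of `dataset` is one (design, performance) pair of the kind a surrogate model could be trained on.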

From a dataset of synthetic bridge structures, we extract design features x, such as dimensions, materials and loads, and performances P, such as load-bearing capacity, environmental impact and cost. We then use the features as inputs to the cVAE, which simultaneously learns to (i) generate a latent representation z, (ii) predict the performance ŷ, and (iii) reconstruct x. Once we have trained the cVAE on synthetic data, we can use it to generate new designs. To do this, we start with a set of performance objectives y, such as a desired load-bearing capacity or a target cost. We then condition the cVAE on these objectives and use it to generate a set of design parameters x that satisfy these objectives.
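The data flow described above can be sketched in a few lines. This is a dependency-free illustration with random, untrained weights, not the authors' cVAE variant: the layer sizes, the single hidden layer, and the choice to feed the decoder the concatenation of z and the performance vector are all assumptions made for the example.

```python
import math
import random

rng = random.Random(42)

# Hypothetical sizes of x, y, z and the hidden layer.
N_X, N_Y, N_Z, N_H = 4, 2, 3, 8


def init_linear(n_in, n_out):
    """Random, untrained weights; a real model would learn these from data."""
    weights = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def linear(layer, v):
    weights, bias = layer
    return [sum(w * a for w, a in zip(row, v)) + b for row, b in zip(weights, bias)]


def tanh_vec(v):
    return [math.tanh(a) for a in v]


enc_hidden = init_linear(N_X, N_H)
enc_mu = init_linear(N_H, N_Z)
enc_logvar = init_linear(N_H, N_Z)
perf_head = init_linear(N_H, N_Y)
dec_hidden = init_linear(N_Z + N_Y, N_H)
dec_out = init_linear(N_H, N_X)


def encode(x):
    """Map x to the latent mean, latent log-variance and predicted performance."""
    h = tanh_vec(linear(enc_hidden, x))
    return linear(enc_mu, h), linear(enc_logvar, h), linear(perf_head, h)


def reparameterise(mu, logvar):
    """Sample z = mu + sigma * eps with eps drawn from a standard normal."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def decode(z, y):
    """Generate design features from latent sample z, conditioned on performance y."""
    return linear(dec_out, tanh_vec(linear(dec_hidden, z + y)))


def forward_design(x):
    """Forward Design: predict the performance of a given design."""
    return encode(x)[2]


def inverse_design(y_target, n_designs=5):
    """Inverse Design: sample z from the prior and decode conditioned on y_target."""
    return [decode([rng.gauss(0.0, 1.0) for _ in range(N_Z)], y_target)
            for _ in range(n_designs)]


x = [0.5, -0.2, 0.1, 0.9]                 # one (normalised) design feature vector
mu, logvar, y_hat = encode(x)             # (i) latent statistics and (ii) predicted performance
z = reparameterise(mu, logvar)
x_hat = decode(z, y_hat)                  # (iii) reconstruction of x
candidates = inverse_design([0.3, -0.1])  # several designs for one performance target
```

Because z is sampled, one performance target yields several candidate designs rather than a single answer, which is what makes the inverse direction useful for exploration.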

A first prototype was developed for the pedestrian bridge “Brücke über den Graben” in St. Gallen (Switzerland) planned by Basler & Hofmann AG (Balmer, Kuhn et al, 2022). Using Dynamo within Revit and SOFiSTiK, a data set was generated for the real-life project situation at hand. It was shown that both the forward and inverse design mappings can be learned accurately by the cVAE implementation and that the evaluation time is substantially reduced after an initial data generation and training phase. Additionally, the deep neural network model provides partial derivatives, which give an understanding of the parameter and performance sensitivities. The prototype interface within Dynamo can be seen in Figure 1 (previous page).

Researchers from kfm research and SDSC are developing a toolbox that implements the introduced approach so that it can be used within the structural design stage of construction projects today. The software toolbox is designed to be structure-agnostic and can therefore be applied to any structure type with a parametric representation. The implemented software modules are shown in the system map in Figure 3. In addition to the synthetically generated data set, the framework is further informed by a second database of existing bridge structures. Prior models are trained to extract the design patterns used in existing bridge structures in order to inform and validate the Forward and Inverse Design Models (Kuhn et al, 2022).

Applied to a structural design project, the framework in development allows engineers to rapidly explore the design space and identify optimal designs that meet the given performance objectives. It provides an understanding of the dependencies between parameters and performances in high-dimensional design spaces and allows design rules to be derived for the analysed structure types. It can therefore support key decisions in the conceptual design stage of an AEC project and foster efficient, economic and sustainable bridge structures.
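The dependencies between parameters and performances can be made concrete with a small sensitivity sketch. A neural network would normally supply such partial derivatives through automatic differentiation; central finite differences are swapped in here to keep the example dependency-free. The function `surrogate_performance` and its coefficients are hypothetical and merely stand in for a trained forward model.

```python
def surrogate_performance(x):
    """Hypothetical stand-in for a trained forward model: [span, depth] -> [cost, capacity]."""
    span, depth = x
    cost = 2.0 * span * depth + 0.5 * span
    capacity = 30.0 * depth ** 2 / span
    return [cost, capacity]


def sensitivities(model, x, step=1e-5):
    """Central finite-difference Jacobian: rows are performances, columns are parameters."""
    base = model(x)
    jacobian = [[0.0] * len(x) for _ in base]
    for j in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[j] += step
        x_minus[j] -= step
        up, down = model(x_plus), model(x_minus)
        for i in range(len(base)):
            jacobian[i][j] = (up[i] - down[i]) / (2.0 * step)
    return jacobian


# Sensitivities at span = 20 and depth = 1 (arbitrary illustrative values).
J = sensitivities(surrogate_performance, [20.0, 1.0])
```

In this toy model, `J[0][1]` tells how strongly the cost reacts to a change in depth, and `J[1][0]` is negative because capacity drops as the span grows.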

However, the use of AI in bridge design has limitations. The generated designs must still be evaluated and refined by a structural engineer to ensure their safety and feasibility. Therefore, a focus within the framework development is the interactivity between the AI and the structural designer. While the AI augments the analytical and creative capabilities of the engineer, the structural engineer should be able to steer the design process, bringing in additional information about the individual project context and non-quantifiable or even subjective performance goals (e.g. constructability and aesthetics).

In conclusion, the use of AI in the conceptual design stage of a bridge design project has the potential to greatly enhance the efficiency and effectiveness of the design process. Our research project primarily focuses on using cVAE architectures as meta-models for analysing and optimising concrete bridge structures. In addition, we apply the toolbox in research collaborations to other structural design examples (e.g. steel grid shell structures and timber frame connections), demonstrating the broader applicability of our approach and its potential to revolutionise the design process in bridge design and beyond.

References:

(Balmer, Kuhn et al, 2022): V. M. Balmer, S. V. Kuhn, R. Bischof, L. Salamanca, W. Kaufmann, F. Perez-Cruz, and M. A. Kraus, “Design space exploration and explanation via conditional variational autoencoders in meta-model based conceptual design of pedestrian bridges,” 2022.

(Kuhn et al, 2022): S. V. Kuhn, R. Bischof, G. Klonaris, W. Kaufmann, and M. A. Kraus, “ntab0: Design priors for AI-augmented generative design of network tied-arch-bridges,” Forum Bauinformatik 2022.


Fig. 3: Software modules and their connectivity of the toolbox in development. (DSE: Design Space Exploration, MOO: Multiple Objective Optimisation, XAI: Explainable Artificial Intelligence)

Sophia is a doctoral researcher at the Chair of Concrete Structures and Bridge Design at ETH Zurich. Her research focuses on the intersection of structural engineering and artificial intelligence. Within Design++ projects together with the Swiss Data Science Centre (SDSC), she currently works on the development of a domain-aware AI Co-Pilot for the conceptual design of concrete bridge structures. She is a teaching assistant for classes on Scientific Machine Learning and Structural Concrete. Sophia Kuhn studied civil engineering at ETH Zurich and the University of California, Berkeley.

Dr. Kraus is a Senior Researcher at the Chair of Concrete Structures and Bridge Design and Co-leader of the Immersive Design Lab of the Design++ Initiative at ETH Zurich. He researches and teaches in Scientific Machine Learning for the built environment. His work includes the development of theory-informed machine and deep learning methods and their application with a particular focus on structural design, component verification and generative design. In order to ensure the transfer of knowledge into practice, he runs companies with a focus on industry-related applications of artificial intelligence in addition to his university activities.

Prof. Dr. Kaufmann is the Chair of Structural Engineering (Concrete Structures and Bridge Design) at ETH Zurich. His research focuses on the load-deformation behaviour of structural concrete, structural safety evaluation of existing bridges and buildings, as well as innovative interdisciplinary research projects. He additionally acts as board member and expert consultant for the engineering firm dsp Ingenieure & Planer AG. He has been successfully involved in several bridge design competitions, structural engineering projects, and expert appraisals, particularly regarding the structural safety evaluation and retrofit of existing bridges.


Prof. Dr. Walter Kaufmann @ethzurich

Dr. Michael Kraus @ethzurich

Sophia Kuhn @ethzurich

THE AUGMENTED ARCHITECT

LINK Arkitektur


ENHANCING THE ARCHITECTURAL PROCESSES WITH DATA-DRIVEN METHODS FOR SUSTAINABLE AND HIGH-QUALITY DESIGN

The demand for sustainability and documentation in construction projects has led to unforeseen obstacles and challenges. This results in unnecessarily complex projects that struggle to meet financial requirements, schedules, and architectural quality. The risk of taking a wrong turn based on poor analysis can decide the fate of a project. At LINK Arkitektur, we address these challenges by combining data-driven methods with an architectural mindset to create higher efficiency and quality in our projects.

Fig. 1: Visualised data for everyday use.

Taking the right turn

Our process, called “The Augmented Architect,” involves using digital processes and workflows based on AI and automation to connect data-driven tools with the architectural design process. We have established LINK IO, a computational network connecting architects, computational designers, and data specialists to develop a toolbox for augmented analysis. Our approach enables us to evaluate our designs throughout the entire process and create architecture with impact.

Developing Building Sketcher 2.0: From Grasshopper prototyping to software development

Most craftsmen rely on specialized tools for their exact tasks. At LINK Arkitektur we have embraced the fact that designers work in different digital tools, in different locations and roles. Our Norwegian colleagues produce their detailed design in Archicad, whereas in Denmark it happens in Revit. Our landscape architects use AutoCAD with a sprinkle of SketchUp. Most of our early phase planning, volume studies and sketching are conducted in Rhino. Because of Rhino’s robustness, fair license costs and well-documented API for extending its functionality, we decided to develop a framework that would give architects superpowers in Rhino3d while maintaining a good interconnection to all of the other tools.

Over the years we have used various simulation tools, ranging from energy simulations in EnergyPlus and daylight simulations in Radiance to climatic studies in Ladybug, and more. Creating automated calculations from various disciplines inside the visual programming tool Grasshopper allowed us to set up prototypes and apply our specialist knowledge to projects. While this has worked well for our specialists, we wanted to democratize these tools in a holistic framework to make them easier to use for the designers at LINK Arkitektur.

Rewriting everything from scratch in C# within a framework allowed us to make things more fluid, faster, more user-friendly, and maintainable in the long term. We call the framework LINK_EP, short for Early Phase. It introduces a few simple data classes for a Building, Room, Windows, Balconies and a few other objects widely used in BIM modelling, while retaining the agility of “just sketching”. The framework was implemented as plugins for Rhino and Grasshopper, which allow us to run general analyses for energy and performance as well as more bespoke analyses for different national requirements in Norway, Sweden, and Denmark. You can hear more about LINK_EP at the McNeel webinar, using link number 1 in the footnotes.
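To give a feel for what “a few simple data classes” can look like, here is a minimal sketch. It is written in Python for brevity, whereas LINK_EP itself is written in C#, and none of the class fields or property names below come from the actual framework; they are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Window:
    width_m: float
    height_m: float

    @property
    def area_m2(self) -> float:
        return self.width_m * self.height_m


@dataclass
class Balcony:
    area_m2: float


@dataclass
class Room:
    name: str
    floor_area_m2: float
    windows: List[Window] = field(default_factory=list)
    balconies: List[Balcony] = field(default_factory=list)

    @property
    def window_to_floor_ratio(self) -> float:
        """Total window area divided by floor area, a simple early-phase indicator."""
        return sum(w.area_m2 for w in self.windows) / self.floor_area_m2


@dataclass
class Building:
    name: str
    rooms: List[Room] = field(default_factory=list)

    @property
    def total_room_area_m2(self) -> float:
        return sum(room.floor_area_m2 for room in self.rooms)


# A tiny sketch model: two rooms, three windows, one balcony.
living = Room("Living room", 30.0,
              windows=[Window(2.0, 1.5), Window(1.0, 1.5)],
              balconies=[Balcony(6.0)])
bedroom = Room("Bedroom", 12.0, windows=[Window(1.2, 1.0)])
building = Building("Sketch A", rooms=[living, bedroom])
```

Keeping the objects this small is what lets a sketch stay a sketch: a room is just a name, an area and a few openings, yet analyses can already query it in a uniform way.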

The implementation, which we call Building Sketcher 2.0, presents a user interface which allows architects to access essential microclimate simulations, including Sunlight Hours, Shadows, MOA (minimum outdoor area), Pedestrian Comfort, and sustainability analysis like early phase LCA Guesstimating, Daylight with the Vertical Sky Component or the 45° / 10% rule (Scandinavian standards) and Radiation. Additionally, an Area Analytics module is integrated to enhance early phase massing and planning. This is, of course, customized to national area requirements. By writing this in a modular code base, additional modules can easily be added.
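One common way to keep such a code base modular is a registry in which every analysis registers itself under a name. The sketch below illustrates that general pattern only; it does not describe how Building Sketcher 2.0 is built, and the analysis names, the dictionary keys and the numbers are hypothetical.

```python
from typing import Callable, Dict

# Registry of analysis modules, keyed by name.
ANALYSES: Dict[str, Callable[[dict], float]] = {}


def register(name: str):
    """Decorator that adds an analysis function to the registry."""
    def decorator(func):
        ANALYSES[name] = func
        return func
    return decorator


@register("floor_area_ratio")
def floor_area_ratio(massing: dict) -> float:
    return massing["floor_area_m2"] / massing["site_area_m2"]


@register("outdoor_area_per_dwelling")
def outdoor_area_per_dwelling(massing: dict) -> float:
    return massing["outdoor_area_m2"] / massing["dwellings"]


def run_all(massing: dict) -> Dict[str, float]:
    """Run every registered analysis on one massing study."""
    return {name: func(massing) for name, func in ANALYSES.items()}


massing = {
    "floor_area_m2": 6000.0,
    "site_area_m2": 4000.0,
    "outdoor_area_m2": 1200.0,
    "dwellings": 60,
}
results = run_all(massing)
```

Adding a new module then means writing one more decorated function; `run_all` and the user interface that calls it stay unchanged.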

We had a few projects that needed bespoke tools, such as a view analysis and a safety analysis. After completing and evaluating these projects, the code was generalized and integrated into the LINK_EP framework. Currently, our development is leading us towards interoperability through industry standards and formats such as IFC, Speckle and JSON, and even automated PDF reports. This will be developed in parallel with demands on the projects. With a good foundation, it only requires the extra 20% to generalize a workflow so that it is useful for others.