Building Information Modelling (BIM) technology for Architecture, Engineering and Construction

Bentley Systems acquires Cesium to deliver open digital twins in context

Darwinism in AEC

Adapt and survive with expert systems

The challenges of implementing GenAI: AI is hard (to do well)

BIM 2.0 tool evolving at pace

editorial

MANAGING EDITOR

GREG CORKE greg@x3dmedia.com

CONSULTING EDITOR

MARTYN DAY martyn@x3dmedia.com

CONSULTING EDITOR

STEPHEN HOLMES stephen@x3dmedia.com

advertising

GROUP MEDIA DIRECTOR

TONY BAKSH tony@x3dmedia.com

ADVERTISING MANAGER

STEVE KING steve@x3dmedia.com

U.S. SALES & MARKETING DIRECTOR

DENISE GREAVES denise@x3dmedia.com

subscriptions

MANAGER

ALAN CLEVELAND alan@x3dmedia.com

accounts

CHARLOTTE TAIBI charlotte@x3dmedia.com

FINANCIAL CONTROLLER

SAMANTHA TODESCATO-RUTLAND sam@chalfen.com

AEC Magazine is available FREE to qualifying individuals. To ensure you receive your regular copy please register online at www.aecmag.com

about

AEC Magazine is published bi-monthly by X3DMedia Ltd 19 Leyden Street London, E1 7LE UK

T. +44 (0)20 3355 7310

F. +44 (0)20 3355 7319

© 2024 X3DMedia Ltd

Trimble launches Reality Capture platform, Laiout enhances automated floor planning tool, Resolve brings 2D construction data into VR, plus lots more

Howie unveils Copilot for architects, Zenerate launches design automation tool, Finch enhances AI design software, CrXaI links image generator to BIM, plus more

How does the developer of Archicad plan to put AI to work on behalf of its customers?

We explore the surprise deal that promises to bring the worlds of digital twins and geospatial closer together

Generative AI (GenAI) is extremely promising, but achieving tangible results is more complex than the hype suggests

To adapt and survive, the AEC industry should be focusing on knowledge-based expert design systems

In the shift from BIM to BIM 2.0, big changes are underway at Graphisoft

The cloud-based BIM 2.0 software fleshes out its features in pursuit of victory over the current desktop BIM tools

CEO Biplab Sarkar talks new features, moving from file to cloud databases, autodrawings, AR, openness in BIM and AI

Pricing, and business models

The rapid evolution in the way AEC software companies charge for licences and shepherd their users to boost revenue

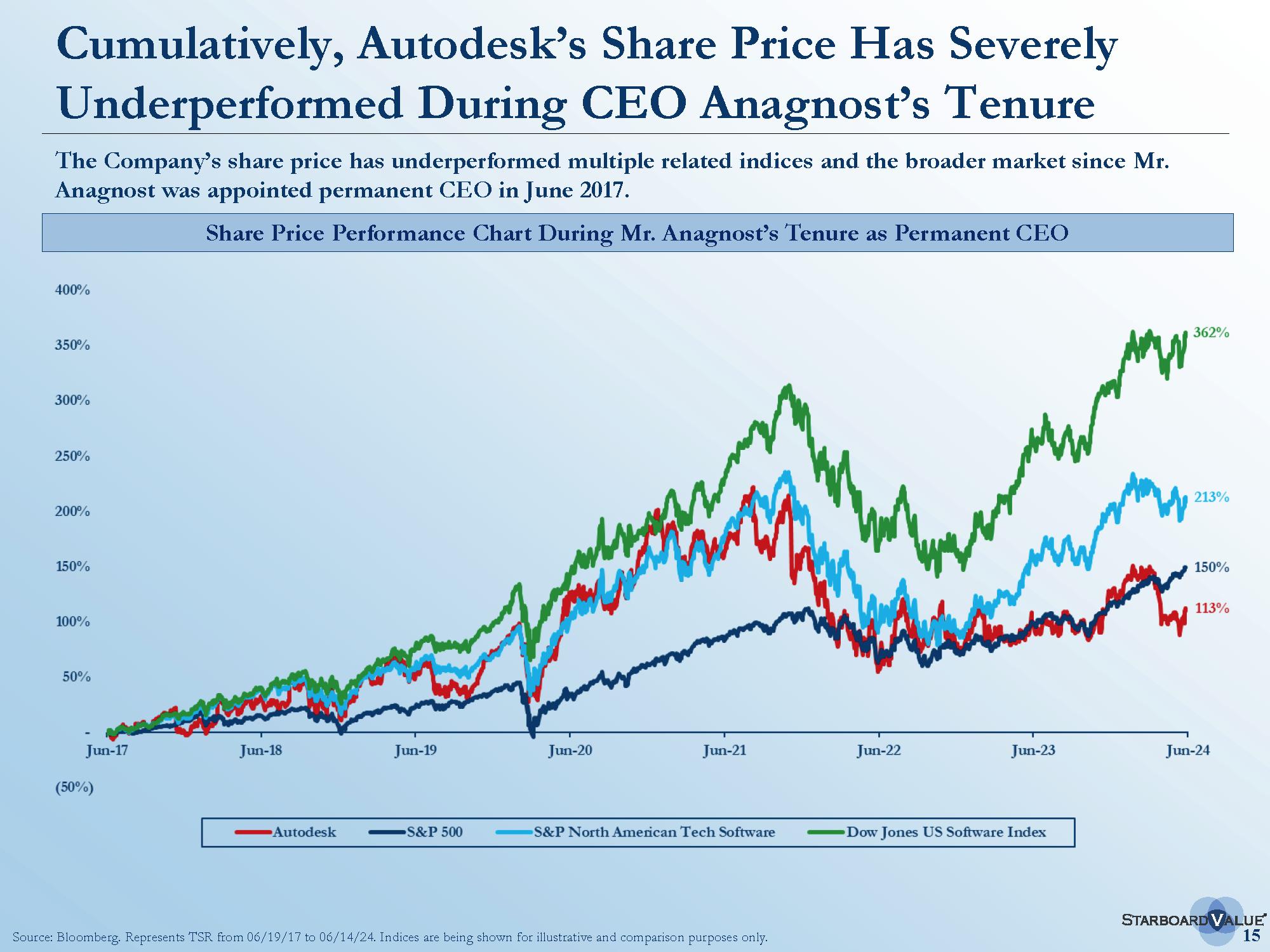

With Autodesk dealing with an activist investor problem what could be the knock-on effect for customers?

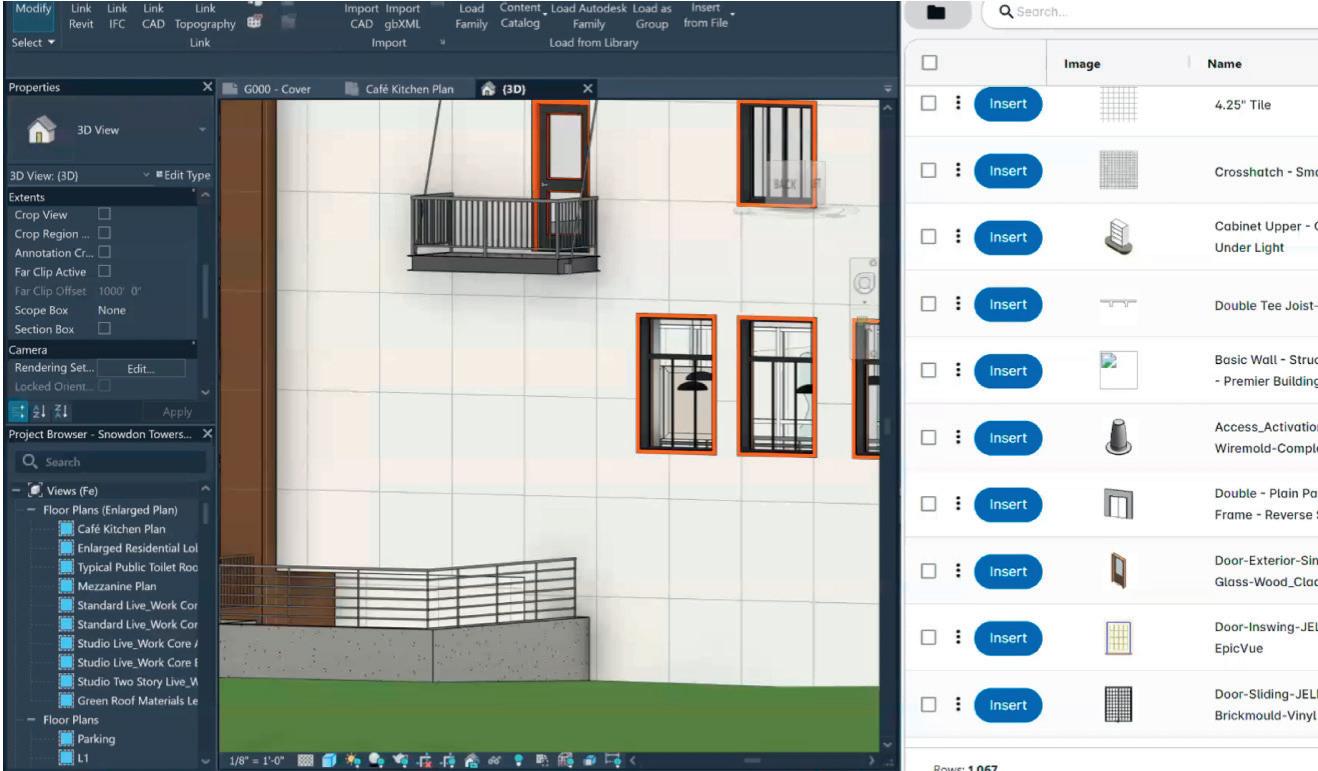

Autodesk has integrated Unifi’s solution for managing and accessing design content into its cloud stack

How to choose a remoting protocol

Advice for delivering high-performance remote workstation deployments

Bentley Systems has acquired Cesium, the developer of an open platform for creating 3D geospatial applications.

Cesium develops 3D Tiles, an open standard for streaming and rendering large-scale 3D geospatial data, and Cesium ion, a cloud-based platform for hosting, processing, and streaming 3D geospatial data.

The acquisition will help extend the reach of Bentley’s iTwin Platform, which is used by engineering and construction firms and owner-operators to design, build, and operate infrastructure.

According to Bentley, the combination of Cesium plus iTwin enables developers to seamlessly align 3D geospatial data with engineering, subsurface, IoT, reality, and enterprise data to create digital twins that scale from vast infrastructure networks to the millimetre-accurate details of individual assets.

A few days prior to the acquisition, Cesium launched its AECO Tech Preview Program, with the aim of improving workflow and capabilities that place architecture, engineering, construction, and operations (AECO) content in a 3D geospatial context. The company made two new technologies – Design Tiler and Revit Add-In – available for early access, to transform IFC and Revit files into 3D Tiles.

Meanwhile, turn to page 16 for more about the Cesium acquisition.

■ www.bentley.com ■ www.cesium.com

Intel has ‘refreshed’ its Sapphire Rapids workstation processors with the launch of the Intel Xeon W-2500 and Intel Xeon W-3500 Series.

While these new chips share much of the same architecture as the 2023 models—the Intel Xeon W-2400 and W-3400—they offer a few key enhancements. These include more cores at each price point, a slight increase in cache, and a modest boost in base frequency, though turbo frequencies remain unchanged.

At the top end, the new Intel Xeon w7-2595X boasts 26 cores, two more than its predecessor, the w7-2495X. Similarly, the Intel Xeon w9-3595X features 60 cores, a four-core increase over the w9-3495X from 2023.

With these additional cores comes an increase in base power. The new processors are rated between 20 W and 35 W higher than their predecessors, with the Intel Xeon w9-3595X reaching 385 W base with a max turbo power of 462 W.

However, according to Intel, despite this rise, its OEM partners – Dell, HP, Lenovo and others – have not needed to make any changes to the thermal management of their workstations, and the new chips will be ‘drop in compatible’ with existing Sapphire Rapids workstations.

■ www.intel.com

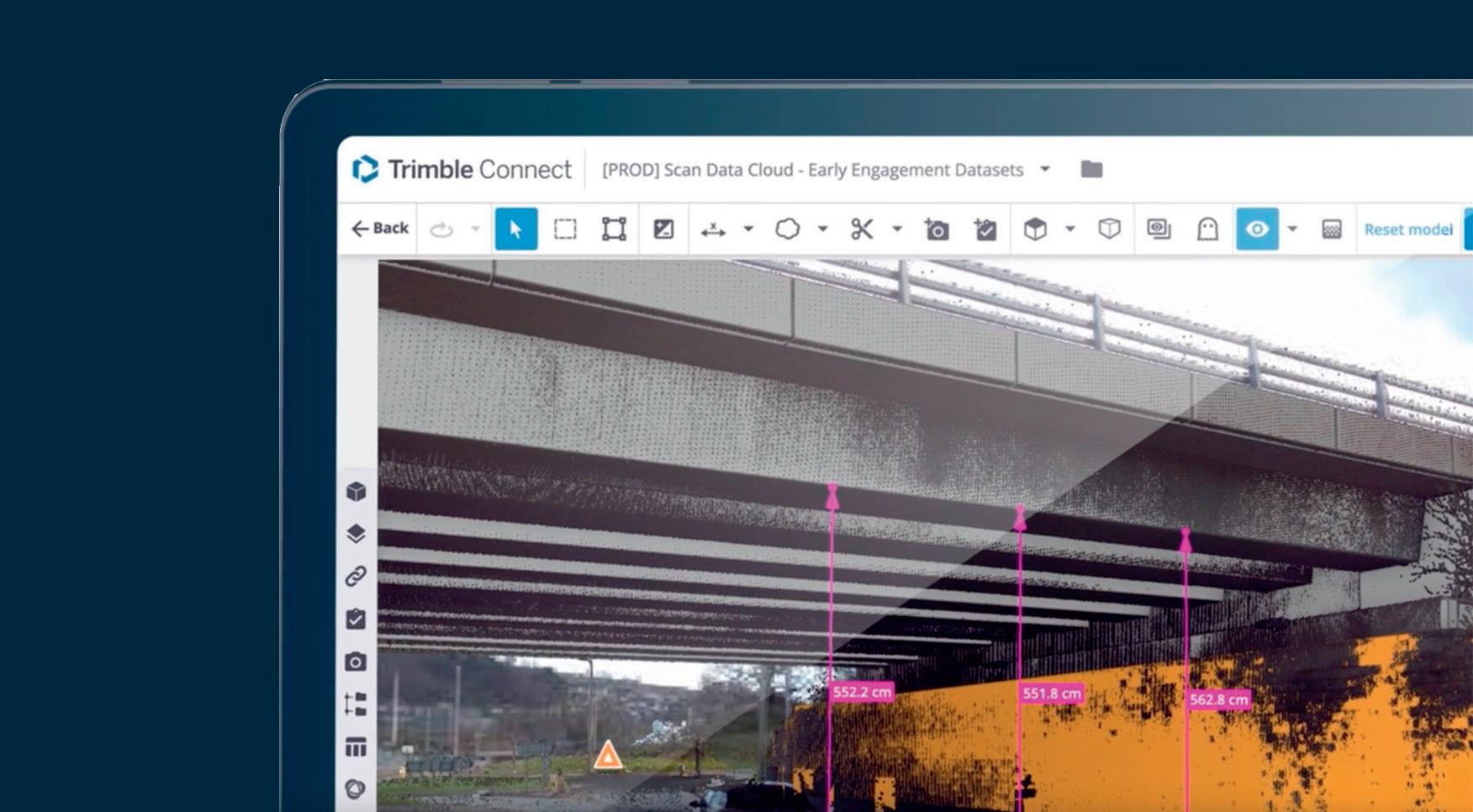

Trimble has launched a new Reality Capture platform service designed to enable more effective collaboration and secure sharing of massive reality capture datasets captured with 3D laser scanning, mobile mapping and uncrewed aerial vehicle (UAV) systems.

One of the key aims is to make reality capture data accessible to more project stakeholders to support better informed decisions.

The Trimble Reality Capture platform service is available as an extension to Trimble Connect, the cloud-based common data environment (CDE) and collaboration platform.

The service handles point clouds and 360-degree imagery and works with terrestrial laser scanners like the Trimble MX series and Trimble X9, as well as data from third-party hardware.

The Trimble Reality Capture platform service is integrated with Microsoft Azure Data Lake Storage and Azure Synapse Analytics with a view to reducing the time it takes to ingest, store and process massive datasets.

“The new Trimble Reality Capture platform service enables our workforce to more easily access data and collaborate between the jobsite and office, creating additional efficiencies across our operations. Having a single place for designers, engineers and other stakeholders to review and inspect project data is a real leap forward,” said Christopher Pynn, digital leader at Laing O’Rourke for Eastern Freeway – Burke to Tram Alliance.

“This new service applies cloud technology in a new way for large data packages, allowing users to significantly scale performance and maximise data value,” said Boris Skopljak, vice president, geospatial at Trimble.

■ www.geospatial.trimble.com

Laiout has added several new features to its ‘fully automated’ floor planning solution, which uses proprietary algorithms and generative design to produce hundreds of different and regulation-compliant floor plan designs for office spaces.

The latest release includes more than 100 high-detail furniture blocks and typologies, AI-powered rendering and integrated engineering tools.

For AI-powered rendering, select customers will be given access to the new feature, which generates text-to-render, high-quality images of generated floor plans ‘in seconds’.

For integrated engineering, new tools include image overlay capabilities that allow users to check ceiling designs and engineering elements seamlessly while generating new test fits. According to the company, users have full control over every aspect of their design.

■ www.laiout.co

Sterling, a specialist in cost and carbon estimating solutions for the engineering and construction industry, has formed a strategic integration with Building Transparency’s Embodied Carbon in Construction Calculator (EC3).

The integration enables Sterling DCS users who have access to EC3 to gather and assign A1-A5 carbon data to their construction estimate resources, while Sterling’s platform manages the entire carbon life cycle, including B1-B6 and C1-C4 (see below for definitions).

According to the company, this integration allows estimators and construction companies to accurately estimate both costs and carbon emissions across the full project life cycle.

Meanwhile, Sterling has developed an integration with Autodesk Construction Cloud, which allows project managers to import 3D models and associated 2D drawings from Autodesk Build, Autodesk Docs, or BIM 360 for quantity take-off during the cost planning and estimation process.

■ www.sterling-dcs.com

B360 Secure is a new web-based application designed to help construction project managers manage complex permission structures within Autodesk Construction Cloud (ACC) and Autodesk BIM 360.

A centralised dashboard consolidates project-specific member and folder permission data for visibility and control.

■ https://b360secure.dgtra.app

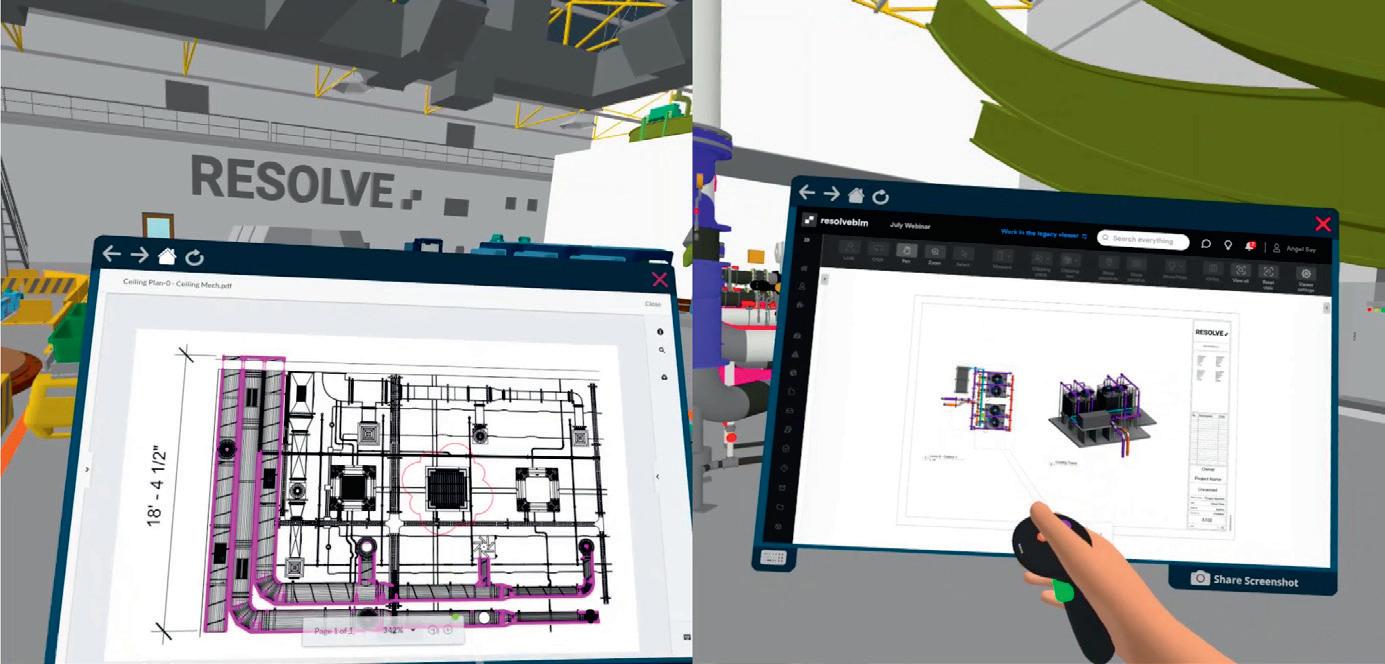

Resolve has added an ‘App Portal’ to its collaborative immersive design / review software that allows users to view 2D construction project data from inside VR.

With the new feature users can ‘seamlessly’ access and interact with 2D drawings, dashboards, and issue databases without having to remove their Meta Quest VR headset.

According to Resolve, this empowers construction teams to identify and resolve potential issues more effectively by providing immediate access to critical information from 2D data sources. For enhanced collaboration, users can share and pin 2D screenshots within the virtual model for team members to reference.

The App Portal is launching with four key integrations: Autodesk Construction Cloud (ACC), Autodesk BIM 360, Procore, and Newforma Konekt.

“At Resolve, we are committed to providing innovative solutions that improve the construction process and we also believe 2D data still holds an important place in the industry. This new feature allows our users to fully leverage all their project data, leading to better decision-making and increased project efficiency,” said Angel Say, CEO Resolve.

“The enhanced integration of Procore into Resolve’s application empowers project teams to leverage Procore data like never before,” said Dave McCool, director of product, Procore.

“This integration supports project coordination and stakeholder engagement with critical project data in a whole new way, within Resolve’s virtual experience.”

■ www.resolveBIM.com

Lumion 2024.2, the latest release of BIM-focused architectural visualisation software, includes 103 new animated characters, more control over ray traced shadows, and clearer material thumbnail previews. The new collection of animated characters features lifelike movements: walking, presenting, making phone calls, taking photos, playing sports, and more. Models are suited to a variety of settings, including hospitality, healthcare, childcare, and business.

■ www.lumion.com

WZMH Architects has launched Giraffe, an independent software company aimed at enhancing efficiency, sustainability, and collaboration in building design and construction.

This launch builds on the foundation laid by sparkbird, the firm’s R&D lab established in 2017 to drive innovation in IoT (Internet of Things), design efficiency, modularity, and sustainability.

Giraffe takes this one step further by blending practical architectural and construction expertise with advanced AI and digital twin technology. It tackles critical challenges in the AEC industry, including fragmented design processes, inconsistent standards and documentation, workforce shortages, and the need for greater automation.

■ www.giraffe.software

Metaroom by Amrax, which allows users to scan building interiors with an Apple iPhone Pro or iPad Pro, can now generate 2D floor plans ‘in minutes’.

Plans can be exported in 2D DXF or PDF format, in addition to its existing 3D export functionality, which includes 3D DXF, IFC, USD (beta) and others.

Metaroom features an ‘automated reconstruction pipeline’ where LiDAR scans from the iPhone Pro or iPad Pro are uploaded to the cloud, and ‘true-to-scale’ 3D models are generated within seconds. Users can then use the web application, Metaroom, to enrich these 3D models with more information.

■ www.amrax.ai

Glider, a specialist in asset lifecycle information management, has acquired EDocuments, a provider of digital operation and maintenance (O&M) manuals for the construction industry. Glider plans to integrate the EDocuments platform into its gliderbim platform

■ www.glidertech.com

bimstore has launched Sinc, a new Product Information Management (PIM) platform developed specifically for manufacturers to handle product data on its journey through the organisation. It covers three main pillars: Centralise, Optimise, and Comply

■ www.sinc.io

D5 Render has released a Live Sync plug-in for Vectorworks, so when changes are made to the CAD/BIM model they are immediately reflected in D5 Render’s visualisation environment. D5 Render also integrates with Revit, SketchUp, Rhino, Archicad and other tools

■ www.d5render.com

Preoptima Concept, a tool for whole-life carbon assessments (WLCAs) and carbon optioneering for early stage design, now features RICS-compliant reporting and new retrofit capabilities so existing buildings can be assessed alongside new ones

■ www.preoptima.com

Leica Geosystems, part of Hexagon, has launched Leica iCON trades, a new digital layout solution which features 6-degrees-of-freedom (6DoF) technology, previously exclusive to industrial measuring, along with an AI-enabled workflow

■ www.leica-geosystems.com

Trimble has launched Estimation MEP, a new estimating solution designed to streamline the takeoff and estimating process for smaller M&E consultants in the UK. The integrated solution combines graphical takeoff, material pricing and labour and supplier pricing

■ https://mep.trimble.com

NavVis has launched the NavVis MLX, a dynamic handheld laser scanner designed for users of all skill levels, for confined or smaller spaces or shorter, more frequent scanning on site.

The NavVis MLX measures 60 × 19 × 15 cm, weighs 3.6 kg, and is equipped with an ‘easy grip’ handle and a supportive harness for enhanced comfort during use. It is built to work hand-in-hand with the NavVis VLX wearable dynamic scanning system in the field and NavVis IVION point cloud processing software in the office.

The device captures 3D data with a 32-layer lidar sensor in combination with SLAM software augmented by Visual Odometry (VO) to deliver what NavVis describes as exceptional point cloud quality for a handheld device.

Four cameras positioned on top of the system take high-resolution 270º images when resting on the harness and 360º images when lifted above the head.

The device can capture 640,000 points per second with an operational range of up to 50m. According to NavVis, the accuracy of the point cloud is 5mm in a dedicated test environment of 500m².

To help ensure complete coverage in real time, users can monitor scanning progress on the built-in 5.5-inch 1,920 × 1,080 resolution touchscreen.

■ www.navvis.com/mlx

Chaos has introduced Enscape Impact (beta), a real-time energy modelling add-on for Enscape 4.1, the latest release of its BIM-focused visualisation software. Developed in partnership with IES, Enscape Impact is designed to make building performance analysis more accessible for architects and designers, by simplifying the analysis process.

With Enscape Impact, architects and designers can quickly view the energy performance of their building and optimise it within their usual design workflow. The software is integrated with Archicad, Revit, Vectorworks, SketchUp and Rhino, but only on Microsoft Windows, not on Mac.

Enscape Impact is not intended to provide building performance certification directly. Instead, it aims to offer real-time feedback that brings architects ‘close to certification-level accuracy’. According to Chaos, what used to take several hours or even days can now be done in minutes.

The software allows users to calculate and benchmark key performance metrics, such as peak loads and total carbon emission. The data is presented within Enscape’s real-time visualisation environment, making it easier to comprehend and communicate the impact of design decisions.

There’s also a dials panel with ‘easy-to-read’ charts and diagrams that display how geometry adjustments impact building performance.

According to Chaos, the unified energy analysis and visualisation workflow is not only important for architects, but also for engineers, significantly cutting down the cost and time required to bring a poorly performing project back into meeting sustainability goals.

■ www.enscape3d.com/impact

Civils.ai has launched a new online training course, the ‘Construction AI Specialist’, which explains the fundamentals of how AI applications work and how users can build their own Construction AI tools from scratch, with no-code ‘AI Agent’ tools and with Python scripts

■ www.civils.ai

Highwire has added new AI-powered safety risk analytics features into its platform for capital project construction and operations. The new capabilities are said to provide ‘deep insights’ into contractor risk through advanced AI analysis of safety documentation

■ www.highwire.com

Loci is developing a machine learning API that automatically tags 3D assets, making it easier to search, manage, and use 3D content. The software is applicable to many sectors but in AEC it can be used to automatically categorise 3D assets with BIM IFC standards

■ www.loci.ai

Simerse is developing an AI platform to view and manage infrastructure.

The platform uses AI to process 360° images captured by a low-cost vehicle-mounted camera and maps asset inventory for field assets. For collaboration, imagery, asset maps, and data records can be shared

■ www.simerse.com

Giraffe, the independent software company spun out of WZMH Architects, is developing two AI-powered tools: AiM (Ai Massing), for the rapid generation of massing models; and PLAiNNED for generating building code-compliant layouts for complex building components

■ www.giraffe.software

For the latest news, features, interviews and opinions relating to Artificial Intelligence (AI) in AEC, check out our new AI hub

■ www.aecmag.com/ai

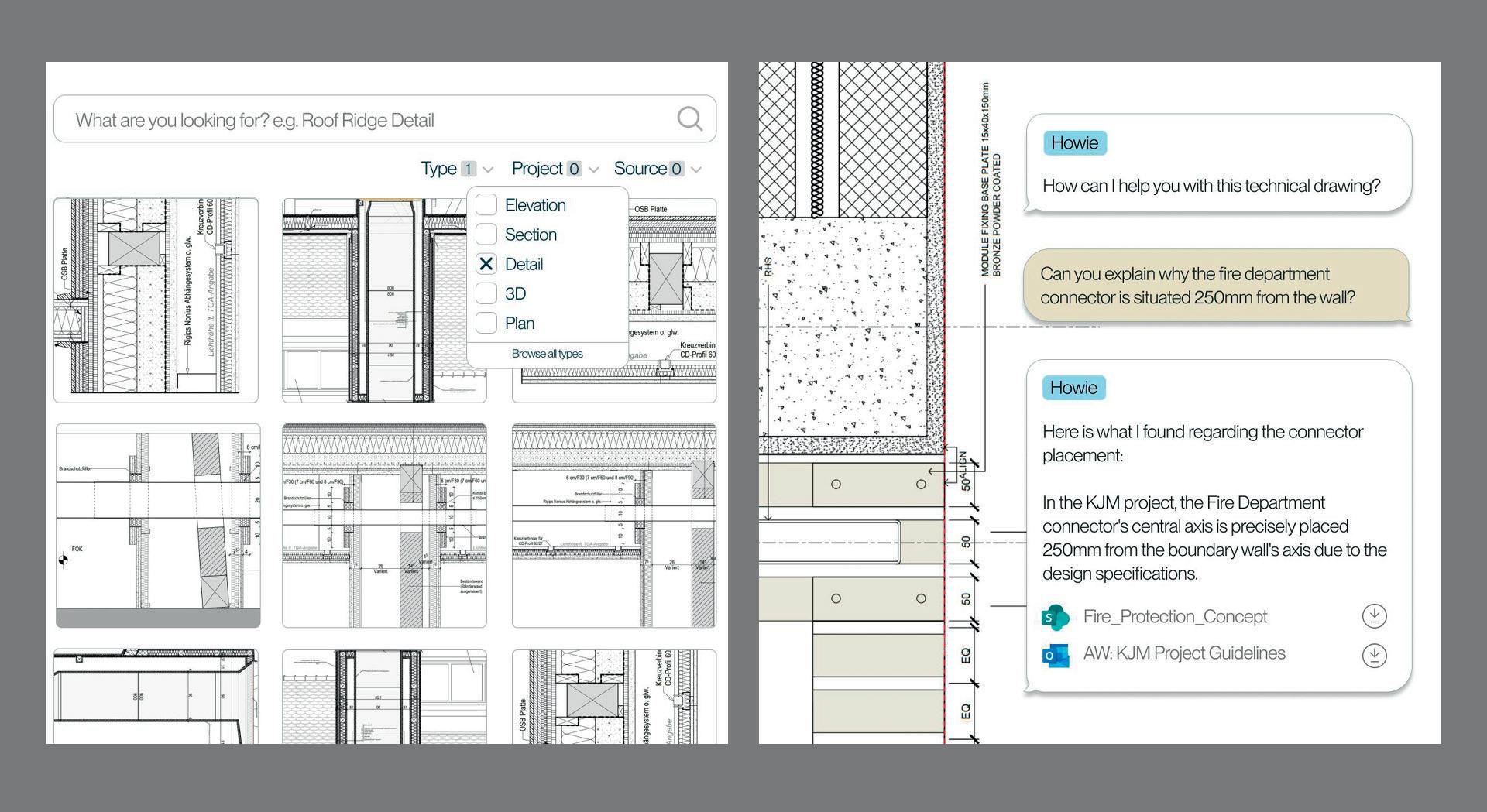

Howie, a platform billed as an ‘AI copilot for architects’, has launched in beta.

The AI platform uses Large Language Models (LLMs) to make sense of and recognise patterns within large sets of unstructured data, often buried in reports, emails, images, and drawings.

Howie analyses extensive archives of each individual customer’s past and present projects – to create an ‘easily searchable Knowledge Hub.’

Each AEC firm decides the keywords, and Howie then finds, auto-categorises, and maintains the latest versions.

“Knowledge management is an evergreen pain point—whether it’s an old school search for a document, dealing with outdated information, trying to read and decode complex construction drawings or losing key expertise when people leave,” says Ewa Lenart, co-founder and CEO, Howie.

“We’ve developed an AI Copilot that seamlessly integrates with all your data. It knows, everything your company knows. And never retires. Fully secure.”

■ www.howie.systems

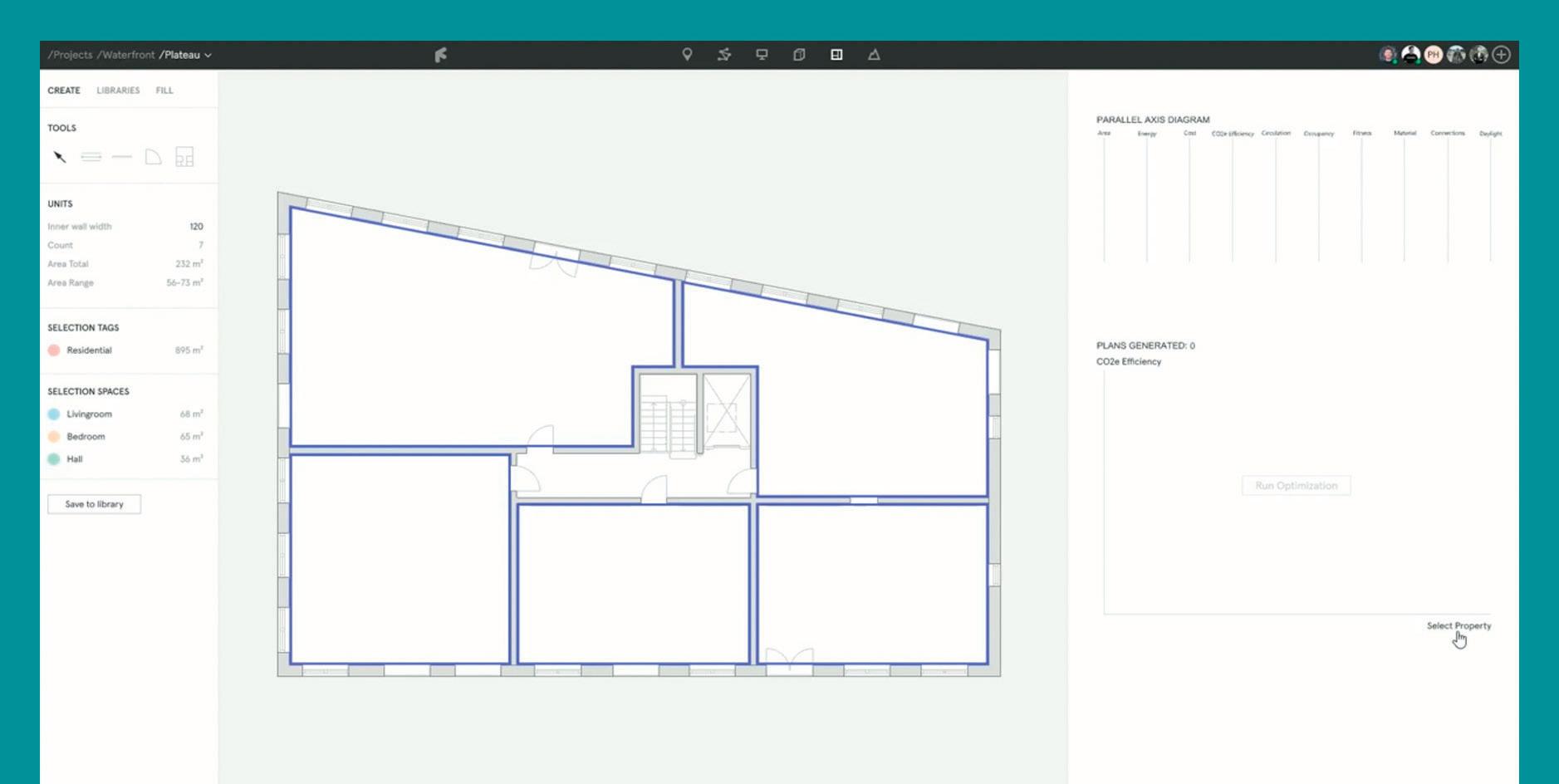

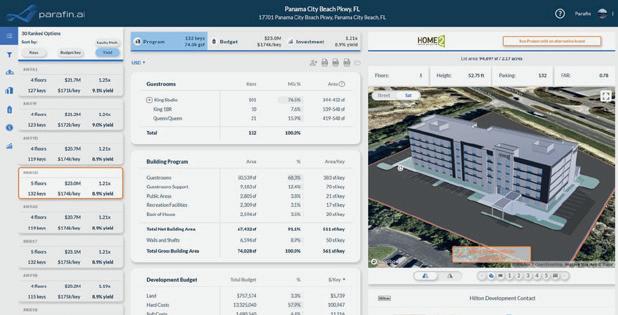

Zenerate has launched Zenerate App, an AI-powered design automation software designed to quickly generate and identify optimal design schemes, and eliminate the need to redraw floor plans to fit a unit mix.

With a few inputs, architects, developers and brokers can use the software’s AI engine to generate various building and site plan options in real time that meet specific project objectives.

Users can optimise designs by maximising floor area ratio or density, or specify a unit mix, as well as quickly testing diverse massing, building layout and parking options (podium, structure, surface).

Designs can then be edited, by dragging and dropping residential units, stairs, elevators, retail/office space, drive aisles, parking stalls, etc.

The software can also be used to assess financials (net operating income (NOI), construction cost, project cost, yield on cost or residual value). Finally, it can generate PDF reports, export data in Excel, or download floor plans in CAD or Revit format to further develop the design.

■ www.zenerate.ai

Finch, the architectural design tool, which is designed to generate the optimal building plan based on parameters and constraints set by the architect, has introduced a new AI-powered floor plate generator, ‘Generate Building Plan 2.0’.

Generate Building Plan 2.0 provides more control over the algorithm, as Jesper Wallgren, Finch CEO explains, “Instead of specifying an exact number of stairwells or units per stairwell, you can now explore a range — say, three to five stairwells. The algorithm then finds the optimal solution within that range, giving you more flexibility and a broader search space.

“This approach also extends to unit distribution per stairwell. You can now define a range of units per stairwell that aligns with your design intentions, such as avoiding corridor buildings where they’re not suitable. This level of control ensures that the algorithm’s output is closely aligned with your vision from the outset.”

Finch has also focused on improving transparency, to help architects understand how the algorithm arrives at a solution, keeping them informed at every step of the process. “If the unit mix you’ve chosen doesn’t fit the building’s storey, the algorithm will now inform you why,” explains Wallgren. “Perhaps the units are too large, or there are too many stairwells. This real-time feedback allows you to adjust your inputs and understand the trade-offs involved, rather than just rolling the dice and hoping for the best.”

Generate Building Plan 2.0 is also more accurate than its predecessor, and changes have been made so the algorithm’s suggestions are not just theoretically possible but practically viable, taking into account project criteria and compliance.

■ www.finch3d.com

CyberRealityX has launched a Windows desktop version of CrXaI, its AI text-to-image generator designed to produce high quality, realistic architectural images without having to use viz software to define materials, textures or lighting.

The software is intended for early-stage design, allowing users to explore different ideas for interior or exterior scenes using nothing more than a text prompt.

With the new desktop tool, users can integrate CrXaI with any desktop CAD or BIM software, such as Revit, SketchUp or Archicad. Users simply position their 3D model in the BIM software’s viewport, then from the desktop CrXaI software they can screen grab that view directly and use it as the basis for the visualisation.

To help get users started, the software comes with a set of pre-written text prompts, which describe the desired output. These include building type, season or room type. Prompts can be edited to suit, or written from scratch.

■ www.crxai.app

Enscape 4.1, the latest release of the BIM-centric real-time visualisation tool, includes Chaos AI Enhancer, which is designed to elevate the visuals and export better-looking assets, such as people and vegetation.

Many of Enscape’s people and vegetation assets are produced in-house, keeping a strict budget of polygons that allows users to place multiple assets without experiencing a loss in performance.

Chaos AI Enhancer is designed to elevate the visual quality of these assets using an AI engine that identifies which pixels should be enhanced.

According to Chaos, these AI-optimised, realistic assets are crucial for creating vibrant scenes that help clients understand the design intent faster. Trees, flowers, people and more not only add emotions but are important to highlight perspectives and spatiality, the company stated.

Enscape 4.1 also includes new artistic visual modes that add the ability to create images simulating pencil or watercolour drawings. The new visual styles are available for screenshots, batch rendering, and video exports.

■ www.enscape3d.com

How does Graphisoft plan to put AI to work on behalf of its customers? Martyn Day speaks to product development execs from the firm to hear their views

In the past, individual brands under the Nemetschek umbrella (of which there are 13 in total, including Graphisoft, Allplan, Bluebeam, Vectorworks and Solibri) were largely left to themselves when it came to developing new solutions and exploring new technologies.

That situation has changed over the past five years, with an increasing focus on sharing technologies between brands, combining those brands and developing workflows that intersect them.

In response to growing interest in artificial intelligence (AI), Nemetschek created an AI Innovation Hub in May 2024, with the goal of driving AI initiatives across its entire portfolio and involving partners and customers in that work. The stated intention is not just to accelerate product development, but also to test and explore the deployment of AI tools such as AI Visualizer (in Archicad, Allplan and Vectorworks), 3D drawings (part of Bluebeam Cloud), and the dTwin platform. Nemetschek has presented this strategy as AI-as-a-service (AIaaS) to customers and partners.

Explaining Graphisoft’s approach to AI, CEO Daniel Csillag tells us, “There will be new services where we charge a subscription rate and not a perpetual license. Of course, inside of Graphisoft, we’re also trying to use more and more AI – for example, in customer service, such as tools that alert us to a certain user behaviour. Since this might give us an indication that the customer is unhappy, we can reach out proactively to them,” he says.

“Currently there’s a lot of brainstorming going on. I encourage the team to not talk about step-by-step improvements, but also to consider a jump forwards. I’m telling my team: be creative and sometimes disruptive.”

With those words probably ringing in their ears, I also spoke with Márton Kiss, vice president for product success, and Sylwester Pawluk, director of product management. Both executives have active roles to play in the shaping of Graphisoft’s core technologies.

The first Graphisoft AI product was AI Visualizer, a rendering tool that uses Stable Diffusion to produce design alternatives from simple 3D concepts. Graphisoft’s original hypothesis was that users were afraid of putting their IP on the cloud, so the company created a desktop, on-device version, which came with limitations.

“From actually talking to users, nobody is afraid of the cloud now, so with Archicad 28 (currently in technology preview), we have a cloud back-end for AI Visualizer,” says Kiss.

“We have also been experimenting with AI Visualizer Live, which is a camera looking at physical models, simple building blocks, rendered by an AI text prompt. We are thinking about how we productise this, as it’s a very practical way of using existing generative AI for concept iteration,” he says.

Catalogues and autodrawings

Elsewhere in this issue of AEC Magazine we discuss how the quality of downloadable manufacturing content can be a hit-or-miss affair. It’s very rare for manufacturers such as Velux or Hilti to create full libraries of BIM objects that designers can add to their models.

From conversations with Pawluk, it’s clear that Graphisoft is looking to tackle this problem. The great thing about specification books is that they have a rigid formula and, through optical character recognition, are machine readable. They might include 2D and isometric drawings, too. So Graphisoft is experimenting with applying AI to read these catalogues and conjure up GDL-based 3D BIM objects that contain all the manufacturer’s metadata (N.B. GDL objects are parametric objects used within Archicad).

As Pawluk explained: “With all generative models, what you need is a large amount of data and good-quality data. What we need first is a large number of good-quality GDL components to train the AI. In our development, the models, the speed of creation and complexity is rapidly increasing. We aren’t working with any specific vendors yet. It’s still in the exploratory stages at the moment.”

On the subject of autodrawings, we knew last year that Graphisoft was exploring this area, as well as its reverse – generating 3D BIM models from 2D drawings. Kiss confirms that building a bridge here is a very important part of the workflow.

Executives at Graphisoft also like the idea of assembly-based modelling, where pre-configured spaces are created, instead of modelling with walls, doors, windows. “If you have someone creating those assemblies for you, or generated in the right way, a BIM model, from assembly, is actually going to be a very well-structured BIM model,” says Pawluk.

“Then later in the design, when producing the drawing, it’s already got a structure to work from. AI would be brilliant then.”

From our discussion, it was clear that Graphisoft is looking at developing AI capabilities that would benefit all designers, like AI Visualizer.

However, the company also understands the need for individual customers to train AI on their own models and past projects, which are not shared to train the generic AI.

Kiss comments: “This is where you start, from building your own ‘assemblies’ – rectangles with pre-loaded details essentially – and then use them as building blocks for hospitals, schools, whatever, based on what you have created in the past.”

While Kiss and Pawluk could not go into specifics of what such an app would do, they concede that the group has already trialled collaborative working and that this work ended up being presented to the Nemetschek board.

“The whole concept around this was to make sure that we can deliver those proof of concepts and productise initiatives very quickly. I think that has been proven on this occasion,” says Pawluk.

■ www.graphisoft.com

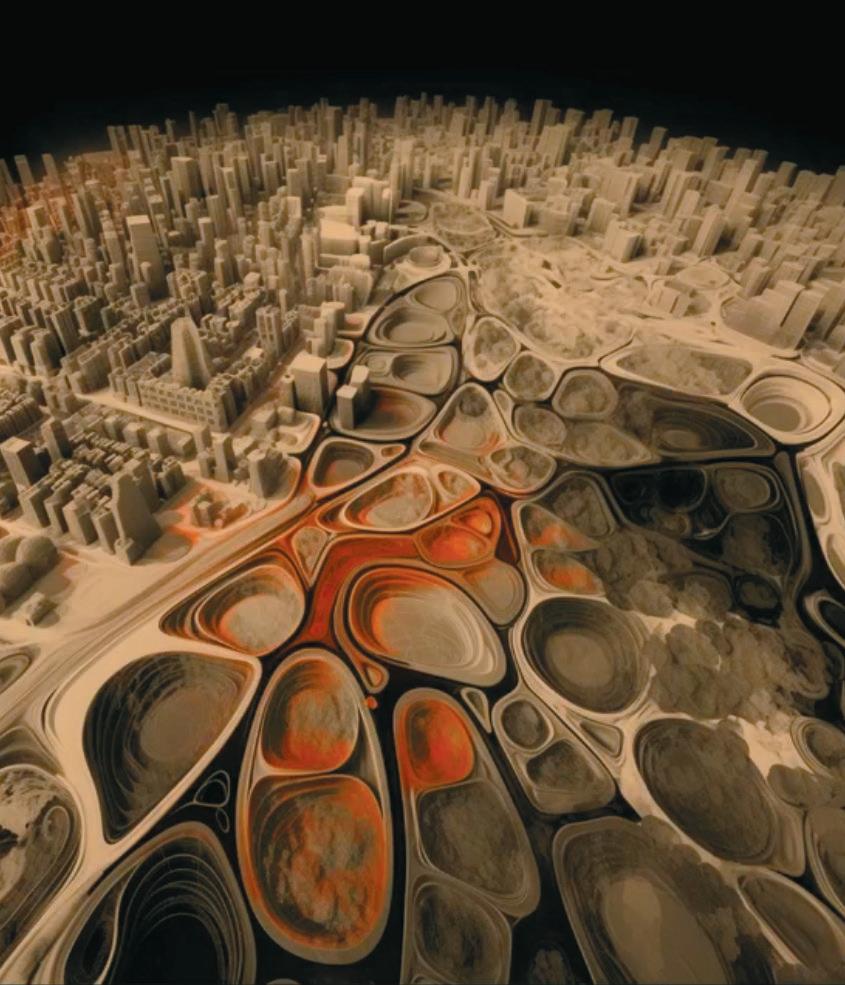

In mid-September, Bentley Systems announced it had acquired Cesium, an industry-cherished 3D geospatial platform with an ecosystem of open standards, including Cesium.JS and 3D Tile technology. Martyn Day talked with Patrick Cozzi, previously of Cesium, and Julien Moutte, Bentley Systems CTO, to learn more about the deal that promises to bring the worlds of digital twins and geospatial closer together.

It’s rare that a piece of industry news arrives in my inbox and prompts me to do a double-take. But that’s exactly what happened on 6 September, with the news that Bentley Systems had acquired Cesium. Bentley Systems is the infrastructure giant in the AECO space and is currently undergoing a changing of the guard as the brothers who founded the company take a step back and get a new executive team installed.

That can be a distracting process for any organisation. However, new Bentley CEO Nicholas Cumins has made the choice to forge ahead with an industry-significant acquisition, probably the most impactful since Google sold SketchUp to Trimble.

Because Bentley has always strongly focused on infrastructure, geospatial has played an important part in the data frameworks that its solutions provide. Decades ago, the company was the first CAD software firm to integrate a world-based coordinate system in its file format, because big infrastructure projects are always located somewhere and can span thousands of kilometres.

“We are transitioning from files to a data centric world, and customers need to have confidence and trust in how the data is going to be handled. We believe in a true open approach, which is based on open standards, open source and open APIs”

Julien Moutte, Bentley Systems CTO

Bentley has had agreements with Esri, in addition to offering its own mapping and cadastre-driven tools. However, for the last few years, it has obsessively developed and also acquired technologies relating to 4D construction, reality capture, digital twin and asset management.

Bentley Systems is not the only AEC-

focused software firm to understand the importance of geospatial. When Andrew Anagnost took the CEO job at Autodesk in 2017, he didn’t waste time in forging a partnership with ESRI and starting to back-fill the company’s BIM and civils products with GIS integration. Autodesk also actively chased Bentley Systems’ Department of Transport clients and acquired Innovyze in 2021 to expand into water treatment, another Bentleydominated market. For over five years, Esri and Autodesk have been developing hooks between their products, enabling the movement of BIM and GIS within their ecosystems.

Enter Cesium

So where does Cesium fit into all this? For many reasons, the company hasn’t previously seemed like an acquisition option. In much the same vein as Robert McNeel and Rhino, Cesium built a business based on community and established a huge network of around one million users, as well as about 10,000 developers, with powerful yet relatively low-cost GIS integration/distribution tools.

Cesium has a deep belief in the open source approach to software, putting a lot of effort into the development of Cesium.JS and its streamable, interactive 3D Tile open format, which supports vector, 4D, 2D, 3D, point clouds and photogrammetry. This is free for both commercial and non-commercial use and has been used to map subsurface, surface, airborne and space environments.

Cesium’s formats have been embraced by the likes of Google and NASA. Its data structures, meanwhile, also support other open formats, such as glTF for model info (asset information), as well as the latest advances in GPU acceleration. While the technology is a perfect fit for Bentley’s digital twin strategy, the culture fit was, on the face of it, incongruous to corporate customers.

Around the time of the 2020 Open Letter to Autodesk, I had a conversation with Keith Bentley, then Bentley Systems CTO, in which we discussed whether open source applications such as Blender BIM were the way to go. From that chat, I was left in little doubt that this was not Keith Bentley’s mindset, since he was strongly of the belief that programmers’ efforts should be rewarded.

However, he was more inclined to agree that open source data was perhaps a way forward, because for customers, the keys to data must remain in their hands. The whole premise of digital twins requires the assembling of data from all sorts of applications, in many file formats. In that respect, data trapped in proprietary silos is likely to be an industry-wide problem.

In 2021, Bentley opened the iTwin.js library with source code hosted on GitHub and distributed under the MIT licence. Since then, Bentley has been seeking wider adoption of its iTwin data wrapper.

So, while I was pondering Cesium’s culture fit, I hadn’t considered Bentley’s cultural change. Its newfound belief in open data isn’t just a passing fad. It really is something that the company intends to deliver on.

When considered from that angle, acquiring Cesium takes Bentley closer to its goal of having open format technology that covers all the many different sources of data necessary to build an infrastructure digital twin. That could be scan / photogrammetry data, BIM data, GIS data, asset tagging and management data.

Of course, Cesium offers far more besides. Its super-fast 3D pipeline enables huge datasets to be visualised locally in Unreal Engine, on the web, on a mobile and in real time. The Cesium ecosystem also includes developers who may want to utilise Bentley’s wide array of SaaS analytical and simulation tools or BIM capabilities, and existing customers will be able to view their digital twins in geospatial context for analysis and service planning.

In conversation with Cesium CEO Patrick Cozzi, he outlined for me his view of the deal. “Cesium is an open-source project doing massive scale 3D geospatial, open source, open standards, large models with semantics on the web. When Bentley found Cesium eight or nine years ago, they opened up our eyes. They said, ‘Hey, Patrick, this is more than geospatial. This can do the built environment. This can do infrastructure,’” he said.

He continued: “When we brought 3D Tiles to OGC (The Open Geospatial Consortium) to make an OGC community standard, Bentley was part of that original submission team. This is going back to 2017. Bentley had ContextCapture, now called iTwin Capture, which was some of the first photogrammetry ever put into Cesium. We have had a long relationship with Bentley. We’ve known the Bentley brothers, the leadership team of today, and we think we share a lot of the same DNA. We like Bentley’s interest and commitment and authenticity around open source, open standards, and building a platform which uplifts the ecosystem. We know that that is Bentley’s belief and it’s where Bentley wants to take the future.”

A few days prior to announcing the acquisition, Cesium published a press release regarding its BIM integration technology preview. At that time, AEC Magazine reached out to the company, but they couldn’t answer, because they were busy finalising the deal. Cozzi added, “As you saw just before the agreement, we are doing more in AEC.” I suspect that Bentley has a raft of technology to assist in that.

Cesium has about 60 employees based in Philadelphia. They are mainly programmers, with about 10 people in sales – but sales are mainly inbound and focused on products such as its Cesium Ion SaaS 3D geospatial data hubs, together with large partnerships and hosting. The Cesium offices are located not too far from the Bentley Exton Headquarters. By joining forces with Bentley, Cozzi said that it will allow the company to achieve more industry impact.

Cozzi will now head up the iTwin platform developed within Bentley. While the technology benefit has been clearly explained, it also seems that Bentley hopes to preserve and learn from the open-source culture that Cesium has embodied, with its dedication to openness, performance and solving problems for thousands of users in many different industries.

Bentley CTO Julien Moutte then gave me the Bentley perspective, “We are transitioning from files to a data centric world, and customers need to have confidence and trust in how the data is going to be handled. We believe in a true open approach, which is based on open standards, open source and open APIs,” he said.

“The iTwin technology stack is going to continue using more and more open standards. We are looking at creating and enriching the standard ecosystem with some new capabilities around BIM collaboration. We have discussed the limitations we’ve seen in IFC, for instance, and we’ve created BI-model technology, and I think one thing we captured in the room at NXT BLD is that not many know about (Bentley) iModels,” he continued.

“We need to learn how to be better in creating an ecosystem, a community. And I think that’s one of the things that Patrick and his team are going to bring to us. We want to create a thriving developer ecosystem on top of this platform, which is built around open data.”

‘‘ The huge Cesium ecosystem is consuming Cesium geospatial data. If they are bringing building models in, they don’t have the full detail of what those building models contain. It’s mostly geometry. There’s a lot of value we could deliver by providing access to the metadata of those building models

Julien Moutte, Bentley Systems CTO ’’

Moutte also explained what he thinks Cesium brings to Bentley’s tech stack. “There are multiple facets here. Obviously, I believe that our users, the engineers of the world, would benefit greatly in having more context when they do their work, and we believe that the best way to provide this to them is by leveraging the Cesium technology.

“Today, we’re trying to do this with the iTwin platform, but I think there is a better way to do this when it comes to geospatial data, bringing in Google 3D Tiles, making sure that we can combine and bring all of that data in an environment in an aligned way, using the geospatial coordinates. Whether it’s underground, buildings or engineering models, it’s about more value to our users,” he said.

He concluded: “The huge Cesium ecosystem is consuming Cesium geospatial data. If they are bringing building models in, they don’t have the full detail of what those building models contain. It’s mostly geometry. There’s a lot of value we could deliver by providing access to the metadata of those building models. We have capabilities to bring in geospatial analysis, lots of simulation, whether it’s flooding, mobility, structures and visualising all of this in the context of geospatial is also very valuable. It’s a massive opportunity.”

Cozzi added, “BIM could learn a lot from these large GIS models. 3D Tiles doesn’t just stream the geometry and metadata for terrain and photogrammetry, but can also do that for the built environment. Up to this point, a lot of that has been done in OBJ files or FBX, which are standard graphics files. We thought we could help them more, so have built some new pipelines to preserve more of that semantic data, especially the hierarchical nature.”

So, for example, Cesium built a pipeline for IFC, and also into Revit, he explains. “Then we run our super-smart algorithms on the geometry and metadata, and frankly, the metadata might actually be heavier than the geometry. Then, in a domain-specific way, we slice and dice that into 3D Tiles, so that it’s streamed very effectively over the web, placed within a geospatial context,” he said.

“So where do we go next? Bentley brings a ton of domain knowledge about infrastructure and AEC, and we keep saying ‘better together’.”

While I still have my doubts about the potential size of market for digital twins within the building sector – insofar as there are so many buildings but the cost of making a twin is high – when you scale up the geo level and start talking about managing assets like roads, rail, national grids and power stations, then digital twins and asset management start to make a lot more sense.

The acquisition of Cesium is a major coup for Bentley, which will not only help it in its historic markets, but also introduce it (literally) to a whole new digital world.

■ www.bentley.com ■ www.cesium.com

Generative AI (GenAI) is extremely promising, but achieving tangible results is more complex than the hype suggests. Keir Regan-Alexander, architect and founder of creative consultancy, Arka Works, highlights the challenges of implementing GenAI and offers practical strategies for professional practice

Have you noticed how AI is often written about in two dramatically different ways? a) it’s a silver bullet or b) it’s a scam.

On the one hand, it’s presented as a Frankensteinian invention that is changing the employment landscape for many knowledge workers. On the other, people are becoming exasperated by hyped-up claims and overpromises being pushed by “corporate grifters”.

But what if neither extreme is wholly true and GenAI proves to be … c) worth the effort and much liked by the employees who use it in the real-world?

This technology is beguiling because it presents itself as friendly and simple. Ask a question and, like popping corn, it comes noisily to life. This sweet, surface-level experience is why there is such a widespread conceit on LinkedIn that using Generative AI to solve all your business problems is easy.

Here’s the formula:

You post an eye-catching image and add the hook “I just solved [insert challenging task] in 5 minutes. RIP [insert profession]”

People see these posts and they conclude that a whole host of other ideas must therefore also be possible. This is the peak of inflated expectations.

They try to emulate the idea at work, only to quickly discover that the claim was only “sort of” true. When real-world constraints and quality standards are applied to the recipe, the method falls short in some critical way.

• Cue feeling disheartened.

• Cue labelling Generative AI as all hype and writing it off for anything useful.

• Cue pulling up the drawbridge of curiosity and deciding we don’t need to be laser-focused on AI after all.

The trough of disillusionment is reached, and a microcosmic version of the hype cycle is complete.

As we approach two years since GPT-3 went mainstream, we remain at the beginning of the very first innings for GenAI and it’s not productive to try and rush to definitive conclusions about what it all means just yet.

It’s also not productive to spread claims that AI is easy to do well; it’s not. Getting high-quality repeatable results across your org using AI is hard.

Adding yet more software processes to your stack is hard.

Remembering you can do something differently when you’ve been doing it the same way for years, is hard.

The human in the loop

The prospect of a mature AI adoption landscape across industry-wide settings, where complete workflows are delivered end-to-end, appears at present to be a long way off. Not least because very few existing organisations have their project and operational data structured and prepared for such change.

But while regular businesses are not really ready, this is what large AI companies are planning for:

“OpenAI recently unveiled a five-level classification system to track progress toward artificial general intelligence (AGI) and have suggested that their next-generation models (GPT-5 and beyond) will be Level 2, which they call “Reasoners.” This level represents AI systems capable of problem-solving tasks on par with a human of doctorate-level education. The subsequent levels include “Agents” (Level 3), which can perform multi-day tasks on behalf of users, “Innovators” (Level 4) that can generate new innovations, and finally “Organisations” (Level 5), i.e. AI systems capable of performing the work of entire businesses. As we progress through these levels, the potential applications and impacts of AI will expand dramatically.”

Stephen Hunter, Buckminster AI

The “Level 4 Innovators” moment appears to be the point at which things will start to feel very different and this projection suggests we are 4-5 major development cycles from it.

While I believe such end-to-end workflows are more probable than not in the coming years, I’m doubtful that widespread automated workflows and decision-making without human oversight at critical steps would be a desirable outcome for anyone, even if it were possible. Dramatic shifts in technology over very short time periods can be ruthlessly inhumane when driven by purely utilitarian priorities - just look back at the Industrial Revolution and what became of the Luddite movement for reference (www.tinyurl.com/luddite-AEC).

Indeed, the “human in the loop” has been essential to every successful implementation of GenAI that I’ve seen in professional practice. Any high value professional task cannot happen without sound judgement, discretion and (seldom mentioned) good taste. People also take responsibility for outcomes - as Ted Chiang points out, the human creative mind expresses “creative intention” at every moment (www.tinyurl.com/Chiang-AEC).

Until we see evidence that traditional “Knowledge Work” businesses can thrive and compete without this essential ingredient, then GenAI’s job will be to provide scaffolding that helps to prop up what we already do, rather than re-build it entirely.

In the last two years, the hype around AI has risen to unreasonable levels and every software product has been slathered in AI that no one asked for and that rarely works as desired.

But rather than trying to reinvent the wheel with wholesale departmental and budget changes caused by implementing a million new AI apps or hiring a team of software developers, my recent work with professional practice suggests that you’ll likely find it more impactful to refine just one humble spoke of the wheel at a time and to build momentum with incremental but lasting adaptations that support what you already do well.

When you focus on smaller, yet pivotal seeds of contribution from AI and put it in the many hands of your team, rather than mandating prescribed use from “on high”, you can strike a powerful balance: boosting your existing way of working while maintaining individual control, sound judgement and freedom over each productive step. Pretty soon, you will see new and varied species of work evolving across the office.

These adjustments to ‘chunks’ of work need to be made with vocal and transparent engagement about cultural alignment with this new technology.

The formation of an ethical framework for responsible use within the business - that sets guardrails for adoption - is also needed alongside new quality measures to keep standards from slipping, which is always a risk when you make something faster and easier.

You also need a programme for new skills training so that you can equip your team with the resources to go forth and explore for themselves. If Knowledge Work is going to change in the coming years, providing new skills and tools as broadly as you can seems the fair thing to do.

If you’re doing it right, the productive benefits of AI will be felt by the business but largely flow via the employees who directly use it - employees may find they achieve more in the same time, to a higher level of quality and even with a greater sense of enjoyment in their work.

This is how I find it and I know many more who increasingly feel the same. Anecdotally, I know many employees now hold personal licences to various consumer-facing GenAI tools that they choose to pay for out of their own pocket each month because they bring such high levels of utility and improve their working lives directly.

That’s one of the purest examples of “product-market fit” that you will find, and it wouldn’t be happening unless people had worked out how to really drive value from these new techniques. Imagine paying for software you use for work out of your own pocket, just to improve your working life.

In these cases, mostly they don’t tell their employers - and I know this because people discuss it openly.

It’s possible that many leaders are simply turning a blind eye because it works for them to. Or this is an outcome caused by a knee-jerk AI policy, written over a year ago, calling for the total prohibition of AI on company equipment. In these cases, policies are commonly being ignored, and this is the worst outcome of all, because you then have uncontrolled use, no privacy controls and the likely leakage of GDPR-protected, NDA-covered and commercially sensitive information out of the business.

Many hundreds of new software products have been marketed to professionals in the last couple of years - lists upon lists of “must have” new names and logos.

I have tested many and I like a number of them. But for every new and well-built tool there are many more in the ‘vapourware’ category that fail to effectively solve a real problem for practices or that duplicate something another tool has already done better.

● 1 Developing a logo design (2 hours)

Start with a hand drawing and written concept:

• Use Midjourney (MJ) with image-prompting

• Start from a B&W hand sketch as input

• Run variations to generate ~8 similar, more refined options that have potential and further refine them in MJ

• Final logo and colours refined and vectorised in Illustrator

● 2 Photoshop montage to realistic render (45 mins)

Start with project visual created with basic level of detail in Rhino with a simple render from V-Ray:

• Use image-to-image generation in Stable Diffusion (SD)

• Tweak 2no control nets using the same basic render input (1. Canny, 2. Depth)

• Create photorealistic render

• Develop parts of the image further using “In-painting”

• Upscale and refine to 4k size, using another AI tool, Krea

● 3 Rendering design options for two London feasibility studies (4 hours)

Start with site photos and basic 3D context prepared. Model the proposed building retention and massing, include basic floor levels in model:

• Align camera view of existing with 3D model view of the proposal

• Create relevant editing regions using site photo as a mask

• Upload masking regions, site photo and proposal as linework to SD

• Use image-to-image generation in SD

• Tweak 3no control nets and weights (Mask, Canny and Depth)

• Apply custom LoRA within prompt for target style exploration

• Produce multiple conceptual design options within the massing constraints provided

• Carry out final image editing in Photoshop

• Upscale and refine to 4k size, using AI tool Magnific

• Seed your new image into additional views using IP Adapter

● 4 Interior concept options for a kitchen design (15 mins)

Start with a traditional materials mood board for interior design concept:

• Input image of this mood board and a written spatial description into an LLM to produce a prompt that generates good colour and material descriptions and that will work with Flux 1 (a striking new image model from Black Forest Labs)

• Use prompt with Flux to produce spatial mood imagery for kitchen interior

• Carry out minor edits in Photoshop

• Upscale and refine as before

Moreover, the sheer scale of new gadgets and techniques can cause paralysis in businesses who get caught in perpetual “test-mode” and become unable to take any meaningful action at all. The ever-growing size of these lists doesn’t help either - it’s a never-ending task.

To keep things simple and to focus on what is really important there are in my mind two foundational technologies that require our special and ongoing attention with each new release of development.

1. Image models (Diffusion models like SDXL & Flux), these can also now process video.

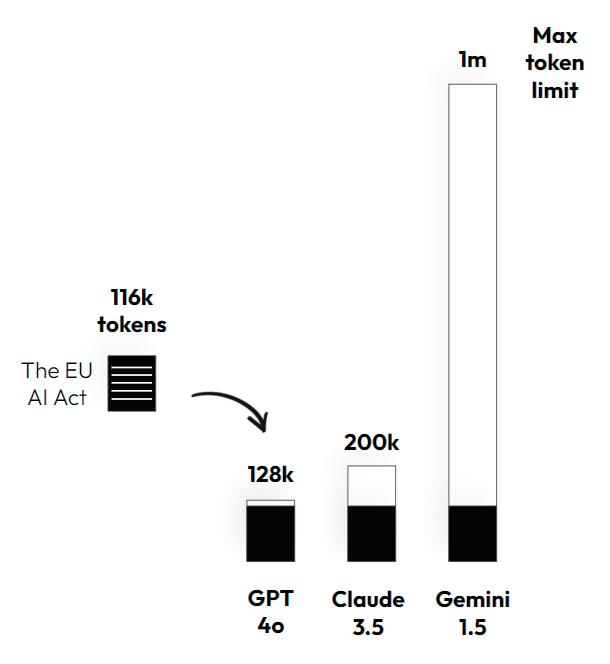

2. Text models (Large Language Models like GPT-4o & Claude 3.5), these can also now process numerical data.

These two areas alone are enormously deep and novel fields of learning. I recommend getting familiar with how to access and make use of these tools as directly as you can in their “raw” form, i.e. don’t become too reliant on easy-to-use wrappers that do many things for you but ultimately reduce your control - you will find that many of the apps’ features you’ve seen are possible by focusing on the raw ingredients and with only a couple of low cost subscriptions needed.

My background is in design, and I love to use image models for visual concepts.

I don’t find these tools can solve multistep problems in one shot - instead, I curate their use over smaller discrete chunks of controlled taskwork and weave the whole thing together using a number of well-known tools that I would already be using in traditional practice.

To give you a better sense of what I mean, in the table above you can see some practical examples from the last month at Arka Works.

While doing these chunks of work with teams, we’re exercising our own professional and creative judgments at each step.

What you’ll notice when looking at these approaches is that they are quite complex procedures requiring a high degree of control and aesthetic judgement. Also, that the whole process is being carefully curated by the designer, which is why some people prove to be particularly gifted at working with diffusion models by following their intuition and others are less so - if the results were just the case of clicking buttons in the right order, this wouldn’t be true.

AI feels different

The introduction of GenAI to these very common practical design tasks is more akin to plugging in a synthesiser or effects-pedal into a musical instrument. In general, I prefer the “instrument” rather than “tool” analogy, which just conjures feelings of crude hammers and nails. By contrast, this instrument is nuanced, unpredictable and can make its own decisions.

My initial expectation of the various AI image tools was that they would prove to be a like-for-like replacement for traditional digital rendering methods.

The reality is quite different; this is an entirely new angle with which to approach the same challenge and requires a fundamental shift in the way you think about things.

Indeed, this has been a source of debate between myself and Ismail Sileit, who says about Image Diffusion:

“While traditional rendering techniques are about faithfully replicating reality through precise algorithms, GenAI allows us to engage in a live dialogue with possibility. It’s not so much about rendering accuracy —it’s more about cultivating a relationship with the unpredictable, the emergent, and the profoundly novel.”

Ismail Sileit, architect at Foster + Partners and creator of form-finder.com (in a personal capacity)

What Ismail is getting at here is that yes — there are certainly time savings, but we shouldn’t overstate these. The main change he perceives is in the speed and breadth of the creative feedback loop itself, the new experimental avenues that we’re able to explore, and importantly the enhanced enjoyment of the whole process.

Images: what’s hard?

Despite what the “AI is easy” influencers tell you, it’s also not a simple process if you want to achieve results you can use on real projects. To exercise real control and achieve usable results in practice, you have to learn quite complex interfaces and become familiar with a new lexicon of technical terms like “denoising”, “latent space”, “seeds”, “checkpoints” and “LoRAs”, to name a few.

Getting the best out of image models also requires a strong dose of curiosity, patience, and a willingness to keep persisting in the face of abject ugliness at times.

When we’re working on this kind of thing our overall hit rate is probably less than 5%. For a recent feasibility study presentation using some very basic internal 3D renders to start things off, we produced 6no total new images across 3 design studies - but when I look back at the results, I can see it took us about 211 separate tests to get there.

While the study produced these images very rapidly, we had to put up with a great deal of mediocre and downright appalling outputs to find something that captured what we were looking for.
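As a quick check on the figures quoted above (6 usable images from about 211 tests), the hit rate can be worked out directly. This is only a back-of-envelope sketch; the function name is made up for illustration.

```python
def hit_rate(usable: int, total: int) -> float:
    """Share of generated images that were good enough to use."""
    if total <= 0:
        raise ValueError("total must be positive")
    return usable / total


# Figures quoted in the text: 6 final images from about 211 separate tests.
rate = hit_rate(6, 211)
print(f"Hit rate: {rate:.1%}")
print(f"Tests per usable image: {211 / 6:.0f}")
```

On these numbers the hit rate comes out at just under 3%, or roughly 35 attempts for every image that made it into the presentation.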

A 3-5% conversion doesn’t sound like a great hit rate, and also feels inherently wasteful. When you’re generating images, your GPU will be getting ready to take off and use a lot of electrical energy, and this is an area I’m currently looking into in more detail to better understand the actual energy impact of using GenAI at any kind of scale. Any practice looking to adopt GenAI will probably also need to establish a means of measuring their energy use intensity and, as model size increases in the coming years, this will probably become an ever greater area of difficulty.

AI image work really lights up the right side of my brain, but the AI technology that feels most important to me and that I utilise more than any other - by far - is Large Language Models (LLMs).

My use of text models is growing over time and I’m now at around 10-20 tasks a day for very varied and high-utility responses.

Early on I set GPT as my homepage and tried to use it for as much as I could. Even with this proactive attitude to adjusting my working methods, I still spent months forgetting that it was there to help with all kinds of things during the day.

This behavioural change phenomenon may actually be the greatest hurdle in the short term to any kind of meaningful change because workflow muscle memory is strong and the cost of a failed effort during daily working life is high.

The table below shows just a few examples of the type of things we can now attempt with a fair expectation of success. With the latest release of models and the next wave just around the corner, the use-cases will only grow in the coming months and years.

● 1 Reformatting an invoice schedule (3 mins)

I’ve received a fee proposal with a complex invoicing schedule in a chart format spread out over many stages and months. I need it in a vertical format suitable for issuing to a client; this can’t be done in Excel.

• Using a private LLM configuration, I remove any identifiable data and convert the received schedule into a markdown table.

• I request the table be reformatted and define the column headings I need.

• Spot check results, to confirm correct.

• I copy the table into Excel, add back redacted details, and complete.

● 2 Large report summarisation tool (15 mins)

I’ve received three very long publicly accessible government reports that I need to digest quickly; they relate to the scope of regulation of AI in the EU. I’ve scanned them quickly, but now I need to pull a one-page summary together for a policy document for a meeting.

• Convert the PDFs to plain text.

• Compose a pre-made prompt separated by context, role, instructions and documents (where I place the full-length text), separated by tags.

• Use large context window model via API (Claude 3.5) to paste the complete pre-made prompt.

• Spot check results, to confirm correct.

• Final edit before adding to my policy draft.

● 3 Competition bid cost estimate (15 mins)

A client has found a public design competition invite. They are tempted to go for the job but they want to know how much the competition stage will cost.

• Using a private LLM configuration, I export the PDF to plain text and create a prompt explaining the task and introducing the project information.

• I ask for summary of key project requirements and submission format and dates in table form suitable for a memo.

• I ask the chat to calculate the working days between dates.

• Spot check results, to confirm they are correct.

• I provide a written team plan for the bid with at-cost day rates.

• Using Code-Interpreter, I represent this key data to a new chat using a Chain of Thought style prompt and showing each step of its working.

• The Python script returns a ballpark estimate of cost. I’m happy with the working method and we decide the competition is too costly to go for.

● 4 Bid writing from past examples (10 mins)

A client needs to write a 500-word bid response for a new RfP quickly. They have answered this type of question many times before, but not in this exact way.

• Provide context and role to Claude 3.5, provide new question and 5 step instructions and good example of past answers to reference.

• LLM analyses the question structure and produces a plan for how to best answer each section (chain of thought method).

• LLM then maps and rewords previous ‘good’ answers and case study examples to the new question.

• Refined versioning by providing critical feedback. “65% version” is then taken to InDesign for final editing and completion by Project Director.
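The arithmetic behind the competition bid estimate (example 3) is simple enough to reproduce by hand as a spot check. The Python below is an illustrative sketch only, not the script generated in the session described. The function names, dates, roles and day rates are all hypothetical, and public holidays are ignored.

```python
from datetime import date, timedelta


def working_days(start: date, end: date) -> int:
    """Count Monday-to-Friday days from start to end, inclusive.

    Public holidays are ignored in this sketch.
    """
    if end < start:
        return 0
    span = (end - start).days + 1
    return sum(
        1 for offset in range(span)
        if (start + timedelta(days=offset)).weekday() < 5
    )


def bid_cost(team_plan: dict[str, tuple[float, float]]) -> float:
    """Sum days x at-cost day rate across the team.

    team_plan maps a role name to (days on the bid, day rate).
    """
    return sum(days * rate for days, rate in team_plan.values())


if __name__ == "__main__":
    # Hypothetical competition window and team plan.
    available = working_days(date(2024, 10, 7), date(2024, 10, 18))
    plan = {
        "Director": (2, 600.0),
        "Architect": (8, 350.0),
        "Visualiser": (3, 300.0),
    }
    print(f"Working days available: {available}")
    print(f"Ballpark cost at day rates: {bid_cost(plan):,.0f}")
```

Having a second, independent calculation like this is one way to carry out the spot check on the working days and the final figure.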

(Above) Precision editing: Example workflow for a warehouse building refurbishment, created in Stable Diffusion (credit: Arka Works)

(Left) Custom Styles: Arka-ArchPainter-XL, a Stable Diffusion Custom LoRA (credit: Arka Works)

‘‘ The most effective AI strategies in professional practice that I’ve seen aren’t mandated from on high, but rather emerge organically from teams who are given the freedom to experiment and trust to exercise their individual judgement about what to do with the results ’’

“Next-generation AI models are effectively embargoed until after the US election on 5th November, but expect to see significant gains in reasoning ability and intelligence when they arrive, with each of the main providers currently training / testing models more than an order of magnitude larger than the current largest & most-intelligent models.”

Stephen Hunter, Buckminster AI

Text work. What’s hard?

There is no shortage of people expounding the virtues of LLMs, but let’s be honest about the difficulties.

“Multi needle reasoning” problems. These happen when you ask the model to retrieve a number of separate facts at the same time and then to apply further layers of reasoning and logic on top. When you request too many needles and too many processes, you may get a few okay results, but it’s possible to ask too much of the model at once and this can result in disappointing or incomplete results. There are ways around this that involve breaking down the task into smaller steps and just giving the model what it needs at each step, hence why you need to learn good prompt craft.
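Breaking a task into smaller steps can be sketched in a few lines of Python. In this minimal sketch each step receives only the material it needs, plus the results of earlier steps. The `ask_model` argument is a hypothetical stand-in for whichever chat interface or API you use, and `run_in_steps` and the source names are likewise illustrative.

```python
from typing import Callable, Dict, List, Tuple


def run_in_steps(
    steps: List[Tuple[str, List[str]]],
    sources: Dict[str, str],
    ask_model: Callable[[str], str],
) -> List[str]:
    """Run one small prompt per step instead of one prompt that asks for everything.

    steps: (instruction, names of the sources that step needs)
    sources: source name -> text
    ask_model: hypothetical function that sends a prompt and returns the reply
    """
    answers: List[str] = []
    for instruction, needed in steps:
        # Only the material this step needs goes into the prompt
        material = "\n\n".join(f"[{name}]\n{sources[name]}" for name in needed)
        earlier = "\n".join(f"- {a}" for a in answers) or "- none yet"
        prompt = (
            f"Task: {instruction}\n\n"
            f"Results from earlier steps:\n{earlier}\n\n"
            f"Material for this step:\n{material}"
        )
        answers.append(ask_model(prompt))
    return answers


if __name__ == "__main__":
    # Stand-in model that just reports the size of the prompt it was given
    def echo_model(prompt: str) -> str:
        return f"(reply to a prompt of {len(prompt.split())} words)"

    demo_sources = {"brief": "Competition brief text...", "team": "Team plan text..."}
    demo_steps = [
        ("List the submission dates.", ["brief"]),
        ("Estimate the days each team member needs.", ["team"]),
    ]
    for reply in run_in_steps(demo_steps, demo_sources, echo_model):
        print(reply)
```

Each prompt stays short and carries a single job, which also makes each answer easier to spot-check.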

Accuracy. You still always have to spot-check the results and the validity of your workings. I call it a spot check because, with the latest models, if the model sets off on the right track it will usually get things right. Hallucinations remain a challenge, although the rate of occurrence has fallen dramatically since I first started using LLMs nearly two years ago, such that errors are increasingly hard to identify. This is why spot checks must remain part of your core approach for now.

Q: Who was the sole survivor of Titanic?

A: The sole survivor of the Titanic disaster was Charles Joughin. He was a British baker and the chief baker aboard the Titanic. Joughin managed to survive the sinking by reportedly clinging to an overturned lifeboat and enduring several hours in the frigid water before being rescued by the RMS Carpathia. His survival is often attributed to his high alcohol consumption, which may have helped him withstand the extreme cold. Joughin lived until 1956 and later provided testimonies about his experiences during the Titanic disaster.

Q: Who was the sole survivor of Titanic?

A: There was no sole survivor of the Titanic disaster. The RMS Titanic sank on April 15, 1912, and out of the approximately 2,224 passengers and crew on board, around 710 people survived. These survivors were rescued by the RMS Carpathia which arrived at the scene after receiving the Titanic’s distress signals. Survivors included men, women and children from various classes and backgrounds. Some notable survivors were Molly Brown, who later became known as “The Unsinkable Molly Brown” for her efforts in helping others during the disaster, and the ship’s designer Thomas Andrews, who perished despite his efforts to save others ?

Now and Then. A popular example of a hallucination from 2022. When you ask the same question today you get excellent performance and data. However, the final sentence suggests that Thomas Andrews was both a notable survivor and also perished.

Long prompting is now better than using Custom GPTs for most tasks. Now that large context-window models are available, we can work with hundreds of thousands of words of input. For most tasks, pasting your prompts in long form into a model is now a more robust approach than Custom GPTs if you need very reliable performance. This is because Custom GPTs typically run on a smaller context window (32k) and also involve retrieval-augmented generation (RAG), a process that cuts your data up into small chunks and later retrieves only those that appear relevant, rather than giving the model the documents in full. If the wrong chunk is retrieved, you will get a sub-par answer. Many practices have found use for Custom GPTs, but it’s now better to move towards a long-prompting method and make full use of these amazingly spacious context windows.
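To see why a wrong chunk leads to a sub-par answer, consider this toy Python illustration. It is not how Custom GPTs are implemented: it assumes a deliberately crude retriever that splits a document into sentences and ranks them by shared words with the question, whereas real systems usually rank chunks by embedding similarity. The document text and question are invented.

```python
def tokens(text: str) -> set:
    """Lower-case words with surrounding punctuation stripped."""
    return {word.strip(".,?!").lower() for word in text.split()}


def split_into_chunks(text: str) -> list:
    """Toy chunker: one chunk per sentence."""
    return [sentence.strip() for sentence in text.split(".") if sentence.strip()]


def retrieve(chunks: list, question: str, top_k: int = 1) -> list:
    """Return the top_k chunks sharing the most words with the question."""
    question_words = tokens(question)
    ranked = sorted(chunks, key=lambda c: len(question_words & tokens(c)), reverse=True)
    return ranked[:top_k]


# Invented competition notes and question
DOCUMENT = (
    "Boards are due at noon on 14 March. "
    "The competition entry fee is 250 pounds. "
    "Each entry must include two A1 boards."
)
QUESTION = "When is the deadline for our competition entry?"

retrieved = retrieve(split_into_chunks(DOCUMENT), QUESTION)

# Retrieval-style prompt: the model only sees the chunk that was retrieved
rag_prompt = f"{QUESTION}\n\nRelevant extract:\n{retrieved[0]}"

# Long prompt: the model sees the whole document
long_prompt = f"{QUESTION}\n\nFull document:\n{DOCUMENT}"

if __name__ == "__main__":
    print(rag_prompt)
    print("---")
    print(long_prompt)
```

Here the retriever picks the sentence about the entry fee, because it shares the most words with the question, so the retrieval-style prompt never contains the date. The long prompt does, at the cost of a larger input.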

Data preparation. Most businesses are excited about the potential of AI, but they aren’t excited about preparing all their reusable project and operational data such that they can use it again and again to produce first draft written reports of many kinds. There is a surprising amount of written work in practice that could be massively aided by GenAI if this data were ready. We really need to start thinking about building asset libraries that are ready for LLMs to process and link together in new ways to really feel the benefits.

Conclusion - team-led AI

The most effective AI strategies in professional practice that I’ve seen aren’t mandated from on high, but rather emerge organically from teams who are given the freedom to experiment and trust to exercise their individual judgement about what to do with the results.

This team-led approach to AI adoption, where workers take responsibility for how they wish to use it, allows for a more nuanced integration that respects existing workflows while uncovering new efficiencies and enthusiasm from within the team.

Consider building a “heat map” of opportunities within your organisation. Where are the places that these ideas really fit without trying too hard? Once you’ve identified these hotspots, tackle them one at a time. Small, incremental changes often lead to the most sustainable transformations.

AI is neither a panacea nor a parlour trick – it’s a curious instrument that, when wielded with skill and discernment, can help us work smarter, faster, and perhaps even with greater enjoyment.

We are still so early. So, I urge you to withhold judgement; be experimental and be curious.

■ www.arka.works

About the author

Keir is an architect operating with one foot in architectural practice and one in the development of Generative Design and AI tools and workflows. He founded the creative consultancy Arka Works in 2023, following a directorship at AJ100 practice Morris+Company, with a mission to prepare the profession for AI-driven change. He does this by helping architects, clients and startups to effectively apply the latest Generative Design and AI tools to the work they already do in practice, so that they can adapt to a rapidly changing professional landscape.


The productivity gains to be had from current AEC software options may be close to exhausted. To adapt and survive, the industry should instead be focusing on knowledge-based expert design systems, writes Richard Harpham

Today, most designs are produced in software solutions specifically built for BIM model and drawing production and intentionally designed to accommodate all building types.

While these legacy systems have replicated and replaced the manual tools that architects used for centuries, providing more accurate and better coordinated digital methods along the way, it’s still pretty much a case of the same processes as before, but now in CAD and BIM software.

In other words, all the knowledge and expertise for a specialised building’s needs and purpose still must be in the designer’s head. These building-type specialisms have been around for a long time, along with expert understanding of their legislative needs.

What’s changed is that the industry is now questioning what comes next. Many software customers are no longer confident that a faster, vanilla BIM solution will provide the productivity gains needed in a new era of expert intelligence tools based on AI and machine learning (ML).

As Charles Darwin is often purported to have said: “It is not the strongest of the species that survives, nor the most intelligent that survives. It is the one that is the most adaptable to change.”

Darwin’s theory of evolution by natural selection posits that organisms evolve to adapt to their environment to survive. Those that are better suited to their environment — whether through physical traits, behaviours, or abilities — are more likely to survive and reproduce, passing on advantageous traits to future generations.

In the context of architecture, Darwinian principles can be applied metaphorically to the process of design specialisation. Specialisation in architectural design can take many forms, including focusing on specific types of buildings, particular construction methods, or specific professional skills.

Just as species adapt to specific ecological niches, many architects will increasingly evolve to carve out their own niches in the design world, aiming to become the ‘fittest’ in their chosen fields. Of course, there will still be ‘generalist’ architects, but just as Darwin described, the divergence of some – or many – could form a new species of firm that proves to be tremendously more successful in a specialised segment.

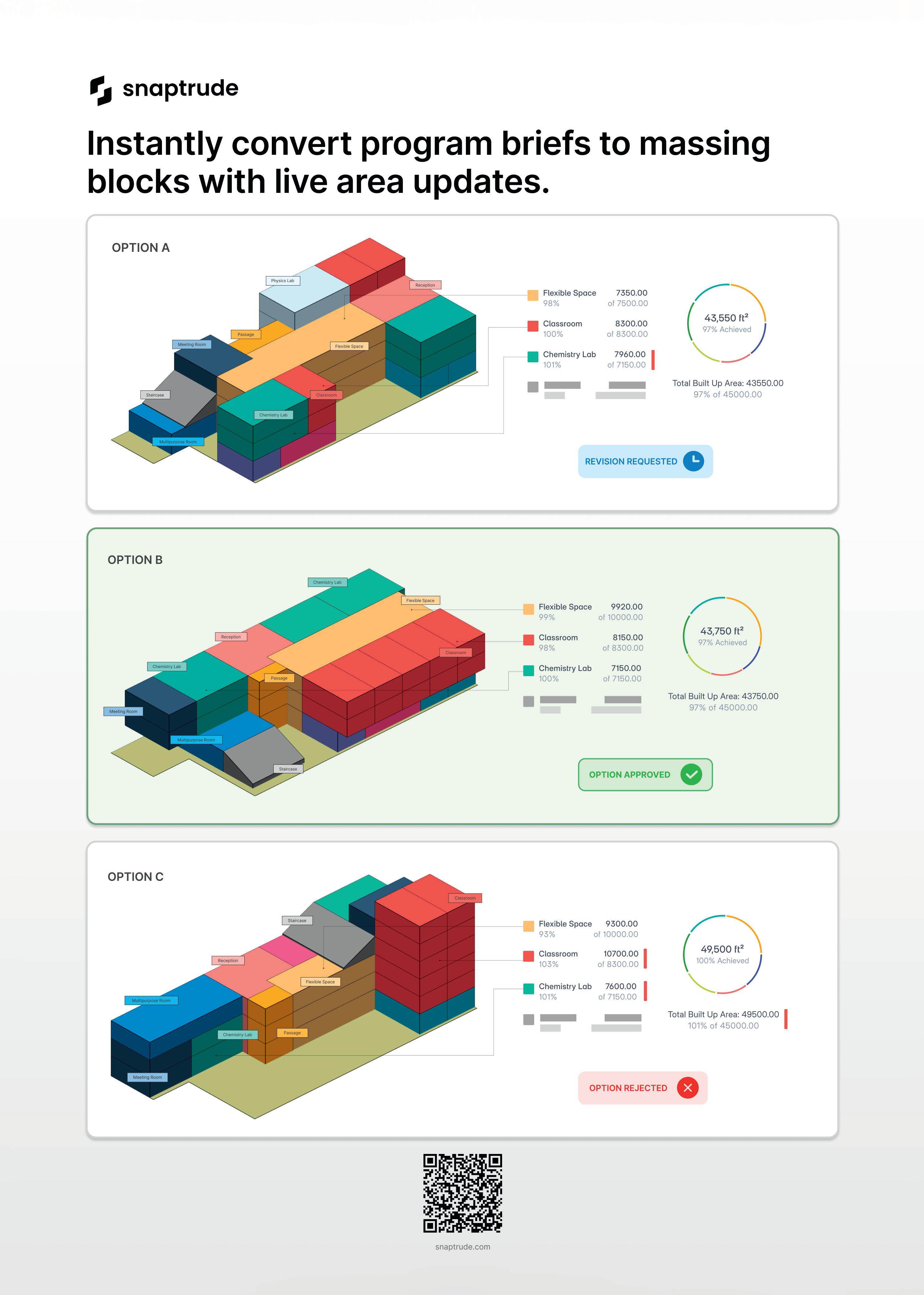

Architectural design software hasn’t been adapting as fast, until recently. Now, we’re seeing a host of software start-ups emerge that are betting their growth strategy on narrowing the capabilities of their software. The thinking here is that, by targeting a deep and narrow segment of the design market, they might become a leading solution in just one or two building types and thus carve out a profitable, differentiated business for themselves from the $13 trillion-plus construction industry.

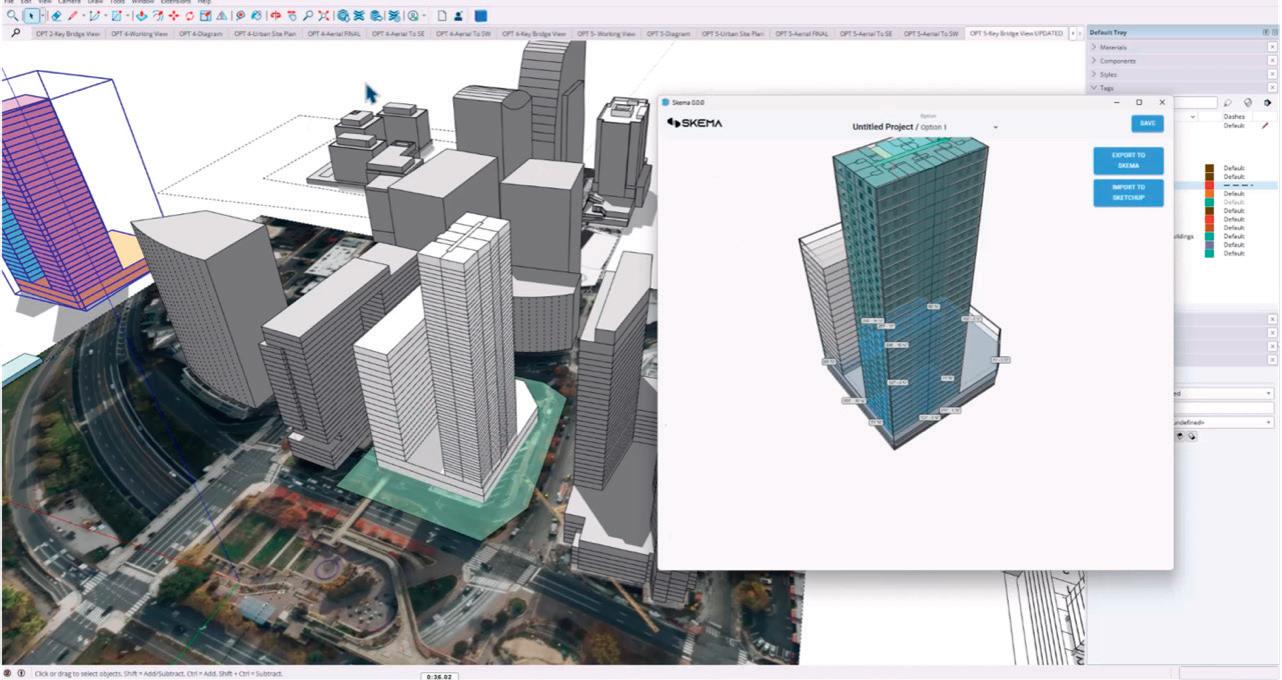

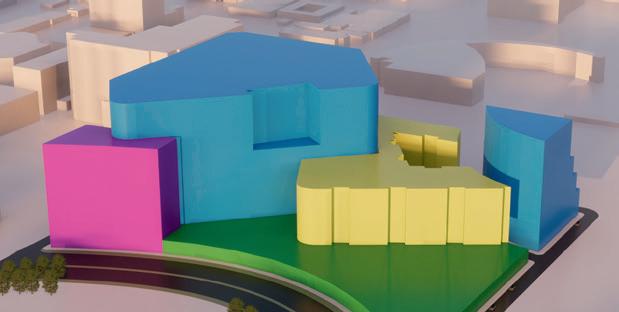

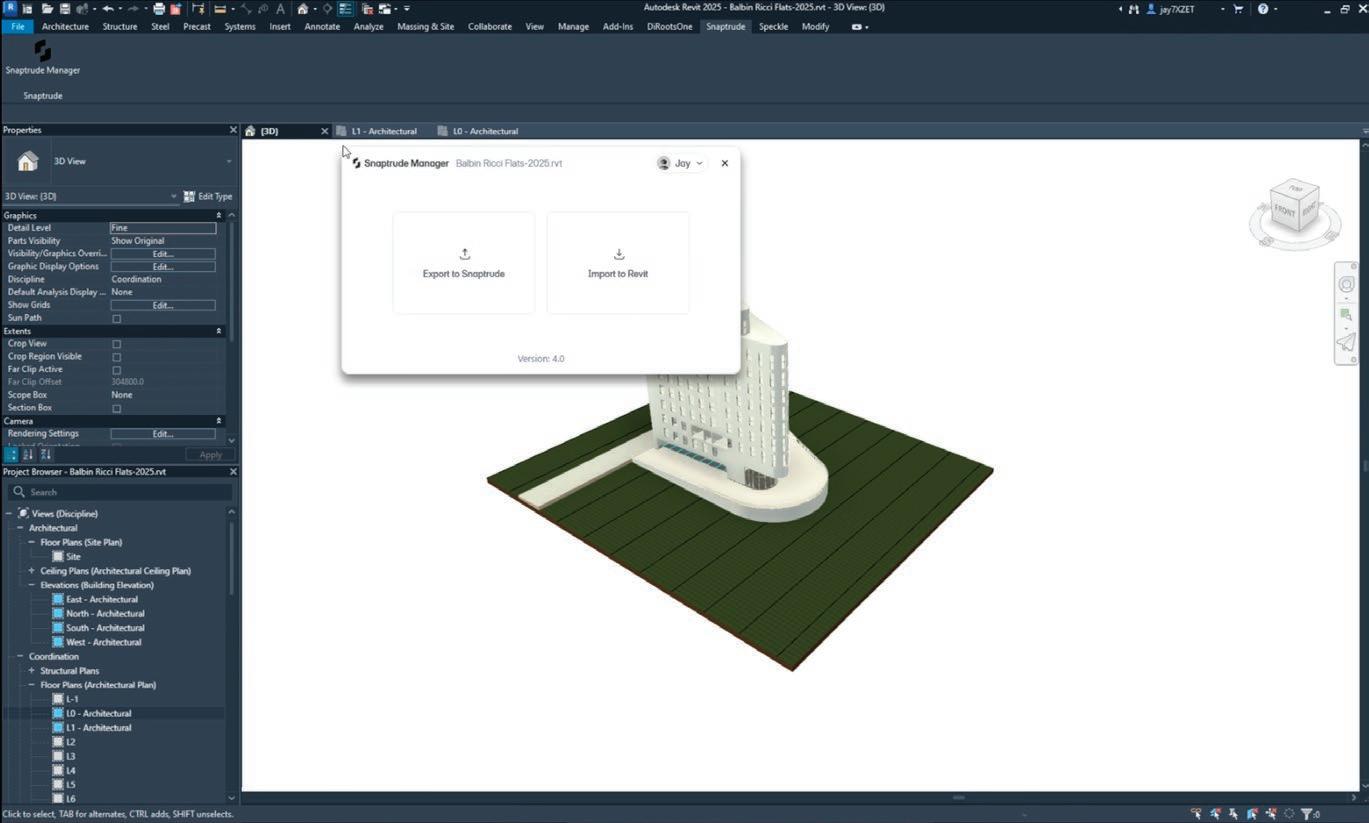

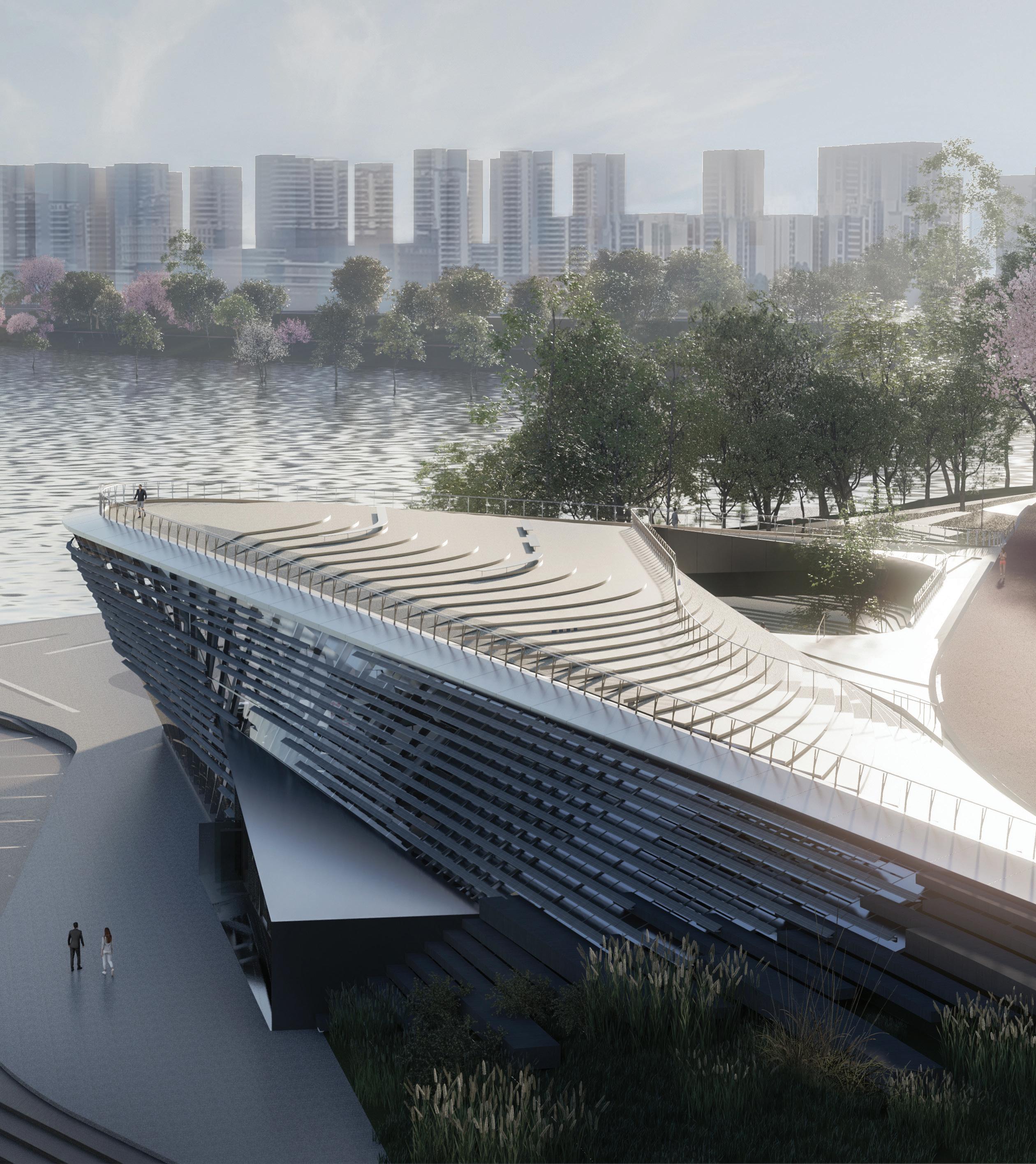

1 High-rise mixed residential model created with Skema in SketchUp

2 Model at the heart of a collaborative presentation at NXT DEV 2024, which showcased the development of a school project from concept to drawings, joined together by new software tools: Skema, Snaptrude, Augmenta, Gräbert, with Esri GIS (see presentation at www.nxtaec.com)

A strong catalyst for this trend is the rise of AI and ML. AI has the potential to automate many aspects of the design process, such as optimising building layouts or generating construction plans, but until ‘general AI’ emerges, it requires a narrow set of parameters to work successfully.

AI technologies could help architects specialise more deeply in specific building typologies, executing common, simple, and repetitive design tasks and unlocking time for architects to focus their professional skills and ingenuity on the more aesthetic challenges that differentiate their designs.

Many people in the industry expect that the software companies and their customers who carve out specialised areas of excellence will reap tremendous value and profits much more quickly than their competitors. At DPR, production design leader Charlie Dunn puts it this way: “By focusing on a building type, and then starting with prefabricated elements for that building type, we can simplify and accelerate our design solutions. The problem set is smaller, and the iteration loop accelerates to support the desired new DfMA flows.”

So, what are some of the forces driving architectural specialisation? To my mind, the list looks something like this:

1. Technological advancements: New tools such as parametric design, BIM and 3D printing can transform the way architects approach their work. However, these technologies require specialised knowledge and skills, steering architects to focus more on specific technological applications with specific building types.

2. Sustainability and environmental design: The growing focus on sustainability and environmental responsibility has led to the rise of architects specialising in green building practices, such as passive solar design, energy-efficient systems and/or the use of sustainable materials.

3. Urbanisation and population growth: As global populations continue to grow and urbanisation accelerates, cities face challenges such as housing shortages, transportation congestion and the need for resilient infrastructure. There are opportunities here for architects who specialise in multifamily buildings, educational institutions and hospitals, for example.

4. Regulatory and safety requirements: Certain building types, such as healthcare facilities, schools and industrial plants, must comply with rules around safety, accessibility and functionality. Architects need a deep understanding of the relevant regulations and codes to meet the necessary standards.

Benefits of specialisation

Architects and software suppliers that embrace this specialisation trend, meanwhile, might experience several benefits.

For example, specialisation allows architects and their software suppliers to develop a deeper understanding of specific design challenges. This enhanced knowledge enables more effective and innovative solutions to emerge. Specialised architects can address complex design challenges more effectively than generalists, while increasing the likelihood that their software suppliers can leverage specialised segment data for machine learning and AI methodologies.

As firms develop professional, profitable reputations as leaders in specific fields, there should be a clearer route to market differentiation and profit. Even if we only consider the multi-family, hospitality, education, medical and data centre segments, we are already describing a market that accounts for more than 60% of all construction globally.

For architects, a narrower and deeper focus should lead to more valuable solutions that support higher fees and greater client demand. Corresponding software start-ups should also find it easier to describe to investors their potential growth and market share opportunities in specific markets.