TIME TO HIT START ON AI

Accelerating Design and Manufacturing Teams with Advanced Cloud-Native Solutions

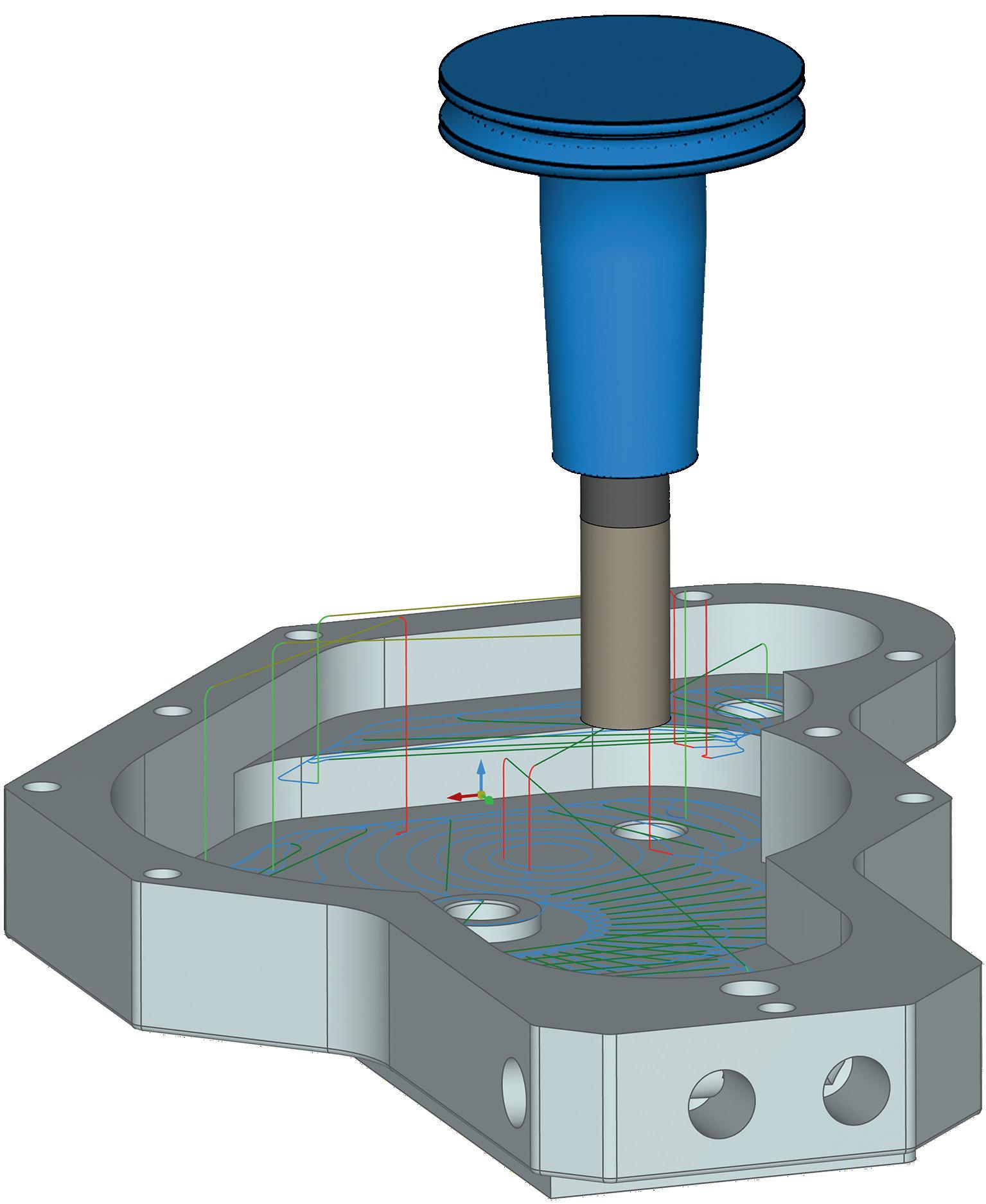

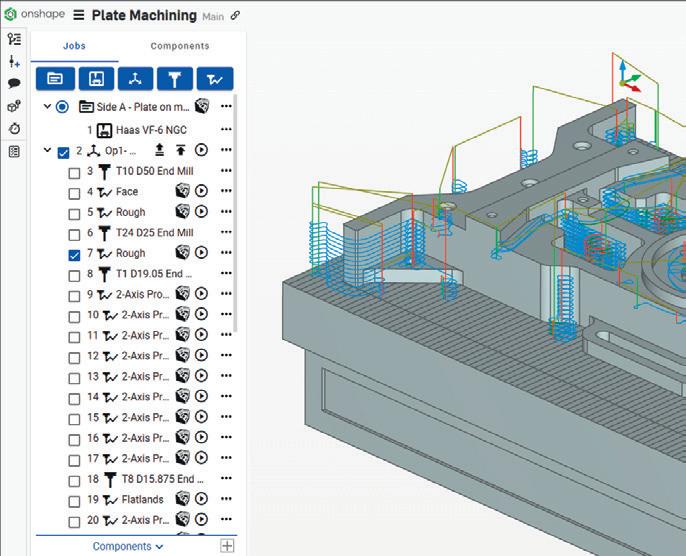

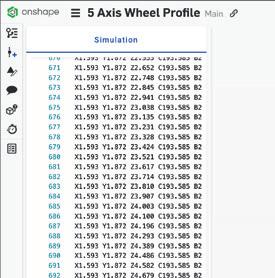

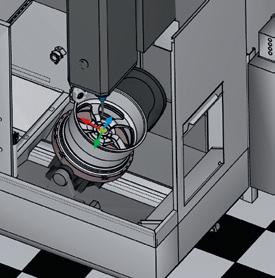

ONSHAPE CAM STUDIO DELIVERS:

Faster DFM reviews with real-time collaboration between design and manufacturing

Faster tool path generation and simulations with the cloud HPC at your fingertips

Reduced total time to market with the single source of truth for a combined CAD/CAM workflow

EDITORIAL

Editor

Stephen Holmes

stephen@x3dmedia.com

+44 (0)20 3384 5297

Managing Editor

Greg Corke

greg@x3dmedia.com

+44 (0)20 3355 7312

Consulting Editor

Jessica Twentyman jtwentyman@gmail.com

Consulting Editor

Martyn Day martyn@x3dmedia.com

+44 (0)7525 701 542

Staff Writer

Emilie Eisenberg emilie@x3dmedia.com

DESIGN/PRODUCTION

Design/Production

Greg Corke

greg@x3dmedia.com

+44 (0)20 3355 7312

ADVERTISING

Group Media Director

Tony Baksh tony@x3dmedia.com

+44 (0)20 3355 7313

Deputy Advertising Manager

Steve King steve@x3dmedia.com

+44 (0)20 3355 7314

US Sales Director

Denise Greaves denise@x3dmedia.com

+1 857 400 7713

SUBSCRIPTIONS

Circulation Manager

Alan Cleveland alan@x3dmedia.com

+44 (0)20 3355 7311

ACCOUNTS

Accounts Manager

Charlotte Taibi charlotte@x3dmedia.com

Financial Controller

Samantha Todescato-Rutland sam@chalfen.com

ABOUT

Welcome to the 150th issue of DEVELOP3D! (There’ll be cake later.) In the modern age of gnat-like attention spans, multi-screen content consumption and chaotic work/life balances, our continued existence is testament to the longstanding appeal of magazines. A paper magazine is still a wonderful thing to hold and even more fun to look back on years later. Back when we published our first issue in 2008, AI was still a sci-fi movie plotline. Fast-forward to today, and it’s the major focus of this issue, as new AI-powered tools look to enhance, automate and optimise all aspects of our workflows.

As part of this special AI-themed issue, we take a look at an array of these new tools and what they have to offer the different stages of product development workflows. We also hear from NASA about how the space agency is automating its generative design tools with AI. We hear from executives at the major CAD vendors about how they view AI and speak to leading educators about how they cover these new tools in the courses they’re delivering to members of tomorrow’s workforce.

Elsewhere, we look at how we’re all going to need to keep our IP secure in a world where AI tools produce masses of ideation; learn how one design studio has gone ahead and built its own AI tools; and we speak to a visualisation artist who feels that AI isn’t quite ready to replace the fine eye of a human – or not just yet.

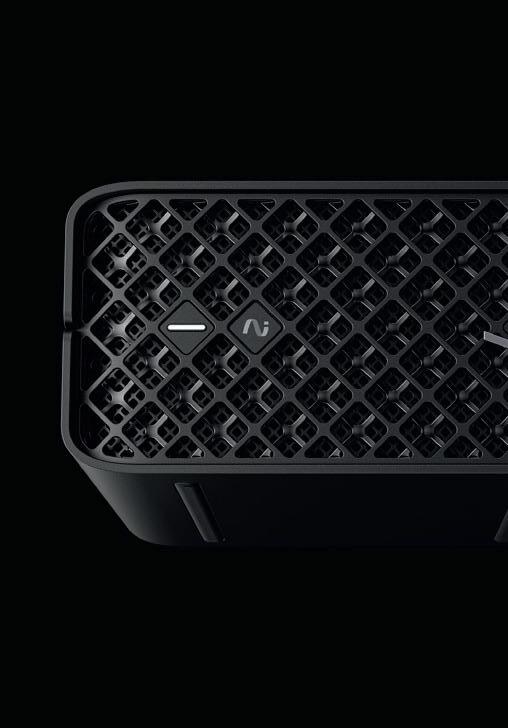

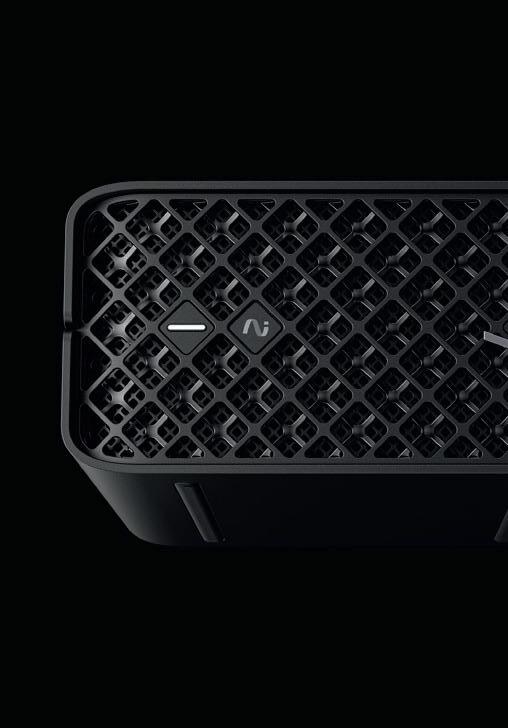

We’ve also got first looks at Onshape’s new cloud native machining package CAM Studio and the Sony XR headset developed in collaboration with Siemens Digital Industries.

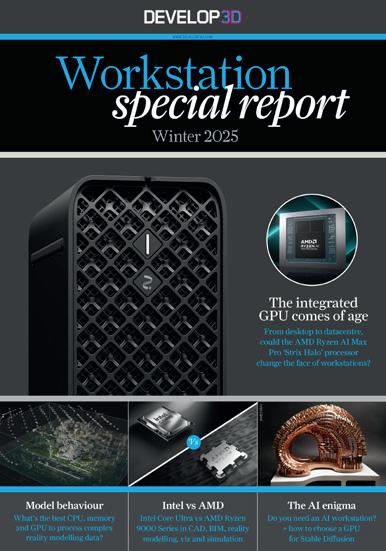

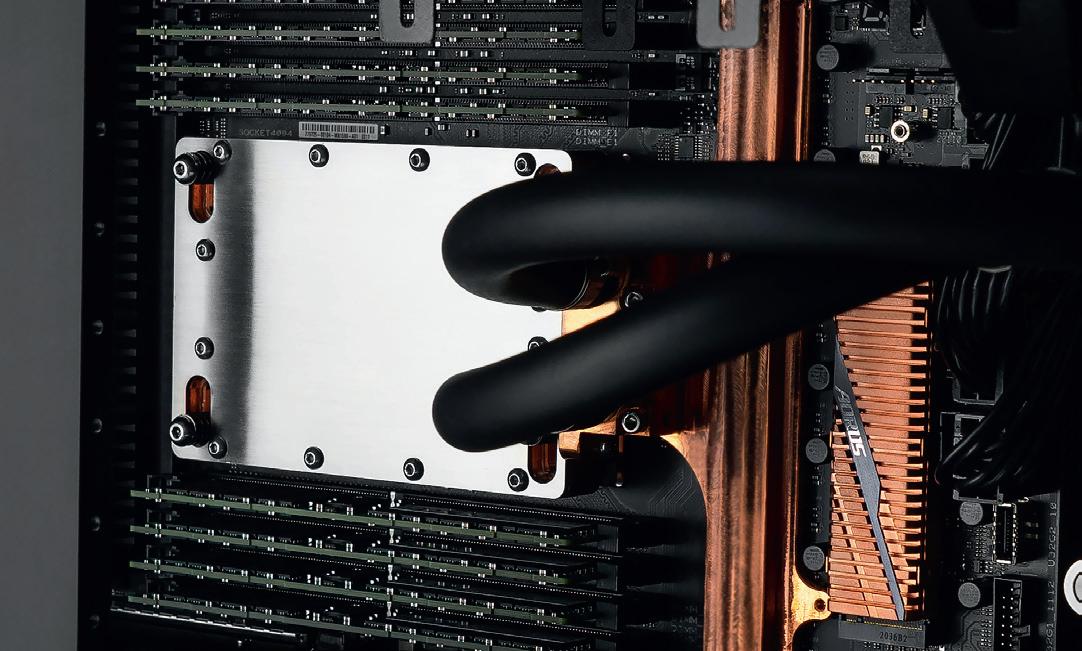

If that wasn’t enough, there’s also a huge, not-to-be-missed workstation special, packed with expert reviews and insight to power you through 2025.

So, here’s to the next 150 issues. Of course, by the time Issue 300 rolls around, we’ll all have been replaced by AI – and I, for one, will welcome our robot overlords.

DEVELOP3D is published by X3DMedia, 19 Leyden Street, London E1 7LE, UK

T. +44 (0)20 3355 7310

F. +44 (0)20 3355 7319

X3DMedia

Stephen Holmes, Editor, DEVELOP3D Magazine

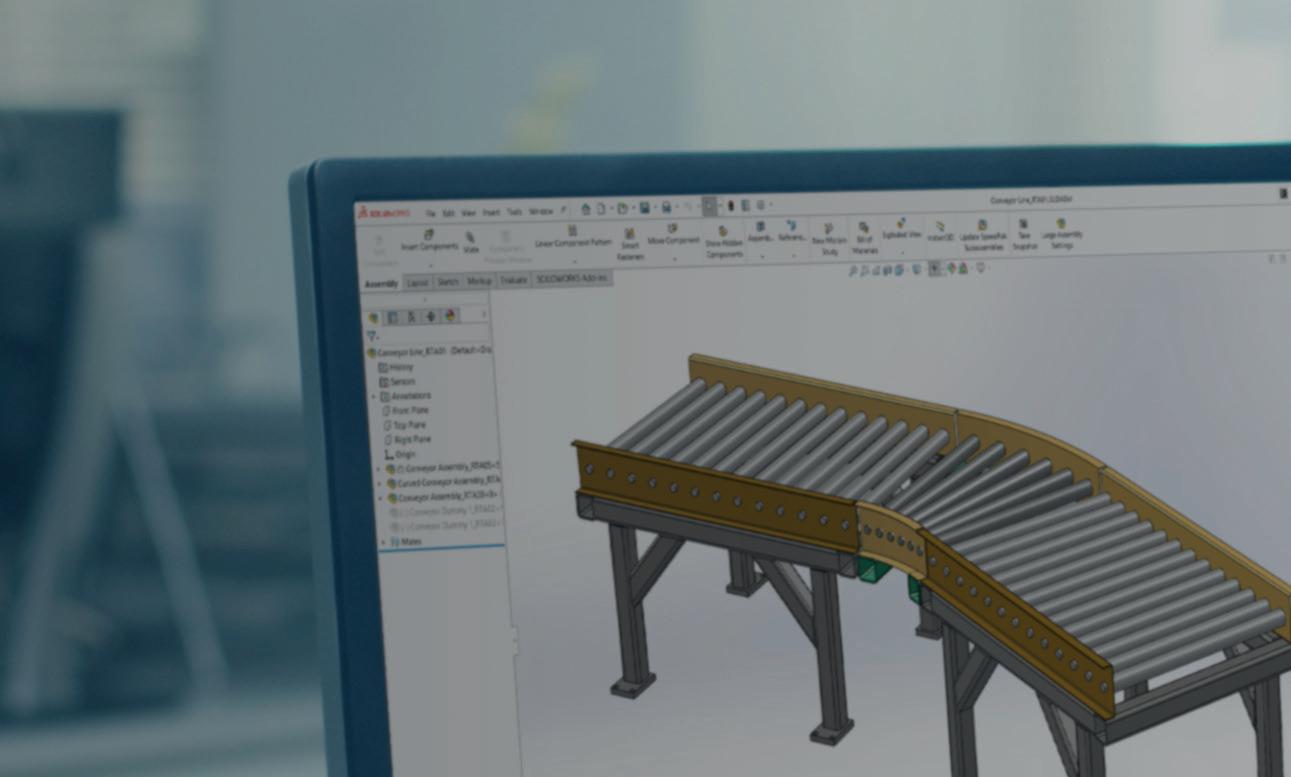

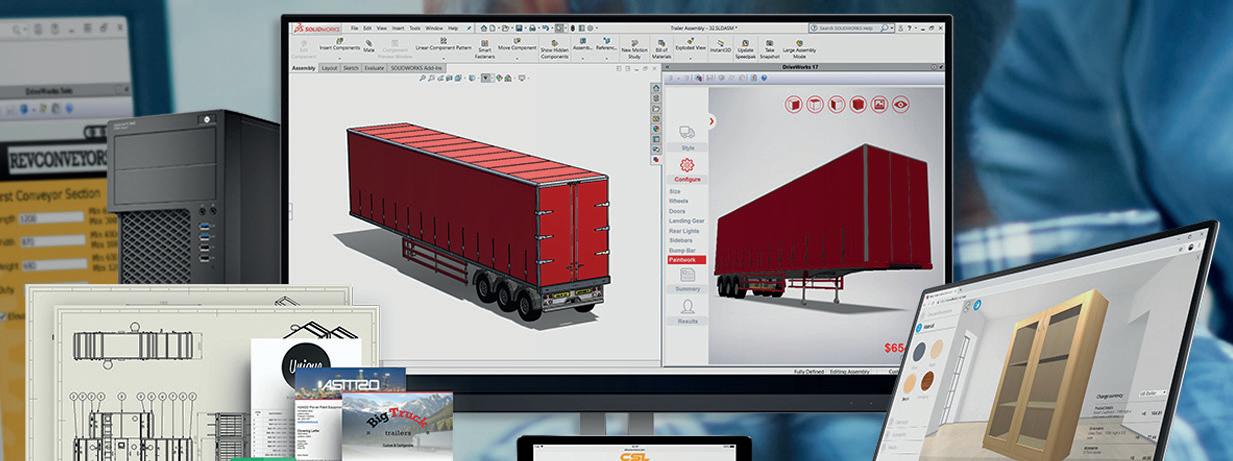

DriveWorks is flexible and scalable. Start for free, upgrade anytime. DriveWorksXpress is included free inside SOLIDWORKS or start your free 30 day trial of DriveWorks Solo.

Entry level design automation software included free inside SOLIDWORKS®

Entry level SOLIDWORKS part and assembly automation

Create a drawing for each part and assembly

Find under the SOLIDWORKS tools menu

Modular SOLIDWORKS® automation & online product configurator software

One time setup

SOLIDWORKS® part, assembly and drawing automation add-in

Automate SOLIDWORKS parts, assemblies and drawings

Generate production ready drawings, BOMs & quote documents automatically

Enter product specifications and preview designs inside SOLIDWORKS

Free online technical learning resources, sample projects and help file

Sold and supported by your local SOLIDWORKS reseller

Complete SOLIDWORKS part, assembly and drawing automation

Automatically generate manufacturing and sales documents

Configure order specific designs in a browser on desktop, mobile or tablet

Show configurable design details with interactive 3D previews

Integrate with SOLIDWORKS PDM, CRM, ERP, CAM and other company systems

Scalable and flexible licensing options

Sold and supported by your local SOLIDWORKS reseller

Set up once and run again and again. No need for complex SOLIDWORKS macros, design tables or configurations.

Save time & innovate more

Automate repetitive SOLIDWORKS tasks and free up engineers to focus on product innovation and development.

Eliminate errors

DriveWorks rules based SOLIDWORKS automation eliminates errors and expensive, time-consuming design changes.

Integrate with other systems

Connect sales & manufacturing

Validation ensures you only offer products that can be manufactured, eliminating errors and boosting quality.

DriveWorks Pro can integrate with other company systems, helping you work more efficiently and effectively.

Intelligent guided selling

Ensure your sales teams / dealers configure the ideal solution every time with intelligent rules-based guided selling.

DEVELOP3D LIVE returns on 26 March 2025, Intel launches new ‘Arrow Lake’ laptop processors, Innoactive drives XR work at Volkswagen and more

Comment: Greg Mark of Backflip on the AI opportunity

Comment: Sara El-Hanfy of Innovate UK on AI adoption

Visual Design Guide: Nike x Hyperice boot

COVER STORY AI IN PRODUCT DEVELOPMENT

Expert Panel: How do the Big Four view AI?

Interview: Ryan McClelland of NASA

Top of the class: Teaching AI on design courses

DIY AI: Vital Auto builds its own toolset

Protecting innovation and IP at Yamaha Motors

Interview: Spencer Livingstone of S.VIII

First look: Sony and Siemens bring XR to life

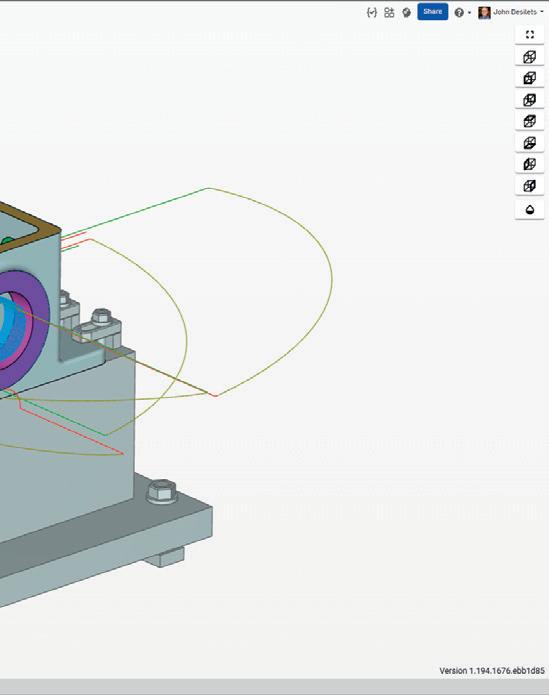

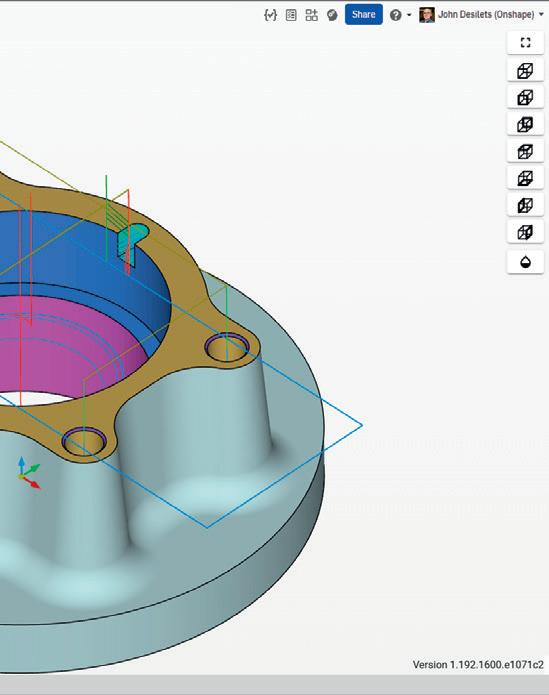

Onshape unveils an impressive CAM offering

As this 150th issue of DEVELOP3D demonstrates, AI has come a long way – but it still has much further to go, with huge implications for product designers and engineers, writes Stephen Holmes

» Top executives from Catia, Solidworks, Siemens, Autodesk and Shapr3D will share the stage with exciting designers and engineers at our one-of-a-kind annual show

A stellar line-up of technology executives from some of the leading CAD companies has been announced for DEVELOP3D Live, to be held on 26 March 2025 in Coventry, UK.

DEVELOP3D LIVE is the UK's leading conference and exhibition celebrating design, engineering and manufacturing technologies and how they bring world-leading products to market faster.

Solidworks CEO Manish Kumar is returning to the event, bringing the latest updates and news about Solidworks fresh from the 3DExperience World user event straight to our UK audience.

Also representing the Dassault Systèmes family will be Catia CEO Olivier Sappin, with the engineering software company making its DEVELOP3D LIVE stage debut this year. Sappin’s exciting presentation focuses on the future of engineering and how human imagination can successfully leverage AI and generative design to sustainably redefine the next generation of products.

Siemens Digital Industries Software will be represented on the main stage by Oliver Duncan, senior product manager for Siemens Cloud Solutions, giving attendees the benefit of his insights into the latest developments from NX, Solid Edge and Designcenter.

And Autodesk’s Clinton Perry will discuss the latest developments from the company’s product design and manufacturing portfolio, including Fusion.

Shapr3D CEO István Csanády is also heading to the UK, with an update on the increasing number of features that Shapr3D offers professional designers.

The main stage of the event has always seen exciting presentations from companies that we feel are leading the way in using new technologies in their design processes. In this respect, 2025 will be no different, with keynotes covering topics as diverse as renewable energy, AI, automotive design, consumer electronics and others yet to be announced.

The other stages will be the venue for panel discussions, looking at XR for product design, the rise of new head mounted display technologies, and software built to offer designers new ways to approach design and collaborate with team members around the world. Another panel will look at computational design tools and their relationship with digital manufacturing technologies, as well as how to take generative design technology and make its output more accessible to wider swathes of the industry.

Allowing attendees the chance to try out many of the technologies discussed via hands-on demos has always been a focal point of the exhibition space at DEVELOP3D LIVE, providing an exciting learning arena for attendees.

Our free-to-attend, single-day event incorporates three conference streams running alongside the exhibition space, all based at the Warwick Arts Centre. This venue is located in the heart of the West Midlands, a powerhouse area for British product development, automotive engineering and advanced manufacturing.

Within just over one hour’s reach of London and the aerospace engineering hubs of Derby and Cranfield, and within two hours of Bristol, Manchester and Liverpool, the event consistently attracts a huge range of industries and professionals.

DEVELOP3D LIVE show director Martyn Day says the 2025 event is lining up to be an unmissable episode in the show’s history. “As always, DEVELOP3D LIVE is the only place where you can see the major design software companies on the same stage, giving attendees the best chance to see where the newest and most exciting developments are being realised,” said Day.

DEVELOP3D readers can find out more about speakers as they’re announced, and also obtain their free access pass from the event website. www.develop3dlive.com

Join us at DEVELOP3D LIVE to hear about the latest developments in design, engineering and manufacturing technologies

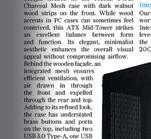

Intel has introduced new ‘Arrow Lake’ laptop processors, which should make their way into mobile workstations later this year. The new offerings include six Intel Core Ultra 200HX series processors aimed at high-performance laptops, and five Intel Core Ultra 200H series processors for mainstream thin-and-lights.

Intel’s new processors prioritise general processing performance over AI capabilities. Compared to last year’s ‘Lunar Lake’ Intel Core Ultra 200V series, the new chips feature significantly more CPU cores, but come with a much less powerful Neural Processing Unit (NPU). With 13 TOPS compared to 48 TOPS, the NPU falls short of meeting the requirements for Microsoft Copilot+ compatibility.

The integrated GPU in the Intel Core Ultra 200HX is also less powerful than the one that comes with the Intel Core Ultra 200V. This suggests that in a mobile workstation, Intel’s flagship laptop processor is most likely to be paired with a discrete GPU, such as Nvidia RTX.

Key features of the Intel Core Ultra 200HX and H series mobile processors include up to 24 cores for HX-series (eight Performance-cores (P-cores) and 16 Efficient-cores (E-cores)); and up to 16 cores for H-series (six P-cores, eight E-cores and two low-power E-cores).

According to Intel, the flagship Intel Core Ultra 9 285HX processor offers up to 41% better multi-threaded performance, as tested in the Cinebench 2024 rendering benchmark, compared to the previous generation Intel Core i9-14900HX. www.intel.com

Innoactive XR Streaming and the Nvidia Omniverse platform are enabling automotive giant Volkswagen to validate photorealistic digital twins using Apple’s Vision Pro XR headsets for the first time.

By harnessing the power of the OpenUSD format and Omniverse, combined with Nvidia’s newly released spatial streaming, Innoactive’s XR Streaming uses this workflow to bring industrial digital twins to spatial devices anywhere, anytime.

Innoactive executives say that its product’s one-click XR streaming is capable of streamlining XR workflows with instant access to immersive 3D environments, supporting browser streaming and streaming to standard VR headsets for cost-effective solutions, while supporting the Apple Vision Pro.

The resurrection of online 3D printing service provider Shapeways has continued with its announcement of the acquisition of a controlling share in Thangs, a collaborative 3D file sharing and discovery community.

Shapeways executives say that the acquisition marks the second step of a relaunch plan created by its new management team to drag the company back from its July 2024 bankruptcy.

The Thangs platform hosts 3D search with more than 24 million 3D printable models in its index, along with tools, IP protection and membership options www.shapeways.com

Nvidia DLSS 4, the latest release of the suite of neural rendering technologies that use AI to boost 3D performance, will be supported in visualisation software – D5 Render, Chaos Vantage and Unreal Engine – from February 2025.

The headline feature of DLSS 4, Multi Frame Generation, brings revolutionary performance versus traditional native rendering. It is an evolution of Single Frame Generation, which was introduced in DLSS 3 to boost frame rates with Nvidia Ada Generation GPUs using AI. www.nvidia.com

Fresh from a tumultuous year of acquisition rumours, Stratasys has announced a $120 million investment by Israeli private equity fund Fortissimo Capital.

The financial boost aims to help Stratasys strengthen its balance sheet and be better positioned to capture new market opportunities.

With this transaction, Fortissimo will hold approximately 15.5% of Stratasys’ issued and outstanding ordinary shares. Fortissimo managing partner Yuval Cohen will join the Stratasys board of directors, replacing a Stratasys director who had yet to be named at the time of going to press.

www.stratasys.com

Daimler Truck is collaborating with 3D Systems, Oqton and Wibu-Systems on a new initiative aimed at producing spare parts for buses, on demand.

This will enable Daimler Buses-certified 3D printing partners to produce parts as and when they are needed, avoiding supply chain bottlenecks and reducing delivery times by a claimed 75%.

Digital rights management capabilities, meanwhile, will protect all intellectual property (IP) belonging to Daimler Buses.

The process will rely on the expertise of 3D Systems in 3D printing technology, materials and applications. Its former software arm Oqton will provide process know-how, while Wibu-Systems will provide digital rights and IP management technology.

According to executives at Daimler Truck, the collaboration will enable it to manufacture spare parts locally for various underhood and cabin interior applications. These are likely to include pins, covers and inserts.

Commercial truck, bus and touring coach companies, meanwhile, will likely realise substantial indirect cost savings if vehicle downtime due to maintenance can be reduced.

“The digital rights management enables us to shorten service times through decentralised production in order to further maximise productivity and revenue for commercial vehicle companies,” explained Ralf Anderhofstadt, head of the Center of Competence in Additive Manufacturing at Daimler Truck and Buses.

“In addition, the sensible use of industrial 3D printing results in reducing the complexity in the supply chains.”

Participating bus companies or service bureaus can join Daimler Buses’ network of 3D printing certified partners once they have purchased a licence for 3DXpert through Daimler Buses’ Omniplus 3D-Printing License eShop.

The prepare-and-print licence enables the customer or service partner to decrypt design files relating to the part needed for a specific repair job and only produce that part in the exact quantity required.

Currently, the solution is designed to 3D print parts on 3D Systems’ SLS 380.

In the future, Daimler Buses anticipates that service bureaus will also be able to connect any of 3D Systems’ polymer or metal 3D printers to the solution. www.3dsystems.com

Bus operators will soon be able to print their own parts in the event of a breakdown

Siemens Digital Industries Software has announced updates to its Simcenter portfolio that look set to benefit customers in the automotive and aerospace industries, since they focus on aerostructure analysis, electric motor design, gear optimisation and smart virtual sensing www.sw.siemens.com

Autodesk VRED has announced its latest updates for its 2025.3 release, implementing a new colour management system for visual quality called OpenColorIO to give users improved control over the colour in a scene and introduce new colour grading methods www.autodesk.com

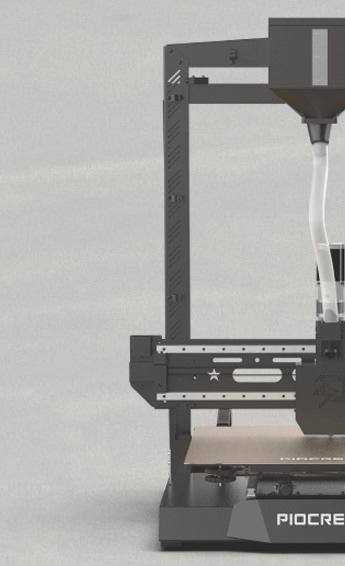

Rapid Fusion has announced Medusa, the first UK-built large format hybrid 3D printer, backed by Innovate UK, along with project partners Rolls-Royce, AI Build and the National Manufacturing Institute Scotland (NMIS). The gantry-style machine is expected to cost in the region of £500,000 www.rapidfusion.co.uk

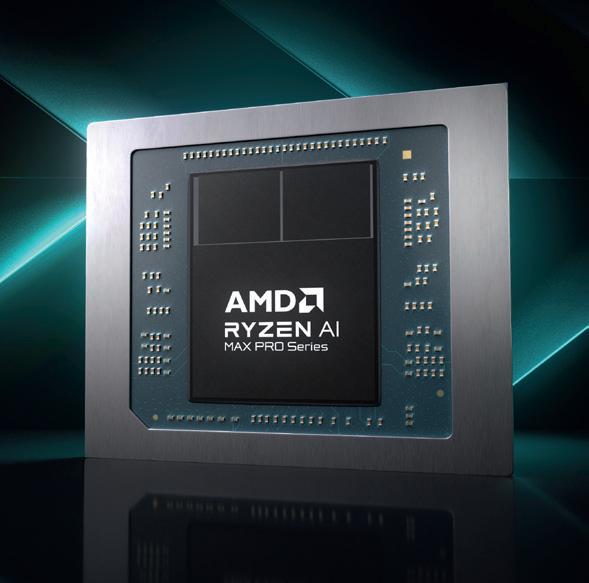

HP has launched two new workstations built around the AMD Ryzen AI Max PRO, a new single chip processor with up to 16 ‘desktop-class’ Zen 5 CPU cores, RDNA 3.5 integrated GPU, and an integrated XDNA 2 Neural Processing Unit (NPU) for AI.

Both the HP Z2 Mini G1a desktop workstation and 14-inch HP ZBook Ultra G1a mobile workstation support up to 128 GB of unified 8000MT/s LPDDR5X memory, of which 96 GB can be assigned exclusively to the GPU. As HP points out, this is equivalent to the VRAM in two high-end desktop-class GPUs.

According to AMD, having access to large amounts of memory allows the processor to handle ‘incredibly large, high-precision AI workloads’, referencing the ability to run a 70-billion parameter large language model.

HP ZBook Ultra G1a and HP Z2 Mini G1a workstations are expected to be available in Spring 2025. Pricing will be announced closer to availability. www.hp.com/z

The new HP ZBook Ultra G1a mobile workstation is built around AMD's newest single-chip processor

3D printing service provider 3DPrintUK, which was acquired by the TriMech Group and Solid Solutions back in 2023, has announced a £2 million internal investment that will boost its HP Multi Jet Fusion capacity by up to 60% and enable it to reduce prices by around 20% www.3dprint-uk.co.uk

Sweden-based Sandvik has announced its acquisition of ShopWare, MCAM Northwest and the CAD/CAM solutions business line of OptiPro Systems, three US-based resellers of CAM solutions in its Mastercam network, in order to serve customers in the region and expand its customer base www.sandvik.com

To … the … we need to massively … the number of people who …

Today, the number of people who have ideas that could transform the world dwarfs the number of people who have the practical skills and ability to turn those ideas into reality.

In all of the narratives we’ve seen over the past decade about the need for reskilling or upskilling professionals amid technological upheaval, we have never had a discussion about the power and potential of helping more people achieve the ability to create 3D models and adding easier on-ramps to CAD, which I believe to be one of the most transformative technologies of the last several decades.

I’ve spent 20 years in manufacturing and design. I’ve been to automotive supplier plants where you’ve got five overworked engineers working in CAD, supporting 800 incredibly mechanically talented manufacturing employees who are operating and maintaining production lines.

The latter group always finds creative and resourceful ways to support and improve operations, but they just don’t do 3D design.

You’ll talk to them, and they have a torrent of pent-up ideas for how to make specific areas of a manufacturing line faster, with reduced scrap rates and better processes.

But those ideas don’t become reality, in large part because these workers can’t easily create a 3D model to plug into the infrastructure of modern manufacturing, like CNC machining and 3D printing.

But imagine if those 800 automotive workers were each empowered to more easily learn to design the fixtures, tools, or other process aids that they know would make their job easier.

We’d make better cars, faster, and at lower cost. And then plant engineers – who are spread thin working on many projects at once – could better focus, and more responsively support other areas of the plant and more technically complex initiatives.

I see a fundamental shift coming in the ways that people are introduced to 3D design that unlocks a future that’s always lived in our imagination.

Other industries have broken down barriers for innovation and unlocked human imagination through education, tools and training. And the last five years have laid the foundation for us to build AI-powered tools that simply couldn’t exist before.

We don’t need to change our current 3D modelling programs, but we can help people adopt them faster and get started more easily.

At Backflip AI we’re building AI tools for the physical world, and my hope is that we can be a key part of this sea change.

With our first product, we’re already seeing people who have never touched traditional 3D design tools take an idea in the form of a photo, a sketch, or a text description, and turn it into a 3D mesh that can be 3D-printed into existence.

It’s not perfect, but we’ve heard from many users who have, for the first time in their lives, been able to get started creating what they previously could only imagine.

This is a stepping stone, and a way to help more people get familiar with 3D design, making the later transition into existing, deeply featured CAD tools easier. In that way, we hope more people can help build the technologies that make our collective lives better.

Other industries have broken down barriers to innovation and unlocked human imagination. We don’t need to change our 3D modelling programs, but we do need to help people adopt them faster and get started more easily

In a prior life, I focused on transforming the downstream side of manufacturing, or how you get from an existing 3D model to a physical part faster and easier, by developing advanced carbon fibre and metal 3D printing technologies.

At Backflip, we’re now focused on the front end: making 3D design more accessible.

My team, which comprises some really smart software and mechanical engineers, has an intimate knowledge of how physical things are designed and manufactured, and the techniques for how to train cutting-edge AI models.

We’ve created state-of-the-art AI models based on our proprietary data, the world’s biggest synthetic data set of 3D models. Our models are constantly improving, following the exponential technology growth curves pioneered by AI companies like Midjourney and OpenAI. And we will keep getting better as we continue to refine our technology and grow our data set.

I believe we are on the threshold of a massive transformation in human innovation which stands to benefit all of us. What is important is the imagination in our heads, and technology, training and education can help lead the way into this next frontier.

ABOUT THE AUTHOR: Greg Mark is an American inventor, engineer and entrepreneur and the founder and CEO of Backflip AI, which has built a foundational model for 3D generative AI that turns ideas into reality. Prior to Backflip, Mark invented the process for carbon fibre 3D printing and founded 3D printing company Markforged. www.backflip.ai

Since its explosion into the mainstream, artificial intelligence (AI) has begun to permeate almost every corner of the workforce.

While the adoption of AI has immense potential to streamline processes in the workplace, some sectors – particularly the creative sector – have been slower to absorb this new technology into their processes.

From developing graphics to 3D design, AI can automate mundane tasks and assist in the design process, increasing profits and unlocking the full potential of the various fields in the design landscape.

The creative sector in the UK is a powerhouse, employing over 2.3 million highly skilled individuals who have honed their crafts over many years. While AI boasts impressive capabilities in generating text, images and video, the adoption of this technology into the creative sector does not come without challenges.

It is no surprise that some creatives rightly feel threatened by this emerging technology. Companies looking to cut costs could deploy generative AI to develop and design content for their business, replacing the need to hire designers and laying off existing creatives on their teams.

But AI technologies cannot replace the intuitive thinking of human creativity. Instead, AI should be embraced as a tool to augment creatives, expanding possibilities and accelerating workflows.

When it comes to generative 3D modelling, AI technologies certainly hold potential but are unable to fully replace the skills and nuance of human designers working in the field.

While there are fewer concerns about job security in the 3D design sector for now, a shift in the way these 3D designers are working is necessary to take full advantage of AI capabilities to automate as many processes as possible, increasing productivity in the sector as a whole.

The traditional 3D design process is quite complex and laborious, with designers requiring a high level of skill to operate in this sector. To maximise efficiency in this sector, AI can be integrated to speed up the time-consuming aspects of design like duplicating tedious design elements.

AI can identify potential issues and suggest process adjustments by analysing data from previous manufacturing runs. Similarly, AI can be used to create complex geometries that were previously impossible or difficult to achieve with traditional design methods.

While the creative sector stands to greatly benefit from the adoption of AI, uptake has been rather slow. Creative industries are vital to the UK economy, contributing £109 billion in 2021.

Without adequate investment from the government to overcome barriers to AI adoption, the full potential of the creative sector will remain untapped.

From training programmes to grants for overcoming financial constraints relating to AI adoption, government investment may be key in upskilling creatives on how to utilise AI most efficiently in the creative field to maximise creative output.

The Innovate UK BridgeAI programme, which is delivered by Innovate UK and its partners The Alan Turing Institute, BSI, Digital Catapult and STFC Hartree Centre, is ensuring that the foundations exist to support responsible technology development.

BridgeAI is fostering collaborations across the ecosystem through accelerator programmes, workshops, and toolkits to ensure those working in the creative sector are as equipped as they can be for this shift towards an AI-enabled future.

The Innovate UK BridgeAI programme has already demonstrated how targeted investment and collaboration can have a positive impact on low-adoption sectors.

Without adequate investment from government, the full potential of the UK creative sector will remain untapped

One such collaboration was between Lancaster University’s School of Engineering and Batch.Works, a design and additive manufacturing business. Batch.Works’ design process for additive manufacturing was both manual and time-intensive. Funded by Innovate UK, this collaboration sought to revolutionise the design process for additive manufacturing through integration of AI.

By implementing AI-based support systems, Batch.Works minimised manual tweaking, reduced trial and error and enhanced overall product quality. In reducing the number of design iterations required, designers were able to focus more on creativity and on accelerating the design-to-production timeline. This resulted in improved efficiency, reduced costs, and enhanced competitiveness.

While AI does not have the potential to replace 3D artists, and should not do so, this technology will undoubtedly change how all 3D artists and those in the creative sector work in the future. With government investment and training in AI systems, the creative sector holds massive potential to boost its contributions to the UK economy. Through adopting this new tech, creatives are uniquely positioned to maximise creative output in the face of strict deadlines, acquiring new and valuable skills in the process.

ABOUT THE AUTHOR: Sara El-Hanfy is Head of Artificial Intelligence and Machine Learning at Innovate UK, a part of UK Research & Innovation. She works to identify, support and accelerate high-growth-potential innovation in the UK, based on cutting-edge AI and data research and technology. www.ukri.org/councils/innovate-uk/


» Sportswear brand Nike has teamed up with California-based wellness company Hyperice to release the Nike x Hyperice boot, a mobile solution for recovery trialled by some of the world’s best athletes, including LeBron James and Sha’Carri Richardson

A battery pack situated in the insole of each boot powers three different levels of compression and heat, giving athletes the choice of running each shoe individually or synchronising them via a control button

Once switched on, the boot starts working instantly, inflating and heating up the foot before massage begins, and inflating and deflating it periodically to apply and remove pressure

Velcro straps secure the boot to the foot, and each size corresponds with two traditional American shoe sizes (for example, men’s size 10-12). In future, Nike plans to offer individual sizes

A vest is also part of the Nike x Hyperice collaboration and uses instant heating and cooling technology to regulate athletes’ body temperatures in both warm-up and recovery

SIT BACK AND RELAX

Dynamic air compression massage is available through controls on the boots, warming up muscles before training sessions and easing foot pain afterwards

The boots are also intended for use on flights, with the Normatec system promoting blood flow by raising fluid through the legs, reducing puffiness and acting as a high-tech version of compression socks

Dual-air Normatec bladders are bonded to warming elements that evenly distribute heat throughout the boot, driving it deep into the muscle to speed recovery

Development of the boot is ongoing and Nike and Hyperice continue to gather athlete feedback www.nike.com www.hyperice.com

» AI technology looks set to impact every aspect of product development: dreaming up concepts, building parts, and optimising and automating the way they are manufactured. With new AI-enhanced tools being launched now on a regular basis, and their forerunners rapidly improving in leaps and bounds thanks to increased training, this is a fast-moving trend that leaves many prospective customers struggling to keep up. To bring you up to speed, we’ve picked out a few notable companies working in some of the key sectors

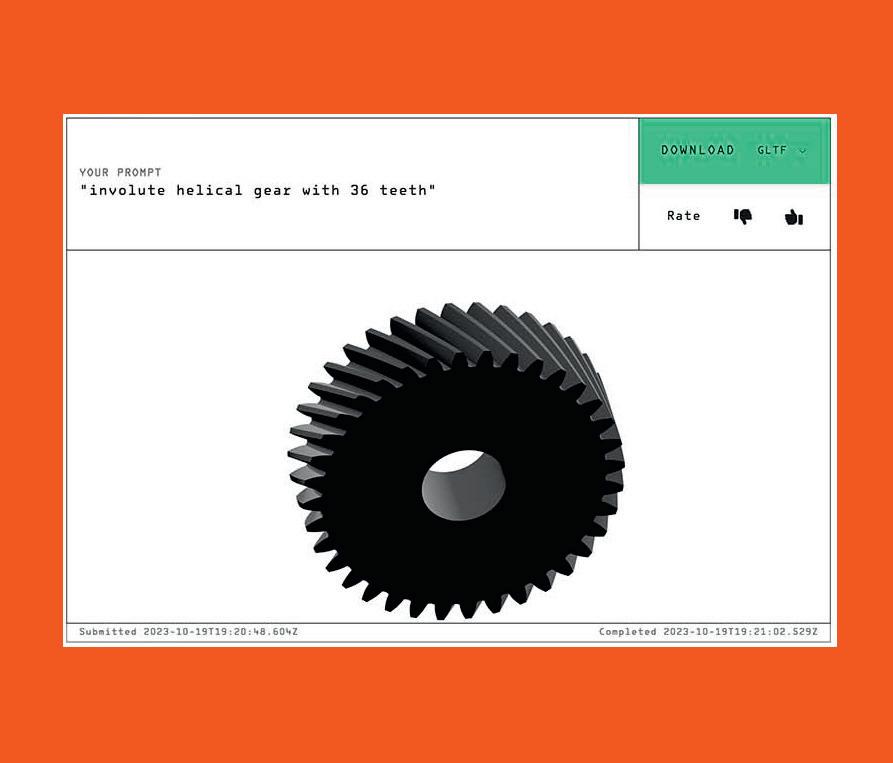

While text-to-image technology is already widespread and popular in 2D work, 3D parametric models present a trickier challenge. That said, start-ups specialising in professional tools for building physical products are able to make some impressive

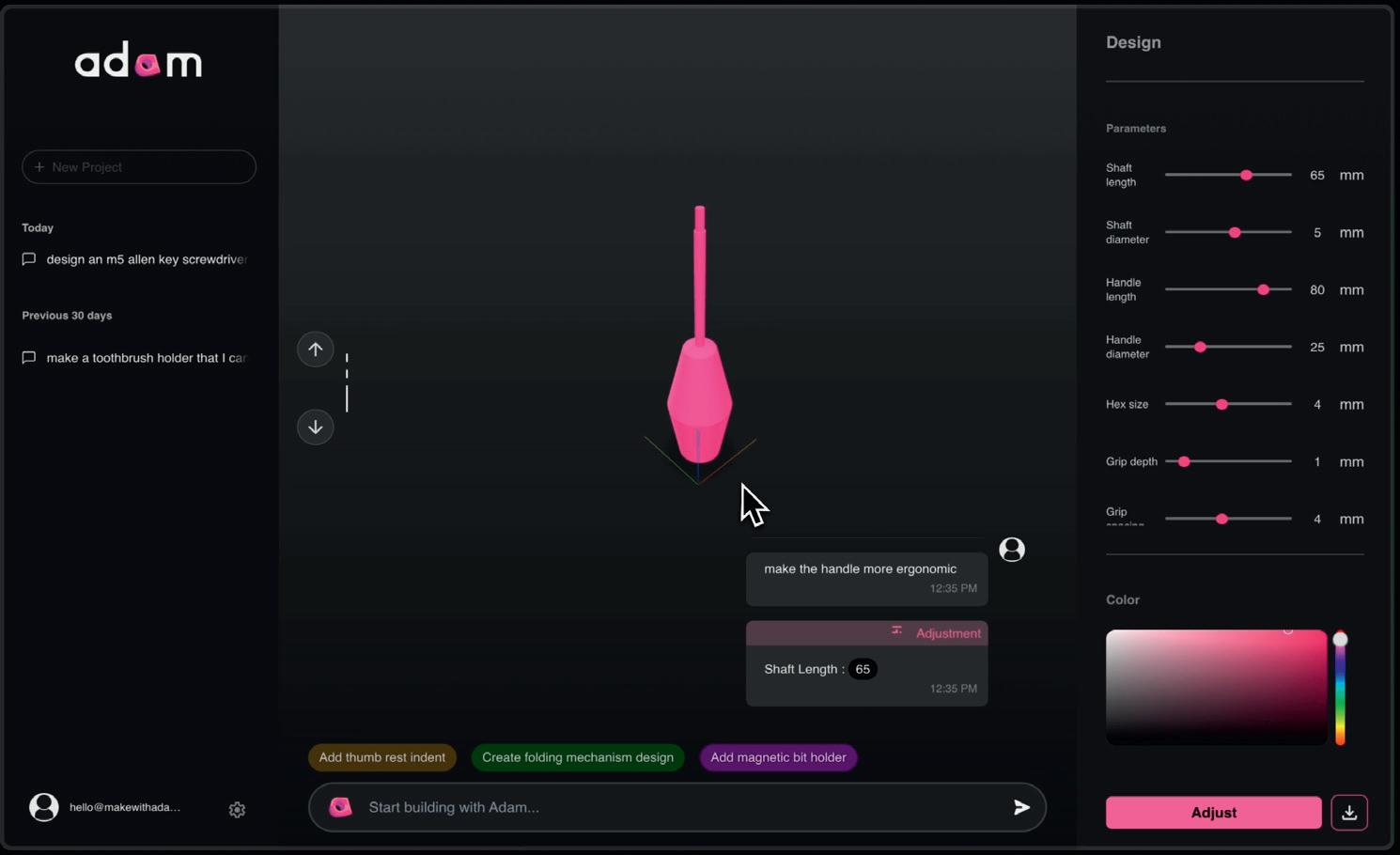

Adam is the newest tool on this list, but only by a matter of days, such is the speed at which this market moves. Its USP is that it offers a more conversational platform than competitors, with a text-to-CAD interface that feels more like firing messages to a customer service chatbot than simply typing into the void. Sliders are a big help in controlling measurements, such as setting a wall thickness or a corner radius, for example. From there, parts can be exported straight to 3D printing or pushed into CAD. Adam is a member of Y Combinator’s Winter 2025 cohort, and looks set to benefit from its involvement with the start-up accelerator, which is backed by the VC firm behind Airbnb, Dropbox, Twitch and many more. www.adamcad.com

boasts about their capabilities.

The idea is straightforward enough: the user enters as much information about the desired end result as they can succinctly describe. What they get back is a 3D model of a part that can be edited further or exported to your CAD package.

Taking text prompts or images as user input, Backflip generates multiple designs as high-res renders, before allowing users to edit further and generate a 3D model that can be exported as an STL or OBJ file. Backflip’s development continues to be rapid, with its founders, the team behind 3D printer company Markforged, looking to build the ultimate design tool for creating ‘real things’ and having ambitious plans to link their technology to existing CAD tools, parts catalogues and more.

www.backflip.ai

HP’s AI3D Design Services software – internally named 3D Foundry – has come along just when the 3D printing mainstay is looking to unlock new AM applications. This simple toolset uses text-to-CAD to generate a design and a 3D lattice, and then exports the result for 3D printing using HP technology. HP executives have been keen to explain that they see businesses using the tools to support product customisation. While professional designers would design the key elements of a product in order to comply with standards and regulations, customers could contribute to other design elements, such as the lampshade on a light fitting.

www.tinyurl.com/text-to-3D

The Zoo Text-to-CAD modeller generates B-rep surfaces, enabling 3D models to be imported to and edited in any existing CAD software as STEP files. That’s in contrast to other text-to-3D examples that generate meshes – which, once imported, can’t always be edited in a useful manner. Much of Zoo’s achievement is down to its hard work on the infrastructure behind Text-to-CAD, which uses its own proprietary Geometry Engine, Design API and Machine Learning API to analyse training data and generate CAD files. The capability for a CAD model to be edited naturally makes generated models far more useful. www.zoo.dev/text-to-cad
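To see why the B-rep versus mesh distinction matters for editing, a toy comparison may help. The sketch below is an illustration only and does not reflect how Zoo or any CAD kernel stores geometry: `CylinderFace` and `sample_ring` are hypothetical names invented here. The analytic face keeps its radius as a parameter, while the mesh-style ring keeps only sampled points.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CylinderFace:
    """Toy analytic surface: the shape is stored as named parameters."""
    radius: float
    height: float

    def lateral_area(self) -> float:
        return 2.0 * math.pi * self.radius * self.height


def sample_ring(radius: float, segments: int) -> List[Tuple[float, float]]:
    """Toy 'mesh': the circle is stored only as sampled points."""
    return [
        (radius * math.cos(2.0 * math.pi * i / segments),
         radius * math.sin(2.0 * math.pi * i / segments))
        for i in range(segments)
    ]


# Editing the analytic face is a single parameter change.
face = CylinderFace(radius=10.0, height=30.0)
face.radius = 12.0

# The sampled ring has no 'radius' field: it has to be inferred from the
# points, and changing it means moving every vertex.
ring = sample_ring(radius=10.0, segments=16)
inferred_radius = math.hypot(*ring[0])
scale = 12.0 / inferred_radius
scaled_ring = [(x * scale, y * scale) for x, y in ring]
```

In the analytic case the design intent (a radius) survives the export; in the sampled case it has to be reverse-engineered, which is roughly why imported meshes can be awkward to edit.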

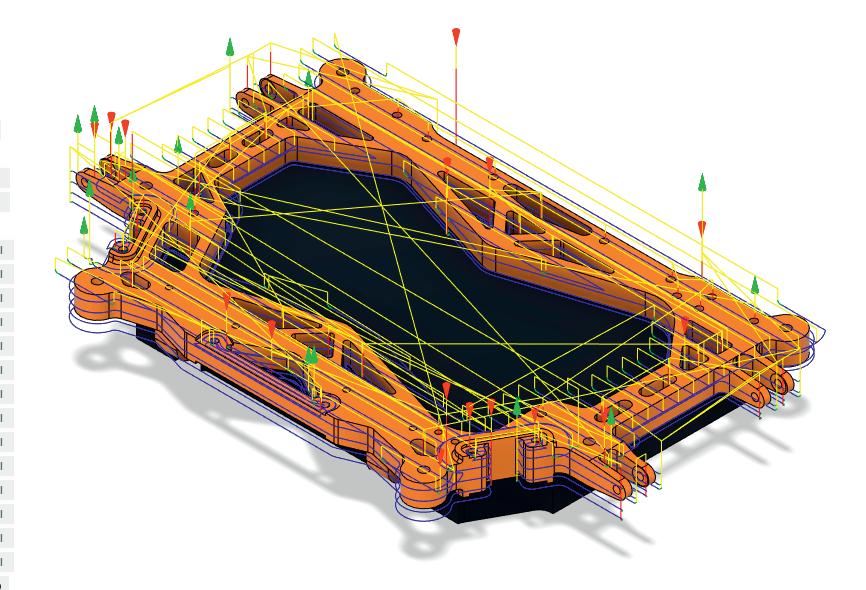

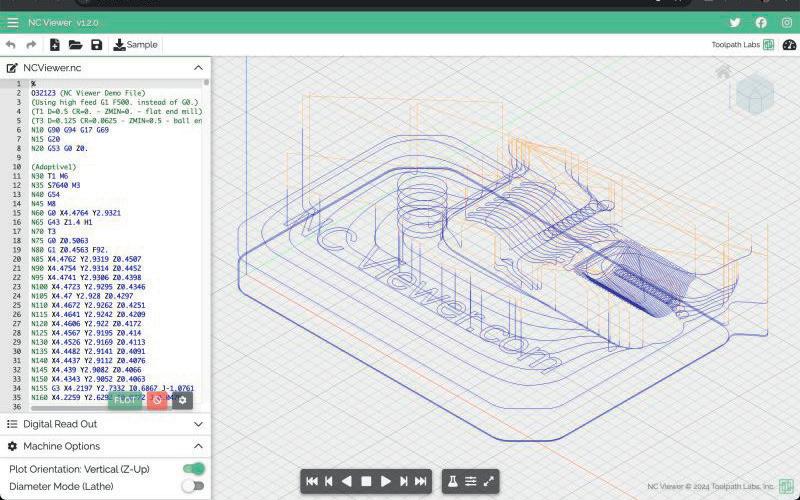

With the manufacturing sector rarely out of the headlines, securing the future of how parts, moulds and dies are built is important. Compared to today’s often error-prone, time-consuming and expensive process, which typically relies on expert manual programming, an automated approach driven by AI and machine learning could be a massive win for product development. By making precision manufacturing more autonomous, it’s hoped that businesses will see an improvement in the ease, speed and reliability with which parts are made. That, in turn, could lead to lower costs, resulting in more manufacturing getting reshored and supply chains becoming more local.

Single-click manufacturing is still some way off for more complex designs, but CloudNC is making bold steps with CAM Assist, especially in 3-axis machining. This is available as a plug-in for Autodesk Fusion, Mastercam, Siemens NX CAM and Solid Edge CAM Pro. Once you’ve opened your CAD model and added in your tool library, machine, stock material and machining mode (3-axis or 3+2), CAM Assist creates the machining strategies, feeds and tool speeds. You then export the G-code and manufacture the part on your machine. CloudNC executives claim that its software takes users 80% of the way. Its ability to balance cycle time, surface finish and tool life makes it an impressive piece of technology. www.cloudnc.com

InfinitForm aims to increase the manufacturability of a design long before any metal gets cut, by offering tailored design constraints for parts in relation to the manufacturing processes you have available. Built by the founder of generative design tool ParaMatters, which focused on topology-optimised designs for 3D printing, InfinitForm creates optimal, machine-friendly prismatic models from the design stage onwards. The software imports CAD and acts as an AI co-pilot during design stages, taking in the material and tool constraints upfront, leading to reduced cycle times and minimal post-processing requirements. www.infinitform.com

Another tool for 3-axis machining that integrates seamlessly with Autodesk Fusion’s manufacturing workspace, Toolpath uses AI to help automate time-consuming tasks like design-for-machinability analysis, quoting and CAM programming. Its ability to analyse parts, estimate costs and create a machining strategy aims to reduce bottlenecks and cut repetitive tasks, freeing up the time and talents of machinists to tackle more complex problems. To help those machinists, Toolpath has constructed a guide that it says can teach anyone to use the plug-in in just 30 minutes. www.toolpath.com

The complexities associated with additive manufacturing (AM) come to a head at the build preparation stage. The specific requirements of every process, machine and material mean that getting this right (let alone optimised) can be a tough challenge. Expensive failures are too often the end result. But AI can deliver better parts, more complicated builds and fewer errors through its ability to optimise build supports, proactively predict failures and more. Some tools are even able to correct errors ‘on the fly’, increasing print yields and pushing AM further in the direction of mass manufacturing.

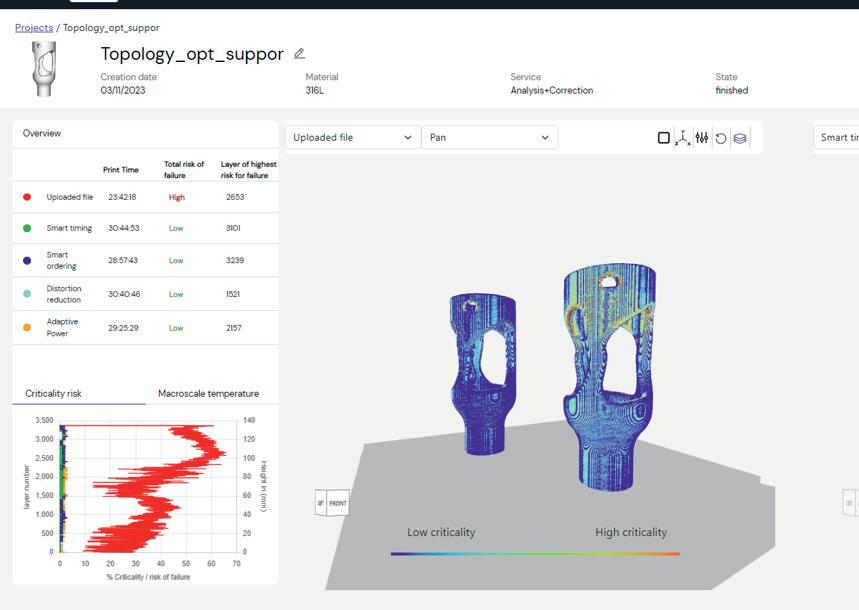

The second release of AMaize offers automated design printability checkers, optimised build preparation and intelligent adjustments to scan strategies and process parameters. By populating its Virtual Shop Floor with all the machines and materials you have, the software can suggest strategies that increase productivity and reduce print fails. AMaize’s tools for printability check the design before it heads to production and help identify distortions, overheating and shrinkage. A physics-based approach means that during build prep, AI generates support structures only where necessary, reducing material usage as well as the time it would take an experienced operator to manually add those structures. www.1000kelvin.com

If you’re printing big parts, then AI Build may be for you. A hybrid platform for robotic and LFAM 3D printing, it combines AM with tailor-made CNC strategies, all through the same user interface. Different parts will require different slicers to put down material layers and create toolpaths for a build. AI Build can assist with all that, before enabling you to package your process into a ‘recipe’ that can be used again and again. Many tasks can be carried out using the AI co-pilot, which makes recommendations and offers smart setting defaults. Upgrades beyond the standard version include build process monitoring and defect detection. AI Build is available either on the cloud or on-premise for added off-grid security. www.ai-build.com

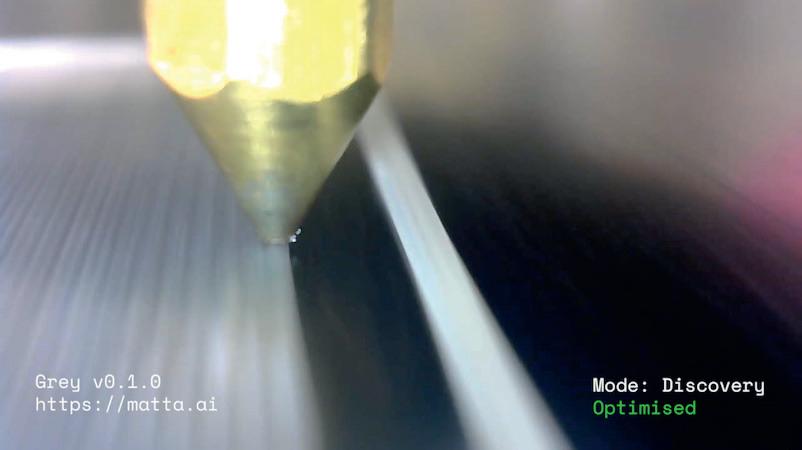

Founded at the University of Cambridge by a team of AI and manufacturing engineers, London-based Matta is looking to improve build quality, increase 3D print yields and help automate the factory of the future. Equipped with advanced error detection, parameter prediction and optimisation capabilities, its Grey software learns from every print produced within its global network of 3D printers. Its initial toolset in Grey 1 is more machine learning than AI, but offers an exciting indicator for where the tech is heading. Able to correct errors on the fly, it can ensure quality prints first time, reducing failure rates and making AM more applicable to volume production. The software creates digital twins of printed parts using G-code, projecting quality measurements onto each extruded line, ready for inspection. www.matta.ai/greymatta

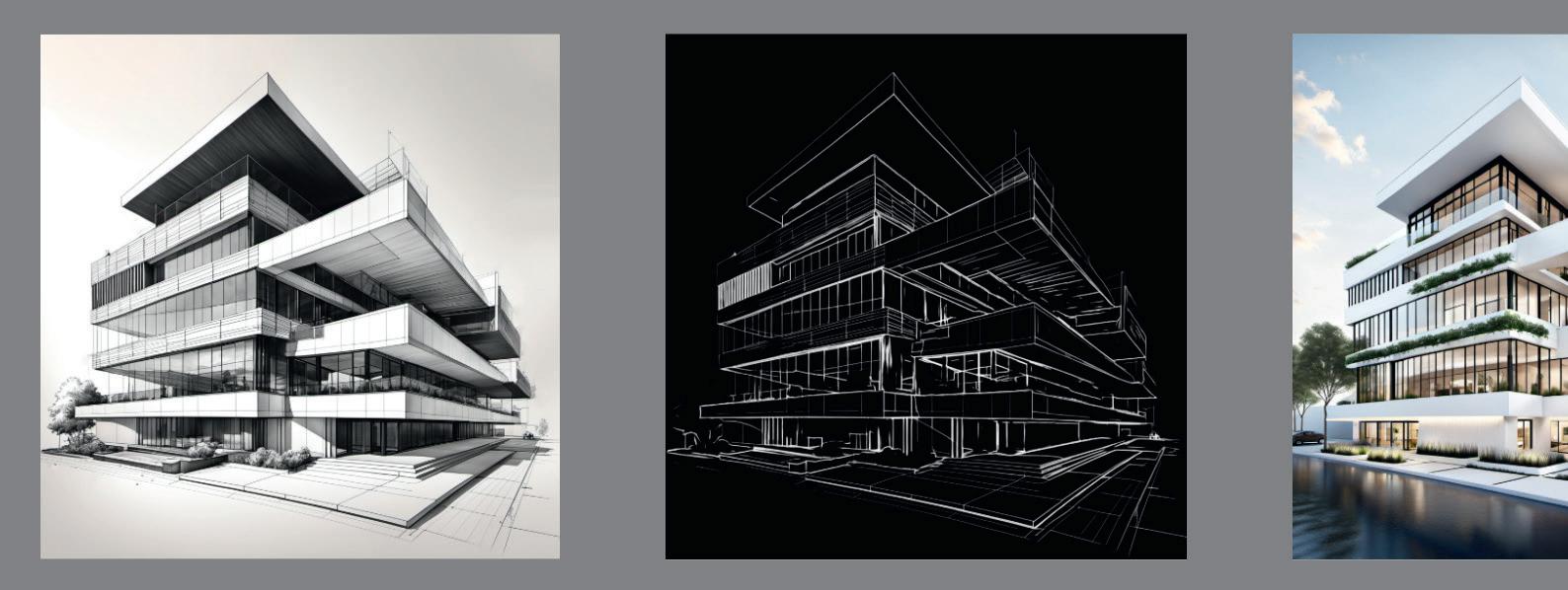

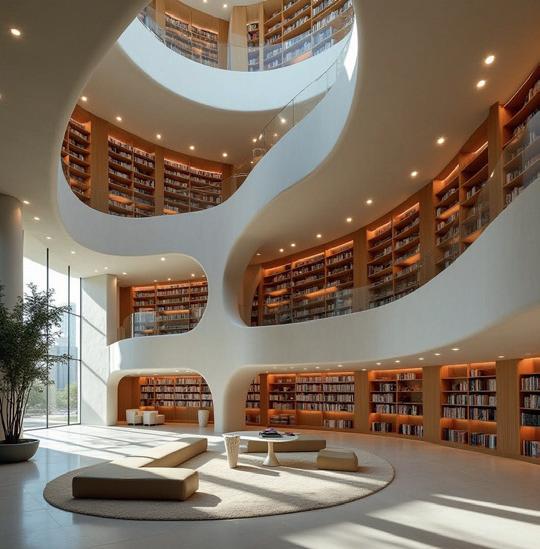

Slick visualisation tools have always been important in product development. But AI’s impact in this area looks likely to be utterly game-changing: stacks of realistic renderings, delivered as early as the concepting stage, which use for reference only quick sketches, a handful of prompts and mere seconds of processing time.

In a world that demands new ideas faster than ever before, generating concepts using generative AI software is fast becoming a norm, far overtaking the compilation of mood boards and lookbooks.

AI enables designers to explore multiple ideas, materials and colourways. The better tools offer intuitive control of edits, even allowing you to train the AI on your own design history and maintain your design language when you hit generate. It’s not all about ideation, either; these tools work brilliantly for those who might not be the best sketchers, or for anyone wanting to bring a last-second idea to life in a client meeting.

Krea is not a single piece of software, but an AI platform designed to support a wide range of visual content. Its tools span a whole array of professions, but there are some interesting options in there for product designers. Like other generative platforms, Krea allows users to upload sketches and generate renders, but its wider toolset includes features like Realtime, which enables you to turn 2D images of objects into 3D assets, or take XR sculpting tools and use the output to build detailed concepts. It might take a while to master everything that Krea has to offer, but going on what the product’s online user community is achieving, it can clearly unleash a whole world of creativity. www.krea.ai

Having recently taken more of a focus towards soft goods, footwear and fashion, NewArc is still an excellent tool for generating and editing product images. A simple sketch and some loose prompts can produce incredibly detailed renderings with realistic lighting across materials. The user interface is designed without particular skills in mind, so you don’t have to have created even a single product rendering in the past in order to be able to jump straight into what this tool can offer. Among its array of preconfigured styles, it even has a clay rendering mode for traditionalists. All paid accounts come with full privacy included, so that all of your sketches, prompts and images remain solely yours and can only be accessed from your personal account. www.newarc.ai

Prome is a slightly more straightforward generative AI tool than many others. You enter a sketch, generate a rendering, and then expand it with prompts to adjust CMF and even produce short video clips. This is not to say it’s short of functions, as it has a fully stocked list of AI tools for crafting the perfect image, including AI background generation, and some focused specifically on tweaking the scene lighting. Since realistic, physics-based lighting is something that is often lacking in AI-generated content, any user who knows their way around photorealistic rendering software will appreciate the extra levels of control that Prome offers. www.promeai.pro

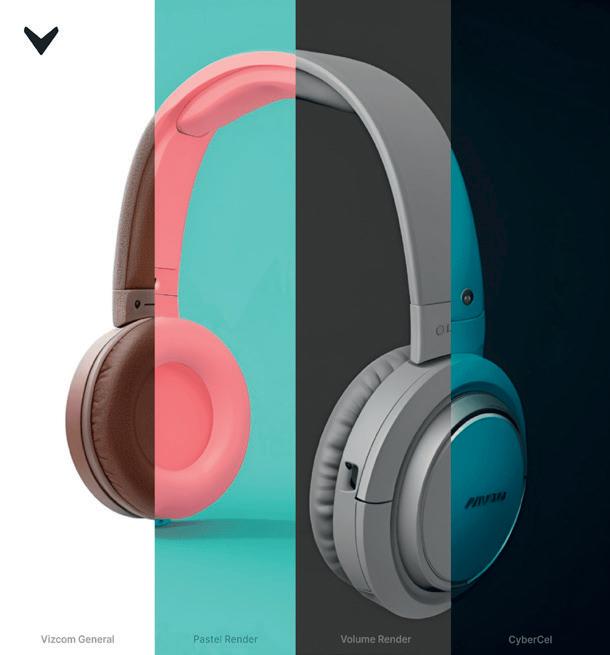

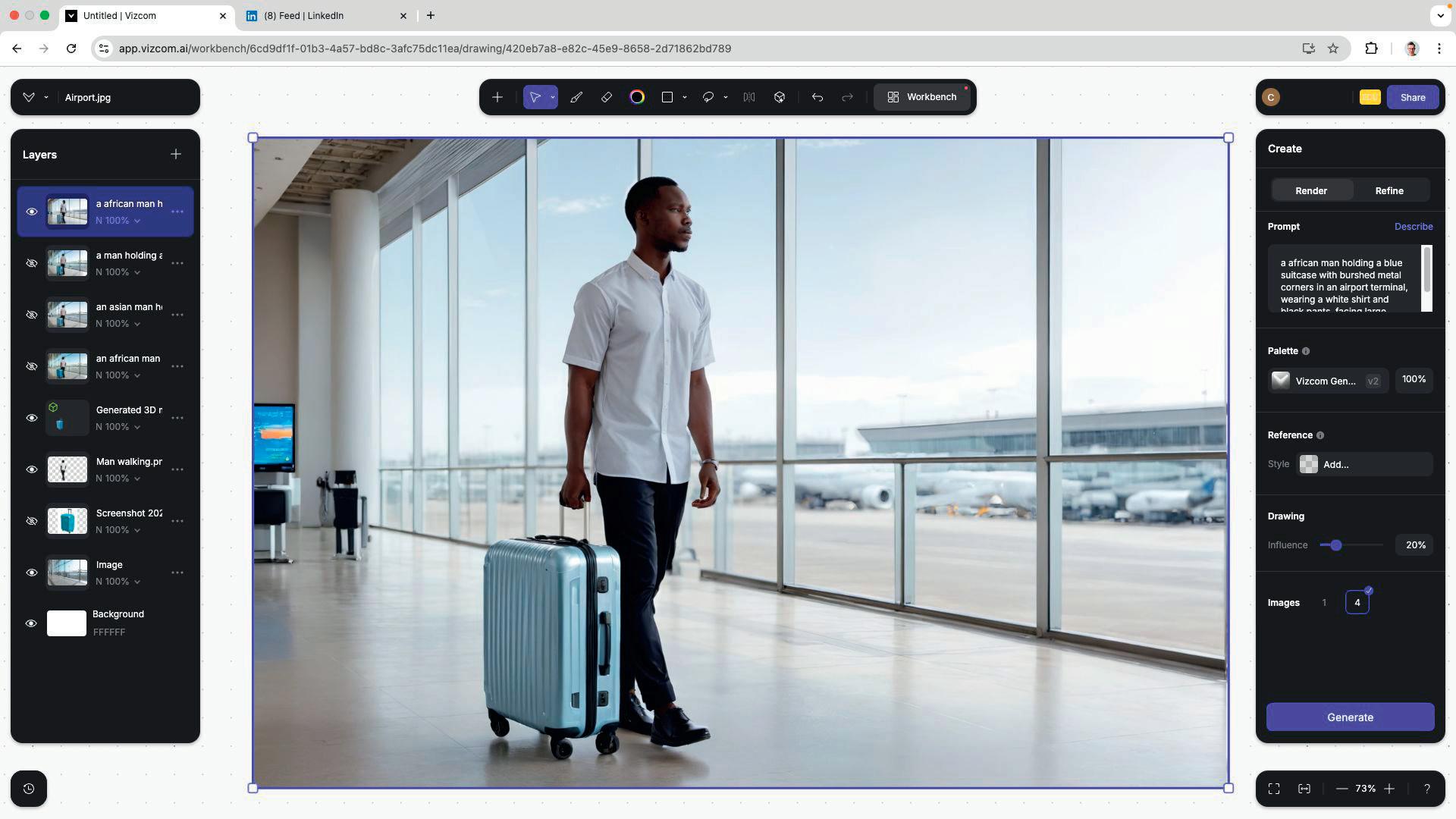

Vizcom is now a feature of seemingly every automotive studio’s software arsenal and offers designers an increased level of control over the sketch and edit workflow. The simple user interface lets users decide how much of their original sketch will influence the overall look, while a library of render styles can be selected, from soft pastels to eye-popping brights. It’s very clear that Vizcom has been designed by a team steeped in product design, with two features standing out: first, its Palettes feature, which allows users to train its AI with their own design language by uploading up to 30 example images; and second, Workbench, an infinite whiteboard where teams can collaborate, explore and elaborate on ideas. www.vizcom.ai

AI is unlocking the upper echelons of what modern simulation software can achieve. Often, it’s doing so by speeding up the process, automating simulation setups down to a single click in place of hours of manual preparation. Or, it’s delivering improvements by

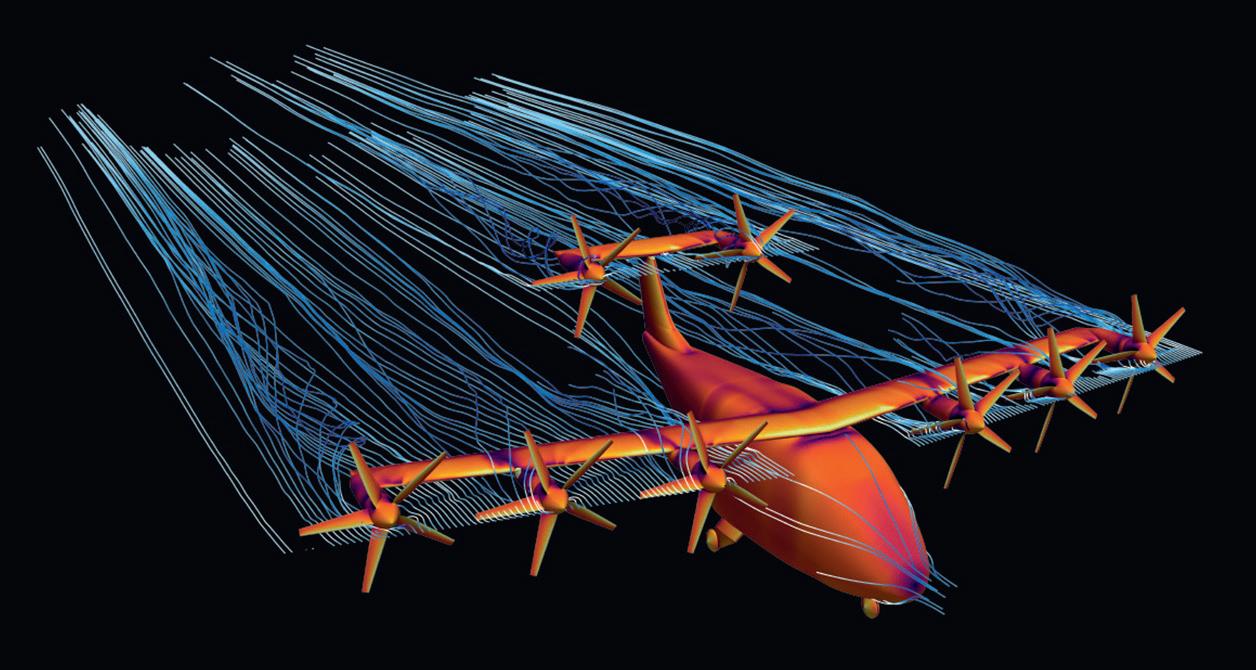

Luminary is a simulation-as-a-service, high-fidelity CFD solver that relies on cloud-based compute power and makes the CAE workflow faster and easier, thanks to its Lumi AI co-pilot. Lumi AI reduces the time that engineers need to spend on simulation set-up, so that they can instead prioritise analysing results and optimising designs. Part of this toolset includes the Lumi Mesh Adaptation, which automatically generates physics-informed meshes for fast and accurate results that learn from existing solutions. Users can also take advantage of a minimal initial mesh generation feature, which reduces the mesh size required in order to achieve a target level of accuracy. Ultimately, that means a more cost-effective, faster simulation. www.luminarycloud.com

analysing huge amounts of data to highlight the points of a simulation that most matter.

Along the way, it’s opening up simulation tools to more engineers, enabling them to perform rigorous testing earlier on in the design workflow, so that experts need only inspect the higher-level details.

Monolith’s goal is to get 100,000 engineers using its AI tools to cut their product development cycles in half by 2025. It’s a bold target for any start-up to set, but the company insists it is confident in its no-code AI approach and machine learning tools that can be used to build pipelines and interactive ‘notebooks’ for loading, exploring and transforming data for AI. Refined from hundreds of AI projects to find hidden errors, streamline test plans and build better products using users’ own historic data, Monolith’s tools allow for the testing and simulation of materials, component design simulations and manufacturability, using past data to help companies streamline their processes and focus on the most impactful areas. www.monolithai.com

With its AI4Simulation toolset, NXAI offers particle-based simulations for modelling multi-fluid systems and fluid-material interactions. AI4Simulation’s first simulation project, NeuralDEM, is an end-to-end, deep learning alternative for modifying industrial processes such as fluidised bed reactors or silos. Aimed at scaling industrial and manufacturing processes, NeuralDEM captures physics over extended time frames. With deep learning, AI4Simulation looks to scale to millions of particles, but has an eye on the future, involving scales that surpass human understanding. www.nx-ai.com

PhysicsX claims that, once set up, its tools can simulate complex systems in seconds, automatically iterating through millions of designs to optimise performance while respecting manufacturing and other constraints. Any engineer can drive all parts of the workflow, even those parts traditionally managed by dedicated experts, with AI automating the most time-consuming tasks, minimising handovers and accelerating iterations. PhysicsX LGM-Aero is a geometry and physics model that has been pre-trained on more than 25 million meshes, representing more than 10 billion vertices, as well as a corpus of tens of thousands of CFD and FEA simulations generated using Siemens Simcenter STAR-CCM+ and Nastran software. www.physicsx.ai

» They may have been slower to bring AI tools to market than some start-ups, but the big software companies have large R&D budgets, sizable workforces and masses of data at their disposal. Here, we get a glimpse from four senior executives on how they see AI and what might be in the pipeline at their companies

EVP of product development and manufacturing solutions

I believe we’ll see AI automate mundane tasks more and more across all industries, but certainly in design and manufacturing. In the next year, I think we’ll see AI surface even more in design to accelerate the creative process.

I believe we’ll see a fork in the AI road. The fascinating and novel capabilities that we’ve become accustomed to thinking about as defining AI, such as natural language prompts yielding fantastic images, essays and code, will continue to advance. At the same time, very practical, somewhat mundane AI capabilities will emerge. And these advancements will save us immense amounts of time. Soon, busy-work tasks that used to take hours or even days will be completed with a simple click of a button. And we’ll get that time back to do the creative work that humans excel at, while the computer focuses on computing. With disconnected, disparate products, organisations can only achieve incremental productivity gains. To see breakthrough productivity gains, data must flow seamlessly and be connected end to end. The productivity increases will be a welcome accelerant in and of themselves, but they’re also the fuel for building more AI-powered automation tools.

CEO of Dassault Systèmes Solidworks

With 95% of companies anticipating that AI will improve product development, it’s increasingly important for organisations to ensure their AI systems are built on a foundation grounded in reliable, high-quality data. I believe 2025 will be crucial in laying a data-driven foundation that allows AI tools to thrive.

Many organisations lack a centralised platform to collect and manage the data that is vital for training AI models, which may introduce potential risks, such as inaccuracies in automated designs, lack of transparency in decision-making and potential security vulnerabilities that require careful management. Without a clear picture of all the data at your disposal, AI models also won’t function to their highest capability. This involves know-how in addition to hard knowledge. Key learnings are critical to input into AI models to complement facts and ensure that users do not make the same mistakes twice.

AI will be the determining factor that ultimately streamlines and optimises design capabilities, but 2025 can be considered a bridging year, for ensuring that AI models have all the data in place to ensure organisations are maximising their potential.

Using natural language to ask questions and interact with software allows new users to learn and use complex software more quickly and with less need for expert guidance. At the same time, experienced users can seamlessly automate workflows and speed up tasks. However, while industrial AI chatbots represent an important first step on the path of bringing AI into professional software, they should not be mistaken for the end goal.

In the increasingly complex and digitally integrated world of modern design and manufacturing, AI is uniquely positioned to connect people and technology in a way that plays to the strengths of both, with AI moving many of the burdens of professional software away from the user. Over the course of the coming months and years, AI will not just be a novelty in industry, but a critical technology that will upend the way that products are designed, manufactured and interacted with.

Companies that fail to adopt AI will find themselves unable to keep up in a fast-paced world where competitors have continued their digital transformation maturity journey – a journey that will lead to an autonomous, intuitive and integrated design process far surpassing anything that exists today.

JON HIRSCHTICK // PTC CEO, PTC Onshape, speaking to Engineering.com

I think AI is critical. I think our users must feel like product developers did when plastics or carbon fibre came along. It’s not just a better way of doing things; it’s a whole new set of tools that make you redefine problems.

AI allows you to approach problems differently, and so the baseline is important, not only for study but also for releasing products. Just like with the first plastic product, you can’t know what it’s really like until you use it. We need to build reps, to understand how to deliver and leverage the cloud-native solutions of Onshape.

Our system captures every single action as a transaction. If you drill a hole, undo it, or modify a feature, that’s all tracked. We have more data than any other system about a user’s activity, so we don’t need to go out and collect data manually. This gives us a huge advantage in training AI applications.

In the future, users might even be able to combine data from their channels, emails, and other sources, and create a composite picture of what’s happening. We’re working on ways to give more value without relying on manual collection.

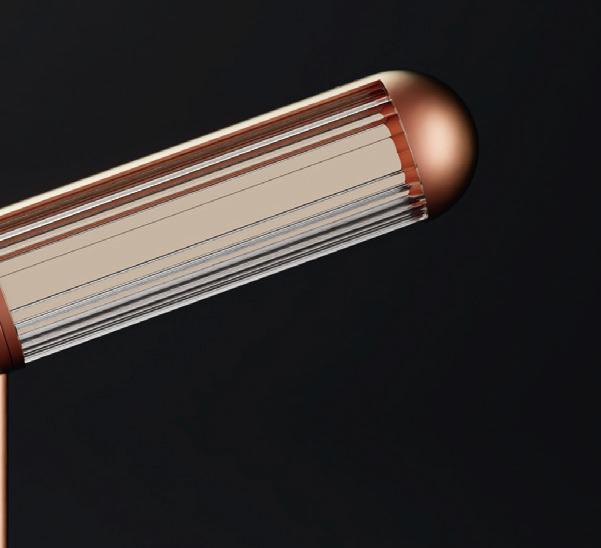

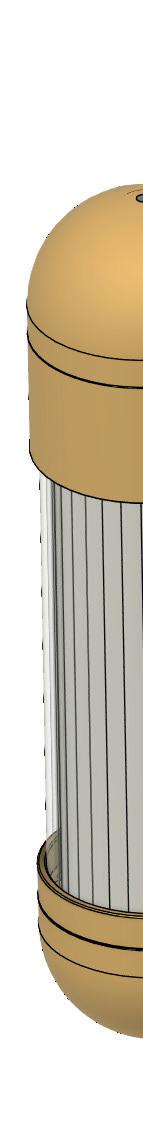

Based in Edinburgh, Scotland, R&S Robertson has delivered exceptional lighting solutions for the hospitality and leisure sectors since 1939. However, as the lighting industry diversified in distribution markets and channels, the company realised it was time to forge a new path to stay competitive and embarked on designing its very first collection.

Vivid Nine, an industrial design agency also located in Edinburgh, began working with R&S Robertson to create the new lighting designs. Both share the same core values of environmental responsibility and a sustainability-first mindset. The new collection shouldn’t just be eye-catching—it should help reduce carbon, too.

Adopting new tools to meet ambitious goals

Friends since their days in university, Jonathan Pearson and Terje Stolsmo founded Vivid Nine in 2022 after heading up design at different industrial design agencies. With Stolsmo based in Norway, Pearson in the UK, and a limited, bootstrap budget, they decided to begin using Autodesk Fusion.

“We needed something that could work collaboratively across different locations,” Pearson says. “We’d have to buy a server and network licenses with Solidworks. The whole infrastructure was a lot more complicated, whereas Fusion does everything in the cloud. We can use it wherever we want. With Solidworks, you’d also have to pay quite a bit extra to get static stress simulation built in, and that is available in Fusion as-is.”

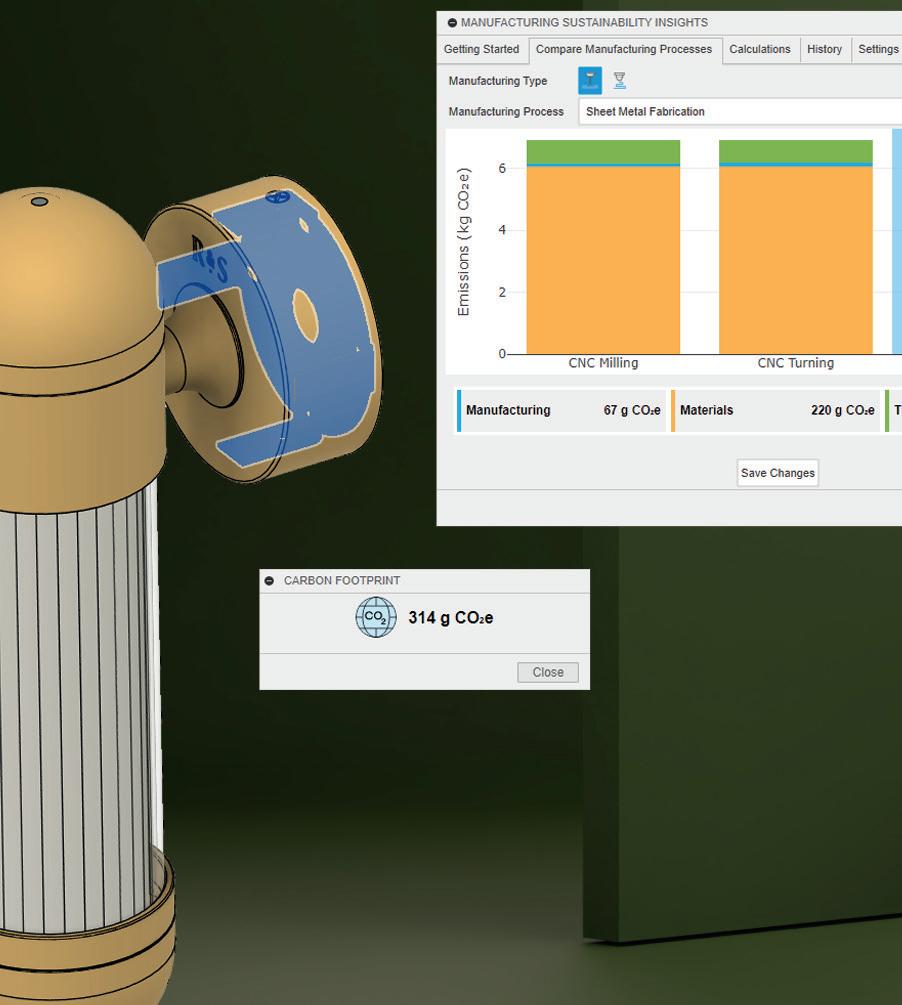

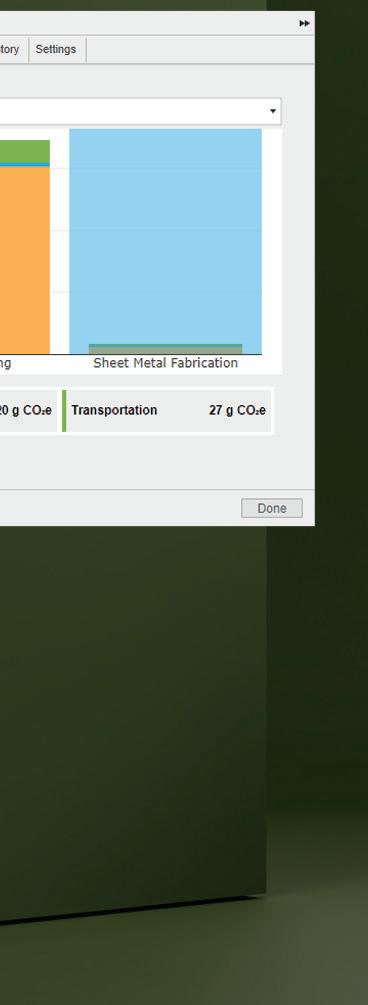

Vivid Nine was also able to leverage the sustainability tools available with Fusion, including its Manufacturing Sustainability Insights (MSI) Add-on. This powerful tool enables them to calculate the carbon footprint of their designs, optimise products for reduced carbon emissions, and enhance sustainability reports they generate for their clients, including R&S Robertson.

Bringing style and sustainability priorities together

When Vivid Nine began its work to design the new lighting collection, they first started by interviewing interior designers. “One of the things that came out as a priority was sustainability,” Pearson says. “When asked, more than 85% said a sustainable choice was important to them. Being able to understand how different lamps compare to others could influence their choice as well.”

With this crucial knowledge in hand, Vivid Nine began its work on sustainable, Art Deco-inspired lamps. From start to finish, the new Hudson collection was designed and ready for manufacturing in Portugal within eight months. But, during the process, they still grappled with the best way to capture its carbon footprint, relying on Excel sheets, siloed data, and assumptions. With MSI, they could do a full lifecycle analysis to easily showcase Hudson’s sustainability data to R&S Robertson’s customers, improving the depth of the sustainability disclosures.

Additionally, MSI enabled Vivid Nine to discover that the biggest carbon contributors were the lower and upper housings for the lamps, contributing 15kg of the total 22.6kg CO2 emissions. This hotspot highlighted where significant improvements could be made, either through a material choice change or design optimisation.
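To put that hotspot in proportion, the two figures quoted above can be turned into a share of the total. The snippet below is a back-of-the-envelope sketch, not output from MSI; the function name `hotspot_share` is made up for illustration, and only the 15kg and 22.6kg values come from the article.

```python
# Figures quoted in the article: the lower and upper lamp housings account
# for 15 kg of the lamp's 22.6 kg total CO2 emissions.
TOTAL_KG_CO2 = 22.6
HOUSINGS_KG_CO2 = 15.0


def hotspot_share(part_kg: float, total_kg: float) -> float:
    """Return a part's share of the total footprint as a percentage."""
    if total_kg <= 0:
        raise ValueError("total footprint must be positive")
    return 100.0 * part_kg / total_kg


housing_share = hotspot_share(HOUSINGS_KG_CO2, TOTAL_KG_CO2)
remainder_kg = TOTAL_KG_CO2 - HOUSINGS_KG_CO2

print(f"Housings: {housing_share:.1f}% of total")        # Housings: 66.4% of total
print(f"All other components: {remainder_kg:.1f} kg CO2")  # All other components: 7.6 kg CO2
```

On these numbers, roughly two-thirds of the footprint sits in two components, which is why a material or design change there has the most leverage.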

The team also discovered a rather unexpected insight. Using MSI, Vivid Nine was able to compare the manufacturing impacts of different locations against those of R&S Robertson’s manufacturing partner in Portugal. The insights demonstrated the substantial value of producing in Portugal, highlighting improvements in carbon emissions and overall environmental impact when compared to manufacturing in Asia. In fact, the European location is also influencing their material choices moving forward.

“We’re doing more investigation into products that are even more marketable as a sustainable material, such as cork,” says Michael Lawrence, Marketing and Product Manager, R&S Robertson. “There are a lot of cork factories locally and near our partners in Portugal, which helps us deliver even more environmentally friendly products.”

Since MSI offers unparalleled and real-time visibility into the carbon impact of various design and manufacturing variables—such as material selection, manufacturing process, and geography—directly in Fusion, it’s now become an integral part of Vivid Nine’s workflow.

“We can go through each component piece of the light and run calculations of different CO2 values to try and reduce it,” Pearson says. “We can compare different manufacturing methods or different sizes to reduce the number. You can have a load of parts. But, with MSI, it’s easy to see what stands out as a really high value from a CO2 perspective and focus on what to change and impact the overall value.”

Since adopting the tool, Vivid Nine has used the MSI Add-on for Fusion for many new successful—and sustainable—lighting products with R&S Robertson. “We have a range of 10 new designs coming out next year,” Lawrence says. “Our work with Vivid Nine and how they use technology such as Fusion and MSI really demonstrates the positive impacts that can be made both for your business and the world.”

“Sustainability is core to our values, and it’s also a known business differentiator. Vivid Nine and their use of Fusion and MSI has, quite literally, shined a new light on how we approach our designs to reduce the carbon footprint” Michael Lawrence, Marketing and Product Manager, R&S Robertson

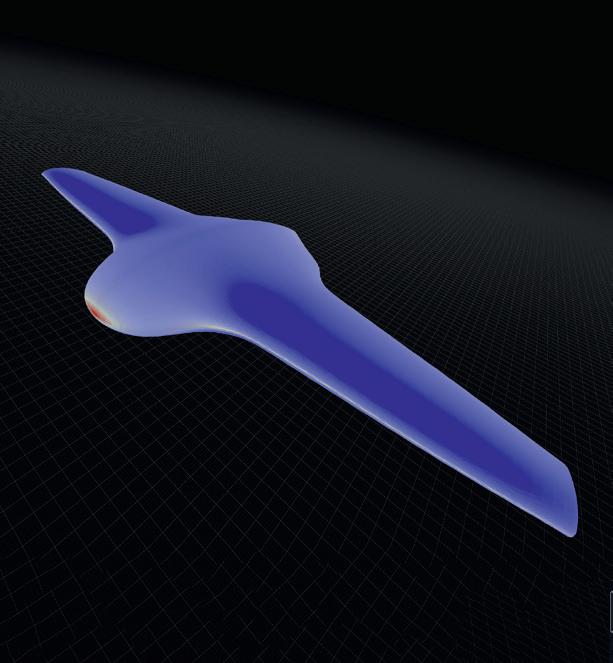

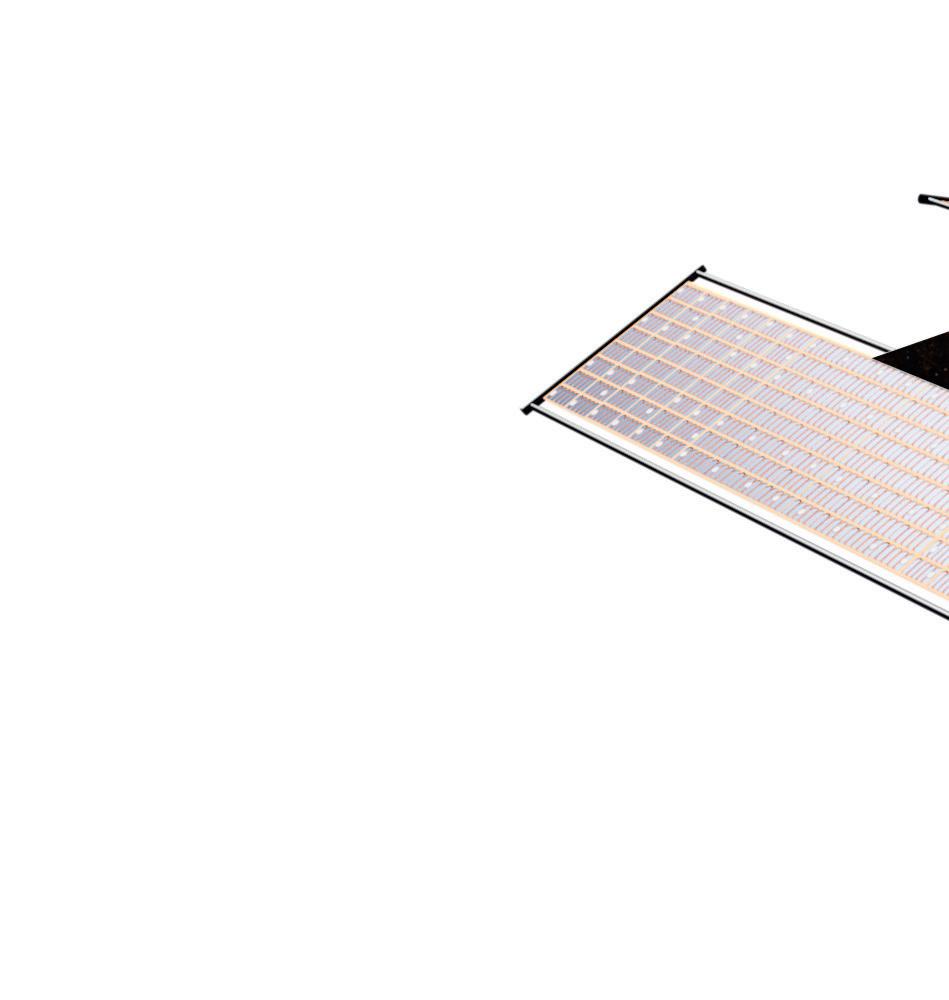

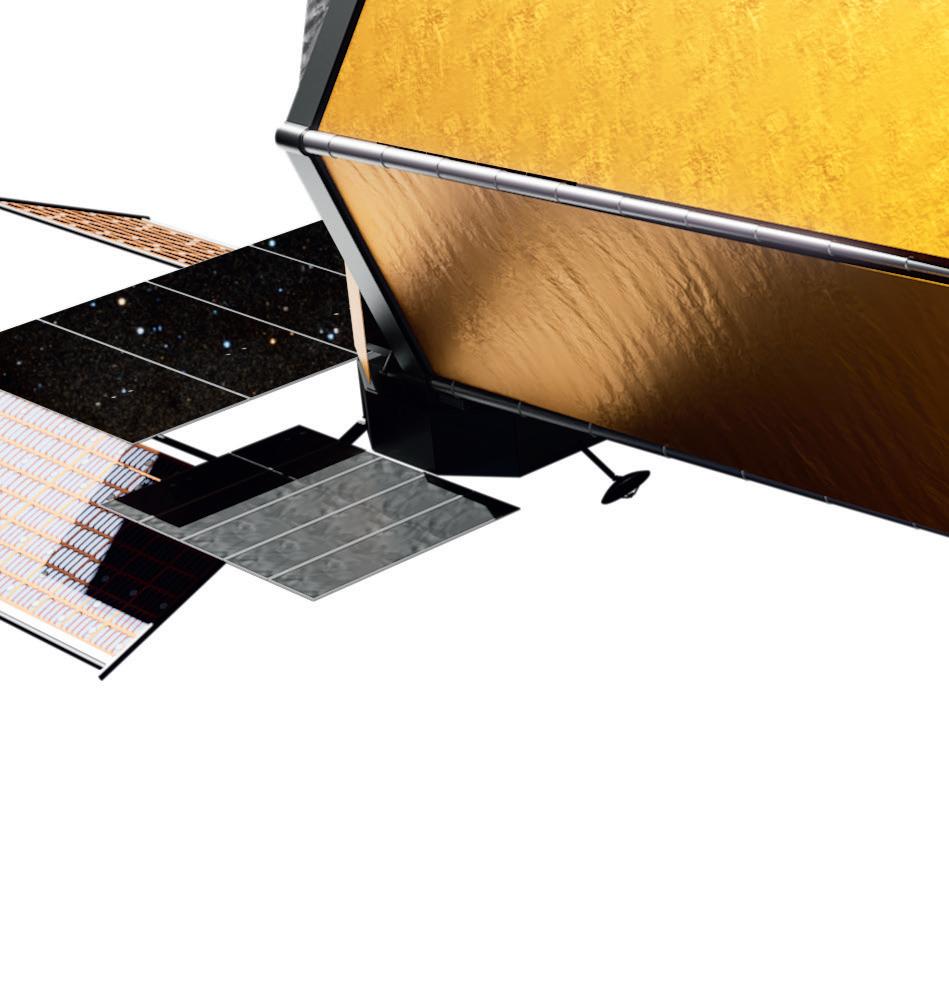

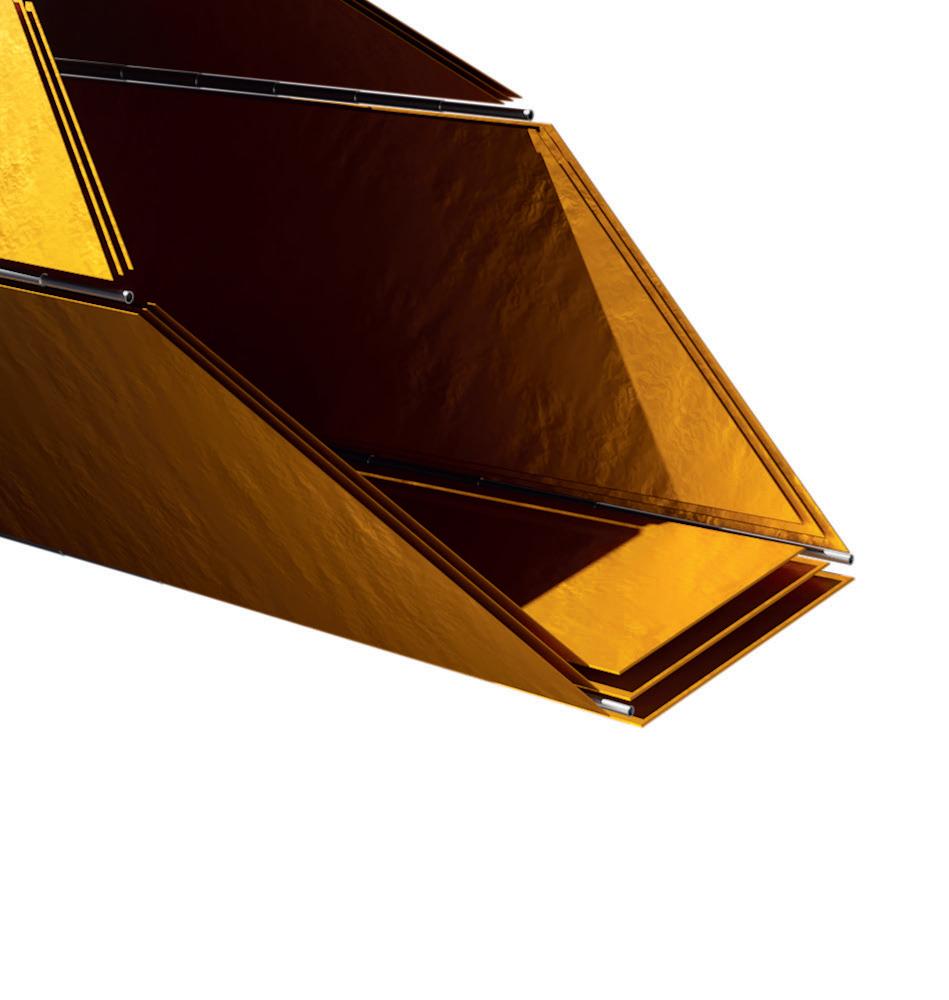

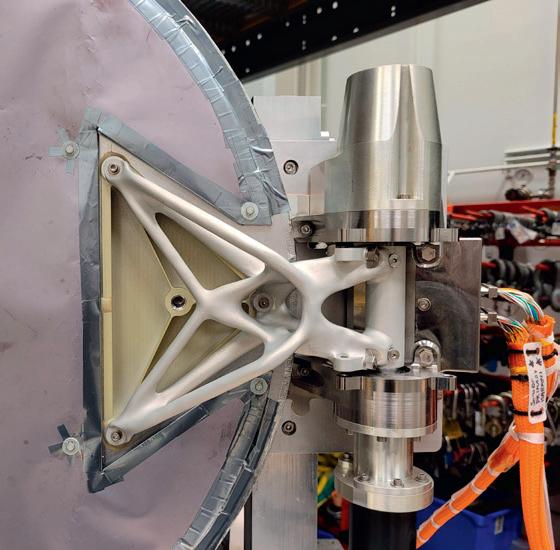

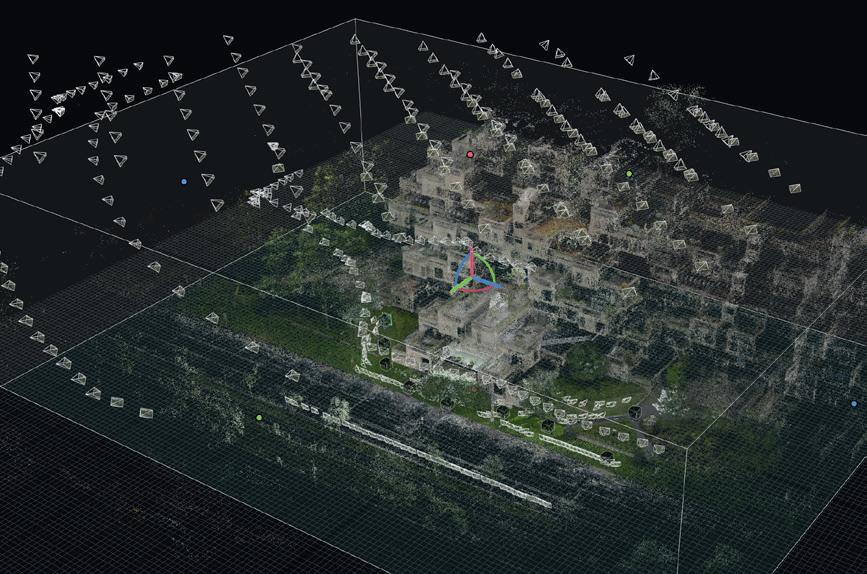

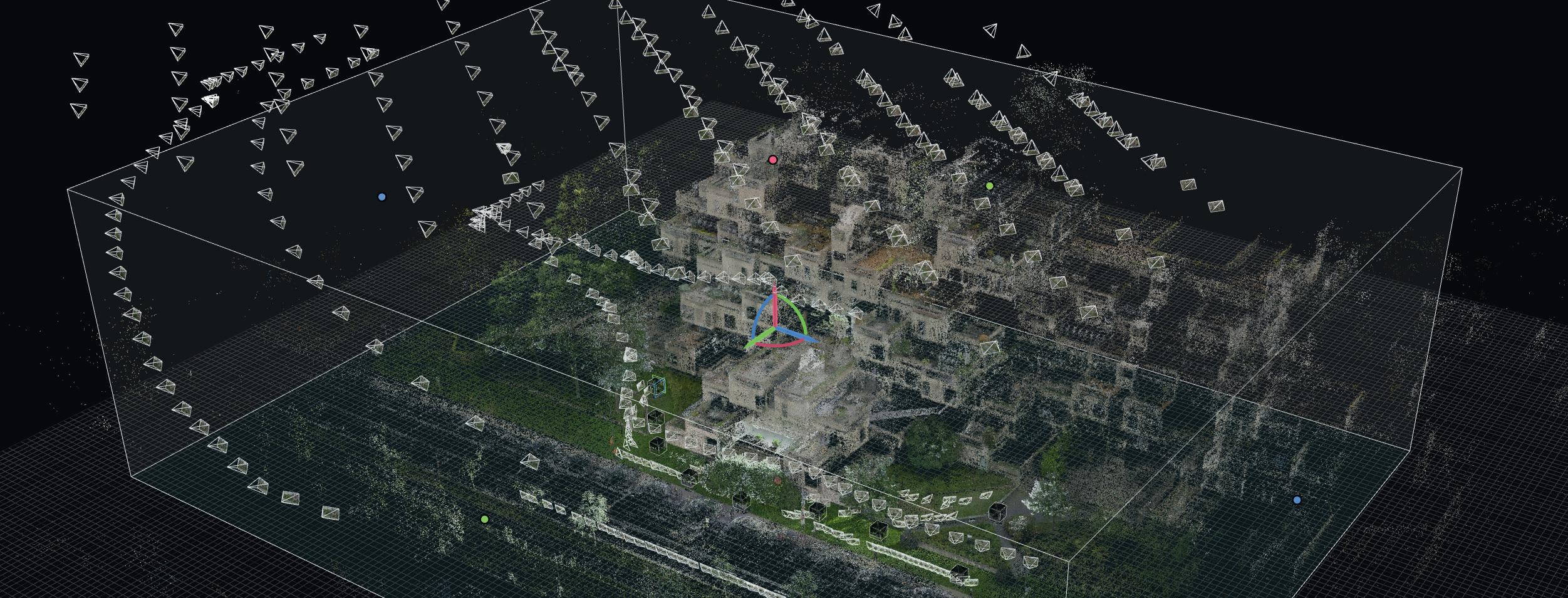

» Engineers at NASA Goddard Space Flight Center are using generative design to produce lightweight structures for space and taking their AI work on to the next frontier, in the form of text-to-structure workflows, as Stephen Holmes reports

If developing hardware is difficult, then developing hardware for space is on another level. Space agency NASA has more experience in this area than any other organisation worldwide.

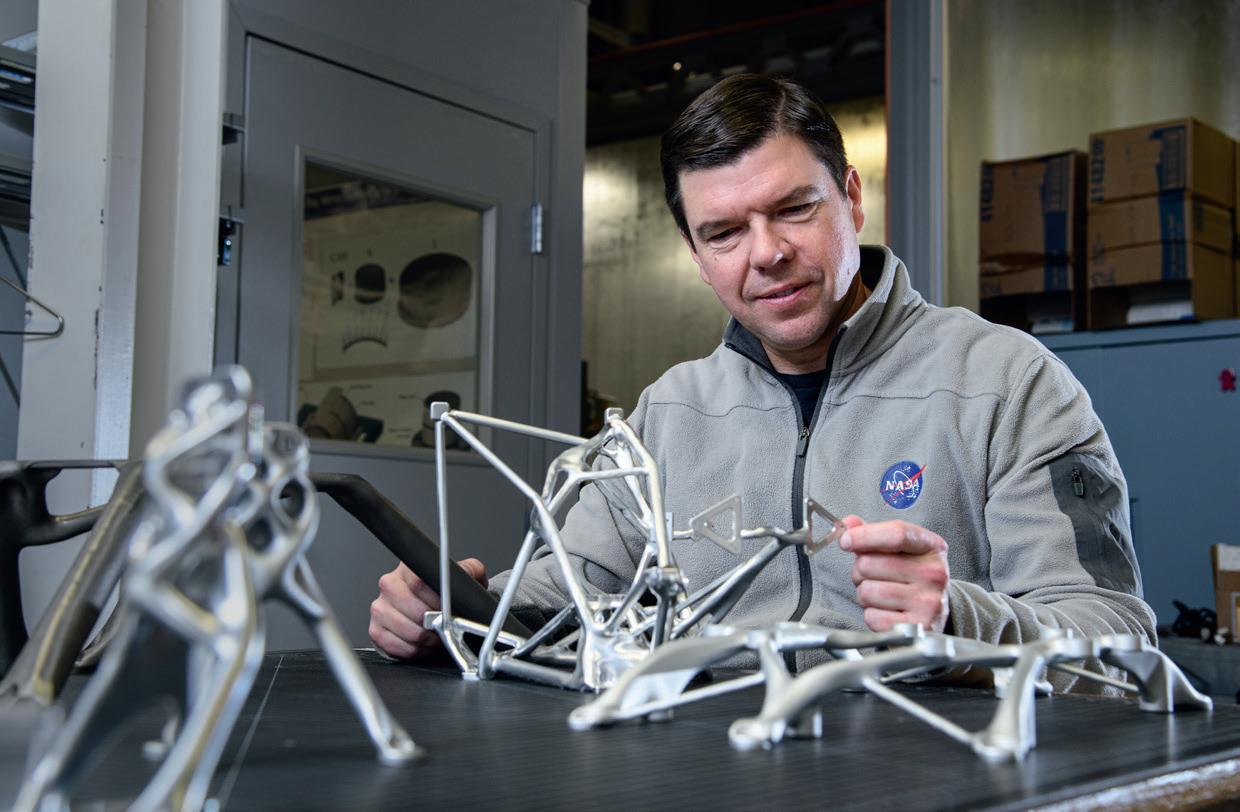

“NASA is a cutting-edge organisation and we have an interest in staying on the cutting edge,” smiles Ryan McClelland, speaking from his office at NASA Goddard Space Flight Center.

Located just outside Washington DC, Goddard is a nucleus of design, engineering and science expertise for spaceship, satellite and instrument development, making it a critical ingredient in NASA’s space exploration and scientific missions. McClelland, a research engineer, is looking to build processes in which AI is used to help design parts and structures, in order to accelerate next-stage space exploration.

Generative design, he says, brings extensive benefits to this work: speed, stronger and lighter parts, and a reduction in the human input necessary. NASA’s total mission cost estimates are in the ballpark of $1 million per kilogram. McClelland shows us an example project, built using generative design, that shaved off 3kg. “The AI generates the CAD model, generates the design, does the finite element analysis, does the manufacturing simulation – and then you just make it,” he says. “What’s powerful about this is it focuses the engineer on the higher-level task, which is really thinking deeply about what the design has to do.”

McClelland says this has changed the way his team at NASA Goddard approach design work. “We realised that most engineers take their tools and immediately start designing, sketching and so on without sometimes really fully understanding what the problem is.”

How he and the team now approach a project is by first arriving at a deep understanding of what the part needs to do and then allowing generative design software to build a part that ticks all the boxes. That enables them to get from a list of requirements to machined metal parts in under 48 hours.

Having brought generative design to the fore, the next step was to use AI to start accelerating the process, with text-to-CAD allowing AI to create a form shaped by the list of requirements run against the large datasets available.

“There’s a real tension between doing things incrementally, so that they’re easy to be absorbed into the workflow, and taking these big leaps, right? So an example of a big leap is where we’ve used text input,” says McClelland. “It goes through the text-to-structure algorithm and outputs a CAD model, a finite element model and a stress analysis report.”

But from there, the technology can go even further, he explains. “Now we’ve gone and taken that so you can just chat with the AI voice or [use] text, and it creates a JSON file, a standard input file, and then that feeds in. So that’s radically different, right? It skips all the CAD and FEA clicks, and jumps right from, ‘I’m discussing what it is that I want with this agent’, to actually having what I want.”

McClelland explains that while these may indeed be big leaps, they are also laying the groundwork for incremental evolution in people’s workflows.


“In the text-to-structure workflow that I talk about, the underlying technology of generative design-evolved structures is topology optimisation. So, there’s a topology optimisation engine in there that’s like a function call. And ideally, you can just pop that out, right? The Text-to-Structure is somewhat agnostic to what that is. So I think what you’ll see is maybe user interfaces start to fall away.”
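The shape of the workflow McClelland describes (a conversation produces a JSON input file, which is handed to an optimisation engine that behaves “like a function call”) can be sketched in a few lines. This is a hedged illustration only, not NASA’s code: the JSON field names, the `Engine` type, `placeholder_engine` and `text_to_structure` are all hypothetical, and the placeholder performs no real topology optimisation.

```python
import json
from typing import Callable, Dict

# Hypothetical requirements file of the kind a chat agent might emit.
# Every field name here is invented for illustration.
REQUIREMENTS_JSON = """
{
  "part_name": "bracket",
  "material": "aluminium",
  "max_mass_kg": 1.5,
  "load_cases": [{"name": "launch", "force_n": 2000.0}]
}
"""

# An "engine" is any callable that maps a requirements dict to a result dict,
# so one engine can be swapped for another without touching the pipeline.
Engine = Callable[[Dict], Dict]


def placeholder_engine(requirements: Dict) -> Dict:
    """Stand-in for a topology optimisation engine; it only echoes key inputs."""
    peak_force = max(case["force_n"] for case in requirements["load_cases"])
    return {
        "part_name": requirements["part_name"],
        "design_load_n": peak_force,
        "mass_budget_kg": requirements["max_mass_kg"],
    }


def text_to_structure(requirements_json: str, engine: Engine) -> Dict:
    """Parse the JSON input and hand it to whichever engine is plugged in."""
    requirements = json.loads(requirements_json)
    return engine(requirements)


result = text_to_structure(REQUIREMENTS_JSON, placeholder_engine)
print(result)
```

Because the pipeline depends only on the `Engine` signature, replacing `placeholder_engine` with a different solver would not change the calling code, which is one way to read the “agnostic” point in the quote.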

He believes that, while today the UIs are a differentiator between CAD software packages, the future will see engineers interface with these systems in a more natural manner. “[Like] the way that you interface with teams of people, or with people that you want to work with you. So, much more natural interaction, discussing things, sketching things, iterating in a collaborative fashion.”

“We’re basically at the next big paradigm,” he says. “I went and talked to the [NASA] engineer that took us from drawing boards to AutoCAD, and then I talked to the engineer that took us from AutoCAD to what we primarily use now, which is PTC Creo, what used to be Pro/Engineer. And now we’re ready, I think, for another big step.”

He explains that the majority of the software designers and engineers use today was developed when engineers first had powerful computers sitting on their desk, but before the existence of GPUs, the cloud, and in most cases, the internet.

“I think a lot of those legacy programs have really just taken baby steps to embracing those new compute resources. That next big step is with AI. It really goes along with cloud and GPU and taking advantage of all the amazing things that IT development has to offer the field of engineering.”

During the Covid-19 pandemic, McClelland used the slowdown to take a more in-depth look at what AI might deliver for mechanical engineering. Having been interested in the topic for decades, he began further research into its capabilities. The day ChatGPT went public, he says, was a major milestone in his thinking.

“If you asked my wife, I was wandering around in a daze. I couldn’t believe that we were living in this timeline. The ChatGPT moment brought a lot of new awareness to my work,” he recalls.

Trying out new AI tools at NASA begins with a sprint proposal, a small, 100-hour proposal that can be quickly attained. Having done “a bunch of market research”, McClelland got the green light to try out different AI tools. Once some promise is identified, the next stage focuses on finding applications.

In a facility packed with scientists, engineers and researchers, McClelland began talking to people who might have a need for these AI tools. “I found real applications to apply these to, and that’s how you know if an idea really holds water, when you try it with real needs,” he says.

“We’ve had over 60 applications now, and as you have more applications, you start to find all the edge cases. What are all the complicated factors that you need to take into account that maybe you didn’t think about at first?” To this point, this approach has worked well, resulting in the Evolved Structures Guide.

With text-to-structure working well, the next stage is to make it more generalisable, says McClelland, so as to cover more of the edge cases. “Which means making it more complex over time, by using real applications on it.”


Getting others to adopt this method is challenging, much like getting people to switch to a new CAD software, he says, but can be achieved with training and by explaining the promised benefits.

“You’ve got to demonstrate it. You’ve got to build things. You’ve got to test. That’s what matures the technology, so that it comes into broad usage,” he says.

McClelland says that the full power of AI is, as yet, “under hyped”, and that a lot of people are not factoring its potential into their thinking.

“Fortunately, we have an AI that we can access internally. We have cutting-edge AI tools that we can access with our work data. But I was at a conference recently and I asked people in the audience how many of them can use one of these foundation large language models with their work data with official approval. And, you know, very few people raised their hands.”

The challenge is lining up the right applications that it can be tested on – the hard bits, he says. “That’s where you find the challenges. Just like how agile software development approaches are used, so that you can get something out there, you have to see what the use cases are, and then iterate, release, iterate.”

A flagship future mission is the Habitable Worlds Observatory, the first telescope designed specifically to search for signs of life on planets orbiting other stars. McClelland hopes that aspects of its design can be accelerated using these AI technologies.

“It’s huge – the size of a three-storey house. You’re going to have these big truss structures, so generating those automatically would be very high-value.”

With no room for error, using AI to generate such structures would prove an incredible benchmark for McClelland and the engineers at Goddard. What it could lead to – and where it might take us next – is entirely out of this world. www.nasa.gov/goddard

● 2 This generatively designed

● 3 Ryan McClelland at NASA Goddard Space Flight Center, inspecting parts ready for use in space missions

● 4 This generatively designed hatch closure mechanism captures and retains orbiting capsules of sample materials collected by rovers on Mars’ surface

» How do modern design courses tackle the subject of artificial intelligence? Stephen Holmes speaks to leading educators about how they see the future of AI and the role that today’s students will play in taking AI tools and skills into workplaces that may still be coming to terms with the technology

When a story hits the headlines about the use of artificial intelligence (AI) in education, the focus tends to be some egregious example of plagiarism, fakery or misinformation.

In product development courses, however, AI has a more constructive role to play. And that’s leading to some vital conversations, not only around the possibilities that this technology brings to design work, but also the extent to which future employers will value job candidates with a sure grasp of how to apply it.

At Wayne State University in Detroit, Michigan, associate professor of teaching Claas Kuhnen is a vocal advocate for AI software, who believes that exposing design students to this technology is essential.

“AI should be seen as a tool to modernise labour-intensive workflows, not as a shortcut to replace effort,” he says. “In the future, designers won’t be replaced by AI, but those skilled in leveraging it will have a competitive edge.”

It’s a view echoed by Dr Robert Phillips, a senior tutor within the School of Design at London’s Royal College of Art (RCA). “I think what we should be doing is asking what jobs we want AI to take, so that we can retrain people to do better jobs,” he says. “I think we need to elevate how we’re talking about AI to focus on what we actually need it to do, and what – more importantly – we’re going to feed it.”

Phillips sees this as a topic on which the RCA has always focused. Rather than simply teaching design, he says, the emphasis at the college is on redefining the purpose of design and the directions in which it’s heading.

“I think ethics are key,” he adds. “Let’s take responsibility for AI and be part of the journey, rather than let it happen to us.”

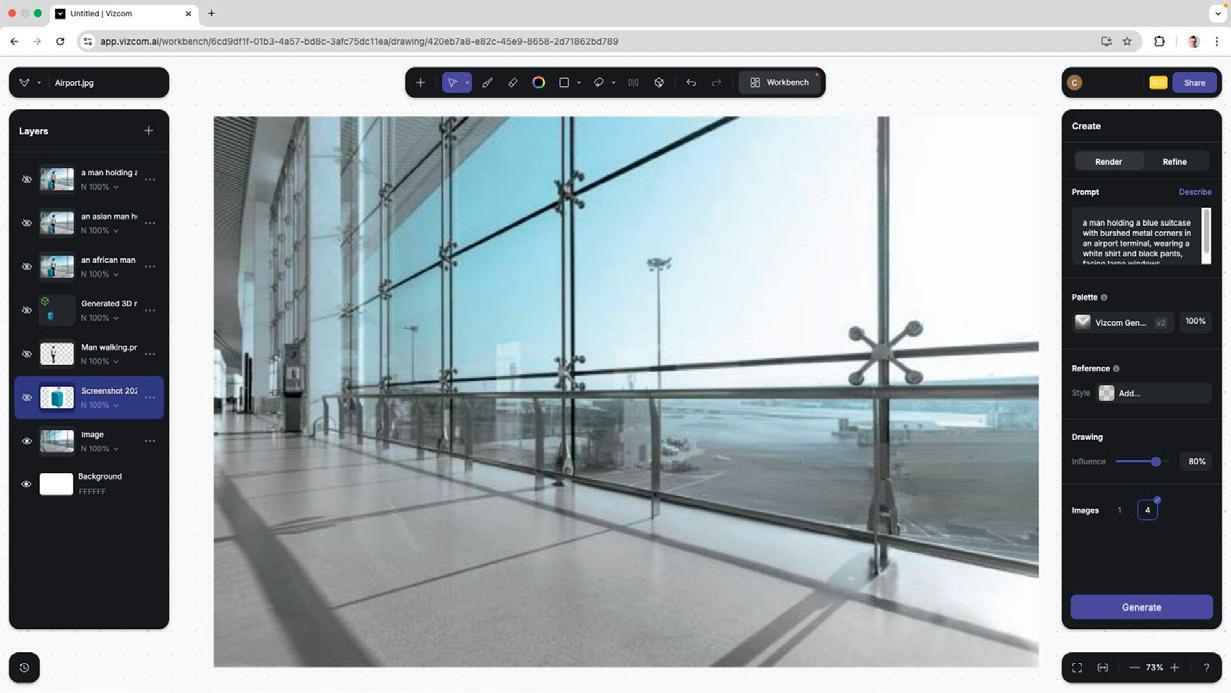

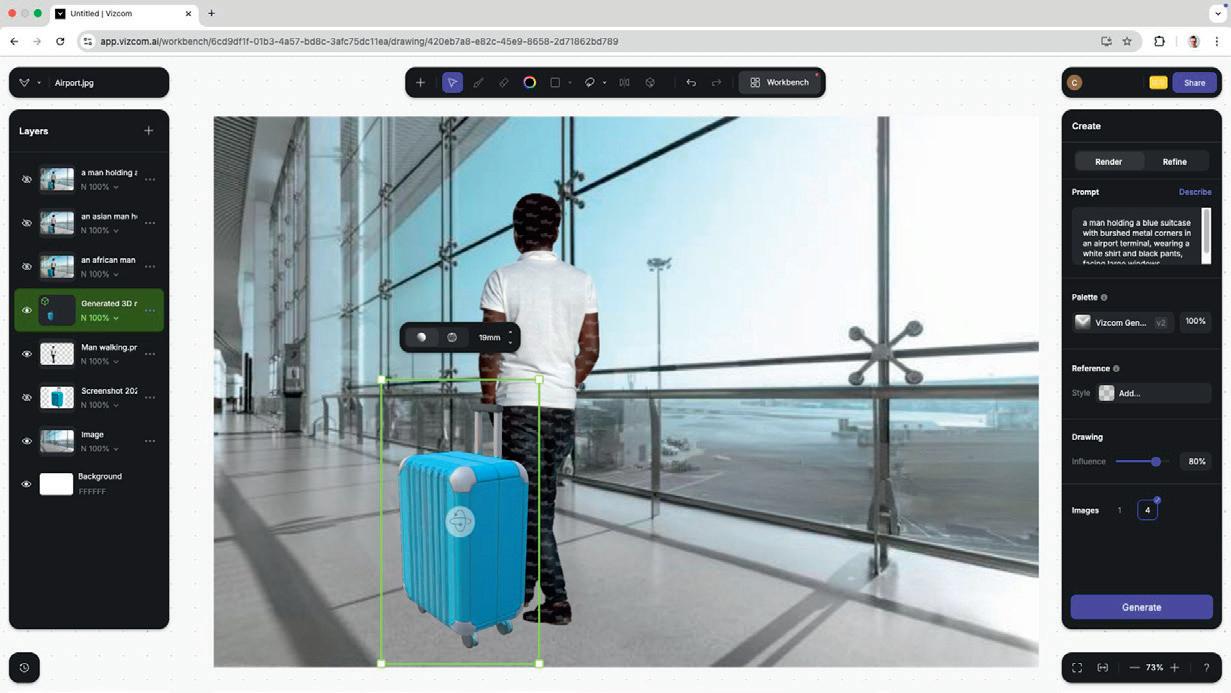

● 1 This rendering for a suitcase design was built by Claas Kuhnen to demonstrate the AI power of Vizcom to his students


● 3 First, a suitcase design is imported (a 2D sketch or screengrab of a 3D CAD model), along with a stock image of an airport

● 4 Second, an image of a human is added and composed alongside the suitcase

● 5 Finally, using prompts, an initial generated image is edited to fit requirements

Much of the design process centres on developing concepts and presenting them visually, says Claas Kuhnen, and this is an area where AI-generated images could be revolutionary.

“For example, when creating a new consumer product, we conduct research and begin ideation, exploring form, materials, and colours. Yet, brainstorming fatigue or reliance on familiar ideas can limit creativity,” he points out.

Instead, AI tools can offer advantages at multiple stages, not just by generating forms, but when looking to uncover details to inform a design project during the research phase, says Paul Russell, a teaching fellow in design at Loughborough University in the UK who regularly works with students to develop their CAD and visualisation skills.

“In terms of efficiencies, you could spend hours or days researching on Google and get back the same information that you could get back in a few minutes from ChatGPT – and it’s quicker to do that and verify it than it is to do it manually,” he says.

AI’s generative capabilities can also offer up ‘happy accidents’, says Kuhnen. Much like Newton’s falling apple, a generated image might suggest materials or shapes that designers might otherwise not consider.

Naturally, this could lead to a debate over how much of a particular design is the student’s own work. It’s true that many of the products that surround us all were invented ‘by accident’, because inspiration can strike in random and mysterious ways.

At the same time, being able to judge the quality and originality of a job candidate’s design work remains a big concern for employers.

“We’ve always had this issue,” says Phillips at the RCA, but he says it’s like comparing a product made by hand with one made using a CNC machine.

“They’re different things!” he says. In the latter case, somebody has still had to create a file to be able to complete the CNC machining and make it possible to replicate, he says. “So someone has still taken the lead in the creative process.”

He believes that referencing will be key. In effect, a design project should reference its AI sources, just as another project might provide credits relating to the photography it uses. Design agencies often employ professional photographers and credit them for their work within a project. The same should be true with AI, he says. “Everyone needs to be really open about it, and I think we need to embrace it.”

Getting everybody to embrace AI in the workplace may be a sticking point, however. Educators acknowledge a growing divide, based on age, in terms of how AI tools are viewed. “It’s clear. Anyone over about 50, maybe late 40s, is apprehensive, while all the young people are excited and interested in it,” says Russell. “When CAD came about, there were loads of old people saying this is BS, it isn’t proper drafting, you know? And then young people picked it up and loved it. It was the same with rendering.”

He sees a clear pattern emerging, however. Put simply, the better students make the most of what AI tools can do, much like with any other technology. “I don’t think this is just true in design. I think I’ve seen it all around, with people using AI for coding, videography and more,” he says. “If you’re good, AI makes you better. If you don’t put in the effort, and you don’t have some of the skills, then it doesn’t enhance you as much, which I think is kind of scary because we want an equal playing field. But these tools actually exaggerate the difference between students.”

If students refuse to engage or put effort into other aspects of the course, he continues, there’s nothing to suggest that the results they produce using AI will sweep them to the top of the class. “With AI, you have to learn through doing. If they don’t put the time in, they don’t get quality results out.”

For him, it’s about giving students the baseline skills, knowledge and understanding, and then letting them run with it. They typically go above and beyond, he adds. In fact, what he teaches about AI to next year’s students will almost certainly be based on what he learns from the current year’s students.

At Wayne State University, Kuhnen agrees that AI tools have their limitations, and that ‘quality in, quality out’ is a steadfast guide. With visualisation, to get usable results, users must feed AI quality hand drawings, understand light and shadow dynamics, and compose images with correct perspective. “These foundational skills remain essential to create believable concept renderings,” he says. “Ultimately, AI doesn’t replace creativity; it complements it. By automating repetitive tasks, it frees designers to focus on what truly matters: innovation and artistry.”

At the Design and Technology Association, director of education Ryan Ball says secondary school educators have often had to fight to get the curriculum updated to reflect new technologies. AI will be no different, he predicts.

“If you’re good, AI makes you better. If you don’t put in the effort, or don’t have some of the skills, then AI just doesn’t enhance you as much” Paul Russell, Loughborough University

For example, until recently, limitations on how much CAD and CAM could be used on GCSE projects in England and Wales forced students to ‘throw in’ handcrafted pieces and hand-drawn parts alongside their parametric CAD models and 3D-printed prototypes, just to satisfy the requirement for ‘traditional’ practices, he says. “How can AI be any different?”

Each use of AI – be it text-to-image, image-to-image, generative design, AI-powered FEA, image enhancement and so on – just involves tools, he says. “The skill lies in deciding when to use them.”

AI could also make the subject of Design & Technology more accessible to a wider range of students, he adds: “There are creative students who cannot draw well or get their ideas out their heads to share with others. AI offers a potential lifeline.”

One examination board suggests that, in order to attain the highest marks when generating designs, students must demonstrate “imaginative use of different design strategies for different purposes and as part of a fully integrated approach to designing.”

As Ball points out, that sounds like a “perfect chance” to put AI to work.

Whichever way you look at it, the adoption of AI could well prove a turning point for education, and provide course content with a real shake-up.

“Do we still need to teach modules around 3-axis machining when [because of AI tools], it’s almost at the point of ‘One click, done’?” asks Russell at Loughborough University.

“I think at some point, we’ll have to trust these AI technologies, right? It’s like autonomous vehicles. The common point is that it’s inevitable now.”

On the whole, most view the use of AI by students as a positive, although some issues raised include a deepening of the divide between the haves and have-nots.

And, without any overarching curricula or industry guidance for how AI is taught, encouraged and explained, it could potentially widen the gap between the best university courses and the rest of the field.

Ball suggests that the divide looks even greater at a secondary school level, where a drastic lack of IT infrastructure, especially in D&T, and even in some cases the banning of mobile phones, may indeed widen the gap further between some centres.

If AI is set to automate repetitive work and free designers to focus on innovation and artistry, then those without access to AI might struggle to reach the same heights as those that have it at their disposal.

“Ultimately, AI doesn’t replace creativity; it complements it,” concludes Kuhnen. “In the end, 3D CAD models and accurate product renderings must be crafted by hand, as AI lacks the creative precision required to meet the high standards we demand.”

While the tools used by today’s students looking to join tomorrow’s industry are advancing at pace, the fundamentals are still necessary to get the best from today’s AI software.

» Vital Auto is rewriting the playbook on evidential design loops in automotive design and innovation, using AI tools that were built in-house at the Coventry-based company

For Shay Moradi, head of technology at industrial design studio Vital Auto, AI is “a suitcase term”. It is commonly used to refer to many slightly different things, he explains: automation, machine learning, generative AI, and so on.

“We’ve not even hit peak fatigue on the term and it gets bandied about so much that it tarnishes some of the shine of doing genuinely useful things,” he says, speaking to DEVELOP3D as he travels back from an event in France to Vital’s headquarters in Coventry, UK.