Building Information Modelling (BIM) technology for Architecture, Engineering and Construction

Leading AEC software developers share their BIM 2.0 observations and projections

editorial

MANAGING EDITOR

GREG CORKE greg@x3dmedia.com

CONSULTING EDITOR

MARTYN DAY martyn@x3dmedia.com

CONSULTING EDITOR

STEPHEN HOLMES stephen@x3dmedia.com

advertising

GROUP MEDIA DIRECTOR

TONY BAKSH tony@x3dmedia.com

ADVERTISING MANAGER

STEVE KING steve@x3dmedia.com

U.S. SALES & MARKETING DIRECTOR

DENISE GREAVES denise@x3dmedia.com

SUBSCRIPTIONS MANAGER

ALAN CLEVELAND alan@x3dmedia.com

accounts

CHARLOTTE TAIBI charlotte@x3dmedia.com

FINANCIAL CONTROLLER SAMANTHA TODESCATO-RUTLAND sam@chalfen.com

AEC Magazine is available FREE to qualifying individuals. To ensure you receive your regular copy please register online at www.aecmag.com

about

AEC Magazine is published bi-monthly by X3DMedia Ltd 19 Leyden Street London, E1 7LE UK

T. +44 (0)20 3355 7310

F. +44 (0)20 3355 7319

© 2025 X3DMedia Ltd

All rights reserved. Reproduction in whole or part without prior permission from the publisher is prohibited. All trademarks acknowledged. Opinions expressed in articles are those of the author and not of X3DMedia. X3DMedia cannot accept responsibility for errors in

Register your details to ensure you get a regular copy register.aecmag.com

Solibri acquires Xinaps, Xyicon extends reach of Revit, Spacio gets AI-powered façade design, and Snaptrude boosts Archicad interoperability, plus lots more

Nvidia DLSS 4.0 leans on AI for real-time 3D performance, AI Assistant for Archicad enters preview, Tektome liberates ‘dark data’, plus lots more

We ask Greg Schleusner, director of design technology at HOK, for his thoughts on the AI opportunity

As we move into 2025, we ask several leading AEC software developers to share their observations and projections for BIM 2.0 and beyond

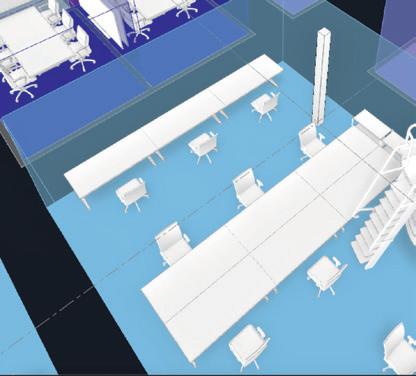

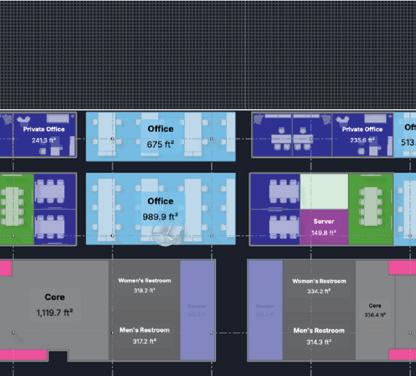

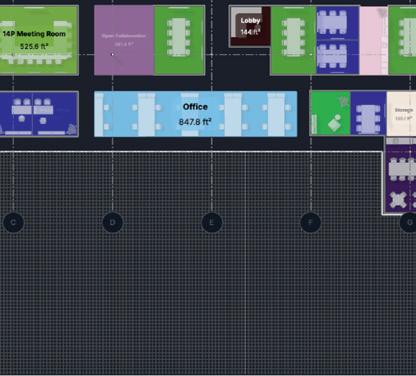

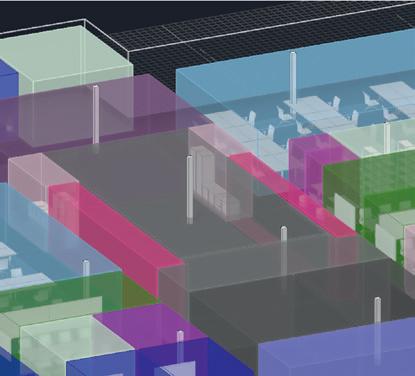

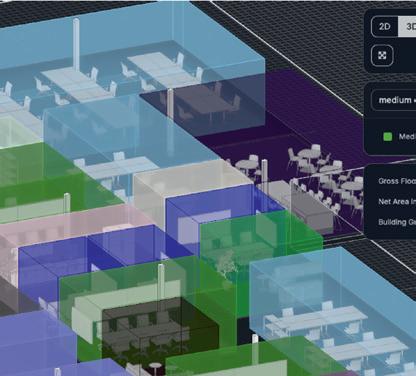

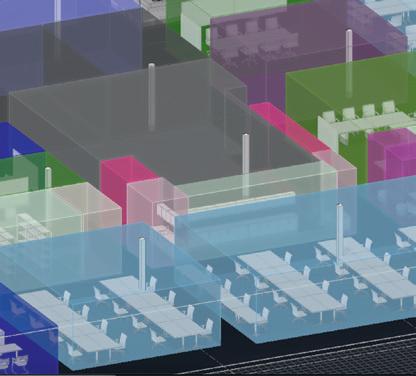

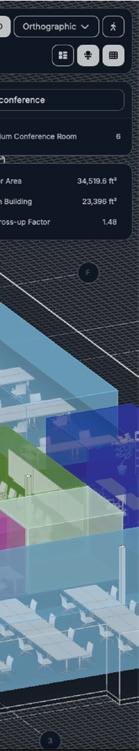

Hypar co-founder Ian Keough gives us the inside track as his cloud-based design tool puts the spotlight on space planning

On 11-12 June, our annual NXT BLD and NXT DEV conferences will bring together the AEC industry to help drive next-generation workflows and tools

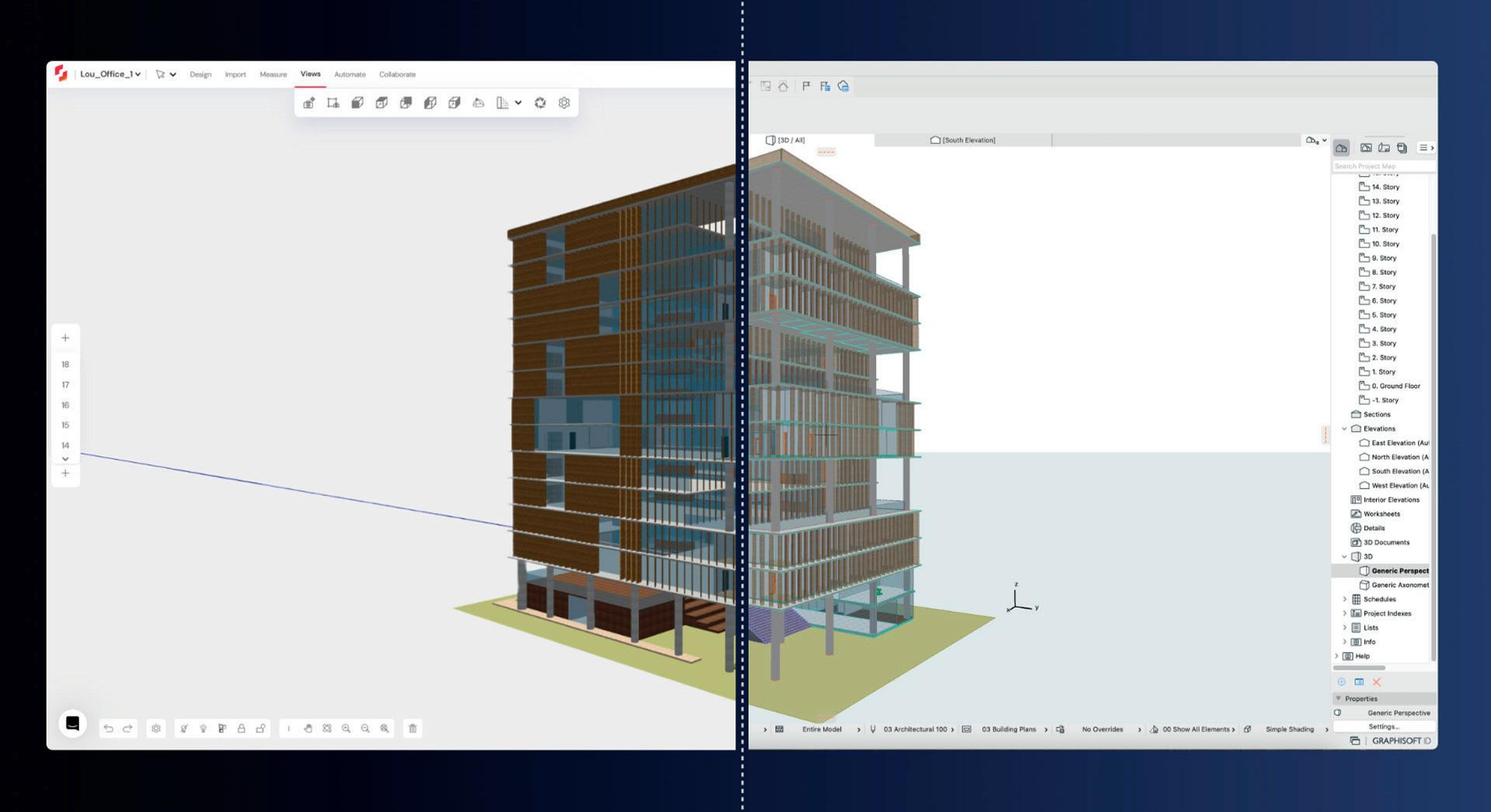

Snaptrude is working on enhanced interoperability between its web-based BIM authoring tool and Nemetschek Group BIM solutions, including Graphisoft Archicad, Allplan, and Vectorworks.

The aim is to enable architects to more easily transition between a range of BIM tools, harnessing the strengths of each tool at different project stages.

Interoperability with Nemetschek Group software will start with the ability to export Snaptrude projects into Archicad, ‘preserving all the parametric properties’ of BIM elements. Project teams on Snaptrude have shared workspaces that also include a centrally managed library of standard doors, windows, and staircases. Upon import, Snaptrude objects will be automatically converted into editable families in Archicad.

In the future, the integration will extend to a bi-directional link between Snaptrude and Archicad for synchronisation of model data and changes. According to Snaptrude, this will further enhance collaboration and efficiency in the design process, as users will be able to switch back and forth between the programs.

Snaptrude already offers bi-directional support for Autodesk Revit, a workflow that we explore in this AEC Magazine article - www.tinyurl.com/Snap-Revit

■ www.snaptrude.com

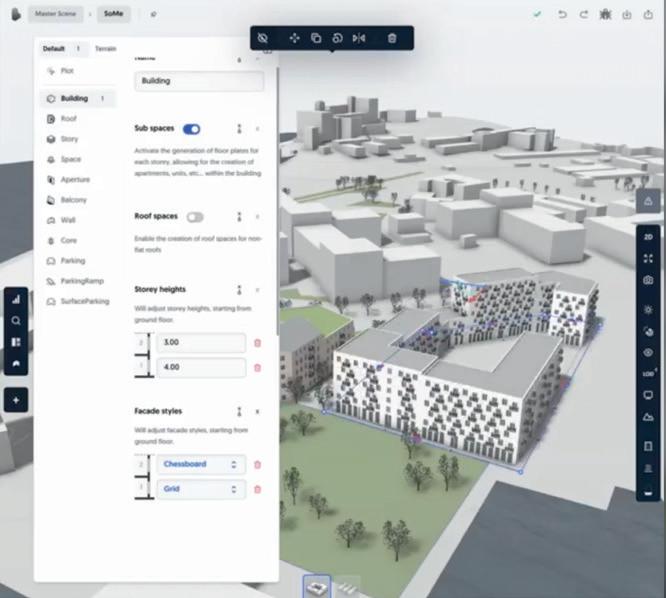

Spacio has added an AI-powered façade generation feature to its building design software.

The new feature is designed to significantly speed up early-stage façade design, offering ‘curated façade presets’ that ‘intelligently adapt’ to a building’s geometry. It also integrates with both manual and generative design workflows, and provides ‘instant visualisation’ of different architectural expressions.

“We’re not replacing creativity – we’re amplifying it,” said Spacio co-founder André Agi, whose software combines sketching with ‘instant 3D models’, and real-time analysis. “These tools let architects focus on what truly matters: creating exceptional spaces.”

Spacio is also launching a freemium version of its software and is building a community on Discord.

■ www.spacio.ai

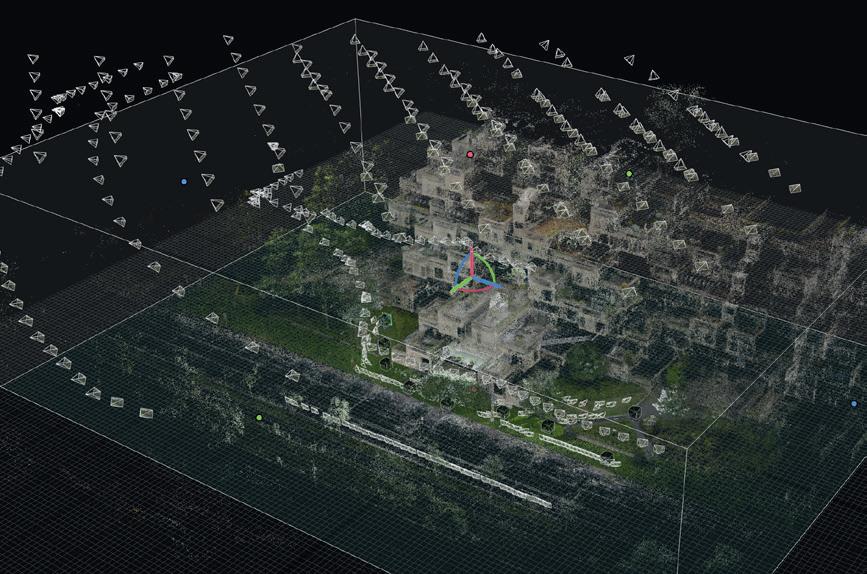

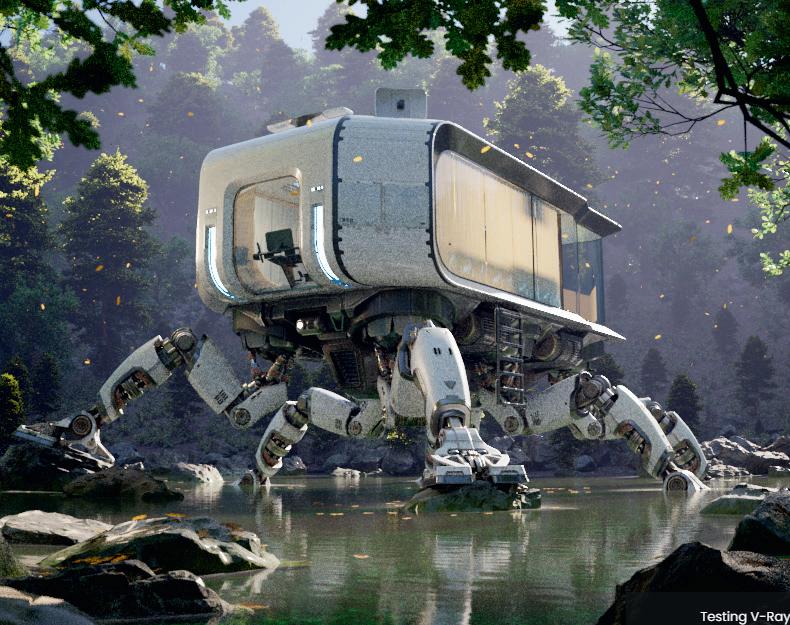

V-Ray 7 for SketchUp and V-Ray 7 for Rhino, the latest releases of the photorealistic rendering plug-ins, include support for 3D Gaussian Splats, a technology that enables the rapid creation of complex 3D environments from photos or video.

With native support in V-Ray, SketchUp and Rhino users can now place buildings in context or render rich, detailed environments that can be reflected and accept shadows.

V-Ray 7 also includes new features for creating interactive virtual tours — immersive, panoramic experiences that can be customised with floor plans, personalised hotspots and other contextual details that will highlight a space’s attributes.

There have also been several improvements to V-Ray GPU rendering, including support for caustics and the ability to use system RAM for textures to free up GPU memory.

■ www.chaos.com

Elecosoft, a specialist in building lifecycle software, has announced its strategic merger with Pemac, a provider of cloud-based computerised maintenance management software (CMMS).

According to Elecosoft, the move will enhance its ability to deliver asset management solutions across manufacturing, life sciences, and healthcare, with a focus on compliance and operational efficiency. With offices in Cork and Dublin, Pemac will also expand Elecosoft’s presence in the Irish market.

■ www.elecosoft.com

Bentley Systems has appointed James Lee as chief operating officer (COO). Lee transitions from Google, where he held the position of general manager overseeing startups and artificial intelligence (AI) operations at Google Cloud ■ www.bentley.com

German tech investor Maguar Capital has made a majority investment in hsbcad, a specialist in offsite timber construction software. Hilde Sevens, who previously held roles at Nemetschek SCIA, Autodesk and Siemens PLM, will take over as CEO ■ www.hsbcad.com

Construction tech startup Automated Architecture (AUAR) has been awarded a Smart Grant of £341K by Innovate UK. The grant will help AUAR scale up its building system and micro-factory platform to manufacture mid-rise timber housing of up to six storeys ■ www.auar.io

Frilo 2025, the latest release of the structural analysis software, includes a direct interface to Allplan BIM, a new PLUS program SLS+ for the design of splice connections, and the option of designing one and two-sided transverse joints to timber beams ■ www.frilo.eu/en

Hexagon’s Asset Lifecycle Intelligence (ALI) division has acquired CAD Service, a developer of advanced visualisation tools used to integrate CAD drawings, BIM models, and reality capture data into HxGN EAM, Hexagon’s asset management solution ■ www.hexagon.com

AEC and CAD solution service provider Mervisoft GmbH and software development company AMC Bridge have formed a strategic partnership to expand the range of development services offered to AEC firms in the DACH region (Germany, Austria, and Switzerland) ■ mervisoft.de ■ amcbridge.com

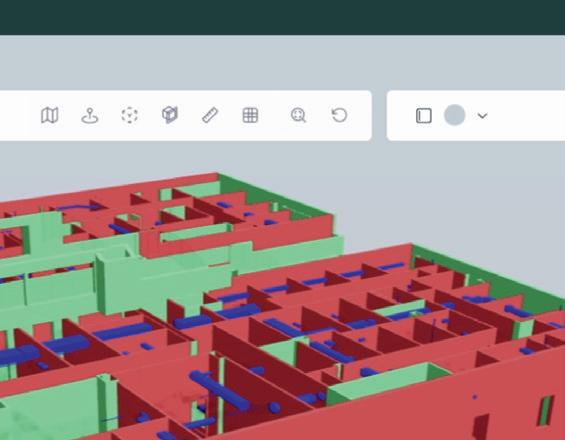

Solibri, part of the Nemetschek Group, has acquired Xinaps, a specialist in BIM model QA software. Solibri has also announced Solibri CheckPoint, a new cloud-based model checking solution that connects directly to Autodesk Construction Cloud (ACC), BIM 360, and Procore.

Solibri CheckPoint is designed to help AEC firms ensure that their BIM projects, consisting of native Revit and IFC files, comply with ‘robust standards’.

The software includes customisable rules for model checking. Users can run clash detection, data validation, and free space checks to find issues early in the design phase, then assign and track issues directly within the platform.

For data validation, ‘Property check’ allows users to check the quality of model data, verifying that model elements meet required data standards for accuracy, consistency, and compliance.

Free space checks allow users to check for clearance around critical elements to prevent obstructions and maintain functional, safe spaces, ensuring that target elements maintain the required spaces, dimensions, and alignments.

■ www.solibri.com/checkpoint

Xyicon has updated the Revit add-in for its information modelling platform, which is designed to give non-AEC professionals, such as building owners and project managers, real-time access to data embedded within Revit RVT files.

Xyicon’s information modelling platform, often used for planning and operations, centralises both graphical and non-graphical data into integrated 2D/3D models. The Revit add-in is designed to address the disconnect between AEC professionals and non-AEC project teams, by allowing anyone to work in a functional BIM environment.

BIM tools like Revit, as Xyicon explains, are built exclusively for AEC professionals, often leaving non-AEC stakeholders to rely on traditional methods like PDF-based diagrams and spreadsheets. These manual workflows are said to limit collaboration, especially for those without modelling expertise or access to BIM software.

With Xyicon’s Revit add-in, any user can view and update the Revit BIM model directly and contribute to its progress. For example, through the Xyicon platform, users can lay out new or additional furniture, assets and equipment, move placements, rotate positions, view and edit parameters, or delete assets. At the same time, AEC professionals retain full control over what gets synced back to Revit. According to the developers, this ensures alignment and accuracy in the final model.

■ www.xyicon.com

Tektome is a new AI platform for processing, automation, and quality checking of architectural design data through natural language.

It can analyse “dark data” across various formats, including CAD, BIM, PDF, Word, and Excel, automatically identifying, structuring, and extracting insights.

■ www.tektome.com

BIMlogiq Copilot, an AI-powered tool for automating tasks in Revit, now features an enhanced code generation model, several new public commands available to all users, and the ability to share saved commands with others.

■ www.bimlogiq.com

Nvidia is making it easier to build AI agents and creative workflows on workstations and PCs. The company’s new AI foundation models — neural networks trained on huge amounts of raw data — are optimised for performance on Nvidia GPUs ■ www.nvidia.com

Rose is a new Revit plug-in designed to streamline BIM classification. The software uses a multimodal AI model to evaluate Revit families and family instances, then updates them with a normalised name parameter. Rose uses computer vision to understand elements ■ www.ulama.tech/products/rose

LookX V3.0, the latest release of the architectural-focused text to image tool, is said to be able to generate visuals that are ‘virtually indistinguishable’ from real-world designs. The new AI model can focus on details such as intricate façades or complex structural elements and understand prompts better ■ www.lookx.ai

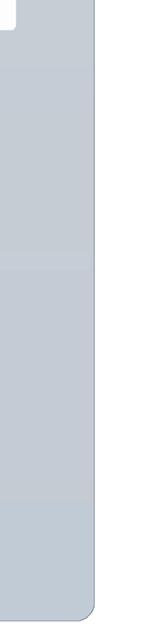

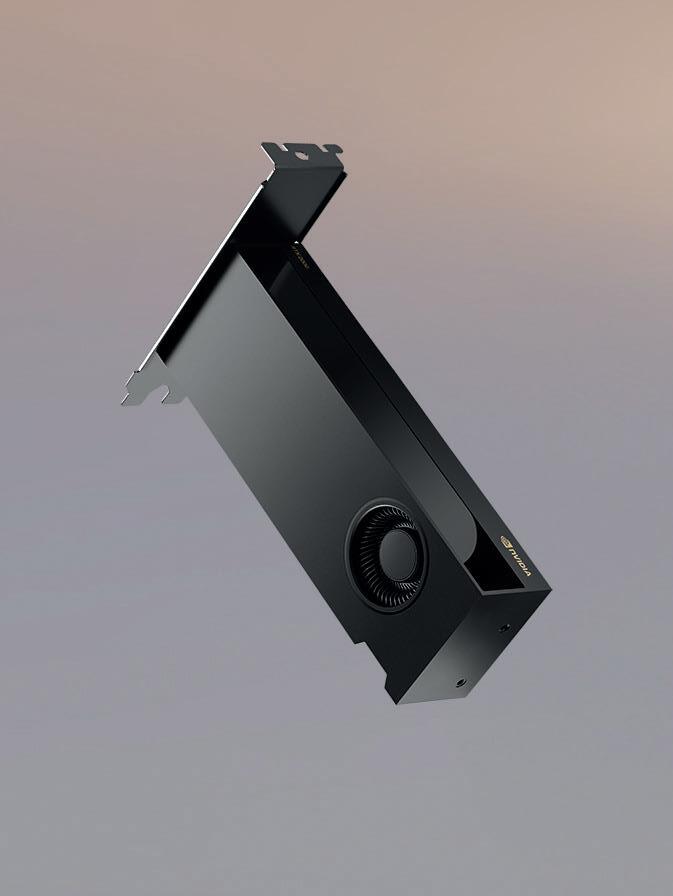

Nvidia DLSS 4, the latest release of the suite of neural rendering technologies that use AI to boost real-time 3D performance, will soon be supported in visualisation software including D5 Render, Chaos Vantage and Unreal Engine.

The headline feature of DLSS 4, ‘Multi Frame Generation’, brings revolutionary performance versus traditional native rendering, according to Nvidia.

Multi Frame Generation is an evolution of Single Frame Generation, which was introduced in DLSS 3 to boost frame rates with Nvidia Ada Gen GPUs by using AI to generate a single frame between every pair of traditionally rendered frames.

In DLSS 4, Multi Frame Generation takes this one step further by using AI to generate up to three additional frames between traditionally rendered frames. The feature is available exclusively on the new Blackwell-based Nvidia RTX 50 Series GPUs (see page WS40).

Multi Frame Generation can also work in tandem with other DLSS technologies including super resolution (where AI outputs a higher-resolution frame from a lower-resolution input) and ray reconstruction (where AI generates additional pixels for intensive ray-traced scenes). When these technologies are combined, it means 15 out of every 16 pixels are generated by AI — much faster than rendering pixels in the traditional way.
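The ‘15 out of every 16 pixels’ figure follows from multiplying two savings. Below is a minimal sketch of that arithmetic. It assumes super resolution renders at half the output width and half the height (a quarter of the pixels, as in DLSS Performance mode) and that Multi Frame Generation shows three AI frames for every rendered one. Ray reconstruction is not modelled. The function name and parameters are illustrative only and are not part of any Nvidia API.

```python
from fractions import Fraction


def rendered_pixel_fraction(upscale_per_axis: int, generated_per_rendered: int) -> Fraction:
    """Share of displayed pixels that are traditionally rendered.

    upscale_per_axis: assumed super resolution factor along each image axis
        (2 means rendering at half width and half height).
    generated_per_rendered: AI frames shown after each rendered frame
        (1 for DLSS 3 frame generation, up to 3 for DLSS 4 Multi Frame Generation).
    """
    spatial = Fraction(1, upscale_per_axis ** 2)        # rendered share of each frame's pixels
    temporal = Fraction(1, 1 + generated_per_rendered)  # rendered share of displayed frames
    return spatial * temporal


for label, generated in (("DLSS 3", 1), ("DLSS 4", 3)):
    rendered = rendered_pixel_fraction(2, generated)
    print(f"{label}: {rendered} rendered, {1 - rendered} generated by AI")
```

Under these assumptions DLSS 4 renders 1/16 of the displayed pixels, leaving 15/16 to AI. With single frame generation, the same sum gives 7/8.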

According to Nvidia, in D5 Render enabling DLSS 4 can lead to a four-fold increase in frame rates, leading to much smoother navigation of complex scenes.

■ www.nvidia.com

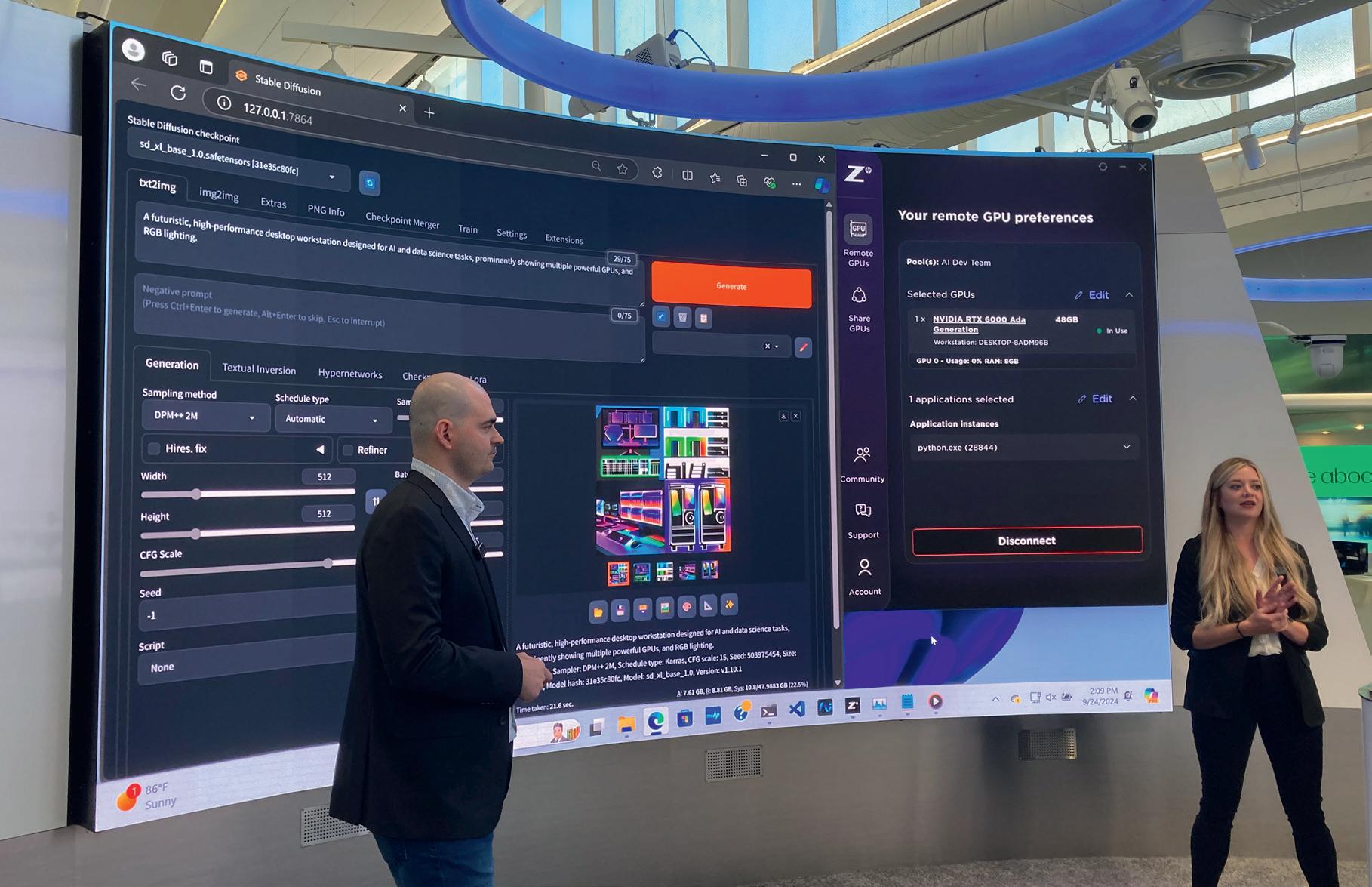

The Nemetschek Group has previewed AI Assistant, an AI-agent-based technology for Archicad that builds on the AI layer the Group announced in October 2024. The AI Assistant will be embedded directly into Archicad as an integrated AI chatbot, and there are plans to expand the integration to other Group brands.

According to Nemetschek, AI Assistant will streamline creative exploration while saving time and ensuring quality and compliance. It will feature product knowledge, industry insights, BIM model queries, and the integration of AI Visualizer, a text-to-image generator powered by Stable Diffusion.

■ www.nemetschek.com

In AEC, AI rendering tools have already impressed, but AI model creation has not – so far. Martyn Day spoke with Greg Schleusner, director of design technology at HOK, to get his thoughts on the AI opportunity

One can’t help but be impressed by the current capabilities of many AI tools. Standout examples include Gemini from Google, ChatGPT from OpenAI, Musk’s Grok, Meta AI and now the new Chinese wunderkind, DeepSeek.

Many billions of dollars are being invested in hardware. Development teams around the globe are racing to create an artificial general intelligence, or AGI, to rival (and perhaps someday, surpass) human intelligence.

In the AEC sector, R&D teams within all of the major software vendors are hard at work on identifying uses for AI in this industry. And we’re seeing the emergence of start-ups claiming AI capabilities and hoping to beat the incumbents at their own game.

However, beyond the integration of ChatGPT front ends, or yet another AI renderer, we have yet to feel the promised power of AI in our everyday BIM tools.

The rendering race

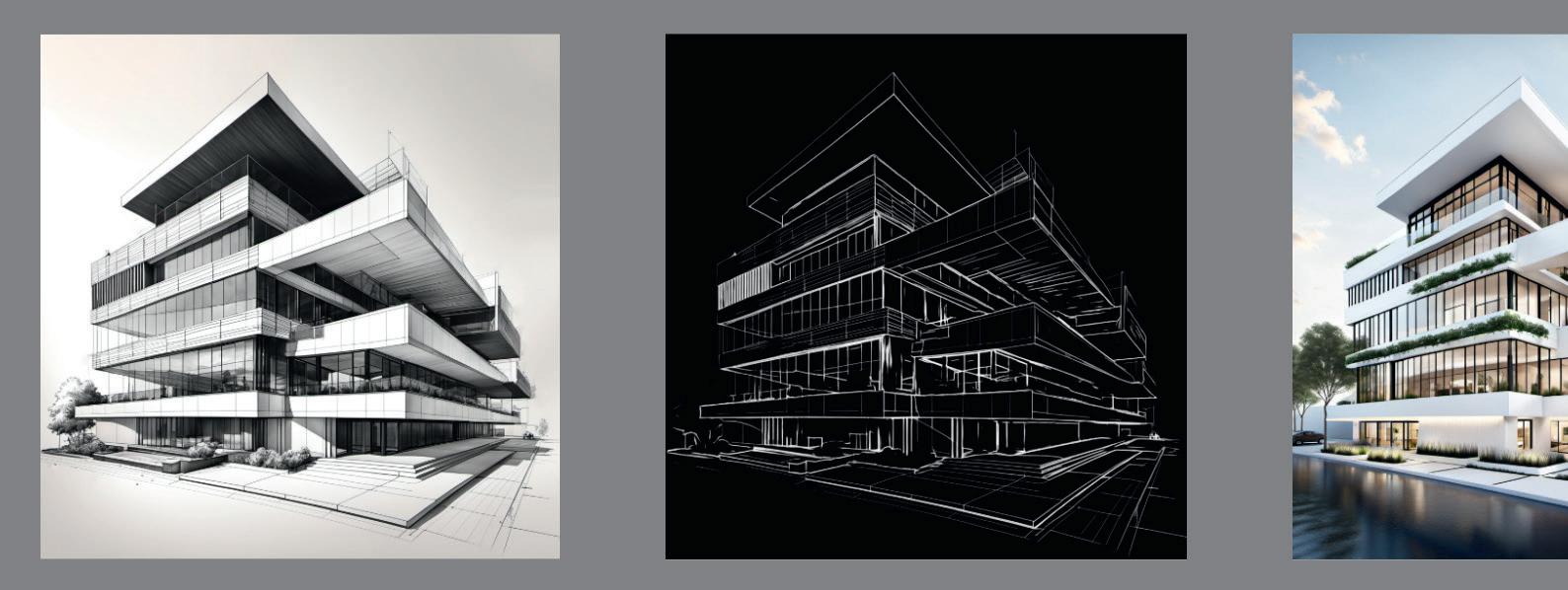

The first and most notable application area for AI in the field of AEC has been rendering, with the likes of Midjourney, Stable Diffusion, Dall-E, Adobe Firefly and Sketch2Render all capturing the imaginations of architects.

While the price of admission has been low, challenges have included the need to find the right words to describe an image (there is, it seems, a whole art to writing prompts) and then to remain in control of the AI generation through subsequent iterations.

In this area, we’ve seen the use of LoRAs (Low-Rank Adaptations), which encode trained concepts or styles and can be applied on top of a base Stable Diffusion model, and ControlNet, which provides precise structural control. Together they deliver impressive results in the right hands. For those wishing to dig further, we recommend familiarising yourself with the amazing work of Ismail Seleit and his custom-trained LoRAs combined with ControlNet (www.instagram.com/ismailseleit).
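Here is what a LoRA actually changes, shown as a minimal sketch of the low-rank adaptation idea applied to a single linear layer. It is written in pure Python for readability. The base weight W stays frozen. Only two thin matrices are learned, B (d_out by r) and A (r by d_in), and their product is added to W's output, scaled by alpha / r. The class and method names are hypothetical, the sizes are arbitrary and training is omitted. In practice a Stable Diffusion LoRA applies updates like this to many layers of the model at once.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class LoRALinear:
    """A frozen weight matrix W plus a trainable low-rank update B @ A.

    Output: y = W x + (alpha / rank) * B (A x)
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(weight), len(weight[0])
        self.weight = weight              # frozen base-model weights
        self.scale = alpha / rank
        # A (rank x d_in) starts small and random; B (d_out x rank) starts at zero,
        # so before training the adapted layer behaves exactly like the base layer.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        """Only A and B are trained; W stays frozen."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


d = 64
W = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]  # toy base weights
layer = LoRALinear(W, rank=4)
x = [float(i) for i in range(d)]
print(layer.trainable_params(), "trainable parameters vs", d * d, "in W")
```

With d = 64 and rank 4, the adapter trains 512 numbers instead of 4,096. This is why LoRA files are small and can be swapped in and out on top of a single base model.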

For those who’d prefer not to dive so deep into the tech, SketchUp Diffusion (www.tinyurl.com/SketchUp-Diffusion), Veras (www.evolvelab.io/veras), and AI Visualizer (for Archicad, Allplan and Vectorworks, www.tinyurl.com/AI-visualizer) have helped make AI rendering more consistent and more likely to produce repeatable results for the masses.

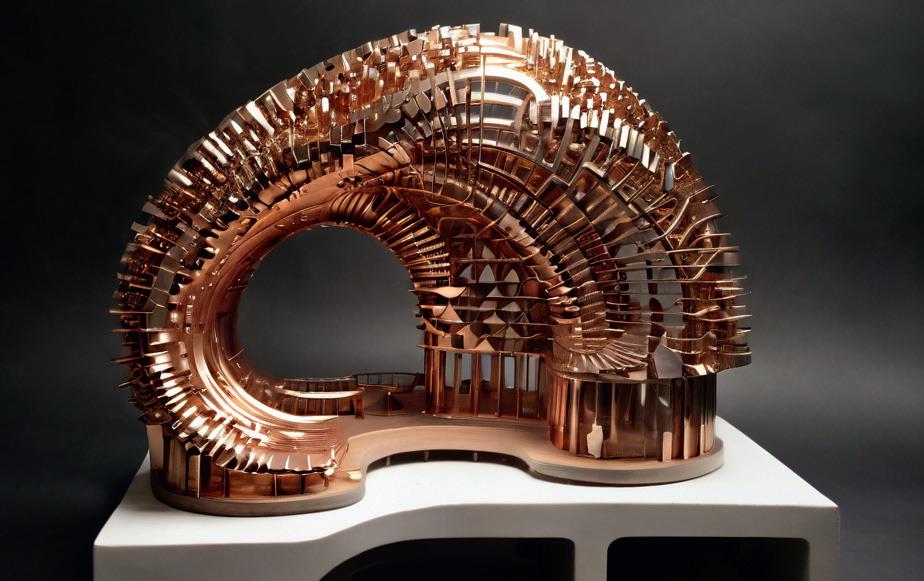

However, when it comes to AI ideation, at some point, architects would like to bring this into 3D – and there is no obvious way to do this. This work requires real skill, interpreting a 2D image into a Rhino model or Grasshopper script, as demonstrated by the work of Tim Fu at Studio Tim Fu (www.timfu.com).

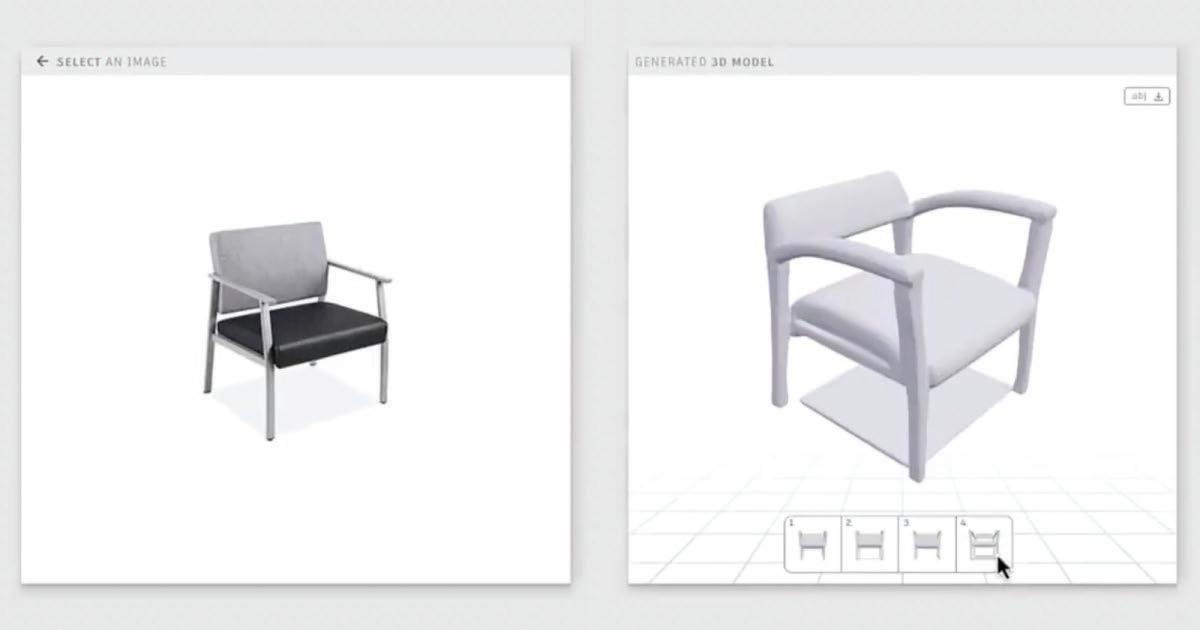

It’s possible that AI could be used to auto-generate a 3D mesh from an AI conceptual image, but this remains a challenge, given the nature of AI image generation. Some tools are making progress by analysing the image to extract depth and spatial information, but the resultant mesh tends to come out as one lump, or as a collection of meshes, incoherent for use as a BIM model or for downstream use.
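A naive version of that image-to-mesh step shows why the output is ‘one lump’. The sketch below takes a per-pixel depth map, of the kind a depth-estimation model might produce, and back-projects it through an assumed pinhole camera. It then joins neighbouring pixels into triangles. Nothing in this process knows where a wall ends or a slab begins, so foreground and background are stitched into a single connected surface. The function names, focal length and toy depth values are hypothetical.

```python
def depth_map_to_mesh(depth, focal=1.0):
    """Back-project a depth map (rows of per-pixel depths) into a triangle mesh.

    Assumes a pinhole camera with the principal point at the image centre
    and the same focal length (in pixels) on both axes.
    """
    h, w = len(depth), len(depth[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    vertices = []
    for v in range(h):
        for u in range(w):
            z = depth[v][u]
            vertices.append(((u - cx) * z / focal, (v - cy) * z / focal, z))
    # Join every 2 x 2 block of neighbouring pixels with two triangles,
    # whether or not they belong to the same real-world object.
    triangles = []
    for v in range(h - 1):
        for u in range(w - 1):
            i = v * w + u
            triangles.append((i, i + 1, i + w))
            triangles.append((i + 1, i + w + 1, i + w))
    return vertices, triangles


def count_parts(num_vertices, triangles):
    """Count connected pieces of the mesh with a small union-find."""
    parent = list(range(num_vertices))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for a, b, c in triangles:
        for p, q in ((a, b), (b, c)):
            parent[find(p)] = find(q)
    return len({find(i) for i in range(num_vertices)})


# Toy 4 x 4 depth map: a near "building" (depth 5) in front of a far background (depth 20).
depth = [
    [20, 20, 20, 20],
    [20, 5, 5, 20],
    [20, 5, 5, 20],
    [20, 20, 20, 20],
]
verts, tris = depth_map_to_mesh(depth, focal=2.0)
print(len(verts), "vertices,", len(tris), "triangles,", count_parts(len(verts), tris), "part")
```

The jump from building to background becomes a band of stretched triangles rather than a separate wall element. Splitting the result into meaningful BIM objects would need extra segmentation that this step does not provide.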

Back in 2022, we tried taking 2D photos and AI-generated renderings from Hassan Ragab into 3D using an application called Kaedim (www.tinyurl.com/AEC-tsunami). But the results were pretty unusable, not least because at that time Kaedim had not been trained on architectural models and was more aimed at the games sector.

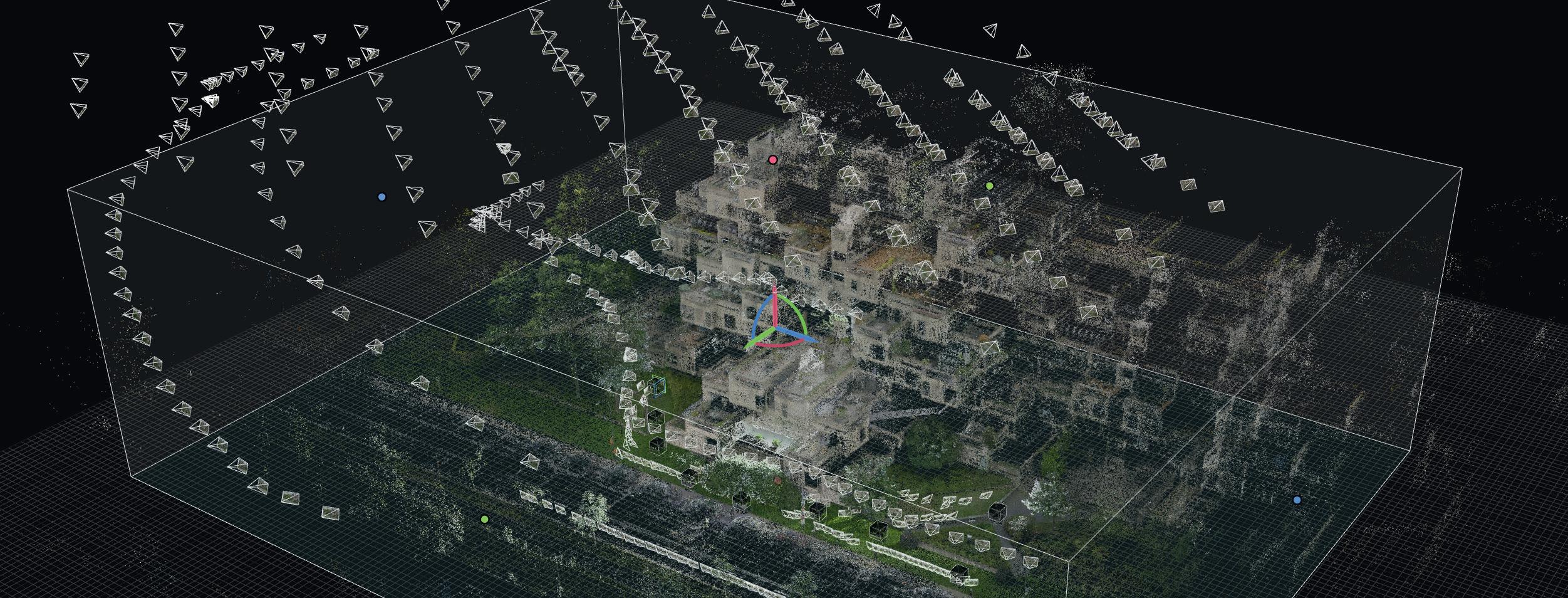

Of course, if you have multiple 2D images of a building, it is possible to recreate a model using photogrammetry and depth mapping.
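Multiple images make this possible because depth can be recovered by triangulation. In the simplest case, two photos taken from parallel, known camera positions (a rectified stereo pair), depth follows from how far a feature shifts between the images: Z = f × B / d. Here f is the focal length in pixels, B is the distance between the cameras and d is the shift (disparity) in pixels. The sketch below applies that formula to one matched point. The camera values are hypothetical. Full photogrammetry packages generalise this to many unrectified views and refine the result, for example with bundle adjustment.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen in two rectified images: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_px * baseline_m / disparity_px


def triangulate(u_left, v, u_right, focal_px, baseline_m, cx, cy):
    """Recover (X, Y, Z) in the left camera's frame from one matched pixel pair."""
    z = depth_from_disparity(focal_px, baseline_m, u_left - u_right)
    return ((u_left - cx) * z / focal_px, (v - cy) * z / focal_px, z)


# Hypothetical rig: 1000 px focal length, cameras 0.5 m apart, principal point (960, 540).
point = triangulate(u_left=1210, v=540, u_right=1185,
                    focal_px=1000, baseline_m=0.5, cx=960, cy=540)
print(point)
```

A 25-pixel shift places this point 20 m from the cameras and 5 m to the right of the left camera's axis. Repeating the calculation for many matched features yields the point cloud from which a model is built.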

Text to 3D

It’s possible that the idea of auto-generating models from 2D conceptual AI output will remain a dream. That said, there are now many applications coming online that aim to provide the AI generation of 3D models from text-based input.

Stable Diffusion architectural image courtesy of James Gray. Generated with ModelMakerXL, a custom trained LoRA by Ismail Seleit. Follow Gray @ www.linkedin.com/in/james-gray-bim

The idea here is that you simply describe in words the 3D model you want to create – a chair, a vase, a car – and AI will do the rest. AI algorithms are currently being trained on vast datasets of 3D models, 2D images and material libraries.

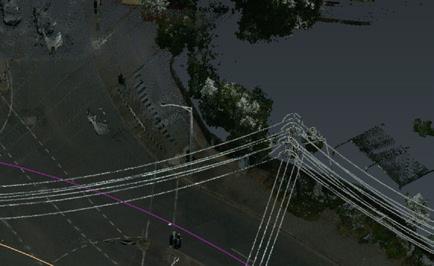

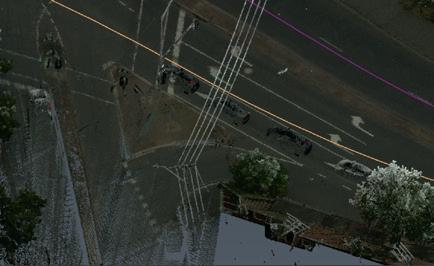

While 3D geometry has mainly been expressed through meshes, there have been innovations in modelling geometry with the development of Neural Radiance Fields (NeRFs) and Gaussian splats, which represent colour and light at any point in space, enabling the creation of photorealistic 3D models with greater detail and accuracy.
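To illustrate how a splat scene turns ‘colour and light at any point in space’ into an image, the sketch below composites splats for a single pixel, front to back. It uses standard alpha blending: each splat adds its colour times its opacity times the light still passing through the splats in front of it. NeRFs accumulate colour along each camera ray in the same way. The sketch is heavily simplified. Each splat is assumed to be already projected to screen space as a round 2D Gaussian with one RGB colour, whereas real 3D Gaussian splatting uses stretched, oriented Gaussians and view-dependent colour. The dictionary keys are hypothetical.

```python
import math


def splat_alpha(splat, px, py):
    """Opacity contributed by one screen-space Gaussian at pixel (px, py)."""
    dx, dy = px - splat["x"], py - splat["y"]
    falloff = math.exp(-0.5 * (dx * dx + dy * dy) / splat["sigma"] ** 2)
    return splat["opacity"] * falloff


def composite_pixel(px, py, splats):
    """Blend splats front to back; return (rgb, remaining transmittance)."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # share of light not yet blocked by nearer splats
    for s in sorted(splats, key=lambda s: s["depth"]):
        a = splat_alpha(s, px, py)
        for c in range(3):
            color[c] += transmittance * a * s["rgb"][c]
        transmittance *= 1.0 - a
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return color, transmittance


splats = [
    {"x": 10.0, "y": 10.0, "depth": 2.0, "sigma": 3.0, "opacity": 1.0, "rgb": (0.0, 0.0, 1.0)},  # blue, behind
    {"x": 10.0, "y": 10.0, "depth": 1.0, "sigma": 3.0, "opacity": 0.5, "rgb": (1.0, 0.0, 0.0)},  # red, in front
]
color, remaining = composite_pixel(10.0, 10.0, splats)
print(color, remaining)
```

The half-transparent red splat in front lets half the light through, so the pixel ends up an even mix of red and blue. Sorting by depth is what makes the result correct regardless of the order in which the splats are stored.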

Today, we are seeing a large number of firms bringing ‘text to 3D’ solutions to market. Adobe Substance 3D Modeler has a plug-in for Photoshop that can perform text-to-3D, and Autodesk demonstrated similar technology, Project Bernini, at Autodesk University 2024 (www.tinyurl.com/AEC-AI-Autodesk).

However, the AI-generated output of these tools seems to be fairly basic — usually symmetrical objects and more aimed towards creating content for games.

In fact, the bias towards games content generation can be seen in many offerings. These include Tripo (www.tripo3d.ai), Kaedim (www.kaedim3d.com), Google DreamFusion (www.dreamfusion3d.github.io) and Luma AI Genie (www.lumalabs.ai/genie).

There are also several open-source alternatives. These include Hunyuan3D-1 (www.tinyurl.com/Hunyuan3D), Nvidia’s Magic 3D (www.tinyurl.com/NV-Magic3D) and Edify (www.tinyurl.com/NV-edify).

Of course, the technology is evolving at an incredible pace. Indeed, Krea’s text-to-image model (www.krea.ai) and Hunyuan 3D’s image-to-3D (www.tinyurl.com/hunyuan-3D) have just added promising 2D-to-3D capabilities. With Krea, a user can click on an image of a car, automatically isolating it from its forest background, then rotate it as if by magic. The software autogenerates the fully bitmapped 3D model. Some architects have shared their experiments on LinkedIn with impressive results, producing non-symmetrical, complex forms from AI-generated images and even creating interior layouts.

Louis Morion, architect at Architekturbüro Leinhäupl + Neuber, believes the art of image-to-3D is to create white, architectural-style images with no textures and no noise, as these give the best results.

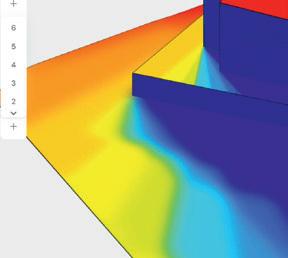

When AEC Magazine spoke to Greg Schleusner of HOK on the subject of text-to-3D, he highlighted D5 Render (www.aecmag.com/tag/d5-render), which is now an incredibly popular rendering tool in many AEC firms.

The application comes with an array of AI tools to create materials, texture maps and atmosphere match from images. It supports AI upscaling and has incorporated Meshy’s (www.meshy.ai) text-to-3D generator for creating content in-scene.

That means architects could add in simple content, such as chairs, desks, sofas and so on — via simple text input during the arch viz process. The items can be placed in-scene on surfaces with intelligent precision and are easily edited. It’s content on demand, as long as you can

when it comes to work that will be shown to clients. So, while there is certainly potential in these types of generative tools, mixing fantasy with reality in this way doesn’t come problem-free.

It may be possible to mix the various model generation technologies. As Schleusner put it: “What I’d really like to be able to do is to scan or build a photogrammetric interior using a 360-degree camera for a client and then selectively replace and augment the proposed new interior with new content, perhaps AI-created.”

Gaussian splat technology is getting good enough for this, he continued, while SLAM laser scan data is never dense enough. “However, I can’t put a Gaussian splat model inside Revit. In fact, none of the common design tools support that emerging reality capture technology, beyond scanning. In truth, they barely support meshes well.”

LLMs and AI

At the time of writing, DeepSeek has suddenly appeared like a meteor, seemingly out of nowhere, intent on ruining the business models of ChatGPT, Gemini and other providers of paid-for AI tools.

Schleusner was early into DeepSeek and has experimented with its script and code-writing capabilities, which he described as very impressive.

LLMs, like ChatGPT, can generate Python scripts to perform tasks in minutes, such as creating sample data, training machine learning models, and writing code to interact with 3D data.

Schleusner is finding that AI-generated code can accomplish these tasks relatively quickly and simply, without needing to write all the code from scratch himself.

“While the initial AI-generated code may not be perfect,” he explained, “the ability to further refine and customise the code is still valuable. DeepSeek is able to generate code that performs well, even on large or complex tasks.”

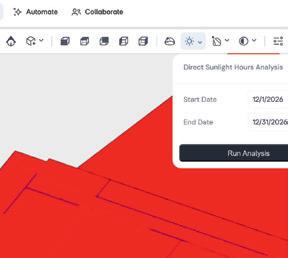

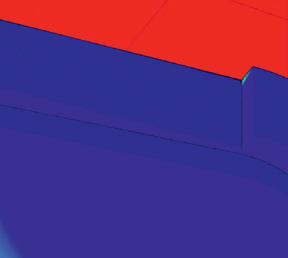

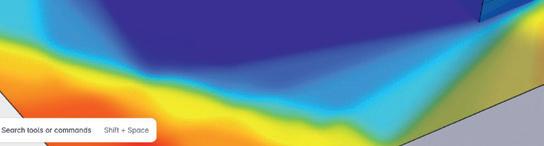

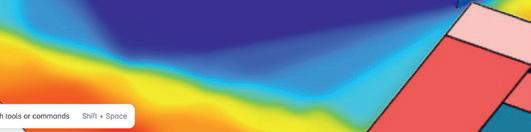

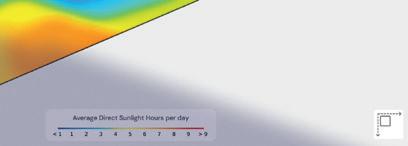

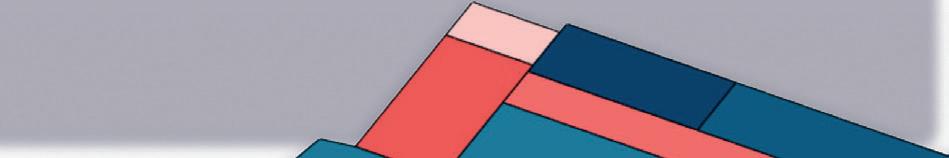

With AI, much of the expectation of customers centres on the addition of these new capabilities to existing design products. For instance, in the case of Forma, Autodesk claims the product uses machine learning for real-time analysis of sunlight, daylight, wind and microclimate.

However, if you listen to AI-proactive firms such as Microsoft, executives talk a lot about ‘AI agents’ and ‘operators’, built to assist firms and perform intelligent tasks on their behalf.

Microsoft CEO Satya Nadella is quoted as saying, “Humans and swarms of AI agents will be the next frontier.” Another of his big statements is that “AI will replace all software and will end software as a service.” If true, this promises to turn the entire software industry on its head.

Today’s software as a service, or SaaS, systems are proprietary databases/silos with hard-coded business logic. In an AI agent world, these boundaries would no longer exist. Instead, firms will run a multitude of agents, all performing business tasks and gathering data from any company database, files, email or website. In effect, if it’s connected, an AI agent can access it.

At the moment, to access certain formatted data, you have to open a specific application and maybe have deep knowledge to perform a range of tasks. An AI agent might transcend these limitations to get the information it needs to make decisions, taking action and achieving business-specific goals.

AI agents could analyse vast amounts of data, such as building designs, to predict structural integrity, immediately flag up if a BIM component causes a clash, and perhaps eventually generate architectural concepts. They might also be able to streamline project management by automating routine tasks and providing real-time insights for decision-making.

The main problem is going to be data privacy, as AI agents require access to sensitive information in order to function effectively. Additionally, the transparency of AI decision-making processes remains a critical issue, particularly in high-stakes AEC projects where safety, compliance and accuracy are paramount.

On the subject of AI agents, Schleusner said he has a very positive view of the potential for their application in architecture, especially in the automation of repetitive tasks. During our chat, he demonstrated how a simple AI agent might automate the generation of an expense report, extracting relevant information, both handwritten and printed, from receipts.

He has also experimented by creating an AI agent for performing clash detection on two datasets, which contained only XYZ positions of object vertices. Without creating a model, the agent was able to identify whether or not the objects were clashing. The files were never opened. This process could be running constantly in the background, as teams submitted components to a BIM model. AI agents could be a game-changer when it comes to simplifying data manipulation and automating repetitive tasks.

Another area where Schleusner feels that AI agents could be impactful is in the creation of customisable workflows, allowing practitioners to define the specific functions and data interactions they need in their business, rather than being limited by pre-built software interfaces and limited configuration workflows.

Most of today’s design and analysis tools have built-in limitations. Schleusner believes that AI agents could offer a more programmatic way to interact with data and automate key processes. As he explained, “There’s a big opportunity to orchestrate specialised agents which could work together, for example, with one agent generating building layouts and another checking for clashes. In our proprietary world with restrictive APIs, AI agents can have direct access and bypass the limits on getting at our data sources.”

For the foreseeable future, AEC professionals can rest assured that AI, in its current state, is not going to totally replace any key roles, but it will make firms more productive.

The potential for AI to automate design, modelling and documentation is currently overstated, but as the technology matures, it will become a solid assistant. And yes, at some point years hence, AI with hard-coded knowledge will be able to automate some new aspects of design, but I think many of us will be retired before that happens. However, there are benefits to be had now and firms should be experimenting with AI tools.

We are so used to the concept of programmes and applications that it’s kind of hard to digest the notion of AI agents and their impact. Those familiar with scripting are probably also constrained by the notion that the script runs in a single environment. By contrast, AI agents work like ghosts, moving around connected business systems to gather, analyse, report, collaborate, prioritise, problem-solve and act continuously. The base level is a co-pilot that may work alongside a human performing tasks, all the way up to fully autonomous operation, uncovering data insights from complex systems that humans would have difficulty in identifying.

If the data security issues can be dealt with, firms may well end up with many strategic business AI agents running and performing small and large tasks, taking a lot of the donkey work out of extracting value from company data, be that an Excel spreadsheet or a BIM model.

AI agents will be key IP tools for companies and will need management and monitoring. The first hurdle to overcome is realising that the nature of software, applications and data is going to change radically and in the not-too-distant future.
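The article does not describe how Schleusner’s clash-detection agent worked internally. The sketch below shows the kind of check such an agent could run on raw XYZ vertex lists without opening any model files. It computes an axis-aligned bounding box for each object and reports pairs whose boxes overlap, optionally within a clearance tolerance. This is a broad-phase test only: overlapping boxes flag a possible clash, and exact geometry would be needed to confirm it. The function names and sample data are hypothetical.

```python
from itertools import product

Point = tuple[float, float, float]
Box = tuple[Point, Point]


def bounding_box(vertices: list[Point]) -> Box:
    """Axis-aligned bounding box (min corner, max corner) of a vertex list."""
    xs, ys, zs = zip(*vertices)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def boxes_overlap(a: Box, b: Box, tolerance: float = 0.0) -> bool:
    """True if the boxes intersect, or come within `tolerance` on every axis."""
    (a_min, a_max), (b_min, b_max) = a, b
    return all(
        a_min[i] - tolerance <= b_max[i] and b_min[i] - tolerance <= a_max[i]
        for i in range(3)
    )


def find_clashes(set_a: dict[str, list[Point]], set_b: dict[str, list[Point]],
                 tolerance: float = 0.0) -> list[tuple[str, str]]:
    """Compare every object in one dataset with every object in the other."""
    boxes_a = {name: bounding_box(v) for name, v in set_a.items()}
    boxes_b = {name: bounding_box(v) for name, v in set_b.items()}
    return [
        (name_a, name_b)
        for (name_a, box_a), (name_b, box_b) in product(boxes_a.items(), boxes_b.items())
        if boxes_overlap(box_a, box_b, tolerance)
    ]


# Hypothetical datasets: object ID -> XYZ vertex positions (metres).
structure = {"beam-1": [(0, 0, 3.0), (6, 0, 3.0), (6, 0.3, 3.5), (0, 0.3, 3.5)]}
services = {
    "duct-7": [(2, -1, 3.2), (2, 2, 3.4)],
    "pipe-2": [(0, 5, 1.0), (6, 5, 1.0)],
}
print(find_clashes(structure, services))
```

Because the check needs only coordinates, it could run repeatedly in the background as new components arrive, in line with the always-on process Schleusner describes. A production version would add a spatial index to avoid comparing every pair of objects.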

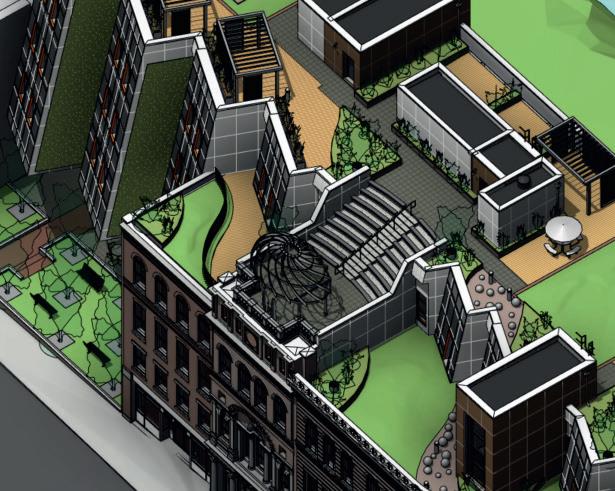

As we move into 2025, we ask five leading AEC software developers to share their observations and projections for BIM 2.0

The AECO industry has a lot to be proud of. You have constructed iconic skyscrapers, completed expansive highway systems, and restored historic monuments like Notre-Dame de Paris.

But there’s more work to do. We hold the responsibility of designing and making our homes, workplaces, and communities. We must also solve for complex global challenges like housing growing populations and improving the resiliency of the built world to withstand the impacts of climate change.

Connected data is at the core of how we will solve these challenges. Better access to data will enable new ways of working that improve collaboration, productivity, and sustainability.

Today, AECO firms have more data than ever before, and their storage needs grow by 50% each year. While it’s beneficial to have every piece of information you could ever need about a project digitised, if the data is locked in files, teams can waste hours trying to find the specs for that third-floor utility closet door.
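Compounding puts the 50% annual growth figure in perspective. At that rate, storage roughly doubles every 1.7 years and grows about 7.6-fold over five years. The sketch below assumes a constant growth rate, and its 100 TB starting size is a hypothetical example, not a figure from the text.

```python
import math


def projected_storage(current_tb: float, annual_growth: float, years: int) -> float:
    """Storage after `years` of compound growth at a fixed annual rate."""
    return current_tb * (1 + annual_growth) ** years


def doubling_time(annual_growth: float) -> float:
    """Years needed for storage to double at a fixed annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


# Hypothetical firm holding 100 TB today, growing at 50% a year.
for year in range(6):
    print(f"Year {year}: {projected_storage(100, 0.5, year):.0f} TB")
print(f"Doubling time: {doubling_time(0.5):.2f} years")
```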

We’re at the start of the next major digital transformation for the AECO industry. And unlocking data’s value is the first step towards building a better future together.

The ongoing transformation of BIM will empower teams to define their desired project outcomes, like maximum cost or carbon impacts, from the earliest stages of design and planning. At Autodesk, we believe outcome-based BIM is the solution for smarter, more sustainable and resilient ways of designing and making the built environment.

This future starts with data that is granular, accessible, and open. The traditional silos that have long characterised AECO are breaking down, making way for a more connected approach. For example, teams in the design phase can inform product and system performance criteria as documented in specifications – such as which HVAC systems meet the project’s sustainability and energy efficiency requirements. This aids the contractor in making the most informed decision on which product gets selected and installed. And then, in the operations phase, owners would have the spec data on hand to measure the asset’s performance to understand if it achieved its target energy usage. The benefits of enhanced data accessibility across the asset lifecycle are truly unlimited.

Just last year, we launched the AECO Data Model API, an open and extensible solution that allows data to flow across project phases, stakeholders, and asset types. Teams save time by eliminating manual and error-prone extraction of model data. And access to project data is democratised, leading to better decisions, increased transparency, and trust. This vision is how we’ll unlock the future of BIM. In the connected, cloud-based world of granular data, teams will be able to move a project from one tool to another, and across production environments, with all their data in context. Designers will no longer have to re-create the same pump multiple times when it’s already been built by another designer. Contractors won’t need to save that pump’s spec data to different spreadsheets, and risk losing track of which version is approved.

Throughout this next year, we predict that more design and make technology companies will embrace openness and interoperability to support seamless data sharing.

Data to connect design and construction

Connected data will help our industry understand the health and performance of its businesses. In fact, companies that lead in leveraging data see a 50% increase in average profit growth rate compared to beginners. Data is especially valuable in bridging the gap between design and construction. With Autodesk Docs, our common data environment, we are connecting data across different phases of BIM. It’s a source of truth for bringing granular data and files together from design to construction. With a digital thread that connects every stage of the project lifecycle, teams can course-correct early and often to save time, money, and waste.

A great example of this is WSP’s work at Manchester Airport. As part of the new Pier 2 construction, the team designed a ‘kit of parts’ that includes a lift, staircase, lobbies, and openings for the air bridge. By using the kit of parts, an application of industrialised construction, and rationalising the design into fewer, much larger assemblies, WSP and contractor Mace significantly reduced the duration of work onsite. The approach also reduced the amount of construction waste. This process was made possible through the seamless transfer of data from design to construction.

As we transition to more cloud-connected workflows, we’ll see more use cases of AI-generated insights informing design, engineering, and construction at the start of projects, helping teams achieve desired outcomes such as minimising carbon impacts. For example, firms like Stantec are using AI-powered solutions to understand and test, in real time, the embodied carbon impacts of their material design decisions from day one. This is significant because early concept planning for buildings offers the greatest opportunity for impact on carbon and the lowest cost risk for design changes.

As AI continues to progress in daily applications, it will enable our industry to optimise the next factory, school, or rail system. With data, AI can learn from the past and help lead to a more sustainable future.

The expanding impact of legislation

To realise the untapped value of our data, it is critical to remember that it all starts with getting data connected and structured in one place.

Another trend we predict for 2025 is that information requirements legislation will continue to grow, with the recent introduction of the EU’s Digital Product Passport initiative. Alongside existing mandates like ISO 19650, the ability to classify, track and validate data across the asset lifecycle will become essential to successfully delivering projects.

These regulations mean that AECO firms will need to invest in a Common Data Environment that will support their firm’s ability to track, manage and control project data at the granular level.

Data to supercharge AI progress

Data and AI have a symbiotic relationship. Better data – both in quantity and quality – is the fuel to unlocking the potential of AI and improving workflows.

Over the next year, we expect practical uses of AI to make big strides, such as day-to-day applications that solve real-world problems. The industry is ready: 44% of AECO professionals view improving productivity as a top use case for AI.

The AECO industry is poised for a data-driven transformation. Over the next year, we’ll see continued shifts towards connected data that will help us achieve new levels of innovation, sustainability, and resiliency for the built environment. Firms that embrace granular, data-centric ways of working will be able to use this information from the office to the job site and share just the right amount of data with collaborators anywhere in the world, using any tool they choose.

The journey ahead is full of opportunities, and together, we can shape the AECO industry’s future for the better.

Today’s infrastructure projects are becoming more complex. Demand for better, more resilient infrastructure is increasing in the face of rapid urbanisation, climate change, the energy transition, and more. The sheer scale of data created from design to construction to operations makes infrastructure a prime area for AI disruption. AI is not just a trend, but a transformative force that will shape the AEC industry and the built environment, paving the way for smarter, more efficient project delivery and asset performance.

Of course, AI isn’t new to infrastructure sectors. We recognise its potential to process vast amounts of data to provide insights that were previously unattainable. Because more than 95% of the infrastructure that will be in use by 2030 already exists today, owner-operators need to ensure existing infrastructure is resilient, efficient, and capable of meeting current and future demands. AI-driven asset analytics generate insights into the condition of existing infrastructure assets, while eliminating costly, manual activities. AI allows operators to predict when maintenance is needed before failures occur. AI agents analyse digital twins of infrastructure assets—bridges, roads, dams, or water networks—to identify issues and recommend preventive action, avoiding costly breakdowns or safety hazards.

But when we take a step back, AI also has huge potential in the design phase of the infrastructure lifecycle. In design, AI can automate repetitive tasks—such as documentation and annotation—so that engineers can focus on higher-value activities.

For example, through a copilot, professionals can quickly create, revise, and interact with requirements documentation and 3D site models using natural language, making real-time design changes with precision and ease. Or a design agent can evaluate thousands of layout options and suggest alternative designs in real time, helping teams make better design decisions sooner and saving time and money. We have calculated that users can accelerate drawing production by up to ten times, and improve drawing accuracy, using AI-powered annotation, labelling, and sheeting that automatically places labels and dimensions according to organisational standards optimised for legibility and aesthetics.

As we look to the future, the possibilities seem endless. But to begin to understand what’s possible tomorrow, we need to be able to harness data – the foundation of AI.

For the effectiveness of AI to take shape, we need to leverage the power of open data ecosystems. Open ecosystems break down barriers and facilitate seamless data exchange across platforms, systems, disciplines, organisations, and people. They ensure secure information flow and collaboration are unimpeded, without vendor lock-in, and preserve context and meaning— ultimately enabling more effective AI-driven analysis and decision-making over the infrastructure’s lifecycle.

This digital thread allows users to connect and align data from various sources (from the engineering model to the subsurface, and from enterprise information to operational data such as IoT sensor feeds) to provide the full context needed for smarter decision-making. Still, that is not enough. To truly unlock the value of AI, the digital twin must be augmented by 3D geospatial capabilities and intelligence. A 3D geospatial view is the most intuitive way for owner-operators and engineering services providers to search for and query information about infrastructure networks and assets. After all, infrastructure is of geospatial scale.

A 3D geospatial view changes the vantage point of an infrastructure digital twin from the engineering model to planet Earth, geolocating the engineering model and all the necessary data about the surrounding built and natural environment. It enables a comprehensive digital twin of both the built and natural environment, with astonishing user experiences and scale, from millimetre-accurate details of individual assets to vast information about widespread infrastructure networks.

AI’s true power will be measured by its ability to improve outcomes: more sustainable designs, faster and safer builds, and more reliable infrastructure

By adding AI to a data-rich digital twin of the built and natural environment, we can create better and more resilient infrastructure. AI-driven automation, detection, and optimisation can take organisational performance and data-driven decision-making to new levels throughout the lifecycle of a project or asset. Generative AI can help significantly boost productivity and accuracy, while machine learning algorithms can identify inefficiencies, forecast maintenance, and suggest design modifications before physical construction begins.

This powerful combination will unlock unprecedented efficiency, sustainability, and resilience, transforming how we design, build, and maintain the world around us. With open data ecosystems fostering limitless innovation and AI continuously powering and automating 3D-contextualised digital twins, we are entering an era of smarter infrastructure.

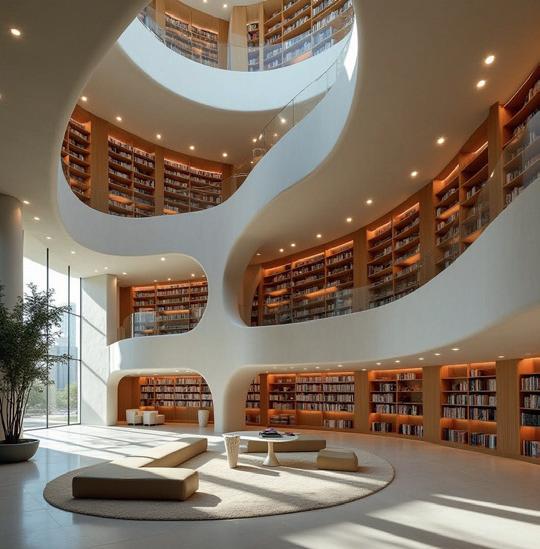

Our mission at Graphisoft has always been to empower architects with the tools they need to bring their visions to life. As we look ahead to 2025 and beyond, I am more excited than ever about the transformative impact of emerging technologies like AI, BIM, and cloud-based collaboration. These innovations will reshape how design teams work, pushing the boundaries of what’s possible.

One of the most thrilling changes on the horizon is the growing influence of AI in architecture. We’re already seeing the early signs of this shift, and it’s clear that we’re just scratching the surface. The first wave of AI tools has introduced inspiring, time-saving capabilities, helping designers generate and refine initial concepts quickly or automate repetitive tasks. AI concept visualisers are already being used in practice. Capabilities like automated drawings are expected to augment creative workflows by completing repeatable tasks with increasing accuracy and quality. Through beta releases followed by full product integration, teams have used these first use cases to stress-test AI development layers.

In the short term, I expect to see more sophisticated AI capabilities built on such layers emerge en masse, both as standalone tools and integrated solutions that tap into existing data repositories, enhancing everything from design to analysis.

Zooming out even further, we will also see AI agents evolve. More than an automation engine for repetitive tasks, the vision for AI agents is to act autonomously, proactively interact, solve problems, and execute more complex workflows.

Imagine that you have a project that is far along in the design and refinement stage when, as inevitably happens, a new development necessitates a change to the original design. An AI agent will be able to carry that change from the original file through all affected assets and communication touchpoints automatically, eliminating all the manual redrawing, communication, and quality assurance checks that later-stage design changes usually require. A mature version of this vision will have the power to disrupt the whole construction value chain, and it’s one of the most exciting emerging trends to keep an eye on.

We can expect AI capabilities to be applied to sustainability problems, another industry topic that has grown in urgency.

AI’s ability to process vast amounts of data offers architects the chance to make informed decisions early in the design process, supporting more efficient, more environmentally conscious design results. It’s this front of the technology that will effectively impact the 50% of global carbon emissions that buildings are responsible for. This is the direction we’ll see the technology take, as data-driven design begins to inform initial concepts with climate, location, light, and other variables that shape the design essence of projects.

With the power of data-driven scenario building capabilities, designers and clients can explore new project opportunities in greater detail and research multiple models in reuse and refurbishment projects. Well-known design challenges, such as how to repurpose large sports arenas or shopping centres, will benefit from value-driven evaluations supported by simulations that factor in the surrounding environment.

Applying insights using historical data from similar projects will also fuel sustainability efforts. Compounded learnings from hundreds of thousands of buildings become valuable data points for intelligent design systems. With the help of AI, architects can tap into this historical data to optimise future buildings, enhance energy efficiency, cut down on waste, and reduce environmental impact. By learning from past successes, we can make smarter decisions and avoid repeating the same mistakes. Further along the project lifecycle, the intelligent application of historical data will augment the power of digital twins to support even more sustainable and efficient facilities management.

Collaboration is another area undergoing rapid change. Remote and hybrid teamwork solutions, which gained momentum in recent years, have continued to evolve. Cloud-based platforms like BIMcloud are revolutionising how design teams work together, connecting teammates in different cities in real time and setting the stage for more agile collaboration. The result is teams that are more dispersed yet more aligned, increasingly efficient project cycles, higher accuracy rates, and a better built environment.

In dispersed, cross-cultural environments, seeing is believing. Mobile, device-agnostic viewing and collaboration tools bridge teams at various stages of the project lifecycle, significantly reducing errors and inefficient rework. When stakeholders are kept on the same page with a shared visual language, communication barriers dissolve and the stage is set for more creative input to fuel the design. As ideas and concepts are communicated more clearly with the aid of translation and collaborative design tools, AI and different stakeholders are better poised to explore unique building design ideas, merging the ‘best of both (or more) worlds’ and fusing solutions from different regions to create sustainable and fascinating results.

To see the benefits of visual collaboration in the long term, design directors need to select the right tools for the right job. At Graphisoft, we emphasise continuity in all our products, ensuring they remain relevant. In addition to ensuring compatibility with all operating systems and design platforms, future-proof products can integrate emerging technology into a seamless user experience. Curating a value-driven toolset, rather than a tech-driven one, is essential to capturing the benefits of visual, data-driven collaboration.

As we look ahead to the next three to five years in the industry, it’s worthwhile to look back first. The volatility, disrupted supply chains and remote-work scramble of previous years have created a stronger sense of resilience and confidence in the industry. In many ways, this has been a turning point, and I believe that one of the most critical investments companies can make is in continuous training for their employees.

Client relationships will also change. As AI advances solve previous rework and time-constraint challenges, the emphasis will shift to maintaining tighter communication with clients from concept to handover. Educating and onboarding stakeholders can maximise technological gains but also requires a new collaborative mindset and fluency with shared communication infrastructure.

We view AI as a tool to extend human ingenuity, not replace it. As with any set of tools, using it correctly will require a set of skills, especially as use cases and individual tools mature and grow in complexity. The opportunities that autonomous and intelligent design present are only beginning to emerge, and this is the time to get in ‘on the ground floor.’ Just as we invest in the continuity of our products and services, leaders should also invest in their teams and hone skillsets that keep pace with these exciting technical developments.

The future of architecture is incredibly exciting, and I do not doubt that with the right tools, training, and mindset, we can create a built environment that is not only more efficient and sustainable but also more aligned with the needs of tomorrow. This is our chance to leave a lasting, positive impact on the world.

By some measures, the AEC industry is experiencing more change, more innovation and more complexity than at any time in history. It’s also safe to say that the pace of change will never again be as slow as it is today.

The arsenal of technologies AEC professionals use to do their jobs continues to grow. Modern projects have many stakeholders, each providing highly specialised contributions to a project and generating valuable project data. With each stakeholder employing a different stack of hardware and software, each with its own data formats and validation, permissions and security parameters, pulling the data thread across disciplines, systems and project phases has become increasingly arduous. At the same time, it’s never been clearer that consolidating project information is a critical problem to solve.

Just like every other project phase, design requires a new focus on openness and interoperability, allowing connected teams to continue using design models throughout projects and across the complete asset lifecycle – from design to build to maintain. A connected ecosystem allows everyone throughout the asset lifecycle to work with their preferred tools, minimise rework, make informed decisions and collaborate effectively.

From inflexible and siloed to open, connected ecosystems

When we can meet people where they are, with the tools they’re already using, we begin to see the real power of data. For example, an architect uses one tool for conceptual design and then brings that data into their preferred BIM solution to create an architectural model. The architectural model is then used as a reference file to build detailed structural engineering models that automate the fabrication of components. In a connected data environment, such as Trimble Connect, working models can be available to everyone who needs them.

Projects today are far more sophisticated and data-driven than at any time in history, and the need for connected data has never been clearer. The industry has made strides toward more connected workflows and data sharing, but the work continues.

The free movement of data between systems and workflows is the next great productivity breakthrough in AEC. The industry is shifting away from inflexible, closed technology suites to more open and connected ecosystems. Project teams and project owners are demanding the benefits that interoperability brings – not just for task optimisation, but as a way to optimise the entire asset lifecycle.

It’s not a lack of innovation that has the construction industry mired in low productivity. Many project teams are actually quick to adopt new technologies and techniques. The problem is the level at which that innovation is happening. Sure, we can make a task easier to do, but the benefit stops right there, with that stakeholder. The next-level challenges are going to be solved through improved data flow across projects, stakeholders and the asset lifecycle.

At Trimble, we’re creating integrations between our products and third-party solutions. We’re also providing tools for construction businesses to build new integrations to solve further data challenges. Over 100 preconfigured integrations are available on Trimble Marketplace, and Trimble App Xchange empowers software developers to build integrations for their customers. App Xchange and Trimble Marketplace are key parts of our commitment to facilitating open, interoperable systems and an automated flow of data between solutions from Trimble and other software vendors.

Compliance with accepted standards is another area of responsibility for tech providers. Trimble software and platforms enable the import and export of a wide range of product-specific and global file formats, including IFC. In addition to our strategic advisory role with BuildingSmart, Trimble also joined the Alliance for OpenUSD (AOUSD), which is dedicated to promoting the interoperability of 3D content through Universal Scene Description (OpenUSD), helping improve interoperability between SketchUp and other platforms, such as Nvidia Omniverse Cloud, for more advanced visualisation.

In the grand scheme of things, no single tech vendor can – or probably should – provide it all. As a result, we’re seeing a broader industry shift away from inflexible, closed technology suites to more open and connected technology ecosystems.

In the field, models can be accessed in Trimble Connect and, with an augmented reality system such as Trimble Sitevision, workers can place and view georeferenced 3D models above or below the ground to accurately install or validate field assembly.

Interoperability will unlock emerging tech

We’ve already begun to see the value of AI in AEC. Trimble and other vendors are incorporating AI into customer workflows to enhance decision-making and creativity, and to automate repetitive tasks. From automated scan-to-3D workflows to generative design solutions like SketchUp Diffusion, which produces stylised renderings in seconds based on presets or natural language text prompts, AI is a catalyst for creativity and an engine for productivity.

Like BIM, unlocking transformative value from the next wave of AI will depend on interoperability and high-quality data. With more data moving between systems and across disciplines, we can explore advanced forms of AI, such as AI agents. Unlike generative AI, an AI agent works independently, with little to no human interaction, to perform a specific task. AI agents will reflect and iterate, plan ahead by defining priorities and tasks, and access tools and real-time data, such as information provided by in-field sensors.

Numerous agents across disciplines may use the same project data to perform their unique tasks. For example, various agents could use scan data to assist with separate tasks related to estimating, project management, and scheduling. To use this data across different project phases and disciplines, AI agents must be able to access and understand the data, regardless of which system it originates from.

As the ecosystem of AEC hardware and software expands and becomes more complex, interoperability will be the key to unlocking the value of data, extending the value of BIM and maximising emerging technologies.

Design transformed: 2025 predictions from Vectorworks

With 2025 in full swing, the AEC industry is at the forefront of a technological revolution, driven by rapid advancements in artificial intelligence (AI), immersive visualisation tools, and a commitment to sustainable design. These innovations are reshaping the tools architects and designers use and influencing how they think about productivity, creativity, and environmental stewardship. Below, I’ll share some insights into the key trends that are set to redefine the industry and recommendations for design leaders for the year ahead.

Artificial Intelligence: a creative ally

AI continues to evolve as a pivotal tool for the AEC industry. It is not a replacement for creative professionals but a powerful assistant that enables them to focus more on design and creativity. In recent years, AI has proven its potential to handle time-consuming tasks such as automating project schedules, optimising workflows, and generating accurate documentation. This allows architects and designers to direct their energy toward conceptual development and problem-solving.

In 2025, AI is expected to go beyond visualisation and become deeply integrated into the design process. Generative design tools powered by AI will enable professionals to explore innovative forms and solutions that were once unimaginable. These tools will enhance creativity and streamline processes, making it easier to meet tight deadlines and client expectations.

BIM is becoming standard practice for firms of all sizes, transforming how projects are planned, coordinated, and executed. According to the AIA Firm Survey Report 2024, BIM adoption has surged post-pandemic, with mid-sized and large firms leading the way. However, smaller firms are steadily recognising its benefits, particularly in improving workflows and addressing specific project challenges.

In 2025, BIM will continue to be instrumental in achieving sustainability goals. Tools embedded in BIM software now allow designers to conduct energy efficiency analyses and carbon footprint assessments early in the design phase. This capability is crucial as the industry works toward net-zero emission targets for the building sector by 2040. Software providers are refining BIM features to prioritise real-time coordination and seamless documentation. The emphasis on usability ensures that BIM tools are accessible, enabling architects to design smarter and more sustainably. Looking ahead, I anticipate a rise in BIM-driven projects that meet high-performance standards and redefine collaboration among multidisciplinary teams.

Client expectations for project visualisation have shifted dramatically. While traditional blueprints and 2D documentation remain relevant, immersive technologies such as AR and VR are becoming essential for client engagement. These tools offer an unprecedented level of interactivity, allowing clients to walk through their designs and provide informed feedback virtually.

In 2025, I predict a significant expansion of immersive tools tailored to client collaboration. Many platforms let teams share interactive 3D models, panoramic images, and project files more efficiently in real time. These tools not only “wow” clients but also foster better decision-making and collaboration. As immersive technologies become more mainstream, they will redefine how architects and clients work together, creating a more transparent and engaging design process.

The urgency of climate change demands a radical shift in how architects and designers approach their work. According to the World Meteorological Organisation’s 2024 State of Climate Services report, the past decade has been the warmest on record, highlighting the need for immediate action. Sustainability has transitioned from being a priority to an industry imperative.

Innovative approaches like wood construction and adaptive reuse are gaining traction as effective ways to reduce a building’s environmental impact. Technologies such as cross-laminated timber (CLT) offer a sustainable alternative to concrete, while adaptive reuse preserves existing structures, conserving resources and minimising waste.

Tools like the Vectorworks Embodied Carbon Calculator enable designers to measure and reduce the carbon footprint of their projects. By integrating data-driven decision-making into the design process, architects can make more sustainable material choices and meet stringent climate goals. Looking ahead, I anticipate the introduction of new metrics and sustainability dashboards that will allow designers to visualise the environmental impact of their decisions in real time, further solidifying sustainability as a core tenet of architectural practice.

The technological advancements outlined above are not just reshaping tools but also redefining roles within the AEC industry. Architects and designers are increasingly becoming collaborators, facilitators, and problem solvers tasked with balancing creativity, functionality, and sustainability. This shift requires a holistic approach to design that considers the needs of clients, the environment, and future generations.

For firms, this means investing in continuous learning and upskilling. Professionals must stay abreast of new technologies and methodologies to remain competitive. As the industry evolves, I foresee a growing emphasis on interdisciplinary collaboration, with architects, engineers, software developers, and sustainability experts working together to create innovative solutions.

To prepare for the future, design leaders should prioritise evolving their tech stack, optimising workflows, and cultivating staff skills to meet emerging industry challenges. Investing in interoperability and cloud-based collaboration ensures seamless data exchange and resilience, while integrating AI and machine learning can automate repetitive tasks and enhance design optimisation. Embracing sustainability tools to track energy efficiency and carbon footprints will align with growing client and regulatory demands.

Workflows should be streamlined for agility by adopting unified processes, enabling large-scale design iteration, and leveraging technologies like digital twins and data-driven design tools to refine outcomes efficiently.

Simultaneously, fostering staff expertise will be critical. Cross-disciplinary knowledge in areas like engineering and environmental science can enhance collaboration, while training in emerging technologies such as AR/VR, BIM, and AI will ensure teams remain competitive. Soft skills like adaptability, communication, and leadership development are equally important as teams grow more diverse. Cultivating sustainability expertise and preparing for leadership transitions will further position firms for long-term success.

Finally, staying ahead of industry trends is essential. Monitoring evolving regulations, leveraging predictive analytics, and understanding client expectations can inform strategic decision-making. These strategies collectively enable leadership to produce and deliver innovative, impactful designs that address both present needs and future possibilities, and ensure your firm remains agile in a dynamic market.

Embracing innovation to shape tomorrow

The AEC industry stands at a transformative juncture, with developing technologies and sustainability goals paving the way for a brighter future. By embracing these trends, architects and designers can push the boundaries of what’s possible, creating innovative, functional, and environmentally responsible spaces.

To see how we’re keeping pace with industry demands, visit our public roadmap (www.vectorworks.net/public-roadmap), where we share insights into the innovations we’re prioritising. As we progress into 2025, I am excited to witness how these advancements will shape the industry and the built environment. The journey ahead promises challenges but also immense potential for creativity, collaboration, and meaningful impact. Together, we can redefine the future of design, leaving a lasting legacy for generations.

Twinview aggregates information from multiple sources, so you can access all your data from a single platform to create a holistic view of your building.

Visualise static and dynamic building data on customisable dashboards allowing real-time analysis and optimisation of building performance.

Streamline your facility management processes, reduce downtime, implement predictive maintenance and improve resource allocation.

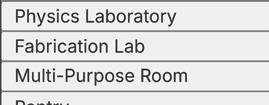

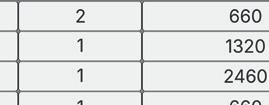

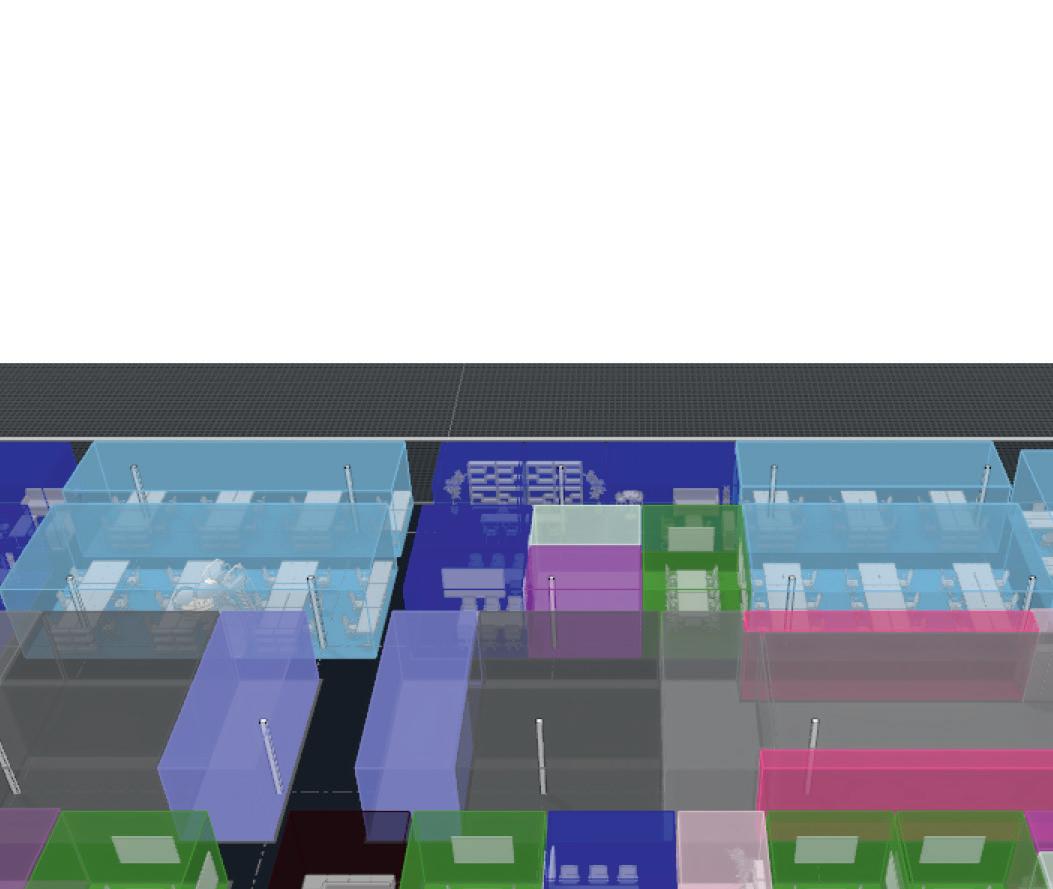

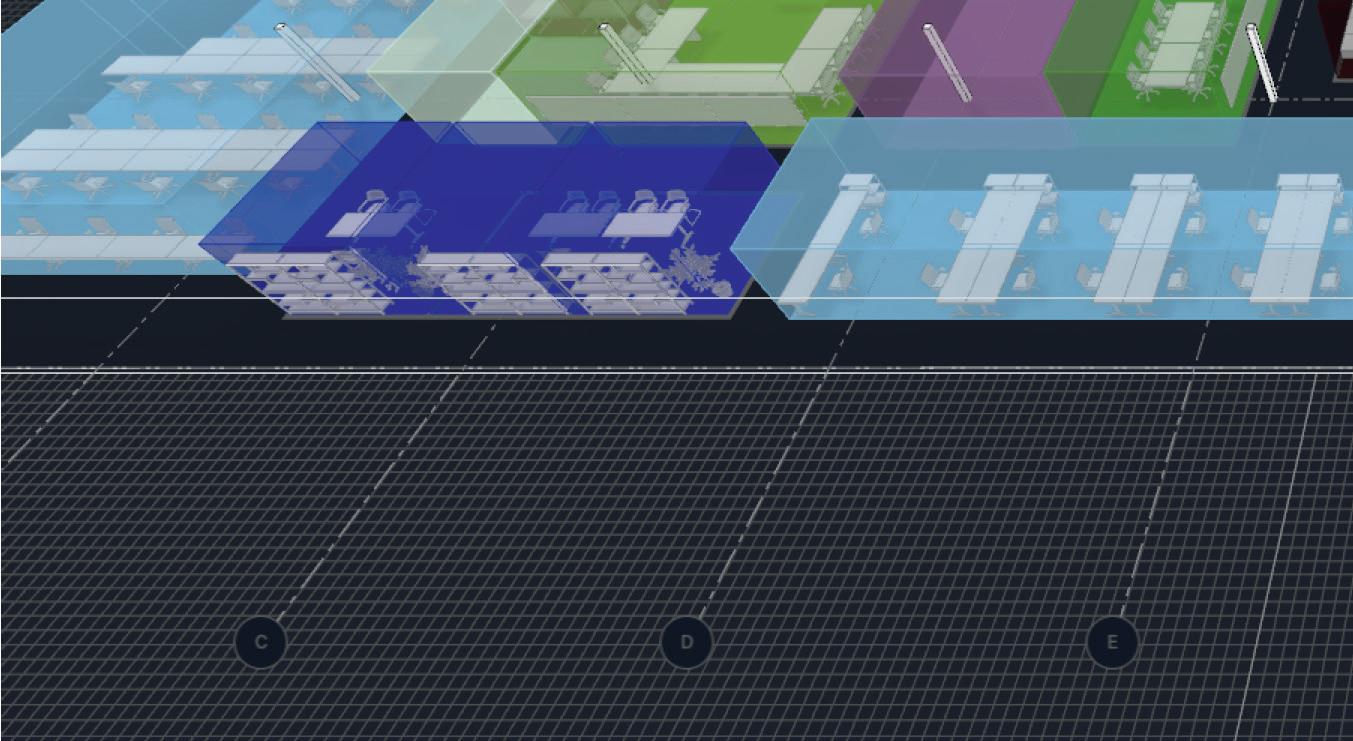

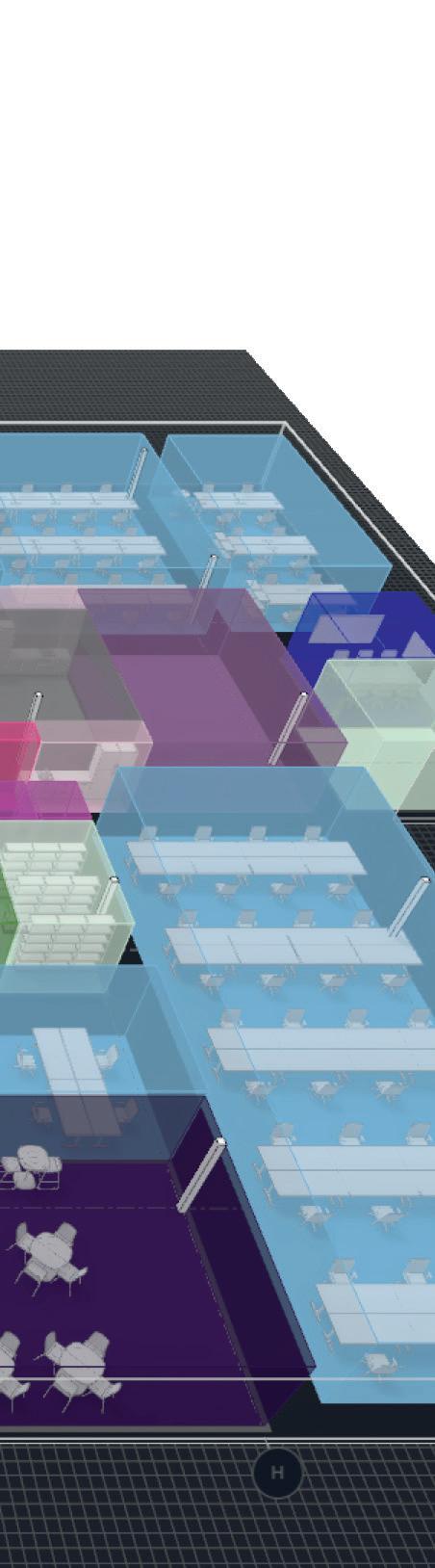

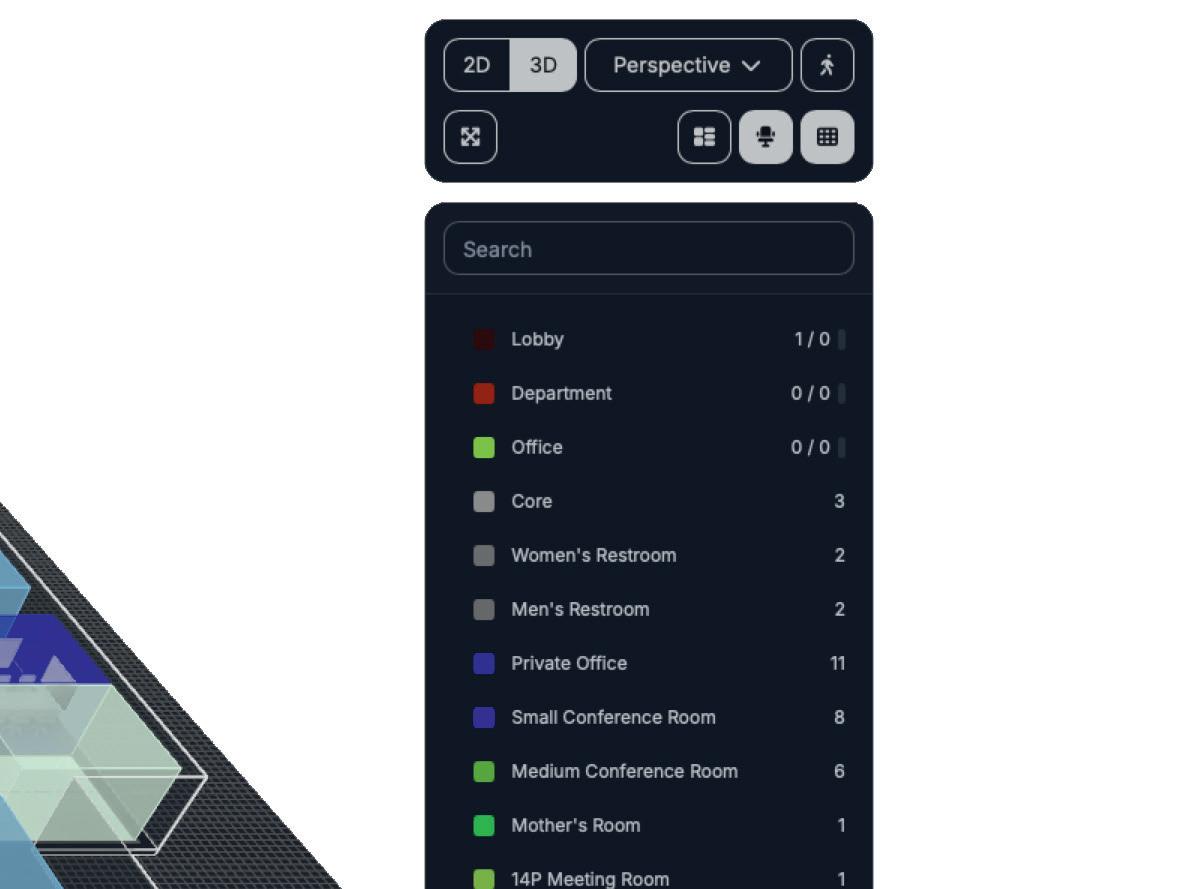

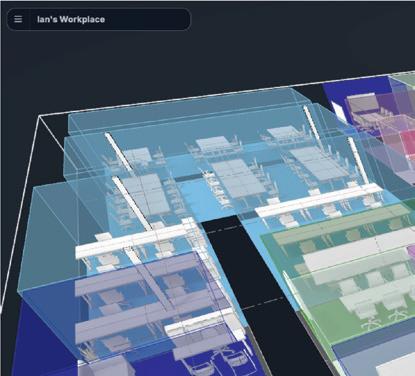

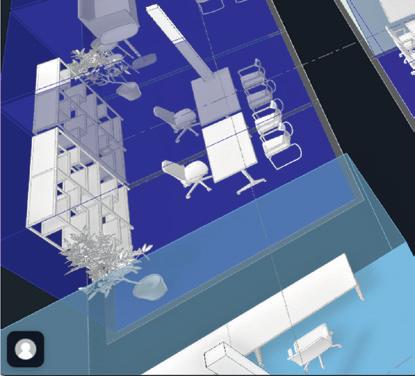

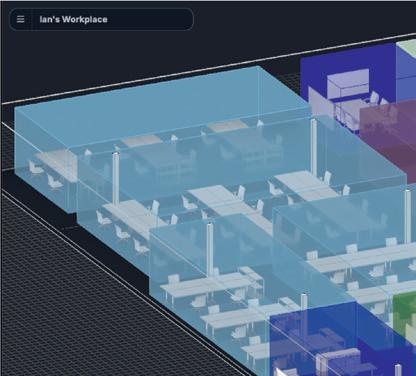

Towards the end of 2024, software developer Hypar released a whole new take on its cloud-based design tool, focused on space planning and with a cool new web interface. Martyn Day spoke with Hypar co-founder Ian Keough to get the inside track on this apparent pivot

Founded in 2018 by Anthony Hauck and Ian Keough, Hypar has certainly been on a journey in terms of its public-facing aims and capabilities.

Both co-founders are well-established figures in the software field. Hauck previously led Revit’s product development and pioneered Autodesk’s generative design initiatives. Keough, meanwhile, is widely recognised as the creator of Dynamo, a visual programming platform for Revit.

Initially, their creation Hypar looked very much like a single, large sandpit for generative designers familiar with scripting, enabling them to create system-level design applications, as well as for non-programmers looking to rapidly generate layouts, duct routing and design variations and get feedback on key metrics, which could then be exported to Revit.

Back in 2023, we were blown away by Hypar’s integration of ChatGPT at the front end (www.tinyurl.com/AEC-Hypar). This aimed to give users the ability to rapidly generate conceptual buildings and then progress on to fabrication-level models. This capability was subsequently demonstrated in tandem with DPR Construction.

One year later and the company’s front end has changed yet again. With a whole new interface and a range of capabilities specifically focused on space planning and layout, it feels as if Hypar has made a big pivot. What was once the realm of scripters now looks very much like a cloud planning tool that could be used by anyone.

AEC Magazine’s Martyn Day caught up with the always insightful Ian Keough to discuss Hypar’s development and better understand what seems like a change in direction at the company, as well as to get his more general views on AEC development trends.

Martyn Day: Developers such as Arcol, Snaptrude and Qonic are all aiming firmly at Revit, albeit coming at the market from different directions and picking their own entry points in the workflow to add value, while supporting RVT. Since Revit is so broad, it seems clear that it will take years before any of these newer products are feature-comparable with Revit, and all these companies have different takes on how to get there. With that in mind, how do you define a next-generation design tool and what is Hypar’s strategy in this regard?

Ian Keough: At Hypar, we’ve been thinking about this problem for five or six years from a fundamentally different place. Our very first pitch deck for Hypar showed images from work done in the 1960s at MIT, when they were starting to imagine what computers would be used for in design. They weren’t imagining that computers would be used for drafting, of course. Ivan Sutherland had already done that years before and we have all seen those images.

What they were imagining is that computers would be used to design buildings, and they were making punch card programmes to lay out hospitals and stuff like that. To me, that’s a very pro-future kind of vision. It imagined that computing capacity would grow to a point where the computer would become a partner in the process of design, as opposed to a slightly better version of the drafting board.

However, when it eventually happened, AutoCAD was released in the 1980s and instead we took the other fork of history. The result of taking that other fork has been interesting. If you look at this from a historic perspective, computers did what they did and they got massively more powerful over the years. But the small layer on top of that was all of our CAD software, which used very little of that available computing power. In a real sense, it used the local CPU, but not the computing power of all the data centres around the world which have come online. We were not leveraging that compute power to help us design more efficiently, more quickly, more correctly. We were just complaining that we couldn’t visualise giant models, and that’s still a thing that people talk about.

That’s still a big problem for people’s workloads. I don’t want to dismiss it. If you’re building an airport, you have got to load it, federate all of these models and be able to visualise it. I get that problem. But the larger problem is that, to get to that giant model that you’re complaining about, there are many, many years of labour, of people building in sticks-and-bricks models. How many airports have we designed in the history of human civilisation?

So, thinking about the fork we face – and I think we’re experiencing a ‘come to Jesus’ moment here – people are now seeing AI. As a result, they’re getting equal parts hopeful that it will suddenly, at a snap of the fingers, remove all the toil that they’re experiencing in building these bigger and bigger and more complicated models, and equal parts afraid that it will embody all the expertise that is in their heads, and will leave them out of a job!

Martyn Day: I can envisage a time where AI can design a building in detail, but I can’t see it happening in our lifetime. What are your thoughts?

Ian Keough: I don’t think that’s the goal. I don’t think that’s the goal of anybody out there – even the people who I think have the most interesting and compelling ideas around AI and architecture. But I do think there are a lot of people who have very uninteresting ideas around AI in architecture, and those involve things like using AI to generate renderings and stuff like that. It’s nifty to look at, but it’s so low value in terms of the larger story of what all this computing power could do for us.

The new Hypar 2.0, which was released in September 2024, and more specifically, the layout suggestions capability (www.tinyurl.com/layout-suggest), was our first nod towards AI-infused capabilities. The platform is all about seeing if we can make a design tool that’s a design tool first and foremost.

At AEC Magazine, you’ve already written about experiments that we’ve conducted in terms of designing through our chat prompt/text-to-BIM capability. So, we took the summation of the five years of work that we have done on Hypar as a platform, the compute infrastructure and, when LLMs came along, Andrew Heumann on our team suggested it would be cool if we could see if we could map human natural language down into input parameters for our generative system.

We did that. We put it out there. And everybody got really, really excited. But we quickly realised the limitations of that system. It’s very, very hard to design anything real through a chat prompt. It’s one thing to generate an image of a building. It’s another thing to generate a building.

You’ll see in the history of Hypar that the creation of this new version of the product directly follows the ‘text-to-BIM thing’, because what the ‘text-to-BIM thing’ showed us is that we have this very powerful platform.

The problem with AI-generated rendering is you get what you get, and you can’t really change it, except for changing that prompt, and you’re totally out of control. What designers want is control. They want to be able to move quickly and to be able to control the design and understand the design’s input parameters. Hypar 2.0 is really about that. It’s about how you create a design tool and then lift all of this compute and seamlessly integrate it with the design experience, so that computation is not some other experience on top of your model.
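Keough doesn’t describe how Hypar’s text-to-BIM prototype was built, so what follows is only a minimal sketch of the general idea he attributes to Heumann: a language model proposes values for a generative system’s input parameters, and deterministic code validates them before anything is generated. The schema, the parameter names, the toy `generate_massing` function and the JSON reply (a stand-in for real model output) are all hypothetical, and no actual LLM is called.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Param:
    """One input of a (hypothetical) generative function, with its valid range."""
    name: str
    kind: type
    lo: float
    hi: float


# Hypothetical schema for a toy massing generator; not Hypar's actual inputs.
SCHEMA = [
    Param("floors", int, 1, 60),
    Param("floor_to_floor_m", float, 2.8, 6.0),
    Param("footprint_width_m", float, 10.0, 200.0),
    Param("footprint_depth_m", float, 10.0, 200.0),
]


def parse_parameters(llm_reply: str) -> dict:
    """Turn a language model's JSON reply into validated generator inputs.

    The model is only trusted to propose values: each one is coerced to the
    declared type and clamped to its range, and missing inputs are rejected.
    """
    raw = json.loads(llm_reply)
    params = {}
    for p in SCHEMA:
        if p.name not in raw:
            raise ValueError(f"missing parameter: {p.name}")
        value = p.kind(raw[p.name])
        params[p.name] = min(max(value, p.kind(p.lo)), p.kind(p.hi))
    return params


def generate_massing(params: dict) -> dict:
    """Toy deterministic generator: derive totals from the parameters."""
    footprint = params["footprint_width_m"] * params["footprint_depth_m"]
    return {
        "gross_floor_area_m2": footprint * params["floors"],
        "height_m": params["floors"] * params["floor_to_floor_m"],
    }


# Stand-in for what a model might return for a prompt such as
# "an eight-storey block, 40 m by 25 m, 3.5 m floor to floor".
reply = ('{"floors": 8, "floor_to_floor_m": 3.5, '
         '"footprint_width_m": 40, "footprint_depth_m": 25}')
massing = generate_massing(parse_parameters(reply))
print(massing)  # {'gross_floor_area_m2': 8000.0, 'height_m': 28.0}
```

Confining the model to filling in a typed, range-checked schema is one way to keep the control Keough says designers want: every value the prompt produced is visible and can be edited directly, rather than regenerated by rewording the prompt.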

Martyn Day: Historically, we have been used to seeing Hypar perform rapid conceptual modelling through scripting, generate building systems and be capable of multiple levels of detail to quickly model and then swap out to scale fidelity. The whole Hypar experience, looking at the website now, seems to be about space planning. Would you agree?

Ian Keough: That’s the head-scratcher for a lot of people when it comes to this new version. People who have seen me present on the work we did with DPR and other firms to make these incredibly detailed and sophisticated building systems are saying, “Wait, you’re a space planning software now?”

That may seem like a little bit of a left turn. But the mission continues to enable anyone to build really richly detailed models from simple primitives without extra effort. We do this in the same way that we could take a low-resolution Revit wall and turn it into a fully clad DPR drywall layout, including all the fabrication instructions and the robotic layout instructions that go on the floor, and everything else. That capability still lives in Hypar, underneath the new interface. What we are doing is getting back to software that solves real problems, again. This is a very gross simplification of what’s going on, but what problem does Revit actually solve? The answer is drawings, documentation. That’s the problem that Revit solves today and has solved since the beginning. What it does not solve is the problem of how to turn an Excel spreadsheet that represents a financial model into the plan for a hospital. It does not solve that at all. That is solved by human labour and human intellect. And right now, it’s solved in a very haphazard way, because the software doesn’t help you. It doesn’t offer you any affordances to help you do that. Everybody is largely either doing this as cockamamie-crazy, nested-family Lego blocks and jelly cubes in Revit, or trying to do it as just a bunch of coloured polygons in Bluebeam. That’s not how we’re utilising compute.

At the end of a design tool, it is still the architect’s experience and intellect that creates a building. What the design tool should do is remove all of the toil.

To give you an example of this, now that we’ve reached a point where users can use our software in a certain production context, to create these larger space plans, they’re starting to ask for the next layer of capabilities such as clearances as a semantic concept. This is the idea that, if I’m sitting at this desk, there should be a clearance in front of this desk, so that people have enough room to walk by. Sometimes, clearances are driven by code – so why has no piece of architectural design software in the last 20 years had a semantic notion of a clearance that you could either set specifically or derive from code? You might be able to write a checker in Solibri in the post-design phase, but what about the designer at the point of creating the model?

Clearances are just one example. There are plenty of others, but the other impetus for a lot of what we’re doing right now is the fact that organisations like HOK have a vast storehouse of encoded design knowledge, in the form of all of the work that they’ve done in the past. Often, they cannot reuse this knowledge, except by way of hiring architects and transmitting this expertise from one person to the next, in a form that we have used for thousands of years – by storytelling, right? What firms want is a way to capture that knowledge in the form of spaces, specific spaces, and all the stuff that’s in a space and the reasons for that stuff being there. And then they just want to transfer that knowledge from one project to another, whether it’s a healthcare project or any other kind of project that they’ve carried out before.

At the beginning of defining the next version of Hypar, when we started talking with architects about this problem, I was amazed by the cleverness of the architects. They’re actually finding solutions to do this with the software they have now. They build these giant, elaborate Revit models with hundreds of standard room types in them, and then they have people open those Revit models and copy and paste out stuff from the library.

I had one guy who referred to his model as ‘the Dewey Decimal System’. He had grids in Revit numbered in the Dewey Decimal System manner, such that he could insert new standards into this crazy grid system. And he referred to them by their grid locations.

In other words, architects have overcome the limitations that we’ve put in place in terms of software. But why isn’t it possible in Revit to select a room and save it as a standard, so the next time I put in a room tag that says ‘exam room’, such as a paediatric exam room, it just infills it with what I’ve done for the last ten projects?

To get back to your question about what the next generation looks like, I guess the simplest way to explain how we’re approaching it is that we’re picking a problem to solve that’s at the heart of designing buildings. It’s at the moment of creation, literally, of a building. We want to solve that problem and use software as a way to accelerate the designer, rather than a way to demonstrate that we can visualise larger models. That will come in time, but really, we want to use this vast computational resource that we have to undergird this sort of design, and make a great, snappy, fun design tool.

Martyn Day: Old BIM systems are one-way streets. They are about building a detailed model to produce drawings. But you have gone on record talking about tasks that need different levels of abstraction and multiple levels of scale, depending on the task. Can you explain how this functions in Hypar?

Ian Keough: You’ll notice in the new version of Hypar that there’s something called ‘bubble mode’. It’s a diagram mode for drawing spaces, but you’re drawing them in this kind of diagrammatic, ‘bubbly’ way.

That was an insight that we gleaned from spending literally hundreds of hours watching architects at the very early stage of designing buildings. They would use that way of communicating when they were doing departmental layout or whatever. They were hacking tools like Miro and other things, where they were having these conversations to do this stuff. But it was never at scale.

We were already thinking of this idea of being able to move them from a low level of detail to a high level of detail without extra effort by means of leveraging compute. Now, in Hypar, and I’ll admit the bits are not totally connected yet in this idea, you’ll notice that people will start planning in this bubble mode, and then they’ll have conversations around bubble mode, at that level of detail.

Meanwhile, the software is already working behind the scenes, creating a network of rooms for them. And then they’ll perform the next step and use this clever stuff to intelligently lay out those rooms, the contents in the rooms. The next level of detail past that will be connectors to other building systems, so let’s generate the building system. There’s this continuous thread that follows levels of detail from diagram to space – to spaces with equipment and furniture and to building systems.
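Keough’s earlier point about clearances as a semantic concept, checked at the moment of modelling rather than by a post-design checker, can be illustrated with a small sketch. Everything in it is hypothetical: the `Rect`, `Item` and `Layout` names, the assumption that an item’s clear zone lies on its +y side, and the default depths in `DEFAULT_CLEARANCE_M`, which are placeholders rather than values from any building code. It is not a description of how Hypar or Solibri handle clearances.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in plan (x, y = lower-left corner), in metres."""
    x: float
    y: float
    w: float
    h: float

    def overlaps(self, other: "Rect") -> bool:
        # Strict inequalities: rectangles that only share an edge do not overlap.
        return (self.x < other.x + other.w and other.x < self.x + self.w
                and self.y < other.y + other.h and other.y < self.y + self.h)


# Hypothetical default clearance depths (m) per item type. A firm could set
# these directly or derive them from an applicable code; these numbers are
# placeholders, not taken from any real standard.
DEFAULT_CLEARANCE_M = {"desk": 0.9, "exam_table": 1.0}


@dataclass
class Item:
    name: str
    kind: str
    footprint: Rect
    clearance_m: Optional[float] = None  # explicit override, else the default

    def clearance_zone(self) -> Optional[Rect]:
        """Clear zone in front of the item, assumed here to be its +y side."""
        depth = (self.clearance_m if self.clearance_m is not None
                 else DEFAULT_CLEARANCE_M.get(self.kind, 0.0))
        if depth <= 0:
            return None  # item types without a clearance get no zone
        f = self.footprint
        return Rect(f.x, f.y + f.h, f.w, depth)


class Layout:
    """Checks clearances as each item is placed, not in a later audit pass."""

    def __init__(self) -> None:
        self.items: list[Item] = []

    def place(self, new: Item) -> list[tuple[str, str]]:
        """Add an item and return (clearance owner, blocking item) pairs."""
        conflicts = []
        new_zone = new.clearance_zone()
        for old in self.items:
            # The new item must not sit in an existing item's clear zone...
            old_zone = old.clearance_zone()
            if old_zone is not None and old_zone.overlaps(new.footprint):
                conflicts.append((old.name, new.name))
            # ...and its own clear zone must not be blocked by existing items.
            if new_zone is not None and new_zone.overlaps(old.footprint):
                conflicts.append((new.name, old.name))
        self.items.append(new)  # placed anyway; conflicts go back to the designer
        return conflicts


layout = Layout()
print(layout.place(Item("desk-1", "desk", Rect(0.0, 0.0, 1.2, 0.6))))  # []
print(layout.place(Item("cabinet-1", "cabinet", Rect(0.0, 1.0, 1.0, 0.5))))  # [('desk-1', 'cabinet-1')]
print(layout.place(Item("cabinet-2", "cabinet", Rect(0.0, 2.0, 1.0, 0.5))))  # []
```

The `clearance_m` override and the per-type defaults mirror the two options Keough mentions, a clearance you “set specifically or derive from code”; reporting rather than blocking the placement is a design choice that leaves the final call with the designer.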

Martyn Day: We have seen Hypar focus on conceptual work, space planning, fabrication-level modelling. Is the goal here to try and tackle every design phase?