THE FUTURE SPECIAL REPORT

Anybody working in design technology can sympathise with the field’s simultaneously fast and slow pace. On the one hand, we see topics such as AI and digital twins presenting new possibilities. On the other hand, we have our day-to-day tools. Whilst object-oriented parametric modelling may have been an innovation a decade ago, the general AEC software estate has remained relatively stagnant in terms of efficiency and innovation. When considering this in the broader context of our industry’s challenges – our need to be more climate-responsible, safety-conscious, and profitable against increasingly shrinking margins – something has to change. This field of ours has a unique role to play in sidestepping commercial competition and driving for change. This is how the Future AEC Software Specification came about and the spirit upon which it was developed. While architects, engineers and constructors directly compete, the design technology leadership of these firms have developed a culture of open collaboration, sharing their experiences with peers for bidirectional benefits and enabling the broader industry. These groups had instigated various initiatives, including direct feedback to vendors, feature working groups, industry group discussions, etc.

At the height of the pandemic, many found the opportunity to step back and evaluate the tools they use to deliver against the increasing investments they’re making. A consensus of views about the value proposition resulted in the development of an Open Letter to Autodesk (www.tinyurl.com/AEC-openletter).

Contributors to the Open Letter, many putting their name to it, others wishing to remain anonymous, hoped that it would encourage further development in the tools we use to design and deliver projects. In the years since, we have received engagement from further national and international communities. As a group, we have reconvened to reflect on whether the original objective was achieved. A limiting barrier to that objective was its direction towards a single vendor, not emerging startups and broader vendors that could develop faster and quickly embrace modern technology’s benefits (cloud / GPU / AI, etc.). With that in mind, we pivoted from a reactive, single-vendor dialogue to a more proactive open call to the software industry.

We aimed to set out an open-source specification for future design tools that facilitates good design and construction by enabling creative practice and supporting the production of construction-ready data. The specification envisages an ecosystem of tools that are best in class at what they do, overlaid on a unifying “data framework” to enable efficient collaboration with design, construction, and supply chain partners. Once drafted, the specification was presented and agreed upon as applicable by both national and international peers, with overwhelming support that it tackled the full breadth of our challenges and aspirations. This specification has coalesced around ten key features of what the future of design tools needs:

• A universal data framework that all AEC software platforms can read from and write to, allowing a more transparent and efficient exchange of data between platforms and parties.

• Access to live data, geometry, and documentation beyond the restrictions of current desktop-based practices.

• Design and evaluate decisions in real time, at any scale.

• User-friendly, efficient, versatile and intuitive.

• Efficient modelling with increasing accuracy, flexibility, detail and intelligence.

• Enables responsible design.

• Enables DfMA and MMC approaches.

• Facilitates automation and the ability to leverage AI at scale and responsibly as and when possible.

• Aids efficient and intelligent deliverables.

• Better value is achieved through improved access, data, and licensing models.

Since it was launched and presented at NXT DEV in June 2023 (www.tinyurl.com/nxtdev-23), there has been a vast range of engagements by additional interested design, engineering and construction user groups, resulting in expansions and tweaks to the originally written spec.

• buildingSMART has confirmed that IFC5 is in active development, sharing the structure and end goals presented within the specification’s chapter: Data Framework (cloud-centric) schema for vendor-neutral information collaboration as a transaction.

• The group has engaged with software developers, startups and venture capitalists to better discuss the specification and how it relates to their current tools, roadmaps, acquisitions and investments.

• Some of the major software houses have opened their doors and invited specification authors to sit on advisory boards, attend previews and advise on aligning with the specification.

• The specification, structured around its ten core tenets, is being trialled as a means of assessing software offerings as part of an AEC software marketplace.

• Internationally, the specification has proven influential in significant research projects such as Arch_Manu in Australia, providing base reading material and a guiding framework for a programme supporting five PhD candidates over the next five years.

• Leaning into the intention that the Data Framework should be industry-led, we are actively exploring opportunities for how this can be structured and move forward meaningfully.

• We are connecting with like-minded parties in the US, Europe, Asia, and Australia, who are undertaking their own developments and experiments using a common approach to data. We believe the specification can be a rallying point for all of these initiatives to work together rather than apart.

In this AEC Magazine Special Report we explore the tenets of the Future AEC Software Specification.

■ www.future-aec-software-specification.com

The premise is simple: every time we import and export between software using proprietary file formats and structures, it is lose-lose. We’re losing geometry, data, time, energy, sanity.

In AEC, some design firms have just accepted the path of least resistance and avoid file exchanges as much as possible by accepting a single tool to do a little bit of everything in an average way. A few of the larger firms use whatever the best tools for the job are. However, they are paying for it by building custom workflows, buying talent, and building conversion software. But that doesn’t change the fundamental problem. The data is still ‘locked’ and only readable to a proprietary file type.

So, what would the solution look like? Let’s make an extremely simplified comparison to Universal Scene Description (USD). In the media and entertainment world, Disney Pixar, as the holder of the largest market share, said enough is enough: we’re losing too much time translating geometry, materials and data through different tools and software file types. So they created USD and made it open source. Like AEC, media and entertainment needs to develop 3D models in flexible modelling software, calculate and build lighting models, spaces, realistic materials, movement, and even extra effects like fur, water and fire. Each has a specific modeller that is best in class at that specific output.

With USD, a common data structure sits outside of the software product. Each software vendor was ‘forced’ to adopt and read USD data layers, variants and classes, and to read from and write back to the USD. No data loss is associated with importing or exporting because there is no transformation or translation. There were tons of additional benefits too, such as faster opening times (because you’re not actually opening all the data), which meant sending a job to the render farm was almost instant and didn’t require massive packaging of model files. Collaboration was massively improved, with sublayers ‘checked out’ by relevant departments or people all working concurrently. Its standardisation also created a level playing field for developers, who no longer had to build many versions of their tools as plugins for different modellers. If it can work with USD, it can work with everyone.

So, does all this sound fantastic and somewhat transferable? Where could this be adapted or improved for AEC? There’s a much longer version as to why USD isn’t adaptable for AEC, but the reality is that USD is technically still a desktop file format, with variants like .usda, .usdz or .usdc. It’s the same answer for IFC, which is a mature and relevant dictionary/library of industry-specific terms and categories, but still an offline file format.

When we think of our Future AEC software specification, it sets out that data needs to move outside of formats tied to desktop software products, the complete opposite of where we are today.

The proposal?

Within the tools we use (whichever is the best tool for the problem), the software reads and writes to a cloud-enabled data framework to get the information required.

That could be at one end of the scale, the primary architect or designer needing absolutely everything, heavily modelling on a desktop device, or at the other end, a stakeholder who only needs access to a single entity or component, not the entire file (see part 2).

For absolute clarity, we have cloud-based file hosting systems today, but these don’t expose data at a granular parameter level for each entity and component. Working outside file formats enables a more diverse audience of stakeholders to access, author and modify parameters concurrently, only interacting with the data they need, not a whole model. Collaborators can benefit from robust git-style co-authoring and commits of information with full permissions, audit trail and acceptance process.
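
As a rough illustration of what granular, entity-level access could look like, the Python sketch below models a project as entities with named components rather than as a file. Every name in it (`DataFramework`, `set_component`, `get_component`, the entity ID and the parameter names) is hypothetical and chosen for illustration only; it is not part of the specification or of any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class DataFramework:
    """Hypothetical in-memory stand-in for a cloud-hosted data framework."""

    # entity id -> component name -> parameter values
    entities: Dict[str, Dict[str, Dict[str, Any]]] = field(default_factory=dict)

    def set_component(self, entity_id: str, component: str, **params: Any) -> None:
        """Create or update one component on one entity."""
        self.entities.setdefault(entity_id, {}).setdefault(component, {}).update(params)

    def get_component(self, entity_id: str, component: str) -> Dict[str, Any]:
        """Return a copy of a single component, without touching anything else."""
        return dict(self.entities[entity_id][component])


framework = DataFramework()
framework.set_component("door-001", "geometry", width_mm=900, height_mm=2100)
framework.set_component("door-001", "fire", rating_minutes=30)

# A fire engineer only needs the fire component of one door, not the whole model.
fire_data = framework.get_component("door-001", "fire")
print(fire_data)
```

The point of the sketch is the shape of the access pattern: a stakeholder asks for one component of one entity and never has to open, download or translate a complete model file.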

Moving away from file formats and having the centralised data framework enables local data to be committed to the centralised web data, allowing an entire supply chain to access different aspects of the whole project without losing geometry, data or time associated with file type translations.

As an architect, when I have finished a package of information, it is set for acceptance by another party, who then continues its authorship. This is game-changing and can hugely influence decision-making efficiency in construction projects. A granular Data Framework enables engagement, collaboration of data, diversification of stakeholders, and hybrid engagements from a much broader range of people with access to different technologies. It creates an equitable engagement for an audience not limited by expensive, elitist, complicated tools with high barriers of entry.
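
The hand-over described here, where one party commits information and another accepts it before it becomes part of the shared data, can be sketched in a few lines of Python. This is a minimal illustration under assumed names (`Commit`, `SharedModel`, `propose`, `review` are all hypothetical), not a description of how any existing platform implements it.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Commit:
    """A proposed change to one entity, waiting for acceptance."""
    author: str
    entity_id: str
    changes: Dict[str, Any]
    status: str = "pending"  # becomes "accepted" or "rejected"


@dataclass
class SharedModel:
    """Hypothetical central data set with an audit trail of commits."""
    data: Dict[str, Dict[str, Any]] = field(default_factory=dict)
    log: List[Commit] = field(default_factory=list)

    def propose(self, author: str, entity_id: str, **changes: Any) -> Commit:
        """Record a change without applying it yet."""
        commit = Commit(author, entity_id, changes)
        self.log.append(commit)
        return commit

    def review(self, commit: Commit, reviewer: str, accept: bool) -> None:
        """Apply the change only once another party accepts it."""
        if reviewer == commit.author:
            raise ValueError("a commit must be reviewed by another party")
        if commit.status != "pending":
            raise ValueError("commit has already been reviewed")
        if accept:
            self.data.setdefault(commit.entity_id, {}).update(commit.changes)
            commit.status = "accepted"
        else:
            commit.status = "rejected"


model = SharedModel()
change = model.propose("architect", "wall-12", fire_rating_minutes=60)
model.review(change, reviewer="contractor", accept=True)
print(model.data["wall-12"], [c.status for c in model.log])
```

Because every proposal stays in the log whether it is accepted or rejected, the same structure doubles as the audit trail.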


Today, our tools connect through intensive prep, cleaning, exporting, and importing, even within tools made by the same vendor.

Some design firms avoid file exchanges as much as possible by accepting a single tool.

Larger or specialist firms use whatever is the best tool for the job, but they are paying for it by building custom workflows and conversion software.

In any event, vendor-locked proprietary formats, and the collective energy our industry wastes tackling them, must change.

What’s needed?

• Entity component system, not proprietary file formats.

• Git-style collaboration and commits of local data to the cloud data framework.

• Shared ownership of authoring – transactional acceptance between stakeholders.

• An industry alignment of Greg Schleusner’s work with StrangeMatter (www.tinyurl.com/magnetar-strange-matter), the direction of IFC 5 from buildingSMART (www.tinyurl.com/IFC5-video) and the Autodesk AEC Data Model (www.tinyurl.com/Autodesk-AEC-data).

Since July 2023, this has been the most active section of the specification, with frequent and consistent gains to connect thoughts and active development. We’ve been meeting with vendors, users, industry experts, buildingSMART and many more. We have the right people and skills to solve this problem. It’s not impossible, but it won’t happen overnight.

Depending on the task, we should choose the right hardware, to access the right tool, on a single stream of data

Twenty years ago, the design and construction industry looked very different.

We produced paper drawings, rolled them in cardboard tubes and cycled them across town to our collaborators.

Today, we upload thousands of PDFs and large, proprietary models to cloud-hosted storage systems. It’s a more digitised exchange, but we’re still creating and developing that content locally.

Ten years ago:

Design studios and engineers operated in a reasonably consistent but traditional way. Each business would internally collaborate in the same building, sitting across from each other, with the same desktop machines, software builds, and versions, waiting 10 minutes to open models and more minutes each time they synchronised their changes. Sound familiar?

To recycle a phrase I stole from Andy Watts (www.linkedin.com/in/andy-r-watts): during the pandemic, as people needed to work from home, our office of five hundred people became five hundred separate offices.

In 2024, every design studio, engineering firm, and construction company will operate with a hybrid workforce between various offices, at their homes and increasingly with a global footprint. We’re not going to revert to office-only working.

Yet, with the software we need to use to deliver the scale and complexity of projects, we’re tied back to our office desktop computing and the infrastructure connecting them. I often reflect on an interaction with a young architect from a couple of years ago, whose puzzled face still troubles me today, as I still don’t have a great answer to their conundrum. They have first-hand experience of the challenge in collaborating with colleagues, on the same spec hardware next to each other, with struggling performance. Yet outside of work, their benchmark is effortlessly engaging with a global group of 150+ strangers in a dataset the size of a city, with the intricate details of a window, across a mix of hardware specifications, in a game. Confusingly, they then return to the office the next day for a significantly worse experience.

What’s needed?

Today, our data and energy are locked into inaccessible proprietary file formats, accessed only by expensive hardware, complex desktop software, and traditional ways of working collaboratively.

In reducing the barriers of entry (desktop hardware, on-prem infrastructure, and high-cost monolithic licence structures), we increase the opportunity for interaction by a broader, more equitable range of stakeholders. Each has the opportunity to engage with a project’s design, construction and operational data. Depending on the task and level of interaction or change, we should be free to choose the right hardware to access the right tool.

Within the tools we use (whichever is the best tool for the problem), the software should read and write to a cloud-enabled data framework (see part 1) to get the required information.

Software should appropriately expose relevant tool sets and functionality for data interaction based on our chosen hardware:

• Lightweight apps for quick review, measuring or basic changes

• Desktop or cloud-augmented tools for heavy modelling, significant geometry changes, mass data changes or processing etc.

Importantly, in either case, users should have access to interact with a consistently updated, singular data source (see part 1). All are live, not via copies or versions.


We accept that for a small set of design firms, the current design tools on the market are, for the most part, capable of delivering production models, drawings and deliverables for their projects.

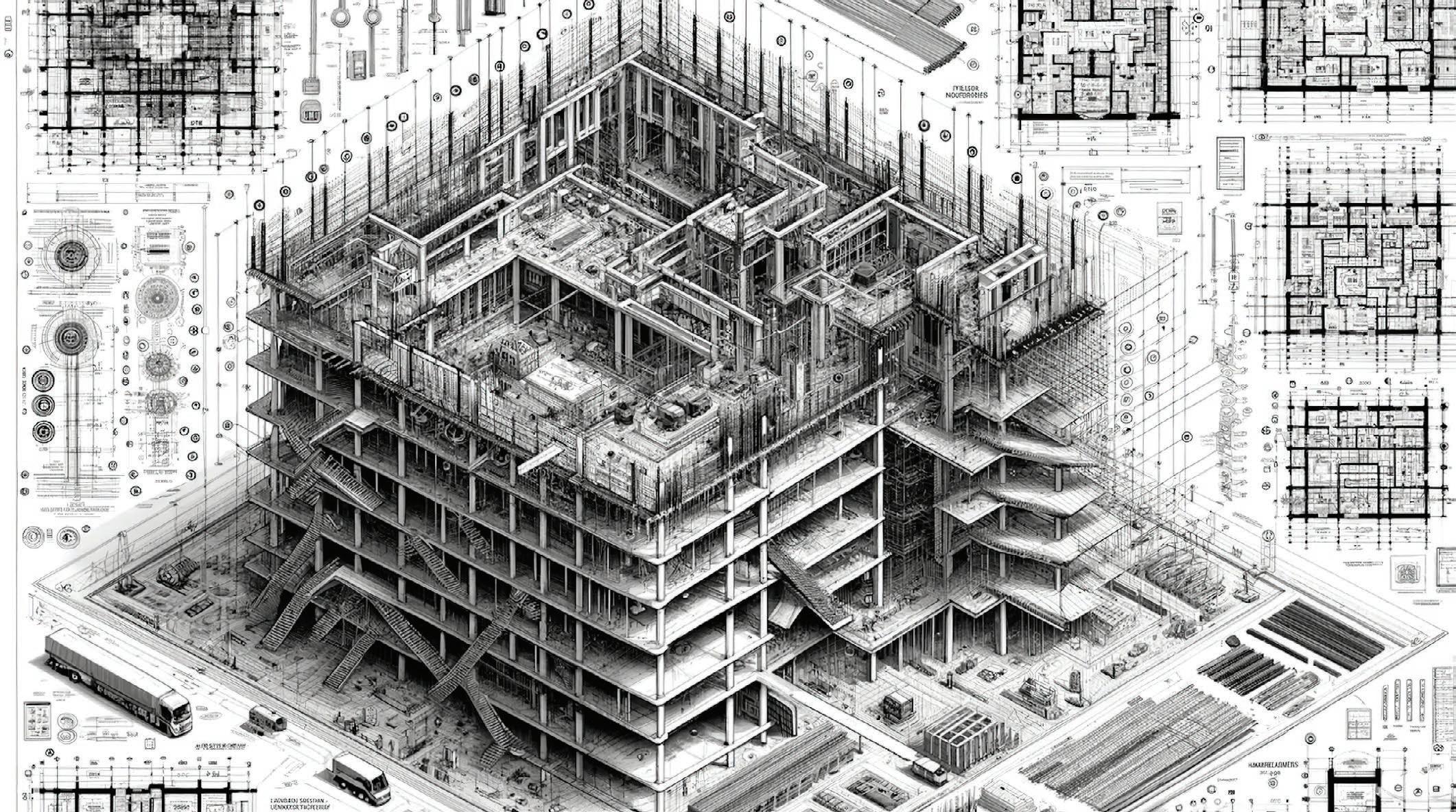

However, for many who’ve helped put together this specification, our Mondays through Fridays deal with much more than that. Intricate, challenging, troublesome projects. From office buildings in the historic city to newly proposed neighbourhoods, even tall buildings, airports, stadiums and more.

We get it. Software developers must balance features and performance for most of their user base (good market fit) against the needs of the few (the 4 in 10 customers, though they often represent a larger overall quantity of users).

As a collective, we fundamentally believe that the vast majority of the tools we use today can barely support the projects delivered by most firms. Take a square mile of London around Bank, with a mix of intricate heritage buildings and modern tall glass icons. Add to that the buildings in between, the glue. These built assets need constant refreshes and attention to support adaptability and appropriate longevity.

Designers are struggling to deliver these projects efficiently.

To accommodate the level of detail that we need to deliver both digital models and the construction documentation, we’re forced to adopt complex technical strategies and workarounds, subdividing and fragmenting models by purpose or volume to try and collate the whole picture. This approach results in teams no longer working on a holistic design but rather a series of smaller project sections, making it harder to effect project-wide changes when necessary, ultimately leading to unintended errors. If the tools at our disposal today had a strong relationship structure to allow proxies between assemblies and construction packages, this could be avoided.

Workarounds significantly limit creative flow and disrupt updating design and data alignment between models, even leading to inconsistency.

Technical barrier?

Is there a technical barrier preventing any design software from dealing with the range and scale of data? From the detail of the sharp edges of a fire sprinkler to zooming out and seeing an entire urban block?

It’s tough not to make yet more comparisons to the software used in game development, with their level of streaming, proxies, and Nanite technologies, and to get deeply jealous. We’re questioning our ability to model anything smaller than 100mm, whilst they’re having conversations like “Let’s model sand and dust particles.”

In recent years, increased access to real-time rendering engines and using them on top of design software has enabled designers to receive instantaneous feedback whilst designing and immediately understand the impact of their decisions on lighting, material, and space/volume without waiting days for the response.

Beyond visualisation, what about understanding the performance of space for things like natural light, climate, and environmental comfort? It’s not uncommon for many to share models that need to be rebuilt and evaluated. Those delays make it extremely difficult to implement changes or tweaks to the design to accommodate the results of these analyses (if at all still possible).

Analysis or review of any kind should not mean a four-day exercise of moving data models between tools before we, as designers, can make more informed decisions whilst designing.

The Data Framework (see part 1) is key to supporting software and tools connecting to the latest design data without proprietary file format chaos. Maximising the benefits of cloud computing would reduce the complex strategies we take to collate design information, make widespread changes to data and better connect with our design and construction partners.

Where are we now?

In summary, we’re struggling to deliver holistic design at both a product level and whilst considering the full construction model or the broader context of a collection of buildings.

Many of the projects we’ll all be working on in the future will include complex retention of existing building fabric, which isn’t easy with the tools we have today, designed for small orthogonal new-build buildings. More on this later.

We’re prohibited by tools that cannot manage the scale of data or connect the relevant representations of the overall scheme; we’re left waiting to see what might happen when we formally visualise or analyse our design.

Delays and disconnect between design and analysis reduce the ability to make changes to improve the quality of the design and the comfort of occupants.

What we need?

Going forward, we have to close that loop.

We need modern, performant software that enables designers to interact with the full scale of data associated with challenging construction projects, including the ability to make widespread changes to design in efficient ways.

We need tools that can represent the complete details of design and construction models with the functionality to assess, analyse, adapt, and mass manipulate changes. This would span from individual components to rooms, apartments, building floors, construction packages, and whole buildings in a master plan.

The data framework enables designers to understand the consequences of their decisions in ‘close-to-real-time’ and to make changes more efficiently, improving the quality and lifespan of the built environment.

The design tools we predominantly use today are not from this era.

Code prompts, command lines, patterns of key presses, and dense user interfaces of small buttons aren’t exactly friendly to those not raised on CAD tools in the 90s. These challenge artists, designers, and creatives to convert their ideas into geometry that adequately conveys and communicates their intent and vision. Most of our tools today have their roots in a previous generation and, while they have improved over time, have retained a UI structure unintuitive to new users, who clearly can’t know where every button is or know every command line. The thick software manual they handed out when the product was released is an excellent example of the time investment needed to use the tools.

Most designers, even those very adept with complex software, flag their tools today as a wrestling match where the mental load to operate prevents them from thinking about design.

That becomes even trickier when we take into account how modern software updates. In our businesses, Microsoft Teams updates every other day or so, moving buttons around, which can be frustrating. With design software able to move away from annual release cycles, we’ll see this in AEC too.

That doesn’t mean don’t innovate. It just needs to come with clear communication on new functionality. We’ll work on growing agile users who become more expectant and comfortable with constant changes across all their tools.

We’re not talking about performance; we’ve covered that in part 2. We’re talking about the ease, or level of obstruction, in moving from idea conception to appropriately conveying modelled design. A good design tool can achieve this in an ‘appropriate’ time with structured data and useful geometry for others. And I’m also talking about the overall time to complete relevant outputs needed for an issue or to collaborate with others. A good design tool can swiftly support development and changes by coordinating and exchanging with others.

We’re still using tools with the experience and interface of CAD products from the late 90s.

Fragmented software, with panels and windows of dense, small icons reflecting tools and functions. These are difficult to navigate and find what’s needed, even for experienced and well-versed users. Our designers battle tools to get their ideas converted into digital space.

What we need?

Aligned with the Data Framework (see part 1), users will be accessing design and construction data across different devices based on their persona and the type of interaction they need. A familiar experience is important across any interaction people have with their tools, though we recognise there will be relevant limits in functionality based on the device.

Design software needs to be user-friendly, efficient, versatile and intuitive. Clean interfaces should utilise advancements in language models to interpret plain language instructions and requests, including navigation of complex tools, e.g. ‘Isolate all doors with a 30-minute fire rating’ or ‘Adjust all steel columns on the 5th floor from type X to Y’. More on automation in part 8.
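
To make those two example requests concrete, the Python sketch below shows the kind of structured selection and edit they might resolve to once a language model has interpreted them. The interpretation step itself is out of scope here, and the element records, parameter names and the `select` helper are all assumptions made for illustration.

```python
from typing import Any, Dict, List

# Hypothetical element records; in practice these would come from the data framework.
elements: List[Dict[str, Any]] = [
    {"id": "D1", "category": "door", "fire_rating_minutes": 30},
    {"id": "D2", "category": "door", "fire_rating_minutes": 60},
    {"id": "C1", "category": "column", "material": "steel", "level": 5, "type": "X"},
    {"id": "C2", "category": "column", "material": "steel", "level": 4, "type": "X"},
]


def select(items: List[Dict[str, Any]], **criteria: Any) -> List[Dict[str, Any]]:
    """Return the elements whose parameters match every criterion."""
    return [e for e in items if all(e.get(k) == v for k, v in criteria.items())]


# 'Isolate all doors with a 30-minute fire rating'
isolated = select(elements, category="door", fire_rating_minutes=30)

# 'Adjust all steel columns on the 5th floor from type X to Y'
for column in select(elements, category="column", material="steel", level=5, type="X"):
    column["type"] = "Y"

print([e["id"] for e in isolated])
```

Requests like these only become simple filters when the underlying data is structured and openly queryable, which is why this tenet leans on the Data Framework in part 1.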

Tools need to offer a clean and friendly experience that enables designers to translate ideas to digital deliverables quickly. Different personas will interact and access data across different devices, which must feel familiar.

Provide accessible feedback. If a command, tool or code prompt doesn’t work, why not? Help the user understand what they may need to do to unlock this.

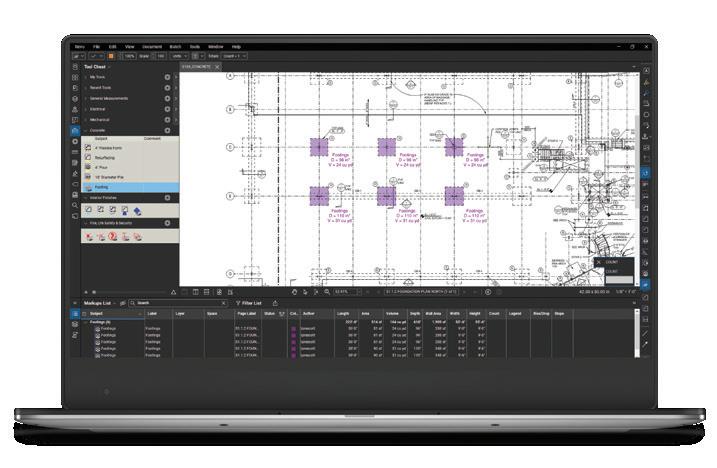


I’ll start by making a bold statement that might annoy a few people who worked in design studios when the drawing board was king.

The transition between the drawing board and CAD was ‘easier’ than the move to 3D object modelling.

In reality, we replaced drawing lines on paper with drawing lines in software.

The more challenging move was from 2D line work to 3D object-based modelling, and plenty of people in roles similar to mine have the scars to prove it. It was a much more significant challenge and mindset change for most designers, who had to adjust from only drawing what they wanted to see to getting everything and then working out what they did not want to show. Being presented with everything and dialling back has created a laxness or lack of attentiveness to correct or manually adjust drawings, leading to many familiar comments about a ‘worsening’ in drawing quality.

Aside from drawing development (more on deliverables in part 9), there have also been changes in the modelling approach we take within tools. At one end of the scale, we have tools focusing on surface, point, plane, and line modelling, with complete flexibility and creative control. At the other end of the scale, we have tools driven by object-based modelling with more controlled parametric-driven design. Unfortunately, the tools we use in our industry are either at one end or the other and fail to recognise the need for a hybrid of the two.

We approve of the consistency and structure that object modelling brings to a project, but the types of buildings most people work on require greater flexibility. Design features within these proposals need nuanced modelling tools to cut, push, pull, carve, wrap, bend, and flexibly connect with the parts and libraries of fixed content.

It is also a misconception that the requirement for creative modelling is limited to the early design stages of a project. Sure, at early conceptual stages, we need design tools with flexible, easy modelling functions, and yes, later in a project’s design development, we need rigid delivery software through production. But the reality is that we always need both; a structured classification system of modelled elements with consistent components whilst being able to adapt edge cases to support unique or historical design creatively. Design does not stop, and the need for flexibility does not stop just when we start production documentation.

Tools that understand construction?

Many of the design tools that we use today do not have any intrinsic understanding of ‘construction’, or ‘packages’ of information for tender or construction. The plane/point-based modellers are just as valuable for designing items of jewellery as they are for a building’s design and construction.

At the other end of the scale, there are object-based modellers with basic classification systems loosely based on construction packages. But these classifications are not smart. They have no intelligence or understanding of why a door is not a window, or a roof is not a floor. As a specific example, adjusting the location of a hosted door in a wall should prefer to correlate/snap to internal walls, not internal furniture (because interior layouts are unlikely to influence the locations of doors). When using our current platform of design tools to help encapsulate our design ideas, we are not doing so with any underlying building intelligence or construction parameters.

Architectural design often challenges the boundaries of form and function. What if a building’s geometry causes a modelled element to perform as a floor, a wall and an arch all at once? Having the flexibility to customise and flexibly classify elements is important beyond basic construction classification. Future tools should have genuine construction intelligence from a building down through assemblies to individual elements. These project elements should host data relating to assigned identifiers, key attributes, relationships of constraints, and associated outcomes or performance. The Data Framework (see part 1) supports this by recognising Entity Component Systems (ECS). You can find an excellent summary of how this would work by Greg Schleusner on Strange Matter (www.tinyurl.com/magnetar-strange-matter). Software developed with this relational understanding of construction and parts enables a more intelligent future of automating the resolution of connecting and interacting parts, like a floor to a wall, and of amending repeating elements and information en masse. More on automation in part 8.
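
Two of the ideas in this passage, an element carrying several classifications at once and a door that prefers to align with walls rather than furniture, can be sketched as follows. This is a deliberately simplified illustration: the `Element` class, the `snap_target` function and the one-dimensional positions are all hypothetical and stand in for much richer geometry and relationships.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class Element:
    """Hypothetical project element: identifier, classifications and attributes."""
    element_id: str
    classifications: Set[str]
    position_mm: float = 0.0  # position along the host wall, simplified to one dimension
    attributes: Dict[str, object] = field(default_factory=dict)


def snap_target(door_position_mm: float, nearby: List[Element]) -> Optional[Element]:
    """Pick the nearest element a door should align to, ignoring anything that is not a wall."""
    candidates = [e for e in nearby if "wall" in e.classifications]
    if not candidates:
        return None
    return min(candidates, key=lambda e: abs(e.position_mm - door_position_mm))


# One element can carry several classifications at once.
vault = Element("E7", {"floor", "wall", "arch"}, position_mm=4000)
partition = Element("W3", {"wall"}, position_mm=1500)
sofa = Element("F1", {"furniture"}, position_mm=1200)

# The sofa is closest to the door, but only walls are valid snap targets.
target = snap_target(1250, [vault, partition, sofa])
print(target.element_id)
```

The rule is trivial, but it only becomes possible when the software knows what each element is, which is the construction intelligence this tenet asks for.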

Where are we now?

In summary, today, we are limited to tools at either end of the spectrum of function: flexible tools without structure or structured tools without flexibility. Neither understands the basic principles of how buildings come together.

Software should enable design, not control it through its limitations. There are key modelling functionalities that enable good design software:

Accuracy - Modelling tools should be an accurate reflection of construction. They should allow design teams to create models that have an appropriate level of accuracy depending on scale (see part 3), project stage and proposed construction.

Flexibility - Project teams should not have to decide between geometric flexibility, complexity, or object-based modelling. Future architectural design software should strive to embrace and connect the two approaches, offering an environment where designers can nimbly navigate between the two. Transforming a geometric study into a comprehensive, data-rich building model should be a fluid progression, reducing the risk of information loss and eliminating the need for time-consuming geometric data processing. This would involve intelligent conversion systems that interpret and transition geometric models into object-oriented ones, retaining original design intent while proposing appropriate object classifications and data enrichment.

Level of detail - Future modelling tools should be able to handle increasing levels of detail without sacrificing performance or efficiency. Teams should also be able to cycle through various levels of detail depending on a specific use case.

Intelligent modelling - A new generation of tools needs to push beyond the current standard of object-based modelling. Project elements, from whole buildings down through assemblies to individual elements, should host data relating to assigned identities, key attributes, constraints relationships, and associated processes. This embedded data should be based on real-world information, reflecting the knowledge and information that the AEC industry operates with and an appreciation for emerging methods of construction. More to follow in part 7 on MMC and DfMA. Software developed with this balance and understanding of basic construction principles can also benefit from intelligence and efficiency gains, such as resolving basic modelling connections/details and amending repeating elements and data/information en masse.


We are already living through disturbing weather patterns due to a changing climate. The years to come will challenge the occupational comfort of those in our existing building stock as much as those planning to live in the buildings we are designing. Despite the obvious challenges ahead, the tools we currently use to design and develop the urban environment offer little to no native functionality that provides feedback relating to building performance.

As hinted at in Part 3 – Designing in context and at scale, understanding the performance of a design whilst designing is the most effective way to incorporate change and minimise adverse effects.

As sustainability and the drive for net zero carbon have moved up the priority list of the AEC industry, we have seen various platforms appear to help us understand the carbon load of our designs through life cycle analysis (LCA). Whilst initially limited to smaller, more nimble and reactive software developers, we finally see intent in the more developed tools we use.

However, these tools all make the same assumption: that every project starts with a blank screen. For most projects we’re working on today, the question isn’t about starting from scratch; it’s about how much of the existing building fabric we can save.

The most sustainable new building in the world is the one you don’t build.

The default approach of entirely demolishing and rebuilding new has no future. Most projects we design today are ‘retrofit first’, reinventing the buildings we already have. In 2023, to have any genuine conversation with local authorities (and for the right reasons), we’re spending significant time analysing how much existing building fabric can be retained versus replaced with a more efficient structure providing more comfortable and efficient spaces.

This analysis can take weeks and is a delicate balance that no design software tools support or understand. As we carefully balance commitments to the environment with future occupant comfort in a changing climate, the retrofitting of existing buildings is not a trend that is going away.

What is the role of design software here?

As designers, we’re arguably the most responsible party for the impact of new construction on the environment, economy and society. With our broader design and construction teams, we’re making decisions about the merits of the existing building, its fabric and what is still perfectly viable to retain and reuse.

And this doesn’t start with a blank screen in our software.

Tools should effortlessly incorporate and provide feature detection and systems selection from point clouds, existing survey models, LiDAR and photogrammetry data to enable designers to utilise the existing building data from day one. With our designers spending weeks making assessments and viability studies on the retention vs new construction materials, we need tools to simplify and support our analysis of material quantification, embodied carbon calculators, and thermal performances within spaces. Today, most firms have built their own tools, like embodied carbon calculators, in the absence of the capability of the tools we use. This should be equitable across the sector, not limited to the few.
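As a rough illustration of the retention-versus-replacement arithmetic described above, the sketch below totals the embodied carbon of the fabric that is replaced and the carbon avoided by what is retained. It is a minimal sketch only: the `FabricElement` structure, the element list and every carbon factor are placeholder assumptions rather than real data, and it assumes that retained fabric adds no new embodied carbon (ignoring demolition, repair and strengthening).

```python
from dataclasses import dataclass


@dataclass
class FabricElement:
    """One piece of existing building fabric (hypothetical structure)."""
    name: str
    quantity_m3: float
    # Placeholder factor for the NEW material, in kgCO2e per m3 (not real data).
    new_build_factor: float
    retained: bool


def embodied_carbon_new(elements):
    """kgCO2e of new material for every element that is replaced."""
    return sum(e.quantity_m3 * e.new_build_factor for e in elements if not e.retained)


def carbon_avoided(elements):
    """kgCO2e of new material avoided by keeping the retained elements."""
    return sum(e.quantity_m3 * e.new_build_factor for e in elements if e.retained)


# Illustrative option: keep the frame, replace the facade and roof slab.
option = [
    FabricElement("concrete frame", 1200.0, 300.0, retained=True),
    FabricElement("facade panels", 150.0, 500.0, retained=False),
    FabricElement("roof slab", 200.0, 300.0, retained=False),
]

print(embodied_carbon_new(option))  # 135000.0
print(carbon_avoided(option))       # 360000.0
```

Even something this simple has to be rebuilt firm by firm today, which is the duplication of effort the specification is trying to remove.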

As we determine the appropriate strategy and balance between retention and longer-term operational efficiency, design tools will equally need to support wider studies such as operation energy, climatic design, water, biodiversity, biophilia, occupant health and well-being, their impact on amenity and their community. We’re not seeing any design tools with that on their agenda.

Where are we now?

In summary, and quite bluntly, nowhere. We’re building our own tools, duplicating the efforts of our peers because these capabilities are not accessible in the design software we use, which emphasises the need for a data framework. We’re desperately struggling to tackle existing building data and use it intelligently to interact with or slice up existing buildings, and we are further limited by modelling capabilities. When we want to complete any level of analysis to gain intelligence, we’re remodelling in each tool.

Software that enables responsible design. Future design software should, by default, have a basic understanding of materials and fabric and the ability to provide real-time feedback to ensure that a design is aligned with predetermined performance indicators across comfort, environmental impact, social performance, and economic considerations. An environment should be enabled that is free from siloed or disconnected analysis work-streams and models: analysis should happen in parallel, on the fly, as opposed to having to export certain model formats for analysis in a data cul-de-sac.

Specifically relating to environmental impact, future design software should understand, by default, existing buildings and how retention and refurbishment designs can be facilitated.

These tools should effortlessly incorporate and provide feature detection and systems selection from point clouds, existing survey models, LiDAR and photogrammetry data to enable designers from project inception to utilise the existing building data. Tools need to be able to accommodate the imperfections of existing buildings.

In any event, we need these best-in-class analysis and assessment tools to interpret models and data from our Data Framework (see part 1), where the model may originate from another tool completely.



Current AEC software is, for the most part, developed around traditional methods of design, construction and delivery. In 2024, we are struggling against both our own and our clients’ aspirations to deliver a design using modern methods of construction (MMC) or Design for Manufacture and Assembly (DfMA) approaches. We want to work in this way, but we’re hampered by our tools. Construction approaches have evolved in the last five to ten years. Many construction efficiencies, site planning, communication, quality control and safety initiatives have enabled MMC approaches, such as offsite and modular, to deliver construction. The volume achievable through safe and high-quality ‘factory’ construction is an inevitable, unstoppable, and increasing requirement that our design tools simply do not align with out of the box.

For the majority of firms who helped author this specification, there is an increasing number of projects looking to achieve more certainty and confidence prior to construction.

Across Design for Manufacture, Modular and Offsite Construction, there are constraints that we, as designers, know we must work to. For example, a prefab unit’s maximum volume/size to fit on the back of an unescorted lorry through Central London. The units can then be joined together to make a 2-bedroom apartment. Giant Lego, if you will.

However, two key issues are present in 99% of the tools we use today.

Firstly, we cannot easily set out any critical dimensional constraints when conceptually massing an early-stage design (associated with DfMA, Modular or Offsite construction). We’ve seen new tools come to market in the last 18 months, fighting for the same small piece of the pie in early-stage massing explorations and viability studies. Without the ability to define design constraints for modular or prefabrication intelligence whilst designing and setting out these buildings, these new tools are unfit for purpose for many architects.

In the absence of construction intelligence, we’re going to keep walking into problems.

Secondly, the design delivery tools we use today cannot interface with construction/manufacturing levels of detail. This means we can’t fully coordinate a prefabricated assembly with the overall building design. Let me repeat that. Today, in our current design delivery tools, we cannot coordinate a fabrication level of detail assembly with the overall building design whilst designing. Primarily, this is related to the capability of performance and scale, which we covered in part 3.

You will frequently see the construction media share dramatic headlines about the collapse and bankruptcy of another modular/factory-built offsite company. I know it is a complicated issue with many influencing factors, but if we cannot coordinate manufacturing level of detail and data with our design models, that has to be a big factor in why it isn’t going smoothly. Implementing changes later in the project to modularise packages is always challenging, and comes at the cost of either design quality or profitability. Or both.

Where are we now?

We’re designing with no modular intelligence whilst developing models, using tools that don’t understand how buildings are built.

And the tools we’re using for delivery cannot coordinate fabrication levels of detail with our design intent.

What the industry needs?

Software that enables DfMA and MMC approaches.

To enable MMC and DfMA through our software, we require tools that meet the criteria across design, construction, and delivery:

Design - The next generation of design tools must be flexible enough to support modern construction methods and an evolving construction pipeline. DfMA and MMC approaches, such as volumetric design and kits of parts, are developed by rules. Software should understand this and facilitate rules-based design. As covered in the Modelling section (part 5), this doesn’t mean the software knows geographic/regional limitations or construction performance limitations, or limits users to a fixed/locked system, but that it provides the platform for users to define these rules.

These tools are essential for real-time design and system validation, optimisation and coordination. These future platforms should manage scale, repetition, and complexity, the hallmarks of DfMA. Collaboration with fabricators and contractors should not require reworking models to increase the level of detailing.
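To make the idea of user-defined, rules-based design more concrete, the sketch below validates a volumetric module against a set of rules supplied by the project team rather than hard-coded by the software. It is a minimal sketch under stated assumptions: `Module`, `Rule`, `validate` and the transport envelope figures are all hypothetical, and real limits depend on region, route and haulier.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Module:
    """A prefabricated volumetric unit (hypothetical structure)."""
    name: str
    length_m: float
    width_m: float
    height_m: float


@dataclass(frozen=True)
class Rule:
    """A user-defined constraint: a description plus a pass/fail check."""
    description: str
    check: Callable[[Module], bool]


def validate(module, rules):
    """Return the description of every rule the module breaks."""
    return [r.description for r in rules if not r.check(module)]


# Placeholder transport envelope defined by the project team, not the software.
transport_rules = [
    Rule("width must not exceed 3.0 m", lambda m: m.width_m <= 3.0),
    Rule("length must not exceed 12.0 m", lambda m: m.length_m <= 12.0),
    Rule("height must not exceed 3.2 m", lambda m: m.height_m <= 3.2),
]

bedroom = Module("bedroom pod", length_m=7.5, width_m=3.4, height_m=3.0)
print(validate(bedroom, transport_rules))  # ['width must not exceed 3.0 m']
```

The point of the design is that the rules are data: a different manufacturer or region means a different rule set, not a different tool.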

Construction and delivery - Future AEC tools should enable design teams and contractors to work together in the same environment, drawing upon intelligence and input from both to produce a true DfMA process. For this, these tools should understand modern methods of construction by default. Whether it be a modular kitchen, pod bathroom or apartment building by modules, design tools must support fabrication levels of detail across an entire building and incorporate construction intelligence.

This is possible. Industry and software developers have built configurators of industrialised construction systems, aware of how products can be applied to designs based on the parameters and production logic defined by the manufacturers. This isn’t easily accessible within our current design software. However, it could be closer through the ‘Data framework’ (see part 1) and with performant and scalable tools (see part 3). We are also aware that construction and delivery methods will be constantly evolving to stay “modern”. Our tool ecosystem needs to do the same by continuously staying relevant and in touch with the actual work of the industry.

Future tools should allow project teams to define key design parameters and constraints for compliance, regulatory assessment and design qualification. These are not necessarily set values but an ability within the data framework to set parameters.

Most industries leverage automation and machine-learned intelligence to support decision-making, reduce repetitive tasks, increase quality, and boost efficiency. But what about architecture, engineering, manufacturing, and construction, and in the context of this series, what about the tools we use? Firstly, let’s contextualise against familiar types of automation and AI:

Scripting - Most references to AI today actually describe scripting. The most common form of automation is basic scripts that run and perform automated tasks to resolve a repetitive process. Usually, they perform a function or provide a solution that the base software we use is unable to deliver by itself. There is no creative thinking, out-of-the-box assessment or suggestions, just a defined process followed to solve a problem.

Large language models (LLMs) - As a result of big tech scanning every page of every book, LLMs have emerged from learning sensible associations between words and languages. Whilst these are readily accessible and applicable to most industries, the niche vocabulary and specific terminology of design and construction result in reduced value. Additionally, these tools are often averse to specificity. For example, a custom GPT trained on the UK Building Safety Act that we used recently could not, despite multiple iterations of varied prompts, summarise the minimum building height requirements that had been clearly identified in the documentation.

Generative AI for media/graphics - After mass training of models to identify objects in images (via humans completing ‘I am not a robot’ verification), media generation/production has become mainstream and relevant to our industry in a broad way, supporting ideation, mood boards, etc. However, as mentioned above, limited industry-specific dictionaries mean that using ‘Kitchen’ as a prompt will get you somewhere, but specifics about timber cabinetry or variation terminology like upper or lower cabinets won’t get you any further.

AI in design and construction

Machine learning and the broader field of AI have seen limited use in the architecture, engineering and construction industry. They are not part of the core tools we use.

This is partly due to the relatively slow digital transformation of the industry into a data-driven sector but also down to the limited data structure in the tools we use today.

The first wave of value from AI?

The AEC industry is rife with repetitive processes. Yet, we always try to reinvent the wheel from project to project. Across our firms, experience and knowledge is often reapplied on each project from first principles, without any thought about learning from previous projects. Given the unstructured and inconsistent wealth of data, drawings and models across our industry, AI’s first wave of value will likely be in the ease of accessing and querying historical project data. A mix of language models and object/text recognition will help us harvest the experience we have built up across delivered projects.

For example, for an architectural firm designing a tall commercial building in the city, there’s obvious and existing experience that defines the size of a building core. The structure’s height might determine the number of lifts/elevators, minimum stair quantities, and critical loading dimensions. The floor area and desk density may define how many toilets are needed within the core. If challenged to re-explore the envelope of the building mass, these changes would influence that core’s previous design and dimensional parameters. As we slightly increase the building envelope, leading to more desks, we tip the ratio for more toilet cubicles, reducing the leasable footprint. Despite tackling this challenge repeatedly across projects, with the current software stack, it’s common to do these calculations manually every time. Currently, the ‘automation’ or ‘intelligence’ comes from an architect or engineer who has been doing this for 20 years and has the experience (occasionally a script) to provide quick insight. If AI can enable rapid discovery of experience from previous projects and turn it into a framework for future projects, it will create more time to explore improvements and emerging techniques.
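The core example above can be sketched as the kind of ‘occasional script’ an experienced designer might write. This is a deliberately simplified sketch: every ratio below (area per desk, desks per cubicle, cubicle area, fixed core area) is a placeholder assumption rather than a regulation or benchmark, and `size_floor` is a hypothetical helper that sizes desks before deducting toilet area.

```python
import math
from typing import NamedTuple


class CoreResult(NamedTuple):
    desks: int
    cubicles: int
    leasable_m2: float


def size_floor(floor_area_m2, fixed_core_m2, m2_per_desk, desks_per_cubicle, m2_per_cubicle):
    """Estimate desks, toilet cubicles and leasable area for one floor plate."""
    usable = floor_area_m2 - fixed_core_m2           # area left after lifts and stairs
    desks = math.floor(usable / m2_per_desk)         # desk count from density
    cubicles = math.ceil(desks / desks_per_cubicle)  # toilets driven by desk count
    leasable = usable - cubicles * m2_per_cubicle    # toilets eat into the footprint
    return CoreResult(desks, cubicles, leasable)


# Placeholder ratios for illustration only.
RATIOS = dict(fixed_core_m2=120, m2_per_desk=10, desks_per_cubicle=20, m2_per_cubicle=3)

before = size_floor(1100, **RATIOS)
after = size_floor(1130, **RATIOS)
print(before)  # CoreResult(desks=98, cubicles=5, leasable_m2=965)
print(after)   # CoreResult(desks=101, cubicles=6, leasable_m2=992)
```

With these made-up numbers, enlarging the floor plate by 30 m2 tips the desk count past a threshold, adds a cubicle and returns only 27 m2 of extra leasable area, which is the kind of knock-on effect described above.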

The second wave of value from AI?

Following the first wave of connecting unstructured data and the resulting deeper understanding/training of the data produced in our industry, AI’s second wave of value will be around better insight from assessing geometry, data, and drawings, unlocked by an open framework of data (see part 1). With a deeper understanding of AEC dictionaries and exposure to 1,000s of data-rich 3D models filled with geometries and associated data, AI can now propose contextual suggestions. As covered in the User Experience chapter (see part 4), this might, at a simple level, mean that tools can process natural language requests, instead of interacting with complex interfaces, toolsets, and icons.

Another example of value following industry-specific machine-learned intelligence would be tools that help us find our blind spots or oddities in the models and data we create, such as gaps, missing pieces of data, incomplete or likely erroneous parameters or drawing annotations.

This instantly brings significant value to designers, engineers, constructors and manufacturers to substantially improve the quality of information we generate (whilst significantly reducing the risks of exchanging incomplete information).
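To show the kind of output such a tool might produce, the sketch below flags missing or implausible parameters on model elements. It is a plain rule-based check, not machine learning, and everything in it is an assumption for illustration: the element dictionaries, the parameter names and the `REQUIRED` table are hypothetical, not a real schema. A learned system would ideally infer these expectations from past projects rather than have them typed in.

```python
# Hypothetical table of parameters each element category is expected to carry.
REQUIRED = {
    "door": ["fire_rating", "width_mm"],
    "wall": ["fire_rating", "thickness_mm"],
}


def find_gaps(elements, required):
    """Return (element id, parameter, problem) for each missing or suspect value."""
    issues = []
    for el in elements:
        params = el.get("params", {})
        for name in required.get(el["category"], []):
            value = params.get(name)
            if value is None or value == "":
                issues.append((el["id"], name, "missing"))
            elif isinstance(value, (int, float)) and value <= 0:
                issues.append((el["id"], name, "non-positive"))
    return issues


model = [
    {"id": "D-01", "category": "door", "params": {"fire_rating": "FD30", "width_mm": 926}},
    {"id": "D-02", "category": "door", "params": {"fire_rating": "", "width_mm": 0}},
    {"id": "W-01", "category": "wall", "params": {"thickness_mm": 215}},
]

for issue in find_gaps(model, REQUIRED):
    print(issue)
# ('D-02', 'fire_rating', 'missing')
# ('D-02', 'width_mm', 'non-positive')
# ('W-01', 'fire_rating', 'missing')
```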

The third and further waves of value?

Following contextually accurate, structured data with better insight from geometry, data, and drawings, AI has a deeper understanding of how we generate and deliver information. It’s finally well placed to understand our deliverables. It can support and augment the delivery of mundane and repetitive drawing production and model generation.

Automated drawings? More on this in the next chapter, Deliverables.

Where are we now?

Our sector has a significant opportunity to apply automation and AI principles to increase the efficiency of design ideation, design development, and the generation of data and drawings to collaborate with others. Our unstructured, inconsistent data has provided no easy win or low-hanging fruit for developers to apply emerging technology to such a niche industry.

As a result, whilst we can lean on tools developed for generic industries, like large language models and generative models for images, there is no more relevant, usable application of AI within the core tools we use today.

We cannot leverage historic design experience project-to-project or quickly revisit previous approaches.

Whilst every software startup in the market is touting itself as AI-enabled, it’s yet to be seen how they leverage AI and how it is ‘learning’ from users securely and responsibly.

What the industry needs?

As the premise of the data framework progresses, tools will have a better structure and hierarchy of construction packages/sets, exposing data at an entity component system (ECS) level, enabling training models and future use of AI. This structure will enable software to understand better the relationships of modelled geometry, their associated data and that which is relevant and collaborated on by 3rd parties. This wealth of exposed interactions will be essential in training machine learning to augment mundane and repetitive processes.

Leveraging historical data, design decisions, and the logic of existing projects can help us enable project-to-project experience, reducing the need to reinvent the same wheel each time.

Tools that can understand our outcomes will be well-placed to machine-learn the steps taken to get there. These highly repeatable processes can then be augmented by the software we use to provide designers and engineers more time to focus on better design outcomes.

Tools that have learnt from our outputs can highlight possible risks for us to review and fix before exchanging information with third parties.

Using AI technologies to help harvest, discover, suggest, and simplify the generation of deliverables like data and drawings is entirely different from automating design generation. You’ll automatically think this is coming from the place of ‘turkeys not voting for Christmas’, but how can AI generate design effectively? Generating great design is not based on rules and principles but on creative thought, emotion, and the relationship to specific context and use. I’m sure a tool can develop 10,000 ideas for a building, but how many are relevant? Are they appropriate by relating to the people who’ll occupy and live in the space? Form vs function? What are the appropriate materials and suitable construction options? Do they have an appreciation of the historical context or existing building fabric? You’ll expect me to say this, but we’re not looking for tools to design buildings because it’s too emotive. We need tools that augment our delivery of great design—automated design intelligence, not automated design generation.

For over 100 years, the primary method for commercial and contractual exchange between designers, engineers, constructors, manufacturers and clients has been best recognised through the production and exchange of drawings.

We produce a product (drawing), generated over many hours, including years of previous experience and knowledge, and then exchange with another party. These are very often referenced as required contractual deliverables in agreements between parties.

In the last decade, with a more digitised approach to developing and sharing design and construction information, we’ve evolved from printing drawings, rolling them in tubes, and manually delivering them to partners to using cloud-based exchange platforms. These have enabled us to transact this exchange of drawings on the web (an Extranet solution forming part of a Common Data Environment). Additionally, through the use of more intelligent design software, we’ve been able to associate data with geometry. As such, this chapter refers to deliverables, including drawings, documentation, and data.

The future of deliverables through augmented automation?

When architects were master builders, drawings initially started as instructions or storytelling of building and intertwining materials to achieve the desired outcomes (building Ikea furniture or Lego models, if you like).

Today, ‘Instructional Drawings’ equate to less than 5% of the types of drawings we now produce. It wouldn’t be uncommon for a complex project (scale and number of contributors) to create at least 5,000 drawings through a project’s design, construction and handover. Each is developed for a different purpose, from demonstrating fire risk compliance to providing suitable information for tendering a construction package/set of information. Somewhere between five and ten hours are invested to generate, coordinate, revise, and agree on each drawing.
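Multiplying out the figures quoted above gives a sense of scale. The short calculation below uses only the numbers stated in the text (5,000 drawings at five to ten hours each); the function name is ours and the result is an order-of-magnitude illustration, not a measured value.

```python
def drawing_hours(drawing_count, hours_low, hours_high):
    """Return the (low, high) range of total hours spent producing drawings."""
    return drawing_count * hours_low, drawing_count * hours_high


low, high = drawing_hours(5000, 5, 10)
print(low, high)  # 25000 50000
```

That is roughly 25,000 to 50,000 hours of effort on drawings for a single complex project.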

Generating drawings represents one of the most considerable cost implications to the design and delivery of construction, yet it is comprised of highly repetitive tasks which apply to every type of project.

For example, architects spend hours developing a fire plan drawing for each floor of every proposed project within our firms. These plans are broadly 80% the same from floor to floor and project to project. We might apply graphical templates to show, hide and alter the appearance of modelled content to ‘look right’ and then spend time adding descriptions, text, dimensions, specifications, performance data callouts, etc. At least on the same project, this is almost the exact process for each floor. This is significantly time-consuming for tall buildings with 30+ storeys.

The future of deliverables with a strong data framework?

Where a Data Framework (see part 1) enables design and construction team members to collaborate and exchange data more equitably and flexibly, will we still need to generate and share the same volume of traditional drawings?

Suppose we can communicate the relevant information for tendering a construction package/set through shared access to geometry and the relevant data and parameters. In that case, might a manufacturer have all the information they need digitally without needing a set of 100+ drawings and schedules?

Additionally, when collaborating in such a digitised way (Data Framework), does it still make sense that we are planning information production for printed drawings for an A1 or A3 (24 x 36) sized piece of paper, given how unlikely it is ever to be printed?

Where are we now?

With that said, whilst many would like less documentation and traditional drawing production, formal drawings are still the product of most firms. Unfortunately, this is not going to change soon, so we need to look towards a future of AEC software that still has to cater to a component of drawn information.

Whilst more object-based modelling tools make the generation of coordinated drawings easier than traditional 2D drafting, there is little other automation, let alone AI-based workflows, that assist in generating final deliverables, including drawn content and other digital outputs.

Much of the effort expended throughout the design process is on curating sets of these drawings, sometimes disproportionately to the amount of time spent on actual design. Amongst many of the frustrations felt by project teams that have given rise to the creation of this specification, this is one of the most contentious. Designers want to design. When the legal framework still centres on drawings as the main deliverables, and the software we use caters for that in a manual fashion, then the time is ripe for change.

What the industry needs?

Creating more drawings is not an economical way to resolve questions between design and construction teams or reduce risk. That said, we need tools that enable drawings and other deliverables to be generated efficiently and intelligently.

We need design software platforms that have the ability, either through manual input or data-driven intelligence, to largely automate the output of drawn information and submission models to suit the organisation, the specific project, and wider influencing standards.

The needle of our processes should be moved from focusing the majority of our time on representing a design — through the generation of drawings and associated data — to actually doing the design work. The generation of design documentation and digital deliverables should be incidental to the design process, not vice versa, as it sometimes feels today.

We need design software platforms and tools that enable data and geometry transacting on the Data Framework, to minimise unnecessary drawings from being generated. All whilst supporting a record of information exchange and edits in a fully auditable way to resolve legal concerns.

WHERE’S CLIPPY? WHERE IS THE APPROPRIATE USE OF MACHINE LEARNING AND AI?

IT LOOKS LIKE YOU’RE TRYING TO MAKE A FIRE PLAN?

I CAN GET YOU 90% OF THE WAY?

When presenting this specification at NXT DEV back in July 2023, given that much of the audience was software developers, startups and venture capitalists, it was very much appropriate also to cover an area outside of functionality and experience of ideal software - Access, Data and Licensing.

Today, we have a diverse portfolio of design apps and tools. The Future Design Software Specification promotes using the best tools for the job, which means more software, not less. As such, every firm needs to assess and justify the tools they use as a business. And so, we must talk about commercials because in an even more competitive market, especially with market pressures and challenging economies, we need to be able to position and justify a software’s value.

How do you quantify value?

For example, my company commissioned a detailed report of our design software portfolio last year, which explicitly focused on the tools and software we use, their classification, usages, our business’ skill and experience, barriers, workflows/ interoperability, tools feature set, their roadmap/lifecycle and importantly commercials, access and licensing.

This helped us benchmark the tools we’re using, where they are sat within a wider delivery mechanism and to some extent which tools to keep paying for. But that is one company’s view. What about the collective view of all authors of the spec? Well, that’s what the spec represents. Each of the ten sections are our universal key issues and measurables of software we want and would happily pay for.

Access and licensing?

We understand the upfront investment required in developing software, marketing, ongoing technical maintenance, support and implementing features as the product matures. We get it. The approaches for accessing products and licensing have evolved significantly in the last five years and are set for even more disruption (more on this below). We don’t propose to offer a ‘silver bullet’ win-win model that works for everyone. In reality, a combination of models is likely the answer. But to be clear, we are all mature, profitable businesses that want to pay for the tools that provide real value aligned with the Design Software Specification. We want modern tools in the hands of our users, achieving better efficiency and value. However, our hands are often tied through some of these licensing models. Those who put together this specification can offer our experiences with the models offered today:

% construction value - Charging by a % construction value moves the decision maker of which design tools to use to the one ‘paying all the bills’, the Client/ Budget Holder/Pension Investment Fund. Do you think they are best placed to make the commercial decisions about the design tools the project team should use? How effective or frankly time-consuming do you think it would be for design firms to have to justify each tool they want to use to, as an example, a pension investment portfolio funding the project? This is in addition to the complexities of how the construction value of a project is calculated and agreed upon and the ongoing administrative processes in place as the project budget fluxes and evolves through to completion.

Assigned users - Some might say we’ve had it ‘good’ with shared or floating licence pools within our companies (a maximum number of concurrent users, who release licences for others when not in use). That’s versus licences assigned to a named user, where anyone using the product, no matter how infrequently, requires an assigned licence. Naturally, this is great for investors, as on paper, many customers now need 50% more licences, but in the long term, that’s like loading a gun and aiming at your foot. With those floating licensing models that supported users ‘sharing’ a licence up to a concurrent amount, we effectively gave everyone access to your products. This enabled the organic growth of usage for the product as we found value. We’d buy more licences as your tools provided more value to more of our designers. In contrast, with assigned licences, if we must buy every designer a dedicated licence, at full cost, for a product not yet at its organic fit, we will probably end up restricting access to the products to a few early adopters. That limitation is unlikely to produce positive results for either the software developer or the design firm’s efficiency.
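To make the arithmetic behind that comparison concrete, here is a minimal sketch in Python. It is purely illustrative: the function name, the session format and the sample data are assumptions for this example, not taken from any vendor's licensing system. It counts how many licences the same usage log would need under an assigned model (one per distinct user) and under a floating model (the peak number of simultaneous sessions).

```python
def licence_counts(sessions):
    """Return (assigned, floating) licence counts for a usage log.

    sessions: list of (user, start_hour, end_hour) tuples (hypothetical format).
    assigned = one licence per distinct user, however rarely they use the tool.
    floating = peak number of sessions open at the same time.
    """
    assigned = len({user for user, _, _ in sessions})

    # Turn each session into a +1 event at its start and a -1 event at its end.
    events = []
    for _, start, end in sessions:
        events.append((start, 1))
        events.append((end, -1))

    # Sorting by (time, delta) processes a release before an acquisition
    # that happens at the same moment, so a handed-over licence counts once.
    events.sort()

    peak = current = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return assigned, peak


# Illustrative day: four designers, never more than two working at once.
sessions = [
    ("ana", 9, 12),
    ("ben", 10, 11),
    ("cho", 12, 17),
    ("dev", 13, 14),
    ("ana", 15, 16),
]
assigned, floating = licence_counts(sessions)
print(f"assigned licences: {assigned}, floating licences: {floating}")
```

In this made-up log, four people touch the tool but only two ever use it at the same time, which is the gap between the two models that the paragraph above describes.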

Consumption - Those contributing to this specification are mature businesses needing to forecast and plan operational expenditure against forecast fee earnings from our projects. Predictability and planning are a must for us. Licensing models that require us to gamble and roll the dice, predicting what level of token, consumable or vendor currency we need to buy to access tools over the year ahead, are a lose-lose for everyone. We either resent buying too many that expire or don’t buy enough (digging into unbudgeted costs). In this model, we can’t see how any design firm would reflect positively on the tool’s value. Like the previously mentioned model, the likely result is that the firm removes access to tools to avoid accidental or unplanned consumption, which is not a positive move for increasing usage or for the design firm realising the product’s actual value.

Overusage models - So long as there are accurate, accessible usage and consumption metrics, and structures to provide warnings/flags regarding usage, this model offers two main benefits: a fairer view of value by ‘only paying for what we use’ and the flexibility for firms to quickly adopt, grow and encourage usage of products (compared to the limitations of fixed licence assignments or limited users or tokens). However, we don’t think anyone would reflect positively on unforeseen or unexpected bills at the end of the month. Hence, metrics and warning structures are critical for this model.
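As a rough illustration of the kind of warning structure meant here, the Python sketch below flags usage against an included allowance and projects a month-end overage bill from the run rate so far. Every name, threshold and rate in it is a hypothetical assumption made for the example; it does not describe any vendor's actual metering or pricing.

```python
def usage_status(used_hours, included_hours, warn_ratio=0.8):
    """Classify usage against the included allowance.

    warn_ratio is an assumed threshold: flag once 80% of the allowance is used.
    """
    ratio = used_hours / included_hours
    if ratio > 1.0:
        return "over"
    if ratio >= warn_ratio:
        return "warning"
    return "ok"


def projected_overage_cost(used_hours, included_hours,
                           day_of_month, days_in_month, overage_rate):
    """Estimate the month-end overage bill by extrapolating the daily run rate.

    overage_rate is a hypothetical price per hour above the allowance.
    """
    projected_hours = used_hours / day_of_month * days_in_month
    return max(0.0, projected_hours - included_hours) * overage_rate


# Illustrative case: 60 of 100 included hours used by day 10 of a 30-day month.
print(usage_status(60, 100))
print(projected_overage_cost(60, 100, day_of_month=10,
                             days_in_month=30, overage_rate=2.0))
```

Today's figure alone looks comfortable, but the projection shows an overage bill building. That early signal is what lets a firm plan rather than be surprised at the end of the month.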

Future evolution of license models? Today, all the above models value the software’s consumption through dedicat-

The ADDD Marketplace is the eCommerce website dedicated to discovering AEC software & services.

Reach your ideal customers. Easy store creation & management.

Increase traction & sales.

Diverse AEC software options. Informed decisions from user reviews & descriptions.

2050 Materials API integrates climate data into design workflows, accessing 150,000+ products, 7,000 materials, and 1M+ data points for sustainable choices.

Site operations software for construction contractors. Insite removes slow, manual, and paper-based processes, enhances site-to-office communication, and tracks progress, quality and safety to ensure timely project delivery.

We are Remap. We are design technology generalists. We build software, develop add-ins for BIM environments and offer digital transformation consultancy. Get in touch - info@remap.works

By tapping into live data from your Revit models, we offer you real-time insights into the health of your projects. Our live data audits save time and resources.

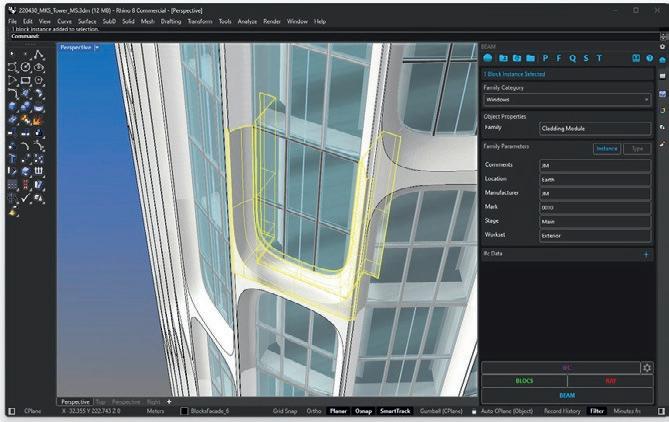

BEAM bridges Rhino, Revit & IFC for seamless BIM workflows. Trusted by global AEC firms, BEAM empowers architects to effortlessly create BIM elements in Rhino and transfer them to Revit or IFC.

Satori is a collaboration platform with a humancentric approach. Empowering organisations to efficiently design, build and operate with digital assets across their Real Estate.

Search amazing software, see our featured products below!

Howie is your AI-Copilot, in-house expert, who never retires, is always up to date, and extracts valuable insights from your data. New Gen Project Management System.

Simplify ISO 19650 compliance with integrated OIR, PIR, EIR, AIR, BEP, TIDP, MIDP, and more. Try automated model verification in our Clever Data Environment (CDE).

empowers architects, developers, real estate agents and municipalities to get quick and accurate access to building regulations through AI.

ed seats, usage, or tokens.

In the not-so-distant future, it is highly likely that, through the introduction of further automation, machine learning and AI, interaction with software (quantity of time, users, engagement or the number of tools needed) will be reduced.

As a result of AI, will we all reduce the number of licences, tokens, or products we need? How will the software vendors then position access and licensing models? How will product data and running services be monetised? The authors of this specification aren’t best placed to determine what this might look like but are well-positioned to support software developers in working out how this can work for everyone.

Where are we now?

Some leading AEC tools in use today have shifted towards poor value offerings over the past 5-10 years, where the ‘actual’ cost of using a single design tool has become almost incalculable against the genuine production output of a designer. A best-in-class modeller has a poor value position when only available as part of a bundle of 30 tools, especially where the majority are not updated or do not reflect modern software functionality. These spiralling costs to design firms become extremely hard to justify when these products do not fulfil fundamental design requirements or are not evolving at the pace of the AEC industry.

Many of the licensing models covered above lead to customers having to restrict or remove access to software to control expenditure. For emerging tools finding their place within design and engineering firms, these restrictions throttle the ability to quickly find organic usage and prevent firms from realising efficiency gains.

Running combinations of models, such as dedicated licences and consumption models, has dramatically increased administration for large companies, which constantly need to review and reassign licences to avoid overconsumption and obtain the true value of what has been purchased (in contrast to pool/shared licences with zero admin overhead).

Flexible access to software promotes and encourages product growth within a firm. Software accessing and resolving data or geometry across a data framework becomes a valuable feature of the software. However, it should not be a chargeable function for the customer/user.

For many, especially larger firms, longer-term models better support their ability to budget and plan design software usage.

Providing functionality for administering licences, users, consumables or overusage should be the primary focus of software developers before launching new licence models. While providing customers with tools to get the best value from the software may not seem commercially advantageous, it vastly increases trust and comfort around renewals each year and increases usage and loyalty between parties.

For example, this could include functionality to highlight users in the ‘wrong’ model or to advise where usage is peaking and teams are facing limitations that prevent them from working. If software developers can demonstrate real usage (value) through statistics, renewal conversations will be straightforward and focused on achieving more value in the year ahead. We want to pay for what we are using, and transparent usage metrics should not be an extra/chargeable offering to any user, let alone enterprise customers.