“The scientific way of thinking,” says Carl Sagan, “is…an essential tool for a democracy in an age of change.”

Dear Readers,

I start this issue by remembering Carl Sagan, an astronomer and a popular public advocate of sceptical scientific inquiry and the scientific method. Carl reminds us of the potential of science as a tool for advancing society. And I think that is where we are in the industry now.

As I welcome you to read this issue, I wish for you to remember that you bear the torch of technological transformation. In the data centre industry, we have much change coming as we tackle many challenges.

Primarily, there is the widely discussed subject of Sustainability. On that, I want to pause and ask, how much of it is talk and how much of it is action? I hope this issue helps you to look at the discussions and question what is missing, what you can add, and what you are changing.

I will also confess that my interest in Cooling has expanded while reading about the various methods being utilised in the industry. In my interview in the Autumn 2023 issue, Liz Cruz predicted a transition in liquid cooling that is now highlighted further in this issue’s feature.

Also, Cloud Computing & Storage remains the most diverse topic, and I have tried to include as many ideas as possible, from cloud repatriation to WAN acceleration and immutable object storage!

Finally, I want to share that this is my final issue of DCNN as the Editor. I have made a difficult decision to move on, but someone equally brilliant will be taking over. Be on the lookout for further updates, and I wish you a relaxing, yet riveting read!

Isha Jain
Editor

EDITOR: ISHA JAIN

T: 01634 673163

E: isha@allthingsmedialtd.com

GROUP EDITOR: CARLY WELLER

T: 01634 673163

E: carly@allthingsmedialtd.com

GROUP ADVERTISEMENT MANAGER: KELLY BYNE

T: 01634 673163

E: kelly@allthingsmedialtd.com

SALES DIRECTOR: IAN KITCHENER

T: 01634 673163

E: ian@allthingsmedialtd.com

STUDIO: MARK WELLER

T: 01634 673163

E: mark@allthingsmedialtd.com

MANAGING DIRECTOR: DAVID KITCHENER

T: 01634 673163

E: david@allthingsmedialtd.com

ACCOUNTS

T: 01634 673163

E: susan@allthingsmedialtd.com

Isha Jain engages in a conversation with Louis McGarry, Sales and Marketing Director at Centiel, discovering his personal journey into the world of uninterruptible power supply.

What liquid cooling means for carbon reduction in data centres

Data centre cooling: an imperative for innovation

How to unleash the full potential of data centre liquid cooling

Why immersion cooling is not yet a viable option for many data centres

Unlocking cooling efficiencies with rear door heat exchangers

Pulsant, UK provider of edge infrastructure and data centres, has cut the ribbon on its new £4.5 million data hall in Manchester and welcomed IT services leader, Dacoll, as its first customer in the upgraded facility.

The expansion supports the Manchester Digital Strategy’s 2026 targets of £1 billion investment in digital infrastructure and a 50% increase in digital sector businesses. This latest development to the Trafford Park site is part of platformEDGE, Pulsant’s national edge strategy to equip regional businesses with the infrastructure to capitalise on new technologies such as analytics, AI and IoT.

Recent research from LINX shows that bandwidth needs in Manchester have more than doubled, going from 135Gbps to 307Gbps in the last 12 months. Answering this demand, the Pulsant Manchester site spans four data halls with more than 400 racks, offering 1MW of power. Through Pulsant’s national data centre network and ecosystem of connectivity partners, Manchester businesses can also access global clouds, telcos and carriers via the LINX Manchester point of presence (PoP).

Pulsant, pulsant.com

Schneider Electric has announced a collaboration with NVIDIA to optimise data centre infrastructure and pave the way for ground-breaking advancements in edge artificial intelligence (AI) and digital twin technologies.

Schneider Electric will leverage its expertise in data centre infrastructure and NVIDIA’s advanced AI technologies to introduce the first publicly available AI data centre reference designs. These designs are set to redefine the benchmarks for AI deployment and operation within data centre ecosystems, marking a significant milestone in the industry’s evolution.

With AI applications gaining traction across industries, while also demanding more resources than traditional computing, the need for processing power has surged exponentially. The rise of AI has spurred notable transformations and complexities in data centre design and operation, with data centre operators working to swiftly construct and operate energy-stable facilities that are both energy-efficient and scalable.

In collaboration with NVIDIA, Schneider Electric plans to explore new use cases and applications across industries and further its vision of driving positive change and shaping the future of technology.

Schneider Electric, se.com

Stelia and Telehouse Europe have announced a partnership to launch the Stelia IX solution, a high-capacity Layer 3 internet exchange service. Stelia IX revolutionises global connectivity and data exchange efficiency with Telehouse Europe as a key anchor location.

The new partnership comes at a critical juncture as IP traffic volumes continue to surge with a 22% compound annual growth rate (CAGR) in western Europe. This trend is largely driven by the proliferation of content-rich applications such as video streaming, social media and gaming, which together account for around 80% of all data traffic, and it is leading to a significant shift toward more localised traffic patterns.

By facilitating efficient, high-capacity data exchange and reducing reliance on traditional transit services, Stelia IX enables Telehouse Europe clients to optimise their network costs and improve overall profitability. At the same time, it empowers them to extend their reach into distributed ecosystems, because the exchange is highly accessible to a wide range of organisations, from small-scale enterprises to established ISPs and content providers.

Stelia, stelia.io; Telehouse Europe, telehouse.net

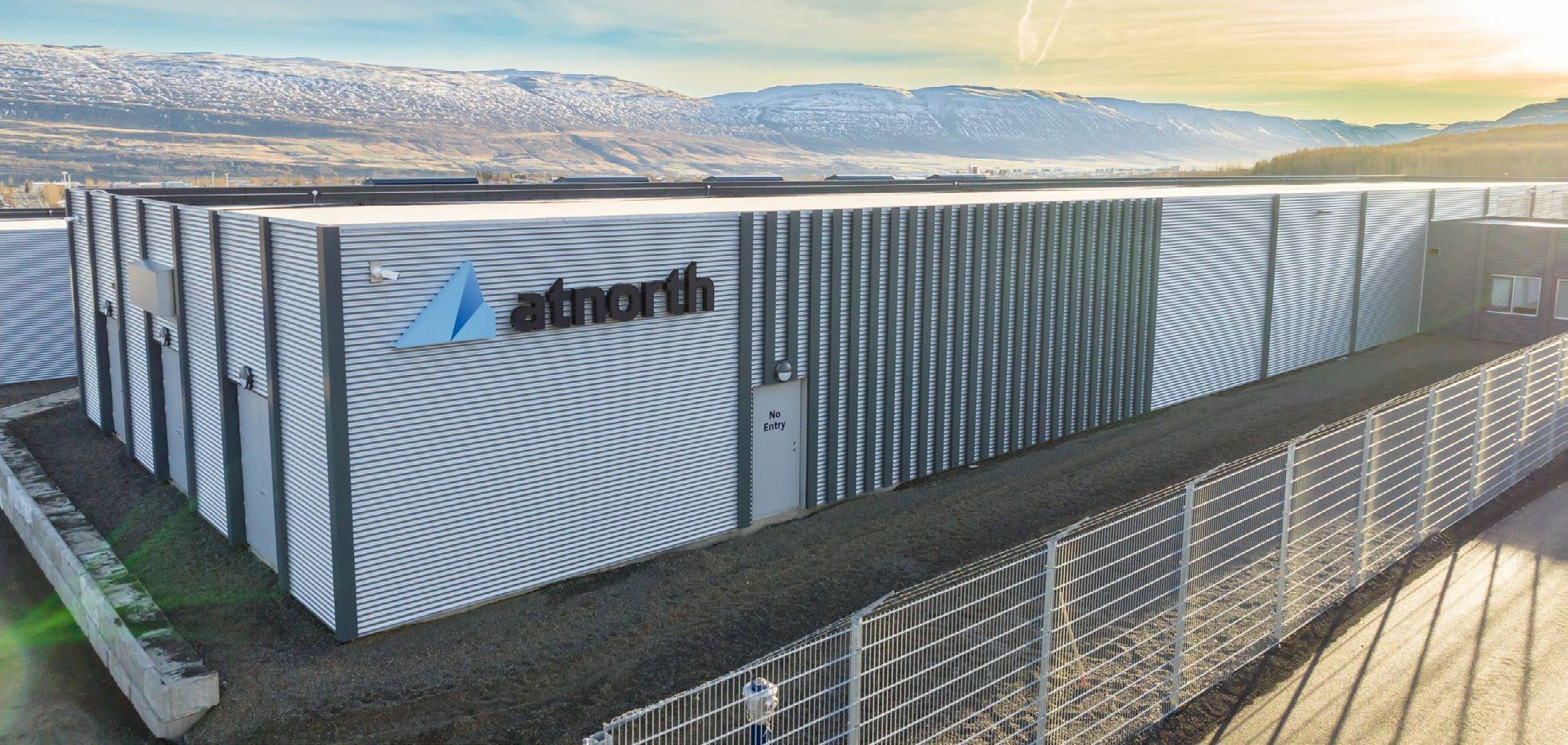

atNorth, a leading Nordic colocation, high-performance computing and artificial intelligence service provider, has announced an expansion of its partnership with Nordic IT services corporation, Advania, providing additional capacity at its ICE03 site in Iceland which opened last year.

Advania is a long-standing customer of atNorth at its ICE01 site in Reykjavík, in addition to some of atNorth’s other data centres in Sweden and Finland. The expansion to the ICE03 campus allows for further geographical separation of its infrastructure and highlights the business’ focus on data security, not to mention the benefits of redundancy and performance optimisation.

atNorth’s ICE03 data centre is located in the town of Akureyri, which is in the north of Iceland and, therefore, benefits from the country’s cool climate and renewable energy sources. Akureyri is becoming increasingly attractive as a thriving technology hub as a result of investment in better and more resilient connectivity in the region.

atNorth, atnorth.com

As the global community intensifies its focus on sustainability and energy efficiency, the intersection between technology and environmental responsibility becomes increasingly significant. As a result, techUK has released a new report shedding light on the potential benefits and challenges associated with integrating data centre heat into district heating networks in the UK.

The report, Warming Up to Efficiency: Understanding the Potential Benefits and Pitfalls of Data Centre Heat Export in the UK, delves into the opportunities, barriers, and successes of reusing data centre residual heat, offering insights for stakeholders across industries. District heating networks, renowned for their low-carbon heating solutions, stand as a platform to harness surplus heat generated by data centres. However, effective implementation hinges on addressing several critical factors.

The report underscores the importance of sustainability considerations and regulatory frameworks in advancing towards net zero objectives. It calls for government clarity on participation criteria, heat availability, quality standards, and infrastructure guidelines to establish a standardised and scalable approach.

techUK, techuk.org

Yondr Group has energised its first campus in Malaysia, marking a significant milestone in delivering the site’s power infrastructure.

Located in Johor’s Sedenak Tech Park, the campus is set to deliver 300MW of critical IT capacity when fully complete. It will see the development of multiple phases, with access to dark fibre connectivity, scalable utilities, and infrastructure.

The milestone reached in Johor brings the company a step closer to its aim of positively contributing towards Malaysia’s digital infrastructure. The Sedenak Tech Park, formerly known as Kulai Iskandar Data Exchange (KIDEX), is a flagship data centre complex, which spans 700 acres and is nestled in the heart of the larger 7,290-acre Sedenak Technology Valley.

This puts Yondr’s data centre development geographically close to a wide variety of technology-driven developments. This will ensure that its clients in Malaysia benefit from long-term scalability potential, in terms of both power and land requirements.

Yondr Group, yondrgroup.com

University of Lincoln sets sail on an edge data centre modernisation journey in collaboration with Schneider Electric and RMD.

Established around 25 years ago, the University of Lincoln is one of the newest centres of academia in the UK. Charged with enriching the city’s economic, social and cultural life, and listed in the world’s top 130 in the Times Higher Education’s (THE) Young University Rankings 2022, today it is also one of the top universities for student satisfaction.

The main university campus is situated in the heart of Lincoln, one of the great historic cities. Today, the city is a winning combination of old and new, where remnants of Roman Britain, a Norman castle and the Cathedral Quarter lie alongside a vibrant city square and the contemporary architecture of the university’s campus buildings.

To date, the university has constructed or acquired 25 buildings at a rate of approximately one per year, recently opening a substantial new student village. In terms of its significance to the local economy, out of every five people you might stop in the streets of Lincoln City, one is likely to be studying at the university, where just under 200 different courses are offered (independent numbers suggest 18,000 students of a total 103,000 urban population).

As an academic institution that has more or less been conceived and grown up in the internet age, its student population is tech-literate, and the university depends heavily on IT to support the many faces of college life. For example, the campus has become largely cashless in recent years. “You can’t buy a cup of coffee or a sandwich if the IT isn’t working,” says Darran Coy, Senior Infrastructure Analyst and Team Leader for Compute and Storage at the university. “Everything has to work 24/7.”

With IT and network uptime critical for the function of the university, its IT team supports a variety of services, some of which require large amounts of data storage and processing. For instance, at Lincoln Agri-Robotics (LAR), established at Lincoln University as the world’s first global centre of excellence in agricultural robotics, lightweight robotic vehicles are sent into fields for a variety of tasks, using image recognition in applications from the identification and eradication of pests and diseases in real time without synthetic pesticides, to monitoring, weeding and harvesting crops.

Elsewhere, Darran says many of the standard applications used by students and the university itself have moved to a Software-as-a-Service (SaaS) or cloud-based delivery model. Accordingly, downtime is a luxury the university simply cannot afford. “In times past we could arrange to shut down IT systems on, say, a Thursday morning to carry out essential maintenance and upgrades, and of course our weekends were completely free,” he says. “But today, many of our buildings are open all day and every day. So we have to make sure that everything is up and running all the time.”

“We open a new building nearly every year, and each one needs its own comms room. Despite the fact that we operate a central data centre, each comms room is populated with IT racks, including servers and networking equipment, together with all the necessary supporting infrastructure, including cooling, structured cabling, power distribution (PDUs) and power protection. It is the epitome of edge computing.”

These edge environments, distributed across the city centre campus and satellite campuses at Riseholme and Holbeach, provide Wi-Fi connectivity, enabling access to SaaS applications required by students and staff. These edge facilities are, therefore, mission-critical to academic and back-office operations. Each person has a unique IP address, allowing them, for example, to print documents and materials. Even those studying traditional subjects like geography and music use as much technology as the computer scientists, according to Darran.

“We have something like 1,000 teaching groups that rely on AV. For example, they’ve got big screens, sound systems and digital projectors, all kinds of cool stuff to enliven lectures and make information more consumable.”

The university is also a major user of Power over Ethernet (PoE). “All of our access points use PoE,” continues Darran. “And it’s also used to power other assets such as Raspberry Pi operated digital information displays widely used around the campus and security cameras. PoE requirements increase the need for reliable power in all situations.”

Like many universities, Lincoln works with outside companies on research projects as well as providing incubation services for innovations which may have wider market appeal. These sorts of activities are income-generating for Lincoln, and the IT which supports them needs to be robust and demonstrably resilient.

Power reliability is, therefore, a major challenge for the university. Given its location in the city centre, the utility is generally dependable, and since prolonged power blackouts are not seen as a major threat, there is no provision for secondary power generation to any of the university facilities. However, intermittent disruptions do occur to the main power supply, and there are occasional ‘brownouts’. Taken together, these are recurring problems which could present a threat to continuous uptime.

Consequently, the university depends heavily on uninterruptible power supply (UPS) systems to build resilience into its network. UPS systems provide battery backup in the event of a disruption to mains power, so that essential functions can continue operating as normal until mains power is restored. Given the distributed nature of the edge IT infrastructure around the college, a wide variety of UPS systems has been put in place. Currently, there are 110 APC Smart-UPS systems from Schneider Electric providing backup to essential assets.

Given the lack of power-generating equipment at the university, each UPS is specified with battery systems to deliver one hour’s runtime for the attached load. It had been the custom to add UPS support on an ad hoc basis as new buildings were built and fitted out with IT. In the early days, there was no systematic or coordinated approach to deploying UPS systems and, in fact, it was only the loss of expensive IT equipment at that time which made their use standard.
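As a rough illustration of what a one-hour runtime target implies, the sketch below estimates the nominal battery energy needed to carry a given load. The load figure, inverter efficiency and usable battery fraction are illustrative assumptions, not values from the university, RMD or Schneider Electric. Real sizing also depends on battery chemistry, discharge rate, temperature and ageing, so the manufacturer's runtime charts should take precedence.

```python
def required_battery_wh(load_w, runtime_h, inverter_efficiency=0.9, usable_fraction=0.8):
    """Estimate the nominal battery energy (Wh) needed to hold load_w for runtime_h.

    inverter_efficiency and usable_fraction are assumed placeholder values:
    the first covers conversion losses between battery and load, the second
    the share of nominal capacity that can be drawn before the UPS shuts down.
    """
    if load_w < 0 or runtime_h < 0:
        raise ValueError("load and runtime must be non-negative")
    if not 0 < inverter_efficiency <= 1 or not 0 < usable_fraction <= 1:
        raise ValueError("efficiency and usable fraction must be in (0, 1]")
    energy_at_load_wh = load_w * runtime_h
    return energy_at_load_wh / (inverter_efficiency * usable_fraction)


# Hypothetical comms-room load of 1.5 kW held for the one-hour target.
example_wh = required_battery_wh(1500, 1.0)
print(f"Nominal battery energy needed: about {example_wh:.0f} Wh")
```

Under these assumptions a 1.5 kW load needs roughly 2.1 kWh of nominal battery capacity, which shows why matching battery packs to the actual attached load matters more than picking the largest available unit.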

“The distributed edge nature of the university’s IT infrastructure and the ongoing expansion with new buildings, together with the growth in dependence upon SaaS and cloud services, have sometimes meant that infrastructure has not kept up with demand. We faced two tasks: the need to maintain and upgrade existing UPS systems to ensure they could deliver the required runtime, and the need to provide new Schneider Electric UPS and installation services in new construction projects. To help us, we partnered with RMD.”

Darran and the team began their relationship with RMD over a decade ago when the Schneider Electric Elite partner won a tender for the replacement of some aging APC Smart-UPS On-Line SRT units on site. Soon after, the university took the step to implement a programme to ensure regular inspection and maintenance of the UPS devices on which it is so dependent. “In many respects, Schneider Electric is a victim of its own success – the UPS were so reliable and worked so well we hadn’t really realised that many of them were well past their use-by date!”

Opting for a systematic approach to securing power by contracting with a specialist UPS service provider, the university selected RMD on the basis of an open tender. The contract covers various single- and three-phase UPS systems from Schneider Electric, and the approach to maintenance has since become much more proactive. RMD’s Scot Docherty says, “Our start point was to understand the condition of the UPS under contract using a simple traffic light scheme – there were a lot of red lights!”

Together, Darran and RMD started to renew the UPS and bring them up to spec. This ongoing programme covers the UPS installed in buildings, as well as adding UPS protection to some of the older campus buildings, which had never had the benefit of protection. In addition to the maintenance and modernisation services, RMD was also tasked to work with construction contractors to support them with sourcing and the installation of UPS to ensure power protection of edge server rooms in the new buildings.

The expertise of RMD has yielded benefits to the university, from procurement of UPS systems to maintenance and replacement, allowing the university to match new UPS systems to the exact requirements needed in each location.

“We’ve found it useful to involve RMD at the construction phase of each new building,” says Darran. “Sometimes a main contractor might recommend a UPS system that is wholly excessive to what we really need. Whereas, RMD, which has specialist expertise in the field, is much better placed to recommend what sort of UPS system we need and how many battery packs should be installed. So it’s great to have a relationship which allows us to ‘right-size’ our UPS requirements and, therefore, keep an eye on the efficiency and effectiveness of the proposed solution.”

The RMD relationship has made for a more systematic and regular approach to maintenance. “RMD knows us and our requirements and how we work,” says Darran. “Now, instead of waiting until something dies before replacing it, we have an ongoing system of regular maintenance and of replacing batteries and UPS units in accordance with their condition rather than their age.”
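The traffic light scheme and the move to condition-based replacement described above could be sketched along the following lines. The source does not say which criteria RMD uses, so the self-test flag, the measured runtime field and the amber margin are all hypothetical; only the 60-minute target reflects the one-hour runtime mentioned in the article.

```python
from dataclasses import dataclass


@dataclass
class UpsStatus:
    name: str                    # hypothetical asset label
    self_test_passed: bool       # result of the most recent battery self-test
    measured_runtime_min: float  # runtime observed at the present load


def traffic_light(ups, target_runtime_min=60.0, amber_margin=1.25):
    """Classify a UPS by condition rather than age (assumed criteria)."""
    if not ups.self_test_passed or ups.measured_runtime_min < target_runtime_min:
        return "red"    # cannot be relied on to meet the runtime target
    if ups.measured_runtime_min < target_runtime_min * amber_margin:
        return "amber"  # meets the target, but with little margin left
    return "green"


fleet = [
    UpsStatus("Library comms room", True, 95.0),
    UpsStatus("Science block comms room", True, 68.0),
    UpsStatus("Student village comms room", False, 80.0),
]
for ups in fleet:
    print(ups.name, "->", traffic_light(ups))
```

Sorting a report like this with red first gives a simple work list for battery and unit replacements, whatever the real assessment criteria turn out to be.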

Two other important measures have been implemented as a result of the relationship. Firstly, following the installation of Data Centre Expert, the monitoring software that is part of Schneider Electric’s EcoStruxure IT data centre infrastructure management solution, Darran is now able to manage and monitor all elements of the data centre infrastructure centrally, including UPS and cooling, to ensure maximum efficiency and reliability.

Data Centre Expert provides a scalable monitoring software solution that collects, organises and distributes critical device information to provide a comprehensive view of equipment. Importantly, the application provides instant fault notifications for quick assessment and resolution of critical infrastructure events that could adversely affect IT system availability.

The software gives Darran’s small team of six full visibility of infrastructure equipment spread widely across the campus in different edge locations, with the ability to prioritise remedial tasks in the event of unforeseen circumstances and respond more quickly to events.

Secondly, and further demonstrating how RMD’s expertise has benefitted the university, bypass panels as an aid to maintenance and replacement activities are now being installed as standard in the electrical design for infrastructure supporting the edge server rooms. “They’re not the cheapest things to put in, but they have saved us a lot of downtime. If a battery fails and needs to be replaced, for example, you just flick a switch to bypass the UPS and that allows you to keep IT services operating while you swap out any parts that need to be replaced.”

Immediate results from the university working with RMD and Schneider Electric include improvements to power availability as well as the serviceability of its infrastructure. By increasing temperature setpoints, the university is saving energy as a first step to moving towards becoming net zero carbon for IT services.

The improved monitoring and maintenance has resulted in a more efficient and reliable power-security environment that provides peace of mind to the IT staff and also presents opportunities for improvements in the area of sustainability. The insights from Schneider Electric APC UPS systems, APC PDUs and APC NetBotz sensors made available using Data Centre Expert software have enabled Darran and the IT team to collaborate more effectively with the university’s sustainability team tasked with improving the overall carbon footprint of the campus.

The IT team has been slowly raising temperatures in its comms rooms, which naturally means using less power on air conditioning, guided by insights from Data Centre Expert and custom software written by the IT team. “I can use query data to generate helpful graphs that provide an overview of whether the temperature is right in a room and where it can be appropriate to raise the operating temperature for better overall efficiency,” says Darran. “Being able to mine the data allows us to only use the power that we need.”
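The university's custom software is not described in detail, but the kind of analysis Darran mentions might look something like this minimal sketch. It works on temperature readings already exported into a plain dictionary and does not call any Data Centre Expert interface. The room names, readings, inlet limit and safety margin are all hypothetical placeholders; in practice the limit would come from the equipment and battery warranty requirements.

```python
def rooms_with_headroom(readings_c, max_inlet_c=27.0, margin_c=2.0):
    """Return {room: headroom in °C} for rooms that could run warmer.

    A room qualifies when its hottest recorded reading is at least margin_c
    below max_inlet_c. Both thresholds are assumed values for illustration.
    """
    candidates = {}
    for room, temps in readings_c.items():
        if not temps:
            continue  # no data, so make no recommendation for this room
        headroom = max_inlet_c - max(temps)
        if headroom >= margin_c:
            candidates[room] = round(headroom, 1)
    return candidates


# Hypothetical readings in °C from sensors in three comms rooms.
readings = {
    "Comms A": [21.0, 22.5, 23.0],
    "Comms B": [25.5, 26.4, 26.0],
    "Comms C": [],
}
print(rooms_with_headroom(readings))
```

Using the hottest reading rather than the average is a deliberately cautious choice: a room is only flagged if even its warmest moment leaves the chosen margin.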

In addition, monitoring using Data Centre Expert software together with NetBotz sensors ensures that servers, as well as the UPS batteries, are kept within recommended temperatures. This ensures that warranty requirements are maintained and the batteries are in an environment that maximises their useful lifecycle. Another benefit is that equipment changes can be planned according to their condition rather than their age.

On the recommendation of RMD, physical infrastructure in edge locations is now being deployed in new builds with bypass switches as standard and upgraded in older installations, improving the efficiency of maintenance operations with no break in IT services.

“We enjoy working with RMD – over the years, their site engineers have given us straight advice, which we’ve found to be trustworthy. This is backed up by the quality of Schneider’s products and solutions. They not only help us deliver a first-class student experience, but also help us to achieve our efficiency and reliability goals whilst working towards greater sustainability. Together, we’re giving an edge to the education of all those choosing to enrich their lives by studying at the University of Lincoln,” concludes Darran.


Jad Jebara, President and CEO at Hyperview, illuminates the path for companies to bridge the widening skills gap, safeguarding the flourishing data centre industry’s future.

In an age ruled by data, the data centre industry has become the backbone of modern society, supporting the infrastructure that underpins our interconnected world. However, a critical challenge now threatens the stability and growth of the industry: the widening skills gap.

According to the Uptime Institute, 58% of companies reported difficulty sourcing qualified candidates to fill vacancies in 2023, up from 53% in 2022. This shortage, if not addressed promptly, poses a major existential threat to the data centre industry, and to every modern business that relies on digital services and storage. Not only should this challenge be taken seriously, but it should be addressed as the number one threat to the industry.

The rapidly evolving technology landscape is looked at by so many of us with excitement, and rightly so. However, it also presents a range of new challenges for data centre operators to grapple with. Workloads are shifting beyond traditional data centres, leading to a more complex and distributed infrastructure.

Gartner predicts that by 2025, 85% of infrastructure strategies will integrate core data centres, edge data centres, colocation, and cloud services. To navigate this complexity, personnel now require a specialised set of skills spanning various disciplines.

The talent shortage in the data centre industry not only jeopardises its ability to meet growing technological demands, but also poses a significant threat to the industry’s transition toward a greener, low-carbon future. The shift towards sustainable practices and the development of environmentally friendly data centres demand expertise in energy-efficient technologies, renewable energy integration, and innovative cooling solutions.

Finding qualified candidates with the required skill set has become a daunting task. Challenges arise not only due to the rapid evolution of technology, but also because established professionals in the field are in high demand. Poaching is nothing new, but it is certainly on the rise. The Uptime Institute found that 42% of operators reported issues with staff being hired away, primarily to competitors. This represents a considerable jump from 17% in 2018.

The industry is also confronting the impending retirement of many experienced professionals. The workforce within the industry tends to be on the more mature side, as indicated by a Data Centre Knowledge survey, which found that only 13% of respondents were below the age of 44. The Uptime Institute reported that by 2025, at least 2.3 million personnel will be required globally to keep data centres operating. Yet, by this time, half of the world’s engineering staff are projected to retire, creating an enormous void of talent to fill.

As the data centre landscape continues to evolve, operators must prepare for emerging technologies such as artificial intelligence and machine learning. A recent Capgemini survey highlighted the growing concern, with 59% of respondents considering the gap between talent availability and open roles a top business risk.

To stay ahead of the curve, data centres must start to look for other ways to fill the skills gap, such as proactively implementing training and development programmes for graduates entering the workforce. Efforts must be intensified to establish trade school and graduate talent pipelines to the data centre that prevent other sectors from poaching such valuable talent. Additionally, focus should be directed inward to retain current staff. By providing thorough training on emerging technologies and relevant regulations, these initiatives can help existing employees gain the necessary skills to continue a long, successful career in the modern data centre environment.

To broaden their talent pool, operators should start looking internationally for talent. Companies such as Deel.com provide opportunities to hire resources across the globe, breaking down geographical barriers. Improving recruitment strategies is equally vital, focusing on networking opportunities, role-model presentations, and career progression. By emphasising the importance of skills in project management, technical networks, data management, cyber security, and multi-cloud environments, the industry can attract and retain top talent.

Another important step to bridging the skills gap is to implement next-generation data centre infrastructure management (DCIM) applications into data centre operations. These technologies streamline management, automate tasks, and leverage AI and machine learning to enhance efficiency, resiliency and sustainability. The importance of evolving process maturity levels to achieve the four R’s - Recruit, Retain, Retrain and Reward - cannot be overstated. Next-gen DCIM technologies operate at maturity level five, in contrast to traditional processes, which typically sit at level one or two.

Currently, data centres are in transition towards a greener future, and DCIM software is central to this. Its integration provides real-time insights into energy consumption and supports forecasting energy needs, optimising temperature settings, benchmarking performance, and measuring progress. This level of automation becomes especially valuable during talent shortages, offering substantial support to ensure operational efficiency and environmental responsibility.

Hyperview, hyperviewhq.com

Isha Jain speaks to Louis McGarry, Sales and Marketing Director at Centiel, to learn more about his personal journey into the world of uninterruptible power supply (UPS).

IJ: Tell us about yourself and how you got into the sector.

LM: I left Bournemouth University with a degree in Design Engineering, and at the time, I didn’t even know UPS systems existed! I wanted to design aircraft for a living, but hours spent at a CAD machine turned out to be uninteresting. I applied for a sales engineering job and Centiel’s now Chairman, David Bond, was on the interview panel. Later, I received a copy of The UPS Handbook (of which David is the author) in the post, with a note asking me to read it and come back for a second interview. That was 18 years ago and I’ve never looked back.

IJ: For those who may not know, can you give us an overview of the work that Centiel does?

LM: Centiel is a Swiss-based technology company designing, manufacturing and delivering industry-leading quality power protection solutions for critical facilities. The individual members of its R&D team developed and brought to market the first three-phase transformerless UPS and the first, second, third and fourth generations of true modular, hot-swappable UPS. Centiel’s products protect critical power loads in locations across the world.

IJ: What is your role like, and what has been your greatest achievement?

LM: I wear several hats. I’m busy with client meetings, I work to ensure my team is aligned, I’m involved in marketing and there is a significant strategic side to my role too. My greatest achievement is growing a team of individuals who can plan projects and support clients on a much bigger scale than I did when I started out. I try to impart knowledge early, so they can achieve great things. Centiel is all about quality - from our Swiss manufacturing base to our industry-leading solutions, to our client relationships. This all begins with strong foundations and the training of our team of trusted advisors.

IJ: Are there any exciting projects that you are working on at the moment?

LM: We are currently working on projects across different industries, from transport to banking and finance to healthcare. We have recently launched an IP54 UPS, making our products suitable for use in semi-industrial settings, opening another market sector for Centiel.

IJ: Where does UPS fit in the overall sustainability of data centres?

LM: Sustainability means making informed decisions today which will have a positive impact on tomorrow. However, up until recently, the most sustainable options have often been overlooked in favour of the lowest purchase price. Now, due to the dramatic increase in energy costs, customers are seeking solutions which save energy and have a low total cost of ownership (TCO). The introduction of StratusPower, with its 30-year design life, 9-nines availability and almost 98% efficiency, can help organisations to move away from a ‘throw away’ culture with a genuinely sustainable offering that also helps them reduce their TCO.
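To put the availability and efficiency figures in this answer into everyday units, here is a small back-of-the-envelope sketch. It assumes that "9-nines" means 99.9999999% availability, which is the usual reading, and it uses a hypothetical constant load; neither calculation is Centiel's own method, and a quoted availability is a statistical design figure rather than a guarantee for any single installation.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # non-leap year
HOURS_PER_YEAR = 365 * 24


def downtime_seconds_per_year(nines):
    """Expected annual downtime for an availability with this many nines."""
    unavailability = 10 ** (-nines)  # e.g. 9 nines leaves 0.000000001
    return SECONDS_PER_YEAR * unavailability


def annual_loss_kwh(load_kw, efficiency):
    """Energy lost inside the UPS per year while feeding load_kw continuously."""
    input_kw = load_kw / efficiency
    return (input_kw - load_kw) * HOURS_PER_YEAR


# Nine nines works out at roughly 0.03 seconds of expected downtime a year.
print(f"{downtime_seconds_per_year(9) * 1000:.1f} ms per year")

# Hypothetical 100 kW load: losses at 98% efficiency versus an assumed 94% unit.
print(f"{annual_loss_kwh(100, 0.98):.0f} kWh lost per year at 98%")
print(f"{annual_loss_kwh(100, 0.94):.0f} kWh lost per year at 94%")
```

The comparison efficiency of 94% is only a placeholder to show how a few percentage points compound over a year of continuous operation, which is where the TCO argument comes from.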

IJ: What does the future hold for Centiel products within the data centre industry?

LM: Centiel is a technology company which uses technology to solve challenges – these just happen to be in the UPS industry. We were initially focused on maximising availability and energy efficiency, but these technology challenges are now solved and we are focused on sustainability and how energy can be best managed in the future, especially for data centres. StratusPower is already hardware-enabled and is therefore future-ready to accept and harness alternative energy sources.

IJ: Is there a topic you believe needs more attention and discussion in the industry?

LM: Sustainability must be addressed. The world is already behind the curve and conversations in board rooms have simply been too late to achieve the UN’s 2030 sustainability goals. In the UPS industry, we can make a difference today to ensure organisations are more energy efficient, and there are longer term measures which can be brought in too. People are often worried about change, but we have no choice.

IJ: What are some challenges you face as a UPS manufacturer?

LM: Although Centiel’s team has led development in the UPS industry for many years, the company is relatively young. Established competitors have deep marketing pockets, yet their technology is not as good or as sustainable as ours. Our main challenge, therefore, is an ongoing one around education. However, our specialist team offers free training and acts as a trusted advisor to help organisations make informed decisions about different UPS solutions and help them work out what the best long-term options are.

IJ: What’s next for you and your career?

LM: To continue to grow and develop along with Centiel. I want to be the leader of a team that helps to revolutionise critical power protection in data centres and delivers excellent advice and customer service. I hope to leave a legacy of people who can carry on supporting clients with our solutions for many years to come.

IJ: What are your interests away from work?

LM: I love spending time with my family. I have three young children and act as transport to all manner of activity clubs, from cubs, squirrels, karate and rugby to swimming – not necessarily in that order!

Centiel, centiel.com

As data centre facilities produce a significant carbon footprint, with high-power densities pushing air cooling to its limits, they will soon require specialised cooling solutions. Junji Zhu and Maria-Anna Chatzapoulou, Principal Engineers – Mechanical at Cundall, explain further.

The journey towards net zero carbon practices is approaching its culmination: action. Regulatory bodies are now focusing on the effects of embodied carbon and Scope 3 emissions.

Embodied carbon refers to the total emissions from producing and transporting the materials of a building, such as the emissions released when processing raw materials, transportation, construction, and the end-of-life disposal of materials. This is relevant for companies that want to invest in data centre development and retrofitting, as European companies must report indirect emissions through their value chains from 2025.

One of the challenges is to develop net zero carbon data centres while covering the demand for high-performance computing (HPC). To do this, companies must look to systems that consider all of their stakeholders across the whole supply chain. Additionally, they are likely to deploy liquid cooling technologies to cope with the associated steep increase in IT density.

There is a need for a standardised approach to measuring net zero carbon data centres, but there is currently no global agreement on what this should entail. However, some initiatives seek to achieve this, for example, the iMasons Climate Accord Group. The lack of standardisation has made it difficult for companies to accurately report their emissions, leading to concerns about greenwashing.

Whole Life Carbon Assessments (WLCA), starting from material extraction, are becoming more comprehensive and require a standard metric for embodied carbon impact assessment. Whilst the sector-wide metric of kgCO2e per m2 is used, the metric of kgCO2e per kW of IT capacity might be better suited when evaluating operating efficiencies, particularly considering the imminent growth of high-density HPC servers. This will provide a more accurate representation, improving audit processes.
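The difference between the two metrics can be seen in a minimal sketch. The figures below are hypothetical and chosen only for illustration; the function name is not taken from any standard or tool.

```python
def carbon_intensity(embodied_kgco2e, floor_area_m2, it_capacity_kw):
    """Normalise embodied carbon per m2 of floor area and per kW of IT capacity."""
    per_m2 = embodied_kgco2e / floor_area_m2
    per_kw = embodied_kgco2e / it_capacity_kw
    return per_m2, per_kw

# Hypothetical example: the same 5,000 m2 building with two fit-outs.
# The HPC-ready fit-out carries more embodied carbon but doubles IT capacity.
standard = carbon_intensity(10_000_000, 5_000, 5_000)
hpc_ready = carbon_intensity(11_000_000, 5_000, 10_000)

print("standard  (kgCO2e/m2, kgCO2e/kW):", standard)
print("HPC-ready (kgCO2e/m2, kgCO2e/kW):", hpc_ready)
```

In this made-up case the HPC-ready fit-out looks worse per m2 but better per kW of IT capacity, which is the kind of distinction a density-aware metric is meant to surface.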

The technologies of the future require HPC. Many organisations building their data centres will have to invest in campuses that increase their IT capacity in MW and rack density. However, transitioning is expensive, and by current requirements and predictions, unnecessary at an entire data centre scale. Instead, organisations should look to construct their data centres to be HPC-ready, utilising sustainable, targeted cooling systems such as liquid cooling. By being HPC-ready, companies can take advantage of technologies such as AI, while the data centre itself continues to operate with a low PUE.

HPC pushes traditional air-based cooling to its limits, potentially increasing costs and carbon emissions and impacting sustainability. In some instances, air-based adiabatic cooling systems, particularly at scale, may see a high demand for water consumption when trying to cool HPC racks, as the racks need to operate at a lower temperature with higher air speeds, making liquid cooling more efficient in these use cases. As the number of HPC racks and their densities increase, liquid cooling deployment will also increase. These systems can manage the increased energy consumption of HPC racks in a targeted way that doesn’t affect the standard rack systems around them.

There are two main groups of liquid cooling technology suitable for HPC: direct-to-chip and immersion/precision-immersion cooling. Another technology often discussed as liquid cooling, although strictly speaking it is not, is the Rear Door Heat Exchanger (RDHx), which can also be suitable for HPC. Each has different benefits, but the main advantage of using them is that they can circulate liquid precisely to cool HPC environments without affecting the standard air-cooled racks often situated close to them.

With direct-to-chip or immersion liquid cooling, it is possible to achieve the same IT processing capacity using less power input to the rack due to the decreased demand for server fan power. This can be in the region of 10%, but does not contribute to increasing the PUE as it sits on the IT power side of the equation. In addition, liquid has a far greater heat absorption rate than air, which can transport the heat from the racks more efficiently and at high liquid temperatures, potentially eliminating the need for compressors to reject the heat.
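The point about the IT side of the equation can be illustrated with the usual PUE ratio (total facility power divided by IT power). The sketch below uses hypothetical numbers and assumes, purely for illustration, that facility overhead scales in proportion to IT load; with a fixed overhead the ratio would shift instead.

```python
def pue(it_power_kw, overhead_kw):
    """PUE = total facility power / IT power."""
    return (it_power_kw + overhead_kw) / it_power_kw

OVERHEAD_RATIO = 0.2   # hypothetical: overhead assumed proportional to IT load
FAN_SAVING = 0.10      # "in the region of 10%", per the text above

it_before_kw = 1000.0                           # hypothetical IT load
it_after_kw = it_before_kw * (1 - FAN_SAVING)   # same compute, less server fan power

pue_before = pue(it_before_kw, it_before_kw * OVERHEAD_RATIO)
pue_after = pue(it_after_kw, it_after_kw * OVERHEAD_RATIO)

total_before_kw = it_before_kw * (1 + OVERHEAD_RATIO)
total_after_kw = it_after_kw * (1 + OVERHEAD_RATIO)

print(f"PUE before: {pue_before:.3f}, after: {pue_after:.3f}")
print(f"Total power before: {total_before_kw:.0f} kW, after: {total_after_kw:.0f} kW")
```

Under these assumptions the total power drawn falls while the PUE figure stays the same, so the fan saving is real but invisible in the ratio.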

Organisations that use HPC technologies must understand their carbon footprint and the technologies they use to comply with sustainability regulations. A hybrid approach, rather than a complete transition, will be essential for most in the future. It will be crucial for legacy facilities, as it can help operators transition to more efficient systems while increasing IT capacity.

Cundall is already taking steps to address the need for a net zero carbon data centre. Its team is producing what a data centre of this kind would look like in practice and what operators need to consider when designing one. It has developed tools to measure embodied carbon affecting different areas of the project development. This approach to definition, measurement and reporting is significant.

As AI and HPC continue to increase IT capacity demand in data centres, liquid cooling will become far more relevant. However, only with a sustainability-led mindset can we realise its actual impacts and utilise the technology to the fullest.

Cundall, cundall.com

The rise of immense computing power presents unique cooling challenges. Nikolai Chakinski, Product Manager, Colocation at Neterra, shares that liquid cooling solutions are well-positioned to address these challenges.

The data centre services industry is experiencing a period of unprecedented growth, fuelled by the ever-increasing demand for computing power. This surge is particularly pronounced in the realm of high-performance computing (HPC). The raw computing power of data centres comes from two workhorses: Central Processing Units (CPUs) and Graphics Processing Units (GPUs). While each excels in specific tasks, both share a key characteristic: they’re becoming increasingly powerful, cramming more processing muscle into smaller physical footprints. This miniaturisation, while impressive, comes at the cost of more heat generation.

This translates to a rapid rise in data centre power consumption, pushing the boundaries of traditional cooling methods like air conditioning. These systems, while effective for a time, are struggling to keep pace with the thermal demands of modern data centres.

Air conditioning systems were not designed for the intense heat loads generated by today’s high-powered servers. They’re becoming increasingly inefficient and expensive to operate, struggling to meet the cooling requirements of these ever-more-powerful machines.

Traditional air conditioning systems have a practical upper limit of around 6-7kW on average cooling capacity per rack. This falls short for the growing number of racks demanding 12kW or more, highlighting the need for innovative solutions.

Liquid cooling solutions offer a significant leap forward in cooling capabilities compared to traditional air conditioning. They boast superior heat dissipation, improved energy efficiency, and the potential to reduce operating costs. There are two primary types of liquid cooling solutions: immersion cooling and liquid cooling with heat exchangers.

Immersion cooling stands as the most efficient liquid cooling technology available today. In these systems, servers are submerged in a special dielectric fluid, which directly absorbs the heat they generate.

This method offers the highest cooling capacity and the lowest energy consumption of all liquid cooling solutions, making it ideal for the most demanding applications such as AI and HPC.

Direct-to-chip or cold plate liquid cooling provides another effective liquid cooling option. This approach brings the liquid closer to the heat source and involves circulating a coolant through a cold plate heat exchanger located on the chip. The heat dissipating from the computer chip is absorbed into the coolant loop.

Compared to air conditioning, cold plate liquid cooling offers increased cooling capacity and improved energy efficiency. This makes it a suitable solution for applications requiring moderate cooling capabilities, such as general-purpose computing.

Liquid cooling solutions hold the potential to significantly reduce the environmental impact of data centres by optimising energy consumption and water usage.

Immersion cooling, in particular, shines in terms of energy efficiency, requiring less energy to remove the same amount of heat compared to other methods. Additionally, liquid cooling systems can be repurposed to capture waste heat from data centres, which can then be used for building heating, for example. The sustainable approach here demands reusing the energy one way or another.

As the data centre industry continues to evolve, innovative cooling solutions will play a central role in driving progress. Liquid cooling, with its superior cooling capabilities and energy efficiency, represents the path forward for building data centres that are truly future-proof.

Neterra’s latest data centre, SDC 2, exemplifies this commitment by utilising cooling technologies to cater to the growing demand for high-performance computing while also prioritising sustainability. The facility leverages a combination of advanced cooling and structural engineering techniques to minimise energy consumption and environmental impact.

As the data centre industry continues its journey of transformation, liquid cooling solutions stand ready to play a pivotal role in driving innovation.

Neterra, neterra.net

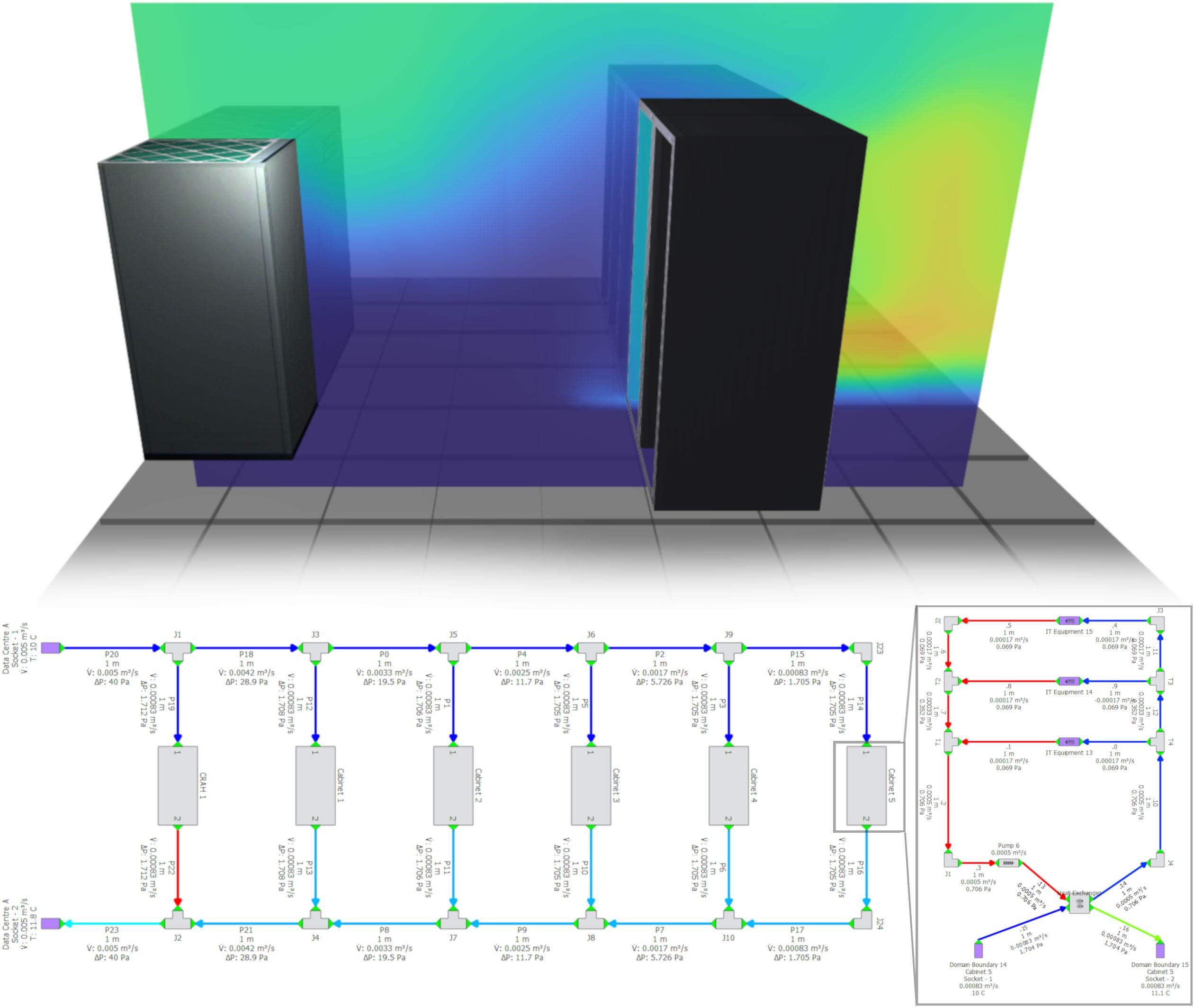

Mark Seymour, Distinguished Engineer at Cadence, invites readers to see how data centre digital twins can ensure liquid cooling reaches its full potential.

Reducing emissions is a top priority for leaders across the globe, pushed by the demands from both governments and customers. The data centre industry is no different. Luckily, there is good news. Those looking to drive both sustainability and efficiency can consider liquid cooling as part of the solution.

However, facility leaders and operators need an in-depth understanding of the technology to introduce it effectively. That includes knowing not just the pros, but also the limitations, and how tools such as data centre digital twins can help to ensure liquid cooling reaches its full potential.

The first challenge stakeholders must navigate is the integration of liquid cooling with legacy air cooling infrastructure in traditional data centres. Coordinating the intricate flow networks of both systems can be complex. Liquid cooling has the potential to disrupt established air cooling patterns, so it requires the careful retrofitting of existing infrastructure. Moreover, new liquid cooling technology that can work harmoniously with older systems could be costly for legacy data centres that have already invested heavily in air cooling systems.

Operational complexity can arise even in new facilities that incorporate liquid cooling from the get-go. After all, introducing fluid connections is an entirely different setup from what many data centre professionals are used to.

Immersion cooling, where servers are submerged in a mineral oil (or equivalent) bath, or cold plate technology, where a metallic plate with coolant inside removes heat directly from the components, introduces a completely new operational paradigm. It requires specialised training and expertise to navigate this unfamiliar territory and ensure efficiency and safety. In short, the transition from air to liquid cooling demands new skills.

Even with a willingness to embrace new methods, practical limitations exist. Theoretically, immersion liquid cooling should remove 100% of the heat from chips into the liquid. However, material incompatibilities and the systems’ reliance on buoyancy-driven flow create limitations. For example, in the immersion model, the insulating plasticisers in wires can react with the coolant, causing degradation and brittleness and impacting the equipment’s longevity. Furthermore, as chips become more densely packed to facilitate higher power densities, immersion systems may find it difficult to remove heat through a buoyancy-driven flow mechanism.

Cold plate technology offers an alternative approach but comes with its own dilemmas. Subpar coolant quality can clog and corrode the plate, diminishing the efficiency of heat removal. Because the hot chips and the cold plate cannot be effectively insulated from the air, and cold plates are generally only applied to critical chips (for example, CPUs, GPUs and DIMMs), a portion of the heat will escape into the surrounding environment. Therefore, while offering advantages in certain scenarios, cold plate technology still requires careful consideration and will not be a blanket solution for all data centre cooling needs.

In light of the inherent challenges associated with cold plate and immersion cooling technologies and the absence of a clear frontrunner for widespread adoption, facility owners and operators are tasked with critical evaluation.

Data centre digital twins, virtual replicas of physical data centre facilities, can offer the insight needed to help liquid cooling decision-making. These valuable tools enable different cooling scenarios and technologies to be modelled and trialled in the digital realm, so operators can make informed decisions about implementing changes in the real world. This helps maximise performance and minimise downtime, which are both key measurements for whether the introduction of liquid cooling has been successful.

Turning to digital twins to implement liquid cooling effectively will also put data centres in a stronger position to meet the regulations looming on the horizon. This includes the Energy Efficiency Directive (EU/2023/1791) and Corporate Sustainability Reporting Directive (CSRD), both of which mean larger companies must disclose their carbon usage figures, both direct and indirect. The reason is that digital twins enable the design, implementation and operation of liquid cooling to achieve carbon and energy reduction goals. In addition, digital twins can provide customised dashboards and reports to help facilities oversee the necessary information for upcoming regulations.

Clearly, despite the hurdles, the potential of liquid cooling remains significant - it is a lucrative pathway to improved efficiency for data centres. However, this is not a solution that data centre managers can simply plug and play. Implementation cannot be rushed and requires intelligent insight. To manage the infrastructural and operational complexities of liquid cooling, operators need a safe test bed for trialling its introduction. Digital twins can offer this and help drive success.

Cadence, cadence.com

Considering a wide range of existing data centre cooling environments, Paul Mellon, Operations Director, Stellium Datacenters, offers his viewpoint on immersion cooling.

To say that immersion cooling is not yet a viable option in many data centres is quite a bold statement in an environment where immersion cooling can achieve the highest level of cooling efficiency. There are many in the data centre and communications industry who view immersion cooling as a panacea to energy inefficiency. In many ways, it has the capability to bring rack power density and efficiency to new levels.

However, this comes at a price in terms of the immersion environment, which is usually a tank filled with dielectric fluid. Both the tanks and the dielectric fluid bring a new range of issues for the data centre environment to embrace:

• Weight: Fully loaded tanks can weigh from 500kg to several thousand kilograms, requiring 20kN+ floors to support them.

• Physical size: These can vary in size from 1,000mm x 800mm x 1,500mm high to 6,000mm x 2,000mm x 2,000mm high.

• Dielectric fluid handling: This is a significant issue. Depending on the specific dielectric, there can be health and safety issues, as well as the practical issue of dealing with 230/400V in a dielectric environment.

• Power density: HPC power densities can be very challenging for many of the mature data centres.

• Power and communication interfaces with the tank.

• Removal/reinstatement of IT kit from the immersion tank.

• Specialist training for staff: We are already challenged by a significant deficit of trained talent in the data centre industry, so the additional training will add to an already challenged situation.

While none of these elements are particularly challenging for a green field development, many of the 3,000 existing data centres in North America, plus another 3,000 in Europe will struggle to accommodate access, weight, space and staff training. Currently, there are only a dozen or so data centres in the world which are OCP (Open Compute Project) certified, Stellium Datacenters being one which can deliver on these elements.

In time, the immersion solution will evolve into a product that will be more favourable to deployment in a broader range of data centre environments. Currently, it is primarily used by clients who have purpose-built facilities specifically designed for this cooling method. There are also the few within the existing data centre pool that, with significant investment, can be adapted for immersion cooling.

This may all sound too negative in terms of immersion cooling. As an engineer, Paul has been trained to design the most efficient system, as well as ensuring the design works in an ‘existing world’ environment. He has the view that the world will take some time to evolve to a point where immersion cooling becomes an off-the-shelf solution to fit into a wide range of existing data centre cooling environments.

There are some 1.3bn internal combustion engine cars in the world today, alongside 26 million fully electric cars. In the last three years, this number has begun to move at a faster pace to fully electric vehicles. The product is more refined and practical. It has taken some 20 years for the motor industry to evolve to this position. HPC immersion cooling as a design, like the electric car, will continue to evolve and mature to a product which is readily deployable to a wide range of data centre facilities, instead of the current purpose-designed immersive cooling facilities.

So, the expression ‘a bridge too far’ might be better expressed as ‘a bridge too soon’, certainly for the very many existing data centres that are not OCP certified and cannot meet the fundamental requirements of immersion cooling. Therefore, in the interim, the existing range of HPC cooling solutions will continue to deliver robust, efficient HPC solutions for clients:

• In-row cooling for application up to 40kW per rack

• Rear door cooling up to 50kW

• Direct-to-chip/cold plate up to 100kW

These are all tried and tested robust solutions that can freely operate within the existing data centre environment. They have been deployed in many existing data centres. All can be configured to deliver a PUE of sub-1.2. In many ways these non-immersive options maintain the flexibility of the traditional data centre to evolve over time.
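As a rough illustration, the per-rack limits listed above can be turned into a simple lookup. This is a minimal sketch: the thresholds are the ones quoted in this article, while the function and variable names are hypothetical.

```python
# Upper limits in kW per rack, as quoted in the list above.
COOLING_LIMITS_KW = [
    ("in-row cooling", 40),
    ("rear door cooling", 50),
    ("direct-to-chip/cold plate", 100),
]

def candidate_cooling(rack_kw):
    """Return the non-immersive options whose quoted limit covers the rack load."""
    return [name for name, limit_kw in COOLING_LIMITS_KW if rack_kw <= limit_kw]

for rack_kw in (30, 45, 80, 120):
    print(rack_kw, "kW:", candidate_cooling(rack_kw) or "beyond the quoted limits")
```

A real selection would also weigh floor loading, water supply and existing plant, none of which this lookup attempts to model.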

The term, flexibility, is really important. Taking an existing rack and fitting it with a rear door cooler in one of the 6,000 data centres in Europe and North America offers an immediate solution to support HPC demands. This route to HPC also has the real value of extending the life of existing data centre facilities without creating the significant construction carbon burden of building new facilities.

Stellium Datacenters, stelliumdc.com

Data centre organisations are striving to balance innovation with sustainability, especially when it comes to finding efficient cooling solutions for the data centres powering AI infrastructure. John Hall, Managing Director, nLighten UK, says that rear door heat exchangers can be a promising solution.

Rear door heat exchangers, also known as rear door coolers or heat exchanger doors, provide a targeted cooling solution for high-density server racks. By directly cooling the exhaust air expelled from servers, these heat exchangers optimise airflow management, ensuring that hot spots are mitigated effectively. This targeted cooling approach enhances the overall efficiency of the cooling system, enabling data centres to maintain optimal operating temperatures even under heavy computational loads.

Traditional cooling methods, such as raised floor air conditioning or overhead cooling units, often result in significant energy wastage due to inefficient airflow distribution and cooling redundancy. Rear door heat exchangers eliminate these inefficiencies by leveraging the hot air generated by servers to facilitate the cooling process. By harnessing this waste heat, data centres can reduce their reliance on mechanical cooling systems, resulting in substantial energy savings and lower operational costs, particularly in AI environments characterised by continuous high-power computing.

One of the key advantages of rear door heat exchangers is their scalability and adaptability to evolving AI workloads. As organisations scale their AI infrastructure to meet growing computational demands, traditional cooling solutions may struggle to keep pace. Rear door heat exchangers, however, can be seamlessly integrated into existing server racks or retrofitted onto new installations, providing a flexible cooling solution that can easily accommodate changes in rack density or configuration. This scalability ensures that data centres remain agile and responsive to the dynamic requirements of AI applications without compromising on cooling efficiency.

Maintaining optimal operating temperatures is crucial for the reliability and performance of AI hardware. Excessive heat can degrade component lifespan, increase the risk of system failures, and compromise the accuracy of AI algorithms. Rear door heat exchangers can play a pivotal role in ensuring the reliability of AI infrastructure by actively dissipating heat from servers, thereby extending equipment lifespan and reducing the likelihood of costly downtime. By maintaining consistent temperatures, these heat exchangers contribute to the overall resilience and uptime of data centre operations, essential for mission-critical AI workloads.

In an era where environmental sustainability is a top priority for businesses worldwide, rear door heat exchangers offer a compelling solution to reduce the carbon footprint of data centres. By optimising energy efficiency and minimising reliance on traditional cooling methods, these heat exchangers help data centres achieve higher levels of energy efficiency and reduce the greenhouse gas emissions associated with cooling operations. Additionally, the reuse of waste heat for heating applications or district heating further enhances the environmental benefits of rear door heat exchangers, transforming data centres into energy-positive assets that contribute to local sustainability initiatives.

Rear door heat exchangers, therefore, represent a game-changing technology for data centres powering energy-hungry AI applications. However, there are a number of practical considerations when installing rear door heat exchangers. If the data centre uses raised floors, can the additional weight be supported, knowing that the ‘Wet Weight’ of the rear door heat exchanger, including the frame, can be anything between 120 and 190kg? What existing infrastructure can be used? Can the existing air cooling equipment support the new hybrid cooling infrastructure? Is the site water supply suitable? And is there capacity for growth as cooling requirements increase?

Having evaluated these factors carefully and achieved the optimum balance, data centre operators and owners can expect significant improvements in cooling efficiency, reduced energy consumption, enhanced scalability, increased reliability, and environmental sustainability.

In summary, these innovative cooling solutions are poised to drive the next wave of efficiency and performance in AI infrastructure. As organisations continue to embrace AI technologies to gain a competitive edge, investing in rear door heat exchangers is a strategic decision that promises long-term benefits for both business operations and the planet.

SUPPORTED BY

Sustainability means making informed decisions today, which will have a positive impact on tomorrow. So, when replacing an uninterruptible power supply (UPS), Louis McGarry, Sales and Marketing Director at Centiel, says that we have a duty to research and do our due diligence to select solutions which will offer a better, greener future.

Up until recently, the most sustainable options have often been overlooked in place of options which save on upfront CapEx costs. Money was the driver. Now, due to the dramatic increase in energy costs, for the first time in history, we are seeing this same driver push customers towards solutions which also save energy. Sustainability now goes hand-in-hand with cost savings.

The positive news is that most modern UPS already offer high levels of efficiency. For example, both of Centiel’s modular UPS solutions, CumulusPower and StratusPower have online efficiencies of over 97%.

Choosing the most efficient UPS available and right-sizing the UPS to the load to ensure the UPS works at the optimal point in its efficiency curve is essential.
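To put efficiency figures into energy terms, the sketch below converts an online efficiency into annual losses for a constant load. The load and the lower comparison efficiency are hypothetical, and a real UPS follows an efficiency curve that varies with load, which this simple calculation ignores.

```python
HOURS_PER_YEAR = 8760

def annual_loss_kwh(load_kw, efficiency):
    """Energy lost in the UPS over a year at a constant load and efficiency."""
    input_kw = load_kw / efficiency          # power drawn to deliver the load
    return (input_kw - load_kw) * HOURS_PER_YEAR

load_kw = 100.0                               # hypothetical constant critical load
loss_modern = annual_loss_kwh(load_kw, 0.97)  # 97% online efficiency
loss_older = annual_loss_kwh(load_kw, 0.94)   # hypothetical less efficient unit

print(f"Loss at 97%: {loss_modern:,.0f} kWh/year")
print(f"Loss at 94%: {loss_older:,.0f} kWh/year")
print(f"Difference:  {loss_older - loss_modern:,.0f} kWh/year")
```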

Selecting a UPS with variable load management can help too. In a situation where the load can vary, UPS modules can be put into a ‘sleep mode’. While not switching power, their monitoring circuitry is fully operational, so they are instantaneously ready to switch power if needed. Because it is the switching of power that causes the greatest energy losses, system efficiency is significantly increased.

Peak shaving is also becoming more commonly used. This is a way that facilities can actively use their own energy storage to save costs during peak times of demand on the national grid. Peak shaving can help customers avoid paying higher electricity prices or fees that are applied when going above their maximum peak load. It can be achieved by either reducing usage levels by switching off non-essential equipment or by utilising other energy sources such as battery storage or UPS systems. With UPS, peak shaving is achieved with reduced energy taken from the grid, while batteries simultaneously discharge during high rate demand.

For peak shaving to work successfully, the necessary technology must be included in the UPS, so product selection from the outset needs to be considered carefully. The type of UPS battery used is also critical.

Using LiFePO4 batteries, it is possible to reduce costs by taking some energy from the batteries instead of the national grid during peak times in the day, for example, and recharging batteries at times of lower demand when electricity costs are less, such as at night. It would not make sense to discharge batteries completely or quickly, so to preserve battery life, small amounts of battery energy are taken simultaneously with the grid to shave pence off the bill which over time adds up to significant savings. Centiel’s Intelligent Flexible Battery Charging functionality, which is customer enabled, sets site specific parameters and takes place automatically.
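The principle can be sketched as a simple hourly simulation. This is an illustrative model only, not Centiel’s charging logic: the load profile, grid cap, battery size, rate limit and reserve (energy held back for backup) are all hypothetical parameters.

```python
def peak_shave(load_kw, grid_cap_kw, capacity_kwh, max_rate_kw, reserve_kwh, step_h=1.0):
    """Return (grid draw per step in kW, final battery energy in kWh)."""
    soc_kwh = capacity_kwh                     # start with a full battery
    grid_kw = []
    for load in load_kw:
        if load > grid_cap_kw:
            # Above the cap: take a limited amount from the battery alongside
            # the grid, never dipping into the reserve kept for backup.
            usable_kw = max(0.0, soc_kwh - reserve_kwh) / step_h
            discharge = min(load - grid_cap_kw, max_rate_kw, usable_kw)
            soc_kwh -= discharge * step_h
            grid_kw.append(load - discharge)
        else:
            # Below the cap: recharge using spare grid headroom.
            room_kw = (capacity_kwh - soc_kwh) / step_h
            charge = min(grid_cap_kw - load, max_rate_kw, room_kw)
            soc_kwh += charge * step_h
            grid_kw.append(load + charge)
    return grid_kw, soc_kwh

# Hypothetical hourly load profile (kW) with a short peak above a 100 kW cap.
grid, soc = peak_shave([60, 80, 110, 120, 90, 60], grid_cap_kw=100,
                       capacity_kwh=50, max_rate_kw=20, reserve_kwh=30)
print(grid, soc)
```

In this example the battery covers part of the peak until it reaches its reserve, then recharges once demand drops back below the cap.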

However, Centiel has gone even further with the recent introduction of its latest sustainable UPS innovation, StratusPower. StratusPower shares all the benefits of its award-winning three-phase, true modular UPS CumulusPower, including ‘9 nines’ (99.9999999%) availability to effectively eliminate system downtime; class-leading 97.6% online efficiency to minimise running costs; and true ‘hot swap’ modules to eliminate human error in operation. It now also includes long-life components to improve sustainability.
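For a sense of scale, an availability expressed in ‘nines’ can be converted into an expected downtime per year. The sketch below is plain arithmetic on the quoted figure, assuming a 365-day year; it says nothing about how the availability figure itself is derived.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

def annual_downtime_seconds(nines):
    """Expected downtime per year for an availability with the given number of nines."""
    unavailability = 10 ** (-nines)   # e.g. 9 nines -> 1e-9
    return unavailability * SECONDS_PER_YEAR

for n in (3, 5, 9):
    print(f"{n} nines: {annual_downtime_seconds(n):.6f} seconds per year")
```

On this arithmetic, nine nines corresponds to a few hundredths of a second per year.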

Uniquely, StratusPower offers a 30-year design life, so five years down the line facilities will not be hit with component lifecycle replacements. The system is fully scalable and can be enhanced through the years to ensure the latest tech is always on board. It’s a distinct step away from our ‘throw away culture’ to one where the UPS can be upgraded or repaired rather than replaced to extend its useful working life.

What if StratusPower could also harness renewable energy? Well, StratusPower is already hardware-enabled and, with adaptations to the software/firmware, is future-ready to accept alternative energy sources.

To save energy, become more sustainable and lower carbon footprints, organisations will need to plan many years ahead. However, with the proper research, there are now options available to contribute more positively to tomorrow’s world, where managing and reducing future energy use will become second nature.

Let’s see what changes we can make today to reduce energy, save costs and become more sustainable for the future.

Centiel, centiel.com

R&M considers a sustainable approach to be a prerequisite for longevity. As a 60-year-old corporation dealing with sustainability from the outset, Markus Stieger, Chief Operating Officer, shares some of R&M’s findings and best practices.

The Corporate Sustainability Reporting Directive (CSRD) and other measures related to the European Green Deal are impacting the data centre market for fibre and copper cables, just like any other industry. The CSRD extends the scope of the current Non-Financial Reporting Directive (NFRD) considerably. The data centre sector is working to get to grips with these regulations and understand what they mean for operations, processes and supply chains.

It’s never too early to start documenting and collecting data continuously. Like other businesses, R&M had to start collecting the data it had never tracked in detail before.

CO2 monitoring, for example, is particularly difficult. Everybody is talking about CO2 reduction, but nobody seems to know exactly how much CO2 they’re emitting! Setting up a process takes time. Two years after starting data collection, Markus says that R&M has a report for Scope 1 and 2 emissions, whereas Scope 3 is still an ongoing process.

People are just beginning to understand what the CSRD requires, particularly when it comes to estimating Scope 3. How does a company get the information from its partners? How does it ensure it’s audit-ready?

Building on its core business of providing connectivity, R&M categorises 34 main goals into four areas:

• Connecting people encompasses all topics relating to social commitment.

• Connecting nature addresses all environmental and climate protection issues.

• Connecting ethics addresses ethical and compliance principles we act on.

• Connecting circularity encompasses all topics of the R&M value chain.

“We want to seize new opportunities, retain our financial independence, and sustainably invest our profits, as we have done in the past,” says Martin Reichle.

“R&M is a family-owned company with a long-term focus. We are proud to be celebrating our 60th anniversary in 2024. This alone is proof of how solid and sustainable our business model is,” says Peter Reichle.

By the end of this year, R&M plans to have a first estimation in place, guiding its goal to reduce greenhouse gas emissions 50% by 2030. For ongoing data collection, employees need to receive training, or new people must be hired. For big and small firms, it’s a very specific, time-consuming job requiring a great deal of expert knowledge and teamwork.

One thing that is very important is to get your documentation right. To date, assessors often simply checked whether companies had policies in place. If that was the case, they would be awarded a certain number of points. However, just because policies have been introduced doesn’t necessarily mean that people are acting on them. Now, auditors are beginning to ask questions such as: Do people work with policies? What is the specific result? It is also important to document in a way that’s clear to others. The burden of proof lies with oneself!

R&M spent a long time documenting what it had already been doing for a considerable period. Once a larger team was involved, data was collected more professionally and the practices evolved, even though there are still some gaps to fill. Markus imagines that smaller businesses may struggle, as they may not be able to conclusively demonstrate that they’re doing things right if they simply haven’t kept records.

R&M has been focusing on gathering data and having documentation in place to prove that it is complying with everything, from environmental standards to human rights and labour laws, but it’s hard to organise this without adjusting resources. It can place a lot of pressure on individuals who suddenly need to take this on in addition to their ‘regular’ jobs. Although implementing new systems costs manpower, this will, in fact, reduce the workload in coming years. Of course, it’s also vital to make sure all information is provided in a way that is immediately understandable and usable by all involved and by the auditor.

EcoVadis has been assessing R&M’s business since 2016. Through EcoVadis, the company has learned which data it had to start tracking to improve its scores. Hence, the company did its homework and started documenting policies that had been ‘unwritten rules’ for many years. These topics are transforming from ‘voluntary’ to ‘mandatory’ quickly. The sooner one starts to work on documentation and data collection to comply with regulations, the better.

Transformation takes time, so it is important to engage, empower and involve people to create motivation. By making people part of the process, Markus believes that R&M empowers them to take initiative. Last year, it started a global sustainability ambassador network to foster exchange. R&M’s motto is, ‘Every action, no matter how small, has an impact’.

What it’s also starting to do is rethink processes and products that have remained unchanged for many years, including the materials the company utilises. Technology is advancing and so are the recyclable or recycled materials that it can use in its products. Markus shares that the company started out as a component provider and evolved into a full solution provider, which has stimulated an alignment between different business lines and the value chain. The only constant is change and he believes that they are just starting to ask questions such as: What happens with a cable when it has been in the ground for 25 years? Which parts can we reuse? What happens when the end of the lifecycle of a product has arrived? Can we prolong its operational lifetime? Give it a second life?

R&M’s first Corporate Social and Environmental Responsibility (CSER) report was released in 2010. That was quite early, especially as the company is not obliged to report figures to shareholders. Initially, it released a report every two years, yet since 2021, it has released an edition annually, as part of management’s strategic agenda.

Putting out a CSER report can significantly boost reputation and enhance brand value by making commitment to sustainability and social responsibility tangible. This is vital now that investors consider environmental, social, and governance (ESG) factors as part of their investment criteria. CSER reporting also helps identify and manage environmental and social risks at the earliest stages, while identifying inefficiencies and waste, helping bring about operational improvements and cost savings.

Last year, the company began with the first in-house double materiality assessment. This provides information to determine whether a sustainability topic is critical for a business or not. The company’s main learning was that it should have done this type of analysis earlier!

It is very important to evaluate the most critical sustainability topics for the business, to narrow them down and focus on the most relevant ones. As topics change, a continuous materiality assessment is important. Current focus areas include CO2, packaging and waste, transportation, human rights, equality, diversity and circularity.

“On to the next 60 years of doing business sustainably!” says Markus.

He believes that we are all on a learning path together and need to embrace this societal shift and turn it into business opportunities that benefit business, environment and society while complying with the law. None of us is going to save the planet alone, but even the tiniest action has an impact. It adds up and it’s scalable.

Reichle & De-Massari, rdm.com

By leveraging power electronics, data centres can improve reliability, efficiency, flexibility and sustainability. Jorlan Peeters, Managing Director at HyTEPS, explains how we are seeing more and more power electronics in data centres worldwide – which can bring significant advantages, as well as complications.

Power electronics use electronic (semiconductor) components such as diodes, thyristors, MOSFETs and IGBTs to switch, control and convert significant electrical power. They might be used to convert direct current to alternating current or to change voltage level or frequency. Power electronics enable more efficient energy conversion and provide flexibility in managing and controlling electrical energy.

In data centres, power electronics are used in a variety of ways.

Some examples are:

• Convert mains voltage to the levels required by equipment, or invert UPS direct current to AC power.

• Adapt energy supply to specific equipment and loads in the data centre. This can help optimise energy consumption and deal with peak loads.

• Convert and distribute energy more efficiently, leading to lower operational costs and a reduced carbon footprint.

• Integrate variable renewable energy sources more easily and efficiently.

• Reduce generated heat and optimise cooling systems, for example with Variable Frequency Drives (VFDs) for fans and pumps.

• Power Factor Correction (PFC) of (non-linear) loads.

• Power electronics make smaller equipment possible, which can lead to significant space savings.

Power electronics place higher demands on energy quality and on the design of the energy infrastructure. If these higher requirements are not met in an installation, power dropouts can occur, which in turn can lead to production loss, damage to the installation, and malfunctions. Also, installation parts may age more rapidly and need to be replaced sooner than necessary.

Power electronics in a data centre can cause several problems, mainly due to their critical role in managing and distributing electrical power across various systems:

• Power quality issues such as voltage spikes, sags and electrical noise can be exacerbated. Poor power quality can lead to data corruption, equipment malfunctions, and reduced equipment life.

• Installation, maintenance, repair and detecting and resolving potential vulnerabilities require specialised knowledge. Failure to maintain these systems can lead to unexpected downtime, which is highly detrimental in a data centre environment.

• Switching power supplies, for example, in servers and UPS systems, can generate harmonic currents. These can interfere with other equipment and reduce efficiency of the electrical installation.

• Power electronics can cause Electromagnetic Interference (EMI) and voltage fluctuations, which may interfere with the operation of other devices.

• Overheating due to inadequate cooling can shorten the operational lifetime of components and lead to failure.

• Switching on and off power electronics can cause transient voltages and currents that can be harmful to other devices.

• An improperly executed grounding system will lead to undesirable currents and potential differences.

LED lighting offers many practical advantages in data centres. The longer lifespan of LEDs (often thousands of hours more than traditional fluorescent or incandescent bulbs) means less frequent replacements, lower maintenance costs and less downtime. LED lighting consumes significantly less energy and is often dimmable. Given the scale of data centres, this can bring significant energy and cost savings. However, LED lighting uses power electronics such as dedicated drivers, switching power supplies, dimmers and current control circuits. Therefore, it’s smart to properly map out the possible consequences before rolling out LED on a large scale in a data centre.

HyTEPS conducted research for a client on the differences between a standard fluorescent fixture with an HF starter and a LED fixture. A report provided insight into the impact of both options on the electricity grid and power quality (quality of voltage and current).

Analysis showed that the LED luminaires’ inrush current was higher, but nominal power consumption was considerably lower.

The company then extrapolated the results for an installation of 500 luminaires and concluded that heat could be generated in the neutral conductor as a result of a third harmonic current. Without adequate modifications to the system, fire might break out. Based on this information, and on continuous monitoring and optimisation, HyTEPS was able to present changes to the design, and the client, a bank, was able to switch to LED with peace of mind.
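Why a third harmonic heats the neutral conductor can be illustrated with a short calculation. In a balanced three-phase system the fundamental currents of the three phases cancel in the neutral, but third harmonic currents are in phase with one another and add up. The sketch below is not HyTEPS’s model; the function name and the per-phase current values are hypothetical and chosen purely for illustration.

```python
import math


def neutral_rms(fundamental_rms: float, third_harmonic_rms: float,
                samples: int = 3600) -> float:
    """RMS neutral current for a balanced three-phase load whose phase
    current contains a fundamental and a third harmonic component."""
    phase_shifts = (0.0, 2 * math.pi / 3, 4 * math.pi / 3)
    sum_of_squares = 0.0
    for n in range(samples):
        theta = 2 * math.pi * n / samples  # one full fundamental cycle
        # The neutral carries the instantaneous sum of the three phase currents
        neutral = sum(
            math.sqrt(2) * (fundamental_rms * math.sin(theta - shift)
                            + third_harmonic_rms * math.sin(3 * (theta - shift)))
            for shift in phase_shifts
        )
        sum_of_squares += neutral ** 2
    return math.sqrt(sum_of_squares / samples)


# Hypothetical per-phase values: 10 A fundamental, 3 A third harmonic
with_harmonic = neutral_rms(10.0, 3.0)
without_harmonic = neutral_rms(10.0, 0.0)

print(f"Neutral current with third harmonic:    {with_harmonic:.2f} A")
print(f"Neutral current without third harmonic: {without_harmonic:.2f} A")
```

With these illustrative values the neutral carries three times the per-phase third harmonic current, while a purely sinusoidal balanced load leaves the neutral essentially unloaded. This is why a neutral conductor sized for a linear load can overheat once many non-linear luminaires are connected.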

This illustrates how modelling, maintenance, monitoring and adherence to best practices can help identify and address (potential) issues with power electronics in the data centre. Furthermore, by paying attention to power quality, downtime can be minimised or prevented. That’s why HyTEPS recommends continuous monitoring and analysis to identify and address issues such as harmonics, voltage dips or voltage spikes.

Good power quality reduces stress on electronic components, prolonging their lifespan. It minimises the risk of damage due to power disturbances such as voltage spikes, sags or electrical noise, thereby enhancing the reliability of the data centre’s operations. By maintaining high power quality, the chances of equipment failures and associated downtime are significantly reduced, and data centres can ensure better integrity and accuracy of data processing and storage. Equipment operating under optimal power conditions consumes less energy, requires less maintenance and is less likely to experience premature failure. Furthermore, data centres can comply with strict regulations regarding data integrity and uptime.

HyTEPS, hyteps.nl

In the fast-paced world of technology, the rise of AI and high-density computing has become a game-changer, redefining what data centres need to handle.

Sam Bainborough, Sales Director EMEA-Strategic Segment Colocation and Hyperscale at Vertiv, invites data centre experts to review their strategies to ensure a holistic approach.

The increasing use of AI applications has created a huge demand for processing capacity and power, putting significant pressure on data centres and pushing them to adapt quickly to evolving needs.

According to Statista, the AI market is projected to reach US$305.90bn in 2024 and have an annual growth rate (CAGR 2024-2030) of 15.83%, resulting in a market volume of US$738.80bn by 2030.
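The quoted figures can be cross-checked with the standard compound growth formula, final = initial × (1 + rate)^years. The sketch below uses only the numbers cited above; the function names are hypothetical, and treating 2024 to 2030 as six compounding periods is an assumption.

```python
def project_value(initial: float, annual_rate_percent: float, years: int) -> float:
    """Compound an initial value at a fixed annual growth rate."""
    return initial * (1 + annual_rate_percent / 100) ** years


def implied_cagr_percent(initial: float, final: float, years: int) -> float:
    """Annual growth rate (in percent) implied by a start and end value."""
    return ((final / initial) ** (1 / years) - 1) * 100


# Figures quoted from Statista, in US$ billions
market_2024 = 305.90
market_2030 = 738.80
years = 6  # assumption: 2024 to 2030 counted as six compounding periods

projected_2030 = project_value(market_2024, 15.83, years)
implied_rate = implied_cagr_percent(market_2024, market_2030, years)

print(f"Projected 2030 market: US${projected_2030:.1f}bn")
print(f"Implied CAGR: {implied_rate:.2f}%")
```

On these assumptions the three quoted numbers agree with one another to within rounding.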

As AI workloads continue to soar and the need for computing power becomes more intense, data centre architects find themselves at a crossroads. They must rethink their design strategies, making significant changes to network architecture, power systems and thermal management if they are to continue to be fit for purpose.

This paradigm shift emphasises two pivotal areas that demand simultaneous evolution to effectively accommodate the escalating demands of AI workloads.