As 2023 begins, TM Broadcast International is fully focused on two of the major trends that will drive our industry forward this year. We have thoroughly analyzed the technical developments and business possibilities that virtual production techniques and AI-curated metadata in digital environments bring to the companies in our industry, not in the future, but in the present.

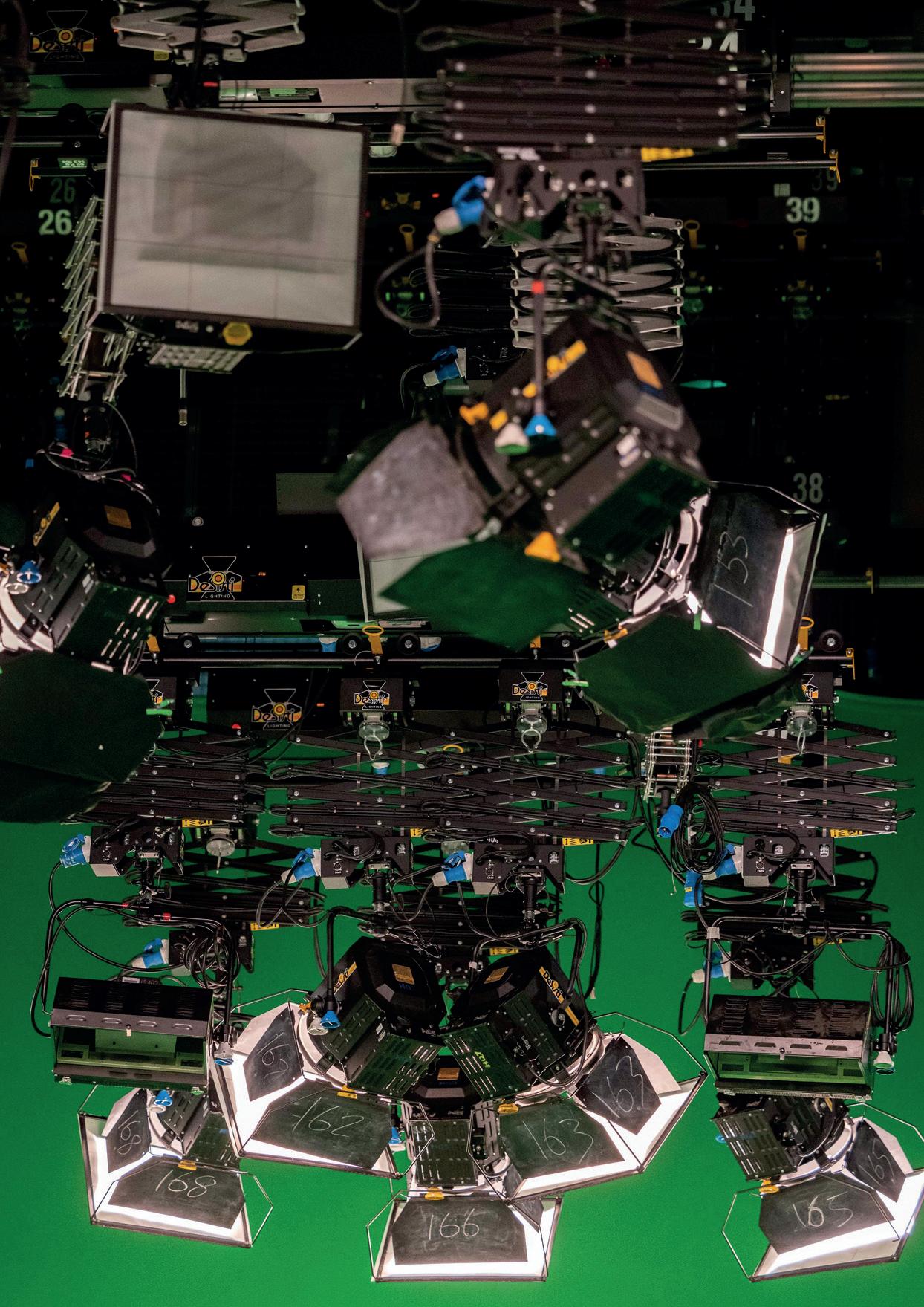

Virtual production is destined to be one of the great gamechangers in the multimedia content creation sector. The technology enables more flexible workflows, broader creative possibilities and much more sustainable modes of consumption and investment than traditional techniques. We offer you a detailed report on the technological capabilities and business possibilities provided by two of the most widespread technical infrastructures in virtual production environments: Green Screen or LED Volume?

Our expert, Yeray Alfageme, Business Development Manager of Optiva Media, an EPAM Company, has brought us an in-depth analysis of everything that knowing our users perfectly can contribute to our business. Through an analysis of the Big Data shared by content consumers on the platforms and through artificial intelligence curation techniques, we can multiply advertising revenue in digital environments. We can even slightly reduce one of the biggest risks associated with this industry: not knowing in advance whether what we are going to produce will be successful.

As a magazine that covers every corner of our field, we have taken a close look at the technical details of one of the most significant post-production companies in LATAM: Cinema Maquina. Adapting to the new times, it has evolved its workflows to gain flexibility and efficiency, thanks to heavy investment in technology. This Mexico-based post-production company has worked with major platforms such as Netflix, Amazon Studios and HBO, and has become one of the main players in the American market.

Finally, in this edition, we travel to East Africa to learn about the working methods, the state of infrastructure, renovation plans, development trends and the challenges that one of the oldest public broadcasters in the region faces: Kenya Broadcasting Corporation. Will you join us on this journey?


Kiloview has announced the E3 generation of its video encoders, the Kiloview E3. It offers video input and loop-through over HDMI at up to 4Kp30 and over 3G-SDI at up to 1080p60, and can encode the HDMI and 3G-SDI video in H.265 and H.264 simultaneously. It also features an enhanced chipset and supports simultaneous transmission to 16 destinations with an adjustable bit rate of up to 100 Mbps. The product is scheduled for release on January 16, 2023.

E3 allows encoding video sources from any 3G-SDI or HDMI port, mixing both video sources into one output in PIP or PBP mode, or even encoding video sources up to 1080p60 from both HDMI and 3G-SDI ports simultaneously for one transmission.

For all IP-based video transmission processes it supports the HEVC video codec as well as H.264.

It can process either source, or a mix of the two, and supports multiple protocols including NDI|HX2, NDI|HX3, SRT, RTMP, RTSP, UDP and HLS.

The Kiloview E3 system can transmit live to up to 16 different platforms simultaneously by outputting both a main stream (up to 8 channels in 1080p) and a secondary stream (up to 8 channels in 720p) with an adjustable bit rate. The maximum bit rate is 100 Mbps.

The solution can rotate and crop the image and add custom overlays, including images, text or other OSD, to the output video.

E3 allows encoding audio sources through its 3.5 mm line input and SDI/HDMI embedded audio input. It can convert audio into multiple formats, including AAC, MPEG-4, MPEG-3, MPEG-2, Opus or G.711. The device supports embedded audio encoding of 8 channels of SDI inputs and 4 channels of HDMI inputs.

The encoder is equipped with a 1.14-inch LCD display with touch buttons. Connectivity, IP address, CPU, memory, resolution, temperature and real-time tally status can be checked on the LCD display.

The collaboration between Plateau Virtuel, Studios de France and Sony has resulted in a modern studio adapted to virtual production workflows with LED volumes. Sony Crystal LED screens have been deployed in the virtual studio to create a surface of 90 m² (18 meters wide by 5 meters high). The capture technology also comes from the Japanese multinational: the wall has been integrated with the Sony Venice camera.

Plateau Virtuel is a virtual production company and a subsidiary of Novelty-Magnum-Dushow Group, a business group focused on event production. Studios de France is a set supplier and a subsidiary of AMP Visual TV, a group dedicated to live production in France.

The project was born after shooting a campaign for the European Space Agency in virtual production with the Venice camera. Plateau Virtuel wanted to take virtual production a step further in terms of playback and also on-set quality.

“It took us 15 days to set up this screen with Sony equipment. We felt it was important to have a suspended structure to be able to slide floors underneath, LED or otherwise. We also have an LED ceiling that allows us to integrate everything if necessary,” says Bruno Corsini, Technical Director of Plateau Virtuel.

The curved display consists of 450 “units”, each with a combination of 8 LED modules. The technology offers a very high contrast ratio, and a pixel pitch of 1.5 mm.
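The figures quoted for the wall can be cross-checked with some simple arithmetic. The following is only a rough sketch: it assumes that the 18 m width is measured along the curved surface, that the 1.5 mm pitch is a rounded value, and that the pixel grid is uniform. The function and constant names are illustrative and do not come from Sony or Plateau Virtuel.

```python
# Rough geometry of the LED wall, using only the figures quoted in the article.
WIDTH_MM = 18_000        # 18 m wide (assumed to be measured along the curve)
HEIGHT_MM = 5_000        # 5 m high
PIXEL_PITCH_MM = 1.5     # quoted pixel pitch (probably rounded)
UNITS = 450              # display "units"
MODULES_PER_UNIT = 8     # LED modules per unit


def wall_estimate() -> dict:
    """Estimate the area, module count and pixel grid of the wall."""
    area_m2 = (WIDTH_MM / 1000) * (HEIGHT_MM / 1000)
    pixels_wide = int(WIDTH_MM // PIXEL_PITCH_MM)
    pixels_high = int(HEIGHT_MM // PIXEL_PITCH_MM)
    return {
        "area_m2": area_m2,
        "modules": UNITS * MODULES_PER_UNIT,
        "pixels_wide": pixels_wide,
        "pixels_high": pixels_high,
        "megapixels": pixels_wide * pixels_high / 1e6,
    }


if __name__ == "__main__":
    for key, value in wall_estimate().items():
        print(f"{key}: {value}")
```

Under these assumptions the wall works out to 3,600 modules and a canvas of roughly 12,000 by 3,333 pixels; the real resolution depends on the exact pitch and cabinet layout.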

This new studio, designed for audiovisual and film productions, is also suited to television production. AMP Visual TV representatives say they wanted to deploy a “laboratory platform” able to respond to all kinds of requests.

The presentation of the studio will take place on February 12 in Seine-Saint-Denis, in the north of Paris.

As fans watched, broadcasters around the globe were bringing smashing graphics to the screen with the help of Zero Density products. Using its real-time graphics hardware and software, Belgium’s RTBF, France’s TF1, Qatar’s Alkass, Maldives’ Ice TV, Malaysia’s Astro, Slovenia’s RTV SLO, and UAE’s Asharq News built virtual studios for all their live coverage.

While a few broadcasters opted for fully green screen studios, others built hybrid sets with LED panels in addition to the cycloramas or went for virtual set extension in a physical studio. What they all had in common: smashing graphics, high-quality storytelling and cutting-edge Reality tools.

Reality Engine offers broadcasters a wide range of tools and features to create the ‘wow’ effect on screen. Flycam and teleportation were among the most popular features. Flycam makes it possible to go beyond the limits of the physical location and adds 360-degree visual storytelling. France’s TF1 placed its studio on the stadium’s edge, looking over the field. With Flycam, TF1 connected the physical camera to the virtual camera and took the audience to any location in the stadium to see player lineups, statistics and more.

Teleportation allows broadcasters to ‘beam’ guests to the studio from a remote location as augmented reality graphics. For example, RTBF teleported soccer players from Qatar to get their post-game comments, and Alkass Sports teleported reporters as well as guests to receive their commentary on the game and news from the field.

All these creative broadcasters offered dynamic player lineups, statistics and scoreboards as augmented reality graphics to their thousands of viewers and pulled them into the story. For example, RTV SLO extended its regular U-shaped cyclorama with additional flat pieces to give the graphics and the host more space to move. The differences in shape and color can be handled easily by Reality Keyer, the first and only real-time image-based keyer with advanced clean plate technology, making the system much more advanced than just a standard chroma keyer.

Visit the Zero Density website to discover how each broadcaster decided to enhance their creativity with Reality Engine.

Blackmagic Design has shared a recent interview with professional colorist Tashi Trieu about his work on James Cameron’s “Avatar: The Way of Water”. The colorist graded the film in DaVinci Resolve Studio. The look was developed mainly at WetaFX.

The professional was involved at the beginning of pre-production. A series of very detailed tests were carried out to achieve the best possible solutions. Shooting in stereoscopy – the 3D feel – added a higher level of difficulty. Trieu says that polarized reflections can result in different levels of brightness and texture between the eyes. That can generate gradient effects in “3D.” “I’ve never done such detailed camera tests before,” he says.

Thanks to the intermediation of WetaFX, a wide creative latitude was provided at the digital intermediate stage. In addition, the LUT used is a simple S-curve with a basic gamut mapping from S-Gamut3.Cine to P3-D65. “These two features provided flexibility in bringing different tonalities to the film,” says the colorist.

To make life easier for everyone involved, the mechanics of the work were simplified and automated as much as possible. Using DaVinci Resolve’s Python API, the colorist created “a system to index the delivery of visual effects once they were sent to the digital intermediate, so that my editor Tim Willis (Park Road Post Production) and I could quickly import the latest versions of the shots.”

The challenges associated with the production process, highlighted by Tashi Trieu, were not only related to the stereoscopic format, but also to the high frame rate (48 fps).

“Even on a state-of-the-art workstation with four A6000 graphics processing units, it’s very difficult to guarantee real-time operation. It’s a very delicate balance between what is sustainable with SAN bandwidth and what the system is capable of decoding quickly,” details the interviewee.

“Avatar: The Way of Water” graded with Blackmagic Design’s DaVinci Resolve tool

6G transmission is expected to use frequencies below 1000 GHz, that is, below 1 THz (sub-THz). Sub-THz typically encompasses the frequency range from 100 to 300 GHz, a range that is attracting great interest worldwide.

Access to much higher bandwidths will enable very high-performance short-range communication combined with environmental sensing for object detection or next-generation gesture recognition down to millimeter resolution.
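To put the 100 to 300 GHz range in perspective, the free-space wavelength at those frequencies can be worked out from the speed of light. This is a minimal sketch with an illustrative function name; it only shows that the wavelengths are on the order of a millimeter. Actual sensing resolution also depends on bandwidth and antenna design, which the sketch does not model.

```python
SPEED_OF_LIGHT_M_S = 299_792_458  # exact by definition of the meter


def wavelength_mm(frequency_ghz: float) -> float:
    """Return the free-space wavelength in millimeters for a frequency in GHz."""
    frequency_hz = frequency_ghz * 1e9
    return SPEED_OF_LIGHT_M_S / frequency_hz * 1000


if __name__ == "__main__":
    for f_ghz in (100, 200, 300):
        print(f"{f_ghz} GHz -> {wavelength_mm(f_ghz):.2f} mm")
```

At 100 GHz the wavelength is about 3 mm, and at 300 GHz it is about 1 mm.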

For these reasons, Rohde & Schwarz is undertaking in-depth studies of the specific conditions in the electromagnetic bands at this frequency. In addition, it has also devoted efforts to channel sounding measurements between 100 and 300 GHz.

The company’s findings have contributed to the ITU-R Working Party 5D (WP 5D) report, “Technical Feasibility of IMT in Bands above 100 GHz”, whose objective is to study and provide information on the technical feasibility of cellular mobile technologies in bands above 92 GHz.

IMT stands for International Mobile Telecommunications.

The report will be consulted at the International Telecommunication Union (ITU) World Radiocommunication Conference 2023 (WRC23), where additional frequency bands above 100 GHz are scheduled to be discussed and their allocation considered at the subsequent WRC27.

The current 3GPP channel model is only validated up to 100 GHz. A crucial first step for the 6G standardization process is to extend this channel model to higher frequencies.

Netflix recently announced the creation of an engineering center in Poland. The new division will be based in the Warsaw office.

The objective of the engineers will be to help build the products and tools that the creative partners use to deliver Netflix shows and films.

The relationship between Polish culture and Netflix goes back a long way. It’s been six years since the platform launched the Polish-language service and already more than 30 movies and TV shows have been created in Polish, including titles such as High Water and How I Fell in Love with the Gangster.

In the words of Netflix’s press release, the reasons for establishing the center in this Central European country are that there is a very good engineering background and a large development community. “We can’t wait to see the innovation and creativity that comes from our hub here. We are currently recruiting for business application software engineers.”

Ateme and Enensys recently combined their expertise in video distribution for Rai Way, the operator of the RAI television network, on a project to transition the network to the DVB-T2 standard.

DVB-T2 allows broadcasters to distribute HD content. The standard also provides more efficient and resilient signals, while freeing up the 700 MHz terrestrial frequency band for 5G mobile broadband.

The combined solution, offered by a local partner, won the tender and the project started in 2021. Both companies proposed a competitive solution with a reduced Total Cost of Ownership.

The integration includes Ateme’s Titan software-based live video compression solution. Enensys provided the Seamless IP & ASI switches (ASIIPGuard) and DVB-T/T2 network gateways (SmartGate T/T2), enabling SFN (Single Frequency Network) transmission.

The transition was completed within the March 8, 2022 national conversion deadline.

“Viewers want higher-quality experiences and stronger emotions from their video entertainment,” commented Carlo Romagnoli, Sales Director Southern Europe at Ateme. “The move to DVB-T2 satisfies this need by facilitating the distribution of 4K content, as well as providing advanced features such as High Dynamic Range, High Frame Rate, Wide Color Gamut and Next Generation Audio to enhance the viewing experience. We are delighted to have been able to help Rai Way in this transition.”

“We are proud to have played a key role in this exciting project of national importance”, said Cyprien Galesne, Sales Manager Southern Europe at ENENSYS. “Our synergistic partnership with Ateme on digital media distribution projects continues from strength to strength, offering flexible solutions to complex challenges.”


GB News is a free-to-air television and radio service in the United Kingdom. The broadcaster has chosen Quicklink Studio (ST55) to bring remote guests into its news productions for national broadcast.

Remote opinions are a large part of the channel’s programming. With this in mind, GB News required a solution for bringing remote guests into its schedule in high quality and in real time.

“Opinions and thoughts from remote experts/guests are a key part of GB News structure. As a result, it was extremely important that we adopted a solution that would allow us to obtain these opinions in the highest quality possible. Quicklink Studio allowed us to achieve exactly this,” said Stephen Willmott, Head of Technology & Operations at GB News.

Using Quicklink Studio Servers (ST100, ST102, ST208 & ST200), the remote guests can be integrated into any workflow using HD-SDI, NDI and other professional broadcast outputs. The solution works within a simple web browser. Contributors can be invited via SMS, WhatsApp, email or by generating a URL link.

In addition, by utilising Quicklink Manager, GB News has operational control over virtual rooms, remote guests and more within one central portal accessible from any global location.

“The management of remote guests is an essential part of introducing remote guests. Our guests are not always the most proficient with technology; however, the remote control options within the Quicklink Manager allow us to overcome these challenges by being able to remotely control devices and other aspects of the remote guests. The Quicklink solutions are truly a fundamental part of GB News productions,” adds Stephen Willmott.

ZDF is a German public service television broadcaster. The corporation produced and showed the World Cup live in Germany.

It relied on Apantac KVM over IP solutions for its remote production. The games were handled remotely at the National Broadcasting Center (NBC), located in Mainz, rather than in Qatar.

For this project, MPE (a “mobile production unit” founded and run by ARD and ZDF) purchased more than one hundred and fifty KVM over IP transmitters (Tx) and receivers (Rx) (models KVM-IP-Tx-PL and KVM-IP-Rx-P) and software licenses from Apantac, via Broadcast Solutions Produkte und Service, Apantac’s representative in Germany.

These solutions were chosen because the technical equipment can extend and switch video signals, keyboard and mouse functions, as well as embedded and analog audio signals over IP. With this solution, operators can access all their computers and servers on the network from any other point.

In addition, the firmware has been specially designed for this project. It adds an extra 3840×1080 EDID to the Tx module, which addresses the teams’ need to display two computers (top and bottom) on a single 16:9 UHD monitor.
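The reason a 3840×1080 mode fits this use case is simple arithmetic: two such images stacked vertically exactly fill a 3840×2160 UHD panel. The sketch below only illustrates that layout calculation; the function name is hypothetical and it says nothing about how the Apantac firmware itself is implemented.

```python
from typing import List, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def stacked_layout(panel: Tuple[int, int], source: Tuple[int, int]) -> List[Rect]:
    """Return top-to-bottom rectangles for full-width sources stacked on a panel."""
    panel_w, panel_h = panel
    src_w, src_h = source
    if src_w != panel_w or panel_h % src_h != 0:
        raise ValueError("sources do not tile the panel exactly")
    rows = panel_h // src_h
    return [(0, row * src_h, src_w, src_h) for row in range(rows)]


if __name__ == "__main__":
    # A 16:9 UHD monitor showing two computers, one above the other.
    for rect in stacked_layout((3840, 2160), (3840, 1080)):
        print(rect)
```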

ZDF develops a solution to work the World Cup remotely with Apantac and Broadcast Solutions

KAN Channel 11 is an Israel-based public television station that brought the excitement of the World Cup to its audience. The station partnered with systems integrator AVCom to outfit an OB van for remote production coverage. The objective was to link the production crew in the OB van with the rest of the team in Israel, the camera team at the venues, and the presenters located in the KAN studio in Qatar. They chose Clear-Com solutions.

“The production value is always enhanced when the crew can communicate clearly and quickly. Collaborating with Clear-Com on this championship has simply reinforced that their extensive experience in these types of large-scale and often high-pressure events ensures we have a flawless result for our communications,” said Tsachi Korner, Senior Presales Engineer at AVCom.

AVCom deployed an Eclipse® HX Digital Matrix Intercom System at the core of the installation. This solution interfaces with endpoints including the V-Series Iris IP user keypanels and FreeSpeak beltpacks. They also added Dante interfaces and MADI cards to KAN’s communication system to provide interoperability with a Dante-based audio-over-IP infrastructure.

Both AVCom and KAN explained their reasons when asked why they chose Clear-Com. “By using Clear-Com equipment, we’re able to make our production process much smoother,” said Tsachi. “We’re always confident when we propose the Clear-Com solutions to our clients, as they are so reliable and scalable, and offer such powerful capabilities. This enables our clients to produce a superior event.”

KAN entrusted Clear-Com to develop its communications system for the Qatar World Cup

ViewLift has announced a multi-year partnership with Bitmovin. ViewLift specializes in streaming and OTT solutions, and Bitmovin focuses on delivering cloud-based video streaming infrastructure solutions.

With this collaboration, both companies are aiming to elevate the standard of viewing experiences on OTT platforms worldwide by integrating their capabilities and expertise.

According to Bitmovin’s research cited in the press release, a large portion of viewers who unsubscribe from certain platforms do so because of problems when viewing content, such as buffering delays. Both companies have identified that the quality of the experience has become one of the main metrics for viewer retention.

Through this agreement, ViewLift customers can now access Bitmovin’s capabilities, including its Next-Generation VOD Encoder, which features multi-codec streaming, 8K and multi-HDR support, and its Live Event Encoder. Bitmovin Analytics, Bitmovin’s Stream Lab (an automated device testing solution) and Bitmovin Player will also be integrated with ViewLift’s OTT platform.

Together, they enable ViewLift customers to leverage the power of cloud encoding to scale video channels and optimize adaptive bitrate scaling (ABR) to reduce content delivery network (CDN) costs.

Stefan Lederer, CEO and co-founder of Bitmovin, said, “In today’s fiercely competitive video streaming market, quality of experience is vital for viewer retention. Teaming up with ViewLift means we will power the best viewing experiences and accelerate how quickly customers get to market and monetize their offerings so they can remain one step ahead of competitors.”

ViewLift partners with Bitmovin to tackle one of the main reasons for OTT platform unsubscriptions

Enco, a company specializing in the development of software solutions for broadcast environments, has announced that it has acquired Rushworks. With this move, the company will integrate its automated captioning and translation systems into Rushworks’ products. Although the terms of the agreement are not yet public, Enco will keep the Rushworks professionals in their positions, and the founder will become sales and marketing manager for the company’s product line. Engineers from both companies will join forces to develop new solutions, the companies said in their announcement.

“Enco is the global leader in real-time captioning with its patented enCaption technology,” said Rush Beesley, Rushworks’ Founder. “The integration of its powerful, highly accurate captioning engine with our broadcast automation, integrated PTZ production, and courtroom production and streaming systems will ensure our mutual customers can comply with government regulations and provide critical captioning, transcription and translation services to audiences worldwide.”

“The acquisition of Rushworks adds proven technology and talent that opens the door for us to innovate and develop cohesive, integrated broadcast and AV solutions for years to come,” said Ken Frommert, president of ENCO. “They also bring strong expertise in video applications, which diversifies our software portfolio to serve a much broader array of business verticals and applications.”

Enco acquires Rushworks to integrate its software

We wanted to travel to East Africa to learn, first hand, about the state of broadcast technology in this part of the world. Through an exclusive interview with the oldest television broadcaster in the region, which has almost a century of history, we have been able to identify the same trends seen elsewhere: the transition to IP, adaptation to digital ecosystems, and constant investment in and modification of infrastructure to broadcast in HD.

In this detailed interview you can learn about the current technological status of the Kenya Broadcasting Corporation, as well as its plans for the future and the content it offers nationwide as the country’s main multimedia public service broadcasting corporation.

The Kenya Broadcasting Corporation, established in 1928, is Kenya’s state media organization. It was established by an Act of Parliament —CAP 221 of the Laws of Kenya— to provide independent and impartial broadcasting services in the areas of information, education and entertainment. It broadcasts in English and Swahili, as well as most local Kenyan languages. It is headquartered at Broadcasting House (BH) and is located at Harry Thuku Road, Nairobi.

The Corporation has three main production centers: the BH headquarters, which we have already mentioned; Sauti House in Mombasa County, which serves the coastal region; and Kisumu, which serves the western region.

We offer several products:

In the area of television broadcasting, we have three channels: KBC Channel One, the main flagship channel; Y254, which seeks to attract young audiences; and Heritage TV, whose content focuses on spreading our cultural and historical heritage.

On the other hand, as far as radio is concerned, we have several stations: Radio Taifa and KBC English Service, which are the most important channels of the corporation and share content differentiated by language. In addition to these two outstanding stations, we also offer radio content through fifteen services: Coro FM, Pwani FM, Mwatu FM, Mwago FM, Nosim FM, Kiembu FM, Kitwek, Minto, Mayienga, Western Services, Eastern Services, Iftin and Ingo FM.

Television and radio services distributed through the DTT (digital terrestrial television) signal distribution infrastructure are also worth mentioning. KBC is the only authorized public digital signal distribution operator, under its flagship Signet brand, broadcasting from 42 sites throughout the country and covering 97% of the territory. It currently hosts 125 channels, all of them free-to-air (FTA).

I would also like to mention another of our most important services. In our country there is a great need to give opportunities to people who have talent, but do not have the financial capacity that would allow them to access professional environments. To offer these opportunities, we created Studio Mashinani (which means rural areas), and through this infrastructure we support musicians and short-film makers by offering them a professional environment at zero cost. So far we have created 8 audiovisual studios.

To conclude the section on the content we offer, we also broadcast the signals of the Parliament and the Senate on two independent channels: Bunge (Parliament) TV and Senate TV. We do so by virtue of a contractual agreement.

How does Kenya’s public television work? What is its organization chart and how is its structure organized?

KBC is led by a Managing Director and, under him, there are 16 departments headed by a Manager. The Board, appointed by the Ministry of ICT and Digital Operations, oversees the management.

What content does KBC broadcast, how much is produced in-house and how much is produced externally? How much is broadcast live?

KBC broadcast operations are regulated by the Kenya Communications Authority.

The authority dictates that 70% of the broadcast content must be local. Therefore, 70% of our content is local and the rest is foreign. Of the 70% local, 80% is done in-house and 20% is bought locally.
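Because the in-house and locally bought figures are percentages of the local share rather than of the whole schedule, it can help to convert them to shares of total output. This is a small worked sketch with illustrative names; it takes the 70% figure as the actual local share, as the interviewee does.

```python
from fractions import Fraction


def content_breakdown(local_share: Fraction = Fraction(70, 100),
                      in_house_of_local: Fraction = Fraction(80, 100)) -> dict:
    """Return each content category as a percentage of total broadcast output."""
    in_house = local_share * in_house_of_local
    bought_locally = local_share - in_house
    foreign = 1 - local_share
    return {
        "in_house": float(in_house * 100),
        "bought_locally": float(bought_locally * 100),
        "foreign": float(foreign * 100),
    }


if __name__ == "__main__":
    for category, percent in content_breakdown().items():
        print(f"{category}: {percent:.0f}%")
```

On these figures, in-house production accounts for 56% of everything KBC broadcasts, locally purchased content for 14% and foreign content for 30%.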

As a broadcaster we do not have a threshold of live content that we must cover, but our mandate is public interest and, as such, we ensure coverage of all major public events.

How is the network infrastructure between production centers?

We have deployed fiber between the different production centers. To ensure continuity of service, we have also invested in developing redundancy for this network.

How does Kenyan television deal with sending signals from the outside?

KBC has two OB vans connected to Intersat satellite (Ku band) and 25 mobile backpacks from TVU Networks. This infrastructure is intended for remote coverage of the various events taking place throughout the country. To guarantee the link with our 42 transmission centers, we rely on a C-band uplink to Amos Sat.

Which manufacturers make up your infrastructure?

We have recently completed a process of improvement and transition in our newsroom. It is now semi-automated with solutions from the manufacturer Octopus.

Part of this process has also focused on our file management system, our MAM. We now operate a system from SNS (Studio Network Solutions), a playout solution from Axel Technology and graphics from Vizrt.

Our next goal in the newsroom is to fully automate it, which we are already working on.

Editing is done in Final Cut Pro; routing, switching and internal distribution solutions are from Blackmagic Design; lights are from Canara; and cameras are from Sony.

For signal processing and uploading to the network we have solutions from Thomson and Harmonic. The transmission for TV and radio is currently carried out using technology from the company TEM. 70% of our infrastructure is based on this brand. Now we are looking to renew it in two directions: counting on the company DB for radio transmitters and on the company Egatel for the transmission of TV signals.

What have been the latest renovations that Kenyan television has developed?

Due to a shortage of funds, we have not been able to carry out a complete upgrade of the production infrastructure. To carry out our transition, we have adopted a mix-and-match strategy.

The goal was to improve signal quality and workflow efficiency. And we have certainly succeeded.

What challenges have you encountered in this process and how have you overcome them?

The mix-and-match approach has not achieved optimal results, but it has worked for us.

However, we are committed to funding a complete overhaul to achieve what we want: a seamless IP-based, high-definition workflow. Right now our signal flow is on BNC cable and we are only using IP in the radio workflows. That radio infrastructure works synergistically.

Regarding HD transmission, all our own content, the content we produce in-house, is HD. However, it is transmitted in SD due to technical restrictions. Nevertheless, as I was saying, we want to overcome these restrictions by upgrading the system to also transmit in HD.

How is KBC adapting to digitalization?

Initial adoption was slow, but a digital department was later created and we are catching up quickly. We understand that the future is digital and we are doing everything in our power to make sure we are up to speed.

What are KBC’s own challenges?

KBC is the mother of broadcasting in this African region. As we mentioned at the beginning of the interview, the fact that it was founded in 1928 has given it a history of almost a century. As you can understand, over all that time we have built up a huge archive of historical material in audiovisual formats. This archive is composed of content stored in many different formats: two-inch tapes, one-inch tapes, U-matic, Betacam and DV. Part of our digitization goal is to transfer, catalog and unify the archive into single, organized formats. However, we do not yet have the capacity to do this because of technological constraints. KBC obtains its funding from the Exchequer and, due to the difficult economic climate, we have faced funding problems that have affected our productivity. That is why we have not yet moved forward on this front.

What are the next steps in the technological evolution of the Kenya Broadcasting Corporation?

We are pursuing funding to renovate our television and radio production center, as well as the remote transmission center.

We intend to install a high-definition IP system for television, as mentioned above, and also to upgrade the radio in Dante.

On the other hand, our remote broadcast centers are now staffed. However, we are building the remote monitoring capability with adequate redundancy to ensure uninterrupted service availability. In this way, we will optimize our human resources.

In addition, we are also planning to acquire two more modernized OB vans: one for television and one for radio.

Virtual production is destined to dominate the multimedia solutions sector. These techniques, which are expanding rapidly in our industry, require fewer physical resources than more conventional production methods. This feature provides three key circumstances for the future of the sector: flexibility, sustainability and great creative capabilities.

Gaining the ability to tackle ambitious and quality projects in record time is one of the most widespread goals in the multimedia content creation world. Virtual production techniques provide a series of workflows that optimize times by providing photorealistic 3D and 2D environments and characters. Previously, to recreate the same assets using the old techniques, we would have needed large investments in time and budget, as it would have been necessary to produce mock-ups, scenery, characters, etc.

Because we dedicate less effort to physically recreating all those objects and characters, we are also becoming more sustainable. We mobilize fewer personnel and use fewer resources to achieve a result that, in many cases, is even better and more spectacular than what we would achieve using traditional workflows.

Finally, as we have already mentioned, the possibilities of obtaining original and creative content are multiplying exponentially. Technology, always at the service of stories, is now capable of offering us worlds that we could only imagine before. Suddenly, those projects that were too complex, too expensive and too inaccessible due to their narrative conditions are really viable. If you don’t believe it, ask the producers and content creators who have already worked with these techniques.

We wanted to go further. Virtual production technology is evolving in two very different ways today. The technical evolution trends are clearly differentiated. Green screen or LED Volume? That is the key.

In this report, we wanted to explore these two possibilities. With the direct testimonies of the heads of the virtual production department of dock10 and Bild Studios - Mars Volume, we offer you the reasons for this differentiation and the capabilities of each of these possibilities.

By Javier Tena

A journey into the possibilities of expanding reality

How did dock10 get started in virtual production?

Andy Waters: We began our journey into virtual studios in 2017. We started because we perceived a real need among our customers. At that moment, we considered the way forward with our client BBC Sport.

To understand the context, dock10 has ten television studios where they produce sports content and shows such as “The Voice”, “Let it Shine” or “Bake”. The input that led us to create a virtual studio came from the sports productions, which always have a very innovative mentality.

After development, we wanted to share what we had learned with the rest of our customers. The planned date was March 2020. We were going to invite lots of entertainment clients from all over the UK to come and see what we could do with Virtual Studio, but unfortunately something else happened in March 2020.

However, thanks to the development we had done, the technology was ready and virtual studios came to our rescue. Our client, the BBC, acting on instructions from the British government, also contributed to the growth of the technology.

The government said to the public broadcaster: “We need to educate young people at home urgently. Can you get a program working?”

Richard and his team stood up one of our smallest studios to create a children’s education show. The workflow consisted of our team building the sets from home, emailing them to the company and, finally, creating the program. All of this was done with a minimal crew. We had to run and scream, because we were on the air in two weeks.

The government wanted to continue with the curriculum learning, so the show literally followed, day by day, what would have been taught in school.

“BBC Bitesize”, that is its name, became the most watched BBC educational program we have ever produced. We thought we were going to make a program for a couple of months, but we went on to produce 370 episodes.

In fact, 3 million children watched the program on the first day of broadcast.

I believe that by taking advantage of the techniques offered by virtual production, in conjunction with 3D objects and augmented reality, we were able to help explain fundamental educational issues.

Since then we have done factual entertainment programs, eSports tournaments, commercial programs, etc. Our goal is to push this technology into the entertainment space.

Andy: Going back to our plans, it wasn’t until 2022 that we put our original strategy into action: to show the whole of the UK what we could do. The national entertainment community found it fascinating.

Was the company prepared to exploit virtual production technology?

Andy: When we adopted this technology we could not afford, as a commercial company, to have a dedicated studio that was only used two days a week for sports through virtual production. We have to try to maximize our resources and we can’t have a green screen studio unoccupied the rest of the week.

What we planned to do was to take the entire infrastructure out of the virtual studio every week, turn it into a regular studio, do a traditional live TV show, and then at night turn the space back into a virtual production space. A lot of people said, “Oh, that’s crazy. We shouldn’t do that.” But it was the only way.

Richard: Literally every week we adapted the stage six times.

Andy: However, we gained a great understanding of virtual reality technology and all the associated workflows by repeating this task so many times.

In this respect, how have you evolved?

Richard: We used Zero Density as a graphics solution on top of Unreal Engine visual creation technology and Mo-Sys tracking solutions. We started with five camera channels for the BBC. Now we are working with fifteen. We want to continue to grow our capabilities because the trend of creating these products is growing all the time.

We started working in that small studio, where we modified the infrastructure as needed, and now we have just finished a pilot for Saturday prime time that we produced on our 7,500-square-foot stage with a 270-degree view and eight cameras.

Andy: We now offer the solution in every one of our studios.

Richard: We have achieved this through the expertise we have gained and also through the decision we made at the beginning to centralize our services in order to gain scalability. We standardized our inside-out tracking solution centrally with Mo-Sys StarTracker. We can deploy that fairly rapidly across any of our spaces.

Was everything else also planned from the beginning or were some decisions made on the spur of the moment?

Richard: Some parts were improvised. For example, we added the ability to capture motion. We used a system similar to StarTracker’s, that is, based on inertia, from a company called Xsens. We don’t need external tracking cameras pointing at an object with reflective stickers; it’s all based on body movement. We don’t even need to be in a green screen situation to deploy motion capture on a physical set. From motion capture, we have started to offer facial recognition capture.

Have you swelled the ranks of professionals to offer these new arts?

Richard: It was very important for us to have an in-house team. We needed people in the building who understood not only the equipment, but also the physical dimensions and operation of the spaces.

We’ve hired five people to work with us full time. Andy Elliott, our lead developer, who I’m sharing this interview with, has been with us for five years and comes from a video game background. Now, Andy has a couple of juniors who used to work for him delivering content. We’ve also created a new role in the industry: Virtual Studio Operator. This is someone who works in the gallery, in an operational role that ensures that the virtual elements work optimally. We also have a development producer who works for us. He works with the client in pre-production, making sure that everything they need from their scenery is understood, provided and delivered on time.

On the other hand, we have also encouraged extensive education and exposure among the teams so that they are familiar with virtual production techniques.

Andy: We have also spent a lot of time educating our customers. The interesting thing is that they are very different. Sports has always been one of those industries that embraces technology early on. However, customers coming from the entertainment sector tend to be more traditional when it comes to arranging the way they work. A lot of this technology is new to them.

Richard: As soon as we show them the capabilities of this technology, producers, executives and curators start thinking creatively. They realize that certain things they had to discard in the past because the technology wasn’t ready can now be done thanks to virtual production techniques.

Richard: Yes. We work in multiple ways, and we can use “BBC Bitesize Daily” to illustrate one of them. In that case, we design it, pitch it, produce it and do the whole process, from initial concept to final delivery. This is actually the most comfortable way of doing things for us.

However, as we have expanded the services we offer, we have delegated tasks such as set design. Also, in larger productions, we tend to work on hybrid models that combine real physical sets with virtual ones.

We are also starting to see professionals coming to us with sets and assets already designed. We tend to introduce them into our systems if we think they are worth having.

How have you managed to solve the challenges related to tracking?

Richard: There is a Mo-Sys unit on the broadcast camera itself or on the physical floor of the camera studio. This is an ultraviolet camera sensor that sends a signal to the studio grid. On the grid, there is a series of reflective stickers placed in random positions and at different heights. The signal bounces off the stickers and comes back, and the system measures the time it takes for the light to travel. With this method, the solution can triangulate the camera’s position in the physical world. It is a fairly flexible and simple solution.

You work in near real time. What technological capacity do you need to work in this way?

Richard: Our production channel is almost live, only 6 or 12 frames delayed. To achieve this, we have taken two things into account. The first is that each broadcast camera has its own independent pipeline. If one were to fail, it would only affect that part of the system. We rely on that level of redundancy to ensure this capability.

The second is that we have the most advanced graphics systems in each of the camera channels. The GPUs are some of the best in the industry. They are NVIDIA GPUs with ray-tracing capabilities that use artificial intelligence solutions.

However, these capabilities we are talking about would be nothing without Unreal Engine technology. This, combined with advances in GPU rendering performance and possibly the tracking accuracy that can be obtained from Mo-Sys, has made it possible to produce photorealistic 3D images.

In any case, we are always playing at the limit. We must try to squeeze the most out of our systems without pushing them too hard, because if we do we will find image problems. We are constantly on the edge of that precipice.

How do you see augmented graphics and what capacities do you have to deliver them?

Richard: We base our ability to develop augmented reality graphics on the very infrastructure we are talking about. When we design the content, we decide whether certain elements will be part of the background or whether they will make up other graphical elements such as augmented reality.

From our technology point of view, we have always tried to be as agnostic as we can be. What we try to do is adapt to the number of options that exist in the industry and we do that by ensuring that our graphics output is adapted to any of the graphics options on the market.

Then there is the case of creative options. Augmented reality can add a lot to a piece of content if used in an appropriate and original way.

Andy: I would like to share some examples. What we did with Clogs, one of the characters in the “BBC Bitesize” format, was to create the character using motion capture techniques. In fact, the combination of virtual production and motion capture techniques that we developed in that program won an Innovation Award from “Broadcast Tech”.

Another example was the show we did for Polyphony on “Gran Turismo”. In that case, what we developed was a graphic solution that indicated the possible performances that the competitors would have on different tracks.

What I mean by this is that there are many ways to get that data out to the public, but an important part is to make sure that we can collaborate and interact with our preferred graphic customers and suppliers. That’s why we pursue agnosticism.

Your bet is on green screen technology instead of LED Volume. What are the advantages and disadvantages of this approach? Do you have the capacity to implement LED Volume in your studios?

Andy: LED Volume is a brilliant tool which will transform our industry, but it won’t transform the industry we work in. What we do has a lot to do with events or live and recorded production. This implies that productions are done on multi-camera systems and this option is not the most appropriate for LED Volume. These techniques are appropriate for drama, for productions that might be made by Netflix or Amazon, but definitely not for us.

Richard: It is based on the same techniques. It uses Unreal in the back end, it uses camera tracking technology and many other similar developments, but in an environment as limited as LED Volume you can’t get wide shots or freedom with the camera. That’s why it’s not a technology for us.

Richard: We have produced a talk show with Stephen Fry set in the world of dinosaurs. Using virtual production and augmented reality techniques, we have managed to place him in the Jurassic surrounded by prehistoric animals and interviewing different specialists about this period.

It was a really important project for us where the biggest challenge was to create all these different dinosaurs and their animations, and to do it in real time in the studio. We had over 300 different animations, more than seven different dinosaurs, in five locations, and everything was shot in 360°. All produced in three months. We had 32 animators in 19 countries working around the clock to meet the deadlines.

We pushed the boundaries of technology. Mixing, in real time, real characters with fully automated, multicamera, pre-animated characters was another big step forward in our technical roadmap.

Andy: Currently, and with future perspectives, we are working with MMU, Manchester Metropolitan University, to adapt this technology to the 3D world, to the metaverse or to different platforms. We are in the process of applying for a government grant to further this research.

Richard: We want to create a distribution platform for 3D assets. We are producing entertainment content in three dimensions, so now it’s about how we can take what we currently build in 3D, but adapt it by delivering it on a 2D platform or take it from 2D and deliver it on a 3D platform.

We began plans for MARS as the covid pandemic was hitting its global stride. But even earlier than that, we had been conducting our own research and development into the foundational building blocks of Virtual Production - camera tracking technologies, LED Systems and real-time game engines.

Bild Studios, the parent company of MARS Volume, was founded by visual engineering pioneers David Bajt & Rowan Pitts. Bild Studios has been responsible for the deployment of some of the largest shows in the world such as Eurovision, Dubai Expo, Birmingham Commonwealth Games and more.

In mid-2020, we ran a proof of concept called MARS 1.0 in Central London. This helped us test the workflows and customer experience, while also testing the market to gauge the level of commercial interest. The pilot was a success on all counts, which spurred us on to make plans to establish the UK’s most accessible Virtual Production facility, to cater for the widest possible audience. In August 2021, after months of R&D and hard work, we opened our doors and had bookings from day one.

Our facilities and human teams at MARS Volume are specialized in offering services for multimedia content creation such as: plate playback on the LED stage (2D playback, which is perfect for driving scenes); real-time scene playback with 3D technology and tracked cameras; LED stage for professionals; and stage integration. Our human resources, in addition to providing assistance on the virtual production set, also offer instruction through our MARS Academy educational offering, and an R&D space for industry professionals.

How much space have you set up for virtual production?

Our stage covers a 15m x 9m space within an 8000 sqft studio. We additionally have a 4000 sqft space for production services.

What specific projects could you highlight and what challenges have you encountered in them? How have you solved them?

In collaboration with BBC Studios and Epic Games, MARS Volume transported Sir David Attenborough 66 million years ago to the day an asteroid devastated our planet for “Dinosaurs: The Final Day with David Attenborough”.

Through a blend of Epic Games’ Unreal Engine and physical prop and set design, Tanis’ prehistoric graveyard was recreated, allowing Attenborough to interact in real time with the virtual world before and after the final day of the dinosaurs.

In recent statements, Sir David Attenborough said: “We’ve gone far beyond the old days of the cinema, when you had greenscreens and after filming you were able to replace the screen electronically to make you appear in any landscape you wished. When I walked into that studio the images were all already there, the back end of the studio was a forest on fire, and it was very, very impressive too.”

In 2021, Pulse Films approached MARS Volume for a Virtual Production solution to their driving scenes for the Sky series. With an ambition to shoot some of the most creative and compelling driving scenes, Pulse Films agreed that virtual production offered maximum flexibility and the greatest efficiency for creative output compared to other production techniques.

The driving scenes were shot within a sequence of busy city roads and lush countryside driving plates, with the vehicles lit with LED wild walls and exterior lighting. This enabled scenes with seamless and naturalistic reflections over the vehicles’ reflective surfaces and the faces of the actors.

Mounting the vehicles on a turntable that was paired with the content was a good example of MARS Volume’s technical expertise powering the simulated driving scenes. With this innovation, the crew were able not only to create hyper-realistic scenes but also to shoot angles that would otherwise be impossible with traditional techniques such as green screen or location shooting.

As the production also involved a variety of long driving scenes, the ability to avoid any technical, environmental and unforeseen weather-related issues by shooting in virtual production was an additional benefit for the production team. Avoiding the logistics of closing roads, gaining permissions and managing shooting in public spaces was another huge advantage for the team.

The LED panels are from the manufacturer AOTO, and our processing on the contents and LED volumes comes from Brompton Technology. The camera tracking technology is from Stype. The playback is from Disguise and the content is driven by Unreal Engine.

The camera tracking system is a Stype Redspy, as I was saying. This is an “inside out” system which relies on an array of retroreflective stickers to triangulate the camera’s position to high degrees of accuracy. We can also extract FIZ data from the lens.

We build our own bespoke render nodes for the rendering of real-time environments on the volume, and we have developed an extensive range of proprietary tools to enable the smooth operation of our virtual production shoots at MARS.

Do you have the infrastructure to design the backgrounds? What computing power do you have to generate them, what are its characteristics and how does your computer network work?

We have our own in-house Unreal Engine artists and operators. While the usual workflow is to work with our clients’ supplied content and their preferred VFX house, we do have expert resources internally to support and provide essential translation services to ensure that content runs successfully on the screen at all times.

MARS Volume has been equipped with the highest available computing power and live events-grade systems redundancy to ensure that the show will have the up-time required. Our approach is informed by our team’s experience on the world’s largest live events, where there is no second take - you only get one shot.

How are your virtual production teams composed? Have you incorporated professionals specialized in virtual production workflows?

Productions shooting at MARS are staffed and supported by our in-house VP team. This team covers a diverse and broad range of skillsets, specializing in everything from real-time systems and media server platforms to camera hardware and video / IT infrastructure. We often supplement this team with external resources drawn from our freelancer network.

Your bet is on the LED volume, what pros and cons have you found compared to the green screen?

First things first, they are different tools for different jobs.

There are, however, a number of common situations where LED provides some really compelling advantages over greenscreen. The most significant of these is probably reflections and naturalistic ambient lighting. The ability to capture these in camera can often be of huge benefit to DOPs and directors, and can save on expensive and time-consuming post-production work. We have seen a number of shoots leverage this to huge success, including “Gangs of London” and a number of car commercials.

The other significant benefit, which is often overlooked, particularly for car plate work, is having a clear reference point for actors: being able to see where the car is travelling, what direction the car is turning, etc. helps lead to a much more naturalistic performance.

What is your perspective on LED Volume technology? Do you think it could become the next big revolution in the industry?

We have no doubt that the LED Volume, as a tool in the filmmaker’s toolkit, is here to stay. As happens when new technologies are introduced to markets, there is often a moment of over-excitement before the reality of the applications of this tool becomes abundantly clear.

Once teams are well versed in the LED Volume and in the re-ordering of the production workflow (a lot of post-production decisions have to be made earlier in the process, in pre-production), time and budget efficiencies can be made - and we have seen teams come back a second or third time and make these savings.

We are all painfully aware that the film and television industry must look for innovation and step change to achieve the sustainability targets that we have to attain for our industry to be responsible.

Virtual production and LED Volume productions offer that opportunity. We are actively working on tools and efficient workflows to support this for the industry.

What is the future of this technology? What does the future hold for MARS Volume?

We are excited to see what 2023 will bring, but already we can see teams returning and recommending us as a good supportive place to host their virtual production shoots. We invested heavily into education in 2022, launching MARS Academy - and we have big plans to take the Academy global in 2023.

The future lies with the brave and innovative teams and industry leaders who are working hard to champion virtual production as a positive future filmmaking tool. We are determined to collaborate, educate and support these leaders as best we can in 2023.

Data has become the gold of our industry. This precious material is already delivering its enormous benefits. What distributors and content creators can do with it is, quite simply, the panacea of interaction with their customer. Knowing who your consumers are, what they like, when they like to watch it, knowing how they prefer to enjoy their favourite content are differential factors when creating content, observing the interests that could awaken a certain project and even foreseeing the economic benefit options that could be obtained. All this can be easily obtained thanks to the fusion between Big Data and AI.

The options for exploiting this information are virtually limitless. Content providers can deploy content only to specific demographics that are likely to consume it. In fact, thanks to the capabilities provided by digitization, online thematic channels can be created and their call for access can be targeted to specific users who are likely to pay a subscription to consume.

Going further in this concept of directing the communication and focusing the shot on the target, the commercial possibilities skyrocket. Imagine being able to serve the specific solution to your hypothetical customer’s particular needs by targeting advertising that responds to his or her greatest interests. Put another way: Who would be more likely to buy a particular car than someone who has spent months thinking about it and informing themselves?

Targeted and personalized advertising is precisely that last push they will need to buy.

All of this, targeted and personalized advertising and the right content at the right time, is achieved through data analytics. We know there is a lot of data to analyze. Done by hand, the task would take too much time and too many professionals. And that’s where artificial intelligence (AI) comes in. Machines can save us tremendous amounts of both time and effort. In fact, with good training (machine learning), they can finish this task in seconds. The capabilities, therefore, are endless.

TM Broadcast International presents an informative special on the real capabilities of these technologies analysed in detail from three points of view: a report by Yeray Alfageme, Business Development Manager of Optiva Media, with an agnostic and personal vision on how and why to use this technology in FAST channels; and two specialized articles by two major industry representatives focused on these solutions: Signiant and Digital Nirvana.

As we already tried to explain in a previous article in TM Broadcast, FAST (Free Ad-supported Streaming Television) channels are a very good option to exploit the existing VOD content, already produced, thus creating linear channels where this content can be offered in a thematic and novel way. However, where FAST channels really make sense is in customization.

By Yeray Alfageme, Business Development Manager at Optiva Media an EPAM company


We naturally think of streaming as yet another way of distributing content to viewers, just as satellite, DTT or cable television are. And that is true, there is no doubt. We could set out to establish the differences between IPTV and OTT, but it is pointless in this context. However, streaming is more than just taking our linear video signal and, instead of uploading it to a satellite or inserting it into a distribution network such as DTT or cable, encoding it into data packets and distributing it over a structured data network.

Actually, it’s a lot more.

The thing is that, if we did just that, we would be leaving out one of the most useful tools that this technology offers us: two-way communication. Until now, content would travel from point A (the production center, broadcast or distribution point) to point B (our TV in the living room). To establish what was being watched, who was watching it and when it was being watched, we would have to resort to firms like Kantar Media, who would carry out surveys, prepare statistics and extrapolate those results to 100% of the viewers. However, with streaming it is not like that.

Now we are able to find out who is viewing our content, what they are viewing, when and how, with 100% accuracy. No statistics, sampling or approximations. All devices report to the CDN (Content Distribution Network) what they are displaying, when and where from, because the CDN needs this in order to provide them with the content in the quality, format and specifications required. Let’s just say it’s now a requirement for everything to work, rather than something ancillary. This is a game changer in several ways. The first of these is capturing and understanding all this new information that is being generated.
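As a rough illustration of the difference between the two measurement models, the sketch below contrasts a survey-style extrapolation with a direct count taken from device reports. The `PlaybackReport` fields, the function names and all the figures are assumptions invented for this example; they do not come from any real CDN or audience measurement firm.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaybackReport:
    """One report a player sends while requesting content (hypothetical fields)."""
    device_id: str
    content_id: str
    started_at: str  # ISO 8601 timestamp
    country: str


def panel_estimate(panel_viewers: int, panel_size: int, universe: int) -> float:
    """Survey-style estimate: scale the panel's share up to the whole universe."""
    if panel_size <= 0:
        raise ValueError("panel_size must be positive")
    return panel_viewers * universe / panel_size


def measured_audience(reports: list[PlaybackReport], content_id: str) -> int:
    """Streaming-style count: distinct devices that actually reported the title."""
    return len({r.device_id for r in reports if r.content_id == content_id})


# Invented sample data, for illustration only.
reports = [
    PlaybackReport("tv-001", "doc-42", "2023-01-10T21:00:00Z", "ES"),
    PlaybackReport("tv-001", "doc-42", "2023-01-10T21:30:00Z", "ES"),  # same device again
    PlaybackReport("phone-7", "doc-42", "2023-01-10T21:05:00Z", "KE"),
    PlaybackReport("tv-002", "match-9", "2023-01-10T20:00:00Z", "GB"),
]

print(panel_estimate(panel_viewers=150, panel_size=5_000, universe=10_000_000))
print(measured_audience(reports, "doc-42"))
```

The first function can only ever return an approximation, while the second counts what the devices themselves declared, which is the point the paragraph above is making.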

All these viewing metrics, generated in real time, which the CDN needs in order to distribute the right content to the right devices, are at risk of dying there, on the CDN, because technically they really aren’t necessary beyond that. However, it would be a serious mistake to let them go to waste. In fact, that is what happened for a while when streaming first appeared. Luckily, we soon realized what we were wasting.

Using viewing metrics only as a technical component of the content delivery network is like throwing away the great advantage that streaming offers us beyond being able to reach more devices with more content. But you have to know how to capture all this information and also know what to do with it.

The first thing to keep in mind is where all this information is generated. That place is the CDN, the last layer in the entire distribution chain. Summing up, the CDN (we have also covered it extensively in another TM Broadcast article [#157 TM Broadcast, October 2021]) is a worldwide network of servers that replicates all the content that you may want to distribute and makes it available to all devices. With this understood, it is clear that the CDN needs to know to whom, when and how to distribute this content in order to determine the right size for these servers and their underlying network.

Once these metrics are generated we have to store them, analyze them and understand them. For those who are more familiar with Big Data: this information is not standardized, but such standardization is not necessary, since there is a single source of information and therefore the data will always arrive in the same way.

And now, what do we do with the metrics?

So, the first thing to do is store them. But we can’t. Well, technically we can, but it doesn’t make much sense to keep them all. It’s just too much information and part of it is only usable by the CDN for its own operation. That’s why you have to choose what to store and where. That’s where platforms like NPAW’s Youbora play their part (I’m sorry to use a commercial example, but I think it’s pretty enlightening for everyone) as they link up to the CDN, whatever it is, and store these metrics. They even go a step further, because they analyze these metrics and present them in a usable way; but let’s go step by step.
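To picture what “choosing what to store” might look like in practice, here is a minimal sketch that keeps only audience-related fields from a raw delivery record. The field names are hypothetical, real CDN logs differ from vendor to vendor, and this is not a description of how any commercial analytics platform works internally.

```python
# Hypothetical field names; real CDN logs differ by vendor.
AUDIENCE_FIELDS = ("viewer_id", "content_id", "started_at", "seconds_watched", "device_type")


def keep_audience_fields(raw_record: dict) -> dict:
    """Keep only the fields useful for audience analysis, dropping delivery-only data."""
    return {name: raw_record[name] for name in AUDIENCE_FIELDS if name in raw_record}


raw = {
    "viewer_id": "u-123",
    "content_id": "doc-42",
    "started_at": "2023-01-10T21:00:00Z",
    "seconds_watched": 1800,
    "device_type": "smart_tv",
    "edge_server": "edge-mad-03",   # only matters to the CDN itself
    "cache_status": "HIT",          # only matters to the CDN itself
    "segment_bitrate_kbps": 4500,   # only matters to the CDN itself
}

stored = keep_audience_fields(raw)
print(stored)
```

Using an explicit allow-list of fields, rather than a list of fields to drop, means that any new delivery-only field the CDN starts emitting is discarded by default.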

Well, we have already generated this mass of data to make the content distribution network work, and we have extracted and saved it for further use; the metrics platform has also analyzed the data, but we have not done anything with it yet. This platform is going to give us a lot of information about content consumption and, apart from the technical use of keeping the network operating correctly, there are many more things we can do.

The first thing is to group the data in a useful way, that is, to segment our audience. The first thing that comes to mind is demographic parameters such as age, sex, location and so on, but that would be the “easy” approach. The correct segmentation would be by likes, similarities, viewing time and other data that are actually associated with audience behavior regarding our content. Now we’re getting to the heart of it.
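A behavior-based segmentation of this kind could be sketched as follows. The session tuple layout, the daypart boundaries and the choice of “favourite genre plus favourite time of day” as the segment key are all assumptions made for illustration; a real platform would use richer signals.

```python
from collections import Counter, defaultdict


def daypart(hour: int) -> str:
    """Map an hour of the day (0-23) to a coarse daypart (boundaries are arbitrary)."""
    if 6 <= hour < 12:
        return "morning"
    if 12 <= hour < 18:
        return "afternoon"
    if 18 <= hour < 24:
        return "evening"
    return "night"


def segment_viewers(sessions):
    """Group viewers by the genre and daypart in which they watch the most minutes.

    sessions: iterable of (viewer_id, genre, start_hour, minutes) tuples.
    Returns {(top_genre, top_daypart): [viewer_id, ...]}.
    """
    genre_minutes = defaultdict(Counter)
    daypart_minutes = defaultdict(Counter)
    for viewer_id, genre, start_hour, minutes in sessions:
        genre_minutes[viewer_id][genre] += minutes
        daypart_minutes[viewer_id][daypart(start_hour)] += minutes

    segments = defaultdict(list)
    for viewer_id in genre_minutes:
        top_genre = genre_minutes[viewer_id].most_common(1)[0][0]
        top_daypart = daypart_minutes[viewer_id].most_common(1)[0][0]
        segments[(top_genre, top_daypart)].append(viewer_id)
    return dict(segments)


# Invented sessions, for illustration only.
sessions = [
    ("v1", "documentary", 21, 50),
    ("v1", "sports", 15, 20),
    ("v2", "documentary", 22, 40),
    ("v3", "sports", 16, 90),
]
print(segment_viewers(sessions))
```

Note that no age, sex or location appears anywhere in the input: the segments come entirely from what was watched and when.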

Another important point is to know whether the data we have are good or not: the much-vaunted data quality. Having a lot of data does not mean having a lot of information. We must have quality data and, of course, enough of them to allow us to extract the appropriate analyses. That is why being sure that the data we have are of the right quality is vital before even segmenting and analyzing them.
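A minimal sketch of what such a quality check could look like before any segmentation is attempted. The field names (`viewer_id`, `content_id`, `started_at`, `minutes_watched`) and the rules themselves are assumptions chosen for illustration; real rules depend on what the metrics platform actually delivers.

```python
# Hypothetical raw playback records, including typical quality problems.
RAW = [
    {"viewer_id": "v1", "content_id": "c1", "started_at": "2023-01-10T23:00", "minutes_watched": 45},
    {"viewer_id": "v1", "content_id": "c1", "started_at": "2023-01-10T23:00", "minutes_watched": 45},  # duplicate
    {"viewer_id": "", "content_id": "c2", "started_at": "2023-01-10T21:00", "minutes_watched": 30},    # anonymous
    {"viewer_id": "v2", "content_id": "c3", "started_at": "2023-01-11T20:00", "minutes_watched": -5},  # impossible
    {"viewer_id": "v3", "content_id": "c1", "started_at": "2023-01-11T22:00", "minutes_watched": 50},
]


def is_valid(record):
    """Return True when a record passes basic (assumed) quality rules."""
    required = ("viewer_id", "content_id", "minutes_watched")
    if any(record.get(field) in (None, "") for field in required):
        return False
    minutes = record["minutes_watched"]
    # Reject impossible durations: zero, negative, or longer than a day.
    return isinstance(minutes, (int, float)) and 0 < minutes <= 24 * 60


def quality_report(records):
    """Drop invalid and duplicate records; return (valid, rejected_count, valid_ratio)."""
    valid, seen, rejected = [], set(), 0
    for record in records:
        key = (record.get("viewer_id"), record.get("content_id"), record.get("started_at"))
        if not is_valid(record) or key in seen:
            rejected += 1
            continue
        seen.add(key)
        valid.append(record)
    ratio = len(valid) / len(records) if records else 0.0
    return valid, rejected, ratio


valid, rejected, ratio = quality_report(RAW)
```

The ratio of valid records gives a quick indication of whether there is enough trustworthy data left to analyze, or whether the capture stage needs attention first.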

All this hard work of capturing that data, making sure they are good quality and segmenting our audience correctly, can lead to very nice, but complex metrics and analytics.

Again, this would be falling short. In addition, the CDN itself can already offer us this type of dashboard without all the additional hard work. Leaving it like this would be a waste of time, so we must go one step further.

The point is that measuring and analyzing our audience is nothing more than a new source of information on which to base decisions.

Just as Kantar Media used to tell us by whom and when our linear DTT channels were being watched, we now have these endless dashboards. But what decisions did we use to make with that information? Well, programming of new content, editorial decisions about the content to be produced and even the creation of new channels or themes. And that’s when we’re back where we started. Let’s use all these data, our correctly processed streaming viewing metrics, to create our FAST channels.

Customization is the key

So, if we have the content (our entire VOD catalogue), we also have our audience and we know them (thanks to our properly captured, segmented, quality metrics), and we have the technology that allows us to group this content in order to create new linear channels, then we have everything; we just have to connect the dots to get the full picture. Let’s get to it.

There is a detail that we skipped earlier, and not a trivial one: in order to have these metrics with the right quality, there is an indispensable requirement, namely that our viewers register on our platform. Otherwise, how do we know who is watching the content: whether it is a man, a woman, always the same person from the same place, or a family of nine? There are several strategies for this.

The most direct one is to associate payment with access to the content. That is, a subscription-based model. In that case it is obvious that a registration process must be in place and, of course, in keeping with the GDPR, viewers must be asked to provide the appropriate data. This clashes head-on with the F of our FAST channels, the free-of-charge part. Remember that these channels are going to include advertising, so a subscription model must be handled with care. There are models that follow this example, but only the major players (Netflix and the like) can afford to do it thanks to the quality and, above all, the amount of content they have.

The second strategy, and applicable in this case, is to offer viewers something in return. If you tell me who you are you will have the content you like, created for you and, in addition, with ads that interest you that will not bother you. Deal?

Deal, of course. And this is the true new model of FAST channels: the use of these metrics to generate customized channels as if they were tailor-made for the viewer. But they are more than just an intelligent grouping of the content we already have.

Of course, having an army of people looking at metrics dashboards and thinking about how to group the different parts of our huge content catalog so as to offer them to our viewers doesn’t seem the easiest or the cheapest of approaches. That’s why the next obvious step is to automate this whole process.

Linking our analytics platform (including our CDN) with our CRM systems, where our viewers’ data are stored, and using this information to automatically position the VOD content on our OTT platform and thus deploy new channels is the culmination of the whole process. And it’s not technically that difficult; let’s see.

We have data that tell us that 30-year-old women who live in a specific neighborhood of Valencia spend an hour and a half, at around 11 pm, watching property auction programs, for example. If we have our content with the right metadata (something we have not covered today, and which is a requirement not just for FAST channels but for any quality OTT service), it is easy to make the most of it and offer this viewer segment customized content. That is, a channel that every day at 11 pm will show three episodes of our favorite twins and, in addition, the ads between these episodes are from those decoration and refurbishment stores that they are going to visit the next morning. The squaring of the circle, nothing less.
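The 11 pm example can be sketched in a few lines, assuming the catalogue already carries genre and duration metadata. The titles, field names and the `build_lineup` helper are hypothetical, and ad breaks are modeled here as zero-length markers rather than timed spots, which a real playout system would of course need to schedule properly.

```python
from datetime import datetime, timedelta

# Hypothetical VOD catalogue entries with the metadata the example relies on.
CATALOGUE = [
    {"title": "Auction Twins S1E1", "genre": "property", "minutes": 30},
    {"title": "Auction Twins S1E2", "genre": "property", "minutes": 30},
    {"title": "Evening News", "genre": "news", "minutes": 30},
    {"title": "Auction Twins S1E3", "genre": "property", "minutes": 30},
    {"title": "Auction Twins S1E4", "genre": "property", "minutes": 30},
]


def build_lineup(catalogue, genre, start, slot_minutes, ad_category):
    """Fill a time slot with items of one genre, marking a targeted ad break between items."""
    lineup = []
    cursor = start
    end = start + timedelta(minutes=slot_minutes)
    for item in catalogue:
        if item["genre"] != genre:
            continue
        duration = timedelta(minutes=item["minutes"])
        if cursor + duration > end:
            break  # the next item would overrun the slot
        if lineup:
            # Ad breaks are zero-length markers in this simplified sketch.
            lineup.append((cursor.strftime("%H:%M"), f"ad break: {ad_category}"))
        lineup.append((cursor.strftime("%H:%M"), item["title"]))
        cursor += duration
    return lineup


lineup = build_lineup(
    CATALOGUE,
    genre="property",
    start=datetime(2023, 1, 10, 23, 0),
    slot_minutes=90,
    ad_category="decoration and refurbishment",
)
```

For the 90-minute slot this yields three episodes with two ad-break markers between them. The segment's preferred genre and viewing time would come from the audience analytics, and the ad category from the advertisers interested in that segment.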

Lastly, there is a key component to all of this: ads. Someone has to pay for this whole system and the effort needed to get here, of course. And this someone is our advertisers. But of course, something must be given to them in return. Just as we do with our viewers to have them provide us with their data and agree to view these ads, the deal is similar.

You are going to pay me more to advertise on my FAST channels than you paid me on my traditional linear channels. But why? Because I’m offering to reach exactly the audience you want, whenever you want. Not a bad deal, huh?

Advertisers view this proposal in a very positive way, as it closely resembles what online ad platforms offer them. No need to conduct massive campaigns of repeated, costly advertisements on all sites at once and let everyone see the ads to find the needle in the haystack. Reaching out to those viewers who are potential clients of mine and offering them what they want at the time of the day when they are most likely to accept my offer looks better, doesn’t it?

That’s why FAST channel ad prices are justified and higher than traditional ones. That’s why this business model works and is so interesting.

We have a lot of content that we have already paid for in our OTT platform’s VOD catalogue. In addition, all this content has its associated metadata and is perfectly categorized. We also have an audience that we know, since they have registered on our platform, and, thanks to the quality metrics and the correct segmentation that we have carried out, we know what they like watching, and when.

On the other hand, we have advertisers who are tired of paying huge amounts of money to reach 100% of our viewers when, in truth, they only want to reach that niche market that will accept their offering and that accounts for 5% of our audience. It’s not that they don’t want to pay for promotions. They both want and need to do that, but they also want their campaigns to be better and their return greater. No one wants to throw money away, of course.
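The 100% versus 5% figures lend themselves to a quick back-of-the-envelope calculation. The sketch below assumes a purely hypothetical price of one unit per viewer reached; only the 5% share comes from the text.

```python
def cost_per_relevant_viewer(cost_per_viewer, relevant_share):
    """Effective cost of reaching one relevant viewer when only a share of the audience is relevant."""
    if not 0 < relevant_share <= 1:
        raise ValueError("relevant_share must be in (0, 1]")
    return cost_per_viewer / relevant_share


# Broad campaign: the advertiser pays for everyone, but only 5% are potential clients.
broad = cost_per_relevant_viewer(cost_per_viewer=1.0, relevant_share=0.05)

# Targeted campaign: every viewer reached is assumed to belong to the niche.
targeted = cost_per_relevant_viewer(cost_per_viewer=1.0, relevant_share=1.0)

# How much more per viewer a targeted channel could charge before the
# advertiser is worse off than with the broad campaign.
break_even_multiplier = broad / targeted
```

Under these assumptions the broad campaign costs roughly twenty times more per relevant viewer, which leaves ample room for a higher price per viewer on a targeted channel while still improving the advertiser's return. Real targeting is never perfect, so the actual margin will be smaller.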

FAST channels join these two worlds together and offer our viewers a selection of content based on their history, tastes, preferences of their peers and novelties according to what our analytics, which are good, reveal. On the other hand, they offer our advertisers a selected audience, very prone to like their offering and with a very high sensitivity to their proposals. Therefore, the return on advertising investment is higher, almost guaranteed, than in previous models. As we said, the squaring of the circle.

When we talk about big data in broadcast, we’re talking about the hundreds of terabytes, or even petabytes, of data that a system gathers during direct interaction with end users. Typically that happens when broadcasters make their content available through VOD or streaming options. Broadcasters can analyze this big data to understand customers’ preferences, which in turn, helps them serve better content to viewers and serve the right demographic to advertisers.

Besides the massive amounts of data exchanged between broadcasters and end users, big data also refers to the many content feeds most broadcasters ingest continuously and simultaneously. For example, the volume of incoming video feeds for a news organization is huge: several hundred gigabytes to terabytes on a daily basis. If broadcasters can make sense of that big data, they can use it to help make content.

What are the possibilities of artificial intelligence in the broadcast industry?

Applying artificial intelligence across audio and video opens a world of possibilities for the broadcast industry. For example, speech-to-text technology has reached a point where it is better than humans at understanding specific domains. A well-trained speech-to-text engine can provide a very accurate transcript and captions of incoming content. At the same time, other well-trained engines can perform facial recognition and on-screen text detection, detect objects in the background, and more.

In the case of multiple and continuous incoming video feeds, artificial intelligence can help describe what is in the feed and make it very easy for editors to find what they’re looking for. AI capabilities can also generate metadata that makes the content easily searchable and retrievable, leading to easier content creation and better content publishing decisions.
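To make the idea of searchable metadata concrete, here is a minimal sketch of an inverted index built over transcripts. The clip identifiers and transcript text are invented for the example, and in practice the text would come from a speech-to-text engine; this is a generic indexing technique, not a description of any vendor's product.

```python
from collections import defaultdict

# Hypothetical clip transcripts, as a speech-to-text engine might produce them.
CLIPS = {
    "clip-001": "The mayor opened the new bridge in Valencia",
    "clip-002": "Highlights of the World Cup final",
    "clip-003": "Valencia hosts a property auction",
}

PUNCTUATION = ".,;:!?"


def build_index(transcripts):
    """Map each lowercased word to the set of clips whose transcript contains it."""
    index = defaultdict(set)
    for clip_id, text in transcripts.items():
        for word in text.lower().split():
            index[word.strip(PUNCTUATION)].add(clip_id)
    return index


def search(index, query):
    """Return the sorted clip ids whose transcripts contain every word of the query."""
    terms = [term.strip(PUNCTUATION) for term in query.lower().split()]
    if not terms:
        return []
    matches = set.intersection(*(index.get(term, set()) for term in terms))
    return sorted(matches)


index = build_index(CLIPS)
valencia_clips = search(index, "Valencia")
auction_clips = search(index, "Valencia auction")
```

An editor looking for footage about Valencia gets two clips back, and adding a second word narrows the result to one. The same structure extends to other generated metadata such as recognized faces or detected objects.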

How can all these technologies enrich the content consumer experience?

Once content providers become more familiar with user preferences, they can bubble up content within their archive that is better-suited to those preferences. They can also use AI to quickly access data that will inform their content feeds, such as in social media channels.

Take World Cup soccer, for example. Rightsholder Fox Sports could use AI technology to identify moments in the game that are worthy of viewing, and within a few minutes of the game ending, they can put up those highlights on YouTube. Before AI, this process would have taken a human many hours.

And of course, the more consumers watch content that is in tune with their preferences (action, drama, certain news topics), then the better the system gets at predicting and serving similar content. That’s an example of tailoring the content for a better consumer experience.

How should traditional broadcasting adapt to these technologies to get the most out of them?

Broadcasters need a website or platform where users can search, find, and consume content. The smaller the chunks broadcasters produce, the greater the consumption, which means they need to be able to capture all of that consumer information and make it useful. To be able to do that at scale, broadcasters have to adopt technologies that can process the information faster and better than employing an army of people.

From what perspective does Digital Nirvana approach the possibilities of artificial intelligence?

Digital Nirvana believes AI has great potential to accelerate media workflows and make life easier for our clients. To realize that potential, we’re always looking for new and better ways to help our clients use artificial intelligence tools like speech to text and facial or object recognition to describe what is in their audio and video.

Digital Nirvana is doing a lot of work on training speech-to-text engines to automatically recognize who is speaking and what they are saying — such as distinguishing one media personality from another and identifying different topics.

How does Digital Nirvana intend to take advantage of the confluence of both technologies?

Our focus right now is to leverage AI technologies in the audio, video, and natural language processing sectors. Natural language processing is the ability to understand what is being said in the content. Not only can we provide a verbatim transcript of what is being said, but then we use natural language processing to figure out who is doing the talking and what the topic and context are. For example, our Trance application uses multiple technologies, including automatic speech to text and an automated translation engine. Our goal is to make sure those engines keep getting better and better.

It has not yet become mainstream technology, but there are already many developments and pilot projects. Which ones would you highlight as the most challenging and interesting?

One pilot project we’ve been working on with a major U.S. broadcaster is automatic segmentation and summarization of incoming news feeds. Suppose the programming in the feed lasts 60-90 minutes and contains multiple segments on different topics. Today in production, we are generating real-time text of that content, but in the future, we’ll automatically be able to figure out which people and places are being discussed in that feed, then provide a headline and summary of each of the segments. We’ll also be able to detect changes in topics and categorize accordingly.

This is not an easy thing to do.

A similar use case relates to podcasts. Today, a well-designed podcast will have what we call chapter markers within an hour-long or 45-minute podcast. The chapter markers delineate the different segments, and there are show notes related to each chapter marker. Right now this process is done manually.

We foresee technology that will listen to a podcast and automatically generate chapter markers along with a summary of each chapter.

Finally, Digital Nirvana is developing an advertising intelligence capability for a large ad intelligence provider that needs to analyze advertisements at scale. This provider must process close to 20 million advertisements per year, and there is no way to do it manually. They have to use technology.

The technology we’re developing will look at an advertisement (whether it be outdoor creative, a six-second social media advertisement, or a 30-second broadcast commercial) and determine the product, the brand, and the category (e.g., alcohol ad, political ad, automobile commercial). That kind of analysis is a challenge, and being able to do it automatically will significantly improve this company’s workflow.

What future developments is Digital Nirvana involved in regarding the capabilities of this technology?