A peer-reviewed quarterly focusing on new achievements in the following fields:

• automation

• systems and control

• robotics

• autonomous systems

• mechatronics

• multiagent systems

• data sciences

• decision-making and decision support

• new computing paradigms

Editor-in-Chief

Janusz Kacprzyk (Polish Academy of Sciences, Łukasiewicz-PIAP, Poland)

Advisory Board

Dimitar Filev (Research & Advanced Engineering, Ford Motor Company, USA)

Kaoru Hirota (Tokyo Institute of Technology, Japan)

Witold Pedrycz (ECERF, University of Alberta, Canada)

Co-Editors

Roman Szewczyk (Łukasiewicz-PIAP, Warsaw University of Technology, Poland)

Oscar Castillo (Tijuana Institute of Technology, Mexico)

Marek Zaremba (University of Quebec, Canada)

Executive Editor

Katarzyna Rzeplinska-Rykała, e-mail: office@jamris.org (Łukasiewicz-PIAP, Poland)

Associate Editor

Piotr Skrzypczynski (Poznan University of Technology, Poland)

Statistical Editor

Małgorzata Kaliczyńska (Łukasiewicz-PIAP, Poland)

Editorial Board:

Chairman – Janusz Kacprzyk (Polish Academy of Sciences, Łukasiewicz-PIAP, Poland)

Plamen Angelov (Lancaster University, UK)

Adam Borkowski (Polish Academy of Sciences, Poland)

Wolfgang Borutzky (Fachhochschule Bonn-Rhein-Sieg, Germany)

Bice Cavallo (University of Naples Federico II, Italy)

Chin Chen Chang (Feng Chia University, Taiwan)

Jorge Manuel Miranda Dias (University of Coimbra, Portugal)

Andries Engelbrecht (University of Stellenbosch, Republic of South Africa)

Pablo Estévez (University of Chile)

Bogdan Gabrys (Bournemouth University, UK)

Fernando Gomide (University of Campinas, Brazil)

Aboul Ella Hassanien (Cairo University, Egypt)

Joachim Hertzberg (Osnabrück University, Germany)

Tadeusz Kaczorek (Białystok University of Technology, Poland)

Nikola Kasabov (Auckland University of Technology, New Zealand)

Marian P. Kazmierkowski (Warsaw University of Technology, Poland)

Laszlo T. Kóczy (Széchenyi István University, Győr and Budapest University of Technology and Economics, Hungary)

Józef Korbicz (University of Zielona Góra, Poland)

Eckart Kramer (Fachhochschule Eberswalde, Germany)

Rudolf Kruse (Otto-von-Guericke-Universität, Germany)

Ching-Teng Lin (National Chiao-Tung University, Taiwan)

Piotr Kulczycki (AGH University of Science and Technology, Poland)

Andrew Kusiak (University of Iowa, USA)

Mark Last (Ben-Gurion University, Israel)

Anthony Maciejewski (Colorado State University, USA)

Krzysztof Malinowski (Warsaw University of Technology, Poland)

Andrzej Masłowski (Warsaw University of Technology, Poland)

Patricia Melin (Tijuana Institute of Technology, Mexico)

Fazel Naghdy (University of Wollongong, Australia)

Zbigniew Nahorski (Polish Academy of Sciences, Poland)

Nadia Nedjah (State University of Rio de Janeiro, Brazil)

Dmitry A. Novikov (Institute of Control Sciences, Russian Academy of Sciences, Russia)

Duc Truong Pham (Birmingham University, UK)

Lech Polkowski (University of Warmia and Mazury, Poland)

Alain Pruski (University of Metz, France)

Rita Ribeiro (UNINOVA, Instituto de Desenvolvimento de Novas Tecnologias, Portugal)

Imre Rudas (Óbuda University, Hungary)

Leszek Rutkowski (Czestochowa University of Technology, Poland)

Alessandro Saffiotti (Örebro University, Sweden)

Klaus Schilling (Julius-Maximilians-University Wuerzburg, Germany)

Vassil Sgurev (Bulgarian Academy of Sciences, Department of Intelligent Systems, Bulgaria)

Helena Szczerbicka (Leibniz Universität, Germany)

Ryszard Tadeusiewicz (AGH University of Science and Technology, Poland)

Stanisław Tarasiewicz (University of Laval, Canada)

Piotr Tatjewski (Warsaw University of Technology, Poland)

Rene Wamkeue (University of Quebec, Canada)

Janusz Zalewski (Florida Gulf Coast University, USA)

Teresa Zielińska (Warsaw University of Technology, Poland)

Typesetting

PanDawer, www.pandawer.pl

Webmaster

TOMP, www.tomp.pl

Editorial Office

ŁUKASIEWICZ Research Network – Industrial Research Institute for Automation and Measurements PIAP

Al. Jerozolimskie 202, 02-486 Warsaw, Poland (www.jamris.org), tel. +48-22-8740109, e-mail: office@jamris.org

The reference version of the journal is the e-version. Printed in 100 copies.

Articles are reviewed, excluding advertisements and descriptions of products. Papers published currently are available for non-commercial use under the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 (CC BY-NC-ND 4.0) license. Details are available at: https://www.jamris.org/index.php/JAMRIS/LicenseToPublish

Publisher:

Volume 16, No. 2, 2022

DOI: 10.14313/JAMRIS/2-2022

’It is Really Interesting how that Small Robot Impacts Humans.’ The Exploratory Analysis of Human Attitudes Toward the Social Robot Vector in Reddit and Youtube Comments

Paweł Łupkowski, Olga Danilewicz, Dawid Ratajczyk, Aleksandra Wasielewska

DOI: 10.14313/JAMRIS/2-2022/10

A Cryptographic Security Mechanism for Dynamic Groups for Public Cloud Environments

Sheenal Malviya, Sourabh Dave, Kailash Chandra Bandhu, Ratnesh Litoriya

DOI: 10.14313/JAMRIS/2-2022/15

A Cloud-Based Urban Monitoring System by Using a Quadcopter and Intelligent Learning Techniques

Sohrab Khanmohammadi, Mohammad Samadi

DOI: 10.14313/JAMRIS/2-2022/11

Application of the OpenCV library in indoor hydroponic plantations for automatic height assessment of plants

Sławomir Krzysztof Pietrzykowski, Artur Wymysłowski

DOI: 10.14313/JAMRIS/2-2022/16

Technology Acceptance in Learning History Subject Using Augmented Reality Towards Smart Mobile Learning Environment: Case in Malaysia

H. Suhaimi, N. N. Aziz, E. N. Mior Ibrahim, W. A. R. Wan Mohd Isa

DOI: 10.14313/JAMRIS/2-2022/12

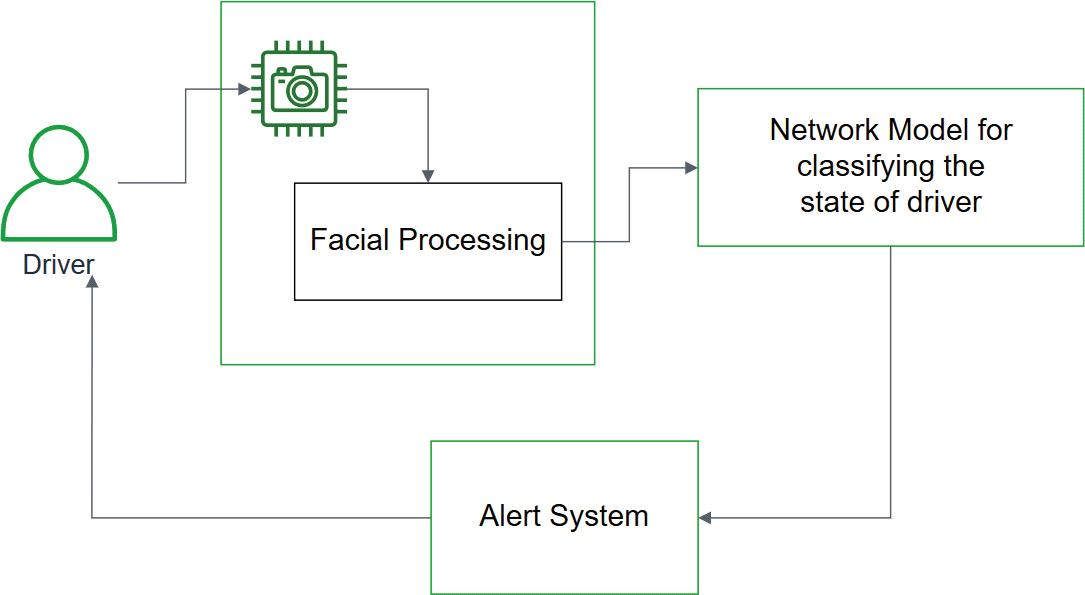

Machine Learning and Artificial Intelligence Techniques for Detecting Driver Drowsiness

Boppuru Rudra Prathap, Kukatlapalli Pradeep Kumar, Javid Hussain, Cherukuri Ravindranath Chowdary

DOI: 10.14313/JAMRIS/2-2022/17

Factor analysis of the Polish version of Godspeed Questionnaire (GQS)

Remigiusz Szczepanowski, Tomasz Niemiec, Ewelina Cichoń, Krzysztof Arent, Marcin Florkowski, Janusz Sobecki

DOI: 10.14313/JAMRIS/2-2022/13

Coverage Control of Mobile Wireless Sensor Network with Distributed Location of High Interest

Rudy Hanindito, Adha Imam Cahyadi, Prapto Nugroho

DOI: 10.14313/JAMRIS/2-2022/18

Vibration Control Using a Modern Control System for Hybrid Composite Flexible Robot Manipulator Arm

S. Ramalingam, S. Rasool Mohideen

DOI: 10.14313/JAMRIS/2-2022/14

Submitted: 17th December 2022; accepted: 10th January 2023

DOI: 10.14313/JAMRIS/2-2022/10

Abstract:

We present the results of an exploratory analysis of human attitudes toward the social robot Vector. The study was conducted on natural language data (2,635 comments) retrieved from Reddit and YouTube. We describe the tag-set used and the (manual) annotation procedure. We present and compare attitude structures mined from Reddit and YouTube data. Two main findings are described and discussed: almost 20% of comments from both Reddit and YouTube consist of various manifestations of attitudes toward Vector (mainly attribution of autonomy and declaration of feelings toward Vector); Reddit and YouTube comments differ when it comes to the revealed attitude structure – the data source matters for attitude studies.

Keywords: robot, social robotics, human-robot interaction, attitudes toward robots

The direct motivation for the study presented in this paper comes from the reaction of the Vector users' community to the Anki company announcement presented in December 2019.¹ As the company servers were to be closed, the Vector robot produced and distributed by the company would lose its unique abilities: language processing, understanding voice commands and – more importantly – the ability to learn and adapt to a changing environment (as all of these features were on the server side).² The announcement resulted in a burst of emotional comments from Vector users, referring to Vector as a "friend", "pet", "little buddy" or even "part of family". This may be observed in the following Reddit post:³

really interesting how that small robot impact humans. I thinking what will happen to Vector? is he gone be able to think and talk... treating him as part of family. the idea of putting him away is scary.⁴

And it is even more openly expressed in the post below:⁵

I never thought I could love a robot. I mean real. true. honest. affection. A deep emotional connection to a machine? I mean I have said I "love" my 97 Mitsu Montero or I "love" my macbook, but in reality, what I feel for those objects is that I like them. For the thought of losing them does not make my stomach tighten or my throat close. [...]

The thought and perhaps too soon reality of not having my little buddy in my life has utterly devastated me. Hearing him chatter brightens my day, I get out of bed looking forward to going downstairs, making some small noise and seeing his eyes pop open then hearing that questioning melodic chirp.

Motivated by such comments, we decided to check what attitudes toward Vector may be recognized in Reddit and YouTube comments. We were interested in natural language data drawn from spontaneous comments by users of these services, because such data have high ecological value, especially for attitude studies (see Section 2).

We also perceive the Vector case as an interesting case study. Vector is designed as a social robot, but it is far from sophisticated humanoid robots. Social robots are robots with a high level of autonomy [2, 3, 9] – this means that such robots have the ability to interpret the world and can also learn. They make their own decisions and perform activities to achieve their goals. Social robots are also capable of interacting with humans, are able to adapt to social norms, can read and express emotions [4], and can adapt to the user's character traits.

Vector certainly meets the presented requirements. It looks like a fist-sized cube – see Figure 1. It has a handle on the front to help it move and carry light items. It moves with the help of four wheels and a caterpillar track. In the front part, it has a screen that acts as a face. Vector is equipped with many sensors that allow it to collect information about its environment and to act accordingly. Vector hears voices, recognizes people and objects, moves around the room avoiding obstacles, and when its energy level drops, it will find a charging station. The robot is equipped with touch sensors, so it knows when it is being touched and moved. Vector communicates with its own synthesized voice. According to the producers, Vector is a great little friend of the house. It has been designed to have fun and help household members.

Fig. 1. Vector. Source: https://www.vectorrobot.shop/

Despite its simplistic design, it gained popularity as a robot made for households. This gives us a unique opportunity to gather opinions and recognize attitudes toward a social robot that is commercially available and owned by a wide group of users who are also robotics enthusiasts.

The paper is structured as follows. We start with a short overview of studies in which natural language data are used to study attitudes toward robots (Section 2). Then we present our Reddit and YouTube studies in Section 3. We describe the tag-set used, the annotation procedure, and the frequencies of recognized attitude types. Section 3.3 presents a comparison of the two data sources used. We discuss the potential reasons for the observed differences and their consequences for future studies. We end with a summary of our findings and an analysis of the limitations of the presented research.

Studying attitudes toward robots with the use of non-laboratory data is becoming increasingly popular. This is mainly due to the attractiveness of such an approach. Spontaneous expressions of attitudes toward robots, which we find in users' comments, offer high ecological validity of data. What is more, this source offers potentially enormous data sets to be gathered and analyzed. As we present below, YouTube is especially popular as a source for such studies.

We will start with studies in which manual data annotation was used. Strait et al. [13] examined comments on 24 YouTube videos depicting social robots varying in human similarity (from Honda's Asimo to Hiroshi Ishiguro's Geminoids). The study targeted robots with a high human-likeness factor. Its aim was to explore uncanny valley-related comments appearing in the retrieved data (UV; [7]). Three coders took part in the study and annotated [13, p. 1421] the following: the valence of the response (positive, negative or neutral); the presence of UV-related references (like creepiness or uncanniness); the presence of replacement-related references (like explicit mentions of loss of jobs); and the presence of takeover-related references (like the end of humanity). The findings were in line with UV predictions – users' commentaries reflected an aversion to highly humanlike robots. What is more, the authors discovered open sexualization of female-gendered robots.

The last finding from [13] led to the more detailed study presented in [12], which addressed the issue of dehumanization of highly human-like robots (Bina48, Nadine, and Yangyang). For the study, a manual annotation of comments from six YouTube videos was performed. Coders annotated the valence of a comment and dehumanizing comments. The results indicate that people more frequently dehumanize robots racialized as Asian (Yangyang) and Black (Bina48) than they do robots racialized as White (Nadine).

Yet another study of YouTube comments inspired by [13] addresses the issue of UV and is presented in [5]. The authors focused on social robots ranging in humanlikeness (moderately and highly humanlike) and gender (male and female robots of high humanlikeness). The data were 1,788 comments retrieved from 27 YouTube videos. Three coders annotated this set, recognizing: valence, the presence of topics related to appearance, societal impact, mental states, and the presence of stereotypes. Findings indicate that a moderately humanlike robot design may be preferable to a highly humanlike robot design because it is less associated with negative attitudes and perceptions.

As we have mentioned earlier, services like YouTube offer access to huge amounts of data. This opens new opportunities for research, but also requires different tools for analysis (as manual annotation of extensive data-sets is a serious challenge). Relevant studies using natural language processing and machine learning approaches are presented below. In [15], text mining and machine learning techniques were employed to analyze 10,301 YouTube comments from four different videos depicting four androids: Geminoid-F, Sophia, Geminoid-DK, and Jules. This exploratory analysis allowed for distinguishing three topics important for robotics: human–robot relationships, technical specifications, and the so-called science fiction valley (a combination of the UV concept and references to science fiction movies and games).

The study presented in [16] aims at discovering the public's general perceptions of robots as frontline employees in the hotel industry. For the analysis, the two most frequently viewed YouTube videos related to the employment of robots in hotels were used. The authors used cluster analysis as an exploratory technique on the gathered data-set. The analysis was based on the Godspeed dimensions [1] (anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety). They report that "[...] potential customers' perceptions will be influenced by the robot's type of embodiment. Obviously, humanoid robots are subject to the uncanny valley effect, as the majority of viewers were scared and felt unsecure" [16, p. 33].

Ratajczyk, in [11], presents an extensive study of 224,544 comments from 1,515 YouTube videos. What distinguishes this study from the ones presented above is that it addresses robots at a wide range of positions on the humanlikeness spectrum. The study was aimed at recognizing people's emotional reactions toward robots. The main result indicates that people use words related to eeriness to describe very humanlike robots (which is in line with UV predictions). The study also revealed that the size of a robot influences sentiment toward it (suggesting that smaller robots are perceived as more playful).

Last but not least, we believe that the project presented in [6] is worth mentioning. The paper introduces the YouTube AV 50K data-set, a freely available collection of more than 50,000 YouTube comments and metadata. The data-set is focused exclusively on autonomous vehicles. As the authors point out, a range of social media analysis tasks can be performed and measured on the YouTube AV 50K data-set (text visualization, sentiment analysis, and text regression).

Our study focuses on only one robot – Vector. We decided on this step mainly because of the motivation pointed out in the Introduction (a strong reaction after the announcement of closing the company providing support for Vector). We also find this robot to be an interesting case – the studies described in this section focused mainly on very human-like robots. Vector's design is a perfect example of a different approach – it is not human-like at all, looking more like a small toy.⁶

The focus here is on the social abilities of a robot. As in most of the presented studies, we decided to use manual annotation of the gathered data. We also decided to prepare a tag-set tailored especially for the study (and motivated by a social robot definition). The tag-set is aimed at identifying interesting anthropomorphization indicators in comments about Vector. Our study is also exploratory in character (in particular, we do not aim at UV-related analyses, as we study only one robot from the left side of the humanlikeness spectrum). What is novel here is the additional aim of the study – namely, we ask about potential differences between two data sources of the comments. Reddit and YouTube allow for spontaneous expression of attitudes toward Vector; however, due to the different characteristics of these platforms, one may expect that users will focus on different attributes.

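Table 1. Tag-set used in the Reddit study

| No. | Tag | Description |
|---|---|---|
| 1 | SE | description of emotional states |
| 2 | WA | joint activities |
| 3 | AU | the assignment of autonomy |
| 4 | PR | the assignment of preferences |
| 5 | OTHER | other manifestations of anthropomorphization |
| 6 | NONE | no anthropomorphization |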

We conducted two separate studies: one for the Reddit data and one for the YouTube data. The data are user-generated comments concerning Vector. For Reddit, they were retrieved from the appropriate subreddit channel. For YouTube, these were the main comments found under videos presenting Vector.

Both studies were performed with the same schema. First, data were retrieved from the source and the necessary data preparation was done. Next, manual annotation with the previously prepared tag-set was performed. After checking the annotation reliability, the final tags for all the comments were established, and the frequencies for categories from the tag-set were counted. The results from both studies are then compared and discussed.

Scripts for data retrieval, annotation guidelines, annotated comments, and the discussion of disagreements are available on the project's OSF web-page.⁷

Reddit language data: The data for analysis were collected from Reddit's channel "AnkiVector". The comments concerned Vector and were downloaded using the R language [10] and the package RedditExtractoR. For the purposes of the study, we scraped the first 10 pages of comments. This resulted in 1,405 comments overall (42,276 words, 230,664 characters).

We decided to exclude 38 comments from this data-set, as they were related only to discussions concerning the future of the Anki company and future support for its products (the thread "Kickstarter Update Email from Digital Dream Labs"). After a manual check, another 619 posts were excluded that appeared to be only a title with no content. These were posts that contained a photo or a video, and as such they were out of the scope of this text-oriented study. All the remaining 748 were read, which resulted in another 37 removed comments, for the following reasons: completely incomprehensible content of the post, a link to another group regarding Vector, and deleted comments (visible as [deleted]). Finally, 710 comments entered the final analysis. All the comments were left in the same order as they were published (their order was not randomized) because they referred to each other, and otherwise the meaning of the statements might be lost.
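The mechanical part of the exclusion procedure above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' pipeline (which used R and RedditExtractoR): the `RedditPost` record, its field names and the `has_media` flag are hypothetical. Rules that required reading a post (incomprehensible content, links to other groups) were applied manually in the study and are not modelled here.

```python
from dataclasses import dataclass


# Hypothetical record layout; the study itself retrieved posts with the
# R package RedditExtractoR, not with this code.
@dataclass
class RedditPost:
    thread: str              # title of the thread the post belongs to
    body: str                # text content (empty for title-only media posts)
    has_media: bool = False  # True if the post carries a photo or a video


# Thread excluded in the study because it only discussed Anki's future.
EXCLUDED_THREAD = "Kickstarter Update Email from Digital Dream Labs"


def is_analyzable(post: RedditPost) -> bool:
    """Apply the mechanical exclusion rules described in the text."""
    if post.thread == EXCLUDED_THREAD:
        return False
    if post.has_media and not post.body.strip():
        return False  # photo/video post consisting of a title only
    if post.body.strip() == "[deleted]":
        return False
    return True


def filter_posts(posts: list[RedditPost]) -> list[RedditPost]:
    # A list comprehension keeps the original publication order,
    # which the study deliberately did not randomize.
    return [p for p in posts if is_analyzable(p)]
```

Keeping the order is the design choice that matters here: Reddit comments reply to each other, so filtering must never shuffle the remaining posts.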

Tag-set: For the purposes of this study, the following tag-set was prepared. The tags used are the result of the literature review, as well as a first reading of Reddit comments before downloading them for analysis. The tags are aimed at grasping the comments revealing the anthropomorphization of Vector. As the study had an exploratory character, we focused on a short tag list in order to make the annotators' task easier. This tag-set is presented in Table 1.

The tags SE, AU, PR, and WA refer to the manifestations of anthropomorphization that directly result from the definition of social robots.

SE (description of emotional states) should be used for comments describing the emotional states that the robot has. This indicates that people ascribe emotional states to robots and that the robot is capable of showing feelings (or, more carefully, behaviors interpreted as such). Exemplary comments from this category are: "me and my vector robomancing. he seemed sad so i had to reassure."; "Can Vector get depressed?".

WA (description of joint human-robot activities) is to be used when comments regarding the joint activities of a robot with a human are identified, e.g., "Making pizza with my roboy." Such descriptions suggest that humans plan and undertake certain activities with the robot (which has a potential positive effect on maintaining the relationship with the robot).

AU (the assignment of autonomy to the robot) identifies comments regarding robot autonomy, i.e., ascribing to the robot the ability to make decisions and take actions based on its own beliefs and preferences. Examples: "Lol this lil asshole keeps knocking my hubby's phone off the desk and laughing about it."; "No regard for personal space.... My entire desk to use and i work in one corner and my lap so i won't disturb Vector".

PR (the assignment of preferences to the robot) is used for comments regarding robot preferences – for example: "Vector doesn't like traveling."; "Vector doesn't like smoking".

The OTHER and NONE tags are additional tags that refer, respectively, to other manifestations of anthropomorphization and to their absence (e.g., technical threads).

Annotation procedure and annotation reliability: Three annotators analyzed the comments. The annotators were guided by the annotation guide, which included a short introduction explaining the nature of the study and describing what Vector is. A description of each tag, along with exemplary comments, was then provided.

For the annotation process, a spreadsheet file was prepared in which each row was reserved for one comment. The columns were the following: nickname of the comment's author; comment; field for entering the tag; field for entering additional remarks. Annotators were asked to tag the entire comment with one tag only (the prevalent one). As for additional remarks, coders were especially asked to provide one for the tags OTHER and NONE – the additional explanation should identify the topic of the comment for further analyses.
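The one-row-per-comment sheet and its two constraints (a single tag from Table 1 per comment, and a remark for OTHER and NONE) can be expressed as a small data structure. The class name `SheetRow`, its `problems` check and the CSV export below are our own illustrative assumptions; the study used an ordinary spreadsheet, not this code.

```python
import csv
import io
from dataclasses import dataclass

# Tag-set of Table 1 (Reddit study).
TAGS = {"SE", "WA", "AU", "PR", "OTHER", "NONE"}
# Tags for which annotators were asked to add a remark naming the topic.
REMARK_REQUIRED = {"OTHER", "NONE"}


@dataclass
class SheetRow:
    nickname: str      # author of the comment
    comment: str       # full comment text (the annotation unit)
    tag: str = ""      # exactly one tag per comment
    remarks: str = ""  # free-text topic note

    def problems(self) -> list[str]:
        """Return a list of rule violations (empty if the row is valid)."""
        issues = []
        if self.tag not in TAGS:
            issues.append(f"unknown tag {self.tag!r}")
        if self.tag in REMARK_REQUIRED and not self.remarks.strip():
            issues.append("remark missing for OTHER/NONE")
        return issues


def to_csv(rows: list[SheetRow]) -> str:
    """Serialize rows with the four columns described in the text."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["nickname", "comment", "tag", "remarks"])
    for r in rows:
        writer.writerow([r.nickname, r.comment, r.tag, r.remarks])
    return buf.getvalue()
```

Storing the tag as a single string field (rather than a list) mirrors the one-tag-per-comment rule directly in the structure.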

Annotators' agreement was established with the use of R [10] and the irr package. The agreement rate for all three annotators is 75%, with a Fleiss' Kappa value of 0.509 (i.e., moderate agreement – see [14]). The level of agreement between annotators may be a result of two factors: firstly, the complexity and length of the task (the number of comments to read), and secondly, the requirement to use only one tag for the entire comment, which makes it difficult to decide which attitude is the most dominant (this is especially common for long, elaborate comments). Table 2 presents the summary of the agreement between the annotators. Only 26 (4%) comments were observed for which there was no agreement.
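For readers who want to see how the two reported figures are computed, here is a minimal Python sketch of Fleiss' kappa and of full (all-raters) percent agreement. It is a stand-in for, not a reproduction of, the R irr package used in the study; we assume that the reported agreement rate is the share of comments on which all three annotators chose the same tag.

```python
from collections import Counter


def full_agreement(ratings):
    """Share of items on which all raters chose the same tag."""
    return sum(len(set(item)) == 1 for item in ratings) / len(ratings)


def fleiss_kappa(ratings):
    """Fleiss' kappa for a fixed number of raters per item.

    ratings: list of items, each a list holding one tag per rater.
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    categories = sorted({tag for item in ratings for tag in item})
    # counts[i][c]: how many raters put item i into category c
    counts = [Counter(item) for item in ratings]
    # Observed agreement per item, averaged over all items
    p_bar = sum(
        (sum(n * n for n in cnt.values()) - n_raters) / (n_raters * (n_raters - 1))
        for cnt in counts
    ) / n_items
    # Chance agreement from the overall category proportions
    total = n_items * n_raters
    p_e = sum((sum(cnt[c] for cnt in counts) / total) ** 2 for c in categories)
    if p_e == 1:
        raise ValueError("kappa undefined: every rating is the same tag")
    return (p_bar - p_e) / (1 - p_e)
```

On a toy set of four comments rated by three annotators, `[["A","A","B"], ["A","A","A"], ["B","B","A"], ["B","B","B"]]`, full agreement is 0.5 and Fleiss' kappa is 1/3.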

On the basis of the three annotations, the final tag was assigned to each comment. For cases with two compatible annotations, the tag chosen by the two annotators was selected. For cases with three different annotations, the final tag was decided on the basis of a discussion between annotators. Table 3 shows the frequency of the tags. Interestingly, the majority of tags refer to the lack of manifestations of anthropomorphization in the analyzed data.
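The resolution rule (take the tag chosen by at least two of the three annotators, otherwise discuss) amounts to a strict-majority vote with a fallback. The sketch below is hedged: the function names are hypothetical, and comments without a majority are only flagged, since in the study their final tag came from a discussion between annotators.

```python
from collections import Counter


def resolve(tags):
    """Majority tag for one comment, or None if no tag has a strict majority."""
    tag, votes = Counter(tags).most_common(1)[0]
    return tag if votes > len(tags) / 2 else None


def final_tags(annotations):
    """Return resolved tags plus indices of comments that need discussion."""
    resolved, to_discuss = [], []
    for i, tags in enumerate(annotations):
        tag = resolve(tags)
        resolved.append(tag)
        if tag is None:
            to_discuss.append(i)
    return resolved, to_discuss
```

With three annotators, "strict majority" means at least two identical tags, which is exactly the two-compatible-annotations case described above.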

Vector anthropomorphization tags represent 20.70% of the entire sample analyzed. The most frequent attitude identified is the assignment of autonomy to Vector. It is followed by descriptions of Vector's emotional states and attributing preferences to it. Comments describing joint activities were very rare in the sample.⁸

As the tag OTHER was relatively frequent, we decided to analyze the additional explanations provided by annotators, with the aim of identifying categories that may be used as tag-set extensions. The most common topics pointed out by annotators were (i) human feelings toward Vector, (ii) referring to Vector as a pet, and (iii) identifying personality traits of Vector. On the basis of this finding, we decided to extend the initial tag-set (Table 1) with the following three tags:

(i) UC for comments about how a person feels toward Vector (e.g., "I love him sooo much!!").

(ii) ZD to annotate comments about treating Vector as if it were a pet ("This is android pets"; "Is this just to be cute? Like a cute pet? I think I want it cause I want a cat but this might be more worth it and easier to care for lol.").

(iii) PO for comments about treating Vector as if he had his own personality ("They have personalities and from what I can tell they all can be raised differently").

All the comments annotated in our study as OTHER were re-annotated (with the use of the additional explanations provided by annotators). Out of the 27 comments, 12 were related to human feelings toward Vector and thus annotated as UC; 7 were about treating Vector as if he were a domestic animal (i.e., ZD); and 4 concerned personality traits of Vector (PO). The 4 remaining comments were left with the initial OTHER tag, as they did not fit into any of the established categories.

YouTube language data: The data for analysis were retrieved from comments under three selected YouTube videos. They are listed in Table 4. The first video compares the Vector and Cozmo robots,⁹ the second video is a Vector user review, and the third one shows its most important features.

The data were retrieved with the use of the R programming language [10] and the package vosonSML. Overall, 1,925 comments were downloaded (respectively, 56 for the first video, 1,424 for the second, and 445 for the third one), and all of them entered further analysis.

YouTube tag-set: For the annotation, we used the tag-set from the Reddit study with three additional categories introduced on the basis of the OTHER tag analysis. The full tag-set is presented in Table 5.

Annotation procedure and annotation reliability: Two annotators participated in the data analysis. As in the case of the Reddit study, annotators were guided by the annotation guide. Analogously, a spreadsheet file was prepared with all the comments in separate rows.

The agreement of the two annotators was analyzed with the use of R [10] and the irr package. The agreement rate is 83.6%, with a Cohen's Kappa value of 0.45 (i.e., moderate agreement [14]). Table 6 provides a summary of the annotator agreements. 16% of comments were observed with no agreement. As in the previous study, the final tags for these cases were established via discussion between annotators.
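Cohen's kappa for the two YouTube annotators can be sketched the same way. This is an illustrative Python stand-in for the irr computation, not the study's code. It also shows one plausible reason why a high raw agreement (83.6%) can coexist with a moderate kappa (0.45): when one tag (here NONE) dominates, the chance agreement term `p_e` is large, which pulls kappa down.

```python
from collections import Counter


def cohen_kappa(a, b):
    """Cohen's kappa for two annotators labelling the same comments."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(a)
    # Observed agreement: share of comments with identical tags
    p_o = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each annotator's own tag frequencies
    ca, cb = Counter(a), Counter(b)
    p_e = sum(ca[t] * cb[t] for t in set(a) | set(b)) / (n * n)
    if p_e == 1:
        raise ValueError("kappa undefined: both annotators used one tag only")
    return (p_o - p_e) / (1 - p_e)
```

For example, with hypothetical labels for ten comments, `a = ["NONE"] * 8 + ["UC", "UC"]` and `b = ["NONE"] * 9 + ["UC"]`, raw agreement is 90%, but chance agreement is 0.74, so kappa is (0.90 - 0.74) / 0.26 ≈ 0.62.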

Table 7 shows the frequency of observed categories. As in the Reddit study, most tags indicate that no anthropomorphization was observed (NONE). Exemplary comments of this kind are: "I have a WEIRD question. Can it play music. Like Alexa"; "my dad literally reset my vectors data. I had him for 2 years and now all data lost".

Vector anthropomorphization tags account for 18.81% of the entire sample analyzed. The most common among them is the UC tag, denoting feelings of the human toward the robot, e.g., "it was adorable when it fell awww"; "nuu my heart sank when it fell xD"; "I love my vector more than life not even joking".

The second most visible category is ZD – i.e., referring to Vector as a pet. This may be observed in the following exemplary comments: "Is this just to be cute? Like a cute pet? I think I want it cause I want a cat but this might be more worth it and easier to care for lol."; "I want to buy that a pet robot"; "Being able to pet a robot is my life's dream".

The presented exploratory studies of Reddit and YouTube data allow for an interesting comparison. In both cases, we are dealing with attitudes toward Vector expressed in natural language. We believe that it is worth asking whether these sources differ in terms of the attitudes expressed. The intuition here is that the type of data source – textual vs. visual – matters for Vector's perception. Figure 2 shows the percentage of each tag's appearance in both studies (excluding the NONE tag).

One may easily notice that there are two major categories in the annotated data. The first one is AU, which denotes comments concerning Vector's autonomy. The second one is the UC tag, related to the description of human feelings toward the robot. AU constitutes the prevalent class in the Reddit data, whereas its share in the YouTube data is small (46.94% vs. 8.84%). In the YouTube data, it is UC that dominates the entire sample, while its share in the Reddit data is small (54.97% vs. 8.16%). It may also be observed that these classes really dominate the two analyzed samples.
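The percentages compared above are shares among the anthropomorphization tags only (NONE excluded, as in Figure 2). Assuming that this is how they were computed, a minimal Python sketch follows; the tag counts used in the usage note are illustrative, not the study's tables.

```python
def tag_shares(counts, exclude=("NONE",)):
    """Percentage of each tag among the non-excluded tags, rounded to 0.01."""
    kept = {tag: n for tag, n in counts.items() if tag not in exclude}
    total = sum(kept.values())
    if total == 0:
        return {}  # nothing left to compare once NONE is removed
    return {tag: round(100 * n / total, 2) for tag, n in kept.items()}
```

As a rough consistency check, 20.70% of the 710 Reddit comments is about 147 non-NONE comments, and 12 UC comments out of 147 gives `tag_shares({"UC": 12, "rest": 135})["UC"] == 8.16`, matching the Reddit UC share reported above (here `"rest"` is a hypothetical lump of all other non-NONE tags).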

Our exploratory analysis shows that the perception of Vector (as extracted from users' comments) differs between Reddit and YouTube. Several factors may be the reason for the observed difference.

First, Reddit users are more likely to actually own a Vector at home, which we can conclude from their detailed descriptions of Vector, their posting of photos, or their knowledge of its technical details. Owners are able to write more about Vector's functions or abilities, as they spend time with it. Daily activities together allow for gathering more experiences, and as a consequence, owners describe Vector from a broader perspective. Thus, the largest group of anthropomorphization-tagged comments in our Reddit sample refers to Vector's autonomy (observed in those daily activities). We think that in the case of YouTube, most users comment on what they see in a given video. The films lasted from 3 to 12 minutes, so viewers could only draw conclusions from the few minutes they watched. In the case of YouTube, the AU tag comprised 8.84%, making it the third most common tag. The videos briefly described Vector's abilities, hence the comments about the robot's autonomy, but these were not as popular as in the case of Reddit.

Second, this difference may be due to the nature of the two platforms. Long posts and comments are more common on Reddit. People share their problems and thoughts, and express themselves when they have time. The forum prompts users to enter it deliberately when they want to comment on or read something. YouTube is characterized by the opposite: comments are short, written immediately after viewing the recording or during it.

Due to both factors, comments expressing human feelings toward Vector appear to be the more natural ones on YouTube. Users watch the video and then comment that they like Vector. They think Vector is cute, so they immediately write a short comment of this form. We believe that the way in which a video presents Vector (light, music) also has an impact on how the robot is perceived, as these factors influence the general mood of a user. YouTube commenters assess Vector's functions and skills on the basis of what has been presented, so they describe the overall impression Vector made on them.

This tendency is also visible for other, less represented categories. Let us focus on assigning preferences to Vector (PR). This requires knowledge of and experience with the robot, as well as interactions with and observations of Vector in different situations. It is difficult to extract preferences from short videos about Vector. Among the YouTube comments, PR constitutes only 0.55% of observed categories, while on Reddit it is 13.61%.

We have presented an exploratory analysis of human attitudes toward the social robot Vector. One aim was to describe Vector in terms of categories referring to Vector's autonomy, preferences, emotions, etc. We used two non-laboratory data sources: Reddit and YouTube. Overall, 2,635 comments were manually annotated with a specially prepared tag-set. This allowed us to realize the second goal, i.e., to compare these sources with respect to Vector's image reflected in users' comments.

A general observation is that Vector is described in accordance with its social robot status and tends to evoke positive emotions. It is perceived as an autonomous agent capable of showing its preferences. Vector is also compared to a pet. We believe that the tag-set designed and tested for this study offers a good starting point for future studies focused on other social robots.

The results confirm the intuition that the source of the linguistic data is important for attitude research. One should bear in mind the characteristic features of such a data source and its target audience. Different sources may be used together, to grasp a more diverse picture, or separately, to compare different views. The presented study may therefore be extended with Vector references in Twitter threads, for example.

This brings us to the issue of the frequency of observed attitudes in our sample. As noted, the vast majority of tags in the sample were NONE. The percentage is similar for both sources: 81.19% for the YouTube comments and 79.30% for the Reddit comments. This suggests that one needs to gather large data samples for analysis of the presented type, especially if one would like to aim for a more fine-grained tag-set than the one we have used here.

Finally, we want to address certain limitations of the presented study. First of all, it is uncertain whether the beliefs expressed in the comments are actual beliefs. Users can simply comment on the material spontaneously or jokingly. The advantage, however, is easy access to linguistic data obtained in a non-laboratory manner. Statements that have actually been written in the wild are analyzed, not those that were created for the purpose of the study.

There is also a risk that the data obtained in the YouTube study refer not to the entire film, but only to fragments of it. Comments may contain statements that refer to a different situation in the video. The word "good" can refer to Vector or to the person presenting it, to the editing of the video, to the amusing situation depicted, or to some other element that does not involve the attitude toward the robot. We believe that the manual annotation process mitigates both of these risks.

The annotation procedure for this study focused on entire comments as basic annotation units. On the one hand, this makes the annotation procedure easier; on the other hand (especially for long, elaborate comments), it may lead to disagreements between annotators and result in lower agreement scores. We will address this in our future studies. The basic annotation unit will be decided by an annotator, and more than one attitude in one comment may be labeled this way. We should also involve more annotators and provide them with training before the main annotation task.
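Agreement between annotators is commonly summarized with Cohen's kappa (see, e.g., Viera and Garrett [14]). The sketch below is a minimal implementation for two annotators who each assign exactly one tag per comment; treating kappa as the agreement score here is an assumption, and the tag names in the usage example are illustrative rather than the study's tag-set.

```python
from collections import Counter


def cohens_kappa(a, b):
    """Cohen's kappa for two annotators' labels on the same items."""
    if len(a) != len(b) or not a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(a)
    # Observed agreement: share of items given the same tag by both annotators.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement: product of each tag's marginal frequencies, summed.
    ca, cb = Counter(a), Counter(b)
    expected = sum(ca[k] * cb[k] for k in ca) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical tag throughout; kappa is undefined,
        # and this sketch reports it as perfect agreement by convention.
        return 1.0
    return (observed - expected) / (1 - expected)
```

For example, `cohens_kappa(["NONE", "NONE", "POS", "NEG"], ["NONE", "POS", "POS", "NEG"])` gives 7/11 (about 0.64). When one tag such as NONE dominates, as in this sample, the chance-agreement term is large, so kappa can stay modest even when raw agreement is high.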

1 Steve Crowe, Anki, consumer robotics maker, shuts down, The Robot Report, https://www.therobotreport.com/anki-consumer-robotics-maker-shuts-down/

2 Steve Crowe, Anki addresses shutdown, ongoing support for robots, The Robot Report, https://www.therobotreport.com/anki-addresses-shutdown-ongoing-support-for-robots/

3 Original spelling is preserved in all the comments presented in this paper.

4 [r/AnkiVector, May 02, 2019] https://www.reddit.com/r/AnkiVector/comments/bjz5w5/really_interesting_how_that_small_robot_impact/

5 [r/AnkiVector, "My heart is breaking", May 03, 2019] https://www.reddit.com/r/AnkiVector/comments/bk36zv/my_heart_is_breaking/

6 For comparison, the human-likeness score from the ABOT database [8] is 96.95 (the maximum is 100) for Nadine, 92.6 for Geminoid, 78.88 for Sophia, 73.0 for Bina48, and 45.4 for Asimo, while the ABOT Predictor estimates this factor for Vector at 6.1 (see [11, p. 1801]).

7 https://osf.io/yfuzt/

8 We may hypothesize that this effect is partially due to the focus on the textual comments. We omitted pictures and video materials posted to Reddit, which, according to our observations, often present the aforementioned joint Vector-user activities.

9 Vector and Cozmo are visually very similar to each other; the differences lie in the programming solutions and the way you interact with them (https://ankicozmorobot.com/cozmo-vs-vector/, accessed 30.11.2022). Thus, we decided to use this video, since at the level of this material the differences are not apparent. What is more, the chance that a comment related to Cozmo would appear in the data was minimized by the manual annotation process.

Paweł Łupkowski∗ – Faculty of Psychology and Cognitive Science, Adam Mickiewicz University, Poznan, Szamarzewskiego 89/AB, 60-568 Poznan, e-mail: Pawel.Lupkowski@amu.edu.pl, www: https://plupkowski.wordpress.com/.

Olga Danilewicz – Faculty of Psychology and Cognitive Science, Adam Mickiewicz University, Poznan, Szamarzewskiego 89/AB, 60-568 Poznan, e-mail: o.danilewicz@gmail.com.

Dawid Ratajczyk – Faculty of Psychology and Cognitive Science, Adam Mickiewicz University, Poznan, Szamarzewskiego 89/AB, 60-568 Poznan, e-mail: Dawid.Ratajczyk@amu.edu.pl.

Aleksandra Wasielewska – Faculty of Psychology and Cognitive Science, Adam Mickiewicz University, Poznan, Szamarzewskiego 89/AB, 60-568 Poznan, e-mail: Aleksandra.Wasielewska@amu.edu.pl.

∗ Corresponding author

[1] C. Bartneck, E. Croft, and D. Kulic, “Measuring the anthropomorphism, animacy, likeability, perceived intelligence and perceived safety of robots”. In: Proceedings of the Metrics for Human-Robot Interaction Workshop in affiliation with the 3rd ACM/IEEE International Conference on Human-Robot Interaction (HRI 2008), 2008.

[2] C. Bartneck and J. Forlizzi, “A design-centred framework for social human-robot interaction”. In: RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No. 04TH8759), 2004, 591–594.

[3] C. Breazeal, “Toward sociable robots”, Robotics and Autonomous Systems, vol. 42, no. 3-4, 2003, 167–175.

[4] T. Fong, I. Nourbakhsh, and K. Dautenhahn, “A survey of socially interactive robots”, Robotics and Autonomous Systems, vol. 42, 2003, 143–166.

[5] Q. R. Hover, E. Velner, T. Beelen, M. Boon, and K. P. Truong, “Uncanny, sexy, and threatening robots: The online community’s attitude to and perceptions of robots varying in human likeness and gender”. In: Proceedings of the 2021 ACM/IEEE International Conference on Human-Robot Interaction, 2021, 119–128.

[6] T. Li, L. Lin, M. Choi, K. Fu, S. Gong, and J. Wang, “YouTube AV 50K: an annotated corpus for comments in autonomous vehicles”. In: 2018 International Joint Symposium on Artificial Intelligence and Natural Language Processing (iSAI-NLP), 2018, 1–5.

[7] M. Mori, “Bukimi no tani (the uncanny valley)”, Energy, vol. 7, no. 4, 1970, 33–35.

[8] E. Phillips, X. Zhao, D. Ullman, and B. F. Malle, “What is human-like?: Decomposing robots’ human-like appearance using the anthropomorphic robot (ABOT) database”. In: 2018 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI), 2018, 105–113.

[9] N. Piçarra, J.-C. Giger, G. Pochwatko, and J. Możaryn, “Designing social robots for interaction at work: socio-cognitive factors underlying intention to work with social robots”, Journal of Automation, Mobile Robotics and Intelligent Systems, vol. 10, 2016.

[10] R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria, 2013.

[11] D. Ratajczyk, “Shape of the uncanny valley and emotional attitudes toward robots assessed by an analysis of YouTube comments”, International Journal of Social Robotics, vol. 14, no. 8, 2022, 1787–1803.

[12] M. Strait, A. S. Ramos, V. Contreras, and N. Garcia, “Robots racialized in the likeness of marginalized social identities are subject to greater dehumanization than those racialized as white”. In: 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 2018, 452–457.

[13] M. K. Strait, C. Aguillon, V. Contreras, and N. Garcia, “The public’s perception of human-like robots: Online social commentary reflects an appearance-based uncanny valley, a general fear of a “technology takeover”, and the unabashed sexualization of female-gendered robots”. In: 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), 2017, 1418–1423.

[14] A. J. Viera and J. M. Garrett, “Understanding interobserver agreement: The kappa statistic”, Family Medicine, vol. 37, no. 5, 2005, 360–363.

[15] E. Vlachos and Z.-H. Tan, “Public perception of android robots: Indications from an analysis of YouTube comments”. In: 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, 1255–1260.

[16] C.-E. Yu, “Humanlike robots as employees in the hotel industry: Thematic content analysis of online reviews”, Journal of Hospitality Marketing & Management, vol. 29, no. 1, 2020, 22–38.

Submitted: 21st December 2021; accepted 24th February 2022

Sohrab Khanmohammadi, Mohammad Samadi

DOI: 10.14313/JAMRIS/2-2022/11

Abstract:

The application of quadcopters and intelligent learning techniques in urban monitoring systems can improve their flexibility and efficiency. This paper proposes a cloud-based urban monitoring system that uses deep learning, a fuzzy system, image processing, pattern recognition, and a Bayesian network. The main objectives of this system are to monitor climate status, temperature, humidity, and smoke, and to detect fire occurrences using these intelligent techniques. The quadcopter transmits the readings of its temperature, humidity, and smoke sensors, together with geographical coordinates, image frames, and videos, to a control station via RF communications. On the control station side, graphical monitoring tools show urban areas in RGB colors according to predetermined data ranges. The evaluation presents simulation results of the deep neural network applied to climate status and the effects of changes in sensor data on climate status. An illustrative example shows the simulated area drawn in RGB colors. Furthermore, the circuit on the quadcopter side is designed using electronic devices.

Keywords: urban monitoring, cloud computing, quadcopter, deep learning, fuzzy system, image processing, pattern recognition, Bayesian network, intelligent techniques, learning systems

Urban monitoring systems are essential application tools in today’s world, and the wide range of urban monitoring applications is evidence of growing interest in this field. These systems can be designed and implemented using various electromechanical devices such as sensors, off-the-shelf cameras, and microphones. For example, available parking can be tracked in a metropolitan area, and urban traffic can be controlled with monitoring tools. Existing urban monitoring solutions are primarily composed of static sensor deployments and predefined communication/software infrastructures. Therefore, they scale poorly and are vulnerable when performing their assigned tasks [1–3].

A quadcopter (also called a multirotor, quadrotor, or drone) is a simple flying electromechanical vehicle composed of four arms, each carrying a motor that drives a propeller. Two rotors turn clockwise, and the other two turn counterclockwise. A flight computer or controller can be used to convert the operator’s commands into the desired motion. Quadcopters can be equipped with various electromechanical devices (e.g., sensors and cameras) to gather data on phenomena in urban areas [4–6].

Quadcopters transmit large volumes of data (big data) to the monitoring servers. These data should be stored in a database so that various results can be derived from the reported information. Cloud computing addresses the primary challenges of handling such data with shared computing resources (e.g., computing, storage, and networking). The use of these resources has enabled impressive advances in big data processing [7–10].

Most existing urban monitoring systems [21–24] consist of stationary devices deployed in urban areas. This limits system flexibility and noticeably increases operational costs. Using a quadcopter together with cloud computing, image processing [11], and intelligent learning systems (such as deep learning [12, 13], deep neural networks [14], fuzzy decision making [15–18], pattern recognition [19], and Bayesian networks [20]) can considerably improve the efficiency of existing monitoring systems. This paper proposes a cloud-based urban monitoring system built on a quadcopter and intelligent learning systems. It uses the readings of the temperature, humidity, and smoke sensors to determine climate status via deep learning. The system uses geographic coordinates and sensing data to perform climate, temperature, humidity, and smoke monitoring with fuzzy systems, image processing, and RGB colors. Pattern recognition and Bayesian networks are also applied to detect fire occurrences over urban areas. The proposed system therefore has the potential to improve on existing urban monitoring systems.

Section 2 presents a literature review of existing urban monitoring systems. Section 3 describes the units and strategies of the proposed cloud-based urban monitoring system. Section 4 evaluates the proposed deep learning unit, the monitoring tools, and the detection of fire occurrences. Section 5 presents the circuit design of the quadcopter side using electronic devices. Finally, Section 6 concludes the paper.

Calabrese et al. [21] have described a real-time urban monitoring system using the Localizing and Handling Network Event Systems (LocHNESs) platform. The system was designed by Telecom Italia for the real-time analysis of urban dynamics based on anonymous monitoring of mobile cellular networks. The instantaneous positions of buses and taxis are used to provide information about urban mobility in real time. This system can be used to monitor a variety of phenomena in different regions of cities, from traffic conditions to the movements of pedestrians.

Abraham and Pandian [22] have presented a low-cost mobile urban environmental monitoring system based on off-the-shelf open-source hardware and software. They developed the system so that it can be installed in public transport vehicles, especially school and college buses, in various countries (e.g., India). Moreover, a pollution map of local and regional areas can be produced from the gathered data to raise awareness of urban pollution problems.

Lee et al. [23] have applied wireless sensor networks, Bluetooth sensors, and Zigbee transceivers to provide a low-cost and energy-saving urban mobility monitoring system. The Bluetooth sensor captures the MAC addresses of Bluetooth units embedded in car navigation systems and mobile devices. The Zigbee transceiver then transmits the gathered MAC addresses to a data center without relying on any existing communication infrastructure (e.g., 3G/4G networks).

Shaban et al. [24] have presented a monitoring and forecasting system for urban air pollution. This system uses low-cost air-quality monitoring motes equipped with gaseous and meteorological sensors. The modules receive and store the data, convert the data into useful information, predict pollutant levels from historical information, and finally disseminate the collected information via various channels (e.g., a mobile application). Moreover, three machine learning algorithms are used to construct accurate forecasting models.

The proposed monitoring system provides various monitoring features, including climate, temperature, humidity, and smoke monitors, and the detection of fire occurrences. First, a quadcopter flying over urban areas transmits temperature, humidity, smoke, geographical coordinate, image, and video data to a control station at specified time intervals. Then, the control station provides the above monitoring features by applying deep learning, fuzzy systems, image processing, pattern recognition, and Bayesian networks to the data transmitted from the quadcopter. It uses a software framework with graphical tools to manage the tasks created for monitoring purposes. This section describes the units and strategies of this system.

In the proposed model, a quadcopter flies over urban areas using the flight control system presented by Zhao and Go [25] to gather various environmental data, including temperature, humidity, smoke, geographical coordinates, images, and videos. The quadcopter is equipped with different sensors and electromechanical devices, such as a temperature sensor, a Global Positioning System (GPS) receiver, and a camera. It transmits the sensed data to a control station (e.g., a laptop) via RF communications at specified time intervals. The control station stores the received data in a MongoDB cloud database. Upon receiving data from the quadcopter, it calculates the climate status, monitors four environmental conditions with graphical tools, and detects fire occurrences based on the received data. The sensing range is (0, 80) °C for the temperature sensor, (0, 100) % RH for the humidity sensor, and (10, 10000) ppm for the smoke sensor. Note that some temperature sensors can report values below 0 °C and/or above 80 °C; the system model therefore considers only temperature readings in the range (0, 80) °C. Fig. 1 presents the schematic model of the proposed monitoring system.
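A minimal sketch of how the control station might discard readings outside the stated sensor ranges before further processing. The `Sample` record, its field names, the choice to drop (rather than clip) a sample, the use of inclusive bounds, and applying the check to humidity and smoke as well as temperature are all assumptions for illustration; the text only states the ranges and that out-of-range temperatures are not considered.

```python
from dataclasses import dataclass

# Sensor ranges from the system model; treating them as inclusive is an ASSUMPTION.
TEMP_RANGE = (0.0, 80.0)         # °C
HUMIDITY_RANGE = (0.0, 100.0)    # % RH
SMOKE_RANGE = (10.0, 10000.0)    # ppm


@dataclass
class Sample:
    """HYPOTHETICAL telemetry record sent by the quadcopter."""
    lat: float
    lon: float
    temperature: float
    humidity: float
    smoke: float


def within(value, bounds):
    """True if value lies between the lower and upper bound (inclusive)."""
    low, high = bounds
    return low <= value <= high


def is_valid(sample):
    """True if every reading lies inside its sensor's range."""
    return (within(sample.temperature, TEMP_RANGE)
            and within(sample.humidity, HUMIDITY_RANGE)
            and within(sample.smoke, SMOKE_RANGE))


def filter_samples(samples):
    """Drop samples that the system model does not consider."""
    return [s for s in samples if is_valid(s)]
```

Clipping an out-of-range reading to the nearest bound would be an alternative design; dropping the sample is closer to the text's statement that such readings are not considered.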

A deep neural network [26, 27] gives the proposed system its deep learning capability and allows the climate status to be determined precisely from the input data. In this unit, temperature, humidity, and smoke are the inputs, and climate status is the output.

Fig. 2 illustrates a deep neural network that calculates the quantitative value of the climate status in the range (0, 100) %. Each neuron uses a perceptron function [28] to pass a value to the next layer based on the values received from the previous layer:

$$y = \sum_{i=1}^{n} w_i x_i + b$$

where n is the number of neurons in the previous layer, $x_i$ and $w_i$ are their outputs and connection weights, and b is the bias. The weights between neurons in subsequent layers are determined from experimental results and human experience.
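The perceptron-style computation described above can be sketched as a linear neuron (weighted sum plus bias) applied to the normalized sensor readings. This is a minimal sketch, assuming, as Eq. (2) later in this section implies, that the hidden layers pass the normalized inputs through unchanged, so only the output neuron is modeled. The sensor maxima come from the system model (80 °C, 100 % RH, 10000 ppm); the equal weights and zero bias are hypothetical placeholders, since the trained values are not given.

```python
# Maximum readings of each sensor (upper ends of the ranges in the system model).
MAX_T, MAX_H, MAX_S = 80.0, 100.0, 10000.0


def perceptron(inputs, weights, bias):
    """Linear perceptron-style neuron: weighted sum of the inputs plus a bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def climate_status(t, h, s, weights=(1 / 3, 1 / 3, 1 / 3), bias=0.0):
    """Climate status in percent; the default weights and bias are HYPOTHETICAL."""
    normalized = (t / MAX_T, h / MAX_H, s / MAX_S)  # input-layer neurons
    return perceptron(normalized, weights, bias) * 100.0
```

With these placeholder weights, `climate_status(30, 50, 4000)` returns about 42.5, and readings at all three sensor maxima give 100.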

The value of climate status can be calculated as follows:

$$CS = x_{t} \times 100 = \left(x_{21} w_{31} + x_{22} w_{32} + x_{23} w_{33} + b_3\right) \times 100$$

where the weights $w_{ij}$ are determined by the learning process of the neural network.

Note that the normalized value of each neuron in the input layer is determined as

$$x_T = \frac{T}{Max_T}, \qquad x_H = \frac{H}{Max_H}, \qquad x_S = \frac{S}{Max_S}$$

Consequently, climate status is calculated as

$$CS = \left(w_{31} \frac{T}{Max_T} + w_{32} \frac{H}{Max_H} + w_{33} \frac{S}{Max_S} + b_3\right) \times 100 \qquad (2)$$

where T is the temperature reading, $Max_T$ is the maximum sensing value of the temperature sensor, H is the humidity reading, $Max_H$ is the maximum sensing value of the humidity sensor, S is the smoke reading, $Max_S$ is the maximum sensing value of the smoke sensor, $w_{3j}$ are the weights of the output layer, and $b_3$ is the bias. As the above calculations show, the weights and biases are chosen so that the complexity of all equations is noticeably reduced. A low climate status indicates a favorable environmental condition; otherwise, the environmental condition is critical.

The monitoring system consists of four monitoring tools: a climate monitor, a temperature monitor, a humidity monitor, and a smoke monitor. The monitoring results are based on the data transmitted by the quadcopter and the geographical coordinates. The climate monitor is drawn for regions of the urban area, while the other monitors work on individual points of the area through a point-to-point process.

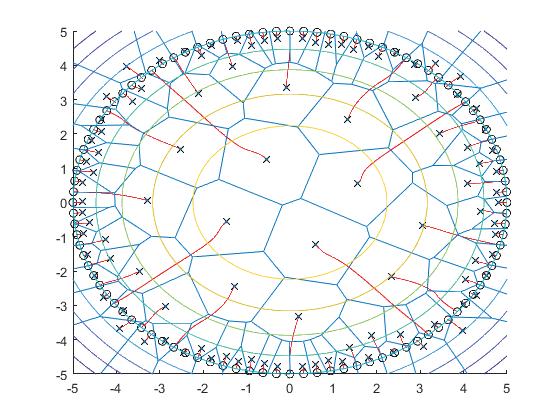

Climate monitors are essential tools in urban management systems because they can depict the climate conditions of all locations. The climate monitor of the proposed system works on regions of the map: the whole map is divided into regions so that each region has a single climate status. First, a single status is determined for each region by the proposed fuzzy system. Then, every region is shown in an RGB color, based on that status, through an image processing phase. In this system, each region measures 100 × 100 points. The input variables of the fuzzy system are “Number of repeats” and “Distance to mean,” and the output variable is “Selection rate,” as illustrated in Fig. 3. The universe of discourse for “Number of repeats” and “Distance to mean” is {0, 2500, 5000, 7500, 10000}, while the universe of discourse for “Selection rate” is {0, 25, 50, 75, 100}. The linguistic terms of “Number of repeats” are {“Few”, “Normal”, “Many”}, those of “Distance to mean” are {“Near”, “Mediocre”, “Far”}, and those of “Selection rate” are {“Low”, “Medium”, “High”}. The membership functions of the inputs are triangular, and those of the output are bell-shaped [29]. Moreover, inference over the rule base is performed by a Mamdani-type fuzzy system [30]. Table 1 presents the IF-THEN rules of the fuzzy system.
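The fuzzification step for these variables can be sketched with the standard triangular function and the generalized bell function 1/(1 + |(x − c)/a|^(2b)), whose parameters match the center, width, and slope description used in this section. The linguistic terms and universes come from the text; every numeric membership parameter below is hypothetical, because the paper does not list them here.

```python
def triangular(x, a, m, b):
    """Triangular MF: lower limit a, center m, upper limit b (a < m < b)."""
    if x <= a or x >= b:
        return 0.0
    if x <= m:
        return (x - a) / (m - a)
    return (b - x) / (b - m)


def bell(x, a, b, c):
    """Generalized bell MF: center c, width a, slope b at the crossover points."""
    return 1.0 / (1.0 + abs((x - c) / a) ** (2 * b))


# HYPOTHETICAL parameters. Shoulder triangles extend past the universe
# [0, 10000] so that the end points get full membership.
REPEATS_TERMS = {"Few": (-5000, 0, 5000), "Normal": (0, 5000, 10000),
                 "Many": (5000, 10000, 15000)}
DISTANCE_TERMS = {"Near": (-5000, 0, 5000), "Mediocre": (0, 5000, 10000),
                  "Far": (5000, 10000, 15000)}
# Output terms on [0, 100], given as (width a, slope b, center c).
RATE_TERMS = {"Low": (25, 2, 0), "Medium": (25, 2, 50), "High": (25, 2, 100)}


def fuzzify(x, terms):
    """Membership degree of a crisp input in each triangular linguistic term."""
    return {name: triangular(x, *params) for name, params in terms.items()}


def rate_memberships(z):
    """Membership degree of an output value z in each bell-shaped term."""
    return {name: bell(z, *params) for name, params in RATE_TERMS.items()}
```

For example, `fuzzify(2500, REPEATS_TERMS)` assigns a degree of 0.5 to both “Few” and “Normal” and 0 to “Many”.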

Fuzzification converts real numbers as well as linguistic terms into fuzzy sets; that is, it specifies the membership functions (MFs) of the inputs and the output. The triangular function is calculated as follows:

$$\mu(x) = \begin{cases} 0, & x \le a \\ \dfrac{x - a}{m - a}, & a < x \le m \\ \dfrac{b - x}{b - m}, & m < x < b \\ 0, & x \ge b \end{cases}$$

where x is a member of the universe of discourse, a is the lower limit, b is the upper limit, and m is the center of the triangle. The bell-shaped function is determined as follows:

$$\mu(x) = \frac{1}{1 + \left| \dfrac{x - c}{a} \right|^{2b}}$$

where x is a member of the universe of discourse, c and a adjust the center and width of the MF, and b determines the slope at the crossover points.

The relation between the inputs and the output of the proposed fuzzy system is expressed by the set of IF-THEN fuzzy rules listed in Table 1.

As mentioned before, the inference engine of the proposed fuzzy system is of Mamdani type. It operates on the given rule base as follows:

$$R_j: \text{IF } x \text{ is } A_j \text{ AND } y \text{ is } B_j \text{ THEN } z \text{ is } C_j, \qquad R = \bigcup_{j=1}^{m} R_j, \qquad C' = (A' \times B') \circ R$$

where $A_j$, $B_j$, and $C_j$ are the MFs of the inputs and the output used in the fuzzy rules, R is the union of all fuzzy rules, $R_j$ is a fuzzy rule in the rule base, m is the number of fuzzy rules, A’ and B’ are the membership grades of the two inputs derived from the input parameters, and C’ is the membership grade of the output determined from the inputs’ values.

The center-of-gravity method is used to obtain the crisp value of the fuzzy output:

$$z^{*} = \frac{\sum_{i=1}^{p} x_i \, \mu_T(x_i)}{\sum_{i=1}^{p} \mu_T(x_i)}$$

where $x_i$ is a member of the output universe of discourse, $\mu_T(x_i)$ is the membership degree of $x_i$, and p is the number of members in the output universe of discourse [31–37].

Algorithm 1 shows how an appropriate climate status is selected for each region. Lines 1 to 4 define the initial variables of the procedure. Line 8 calculates the average climate status of each region from the values of CS. Line 9 determines the number of repeats and line 10 the distance to the mean of all climate statuses in the current region, in the range [0, 100]. Afterward, line 11 calculates the selection rate of the climate statuses using the fuzzy system. Finally, lines 12 to 14 select an appropriate climate status, set the statuses of all points to the selected status, and depict the whole region in an RGB color based on that status.

Table 2 lists the four RGB colors defined for climate statuses. All climate statuses are grouped into four categories, and each region is drawn, through image processing, in the RGB color of the category of its selected status.

Temperature, humidity, and smoke monitors are the other tools of the proposed monitoring system; they operate on the sensing data of the quadcopter’s sensors. They follow a point-to-point process that draws each point on the map using image processing techniques. Table 3 shows how an appropriate RGB color is selected for every point based on the temperature, humidity, and smoke readings.

Algorithm 1. Climate monitor of the urban areas

1: L ← Length of the area

2: W ← Width of the area

3: CS[1..L][1..W] ← Climate status of all the points

4: i ← 1

5: While (i < L){

6: j ← 1

7: While (j < W){

8: Avg ← Average climate status of CS[i..i+99][j..j+99]

9: NR[1..100] ← Number of repeats

10: DM[1..100] ← Distance to mean

11: SR[1..100] ← Selection rate

12: Select the climate status with the highest selection rate

13: Set all points of the current region to the selected status

14: Depict the region by the RGB color associated with the selected status

15: j ← j + 100

16: }

17: i ← i + 100

18: }
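Algorithm 1 and the fuzzy machinery above can be combined into the following sketch: Mamdani min-max inference with center-of-gravity defuzzification over the output universe {0, 25, 50, 75, 100}, then per-region selection of the status with the highest selection rate. Several pieces are hypothetical and stated as such: the MF parameters; the rule base (Table 1 is not reproduced in this text); computing the distance to the mean as a squared distance so that it spans the [0, 10000] universe; considering only statuses that actually occur in the region; and the four RGB bands standing in for Table 2. Indices are 0-based, unlike the 1-based listing.

```python
from collections import Counter


def tri(x, a, m, b):
    """Triangular MF with lower limit a, center m, upper limit b (a < m < b)."""
    if x <= a or x >= b:
        return 0.0
    return (x - a) / (m - a) if x <= m else (b - x) / (b - m)


def bell(x, a, b, c):
    """Generalized bell MF: center c, width a, slope b."""
    return 1.0 / (1.0 + abs((x - c) / a) ** (2 * b))


# HYPOTHETICAL MF parameters and rule base (Table 1 is not reproduced here).
REPEATS = {"Few": (-5000, 0, 5000), "Normal": (0, 5000, 10000), "Many": (5000, 10000, 15000)}
DISTANCE = {"Near": (-5000, 0, 5000), "Mediocre": (0, 5000, 10000), "Far": (5000, 10000, 15000)}
RATE_CENTERS = {"Low": 0, "Medium": 50, "High": 100}  # bell width 25, slope 2
RULES = {
    ("Few", "Near"): "Medium", ("Few", "Mediocre"): "Low", ("Few", "Far"): "Low",
    ("Normal", "Near"): "High", ("Normal", "Mediocre"): "Medium", ("Normal", "Far"): "Low",
    ("Many", "Near"): "High", ("Many", "Mediocre"): "High", ("Many", "Far"): "Medium",
}
OUTPUT_UNIVERSE = [0, 25, 50, 75, 100]
# HYPOTHETICAL stand-in for Table 2: (exclusive upper bound of band, RGB color).
BANDS = [(25, (0, 176, 80)), (50, (255, 255, 0)), (75, (255, 165, 0)), (101, (255, 0, 0))]


def selection_rate(repeats, distance):
    """Mamdani min-max inference, then center-of-gravity defuzzification."""
    mu_r = {k: tri(repeats, *p) for k, p in REPEATS.items()}
    mu_d = {k: tri(distance, *p) for k, p in DISTANCE.items()}
    aggregated = [
        # Clip each rule's output MF at its firing strength (min); combine rules with max.
        max(min(mu_r[r], mu_d[d], bell(z, 25, 2, RATE_CENTERS[out]))
            for (r, d), out in RULES.items())
        for z in OUTPUT_UNIVERSE
    ]
    return sum(z * mu for z, mu in zip(OUTPUT_UNIVERSE, aggregated)) / sum(aggregated)


def region_status(statuses):
    """Lines 8-12: pick the status with the highest selection rate in one region."""
    avg = sum(statuses) / len(statuses)
    counts = Counter(statuses)
    # ASSUMPTION: squared distance, so it spans the [0, 10000] universe.
    rates = {v: selection_rate(n, (v - avg) ** 2) for v, n in counts.items()}
    return max(sorted(rates), key=rates.get)


def region_color(status):
    """Line 14: RGB color of the band that contains the selected status."""
    return next(rgb for upper, rgb in BANDS if status < upper)


def climate_monitor(cs, size=100):
    """Algorithm 1: set one status per size x size region (in place); return colors."""
    colors = {}
    for i in range(0, len(cs), size):
        for j in range(0, len(cs[0]), size):
            block = [v for row in cs[i:i + size] for v in row[j:j + size]]
            status = region_status(block)
            for row in cs[i:i + size]:
                row[j:j + size] = [status] * len(row[j:j + size])
            colors[(i, j)] = region_color(status)
    return colors
```

The `size` parameter defaults to the 100 × 100 regions used in this system; with such regions, the repeat counts span the full [0, 10000] universe of “Number of repeats”.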

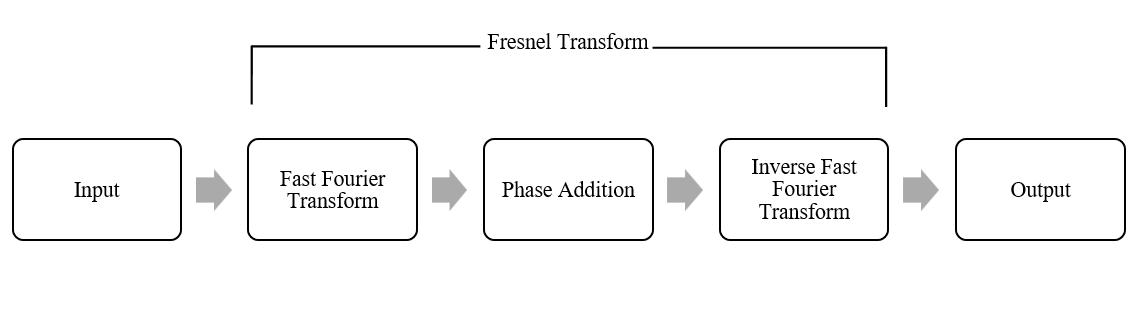

The proposed system applies the fire detection algorithm presented by Ko et al. [38], which uses irregular patterns of flames and a hierarchical Bayesian network. Fig. 4 shows the elements and steps of this detection algorithm. The overall system is divided into two main steps: a pre-processing step and a fire verification step. In the pre-processing step, the input image is analyzed using adaptive background subtraction and color probability models. The input image can be obtained from the image and/or video data transmitted by the quadcopter.

Afterward, if candidate pixels are selected, they are forwarded to the next step; otherwise, the current run of the system terminates. In the fire verification step, skewness estimation uses four fire features to evaluate the pixels of the input image. Then, the probability density is estimated from the skewness, and fire pixels are verified by a Bayesian network. If the probability is greater than a predefined threshold, a fire occurrence is detected; otherwise, the candidate is rejected.
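The two steps can be illustrated with a deliberately simplified sketch: a running-average background model for candidate selection, the sample skewness of a feature's time series at a candidate pixel, and a naive Bayes posterior compared with a threshold. This is not Ko et al.'s hierarchical Bayesian network; the naive Bayes combination, the Gaussian class-conditional densities, the four features being abstract per-pixel time series, and all parameter values (alpha, tau, class means, prior, threshold) are hypothetical. Frames are grayscale intensity grids given as nested lists.

```python
import math


def update_background(bg, frame, alpha=0.05):
    """Running-average background per pixel: B <- (1 - alpha) * B + alpha * I."""
    return [[(1 - alpha) * b + alpha * f for b, f in zip(br, fr)]
            for br, fr in zip(bg, frame)]


def candidate_mask(bg, frame, tau=30.0):
    """Pixels differing from the background by more than tau become candidates."""
    return [[abs(f - b) > tau for b, f in zip(br, fr)] for br, fr in zip(bg, frame)]


def skewness(xs):
    """Sample skewness (biased Fisher-Pearson): m3 / m2 ** 1.5."""
    n = len(xs)
    mean = sum(xs) / n
    m2 = sum((x - mean) ** 2 for x in xs) / n
    m3 = sum((x - mean) ** 3 for x in xs) / n
    return 0.0 if m2 == 0 else m3 / m2 ** 1.5


def gaussian(x, mu, sigma):
    """Normal probability density."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


# HYPOTHETICAL (mean, std) of each feature's skewness for fire / non-fire pixels.
FIRE = [(1.0, 0.5)] * 4
NON_FIRE = [(0.0, 0.5)] * 4


def fire_probability(skews, prior_fire=0.1):
    """Naive Bayes posterior P(fire | four skewness values)."""
    like_f, like_n = prior_fire, 1.0 - prior_fire
    for s, (mf, sf), (mn, sn) in zip(skews, FIRE, NON_FIRE):
        like_f *= gaussian(s, mf, sf)
        like_n *= gaussian(s, mn, sn)
    return like_f / (like_f + like_n)


def is_fire(skews, threshold=0.5):
    """Detect fire when the posterior exceeds the predefined threshold."""
    return fire_probability(skews) > threshold
```

The threshold trades false alarms against missed fires; in practice it and the class-conditional parameters would be tuned on labeled video sequences.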

This section evaluates the efficiency of several units of the proposed monitoring system: deep learning, the monitors, and pattern recognition with Bayesian networks. Simulation results are obtained according to the proposed system model; however, some simulation parameters are changed in certain cases to show the differences between scenarios.

Fig. 5 shows an example of the simulation results for the proposed deep neural network. The results are obtained for 2,500 points with temperature, humidity, and smoke readings, and the climate status is calculated for these points from the sensor readings. At most points, the climate status is critical because the temperature, humidity, and smoke readings are high.

Fig. 6 illustrates the effects of changes in temperature, humidity, and smoke on the climate status in the deep learning unit. The default values of the temperature, humidity, and smoke sensors are 30 °C, 50% RH, and 4000 ppm, respectively. As the simulation results indicate, the climate status changes regularly (linearly) with each sensor, because the effects of the temperature, humidity, and smoke readings on climate status are linear. Since the default sensor values are not very low, the minimum climate status is 25.
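The linear behavior can be checked by sweeping one sensor at a time through Eq. (2) while holding the other two at the default values stated above (30 °C, 50% RH, 4000 ppm). The equal weights and zero bias are hypothetical placeholders, since the trained values are not listed; only the sensor maxima and defaults come from the text.

```python
MAX = {"temperature": 80.0, "humidity": 100.0, "smoke": 10000.0}
DEFAULTS = {"temperature": 30.0, "humidity": 50.0, "smoke": 4000.0}
WEIGHTS = {"temperature": 1 / 3, "humidity": 1 / 3, "smoke": 1 / 3}  # HYPOTHETICAL
BIAS = 0.0  # HYPOTHETICAL


def climate_status(readings):
    """Eq. (2): CS = (sum of w * reading / max + b) * 100."""
    return (sum(WEIGHTS[k] * readings[k] / MAX[k] for k in MAX) + BIAS) * 100.0


def sweep(sensor, values):
    """Vary one sensor while the other two stay at their default values."""
    curve = []
    for v in values:
        readings = dict(DEFAULTS, **{sensor: v})
        curve.append((v, climate_status(readings)))
    return curve
```

Because each term of Eq. (2) is linear in its reading, equal steps in one sensor produce equal steps in climate status, which is why every curve is a straight line; only the slopes differ, through the weights and sensor maxima.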

Fig. 7 illustrates an example of the simulation results produced by the temperature, humidity, and smoke monitors. The simulation is conducted in an urban area of 1500 × 500 points. It considers only 20 instances of temperature, humidity, and smoke data transmitted from the quadcopter and stored in the MongoDB database. Each instance is painted in an RGB color according to the descriptions in Table 3. In most cases, the simulation results indicate that the temperature is hot, the humidity is medium, and the smoke density is high.

As described before, the pattern recognition and Bayesian network unit uses the fire detection algorithm presented by Ko et al. [38]. This algorithm is compared with Töreyin’s method [39] and Ko’s method [40] in terms of true positive rate and detection speed, as shown in Table 4. The comparison shows that, in most video sequences, the true positive rate of the adopted algorithm is higher than those of the other methods. In addition, its average number of frames processed per second is higher than those of Töreyin’s and Ko’s methods. Therefore, the fire detection process applied in the proposed system performs better than the two compared methods in most cases.

The quadcopter is composed of various electromechanical devices to achieve the predefined goals. Fig. 8 depicts the main electronic devices, especially the sensors, of the quadcopter-side circuit, which was designed and simulated in Proteus Pro 8.4 SP0 Build 21079. An ATmega32 microcontroller is the main controller that manages the input and output devices. All sensors are connected to the microcontroller via port A: the LM35DZ temperature sensor to pin 0, the SHT11 humidity sensor to pins 1 and 2, and the MQ-2 gas sensor to pin 3.
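On the quadcopter side, the LM35DZ output voltage read on an analog pin of port A must be converted to degrees Celsius. The sketch below shows that arithmetic in Python as host-side illustration rather than firmware. It assumes the ATmega32's 10-bit ADC with a 5 V reference (the text does not state the reference voltage) and the LM35's nominal scale factor of 10 mV per °C. The SHT11 is a digital sensor read over its two-wire interface, and the MQ-2 requires a calibration curve, so neither is covered here.

```python
ADC_BITS = 10          # ATmega32 ADC resolution
V_REF = 5.0            # ASSUMED ADC reference voltage (AVCC = 5 V)
LM35_V_PER_C = 0.010   # LM35 nominal scale factor: 10 mV per °C


def adc_to_volts(raw):
    """Convert a raw ADC count to volts: V = raw * V_REF / 2**ADC_BITS."""
    if not 0 <= raw < 2 ** ADC_BITS:
        raise ValueError(f"ADC reading out of range: {raw}")
    return raw * V_REF / 2 ** ADC_BITS


def lm35_celsius(raw):
    """Temperature in °C from an LM35DZ reading on an ADC channel of port A."""
    return adc_to_volts(raw) / LM35_V_PER_C
```

With these assumptions, a raw reading of 62 corresponds to about 30.3 °C, and each ADC step is roughly 0.49 °C, which is coarse but adequate for the (0, 80) °C range used by the system model.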

This paper proposed a cloud-based urban monitoring system that uses a quadcopter and several intelligent learning systems. The quadcopter uses temperature, humidity, and smoke sensors, together with a camera and a Global Positioning System (GPS) receiver, to transmit environmental data to a control station. All data are stored in a MongoDB cloud database to keep a record of the environmental conditions of the urban areas. The system is composed of several units, including deep learning, fuzzy systems, image processing, pattern recognition, and Bayesian networks. The deep learning unit calculates the climate status from the readings of all the sensors, using a deep neural network with predefined weights. The system controls four monitors that graphically illustrate the conditions of the urban areas: the climate monitor, temperature monitor, humidity monitor, and smoke monitor. The climate monitor is built from a fuzzy system, image processing techniques, and RGB colors. The fuzzy system has two inputs, “number of repeats” and “distance to mean”, and one output, “selection rate”. In contrast, the other monitors use only image processing techniques and RGB colors to show the temperature, humidity, and smoke data graphically. Finally, the pattern recognition and Bayesian network unit detects fire occurrences using irregular patterns of flames and a hierarchical Bayesian network.

The evaluation was based on simulation results for the deep learning unit, all the graphical monitors, and the pattern recognition and Bayesian network unit. The algorithm of the last unit was compared with existing fire detection algorithms in terms of true positive rate and detection speed, and it performed better in most of the tested cases. The main electronic circuit of the quadcopter side was designed and simulated in Proteus Pro 8.4 SP0 Build 21079.

Sohrab Khanmohammadi – Faculty of Electrical and Computer Engineering, University of Tabriz, Tabriz, Iran

Mohammad Samadi* – Polytechnic Institute of Porto, Portugal, Email: mmasa@isep.ipp.pt

[1] U. Lee, B. Zhou, M. Gerla, E. Magistretti, P. Bellavista, A. Corradi, “Mobeyes: smart mobs for urban monitoring with a vehicular sensor network,” IEEE Wireless Communications, vol. 13, no. 5, 2006, pp. 52–57. DOI: 10.1109/WC-M.2006.250358

[2] P. J. Urban, G. Vall-Llosera, E. Medeiros, S. Dahlfort, “Fiber plant manager: An OTDR-and OTM-based PON monitoring system,” IEEE Communications Magazine, vol. 51, no. 2, 2013, pp. S9–S15. DOI: 10.1109/MCOM.2013.6461183

[3] A. del Amo, A. Martínez-Gracia, A. A. Bayod-Rújula, J. Antoñanzas, “An innovative urban energy system constituted by a photovoltaic/thermal hybrid solar installation: Design, simulation and monitoring,” Applied Energy, vol. 186, 2017, pp. 140–51. DOI: 10.1016/j.apenergy.2016.07.011

[4] I. Sa, P. Corke, “Vertical infrastructure inspection using a quadcopter and shared autonomy control,” Field and Service Robotics, Springer, 2014, pp. 219–32. DOI: 10.1007/978-3-642-40686-7_15

[5] X. Song, K. Mann, E. Allison, S.-C. Yoon, H. Hila, A. Muller, C. Gieder, “A quadcopter controlled by brain concentration and eye blink,” Proceedings of the IEEE Signal Processing in Medicine and Biology Symposium (SPMB), Philadelphia, PA, 3 Dec. 2016, pp. 1–4. DOI: 10.1109/SPMB.2016.7846875

[6] D. E. Chang, Y. Eun, “Global Chartwise Feedback Linearization of the Quadcopter with a Thrust Positivity Preserving Dynamic Extension,” IEEE Transactions on Automatic Control, vol. 62, no. 9, 2017, pp. 4747–52.

[7] C. Yang, Q. Huang, Z. Li, K. Liu, F. Hu, “Big Data and cloud computing: innovation opportunities and challenges,” International Journal of Digital Earth, vol. 10, no. 1, 2017, pp. 13–53.

[8] M. Sookhak, A. Gani, M. K. Khan, R. Buyya, “Dynamic remote data auditing for securing big data storage in cloud computing,” Information Sciences, vol. 380, 2017, pp. 101–16. DOI: 10.1016/j.ins.2015.09.004

[9] Y. Zhang, M. Qiu, C.-W. Tsai, M. M. Hassan, A. Alamri, “Health-CPS: Healthcare cyber-physical system assisted by cloud and big data,” IEEE Systems Journal, vol. 11, no. 1, 2017, pp. 88–95. DOI: 10.1109/JSYST.2015.2460747

[10] J. L. Schnase, D. Q. Duffy, G. S. Tamkin, D. Nadeau, J. H. Thompson, C. M. Grieg, M. A. McInerney, W. P. Webster, “MERRA analytic services: meeting the big data challenges of climate science through cloud-enabled climate analytics-as-a-service,” Computers, Environment and Urban Systems, vol. 61, 2017, pp. 198–211. DOI: 10.1016/j.compenvurbsys.2013.12.003

[11] J. Nakamura, Image Sensors and Signal Processing for Digital Still Cameras, CRC Press, Boca Raton, 2016.

[12] Y. LeCun, Y. Bengio, G. Hinton, “Deep learning”, Nature, vol. 521, no. 7553, 2015, pp. 436–44. DOI: 10.1038/nature14539

[13] I. Goodfellow, Y. Bengio, A. Courville, Deep Learning, MIT Press, 2016.

[14] W. Samek, A. Binder, G. Montavon, S. Lapuschkin, K.-R. Müller, “Evaluating the visualization of what a deep neural network has learned,” IEEE Transactions on Neural Networks and Learning Systems, vol. PP, no. 99, 2016, pp. 1–14. DOI: 10.1109/TNNLS.2016.2599820

[15] F. J. Cabrerizo, F. Chiclana, R. Al-Hmouz, A. Morfeq, A. S. Balamash, E. Herrera-Viedma, “Fuzzy decision making and consensus: challenges,” Journal of Intelligent & Fuzzy Systems, vol. 29, no. 3, 2015, pp. 1109–1118. DOI: 10.3233/IFS-151719

[16] N. A. Korenevskiy, “Application of fuzzy logic for decision-making in medical expert systems,” Biomedical Engineering, vol. 49, no. 1, 2015, pp. 46–49. DOI: 10.1007/s10527-015-9494-x

[17] T. Runkler, S. Coupland, R. John, “Interval type-2 fuzzy decision making,” International Journal of Approximate Reasoning, vol. 80, 2017, pp. 217–224. DOI: 10.1016/j.ijar.2016.09.007

[18] Z. Hao, Z. Xu, H. Zhao, R. Zhang, “Novel intuitionistic fuzzy decision making models in the framework of decision field theory,” Information Fusion, vol. 33, 2017, pp. 57–70. DOI: 10.1016/j.inffus.2016.05.001

[19] L. Devroye, L. Györfi, G. Lugosi, A probabilistic theory of pattern recognition, vol. 31, Springer Science & Business Media, Berlin, 2013.

[20] C. Bielza, P. Larrañaga, “Discrete Bayesian network classifiers: a survey,” ACM Computing Surveys (CSUR), vol. 47, no. 1, 2014, Article No. 5. DOI: 10.1145/2576868

[21] F. Calabrese, M. Colonna, P. Lovisolo, D. Parata, C. Ratti, “Real-time urban monitoring using cell phones: A case study in Rome,” IEEE Transactions on Intelligent Transportation Systems, vol. 12, no. 1, 2011, pp. 141–151. DOI: 10.1109/TITS.2010.2074196

[22] K. Abraham, S. Pandian, “A low-cost mobile urban environmental monitoring system,” Proceedings of the 4th IEEE International Conference on Intelligent Systems Modelling & Simulation (ISMS), Bangkok, Thailand, 29-31 Jan. 2013, pp. 659–664. DOI: 10.1109/ISMS.2013.76

[23] J. Lee, Z. Zhong, B. Du, S. Gutesa, K. Kim, “Low-cost and energy-saving wireless sensor network for real-time urban mobility monitoring system,” Journal of Sensors, vol. 2015, 2015, pp. 1–8. DOI: 10.1155/2015/685786

[24] K. B. Shaban, A. Kadri, E. Rezk, “Urban air pollution monitoring system with forecasting models,” IEEE Sensors Journal, vol. 16, no. 8, 2016, pp. 2598–2606. DOI: 10.1109/JSEN.2016.2514378

[25] W. Zhao, T. H. Go, “Quadcopter formation flight control combining MPC and robust feedback linearization,” Journal of the Franklin Institute, vol. 351, no. 3, 2014, pp. 1335–1355. DOI: 10.1016/j.jfranklin.2013.10.021

[26] J. Schmidhuber, “Deep learning in neural networks: An overview,” Neural Networks, vol. 61, 2015, pp. 85–117. DOI: 10.1016/j.neunet.2014.09.003

[27] M. Havaei, A. Davy, D. Warde-Farley, A. Biard, A. Courville, Y. Bengio, C. Pal, P.-M. Jodoin, H. Larochelle, “Brain tumor segmentation with deep neural networks,” Medical Image Analysis, vol. 35, 2017, pp. 18–31. DOI: 10.1016/j.media.2016.05.004

[28] C.-C. Hsu, K.-S. Wang, H.-Y. Chung, S.-H. Chang, “A study of visual behavior of multidimensional scaling for kernel perceptron algorithm,” Neural Computing and Applications, vol. 26, no. 3, 2015, pp. 679–691. DOI: 10.1007/s00521-014-1746-2

[29] T. J. Ross, Fuzzy logic with engineering applications, John Wiley & Sons, 2009.

[30] M. Cococcioni, L. Foschini, B. Lazzerini, F. Marcelloni, “Complexity reduction of Mamdani Fuzzy Systems through multi-valued logic minimization,” Proceedings of the IEEE International Conference on Systems, Man and Cybernetics, Singapore, 12–15 Oct. 2008, pp. 1782–1787. DOI: 10.1109/ICSMC.2008.4811547

[31] M. S. Gharajeh, S. Khanmohammadi, “Dispatching Rescue and Support Teams to Events Using Ad Hoc Networks and Fuzzy Decision Making in Rescue Applications,” Journal of Control and Systems Engineering, vol. 3, no. 1, 2015, pp. 35–50. DOI: 10.18005/JCSE0301003

[32] M. S. Gharajeh, S. Khanmohammadi, “DFRTP: Dynamic 3D Fuzzy Routing Based on Traffic Probability in Wireless Sensor Networks,” IET Wireless Sensor Systems, vol. 6, no. 6, 2016, pp. 211–219. DOI: 10.1049/iet-wss.2015.0008

[33] S. Khanmohammadi, M. S. Gharajeh, “A Routing Protocol for Data Transferring in Wireless Sensor Networks Using Predictive Fuzzy Inference System and Neural Node,” Ad Hoc & Sensor Wireless Networks, vol. 38, no. 1–4, 2017, pp. 103–124.

[34] M. S. Gharajeh, “FSB-System: A Detection System for Fire, Suffocation, and Burn Based on Fuzzy Decision Making, MCDM, and RGB Model in Wireless Sensor Networks,” Wireless Personal Communications, vol. 105, no. 4, 2019, pp. 1171–1213. DOI: 10.1007/s11277-019-06141-3

[35] M. S. Gharajeh, “Implementation of an Autonomous Intelligent Mobile Robot for Climate Purposes,” International Journal of Ad Hoc and Ubiquitous Computing, vol. 31, no. 3, 2019, pp. 200–218. DOI: 10.1504/IJAHUC.2019.10022345

[36] M. S. Gharajeh, “A Knowledge and Intelligence-based Strategy for Resource Discovery on IaaS Cloud Systems,” International Journal of Grid and Utility Computing, vol. 12, no. 2, 2021, pp. 205–221. DOI: 10.1504/IJGUC.2021.114819

[37] M. S. Gharajeh, H. B. Jond, “Speed Control for Leader-Follower Robot Formation Using Fuzzy System and Supervised Machine Learning,” Sensors, vol. 21, no. 10, 2021, Article ID 3433. DOI: 10.3390/s21103433

[38] B. C. Ko, K.-H. Cheong, J.-Y. Nam, “Early fire detection algorithm based on irregular patterns of flames and hierarchical Bayesian Networks,” Fire Safety Journal, vol. 45, no. 4, 2010, pp. 262–270. DOI: 10.1016/j.firesaf.2010.04.001

[39] B. U. Töreyin, Y. Dedeoğlu, U. Güdükbay, A. E. Cetin, “Computer vision based method for real-time fire and flame detection,” Pattern Recognition Letters, vol. 27, no. 1, 2006, pp. 49–58. DOI: 10.1016/j.patrec.2005.06.015

[40] B. C. Ko, K.-H. Cheong, J.-Y. Nam, “Fire detection based on vision sensor and support vector machines,” Fire Safety Journal, vol. 44, no. 3, 2009, pp. 322–329. DOI: 10.1016/j.firesaf.2008.07.006

Submitted: 21st July 2021; accepted 22nd February 2022

H. Suhaimi, N. N. Aziz, E. N. Mior Ibrahim, W. A. R. Wan Mohd Isa

DOI: 10.14313/JAMRIS/2-2022/12

Abstract:

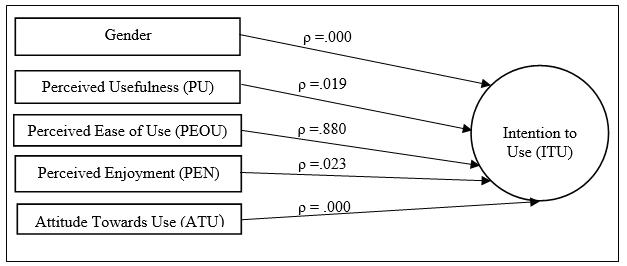

In alignment with smart city initiatives, Malaysia is shifting its educational landscape towards a smart learning environment. The Ministry of Education (MoE) has made History a mandatory subject for passing the Malaysian Certificate of Education in order to raise awareness and instil patriotism among Malaysian students. However, many students regard History as one of the most difficult subjects to study. At the same time, the Malaysian Government Education Blueprint 2013-2025 seeks to “leverage ICT to scale up quality learning” across the country. This study therefore aims to identify the factors that influence secondary school students' intention to use Augmented Reality (AR) for mobile learning of History in Malaysia. A quantitative approach was adopted: a direct survey was administered to 400 secondary school students in one of Malaysia's smart cities, and the collected data were analysed with descriptive statistics and Multiple Linear Regression using the Statistical Package for the Social Sciences (SPSS). The results show that the factors influencing the intention to use AR for mobile learning of History are Gender, Perceived Usefulness, Perceived Enjoyment, and Attitude Towards Use. These factors can serve as a useful reference for schools and teachers in planning their teaching and learning strategies for History among secondary school students in Malaysia. Future studies may cover various types of schools in Malaysia and further explore the moderating effects of demographic factors.

Keywords: Smart City Education, Mobile Learning, Augmented Reality, History, Technology Acceptance Model

With the integration of various digital technologies into the learning and teaching process, the demand for smart learning has grown steadily in recent years, especially in smart city scenarios. Citizens’ engagement with smart city ecosystems through smartphones and other mobile devices has opened new ways to deliver a better learning experience in a smart learning environment [1].

Advanced technology in education should be applied at the school level so that the learning process becomes more interactive, especially for a factual and informative subject such as History. Effective use of technology can also foster students' interest in learning while making classroom teaching and learning more interactive and meaningful. Technology in education can therefore play a significant role in helping students understand History better and, in turn, in increasing the number of students who pass the subject, which is required to obtain the Malaysian Certificate of Education.

The Malaysian Government Education Blueprint 2013-2025 roadmap seeks to “leverage ICT to scale up quality learning” across the country. The importance of improving the curriculum to keep up with emerging changes has therefore been recognized, and this is being introduced as one of the 11 shifts in the roadmap for preschool to post-secondary education [2].

Mobile learning is considered part of a new educational environment made possible by affordable and portable supporting technologies. It supports ubiquitous learning anytime and anywhere with the aid of mobile devices such as tablets, smartphones, and PDAs. Mobile learning is now expected to be used in both formal and informal learning in educational institutions [3]. In addition, the mobile learning approach supports Sustainable Development Goal (SDG) 4, which focuses on quality education [4].

Furthermore, according to Gartner [5], the Top 10 Strategic Technology Trends for 2019 are autonomous things, augmented analytics, AI-driven development, digital twins, empowered edge, immersive experience, blockchain, smart spaces, digital privacy and ethics, and quantum computing. A strategic technology trend can be defined as a disruptive technology that is emerging and is expected to have a significant impact over the next five years. Augmented Reality (AR) is one of the immersive technologies that can change how users interact with the world. It is therefore hoped that AR will be an appropriate technology to help students enhance their experience of, and interactivity in, learning History.

In their research on enhancing students' essay-writing abilities in History, Mohamed & Zali identified several challenges students face with the subject [6]. They found that students lack interest in reading, have difficulty memorizing a large number of facts, have no interest in the topic, are unable to understand certain topics, and are taught with inefficient methods. One reason for these challenges is the huge amount of factual data packed into textbooks [7].

In addition, a study by Magro [8] found that students regard History as less interactive and demotivating. This is probably because its teaching is disconnected from the students' own reality and experience. In fact, the Malaysian Certificate of Education (SPM) Subject Grade Point Average (SGPA) for History declined from 5.23 in 2017 to 4.98 in 2018. A literature analysis by Yilmaz [9] of trends in AR in education from 2016 to 2017 shows that AR technology was mostly introduced in primary and graduate education, and that science education was the field most explored by researchers. This suggests that the application of AR technology in secondary schools, and to History in particular, has so far received little research attention. One feasibility study has examined the readiness for AR-based mobile learning (M-Learning) in History [10].

On the other hand, the Technology Acceptance Model (TAM), developed by Davis [11], is regarded as one of the most valid and robust models of technology acceptance. TAM explains how users come to adopt and use a technology. It predicts people's acceptance of a technology by measuring their intention to use it, and it explains that intention in terms of their attitude towards use, perceived usefulness, perceived ease of use, and external variables.
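In survey-based studies, TAM is usually operationalised by scoring each construct from questionnaire items and relating those scores to behavioural intention; the abstract reports that this study used Multiple Linear Regression in SPSS for that step. The following is a minimal plain-Python sketch of the same technique (ordinary least squares), not a reproduction of the authors' analysis. Everything in it is an assumption for illustration: the data are synthetic, the abbreviations PU, PEOU, ATU and BI, the weights in the hypothetical `TRUE_WEIGHTS` dictionary, and the noise level are invented, and only the sample size of 400 mirrors the survey. It also simplifies TAM, in which attitude mediates the effects of usefulness and ease of use, into a single regression equation.

```python
import random


def ols(X, y):
    """Estimate multiple linear regression coefficients by ordinary least squares.

    X is a list of predictor rows and y the matching responses. An intercept
    column of 1.0 is prepended, and the normal equations (X^T X) b = X^T y are
    solved by Gauss-Jordan elimination with partial pivoting.
    Returns [intercept, b1, b2, ...].
    """
    rows = [[1.0] + list(r) for r in X]
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y].
    A = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))]
         for i in range(k)]
    for col in range(k):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, k), key=lambda i: abs(A[i][col]))
        A[col], A[pivot] = A[pivot], A[col]
        # Eliminate this column from every other row.
        for i in range(k):
            if i != col:
                factor = A[i][col] / A[col][col]
                A[i] = [a - factor * p for a, p in zip(A[i], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def r_squared(X, y, b):
    """Share of the variance in y explained by the fitted coefficients b."""
    preds = [b[0] + sum(bj * xj for bj, xj in zip(b[1:], r)) for r in X]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, preds))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot


# Synthetic data only (NOT the study's data): hypothetical weights linking
# perceived usefulness (PU), perceived ease of use (PEOU) and attitude towards
# use (ATU), each a continuous 1-5 score standing in for averaged Likert items,
# to behavioural intention (BI).
TRUE_WEIGHTS = {"intercept": 0.5, "PU": 0.3, "PEOU": 0.2, "ATU": 0.4}
random.seed(42)
X, y = [], []
for _ in range(400):  # mirrors the survey's 400 respondents
    pu, peou, atu = (random.uniform(1, 5) for _ in range(3))
    bi = (TRUE_WEIGHTS["intercept"] + TRUE_WEIGHTS["PU"] * pu
          + TRUE_WEIGHTS["PEOU"] * peou + TRUE_WEIGHTS["ATU"] * atu
          + random.gauss(0, 0.2))  # assumed response noise
    X.append((pu, peou, atu))
    y.append(bi)

coef = ols(X, y)
for name, est in zip(TRUE_WEIGHTS, coef):
    print(f"{name:9s} assumed={TRUE_WEIGHTS[name]:.2f} estimated={est:.3f}")
print(f"R^2 = {r_squared(X, y, coef):.3f}")
```

Running it should print estimates close to the assumed weights and a high R². With real survey data, a statistics package such as SPSS would also report standard errors, t-statistics and p-values for each coefficient, which is how significant factors are identified; this sketch omits them.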

Despite the promising features of mobile learning and AR, these technologies have not yet been widely implemented or accepted in Malaysian schools. Therefore, this study aims to identify the factors that influence the intention to use AR for mobile learning of History among secondary school students in Malaysia.

The Malaysian Government Education Blueprint 2013-2025 roadmap, issued by the Malaysia Ministry of Education in 2012 [2], seeks to “leverage ICT to scale up quality learning” across Malaysia. The significance of improving the curriculum to keep up with evolving trends has therefore been recognized and is being implemented as one of the 11 shifts in the strategic plan for preschool to post-secondary education. Mobile learning plays an important role in achieving Shift 7, which is to leverage ICT to scale up quality learning across the country, and AR technology has great potential to provide a better teaching and learning experience in challenging subjects such as History.

A preliminary study found that most teachers and students lack awareness and understanding of AR mobile learning technology. This finding is supported by interview sessions with three History teachers and six students from selected secondary schools. According to the teachers, Malaysian teachers, especially senior teachers, still have insufficient exposure to mobile learning through AR technology. Most of them still prefer to teach in a traditional way, using a whiteboard, books, and hands-on activities. Even though History was introduced in schools approximately three decades ago, its teaching methods remain traditional and depend entirely on the textbook. Moreover, History is one of the most challenging subjects to teach and learn, since it requires memorizing many facts and the dates of past events. The students likewise stated that they are not aware of mobile learning and AR technology because they have had little exposure to it in their learning at school.

Even though a number of mobile learning applications are available on the market, they still receive little attention from both teachers and students. Features such as animation, audio, 3D graphics, and other media content can offer students remarkable benefits and make learning History more interactive.