WWW.JAMRIS.ORG pISSN 1897-8649 (PRINT)/eISSN 2080-2145 (ONLINE) VOLUME 18, N° 3, 2024

Indexed in SCOPUS


A peer-reviewed quarterly focusing on new achievements in the following fields: • automation • systems and control • autonomous systems • multiagent systems • decision-making and decision support • robotics • mechatronics • data sciences • new computing paradigms •

Editor-in-Chief

Janusz Kacprzyk (Polish Academy of Sciences, Łukasiewicz-PIAP, Poland)

Advisory Board

Dimitar Filev (Research & Advanced Engineering, Ford Motor Company, USA)

Kaoru Hirota (Tokyo Institute of Technology, Japan)

Witold Pedrycz (ECERF, University of Alberta, Canada)

Co-Editors

Roman Szewczyk (Łukasiewicz-PIAP, Warsaw University of Technology, Poland)

Oscar Castillo (Tijuana Institute of Technology, Mexico)

Marek Zaremba (University of Quebec, Canada)

Executive Editor

Katarzyna Rzeplinska-Rykała, e-mail: office@jamris.org (Łukasiewicz-PIAP, Poland)

Associate Editor

Piotr Skrzypczyński (Poznań University of Technology, Poland)

Statistical Editor

Małgorzata Kaliczyńska (Łukasiewicz-PIAP, Poland)

Editorial Board:

Chairman – Janusz Kacprzyk (Polish Academy of Sciences, Łukasiewicz-PIAP, Poland)

Plamen Angelov (Lancaster University, UK)

Adam Borkowski (Polish Academy of Sciences, Poland)

Wolfgang Borutzky (Fachhochschule Bonn-Rhein-Sieg, Germany)

Bice Cavallo (University of Naples Federico II, Italy)

Chin Chen Chang (Feng Chia University, Taiwan)

Jorge Manuel Miranda Dias (University of Coimbra, Portugal)

Andries Engelbrecht (University of Stellenbosch, Republic of South Africa)

Pablo Estévez (University of Chile)

Bogdan Gabrys (Bournemouth University, UK)

Fernando Gomide (University of Campinas, Brazil)

Aboul Ella Hassanien (Cairo University, Egypt)

Joachim Hertzberg (Osnabrück University, Germany)

Tadeusz Kaczorek (Białystok University of Technology, Poland)

Nikola Kasabov (Auckland University of Technology, New Zealand)

Marian P. Kaźmierkowski (Warsaw University of Technology, Poland)

Laszlo T. Kóczy (Szechenyi Istvan University, Gyor and Budapest University of Technology and Economics, Hungary)

Józef Korbicz (University of Zielona Góra, Poland)

Eckart Kramer (Fachhochschule Eberswalde, Germany)

Rudolf Kruse (Otto-von-Guericke-Universität, Germany)

Ching-Teng Lin (National Chiao-Tung University, Taiwan)

Piotr Kulczycki (AGH University of Science and Technology, Poland)

Andrew Kusiak (University of Iowa, USA)

Mark Last (Ben-Gurion University, Israel)

Anthony Maciejewski (Colorado State University, USA)

Typesetting

SCIENDO, www.sciendo.com

Webmaster TOMP, www.tomp.pl

Editorial Office

ŁUKASIEWICZ Research Network

– Industrial Research Institute for Automation and Measurements PIAP

Al. Jerozolimskie 202, 02-486 Warsaw, Poland (www.jamris.org) tel. +48-22-8740109, e-mail: office@jamris.org

The reference version of the journal is the e-version. Printed in 100 copies.

Articles are reviewed, excluding advertisements and descriptions of products.

Papers published currently are available for non-commercial use under the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 (CC BY-NC-ND 4.0) license. Details are available at: https://www.jamris.org/index.php/JAMRIS/LicenseToPublish

Open Access.

Krzysztof Malinowski (Warsaw University of Technology, Poland)

Andrzej Masłowski (Warsaw University of Technology, Poland)

Patricia Melin (Tijuana Institute of Technology, Mexico)

Fazel Naghdy (University of Wollongong, Australia)

Zbigniew Nahorski (Polish Academy of Sciences, Poland)

Nadia Nedjah (State University of Rio de Janeiro, Brazil)

Dmitry A. Novikov (Institute of Control Sciences, Russian Academy of Sciences, Russia)

Duc Truong Pham (Birmingham University, UK)

Lech Polkowski (University of Warmia and Mazury, Poland)

Alain Pruski (University of Metz, France)

Rita Ribeiro (UNINOVA, Instituto de Desenvolvimento de Novas Tecnologias, Portugal)

Imre Rudas (Óbuda University, Hungary)

Leszek Rutkowski (Czestochowa University of Technology, Poland)

Alessandro Saffiotti (Örebro University, Sweden)

Klaus Schilling (Julius-Maximilians-University Wuerzburg, Germany)

Vassil Sgurev (Bulgarian Academy of Sciences, Department of Intelligent Systems, Bulgaria)

Helena Szczerbicka (Leibniz Universität, Germany)

Ryszard Tadeusiewicz (AGH University of Science and Technology, Poland)

Stanisław Tarasiewicz (University of Laval, Canada)

Piotr Tatjewski (Warsaw University of Technology, Poland)

Rene Wamkeue (University of Quebec, Canada)

Janusz Zalewski (Florida Gulf Coast University, USA)

Teresa Zielińska (Warsaw University of Technology, Poland)

Publisher:


VOLUME 18, N˚3, 2024

DOI: 10.14313/JAMRIS/3-2024

Tackling Non‐IID Data and Data Poisoning in Federated Learning Using Adversarial Synthetic Data

Anastasiya Danilenka

DOI: 10.14313/JAMRIS/3‐2024/17


Gradient Scale Monitoring for Federated Learning Systems

Karolina Bogacka, Anastasiya Danilenka, Katarzyna Wasielewska‐Michniewska

DOI: 10.14313/JAMRIS/3‐2024/18

Efficiency of Artificial Intelligence Methods for Hearing Loss Type Classification: An Evaluation

Michał Kassjański, Marcin Kulawiak, Tomasz Przewoźny, Dmitry Tretiakow, Jagoda Kuryłowicz, Andrzej Molisz, Krzysztof Koźmiński, Aleksandra Kwaśniewska, Paulina Mierzwińska‑Dolny, Miłosz Grono

DOI: 10.14313/JAMRIS/3‐2024/19


Analysis of Dataset Limitations in Semantic Knowledge‐Driven Multi‐Variant Machine Translation

Marcin Sowański, Jakub Hościłowicz, Artur Janicki

DOI: 10.14313/JAMRIS/3‐2024/20


Identification and Modeling of the Dynamical Object with the Use of HIL Technique

Łukasz Sajewski, Przemysław Karwowski

DOI: 10.14313/JAMRIS/3‐2024/21

Advanced Perturb and Observe Algorithm for Maximum Power Point Tracking in Photovoltaic Systems with Adaptive Step Size

Amal Zouhri

DOI: 10.14313/JAMRIS/3‐2024/22

EEG based Emotion analysis Using Reinforced Spatio‐Temporal Attentive Graph Neural and Contextnet techniques

C. Akalya Devi, D. Karthika Renuka

DOI: 10.14313/JAMRIS/3‐2024/23


Enhancing Stock Price Prediction in the Indonesian Market: A Concave LSTM Approach with RunReLU

Mohammad Diqi, I Wayan Ordiyasa

DOI: 10.14313/JAMRIS/3‐2024/24


Atlantic Blue Marlin, Boops, Chironex Fleckeri, and General Practitioner – Sick Person Optimization Algorithms

Lenin Kanagasabai

DOI: 10.14313/JAMRIS/3‐2024/25


Network Optimization Using Real Time Polling Service with and Without Relay Station in WiMax Networks

Mubeen Ahmed Khan, Awanit Kumar, Kailash Chandra Bandhu

DOI: 10.14313/JAMRIS/3‐2024/26

This part of the Journal of Automation, Mobile Robotics and Intelligent Systems is devoted to current studies in Computer Science and Information Technology presented by young, talented contributors working in the field; it is the fourth edition of this series. Among the included papers, one can find contributions dealing with diagnosing machine learning problems, natural language processing procedures, AI classification and clustering methods, optimization tasks, and learning procedures. This part of JAMRIS was inspired by broad and interesting discussions during the Eighth Doctoral Symposium on Recent Advances in Information Technology (DS-RAIT 2023), held in Warsaw, Poland, on September 17-20, 2023, as a satellite event of the Federated Conference on Computer Science and Information Systems (FedCSIS 2023). The Symposium facilitated the exchange of ideas between early-stage researchers, particularly PhD students, in Computer Science. Furthermore, the Symposium gave all participants an opportunity to obtain feedback on their ideas and explorations from the experienced members of the IT research community who had been invited to chair all DS-RAIT thematic sessions. Therefore, submitting research proposals with limited preliminary results was strongly encouraged. Here, we highlight the contribution entitled “Mitigating the effects of non-IID data in federated learning with a self-adversarial balancing method,” written by Anastasiya Danilenka (Warsaw University of Technology). This paper received the Best Paper Award at DS-RAIT 2023.

This issue contains the following DS-RAIT papers in their special, extended versions.

The first paper, entitled “Tackling Non-IID Data and Data Poisoning in Federated Learning Using Adversarial Synthetic Data,” authored by Anastasiya Danilenka, explores crucial aspects of federated learning (FL). FL involves collaborative model training across diverse devices while safeguarding data privacy. However, managing heterogeneous data across these devices poses a significant challenge, exacerbated by the potential presence of malicious clients aiming to disrupt the training process through data poisoning. The article addresses the issue of discerning between poisoned and non-independently and identically distributed (non-IID) data by proposing a technique that leverages data-free synthetic data generation, employing a reverse adversarial attack concept. This approach enhances the training process by assessing client coherence and favouring trustworthy participants. The experimental findings garnered from image classification tasks on the MNIST, EMNIST, and CIFAR-10 datasets are meticulously documented and analysed, shedding light on the efficacy of the proposed method. As already mentioned, the DS-RAIT Program Committee voted this work the Best Paper of the event because of its excellent presentation of practical aspects and promising results.

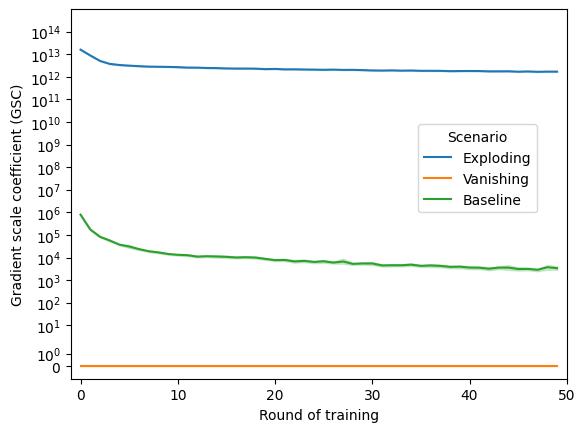

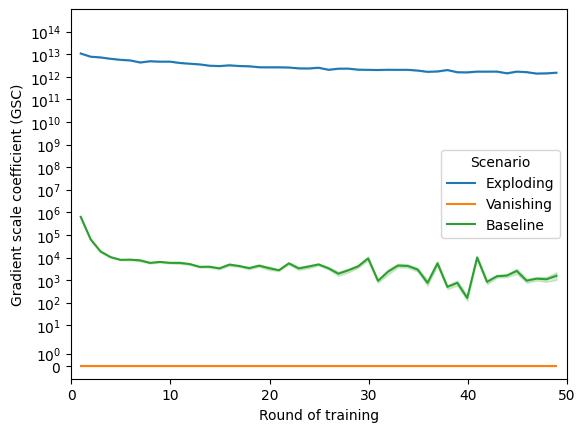

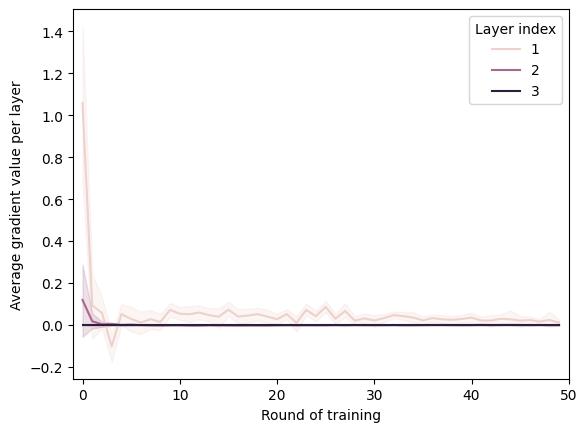

The paper entitled “Gradient Scale Monitoring for Federated Learning Systems” was written by Karolina Bogacka, Anastasiya Danilenka, and Katarzyna Wasielewska-Michniewska. In this paper, the authors delve into the burgeoning realm of Federated Learning amid the expanding computational and communication capabilities of edge and IoT devices. While FL holds promise, particularly in cross-device settings, existing research often pays insufficient attention to critical operationalisation and monitoring challenges. Through a case study comparing four FL system topologies, the paper uncovers periodic accuracy drops and attributes them to exploding gradients. Proposing a novel method that relies on the local computation of the gradient scale coefficient (GSC) for continuous monitoring, the study goes on to explore GSC and average gradients per layer as potential diagnostic metrics for FL. By simulating various gradient scenarios, including exploding, vanishing, and stable gradients, the paper evaluates the resulting visualizations for clarity and computational demands, culminating in the introduction of a gradient monitoring suite for FL training processes.

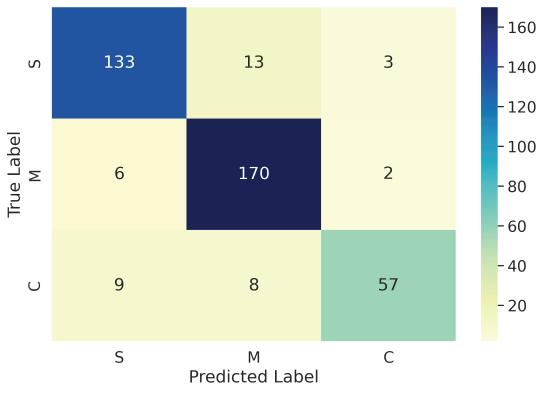

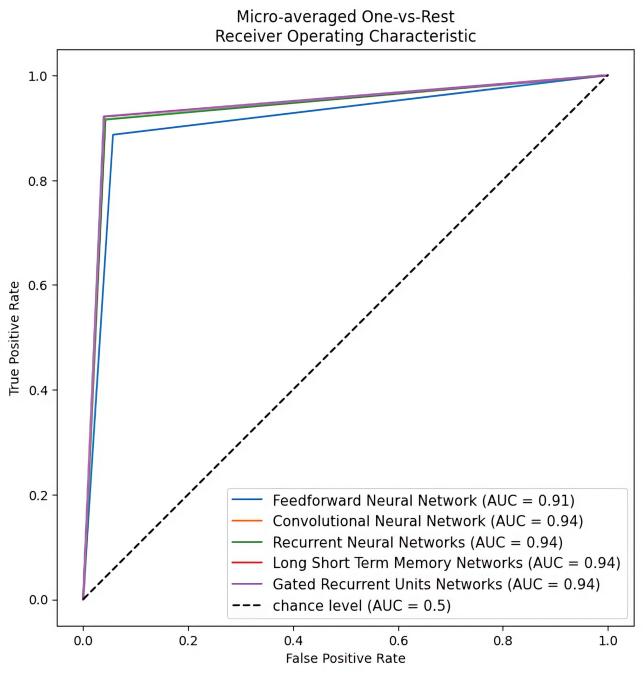

In their study titled “Efficiency of Artificial Intelligence Methods for Hearing Loss Type Classification: An Evaluation,” Michał Kassjański, Marcin Kulawiak, Tomasz Przewoźny, Dmitry Tretiakow, Jagoda Kuryłowicz, Andrzej Molisz, Krzysztof Koźmiński, Aleksandra Kwaśniewska, Paulina Mierzwińska-Dolny, and Miłosz Grono address critical issues surrounding the evaluation of hearing loss. Traditionally, hearing loss assessment relies on pure-tone audiometry testing, considered the gold standard for evaluating auditory function. Once hearing loss is identified, distinguishing between sensorineural, conductive, and mixed types becomes paramount. The study compares various AI classification models using 4007 pure-tone audiometry samples meticulously labelled by professional audiologists. Models tested range from Logistic Regression to sophisticated architectures such as Recurrent Neural Networks (RNN), Long Short-Term Memory (LSTM), and Gated Recurrent Unit (GRU). Furthermore, the study explores the impact of dataset augmentation using Conditional Generative Adversarial Networks and of different standardisation techniques on the performance of machine learning algorithms. Remarkably, the RNN model achieves the highest classification performance, with an out-of-training accuracy of 94.4%, as determined by 10-fold cross-validation.

Finally, Marcin Sowański, Jakub Hościłowicz, and Artur Janicki contributed a paper titled “Analysis of Dataset Limitations in Semantic Knowledge-Driven Multi-Variant Machine Translation.” This research explores the intricacies of dataset constraints within semantic knowledge-driven machine translation tailored explicitly for intelligent virtual assistants (IVA). Departing from conventional translation methodologies, the study adopts a multi-variant approach to machine translation: instead of relying on single-best translations, the method employs a constrained beam search technique to generate multiple viable translations for each input sentence. Notably, the methodology extends beyond the constraints of specific verb ontologies, operating within a broader semantic knowledge framework. This enables a more nuanced interpretation of linguistic subtleties and contextual intricacies, thereby enhancing translation accuracy and relevance within the IVA domain.

We want to thank all those who participated in and contributed to the Symposium program and all the authors who submitted their papers. We also wish to thank all our colleagues and the members of the Program Committee for their hard work during the review process, their cordiality, and the outstanding local organisation of the Conference.

Editors: Piotr A. Kowalski

Systems Research Institute, Polish Academy of Sciences and Faculty of Physics and Applied Computer Science, AGH University of Science and Technology

Szymon Łukasik

Systems Research Institute, Polish Academy of Sciences and Faculty of Physics and Applied Computer Science, AGH University of Science and Technology

Submitted: 27th December 2023; accepted: 11th March 2024

Anastasiya Danilenka

DOI: 10.14313/JAMRIS/3‐2024/17

Abstract:

Federated learning (FL) involves joint model training by various devices while preserving the privacy of their data. However, it presents the challenge of dealing with heterogeneous data located on participating devices. This issue can be further complicated by the appearance of malicious clients aiming to sabotage the training process by poisoning local data. In this context, the problem of differentiating between poisoned and non-independently and identically distributed (non-IID) data arises. To address it, a technique utilizing data-free synthetic data generation is proposed, using a reverse concept of adversarial attack. Adversarial inputs allow for improving the training process by measuring clients' coherence and favoring trustworthy participants. Experimental results obtained from image classification tasks on the MNIST, EMNIST, and CIFAR-10 datasets are reported and analyzed.

Keywords: federated learning, non-IID data, label skew, data poisoning, label flipping

1. Introduction

Federated learning (FL) [1] focuses on developing a global model by coordinating learning on multiple devices while maintaining the privacy of local data. The typical FL process consists of training rounds and involves several steps: (1) the global model is initialized on the server; (2) a subset of clients of a specified size is randomly chosen from all available clients; (3) the global model is shared among the selected subset of clients; (4) clients perform local training with the received global model for a limited number of epochs using their private data; (5) clients return their model updates to the server; and (6) model updates are aggregated on the server into a new version of the global model [1].

Each of the default FL steps is open to changes and refinements. Thus, the subset of clients for a training round may be created not randomly but by following a strategy, and clients may send the server the results of SGD [1] or full model weights instead of weight updates. Moreover, aggregation on the server need not simply average all received model updates, as proposed in the FedAvg algorithm, but can prioritize one client's updates over another's. For instance, when the sizes of the clients' local datasets are used as weights [1], participants with big local datasets are favored, as they contribute more to the new global model during aggregation.
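As a minimal sketch of the dataset-size weighting mentioned above, assuming each client's model is flattened into a plain list of floats (the name `fedavg` and this representation are illustrative, not taken from [1]):

```python
from typing import List, Sequence

def fedavg(updates: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Weighted average of flattened client parameter vectors.

    With weights equal to local dataset sizes this mirrors the size-based
    weighting described above; any other non-negative weights can be used.
    """
    total = float(sum(weights))
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    dim = len(updates[0])
    agg = [0.0] * dim
    for vec, w in zip(updates, weights):
        if len(vec) != dim:
            raise ValueError("all updates must have the same length")
        for i, v in enumerate(vec):
            agg[i] += (w / total) * v
    return agg

# The client with 300 samples pulls the average toward its own parameters.
print(fedavg([[1.0, 0.0], [0.0, 1.0]], weights=[300, 100]))  # [0.75, 0.25]
```

Passing equal weights reduces this to a plain average; passing other scores gives the kind of prioritized aggregation discussed above.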

Due to the privacy restrictions of FL, clients' local datasets remain on their local devices, making it impossible to perform centralized data analytics and infer properties of both global and local datasets. Moreover, in real-life cases, data may not be independently and identically distributed (non-IID) among clients, which has been shown to cause problems for FL, as the quality of the global model and its convergence can be negatively impacted by the presence of such data [2, 3]. Non-IID data can be categorized into five types [4]: (1) feature distribution skew (different clients have variations in feature styles for the same label); (2) label distribution skew (clients have varying label distributions but similar features for specific labels); (3) same label, different features (different clients present different feature distributions for the same label); (4) same features, different labels (clients assign different labels to the same features); and (5) quantity skew (differences in the amount of data across clients).
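Label distribution skew, type (2) above, is often simulated by giving each client only a few classes. The sketch below assumes that recipe; `label_skew_partition` and `classes_per_client` are hypothetical names, and this is not necessarily the split used in the experiments of this work:

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence

def label_skew_partition(labels: Sequence[int], num_clients: int,
                         classes_per_client: int, seed: int = 0) -> Dict[int, List[int]]:
    """Split sample indices so that each client only sees a few classes.

    Each client draws `classes_per_client` distinct classes at random; the
    samples of every class are then dealt round-robin to the clients that
    drew it. Samples of classes that no client drew are left unused.
    """
    rng = random.Random(seed)
    classes = sorted(set(labels))
    holders: Dict[int, List[int]] = defaultdict(list)  # class -> owning clients
    for k in range(num_clients):
        for c in rng.sample(classes, classes_per_client):
            holders[c].append(k)
    parts: Dict[int, List[int]] = {k: [] for k in range(num_clients)}
    seen: Dict[int, int] = defaultdict(int)  # per-class round-robin counter
    for idx, y in enumerate(labels):
        owners = holders.get(y)
        if owners:
            parts[owners[seen[y] % len(owners)]].append(idx)
            seen[y] += 1
    return parts

labels = [i % 10 for i in range(1000)]  # toy labels: 10 balanced classes
parts = label_skew_partition(labels, num_clients=5, classes_per_client=2)
print({k: sorted({labels[i] for i in idx}) for k, idx in parts.items()})
```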

In general, these data-related skews are assumed to result from the natural characteristics of the data, highlighting the complexity and diversity of real-life federated datasets. However, another set of data issues can come from malicious actors who have access to client devices and client data, resulting in a security issue known as a data poisoning attack [5]. In this case, the adversary may perform data poisoning attacks that aim to compromise the training process, reduce the global model performance, and cause incorrect model predictions during the inference stage [6]. Although poisoned data differ from the non-IID data problem, FL by default protects the privacy of non-IID and malicious clients equally, which naturally makes distinguishing between them more challenging.

To address the challenges posed by label skew non-IID data, the Adversarial Federated Learning (AdFL) algorithm was introduced [7]. This method originated from the concept of adversarial attacks and is mainly applicable to neural networks dealing with image data. The AdFL algorithm allows for gaining valuable insights about clients' local datasets without requesting any additional information from local devices by utilizing synthetic data generated on the server.

The algorithm improves the performance of global models in the presence of label skew data and results in more stable training and more balanced per-class accuracy of the global model.

This work is an extension of the research presented in [7] and explores the possibility of using self-adversarial samples to distinguish malicious non-IID clients from benign ones, focusing on untargeted and targeted label-flipping attacks.

The remainder of this paper is organized as follows. Section 2 outlines related research on data poisoning attacks in the presence of non-IID data within FL. Section 3 presents key concepts of adversarial attacks. In Section 4, the AdFL algorithm is described. Section 5 defines the data poisoning attacks adopted in this paper. Section 6 covers the experimental results and their analysis, collected from the MNIST [8], EMNIST [9], and CIFAR-10 [10] datasets. This work concludes with a summary of findings and suggestions for future research.

Data poisoning attacks in FL as a standalone issue are being addressed in multiple ways. For instance, malicious clients can be detected and filtered out of the training. Here, methods were proposed to track the consistency of a client's updates to verify its intent [11], to apply dimensionality-reduction and clustering techniques (such as kernel principal component analysis and k-means) [12], or to use the Euclidean distance measure [13, 14] to distinguish between malicious and benign clients and filter out suspicious ones. Another proposed approach is to maintain a small clean training dataset and a separate model on the server, using them to assess the trustworthiness of clients' updates by comparing the direction of local model updates with the server-side model update obtained from the clean dataset, and then using the trustworthiness scores as aggregation weights for normalized client model updates [15]. Another line of research focused on modifying the aggregation strategy towards outlier resistance, for example, by taking the median rather than the mean of each dimension of the model updates [16] or trimming the updates before averaging [17] to avoid extreme values. However, these methods mainly rely on the assumption that benign clients will have similar model updates, which cannot be guaranteed under non-IID data.
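The two outlier-resistant aggregation ideas can be sketched per coordinate as follows, assuming flattened parameter vectors. This illustrates the general idea only and is not the exact procedure of [16] or [17]:

```python
import statistics
from typing import List, Sequence

def coordinate_median(updates: Sequence[Sequence[float]]) -> List[float]:
    """Median of every parameter across clients (robust to a minority of outliers)."""
    return [statistics.median(col) for col in zip(*updates)]

def trimmed_mean(updates: Sequence[Sequence[float]], trim: int) -> List[float]:
    """Per parameter, drop the `trim` largest and `trim` smallest values, then average."""
    if 2 * trim >= len(updates):
        raise ValueError("trim must leave at least one value per coordinate")
    result = []
    for col in zip(*updates):
        kept = sorted(col)[trim:len(col) - trim]
        result.append(sum(kept) / len(kept))
    return result

updates = [[1.0, 2.0], [1.2, 2.1], [0.9, 1.9],  # benign clients
           [50.0, -40.0]]                        # one extreme update
print(coordinate_median(updates))   # close to [1.1, 1.95]
print(trimmed_mean(updates, trim=1))
```

In the toy input, the extreme fourth update barely moves either aggregate, whereas a plain mean would be dragged toward it. Under non-IID data, however, benign updates also diverge from each other, which is the limitation noted above.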

To address the joint problem of possible data poisoning attacks and non-IID data, methods for both malicious client detection and non-IID data mitigation were proposed. For instance, an algorithm utilizing a cosine similarity measure was presented to assess the similarity of clients' contributions, assuming that benign clients will have more diverse gradient updates than coordinated malicious clients [18]. Another approach suggested using a small proxy dataset to perform on-server optimization that finds the best fusion of model updates and mitigates the possible effect of malicious clients by naturally assigning them small aggregation weights [19].
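A simplified version of the cosine-similarity idea can be sketched as below. The flagging rule (any pairwise similarity above a fixed `threshold`) and the threshold value are assumptions for illustration, not the algorithm of [18]:

```python
import math
from typing import List, Sequence

def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

def suspicious_clients(updates: Sequence[Sequence[float]], threshold: float = 0.99) -> List[int]:
    """Flag clients whose update is nearly parallel to some other client's update.

    Rationale (as assumed above): benign non-IID clients differ from one another,
    while coordinated attackers tend to push in almost the same direction.
    """
    flagged = []
    for i, u in enumerate(updates):
        if any(cosine(u, v) > threshold for j, v in enumerate(updates) if j != i):
            flagged.append(i)
    return flagged

updates = [[1.0, 0.0, 0.2], [0.1, 1.0, 0.0], [0.5, 0.5, 1.0],  # diverse updates
           [-1.0, -1.0, 0.0], [-1.0, -1.0, 0.01]]              # near-identical pair
print(suspicious_clients(updates))  # [3, 4]
```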

A different solution proposed analyzing the critical parameters of the local models to reliably identify malicious clients and using this information for weighted aggregation of updates [20]. An attack-tolerant FL method was also proposed, presenting local meta-updates and global knowledge distillation to mitigate the possible effect of malicious clients on the global model [21].

Although research has begun to simultaneously address the problem of both non-IID data and potential data poisoning attacks in FL, the proposed solutions can still rely on proxy datasets available on the server side or complicate the local training process with additional computations. Such assumptions may not be feasible in some FL scenarios. Moreover, the complexity of the non-IID data problem and the variety of data poisoning attack scenarios make it harder to find solutions that can satisfy both performance and a variety of security goals, leaving this challenging area open for further research.

Adversarial data are adopted by many FL algorithms. The common idea is to utilize adversarial techniques as data generators in order to (a) defend the model against adversarial attacks [22, 23], or (b) augment the quantity of locally accessible data with synthetic samples [24–26]. This work extends the applicability of a previously proposed alternative method that incorporates adversarial data into FL.

3.1. Adversarial Attack

The essence of adversarial attacks lies in the ability to modify a sample from the training data of a neural network in a manner that is imperceptible to humans, yet causes the trained network to incorrectly classify what was once a correctly classified sample [27]. This phenomenon was shown to be caused by the ability of the adversary to alter the target data sample in a way that makes it cross the classifier's decision boundary and, therefore, results in misclassification [28].

Adversarial attacks fall into two main categories: untargeted attacks, which simply focus on causing any incorrect classification, and targeted attacks, where the goal is to trigger misclassification into a specific class. Attack methodologies are further divided into white-box attacks, where the adversary has access to the model's architecture and parameters, and black-box attacks, which rely solely on the attacker's access to output data. A set of gradient-based algorithms was previously presented that rely on the model's gradients and a loss function to create the changes to the source data needed to perform an attack. Examples of gradient-based algorithms are the one-step Fast Gradient Sign Method (FGSM) [29], its iterative version I-FGSM [30], and its version enhanced with momentum, MI-FGSM [31].

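In this study, the momentum iterative fast gradient sign method (MI-FGSM) is used to perform targeted adversarial attacks [31] (see Equations 1 and 2).

$$g_{t+1} = \mu \cdot g_t + \frac{\nabla_x J(x^*_t, y^*)}{\lVert \nabla_x J(x^*_t, y^*) \rVert_1} \quad (1)$$

$$x^*_{t+1} = \mathrm{Clip}_{x}^{\epsilon}\left\{ x^*_t - \alpha \cdot \mathrm{sign}(g_{t+1}) \right\} \quad (2)$$

Here, $g_t$ represents the accumulated gradients, $x^*_t$ is the perturbed adversarial image in iteration $t$, $y^*$ is a target class, $J$ is a loss function, $\mu$ is a decay factor introduced for a better attack success rate, and $\alpha$ is a step size. At each iteration, $x^*_t$ is clipped to the $\epsilon$-vicinity of the source image $x$, keeping the resulting adversarial image within $L_\infty$ distance $\epsilon$ of the source image.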
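To make Equations 1 and 2 concrete, the following sketch runs targeted MI-FGSM on a toy linear softmax classifier. The toy model is chosen only because its input gradient, $W^\top(\mathrm{softmax}(Wx+b) - \mathrm{onehot}(y^*))$, can be written by hand; for a neural network the gradient would come from automatic differentiation. All function names are hypothetical, and `mu` defaults to 1.0 as in the original MI-FGSM paper [31]:

```python
import math
from typing import List, Sequence

Vector = List[float]
Matrix = List[List[float]]

def softmax(z: Sequence[float]) -> Vector:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def logits(W: Matrix, b: Vector, x: Sequence[float]) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) + bk for row, bk in zip(W, b)]

def input_grad(W: Matrix, b: Vector, x: Sequence[float], target: int) -> Vector:
    """Gradient of the cross-entropy J(x, target) w.r.t. the input x
    for a linear softmax classifier: W^T (softmax(Wx + b) - onehot(target))."""
    p = softmax(logits(W, b, x))
    delta = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
    return [sum(delta[k] * W[k][i] for k in range(len(W))) for i in range(len(x))]

def sign(v: float) -> float:
    return float((v > 0) - (v < 0))

def mi_fgsm_targeted(W: Matrix, b: Vector, x0: Sequence[float], target: int,
                     eps: float, alpha: float, mu: float = 1.0, steps: int = 10) -> Vector:
    x, g = list(x0), [0.0] * len(x0)
    for _ in range(steps):
        grad = input_grad(W, b, x, target)
        l1 = sum(abs(v) for v in grad) or 1.0               # avoid division by zero
        g = [mu * gi + vi / l1 for gi, vi in zip(g, grad)]  # Eq. (1): momentum accumulation
        # Eq. (2): the targeted variant steps against the gradient to lower J(x, target) ...
        x = [xi - alpha * sign(gi) for xi, gi in zip(x, g)]
        # ... and clips each coordinate back into [x0 - eps, x0 + eps].
        x = [min(max(xi, oi - eps), oi + eps) for xi, oi in zip(x, x0)]
    return x

W, b = [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]  # toy 2-class model
x_adv = mi_fgsm_targeted(W, b, [1.0, 0.0], target=1, eps=2.0, alpha=0.5)
print(x_adv)  # [-1.0, 2.0]
```

Starting from an input the toy model assigns to class 0, the image moves in steps of $\alpha$ until it reaches the $\epsilon$-box and is then classified as the target class 1.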

3.2. Transferability of Adversarial Inputs

Adversarial inputs possess the ability to transfer across models, meaning that adversarial inputs designed for one model can also cause mispredictions by other models, with the transferability of adversarial samples being higher between models trained on similar data and tasks. This phenomenon is attributed to the fact that models addressing the same task tend to develop similar decision boundaries. In the context of FL, where clients work on the same task with a shared model architecture and feature space, this transferability is particularly useful. It was shown that adversarial samples generated by any client can provide insights about the local data distribution [7]. The transferability of adversarial samples and its relevance to the decision boundaries of trained classifiers in FL formed the basis of the AdFL algorithm.

In the AdFL algorithm, adversarial images are utilized as an additional source of information to improve and guide the training process. These images are generated on the server, using the updated models and a random noise image as the starting point of the generation process. This way, the adversarial images are generated in a data-free way, meaning that no access to actual clients' data is needed. The specific steps performed by the server in AdFL are outlined in Algorithm 1.

Note that in the AdFL algorithm, the model weights are communicated between the clients and the server.

In total, six steps summarize the AdFL algorithm:

1) During the first federated training round, all clients receive the initialized global model, perform local training, and return the resulting models to the server.

2) Updated models returned by clients are used to generate adversarial samples (Section 4.1).

3) The distribution of classes across clients is estimated using the generated adversarial samples, as discussed in Section 4.2.

4) Each client receives a coherence score (CS) calculated with the help of the updated models and the generated adversarial samples (see Section 4.4 for details).

5) The aggregation step uses clients' coherence scores as weights to form the next version of the global model. This new global model is then distributed to a new subset of clients, initiating the next training round.

6) Thereafter, the client-picking strategy, guided by the global class distribution (see Section 4.3), regulates which subset of clients will engage in the next round of training, and the process repeats from step one, omitting the estimation of the distribution of classes across clients.

Algorithm 1 AdFL algorithm (server); $c_k$ – client; $S_t$ – subset of clients picked for training in epoch $t$; global_distribution – distribution of classes during FL training; local_classes – estimated presence of classes in clients' local datasets

Ensure: global model $w_0$, global_distribution, clients ready
for $t$ in epochs do
  if $t = 0$ then
    $S_t$ ← all clients
  else
    $S_t$, global_distribution ← pick_clients_for_training(local_classes, global_distribution)
  end if
  for $c_k$ in $S_t$ do
    $w^t_k$ ← run_training($c_k$)
  end for
  adv_data ← create_adv_data([$w^t_0$, ..., $w^t_{|S_t|}$])
  if $t = 0$ then
    local_classes ← estimate_distribution(adv_data)
  end if
  $CS_{[0..|S_t|]}$ ← calculate_CS(adv_data, $w^t_{[0..|S_t|]}$)
  $w_{t+1}$ ← FedAvg([$w^t_{[0..|S_t|]}$], $CS_{[0..|S_t|]}$)
end for
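The control flow of Algorithm 1 can be sketched in Python as below. Every callable (`run_training`, `create_adv_data`, `estimate_distribution`, `pick_clients`, `calculate_cs`) is a hypothetical placeholder for the corresponding step, models are flattened weight lists, and the toy stand-ins at the end only exercise the loop:

```python
from typing import Callable, Dict, List, Sequence, Set, Tuple

Weights = List[float]

def weighted_average(models: Sequence[Weights], scores: Sequence[float]) -> Weights:
    """FedAvg-style aggregation with per-client weights (here: coherence scores)."""
    total = sum(scores)
    return [sum(s * m[i] for m, s in zip(models, scores)) / total
            for i in range(len(models[0]))]

def adfl_server(global_model: Weights, clients: Sequence[int], epochs: int, num_classes: int,
                run_training: Callable[[int, Weights], Weights],
                create_adv_data: Callable[[List[Weights]], object],
                estimate_distribution: Callable[[object], Dict[int, Set[int]]],
                pick_clients: Callable[[Dict[int, Set[int]], List[int]],
                                       Tuple[List[int], List[int]]],
                calculate_cs: Callable[[object, List[Weights]], List[float]]) -> Weights:
    local_classes: Dict[int, Set[int]] = {}
    global_distribution = [0] * num_classes
    for t in range(epochs):
        if t == 0:
            subset = list(clients)  # first round: every client trains
        else:
            subset, global_distribution = pick_clients(local_classes, global_distribution)
        updated = [run_training(k, global_model) for k in subset]
        adv_data = create_adv_data(updated)  # server-side and data-free
        if t == 0:
            local_classes = estimate_distribution(adv_data)  # only after round 0
        scores = calculate_cs(adv_data, updated)
        global_model = weighted_average(updated, scores)
    return global_model

# Toy stand-ins, only to exercise the control flow.
trace: List[int] = []
def toy_train(k: int, w: Weights) -> Weights:
    trace.append(k)
    return [w[0] + k]  # pretend client k shifts the single weight by k

final = adfl_server([0.0], clients=[0, 1, 2], epochs=2, num_classes=2,
                    run_training=toy_train,
                    create_adv_data=lambda models: models,
                    estimate_distribution=lambda adv: {0: {0}, 1: {1}, 2: {0, 1}},
                    pick_clients=lambda lc, gd: ([1, 2], gd),
                    calculate_cs=lambda adv, models: [1.0] * len(models))
print(final, trace)  # [2.5] [0, 1, 2, 1, 2]
```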

It should be emphasized that all steps introduced by AdFL that expand the classical FL pipeline are performed on the server. Adversarial image creation, the client-picking strategy, and coherence score calculation are covered in the next subsections.

Adversarial data in the AdFL algorithm are created based on the models returned by clients and do not require actual clients' data. Therefore, adversarial input generation starts from a random noise image and is performed with the MI-FGSM algorithm (see Equations 1 and 2). This attack is parameterized by $\mu$, $\alpha$, the number of steps, and the clipping boundary. As the adversarial inputs produced by the AdFL algorithm are not used to perform actual adversarial attacks, the constraints on the amount of applied change can be relaxed. For example, the number of steps, $\alpha$, and the clipping boundary can be viewed as constraints that keep the final adversarial image close to its source; therefore, they were adjusted according to the objective.

It was experimentally validated that going beyond 30 adversarial steps does not improve transferability; thus, the number of steps was set to 30. The step size $\alpha$ was set to 1, while the clipping boundary and $\mu$ were left unchanged, following the original MI-FGSM research.

Algorithm 2 outlines the process of creating adversarial inputs; the default federated steps are not included.

Algorithm 2 Adversarial data generation

Ensure: targets ← [0, ..., N−1]
Ensure: $w^t_{[0..|S_t|]}$ ▷ Updated models at epoch $t$
for target in targets do
  for $w^t_k$ in $w^t_{[0..|S_t|]}$ do
    adv_img ← random_noise[ch, H, W]
    for step in num_steps do ▷ MI-FGSM step
      $\text{adv\_img}^k_{target}$ ← step($w^t_k$, $\text{adv\_img}^k_{target}$, target)
    end for
  end for
end for

After local training, each updated model returned to the server is used to generate $N$ images, i.e., one image per class present in the classification task.
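Algorithm 2 can be sketched as the following loop, under the assumption that a single MI-FGSM update is supplied as a callable `step` that also carries the momentum buffer $g_t$ between iterations (the pseudocode leaves this state implicit). The names, the flattened `[ch, H, W]` image, and the uniform-noise initialization are assumptions; `toy_step` is a placeholder, not an attack:

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

Image = List[float]
# step(model, image, momentum, target) -> (new_image, new_momentum)
StepFn = Callable[[object, Image, Image, int], Tuple[Image, Image]]

def create_adv_data(models: Sequence[object], num_classes: int,
                    shape: Tuple[int, int, int], step: StepFn,
                    num_steps: int = 30, seed: int = 0) -> Dict[Tuple[int, int], Image]:
    """Return {(model_index, target_class): adversarial image}: N images per
    updated model, each started from random noise (no client data needed)."""
    rng = random.Random(seed)
    size = shape[0] * shape[1] * shape[2]  # flattened [ch, H, W]
    adv: Dict[Tuple[int, int], Image] = {}
    for target in range(num_classes):
        for k, model in enumerate(models):
            img = [rng.random() for _ in range(size)]  # data-free start: uniform noise
            momentum = [0.0] * size                    # MI-FGSM accumulated gradient
            for _ in range(num_steps):
                img, momentum = step(model, img, momentum, target)
            adv[(k, target)] = img
    return adv

def toy_step(model, img, momentum, target):
    # Placeholder, NOT an attack: pull every pixel halfway toward target / 10.
    return [x + 0.5 * (target / 10 - x) for x in img], momentum

adv = create_adv_data(models=["w0", "w1"], num_classes=3, shape=(1, 2, 2), step=toy_step)
print(len(adv))  # 6: one image per (model, class) pair
```

In a real setting, `step` would wrap one MI-FGSM update against the given model, with `num_steps=30` matching the setting reported above.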

4.2. Local Distribution Estimation

As specified in Algorithm 1, the first training round of the AdFL algorithm involves all clients performing local training. The resulting models are then used to create adversarial samples (Section 4.1). During the research, it was determined that when one client's updated model makes predictions on adversarial samples created by another client's updated model at the end of the first epoch, these predictions are indicative of the specific classes present in the local dataset of the client that performed the predictions [7]. Therefore, at the end of the first round, it is possible to estimate the label distribution among all clients that participated in the training round.
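A minimal sketch of this estimation, assuming the predictions are collected in a dictionary keyed by (predicting client, producing client, target class). The decision rule, counting a class as present if the client's model predicts it for at least `min_votes` adversarial samples produced by other clients, is an assumption for illustration and may differ from [7]:

```python
from collections import Counter
from typing import Dict, Set, Tuple

def estimate_local_classes(predictions: Dict[Tuple[int, int, int], int],
                           min_votes: int = 1) -> Dict[int, Set[int]]:
    """predictions[(predictor, producer, target)] is the label that client
    `predictor`'s model assigns to the adversarial sample of class `target`
    created by client `producer`'s model."""
    votes: Dict[int, Counter] = {}
    for (predictor, producer, _target), label in predictions.items():
        if predictor == producer:
            continue  # ignore a model's predictions on its own samples
        votes.setdefault(predictor, Counter())[label] += 1
    return {k: {c for c, n in cnt.items() if n >= min_votes} for k, cnt in votes.items()}

# Hypothetical client 0 (classes 0 and 1) and client 1 (class 2 only):
preds = {(0, 1, 0): 0, (0, 1, 1): 1, (0, 1, 2): 1,
         (1, 0, 0): 2, (1, 0, 1): 2, (1, 0, 2): 2}
print(estimate_local_classes(preds))  # {0: {0, 1}, 1: {2}}
```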

Detecting the presence of labels in local data by inspecting the predictions on adversarial data presents an opportunity for further improvements in the federated training process based on the gathered insights. However, it is worth emphasizing that the discovered label distribution is still an estimate.

4.3. Client-picking Strategy

Once the classes in the clients' local datasets are estimated, a client-picking strategy can be used to reduce the effects of label skew in the local datasets. In the AdFL algorithm, the influence of label skew on the training process is addressed with balanced client picking.

Balanced client picking utilizes the information retrieved during the local distribution estimation step described in the previous subsection and aims to select clients with diverse local label distributions for each training round.

This strategy ensures equal representation of common and rare classes in each training round, continuously exposing the model to all classes in the classification task and leading to more balanced performance across all classes.

To track which classes were present on the clients that participated in the training process, a global label frequency vector of size $N$ is maintained on the server; for each class $c$, it accumulates the number of clients that have participated in training epochs so far and had class $c$ in their local dataset. As a new subset of clients is formed for a training round, the vector is updated with the label distribution information of each client added to the subset for this federated training round.

To maintain balanced FL training and the consistent involvement of all classes, the clients for each new federated round are picked so as to bring the values in the global frequency vector closer to a uniform distribution. To do so, the Kullback–Leibler (KL) divergence is used (Equation 3).

Therefore, before a client is added to the subset of clients for the training round, the KL divergence between the uniform distribution and the global label frequency vector is calculated under the assumption that this client is added to the training, i.e., that its classes are added to the global class frequency. This technique ensures that clients who possess rare labels in their data are consistently included in the training.
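Equation 3 is not reproduced in this text; the sketch below assumes the standard discrete form $D_{KL}(P \parallel U) = \sum_c P(c) \log \frac{P(c)}{U(c)}$, where $P$ is the normalized global label frequency vector and $U$ is uniform over the $N$ classes (the direction of the divergence is an assumption). Clients are added greedily, each time choosing the one whose classes give the lowest divergence; `pick_clients` and `subset_size` are hypothetical names:

```python
import math
from typing import Dict, List, Sequence, Set, Tuple

def kl_to_uniform(freq: Sequence[float]) -> float:
    """D_KL(P || U) for P = freq / sum(freq) and U uniform; 0 * log 0 is taken as 0."""
    total = sum(freq)
    if total == 0:
        return math.inf
    n = len(freq)
    return sum((f / total) * math.log((f / total) * n) for f in freq if f > 0)

def pick_clients(local_classes: Dict[int, Set[int]], freq: List[int],
                 subset_size: int) -> Tuple[List[int], List[int]]:
    """Greedily build a subset whose classes bring `freq` closest to uniform."""
    freq = list(freq)
    chosen: List[int] = []
    for _ in range(min(subset_size, len(local_classes))):
        best, best_kl = None, math.inf
        for k, classes in local_classes.items():
            if k in chosen:
                continue
            trial = [f + (1 if c in classes else 0) for c, f in enumerate(freq)]
            kl = kl_to_uniform(trial)  # divergence if client k were added
            if kl < best_kl:
                best, best_kl = k, kl
        if best is None:
            break
        chosen.append(best)
        for c in local_classes[best]:
            freq[c] += 1  # admit the chosen client's classes to the global frequency
    return chosen, freq

clients = {0: {0}, 1: {0}, 2: {1, 2}}  # estimated classes per client
chosen, freq = pick_clients(clients, freq=[5, 0, 0], subset_size=2)
print(chosen, freq)  # [2, 0] [6, 1, 1]
```

With class 0 already over-represented, client 2, the only one holding classes 1 and 2, is picked first, matching the intended behavior for clients with rare labels.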

4.4. Clients' Coherence Measurement

Transferability of adversarial samples is not guaranteed by default for all federated clients, as it relies on the internal properties of the model and the data it was trained on. As presented in Section 4.2, examining the predictions of models that have only completed their first federated training round can help identify their local distribution, since this distribution is reflected in the predictions the models make on adversarial samples. Consequently, these predictions can identify which classes are not in the local distribution, locating nodes with rare data. However, this property can be used not only for label distribution estimation but also for assessing how close the updated clients' models are to each other. In the AdFL algorithm, this assessment is called the coherence score (CS) and is employed to find clients with a high ability to correctly predict adversarial samples as well as to produce samples that are correctly predicted by other models.

Thus, the CS consists of two parts, i.e., the model's ability to (1) produce samples transferable to other models and (2) predict adversarial samples from other models. These metrics are calculated in each training round, after the updated client models return to the server following local training. Each updated model generates $N$ adversarial samples and makes predictions for all adversarial samples generated by the other updated models returned by clients participating in the current training round.

After the predictions are made, the score calculation proceeds by computing the model's ability to predict adversarial images produced by other models, according to Equation 4. Each product in Equation 4 multiplies a binary indicator, which determines whether the prediction for the adversarial sample of a given class from a given model was accurate, by the class probability given by the model. The results obtained for the model predicting its own adversarial inputs are not included.

predictedothers

The same formula is used to assess the model's ability to produce transferable samples that are recognized by other models; in this case, the correct predictions are analyzed across the models that made predictions on the samples produced by the currently evaluated model.

The final CS is the sum of the two assessment results and is calculated as:

$$\text{coh.score} = \text{predicted\_others} + \text{was\_predicted} \qquad (5)$$
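The two parts of the score can be sketched as follows. Equation 4 did not survive extraction, so this minimal sketch assumes the form described in the text: an unnormalized sum, over the other models' adversarial samples, of a binary correctness indicator multiplied by the class probability, with a model's own samples excluded. The nested-list layout of `probs` and `labels` and the function names are hypothetical.

```python
from typing import List, Sequence

# probs[i][j] : class-probability vectors that model i outputs for the adversarial
#               samples generated by model j (entries with i == j are ignored)
# labels[j]   : the class each adversarial sample of model j was crafted for


def pair_score(prob_vectors: Sequence[Sequence[float]],
               sample_labels: Sequence[int]) -> float:
    """Sum over samples of 1[prediction is correct] * probability of that class."""
    total = 0.0
    for probs, label in zip(prob_vectors, sample_labels):
        predicted = max(range(len(probs)), key=probs.__getitem__)  # argmax
        if predicted == label:  # indicator is 1 only for correct predictions
            total += probs[label]
    return total


def coherence_scores(probs: Sequence[Sequence[Sequence[Sequence[float]]]],
                     labels: Sequence[Sequence[int]]) -> List[float]:
    n = len(probs)
    scores = []
    for i in range(n):
        # part (2): how well model i predicts the other models' adversarial samples
        predicted_others = sum(pair_score(probs[i][j], labels[j])
                               for j in range(n) if j != i)
        # part (1): how well the other models predict model i's adversarial samples
        was_predicted = sum(pair_score(probs[j][i], labels[i])
                            for j in range(n) if j != i)
        scores.append(predicted_others + was_predicted)  # Equation 5
    return scores
```

A model that neither recognizes the others' samples nor produces samples they recognize ends up with a score of zero, which is what lets the score single out incoherent clients.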

The resulting normalized coherence scores are used to favor models with good transferability and are employed as weights when averaging the updated models; therefore, they have a direct influence on the global model aggregation.
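How the scores enter aggregation can be sketched as a weighted average. The text says the scores are normalized but not how, so sum-to-one normalization (with a uniform fallback when all scores are zero) is an assumption here, as is representing each client model as a flat list of parameters; the function names are hypothetical.

```python
from typing import List, Sequence


def normalize(scores: Sequence[float]) -> List[float]:
    """Scale coherence scores to sum to 1; fall back to uniform weights if all are 0."""
    total = sum(scores)
    if total <= 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def weighted_average(updates: Sequence[Sequence[float]],
                     weights: Sequence[float]) -> List[float]:
    """Aggregate flattened client models as a weighted average of their parameters."""
    aggregated = [0.0] * len(updates[0])
    for update, w in zip(updates, weights):
        for k, value in enumerate(update):
            aggregated[k] += w * value
    return aggregated
```

With this choice, a client whose coherence score is zero contributes nothing to the global model, while clients with equal scores contribute equally.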

The AdFL algorithm can utilize coherence scores to identify clients that cannot reliably classify adversarial inputs or create such inputs. This property of the algorithm can be useful when dealing with data poisoning attacks that interfere with the client's local data during the training process. Moreover, in line with the literature overview, it provides a weighting scheme that can potentially assign more importance to benign clients than to malicious ones. This property of CSs therefore inspired the present research, which extends the application of the AdFL algorithm beyond non-IID label-skew scenarios.

5. Data Poisoning Attacks in FL

Data poisoning attacks can be classified into two categories based on the target of adversarial manipulation: clean-label attacks and dirty-label attacks [32]. The first type of attack does not change the labels of the data; instead, it injects changes into a subset of the training data [33] and does not require access to data labeling. The second type changes the labels of the samples inside the dataset according to the adversary's goal, leaving the data features unchanged [34].

As the non-IID scenarios considered in this work are represented by label skew, the natural type of attack to consider as its "companion" is a dirty-label label-flipping attack. That is, in addition to only a limited set of classes being present in the local data, the local data can further suffer from flipped labels.

In these terms, data poisoning attacks can be performed by federated clients. The attack can then be described from the perspective of the number of clients participating in it (whether there is only a limited number of adversaries or many), as well as from the way the source data labels are affected: either the adversaries do not have a specific strategy and the labels are flipped randomly [35], or they have a specific objective and flip labels according to some rule [36].

In the current research, two label-flipping strategies are studied: untargeted (random) label flipping and targeted label flipping, in which the labels of one class are consistently substituted by the label of another class. In both scenarios, adversaries have no way to see the benign clients' data distributions; however, in the targeted label-flipping scenarios, the malicious clients share a joint pair of source and target labels for the attack. This pair is known to all adversaries. A detailed description of the attack scenarios employed in this work is given in Section 6.4.

The random label-flipping attack primarily aims at the overall performance degradation of the global model, while targeted attacks have a target class whose performance they aim to damage. To assess whether the targeted attacks were successful, the Attack Success Rate (ASR) measure is employed (Equation 6) with respect to the label whose performance is targeted.

$$\mathrm{ASR} = \frac{\text{number of successful attacks}}{\text{total number of attacks}} \qquad (6)$$

It is also worth noting that, in the presence of a highly skewed data partition with equal class probabilities inside the local data, random label flipping results in a softer attack scenario. For example, with 2 classes present on a local node, around 50% of the labels inside every class remain correctly assigned, as the random assignment is not prohibited from picking the actual class.
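The "softer attack" effect is easy to check numerically. This sketch follows the untargeted attack described in Section 6.4 (each label is redrawn uniformly from the classes present in the client's local data); the function name `random_label_flip` is hypothetical. With k local classes, about 1/k of the labels stay correct.

```python
import random
from typing import List, Sequence


def random_label_flip(labels: Sequence[int], rng: random.Random) -> List[int]:
    """Untargeted attack: replace every label with one drawn uniformly from the
    classes present in this client's local data (the true class may be drawn)."""
    local_classes = sorted(set(labels))
    return [rng.choice(local_classes) for _ in labels]


labels = [0] * 5000 + [7] * 5000  # a client holding 2 classes
flipped = random_label_flip(labels, random.Random(0))
kept = sum(a == b for a, b in zip(labels, flipped)) / len(labels)
print(f"share of labels left unchanged: {kept:.3f}")  # close to 1/2 for 2 classes
```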

6.1. Datasets

For the experiments, three image datasets were used: MNIST [8], EMNIST [9], and CIFAR-10 [10]. The datasets represent tasks of varying difficulty for the algorithms and are commonly utilized as benchmark datasets in FL research. MNIST offers 10 classes of 28x28 grayscale images and is often used as a basic image classification task. EMNIST expands the task with handwritten letters, increasing the number of classes to 62, which adds complexity to label-skewed data and makes targeted label attacks harder to spot and counter. CIFAR-10 further escalates the challenge, introducing 10 classes of 32x32-pixel RGB images with more complex features.

6.2. Experimental Setup

The project was implemented in Python (version 3.7.9), using the PyTorch machine-learning framework (version 1.10.0 [37]). Datasets and custom model architectures were provided by the torchvision package (version 0.11.1 [38]). All experiments were run on GPU hardware, specifically NVIDIA GTX 1070 and NVIDIA A100.

6.3. Data Partitioning

Non-IID label skew was emulated on local devices according to the following procedure: (1) in the parameters of the experiment, the number of unique classes per client, k, and the total number of data samples per client, N, are defined and applied to all clients; (2) a random seed is set for the reproducibility of random operations; (3) the probability of each class appearing in a local dataset is defined by taking a sample from a normal distribution; (4) for each client, the set of classes in its local dataset is determined by drawing a sample of size k from the class probability distribution; (5) a unique subset of N data samples of the selected classes is assigned to the client, with each label having N/k samples in the local dataset.
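The five steps above can be sketched as one function. Several details are assumptions rather than the paper's exact procedure: in step (3), one |N(0, 1)| draw per class is normalized into probabilities; step (4) uses weighted sampling without replacement; and N/k uses integer division. The function name and arguments are hypothetical.

```python
import random
from typing import Dict, List, Sequence, Tuple


def label_skew_partition(labels: Sequence[int], num_clients: int,
                         classes_per_client: int, samples_per_client: int,
                         seed: int = 0) -> Tuple[List[float], Dict[int, List[int]]]:
    rng = random.Random(seed)  # step (2): reproducible random operations
    num_classes = max(labels) + 1
    # step (3), assumption: |N(0, 1)| draw per class, normalized to probabilities
    raw = [abs(rng.gauss(0.0, 1.0)) for _ in range(num_classes)]
    total = sum(raw)
    class_prob = [r / total for r in raw]
    # shuffled pools of sample indices per class, so every client gets unique samples
    pools = {c: [i for i, y in enumerate(labels) if y == c] for c in range(num_classes)}
    for pool in pools.values():
        rng.shuffle(pool)
    per_label = samples_per_client // classes_per_client  # N / k samples per label
    partition: Dict[int, List[int]] = {}
    for client in range(num_clients):
        # step (4): draw k distinct classes, weighted by class_prob
        remaining, weights, chosen = list(range(num_classes)), list(class_prob), []
        for _ in range(classes_per_client):
            pos = rng.choices(range(len(remaining)), weights=weights, k=1)[0]
            chosen.append(remaining.pop(pos))
            weights.pop(pos)
        # step (5): take N/k not-yet-assigned samples of each chosen class
        indices: List[int] = []
        for c in chosen:
            if len(pools[c]) < per_label:
                raise ValueError(f"not enough unused samples left for class {c}")
            indices.extend(pools[c][:per_label])
            del pools[c][:per_label]
        partition[client] = indices
    return class_prob, partition
```

Because the class probabilities are drawn once per experiment, some classes are picked by many clients and others by few or none, which is the global imbalance discussed below.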

As the number of unique label-skew distributions that can be generated with this approach is immense, and the results obtained across different distributions cannot simply be aggregated due to differences in the statistical properties of the datasets, the experiments were performed on a fixed data distribution. This helped prevent data-dependent features from interfering with the performance metrics and made it more reliable to attribute differences in model performance to the specific algorithms and data poisoning attacks used.

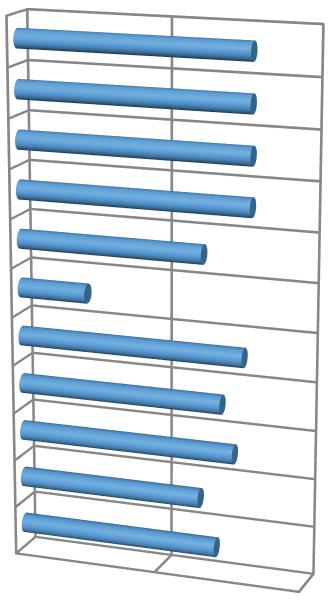

The probability of label occurrence used for the classification tasks with 10 classes (MNIST and CIFAR-10) is illustrated in Figure 1.

Because a normal distribution is used to create the label probability distribution for the whole experiment, some classes naturally appear more often in the local datasets than others. This additionally introduces global class imbalance into the FL pipeline. When the number of federated clients or the number of unique classes in the local datasets is low, some classes may not appear at all.

6.4. Assumptions and Data Poisoning Attack Model

In the considered attack scenarios, it is assumed that the server is fair and not compromised; only a set of malicious clients threatens the FL pipeline. Moreover, the attackers are present in the FL pipeline from the beginning and stay until the end of training: no attacker leaves or joins during the process. The design of the FL experiment is adapted from the non-IID scenario of the FoolsGold algorithm [18] and features 15 federated clients: 10 honest clients and 5 malicious clients. However, the data partition scenario was changed compared to the reference experiment, following the data partition strategy presented in the previous section. These changes modify the data distribution strategy and introduce label skew into the clients' local datasets, as is done in experiments designed for algorithms focused on mitigating the effects of non-IID data.

According to the scenarios presented in Section 5, three attacks were designed for the experiments: (1) an untargeted random flipping attack, (2) a targeted attack on a common label, and (3) a targeted attack on an uncommon label.

During the untargeted attack, every malicious client randomly assigns labels to its local data samples based on the set of available local labels. In the targeted attacks, the malicious clients jointly pick their target. First, the malicious clients reveal the set of labels present in their local data. Then, they estimate the label distribution based on the observed local distributions. The attack rule is defined as a pair of labels (source, target), where the source label is flipped to the target label; therefore, the performance on the source label is targeted. After the attack pair is set, every malicious client inspects its data and looks for the source label. If it is found, flipping is performed according to the attack rule. Moreover, the targeted attacks exploit the non-IID data properties defined in the previous section by targeting either common or uncommon labels based on the empirical label distribution collected by the malicious clients. Common labels are defined as labels whose probability of occurrence exceeds the 66% quantile of the probability vector, while uncommon labels are those below the 66% quantile.
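The attackers' side of the targeted scenarios can be sketched as below. The frequency of a label is taken as the share of attackers holding it; the quantile uses linear interpolation (NumPy's default rule); labels exactly at the threshold fall into neither group. These choices, the random pair selection in `choose_attack_pair`, and all function names are assumptions for illustration, since the text does not specify how the pair is picked.

```python
import random
from typing import List, Sequence, Set, Tuple


def quantile(values: Sequence[float], q: float) -> float:
    """Linear-interpolation quantile (the same rule as NumPy's default method)."""
    s = sorted(values)
    pos = q * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def split_common_uncommon(attacker_label_sets: Sequence[Set[int]], num_classes: int,
                          q: float = 0.66) -> Tuple[List[float], List[int], List[int]]:
    """Estimate label frequencies from the label sets revealed by the attackers and
    split classes at the q-quantile (labels exactly at the threshold fall in neither)."""
    counts = [0] * num_classes
    for label_set in attacker_label_sets:
        for c in label_set:
            counts[c] += 1
    freq = [n_c / len(attacker_label_sets) for n_c in counts]
    threshold = quantile(freq, q)
    common = [c for c in range(num_classes) if freq[c] > threshold]
    uncommon = [c for c in range(num_classes) if freq[c] < threshold]
    return freq, common, uncommon


def choose_attack_pair(candidates: Sequence[int], num_classes: int,
                       rng: random.Random) -> Tuple[int, int]:
    """Hypothetical rule: random source from the candidate group, random other target."""
    source = rng.choice(list(candidates))
    target = rng.choice([c for c in range(num_classes) if c != source])
    return source, target


def targeted_flip(labels: Sequence[int], source: int, target: int) -> List[int]:
    """Attack rule (source, target): every source label becomes the target label."""
    return [target if y == source else y for y in labels]
```

An attacker whose local data lacks the chosen source label flips nothing, which is why the number of active attackers shrinks in the targeted scenarios.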

In this way, targeted attack schemes naturally limit the number of active attackers, as they are based on the empirical label probability distribution estimated by the attackers before training starts. Because of the 66% quantile threshold, common labels are not guaranteed to be present in every attacker's local data, which makes some potentially malicious clients behave benignly during training while still contributing to the overall distribution estimation.

In the presented scenarios with 5 malicious federated clients, an untargeted attack results in all 5 clients being malicious during training, and a targeted attack on common labels results in around 3 clients performing the joint attack on a given label, while the attack on an uncommon label is carried out by one malicious client. This reflects the adaptation of the attacking party to the observed non-IID data distribution.

Table 1. Hyperparameters used in the experiments

| Parameter | MNIST | EMNIST | CIFAR-10 |
|---|---|---|---|
| Total labels | 10 | 62 | 10 |
| Total clients | 15 | 15 | 15 |

6.5. Models and Hyperparameters

Convolutional neural networks (CNNs) were chosen for the image classification tasks represented in the datasets. The basic LeNet-5 [39] architecture was adopted for both the MNIST and EMNIST tasks. For CIFAR-10, a more sophisticated architecture was chosen, namely MobileNetV2 [40]. The pre-trained version of the model was provided by the torchvision package, with weights obtained on the ImageNet [41] dataset.

Each experiment used the cross-entropy loss function and the Stochastic Gradient Descent optimizer. The full list of parameters for the experiments with respect to the datasets is given in Table 1.

For each algorithm, dataset, and attack type, the training was performed 5 times with different model initializations controlled by a set of seeds, and the mean results were used for further analysis.

6.6. Experimental Results and Analysis

To evaluate the AdFL algorithm's ability to identify malicious clients and mitigate their effect in the presence of non-IID data, two well-known algorithms were chosen as baselines. The first one is Multi-Krum [13], which uses the Euclidean distance metric to find a predefined number of closest models to be used for global model aggregation, rejecting the rest of the updates collected on the server during the FL training round. This approach favors model updates that are similar to each other and treats unusual updates as malicious. The second approach used for comparison is FoolsGold (the implementation for the experiments was based on the source code of the algorithm provided by the authors [18]). This approach employs a different strategy: it uses the cosine similarity measure to identify clients with similar gradient updates and assigns them smaller importance during the global model update. This algorithm serves as an example of a weighting approach that was initially evaluated on non-IID data. Moreover, it is an example of a defense that is not suited for untargeted attacks [42]. Therefore, the two selected baseline algorithms represent two different approaches to defending against label-flipping attacks.
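The FoolsGold weighting idea can be sketched in a simplified form. This sketch follows the steps described in the FoolsGold paper [18]: cosine similarity between each client's accumulated updates, "pardoning" of clients that are less similar to others, weight 1 − (maximum similarity), rescaling, and a logit with confidence `kappa`. It is not the authors' code: feature-importance weighting is omitted, and the edge-case handling (guarding the pardoning ratio, capping weights at 0.99 before the logit) and the function names are assumptions.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


def foolsgold_weights(histories: Sequence[Sequence[float]],
                      kappa: float = 1.0) -> List[float]:
    """histories[i]: client i's accumulated (summed) updates so far; needs >= 2 clients."""
    n = len(histories)
    cs = [[cosine(histories[i], histories[j]) for j in range(n)] for i in range(n)]
    max_cs = [max(cs[i][j] for j in range(n) if j != i) for i in range(n)]
    # pardoning: scale down similarity of i to j when j looks more sybil-like than i
    for i in range(n):
        for j in range(n):
            if i != j and 0 < max_cs[j] and max_cs[i] < max_cs[j]:
                cs[i][j] *= max_cs[i] / max_cs[j]
    # clients that closely resemble another client get weights near 0
    alpha = [min(1.0, max(0.0, 1.0 - max(cs[i][j] for j in range(n) if j != i)))
             for i in range(n)]
    top = max(alpha)
    if top > 0:
        alpha = [a / top for a in alpha]
    weights = []
    for a in alpha:
        a = min(a, 0.99)  # keep the logit finite
        if a <= 0:
            weights.append(0.0)
        else:
            w = kappa * (math.log(a / (1 - a)) + 0.5)
            weights.append(min(1.0, max(0.0, w)))
    return weights
```

Two near-identical clients are driven to weight 0 while a dissimilar client keeps weight 1. This also shows why a lone attacker with a unique update, as in the uncommon-label scenarios, can end up favored by this scheme.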

As the AdFL algorithm was not designed as a defense against data poisoning, and the current research aims at extending the usage of generated self-adversarial samples to the FL security domain, these baseline methods serve as representatives of algorithms designed to defend FL training rather than as competitors in terms of defense efficiency.

It is important to note that the Multi-Krum algorithm requires the server to know beforehand the number of potentially malicious clients taking part in each FL training round, as this parameter controls the number of updates to be eliminated from the aggregation process. This condition was fulfilled in the experiments: the Multi-Krum algorithm was given the actual number of attackers who performed label flipping on their local data. As this parameter is set for the whole learning process and does not adapt to the subset of clients picked for a given FL iteration, it was decided to eliminate the client-picking step from the experiments, so that all 15 clients participate in every training round. In this setting, the Multi-Krum algorithm always has a chance to eliminate all malicious clients, and for the weighting algorithms (FoolsGold and AdFL), the aggregation weights of each client can be tracked throughout the whole training process without interruption.

During all experiments, the aggregation weights of each client are tracked for each epoch as reported by the re-weighting algorithms (AdFL and FoolsGold), while for the Multi-Krum algorithm, the aggregation weights are assigned equally to the client updates chosen for aggregation; e.g., if m clients are chosen for aggregation, each of them receives an aggregation weight of 1/m.
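The Multi-Krum selection and its equal 1/m weights can be sketched as follows, following the description by Blanchard et al. [13]: each update is scored by the sum of squared Euclidean distances to its n − f − 2 nearest neighbours, and the m lowest-scoring updates are averaged. Defaulting m to n − f mirrors the setup above, where f is the known number of attackers; the flat-list representation of updates and the function name are assumptions.

```python
from typing import List, Optional, Sequence, Tuple


def multi_krum(updates: Sequence[Sequence[float]], f: int,
               m: Optional[int] = None) -> Tuple[List[int], List[float], List[float]]:
    """Return (selected client indices, per-client aggregation weights, aggregate)."""
    n = len(updates)
    if m is None:
        m = n - f  # eliminate as many updates as there are assumed attackers
    k = n - f - 2  # number of nearest neighbours used in each score
    if k < 1 or not 1 <= m <= n:
        raise ValueError("need n - f - 2 >= 1 and 1 <= m <= n")

    def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    scores = []
    for i in range(n):
        dists = sorted(sq_dist(updates[i], updates[j]) for j in range(n) if j != i)
        scores.append(sum(dists[:k]))
    selected = sorted(sorted(range(n), key=scores.__getitem__)[:m])
    weights = [1.0 / m if i in selected else 0.0 for i in range(n)]  # equal 1/m
    aggregate = [sum(w * u[d] for w, u in zip(weights, updates))
                 for d in range(len(updates[0]))]
    return selected, weights, aggregate
```

With four similar updates and one distant update and `f=1`, the distant update gets weight 0 and each of the other four gets 0.25.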

Each experiment was analyzed with respect to the mean accuracy reached by the algorithm in a given scenario and the mean aggregation weights that each algorithm gave to the malicious/benign clients. Mean values are calculated across all 5 repetitions performed for each unique combination of algorithm, dataset, and attack type.

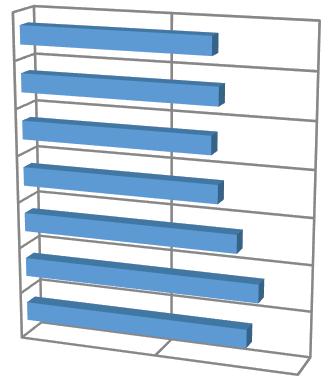

The first dataset analyzed was MNIST. The results of the untargeted attack for model accuracy and mean benign/malicious client aggregation weights are shown in Figure 2.

Figure 3. MNIST targeted attack on the common label

Figure 4. MNIST targeted attack on the uncommon label

Here, the AdFL algorithm scores first in accuracy, while the FoolsGold algorithm reaches a far lower accuracy (68% and 42.5% for the AdFL and FoolsGold algorithms, respectively). Among the presented algorithms, Multi-Krum gives the highest weight to benign clients; however, at the beginning of training, for the first 10 epochs, the weight of malicious clients was higher. The FoolsGold algorithm continuously favors malicious clients, while the AdFL algorithm manages to assign higher weights to benign clients.

The comparison of the three algorithms for the targeted attack on the common label on the MNIST dataset is presented in Figure 3.

Both the AdFL and FoolsGold algorithms manage to reach an accuracy of around 85%, while Multi-Krum scores significantly lower, despite properly favoring benign clients during model aggregation. Here, FoolsGold shows a notable change in the weighting dynamic: malicious clients first receive the highest weights and then, after epoch 53, switch places with benign clients.

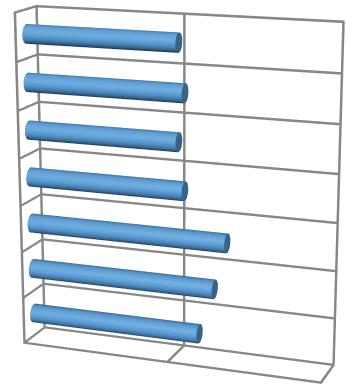

The comparison of the three algorithms for the targeted attack on the uncommon label on the MNIST dataset is presented in Figure 4.

The plot shows the AdFL algorithm reaching higher accuracy, and the weight of the only malicious client also differs from those of the benign clients, although the preference towards benign clients is smaller than that of the Multi-Krum algorithm. What is more, in the presented scenario, the mechanism by which the FoolsGold algorithm favors unique updates can be seen in action, as it assigns the highest weights to the malicious client.

Figure 5. EMNIST untargeted attack

Figure 6. EMNIST targeted attack on the common label

The comparison of the three algorithms for the untargeted attack on the EMNIST dataset is illustrated in Figure 5.

Both the Multi-Krum and AdFL algorithms successfully identify malicious clients, while the FoolsGold algorithm takes time to start correctly re-weighting clients, and its positive benign-client weighting dynamic vanishes as training progresses beyond epoch 100. Still, Multi-Krum scores higher in both accuracy and benign clients' weight, as it manages to filter out all malicious clients, while the AdFL algorithm only lowers their weights.

The targeted attack on the common label on the EMNIST dataset is presented in Figure 6.

The Multi-Krum algorithm manages to filter out some of the malicious clients but scores lower in accuracy, while the FoolsGold algorithm is not able to reliably identify the malicious clients. However, FoolsGold, together with the AdFL algorithm, reaches an accuracy of 60%, while the Multi-Krum algorithm reaches only 57.5%. The AdFL algorithm shows a slight preference for benign clients, with both malicious and benign client weights changing within a narrow range. Therefore, the mean and standard deviation of the (signed, not absolute) difference between the aggregation weights assigned to benign and malicious clients were computed; they are presented in Table 2.

The targeted attack on the uncommon label on the EMNIST dataset is presented in Figure 7.

Table 2. Mean and standard deviation of the difference between aggregation weights for the targeted attack on the common label for the EMNIST dataset

Figure 7. EMNIST targeted attack on the uncommon label

Table 3. Mean and standard deviation of the difference between aggregation weights for the targeted attack on the uncommon label for the EMNIST dataset

This scenario was the most difficult for all three algorithms to deal with. As the classification task in this case consists of 62 unique labels, and one of the rarest labels was poisoned by only one adversary, detecting the malicious client was not trivial. The Multi-Krum algorithm failed to filter out the malicious client, while the FoolsGold algorithm, as in the scenario with the targeted attack on the common label, fails to perform any re-weighting. As for the AdFL algorithm, fluctuations of the weights can be observed; however, the weights change within a small range (Table 3 states the mean and standard deviation of the difference between the aggregation weights of benign and malicious clients), and the benign clients are continuously favored only after epoch 70.

The comparison of the three algorithms for the untargeted attack on the CIFAR-10 dataset is presented in Figure 8.

In the observed scenario, the Multi-Krum algorithm manages to correctly identify the malicious clients and scores first in accuracy, while the FoolsGold and AdFL algorithms both show a similarly low accuracy of 32%, compared to 66% for the Multi-Krum algorithm. However, despite the lower accuracy, the AdFL algorithm still properly re-weights clients, favoring benign clients from the beginning of training, whereas the FoolsGold algorithm prefers malicious clients.

Figure 8. CIFAR-10 untargeted attack

Figure 9. CIFAR-10 targeted attack on the common label

Figure 10. CIFAR-10 targeted attack on the uncommon label

The comparison of the three algorithms for the targeted attack on the common label on the CIFAR-10 dataset is presented in Figure 9.

In the presented case, all three algorithms manage to correctly favor benign clients and reach accuracies of 62%, 59.5%, and 58% for the Multi-Krum, FoolsGold, and AdFL algorithms, respectively.

The comparison of the three algorithms for the targeted attack on the uncommon label on the CIFAR-10 dataset is presented in Figure 10.

Here, the Multi-Krum algorithm manages to filter out the malicious client in some experiments, while the AdFL algorithm only decreases the weight of the malicious client until epoch 90, and the FoolsGold algorithm continuously favors the malicious client.

Table 4. Mean ASR (%) at the final training epoch for a targeted attack on the common label

Table 5. Mean ASR (%) at the final training epoch for a targeted attack on the uncommon label

For the targeted attacks, the ASR of each algorithm was also tracked on the hold-out test dataset according to Equation 6. The mean ASRs on the common and uncommon labels at the end of training for the MNIST, EMNIST, and CIFAR-10 datasets are shown in Table 4 and Table 5, respectively.
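Equation 6 leaves the notion of a "successful attack" implicit. One common reading, assumed in this sketch, treats every hold-out test sample of the attacked (source) class as an attempt and counts it as a success when the global model predicts the attackers' target label. The function name is hypothetical.

```python
from typing import Sequence


def attack_success_rate(y_true: Sequence[int], y_pred: Sequence[int],
                        source: int, target: int) -> float:
    """Percentage of hold-out samples of the source class that the model assigns
    to the attacker's target class (assumed definition of a successful attack)."""
    attempts = [p for t, p in zip(y_true, y_pred) if t == source]
    if not attempts:
        return 0.0
    return 100.0 * sum(p == target for p in attempts) / len(attempts)
```

For example, if four test images of the source class are predicted as target, source, target, and some third class, the ASR is 50%.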

On the CIFAR-10 dataset, there is a notable difference in ASR between the Multi-Krum algorithm and the AdFL and FoolsGold algorithms. For targeted attacks on both common and uncommon labels, the Multi-Krum algorithm reaches a significantly lower ASR (under 10%), while the other two algorithms reach an ASR between 25% and 93%. For the EMNIST dataset, all three algorithms show a similar ASR regardless of the attack target. However, for the MNIST dataset, the targeted attack on the uncommon label shows an exceptionally high ASR for FoolsGold.

The evolution of the ASR over training for the attack on the common label is presented in Figure 11.

Here, the per-epoch curves give further detail on the differences in the range of ASR values. For the MNIST dataset, the end of training coincided with the lowest ASR, while for both the EMNIST and CIFAR-10 datasets, the end of training yielded a higher ASR, with the exception of the Multi-Krum algorithm on the CIFAR-10 dataset.

The changes in ASR during the scenarios with the targeted attack on the uncommon label are presented in Figure 12.

The FoolsGold algorithm's tendency to favor unique updates visibly impacts the ASR on all three datasets. Another finding is that, although the EMNIST dataset has a relatively low absolute ASR, the ASR grows as training progresses, showing that all three algorithms fail to defend the model from targeted attacks regardless of the target label.

Figure 11. ASR on the common label per algorithm per dataset

To sum up, the experiments show that label skew combined with different label-flipping attacks presents a challenging task for all three algorithms. However, the aggregation weights given by the AdFL algorithm to malicious and benign clients differ in all experiments conducted, with benign clients being favored by the algorithm. Still, the size of the difference between these weights varies with the dataset and attack type. Moreover, experiments on the MNIST and CIFAR-10 datasets show that the weights of malicious and benign clients reported by the AdFL algorithm tend to even out as training progresses, as more model aggregation happens and the accuracy of the global model increases.

This indicates that the synthetic samples generated by malicious and benign clients become similar and receive similar coherence scores. Another observation is that the ASRs of the algorithms differ depending on the dataset and attack type: Multi-Krum has the most stable mean ASR across all datasets and attack targets; both the AdFL and FoolsGold algorithms show high ASRs on the CIFAR-10 dataset; and EMNIST was the most challenging dataset to protect from the targeted attack, regardless of whether the target label was common or uncommon among federated clients. Still, the AdFL algorithm showed an ASR comparable to those of the selected defense algorithms, despite not being designed with protection from data poisoning attacks in mind.

Figure 12. ASR on the uncommon label per algorithm per dataset

Table 6. Wilcoxon signed-rank test p-values and significance

The significance of the difference in aggregation weights produced by the AdFL algorithm was additionally assessed with the Wilcoxon signed-rank test [43], based on the mean aggregation weights of malicious/benign clients inside each trial: for each repetition of an experiment, the mean aggregation weights of malicious and benign clients were calculated across all epochs and used as one pair for the Wilcoxon signed-rank test. Separate tests were performed for all trials combined, for each dataset (regardless of the attack type), and for each attack type (regardless of the dataset). The results are presented in Table 6.
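The paired test described above can be sketched with the standard library only; in practice, `scipy.stats.wilcoxon` is the usual routine. This sketch computes the exact two-sided p-value from the null distribution of the positive rank sum W+. For simplicity, it assumes no zero differences and no ties among the absolute differences. Each pair would be (mean benign weight, mean malicious weight) for one trial; the function name and example numbers are hypothetical.

```python
from typing import Sequence, Tuple


def wilcoxon_exact(x: Sequence[float], y: Sequence[float]) -> Tuple[int, float]:
    """Two-sided exact Wilcoxon signed-rank test for paired samples.
    Returns (W+, p-value). Assumes no zero differences and no ties in |x - y|."""
    diffs = [a - b for a, b in zip(x, y)]
    abs_diffs = [abs(d) for d in diffs]
    if 0 in abs_diffs or len(set(abs_diffs)) != len(abs_diffs):
        raise ValueError("zero differences or ties are not handled in this sketch")
    n = len(diffs)
    order = sorted(range(n), key=abs_diffs.__getitem__)
    ranks = [0] * n
    for rank, i in enumerate(order, start=1):
        ranks[i] = rank
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    # counts[s]: number of the 2**n sign patterns whose positive ranks sum to s
    max_sum = n * (n + 1) // 2
    counts = [1] + [0] * max_sum
    for r in range(1, n + 1):
        for s in range(max_sum, r - 1, -1):
            counts[s] += counts[s - r]
    total = 2 ** n
    p_low = sum(counts[: w_plus + 1]) / total
    p_high = sum(counts[w_plus:]) / total
    return w_plus, min(1.0, 2 * min(p_low, p_high))


benign = [0.10, 0.12, 0.15, 0.11, 0.14]     # hypothetical per-trial means
malicious = [0.09, 0.10, 0.12, 0.07, 0.09]
print(wilcoxon_exact(benign, malicious))    # all 5 differences positive
```

With n pairs, the smallest attainable two-sided p-value of the exact test is 2/2^n (0.0625 for 5 pairs). This is one reason to pool trials across attack types or datasets, as done for Table 6, before testing.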

The Wilcoxon signed-rank test revealed a significant difference across all considered combinations of datasets and attack types. However, the difference between the aggregation weights of malicious and benign clients for the EMNIST dataset and for targeted attacks on uncommon labels is less significant than in the other cases. This suggests that the AdFL algorithm has more difficulty operating under attacks aimed at uncommon labels and in classification tasks with a larger set of unique labels.

In this work, the applicability of synthetic adversarial samples was explored in the context of non-IID data and data poisoning attacks. Three types of attacks were performed on three benchmark image classification datasets, and the results were compared with respect to global model accuracy, the ability of the algorithms to distinguish malicious clients from benign ones, and the ASR of the targeted attacks.

The results revealed that utilizing adversarial data on the server side during FL training can successfully re-weight malicious clients and give them less importance during model aggregation for all untargeted and targeted attacks. However, the magnitude of the weight difference is not sufficient to fully mitigate the damage done by the malicious clients, in comparison with security methods specifically crafted to combat certain types of data poisoning attacks. Still, as the AdFL algorithm showed the ability to favor benign clients over malicious ones in the experiments conducted, future research can further improve the results by designing a more powerful weighting scheme that gives the AdFL coherence measurement step a greater influence on model aggregation, and by verifying the AdFL algorithm's performance in more populated FL scenarios that include client picking and more diverse data distributions.

AUTHOR

Anastasiya Danilenka∗ – Faculty of Mathematics and Information Science, Warsaw University of Technology, Koszykowa 75, 00-662 Warsaw, Poland, e-mail: anastasiya.danilenka.dokt@pw.edu.pl, www: orcid.org/0000-0002-3080-0303.

∗Corresponding author

This work was supported by the Centre for Priority Research Area Artificial Intelligence and Robotics of Warsaw University of Technology within the Excellence Initiative: Research University (IDUB) programme, and by the Laboratory of Bioinformatics and Computational Genomics and the High-Performance Computing Center of the Faculty of Mathematics and Information Science at Warsaw University of Technology.

[1] H. B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. y Arcas, “Communication-efficient learning of deep networks from decentralized data”, 2017.

[2] X. Li, K. Huang, W. Yang, S. Wang, and Z. Zhang, “On the convergence of FedAvg on non-IID data”, 2020.

[3] T.-M. H. Hsu, H. Qi, and M. Brown, “Measuring the effects of non-identical data distribution for federated visual classification”, 2019.

[4] X. Ma, J. Zhu, Z. Lin, S. Chen, and Y. Qin, “A state-of-the-art survey on solving non-IID data in federated learning”, Future Generation Computer Systems, vol. 135, 2022, 244–258, https://doi.org/10.1016/j.future.2022.05.003.

[5] R. Gosselin, L. Vieu, F. Loukil, and A. Benoit, “Privacy and security in federated learning: A survey”, Applied Sciences, vol. 12, no. 19, 2022.

[6] P. Erbil and M. E. Gursoy, “Detection and mitigation of targeted data poisoning attacks in federated learning”. In: 2022 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), 2022, 1–8, 10.1109/DASC/PiCom/CBDCom/Cy55231.2022.9927914.

[7] A. Danilenka, “Mitigating the effects of non-IID data in federated learning with a self-adversarial balancing method”. In: 2023 18th Conference on Computer Science and Intelligence Systems (FedCSIS), 2023, 925–930.

[8] Y. LeCun and C. Cortes, “MNIST handwritten digit database”, 2010.

[9] G. Cohen, S. Afshar, J. Tapson, and A. van Schaik, “EMNIST: an extension of MNIST to handwritten letters”, 2017.

[10] A. Krizhevsky, “Learning multiple layers of features from tiny images”, 2009.

[11] Z. Zhang, X. Cao, J. Jia, and N. Z. Gong, “FLDetector: Defending federated learning against model poisoning attacks via detecting malicious clients”. In: Proceedings of the 28th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 2022, 2545–2555, 10.1145/3534678.3539231.

[12] D. Li, W. E. Wong, W. Wang, Y. Yao, and M. Chau, “Detection and mitigation of label-flipping attacks in federated learning systems with KPCA and K-means”. In: 2021 8th International Conference on Dependable Systems and Their Applications (DSA), 2021, 551–559, 10.1109/DSA52907.2021.00081.

[13] P. Blanchard, E. M. El Mhamdi, R. Guerraoui, and J. Stainer, “Machine learning with adversaries: Byzantine tolerant gradient descent”. In: I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, eds., Advances in Neural Information Processing Systems, vol. 30, 2017.

[14] D. Cao, S. Chang, Z. Lin, G. Liu, and D. Sun, “Understanding distributed poisoning attack in federated learning”. In: 2019 IEEE 25th International Conference on Parallel and Distributed Systems (ICPADS), 2019, 233–239, 10.1109/ICPADS47876.2019.00042.

[15] X. Cao, M. Fang, J. Liu, and N. Z. Gong, “FLTrust: Byzantine-robust federated learning via trust bootstrapping”, 2022.

[16] D. Yin, Y. Chen, K. Ramchandran, and P. Bartlett, “Byzantine-robust distributed learning: Towards optimal statistical rates”, 2021.

[17] C. Xie, O. Koyejo, and I. Gupta, “Generalized Byzantine-tolerant SGD”, 2018.

[18] C. Fung, C. J. M. Yoon, and I. Beschastnikh, “The limitations of federated learning in sybil settings”. In: 23rd International Symposium on Research in Attacks, Intrusions and Defenses (RAID 2020), San Sebastian, 2020, 301–316.

[19] Y. Xie, W. Zhang, R. Pi, F. Wu, Q. Chen, X. Xie, and S. Kim, “Robust federated learning against both data heterogeneity and poisoning attack via aggregation optimization”, 2022.

[20] S. Han, S. Park, F. Wu, S. Kim, B. Zhu, X. Xie, and M. Cha, “Towards attack-tolerant federated learning via critical parameter analysis”. In: Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 2023, 4999–5008.

[21] S. Park, S. Han, F. Wu, S. Kim, B. Zhu, X. Xie, and M. Cha, “FedDefender: Client-side attack-tolerant federated learning”. In: Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, New York, NY, USA, 2023, 1850–1861, 10.1145/3580305.3599346.

[22] C. Chen, Y. Liu, X. Ma, and L. Lyu, “CalFAT: Calibrated federated adversarial training with label skewness”, 2023.

[23] G. Zizzo, A. Rawat, M. Sinn, and B. Buesser, “FAT: Federated adversarial training”, 2020.

[24] Z. Li, J. Shao, Y. Mao, J. H. Wang, and J. Zhang, “Federated learning with GAN-based data synthesis for non-IID clients”, 2022.

[25] Y. Lu, P. Qian, G. Huang, and H. Wang, “Personalized federated learning on long-tailed data via adversarial feature augmentation”, 2023.

[26] X. Li, Z. Song, and J. Yang, “Federated adversarial learning: A framework with convergence analysis”, 2022.

[27] C. Szegedy, W. Zaremba, I. Sutskever, J. Bruna, D. Erhan, I. Goodfellow, and R. Fergus, “Intriguing properties of neural networks”, 2014.

[28] O. Suciu, R. Marginean, Y. Kaya, H. D. III, and T. Dumitras, “When does machine learning FAIL? Generalized transferability for evasion and poisoning attacks”. In: 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, 2018, 1299–1316.

[29] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples”, 2015.

[30] A. Kurakin, I. Goodfellow, and S. Bengio, “Adversarial examples in the physical world”, 2017.

[31] Y. Dong, F. Liao, T. Pang, H. Su, J. Zhu, X. Hu, and J. Li, “Boosting adversarial attacks with momentum”, 2018.

[32] G. Xia, J. Chen, C. Yu, and J. Ma, “Poisoning attacks in federated learning: A survey”, IEEE Access, vol. 11, 2023, 10708–10722, 10.1109/ACCESS.2023.3238823.

[33] A. Shafahi, W. R. Huang, M. Najibi, O. Suciu, C. Studer, T. Dumitras, and T. Goldstein, “Poison frogs! Targeted clean-label poisoning attacks on neural networks”. In: Neural Information Processing Systems, 2018.

[34] V. Shejwalkar, A. Houmansadr, P. Kairouz, and D. Ramage, “Back to the drawing board: A critical evaluation of poisoning attacks on federated learning”, ArXiv, vol. abs/2108.10241, 2021.

[35] H. Xiao, H. Xiao, and C. Eckert, “Adversarial label flips attack on support vector machines”. In: Proceedings of the 20th European Conference on Artificial Intelligence, NLD, 2012, 870–875.

[36] V. Tolpegin, S. Truex, M. E. Gursoy, and L. Liu, “Data poisoning attacks against federated learning systems”, 2020.

[37] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala, “PyTorch: An imperative style, high-performance deep learning library”. In: Advances in Neural Information Processing Systems 32, 8024–8035. Curran Associates, Inc., 2019.

[38] S. Marcel and Y. Rodriguez, “Torchvision the machine-vision package of torch”. In: Proceedings of the 18th ACM International Conference on Multimedia, New York, NY, USA, 2010, 1485–1488, 10.1145/1873951.1874254.

[39] Y. Lecun, L. Bottou, Y. Bengio, and P. Haffner, “Gradient-based learning applied to document recognition”, Proceedings of the IEEE, vol. 86, no. 11, 1998, 2278–2324, 10.1109/5.726791.

[40] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, and L.-C. Chen, “MobileNetV2: Inverted residuals and linear bottlenecks”, 2019.

[41] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database”. In: 2009 IEEE Conference on Computer Vision and Pattern Recognition, 2009, 248–255.

[42] L. Lyu, H. Yu, X. Ma, C. Chen, L. Sun, J. Zhao, Q. Yang, and P. S. Yu, “Privacy and robustness in federated learning: Attacks and defenses”, IEEE Transactions on Neural Networks and Learning Systems, 2022, 1–21, 10.1109/TNNLS.2022.3216981.

[43] F. Wilcoxon, Individual comparisons by ranking methods, 196–202. Springer, 1992.

Submitted: 27th December 2023; accepted: 11th March 2024

Karolina Bogacka, Anastasiya Danilenka, Katarzyna Wasielewska-Michniewska

DOI: 10.14313/JAMRIS/3-2024/18

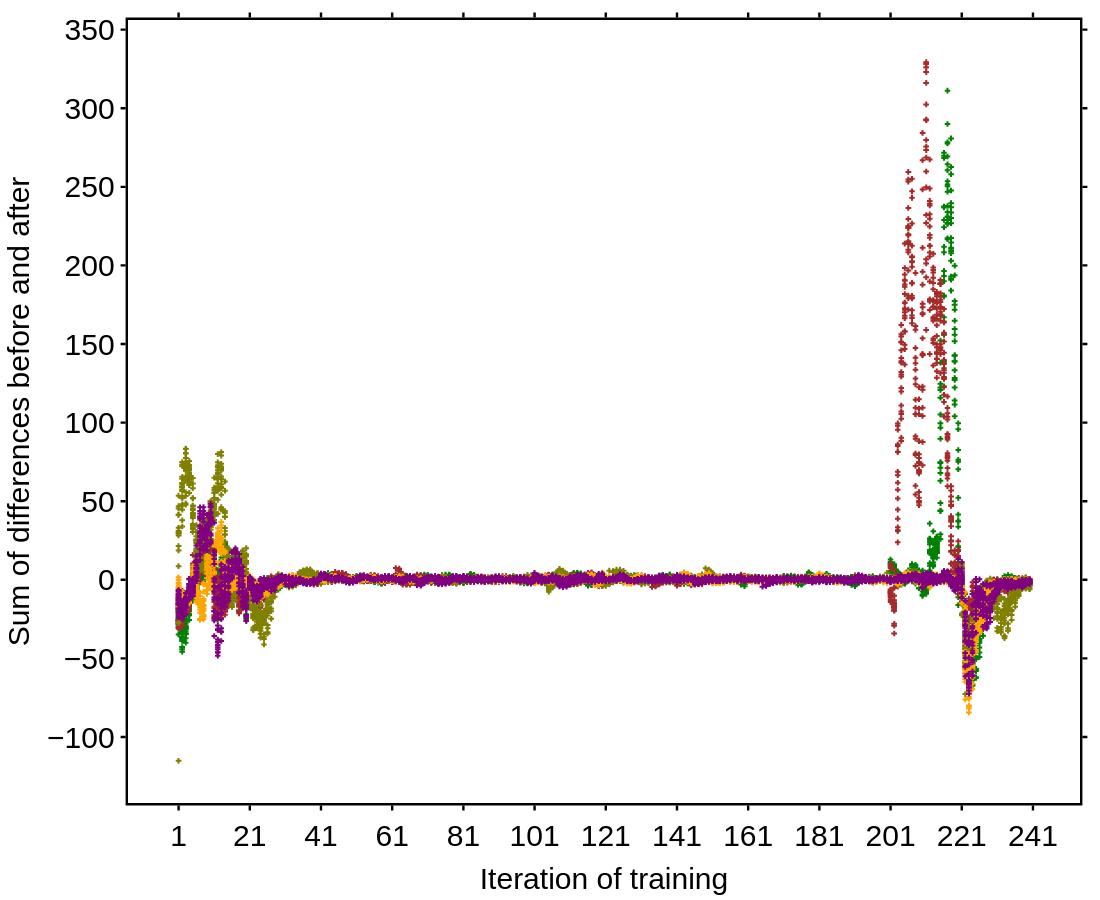

Abstract:

As the computational and communicational capabilities of edge and IoT devices grow, so do the opportunities for novel machine learning (ML) solutions. This leads to an increase in the popularity of Federated Learning (FL), especially in cross-device settings. However, while there is a multitude of ongoing research works analyzing various aspects of the FL process, most of them do not focus on issues concerning operationalization and monitoring. For instance, there is a noticeable lack of research on effective problem diagnosis in FL systems. This work begins with a case study in which we intended to compare the performance of four selected approaches to the topology of FL systems. For this purpose, we constructed and executed simulations of their training process in a controlled environment. We analyzed the obtained results and encountered concerning periodic drops in accuracy for some of the scenarios. A reexamination of the experiments led us to diagnose the problem as being caused by exploding gradients. In view of those findings, we formulated a potential new method for the continuous monitoring of the FL training process. The method would hinge on the regular local computation of a handpicked metric: the gradient scale coefficient (GSC). We then extend our prior research to include a preliminary analysis of the effectiveness of GSC and average gradients per layer as potentially suitable metrics for FL diagnostics. In order to perform a more thorough examination of their usefulness in different FL scenarios, we simulate the occurrence of the exploding gradient problem and the vanishing gradient problem, with a stable gradient serving as a baseline. We then evaluate the resulting visualizations based on their clarity and computational requirements. Based on our results, we introduce a gradient monitoring suite for the FL training process.

Keywords: federated learning, exploding gradient problem, vanishing gradient problem, monitoring

Federated Learning (FL, [17, 25]), as a Distributed Machine Learning (DML) paradigm, prioritizes maintaining the privacy of the devices (called clients). It aims to do so by leveraging the computing and communication capabilities of the clients. A standard FL training process begins with the server initializing a machine learning (ML) model and subsequently communicating its weights to the clients.

The clients then use them to conduct local training and return their results to the server, where they are aggregated into a new global model. The whole process repeats multiple times until the stopping criteria are met.
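The training loop described above can be illustrated with a minimal, dependency-free sketch. It assumes a toy two-parameter linear model, full-batch gradient descent as the local training step, sample-weighted averaging of the returned weights (in the style of Federated Averaging [25]) as the aggregation rule, and a fixed number of rounds as the stopping criterion. All function names are hypothetical and are not taken from any FL framework.

```python
from typing import List, Tuple

Weights = List[float]
Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_training(weights: Weights, data: Dataset, lr: float = 0.3, epochs: int = 5) -> Weights:
    """Client side (assumed): full-batch gradient descent on the toy model y = w0 + w1 * x."""
    w0, w1 = weights
    for _ in range(epochs):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y
            g0 += 2 * err / len(data)  # d(mean squared error)/d(w0)
            g1 += 2 * err * x / len(data)  # d(mean squared error)/d(w1)
        w0 -= lr * g0
        w1 -= lr * g1
    return [w0, w1]


def aggregate(results: List[Tuple[Weights, int]]) -> Weights:
    """Server side: average client weights, weighting each client by its sample count."""
    total = sum(n for _, n in results)
    dim = len(results[0][0])
    return [sum(w[i] * n for w, n in results) / total for i in range(dim)]


def federated_training(clients: List[Dataset], rounds: int = 50) -> Weights:
    """Hypothetical driver: initialize, broadcast, train locally, aggregate, repeat."""
    global_weights = [0.0, 0.0]  # the server initializes the model
    for _ in range(rounds):  # assumed stopping criterion: a fixed round budget
        results = [(local_training(global_weights, data), len(data)) for data in clients]
        global_weights = aggregate(results)
    return global_weights
```

In this toy setup every client's data lies on the same line, so the global model approaches the shared optimum; with heterogeneous client data the averaged model would instead be a compromise between the clients' local optima.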

As of now, most of the ML models used for FL training are first designed in a centralized setting, with the developer having unrestricted access to a representative sample of the global dataset. Because of that, developers are able to employ a variety of preexisting techniques and tools to make sure that the initial model architecture has been optimally selected. Many of the data preprocessing steps and hyperparameters developed in that initial phase form a base for later FL training. Unfortunately, this workflow can only be utilized for use cases where a representative global dataset can be constructed, excluding settings that demand additional privacy or simply have largely distributed, heavily localized and client-specific data. In that case, the FL model development phase has to be conducted in a distributed environment over multiple runs, potentially making it much slower. Distributed environments also introduce other unexpected hazards, such as sudden client dropout and differing client data distributions, which make the diagnosis of problems such as vanishing or exploding gradients significantly more difficult. This necessitates the development of effective tools for FL system diagnosis, for example through the continuous monitoring of selected metrics. As this problem affects the development and maintenance of FL systems, it can be understood as belonging to the domain of Federated Learning Operations (FLOps) [4], which aims to improve the FL lifecycle as a whole.

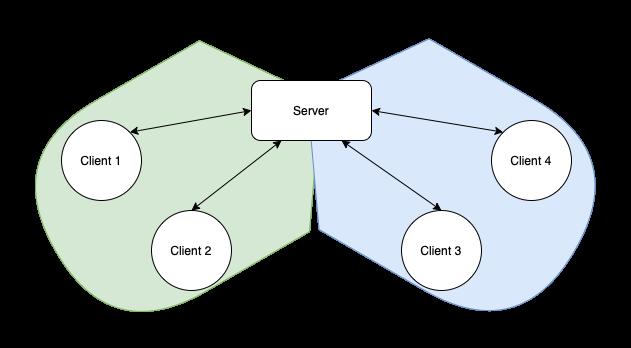

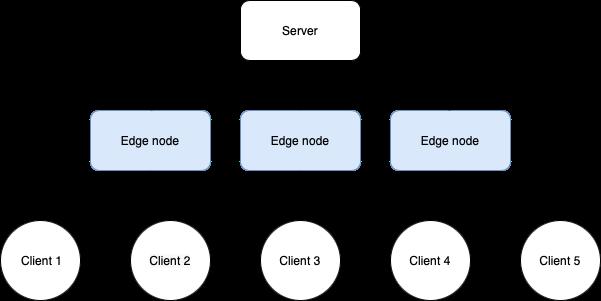

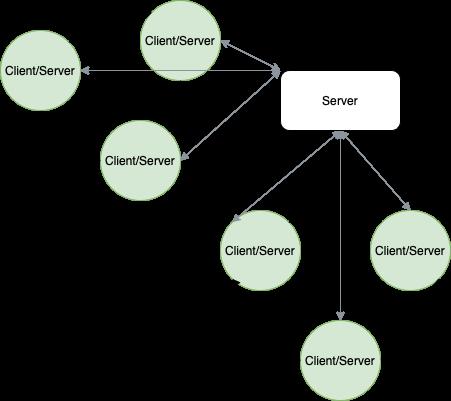

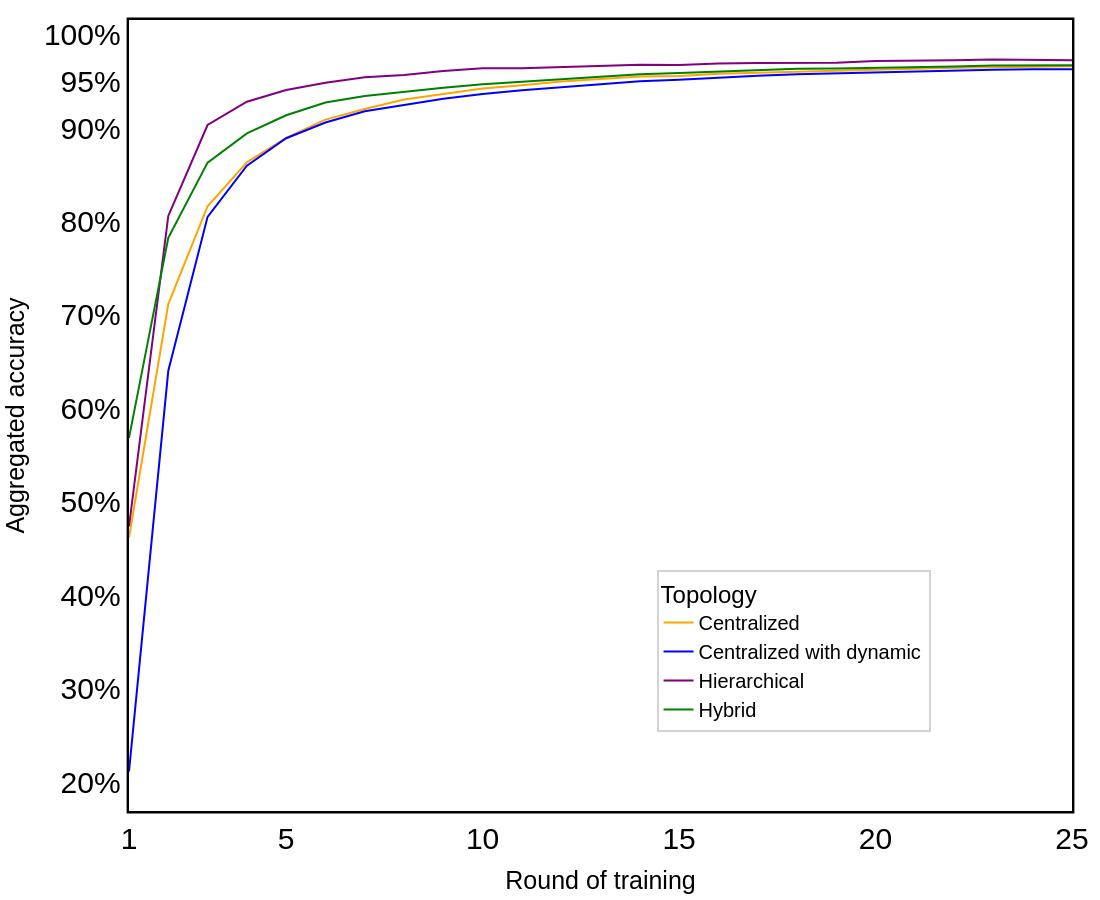

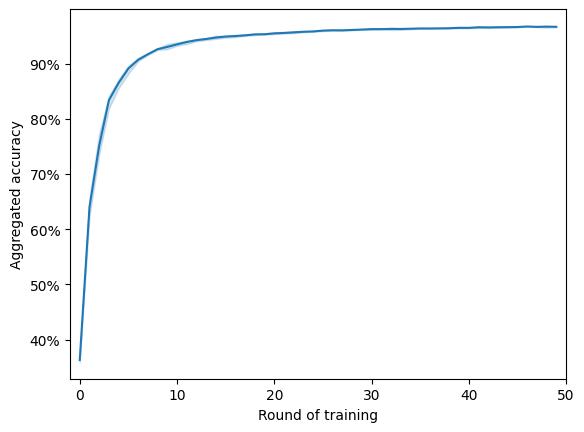

We have confronted the aforementioned issues during our work on the Assist-IoT project¹. We have conducted trials to determine the most suitable FL topology to implement in the Assist-IoT project pilots centered around (1) construction workers' health and safety assurance and (2) vehicle exterior condition inspection [6]. More specifically, it was important to provide a lightweight and scalable system for fall detection of construction workers in pilot 1 and automatic vehicle detection in pilot 2. In order to ascertain the best FL topology for the pilots, we have conducted a preliminary analysis of the issue [1] and selected four especially "promising" approaches: the centralized, centralized with dynamic clusters, hierarchical, and hybrid architectures.

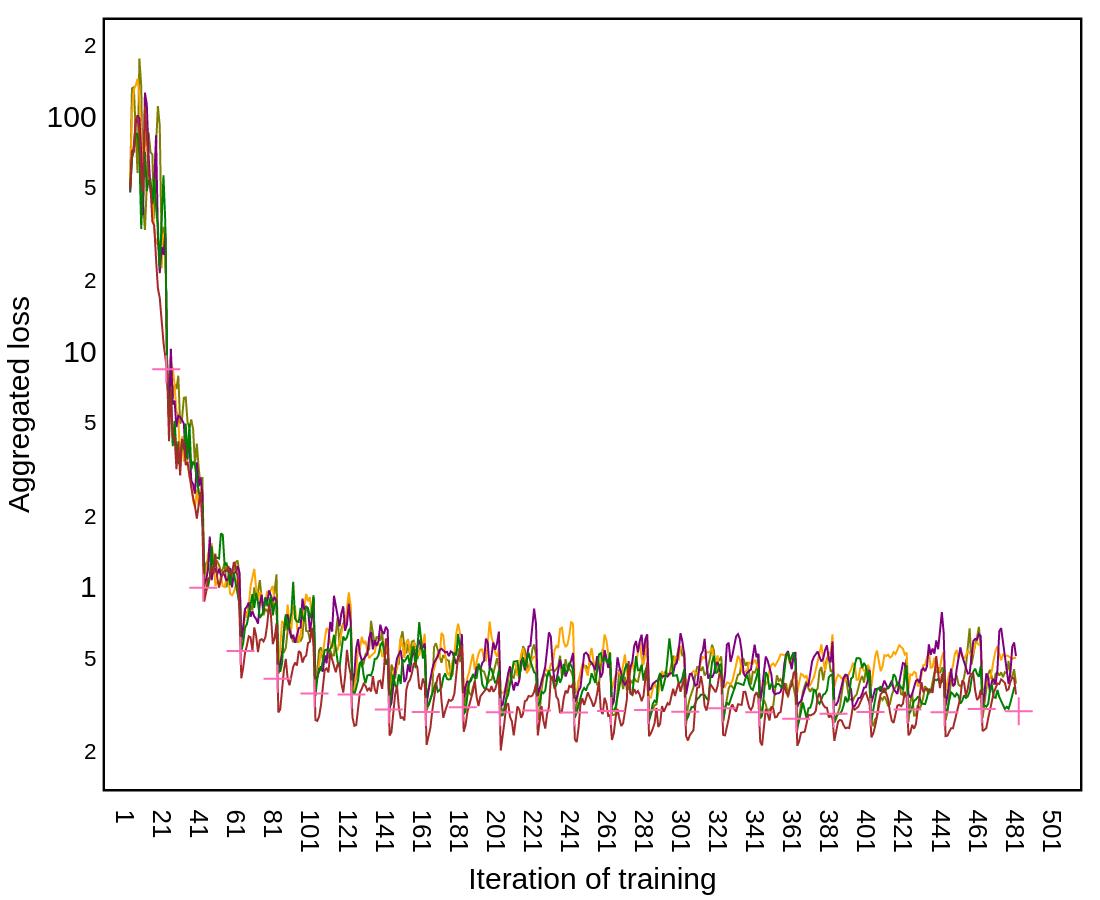

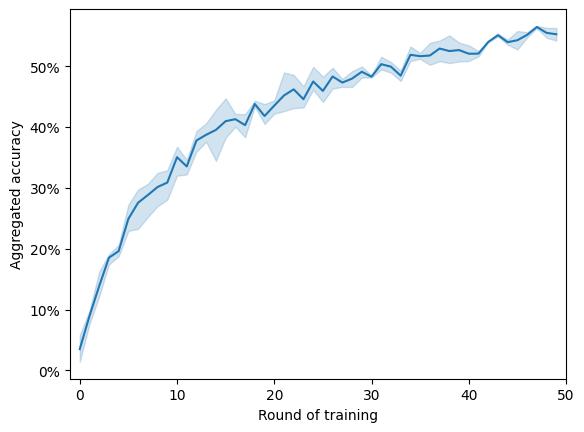

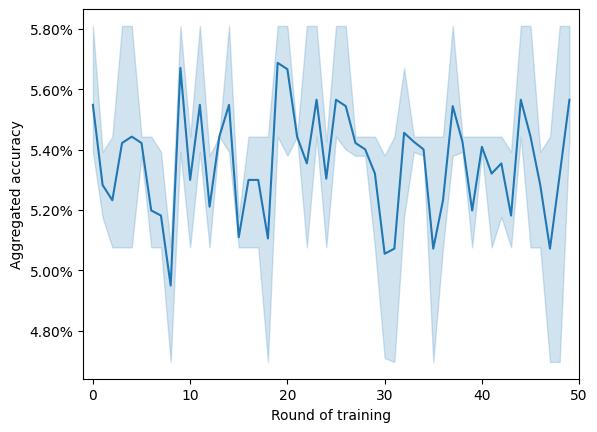

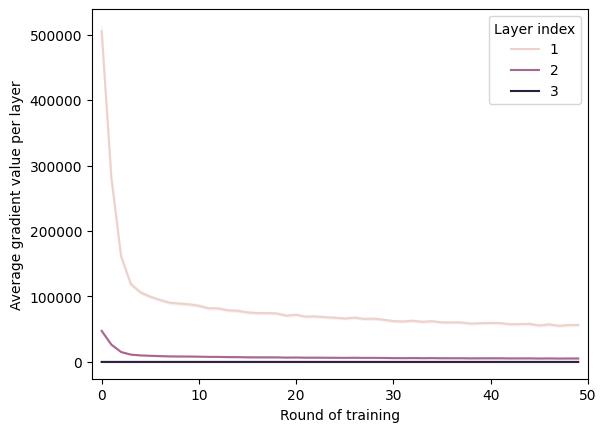

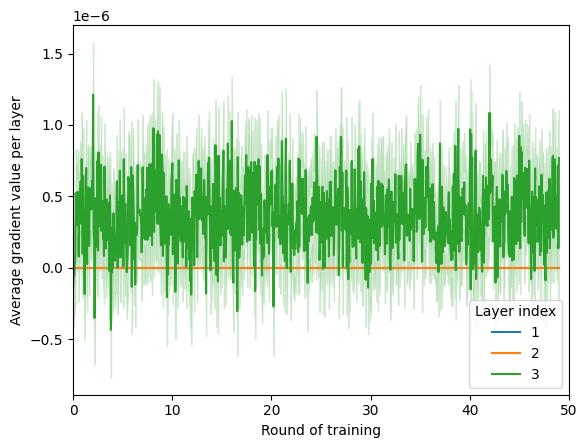

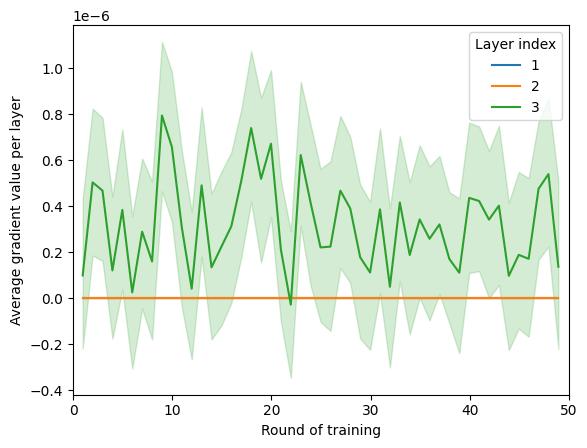

Our initial simulations, however, revealed some of those approaches (hierarchical and hybrid) to be especially sensitive to the exploding gradient problem, which in their case presents itself as periodic drops in accuracy. The exploding gradient problem is defined here as a situation in which the gradients backpropagated during neural network training grow exponentially. This causes the training process to stall, with the resulting model deteriorating in some cases [31]. We have applied modifications to the experiment design in order to mitigate this problem. We have then described the whole process as a case study.

This article is an extension of the research presented in the conference paper [3]. We expand the theoretical part of this work, which now includes more information about the current state of FLOps, with special attention given to diagnostic tools. The descriptions of both the exploding and vanishing gradient problems are broadened as well, covering both their common causes and mitigation techniques. A gradient monitoring metric suite is proposed, designed by combining a modified version of the previously proposed Gradient Scale Coefficient (GSC) with the newly added average gradient per layer. The efficacy of the suite is tested in three simulated scenarios (vanishing gradient, exploding gradient, and baseline) for two selected topologies (centralized and hierarchical). The results are analyzed, investigating both the clarity of the visualizations produced by the suite and its necessary communication cost.
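The modified GSC is not specified in this part of the text, so the sketch below covers only the simpler of the two metrics. It assumes that the average gradient per layer is computed on each client as the mean absolute gradient value of every layer, and that the server averages these values per layer across clients. The layer names, the dictionary-based report format, and both function names are hypothetical.

```python
from typing import Dict, List, Sequence

LayerGrads = Dict[str, Sequence[float]]  # layer name -> flattened gradient values (assumed format)


def average_gradient_per_layer(grads: LayerGrads) -> Dict[str, float]:
    """Client side (assumed definition): mean absolute gradient value of each layer."""
    return {name: sum(abs(g) for g in values) / len(values) for name, values in grads.items() if values}


def aggregate_reports(reports: List[Dict[str, float]]) -> Dict[str, float]:
    """Server side: average each layer's statistic over all clients that reported it."""
    totals: Dict[str, float] = {}
    counts: Dict[str, int] = {}
    for report in reports:
        for name, value in report.items():
            totals[name] = totals.get(name, 0.0) + value
            counts[name] = counts.get(name, 0) + 1
    return {name: totals[name] / counts[name] for name in totals}
```

A design consequence worth noting: each client sends one number per layer per round instead of its full gradient, which keeps the additional communication cost of monitoring proportional to the number of layers rather than the number of parameters.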

2.1. Federated Learning Operations

Federated Learning Operations (abbreviated as FLOps) is a cross-discipline software development methodology. It aims to improve the efficiency and quality of the development, deployment, and maintenance processes of FL systems [4]. As such, FLOps extends the principles devised for the purposes of the MLOps and DevOps methodologies, such as continuous integration, deployment automation and model monitoring [18], to FL environments.

It is worth mentioning that the definition of FLOps formulated in [4] refers only to cross-silo environments. However, no clear reasons are given for why it could not be extended to cross-device settings. On the contrary, there are many examples of cross-device business use cases, such as Gboard [37]. Although the particular activities composing the FLOps lifecycle in the cross-device scenario may change, involving fewer negotiations between business entities and data interface formulations than in cross-silo environments, the scenario still poses a significant challenge in terms of automation and operationalization. This work will focus on diagnosing problems caused by gradient instability in cross-device FL systems. As effective solutions for FL diagnostics influence the efficiency and quality of FL development, this work can therefore be considered as contributing to the research on FLOps.

Figure 1. A simplified diagram of the FLOps process flows from [4]

The interaction between the various FLOps flows is visualized in Figure 1. FD stands for Federated Design, which encompasses the processes of data analysis and model design. FL marks the flow of FL training, and OPS indicates the maintenance and monitoring of FL solutions deployed in production. Even though a given FL development process always begins with the FD phase, the other phases can flexibly flow into each other based on the results achieved at a given stage. For example, a model that does not perform well may indicate the necessity of a return to FD, and insufficient performance metrics achieved during OPS may cause FL to restart.
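The transitions named above can be encoded as a small state machine. This is a deliberately simplified reading of Figure 1, assuming that each phase ends with a single pass/fail outcome; the phase names mirror the abbreviations from the text, while `next_phase` is a hypothetical helper and not part of [4].

```python
from enum import Enum


class Phase(Enum):
    FD = "Federated Design"  # data analysis and model design
    FL = "FL training"
    OPS = "Operations"  # maintenance and monitoring in production


def next_phase(current: Phase, results_ok: bool) -> Phase:
    """Hypothetical transition rule: choose the next FLOps phase from one pass/fail outcome."""
    if current is Phase.FD:
        return Phase.FL  # a finished design is handed over to training
    if current is Phase.FL:
        # a model that performs well is deployed; one that does not sends work back to FD
        return Phase.OPS if results_ok else Phase.FD
    # in production: keep operating, or restart FL if performance metrics are insufficient
    return Phase.OPS if results_ok else Phase.FL
```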

Our research can be placed at the intersection of FD and FL, enabling an earlier transition from the latter to the former. It can therefore be understood as a means of optimizing the whole workflow in a holistic manner. Moving beyond the idea of optimizing a single training process, effective FL diagnostic tools can shorten the whole federated development process.

2.2. The State of FL Diagnostic Tools

As of now, research on FL system diagnosis often centers around monitoring the clients in a secure and private manner in order to effectively distinguish those marked by exceptionally bad performance [21][24][26]. As interesting as the solutions presented in the aforementioned works are, they may not be sufficient to identify problems caused by a bad choice of hyperparameters or model architecture. FedDebug [11] offers the most comprehensive approach of all of those monitoring frameworks, enabling the developer to use metrics gathered throughout the training to replay previous rounds or set breakpoints. This is an effective solution to the problem of recognizing faulty clients.
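A simple, non-private way to single out clients with exceptionally bad performance is a robust outlier rule over their reported losses. The sketch below uses the modified z-score based on the median absolute deviation (MAD) with the commonly used cutoff of 3.5. It is an illustrative stand-in, not the secure monitoring schemes of [21][24][26] or the FedDebug [11] mechanism, and the per-client loss report it takes as input is an assumption.

```python
from statistics import median
from typing import Dict, List


def flag_outlier_clients(losses: Dict[str, float], threshold: float = 3.5) -> List[str]:
    """Return the ids of clients whose loss lies far above the federation's median.

    Modified z-score: 0.6745 * (loss - median) / MAD. Only unusually high losses
    are flagged, since the goal is to spot badly performing clients.
    """
    values = list(losses.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread in the losses, so this rule cannot single anyone out
    return sorted(cid for cid, loss in losses.items() if 0.6745 * (loss - med) / mad > threshold)
```

As the text notes, such a rule can only point at individual clients; a poorly chosen architecture or hyperparameter that degrades all clients equally leaves the median itself high and produces no outliers.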

However, some works involving other aspects of diagnosing FL systems can also be found. [20] provides a worthwhile contribution to the problem of FL model debugging by delineating how the integration of interpretable methods into FL systems may result in a potential solution, making it a very promising research direction. [7], on the other hand, concentrates on the software errors frequently encountered by the users of selected FL frameworks. Finally, Fed-DNN-Debugger [8] aspires to mitigate some of the problems affecting FL models (biased data, noisy data, or insufficient training) by influencing their local computation. Structure bugs, i.e., defects in the model architecture itself, are beyond the scope of this solution. Fed-DNN-Debugger contains two modules, with the first one providing non-intrusive metadata capture (NIMC) and generating data that is then used for automated neural network model debugging (ANNMD).

2.3. The Exploding Gradient Problem

The exploding gradient problem is caused by a situation in which the instability of gradient values backpropagated through a neural network causes them to grow exponentially, an effect that has an especially significant influence on the innermost layers [31]. This problem tends to occur more often the deeper a given ML architecture is, forming an obstacle to the construction of larger networks. Additionally, the exploding gradient problem may in some cases be caused by poorly chosen weight values, which can be mitigated with normalized initialization [12]. As for activation functions, the problem may be avoided by using a modified Leaky ReLU function instead of the classic ReLU function. The reason for this behaviour lies in the addition of a leaky parameter, which causes the gradient to be non-zero even when the unit is not active due to saturation [27]. Another approach to stabilizing neural network gradients (for the exploding as well as the vanishing gradient problem) involves gradient clipping. The original algorithm behind gradient clipping simply rescales the gradient whenever its norm exceeds a set threshold, which is both very effective and computationally efficient [29].
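The rescaling rule from [29] and the exponential growth it guards against can both be shown in a short sketch. The growth part assumes a toy chain of scalar linear layers with identical weights and no activations, which is a strong simplification. `clip_by_norm` rescales the whole gradient vector to the threshold norm when its L2 norm exceeds the threshold, keeping its direction; both helper names are hypothetical.

```python
import math
from typing import List


def clip_by_norm(grad: List[float], threshold: float) -> List[float]:
    """Rescale grad so that its L2 norm does not exceed threshold (direction is kept)."""
    norm = math.sqrt(sum(g * g for g in grad))
    if norm <= threshold:
        return list(grad)  # small gradients pass through unchanged
    scale = threshold / norm
    return [g * scale for g in grad]


def backprop_norms(depth: int, weight: float, start: float = 1.0) -> List[float]:
    """Toy model (assumption): each scalar linear layer multiplies the gradient by weight.

    Returns the gradient magnitude after 0, 1, ..., depth layers of backpropagation.
    """
    norms = [start]
    for _ in range(depth):
        norms.append(norms[-1] * abs(weight))
    return norms
```

For instance, with a per-layer factor of 1.5, `backprop_norms(30, 1.5)[-1]` is about 1.9 × 10^5, whereas a clipped gradient never has a norm larger than the chosen threshold.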

There are other existing techniques, such as weight scaling or batch normalization, which minimize the emergence of this problem. Unfortunately, they are not sufficient in all cases [31]. Some architectures, for instance fully connected ReLU networks, are resistant to the exploding gradient problem by design [14]. Nonetheless, as these architectures are not suitable for all ML problems, this method is not a universal solution.

The reverse of the exploding gradient problem, the vanishing gradient problem, is considered one of the most important issues influencing the training time of multilayer neural networks using the backpropagation algorithm. It appears when the majority of the constituents of the gradient of the loss function approach zero. In particular, this problem mostly involves the layers that are closest to the input, which causes the parameters of these layers to change less significantly than they should and the learning process to stall.

Increasing the depth of the neural network and using activation functions such as the sigmoid make the occurrence of the vanishing gradient problem more likely [32]. Along with the sigmoid activation function, the hyperbolic tangent is more susceptible to the problem than rectified activation functions (ReLU), which largely solve the vanishing gradient problem [13]. Finally, similarly to the exploding gradient problem, the emergence of the vanishing gradient problem has been linked to weight initialization, with improvements gained from adding the appropriate normalization [12].
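The difference between the activation functions mentioned above can be made concrete by multiplying their derivatives across layers. The sketch below rests on simplifying assumptions: every layer sees the same pre-activation value and all weights equal 1, so only the activation's contribution to the backpropagated gradient remains. The leaky slope of 0.01 is a common default, not a value taken from the cited works, and all function names are illustrative.

```python
import math
from typing import Callable


def sigmoid_grad(x: float) -> float:
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)  # at most 0.25, reached at x = 0


def tanh_grad(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2  # at most 1.0, but decays quickly for |x| > 2


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0  # exactly 1 for active units, 0 otherwise


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    return 1.0 if x > 0 else alpha  # never exactly zero (alpha is an assumed default)


def chained_grad(grad_fn: Callable[[float], float], depth: int, x: float = 0.0) -> float:
    """Product of one activation's derivative over depth layers, all at pre-activation x.

    Weights are assumed to equal 1 to isolate the activation's effect.
    """
    total = 1.0
    for _ in range(depth):
        total *= grad_fn(x)
    return total
```

Even at its most favourable point (x = 0) the sigmoid derivative is 0.25, so ten stacked sigmoid layers scale the gradient by at most 0.25^10 ≈ 9.5 × 10^-7, whereas the ReLU derivative of an active unit is exactly 1 at any depth.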

2.5. Advances in Research on Topology of Federated Learning

The default, centralized network topology used for an FL system, which involves a single powerful cloud server communicating with a federation of clients located on edge and IoT devices, may not be the most suitable solution for all use cases [35]. Some use cases require efficient communication, which may be more effectively provided by solutions that have either reduced the importance of the server or removed it altogether [2]. Others focus on leveraging network topology to minimize problems caused by data heterogeneity. There are also those that attempt to combine the two approaches described above by carefully grouping the clients [5].

[35] includes a catalogue of many commonly encountered trends in research involving FL topology, classifying FL topology types into 7 categories, including centralized [25], tree [28], hybrid [19], gossip [16], grid [33], mesh [35], clique [2], and ring [9]. Here, Federated Averaging, described in [25], is an example of the centralized topology. TornadoAggregate, on the other hand, belongs to the hybrid category, as it constructs STAR-rings and RING-stars by combining star and ring topologies [19]. STAR-rings involve a server that performs regular client weight aggregation along with ring-based groups. RING-stars construct a large global ring, with small centralized groups conducting local computation and periodically passing it to other groups in the chain. Of the two, STAR-rings achieve much better performance while maintaining the same scalability.

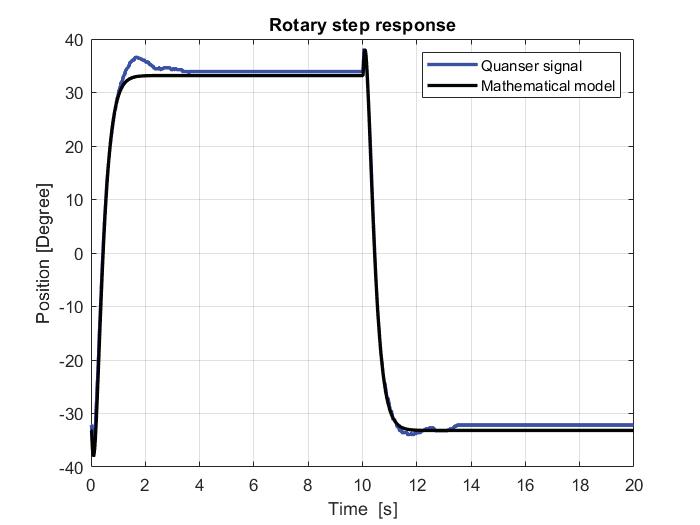

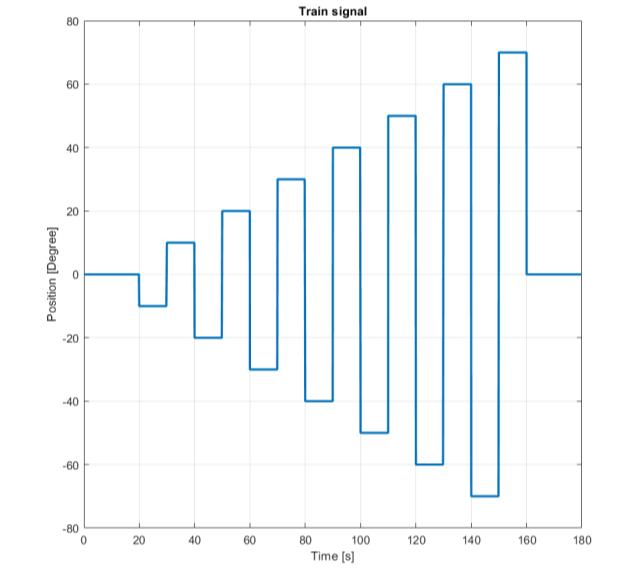

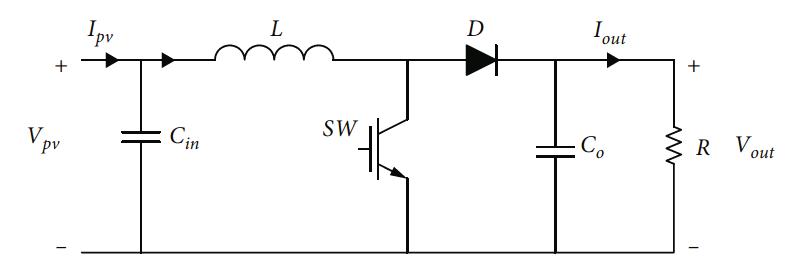

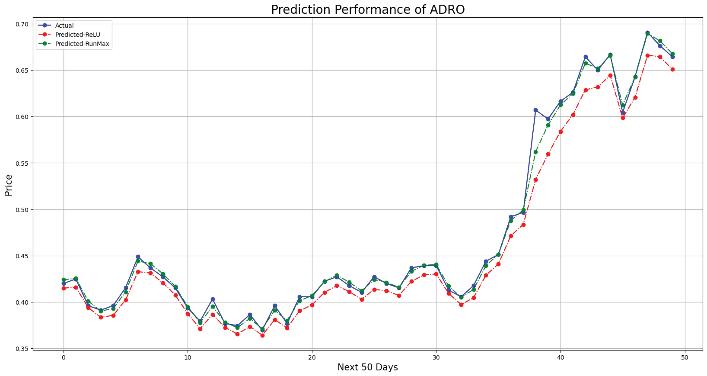

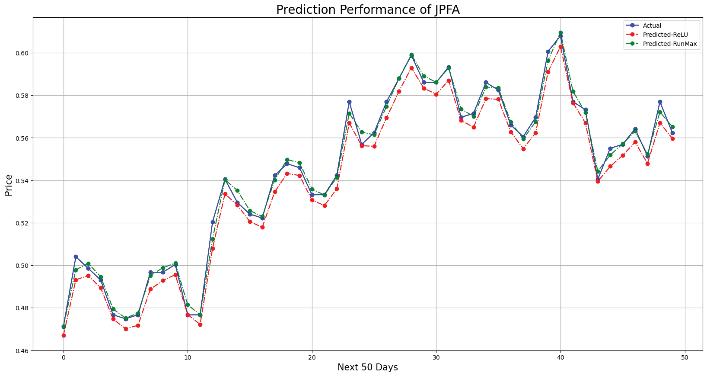

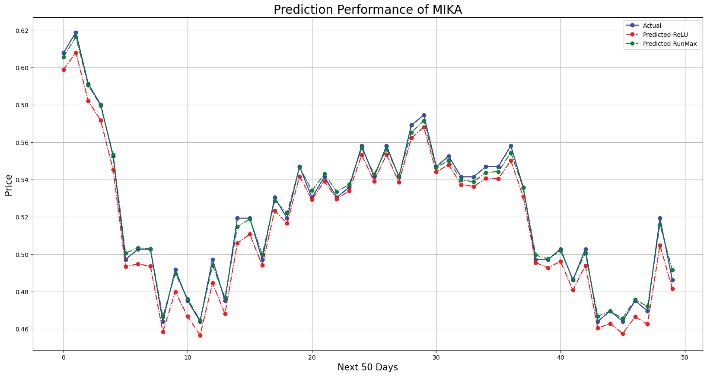

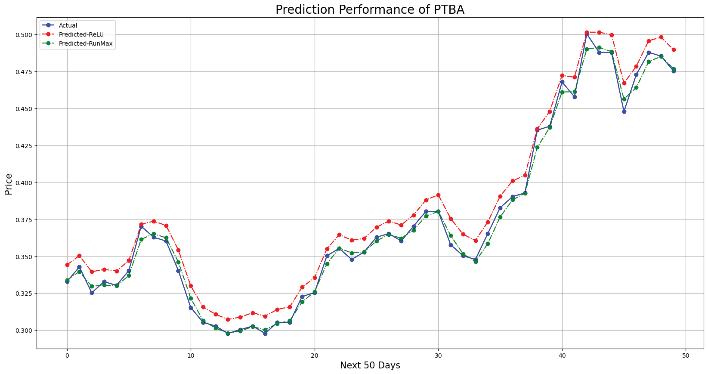

Some systems combine different topological approaches in order to create a more responsive system that can, for instance, adaptively respond to problems with heterogeneous data. IFCA [10] integrates a centralized topology with dynamic clustering by periodically grouping the clients and simultaneously training a personalized model for each of the obtained groups. Unfortunately, as this method necessitates a warm start to the training and prior knowledge about the number of clusters, it leaves significant room for improvement [15]. This improvement comes in the shape of SR-FCA, which can automatically determine the right number of clusters, making it more robust and resource-efficient.
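The clustering step of IFCA can be sketched as follows: the server keeps one model per cluster, each client picks the model with the lowest loss on its local data, and the server averages the returned models within each cluster. This is a simplified, model-averaging reading of [10] under stated assumptions: a one-parameter model that predicts a constant, one local gradient step per round, and hypothetical function names. The fixed length of `models` reflects the prior knowledge of the cluster count, and the initial values act as the warm start mentioned above.

```python
from typing import Dict, List


def loss(theta: float, data: List[float]) -> float:
    """Squared error of a toy one-parameter model that predicts the constant theta."""
    return sum((y - theta) ** 2 for y in data) / len(data)


def ifca_round(models: List[float], clients: List[List[float]], lr: float = 0.5) -> List[float]:
    """One IFCA-style round (simplified): cluster estimation on clients, per-cluster averaging."""
    updates: Dict[int, List[float]] = {j: [] for j in range(len(models))}
    for data in clients:
        # each client picks the cluster model with the lowest loss on its local data
        j = min(range(len(models)), key=lambda k: loss(models[k], data))
        grad = sum(2 * (models[j] - y) for y in data) / len(data)
        updates[j].append(models[j] - lr * grad)  # one local gradient step (assumption)
    # the server averages the returned models within each cluster; empty clusters stay unchanged
    return [sum(u) / len(u) if u else models[j] for j, u in updates.items()]
```

With two groups of clients whose data are centred around 0 and 10 and a warm start of `[2.0, 8.0]`, repeated rounds settle on one model per group; a poor warm start or a wrong number of entries in `models` is exactly the weakness that SR-FCA targets.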