Building Information Modelling (BIM) technology for Architecture, Engineering and Construction March /April 2024 >> Vol.130

Auto-drawings: coming to a CAD/BIM system or cloud service near you soon (cover image generated with Adobe Firefly)

Building performance: real time feedback with Enscape

Sound advice: acoustic performance with Treble

iGPU comes of age: prime time for 3D CAD / BIM

NXT BLD: The future of AEC technology. www.nxtbld.com

NXT DEV: The future of AEC software development. www.nxtdev.build

25 - 26 June 2024, Queen Elizabeth II Centre, London. Tickets now available. In association with gold and silver sponsors.

Industry news 6

AEC technologies emerge for Apple Vision Pro, Unreal Engine and Twinmotion get new licensing, Alice uses AI to optimise Primavera P6 schedules, plus lots more

Autodesk to take over VAR payments 13

New changes to the Autodesk business model could be set to diminish the role of the CAD reseller

Workstation news 14

Intel Core Ultra laptop processors, Nvidia Ada Generation RTX GPUs for CAD, plus new workstations from HP and Dell

Cover story: the dawn of auto-drawings 18

Several CAD software firms are making real progress in drawing automation in the race to eliminate one of the AEC sector’s biggest bottlenecks

NXT BLD / DEV 2024 28

AI, automation, digital fabrication, BIM 2.0, data specifications, open source and lots, lots more at AEC Magazine’s London conferences

Enscape: building performance analysis 30

Enscape is to get a new module, powered by IES technology, that gives instant visual feedback on building performance

Enscape and V-Ray: a collaborative future 32

Chaos has big plans to enhance workflows between Enscape and V-Ray, boost real time collaboration, and more

Smart reality capture 34

A new integrated reality capture solution from Looq uses computer vision, AI and a proprietary handheld camera with GPS to capture infrastructure at scale

Treble: sound advice 38

New software helps analyse and optimise designs for acoustic performance

TestFit runs free 42

The Texas-based design automation software developer releases a free massing tool for architects

Informed Design 44

Autodesk connects BIM (Revit) with fabrication (Inventor) via the cloud to support modern methods of construction

Scaling-up on-site digital construction 46

Facit Homes brings new hope to the need to build houses and digitise fabrication

Prime time for iGPU 48

Laptop processors with integrated GPUs are now powerful enough for 3D CAD. Does this mean a cheaper, slimmer future?

5 www.AECmag.com March / April 2024

Building Information Modelling (BIM) technology for Architecture, Engineering and Construction

FREE SUBSCRIPTIONS: Register your details to ensure you get a regular copy at register.aecmag.com

editorial
MANAGING EDITOR GREG CORKE greg@x3dmedia.com
CONSULTING EDITOR MARTYN DAY martyn@x3dmedia.com
CONSULTING EDITOR STEPHEN HOLMES stephen@x3dmedia.com

advertising
GROUP MEDIA DIRECTOR TONY BAKSH tony@x3dmedia.com
ADVERTISING MANAGER STEVE KING steve@x3dmedia.com
U.S. SALES & MARKETING DIRECTOR DENISE GREAVES denise@x3dmedia.com

subscriptions
MANAGER ALAN CLEVELAND alan@x3dmedia.com

accounts
CHARLOTTE TAIBI charlotte@x3dmedia.com
FINANCIAL CONTROLLER SAMANTHA TODESCATO-RUTLAND sam@chalfen.com

AEC Magazine is available FREE to qualifying individuals. To ensure you receive your regular copy please register online at www.aecmag.com

about
AEC Magazine is published bi-monthly by X3DMedia Ltd, 19 Leyden Street, London, E1 7LE, UK. T. +44 (0)20 3355 7310 F. +44 (0)20 3355 7319

© 2024 X3DMedia Ltd. All rights reserved. Reproduction in whole or part without prior permission from the publisher is prohibited. All trademarks acknowledged. Opinions expressed in articles are those of the author and not of X3DMedia. X3DMedia cannot accept responsibility for errors in articles or advertisements within the magazine.

Resolve brings design review to Apple Vision Pro, blending 3D models with 2D docs

Resolve, the AEC-focused design review solution, is now available for the Apple Vision Pro, the much-hyped mixed reality headset that launched last month.

Resolve is a collaborative VR tool that is well established on the Oculus Quest VR headset. It includes native integrations with Autodesk Construction Cloud (ACC) and Procore, where models are automatically kept up to date in VR without having to export or upload with each design change.

According to Resolve CEO Angel Say, one of the key benefits of Apple Vision Pro in BIM and AEC workflows lies in its ability to ‘supercharge multi-dimensional multitasking’. In other words, users can view crisp 2D documents in a 3D spatial environment, thanks to the headset’s very high-resolution display.

“It means you can have your 2D content that is an established part of the [AEC] industry and isn’t going anywhere, and then seamlessly transition into a 3D spatial environment, whether that’s overlaying a model on top of your environment or being fully immersed in a 3D model,” he explains, adding that this workflow has been hard to achieve on other devices.

“For example, you can open up the ACC or Procore iPad app and navigate it as if it were an iPad floating in front of you. And then in the click of a button, jump into a 3D environment in an application like Resolve, but bring that iPad app with you and suddenly you have your ACC issues floating in front of you. And you’re running multiple apps at the same time. But in different dimensions. One is 2D, one is for 3D, and you can go back and forth however you see fit.

“In the Resolve app on the App Store, you can interact with these 2D PDFs and it feels really smooth. You can zoom in and out with ‘pinch to zoom’ like you’re used to. You can drag and scroll around the 2D PDF. Of course, you can pull up issues and click on them and see them in your model.”

Users can also leave spatial annotations on designs to track key issues and tasks, sync annotations back to ‘industry tracking tools’, and control the opacity of models so they can see through to the environment around them.

■ www.resolvebim.com

Graphisoft BIMx available for Apple Vision Pro

Graphisoft has released BIMx – BIM Experience for Apple Vision Pro, an interactive app for exploring BIM projects and linked documentation sets created in Archicad and DDScad.

The first version of BIMx on Apple’s visionOS supports full immersion mode in 3D. However, Graphisoft explains that navigation in the virtual model is experimental and will be fine-tuned.

“We’re actively working on solutions to bring you the immersive experience you crave. We have big plans for its evolution, with more features and enhancements scheduled for future updates,” said Graphisoft’s Emoke Csikos.

■ www.graphisoft.com

Nvidia to extend reach of Omniverse with new Cloud APIs

Nvidia is making its Omniverse Cloud industrial digital twin platform available as a series of five application programming interfaces (APIs) to make it easier for software developers to integrate core Omniverse technologies for OpenUSD and RTX into their existing design and automation software applications.

According to Rev Lebaredian, Nvidia VP of Omniverse and simulation technology, this gives independent software vendors (ISVs) all the powers of Omniverse: interoperability across tools, physically based real time rendering, and the ability to collaborate across users and devices.

At their simplest, the APIs let software developers embed Omniverse-powered viewports into their 3D apps, giving those apps ‘instant’ real-time physically based rendering through pixel streaming from the cloud. Software developers can also use the APIs to connect generative AI tools to their existing apps, as well as a range of workflow tools for OpenUSD data.

The Omniverse Cloud APIs are being adopted by several software developers including Ansys, Hexagon, Microsoft, Siemens, and Trimble.

■ www.nvidia.com/omniverse


Nvidia streams colossal 3D models into Apple Vision Pro

Nvidia has introduced a new service that allows firms to stream interactive Universal Scene Description (OpenUSD) scenes from 3D applications into the Apple Vision Pro mixed reality headset.

The technology makes use of Nvidia’s new Omniverse Cloud APIs (see previous story), using a new framework that channels the data through the Nvidia Graphics Delivery Network (GDN), a global network of graphics-optimised data centres.

“Traditional spatial workflows require developers to decimate their datasets – in essence, to gamify them. This doesn’t work for industrial workflows where engineering and simulation datasets for products, factories and cities are massive,” said Rev Lebaredian, VP of Omniverse and simulation technology at Nvidia.

“New Omniverse Cloud APIs let developers beam their applications and datasets with full RTX real-time physically based rendering directly into Vision Pro with just an internet connection.”

■ www.nvidia.com

PlanRadar enhanced with reality capture

PlanRadar is to get a new AI-powered ‘SiteView’ feature, designed to enhance the digital construction platform’s documentation and reporting capabilities.

SiteView allows users to capture 360° imagery of a project while walking the site with a 360° camera attached to a helmet. Images are automatically mapped onto a 2D plan, creating a ‘detailed visual record’ of activity across every stage of the build. Reality capture images are automatically transferred to PlanRadar, ready for review.

■ www.planradar.com/product/siteview

App helps assess biodiversity net gain

Temple, a UK environment, planning and sustainability consultancy, has launched a new application to help AEC firms make decisions on development plans and meet the new biodiversity net gain (BNG) legislation.

The legislation demands that all new construction projects deliver at least 110% of the biodiversity value found on a site prior to its development.
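The 110% requirement boils down to a simple threshold check. The sketch below is our own illustration, not Temple’s app or the official statutory metric: it assumes the pre- and post-development values have already been calculated as biodiversity units, and the function names are hypothetical. Decimal is used so that a value sitting exactly on the threshold is not misjudged by binary floating-point rounding.

```python
from decimal import Decimal

# Minimum post-development value as a multiple of the pre-development
# baseline (110%, per the legislation described above).
MIN_RATIO = Decimal("1.10")


def required_units(baseline_units: Decimal) -> Decimal:
    """Biodiversity value a scheme must deliver, given the site's baseline.

    Assumes both values are already expressed in biodiversity units; this
    sketch does not implement the statutory metric that produces them.
    """
    if baseline_units < 0:
        raise ValueError("baseline_units cannot be negative")
    return baseline_units * MIN_RATIO


def meets_bng(baseline_units: Decimal, post_units: Decimal) -> bool:
    """True if the post-development value is at least 110% of the baseline."""
    return post_units >= required_units(baseline_units)


def shortfall(baseline_units: Decimal, post_units: Decimal) -> Decimal:
    """Extra units still needed to reach the 110% target (zero if met)."""
    return max(Decimal("0"), required_units(baseline_units) - post_units)


if __name__ == "__main__":
    # Illustrative figures only: a site with a baseline of 20 units
    # must deliver at least 22 units after development.
    baseline = Decimal("20")
    print(required_units(baseline))              # 22.00
    print(meets_bng(baseline, Decimal("21.5")))  # False
    print(shortfall(baseline, Decimal("21.5")))  # 0.50
```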

Temple developed the app using Esri’s GIS technology. Designed to simplify BNG assessments, the software is said to streamline the workflow from field data collection to in-office assessment and provide a real time BNG score.

■ www.tinyurl.com/BNG-assess

Ideate Automation links to ACC

Ideate Automation, a software tool designed to automate tasks in Revit, now integrates with Autodesk Construction Cloud.

With the new ‘no-cost’ Ideate Cloud Connector, project teams can ‘seamlessly integrate’ with Revit models managed in Autodesk Build, Autodesk Docs, or BIM 360, enabling Ideate Automation’s scripting capabilities to run time-intensive BIM tasks in the background.

Examples of tasks that can be started in Ideate Automation manually or scheduled to run at regular intervals include: opening large Revit files, running the Revit ‘audit and compact’ process, exporting data from Revit into PDF, DWG, NWC, and IFC file formats, and publishing files to Autodesk Construction Cloud.

■ www.ideatesoftware.com

Looq launches reality capture platform

Looq AI has launched the Looq platform, a ‘one-stop solution’ for surveyors, contractors, asset owners and engineers to capture infrastructure in minutes with ‘survey-grade accuracy’.

The platform is powered by what the company describes as a novel and fundamental computer vision and AI technology. There’s also a hardware component: a proprietary handheld ‘Q’ camera, which combines four high-resolution cameras (one on either side, one on top and one at the front) with ‘survey-grade’ GPS and an AI processor.

■ www.looq.ai

See page 34 for our interview with Looq AI CEO, Dominique Meyer


News

New licensing for Unreal Engine and Twinmotion

Epic Games is changing the way its Unreal Engine, Twinmotion and RealityCapture tools are licensed for firms in the AEC sector and in other industries outside game development.

Users at companies generating under $1m in annual gross revenue will be able to access the tools for free. However, firms over that threshold will be subject to new seat-based enterprise software pricing from late April, with the launch of Unreal Engine 5.4.

The annual cost per seat of $1,850 will include access to Unreal Engine 5.4 (and future versions released during the subscription period), along with Twinmotion for architect-friendly real time viz and RealityCapture for creating realistic 3D models from photos. Twinmotion and RealityCapture will still be available to purchase individually. Twinmotion seats will be priced at $445 per year, including access to Twinmotion Cloud as well as all updates released during the subscription period. Individual seats of RealityCapture will cost $1,250 per year, starting with RealityCapture 1.4.
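For budgeting, the new scheme reduces to simple per-seat arithmetic. The sketch below is our own illustration, not an Epic Games tool: it uses the annual list prices reported above, ignores taxes and any volume deals, and assumes (our reading) that the under-$1m exemption covers standalone Twinmotion and RealityCapture seats too. The function and constant names are hypothetical.

```python
# Annual list prices (USD) as reported above; taxes and any volume
# discounts are ignored in this sketch.
FREE_REVENUE_THRESHOLD = 1_000_000  # annual gross revenue below which use is free
UNREAL_SEAT = 1_850                 # Unreal Engine + Twinmotion + RealityCapture
TWINMOTION_SEAT = 445               # Twinmotion only (includes Twinmotion Cloud)
REALITYCAPTURE_SEAT = 1_250         # RealityCapture only


def annual_licence_cost(gross_revenue: int,
                        unreal_seats: int = 0,
                        twinmotion_seats: int = 0,
                        realitycapture_seats: int = 0) -> int:
    """Estimate a firm's yearly licence bill under the new scheme.

    Assumption: firms with gross revenue under the threshold pay nothing
    for any of the three tools, including standalone seats.
    """
    if gross_revenue < FREE_REVENUE_THRESHOLD:
        return 0
    return (unreal_seats * UNREAL_SEAT
            + twinmotion_seats * TWINMOTION_SEAT
            + realitycapture_seats * REALITYCAPTURE_SEAT)


if __name__ == "__main__":
    # A practice with $5m revenue: 10 full seats versus 10 Twinmotion-only seats,
    # and a smaller firm below the threshold.
    print(annual_licence_cost(5_000_000, unreal_seats=10))      # 18500
    print(annual_licence_cost(5_000_000, twinmotion_seats=10))  # 4450
    print(annual_licence_cost(800_000, unreal_seats=10))        # 0
```

On these list prices, a standalone Twinmotion seat plus a standalone RealityCapture seat comes to $1,695 a year, slightly less than the $1,850 seat, which also includes Unreal Engine itself.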

Epic Games has also announced its goal to ‘fully integrate’ Twinmotion and RealityCapture with Unreal Engine by the end of 2025. The plan is to make them ‘fully accessible’ within the Unreal Editor.

■ www.unrealengine.com

■ www.twinmotion.com

Tekla 2024 structural tools launch

Trimble has introduced the 2024 versions of its Tekla software for constructible BIM, structural engineering, and steel fabrication management.

Tekla Structures 2024, Tekla Structural Designer 2024, Tekla Tedds 2024 and Tekla PowerFab 2024 boast an enhanced user experience and better collaboration through connected workflows, among other new features.

Tekla Structures 2024 offers ‘enhanced interoperability’ between Trimble software, hardware and third-party solutions. With support for open standards such as BCF (BIM Collaboration Format), users can communicate model-based issues among project collaborators. buildingSMART properties are also supported through improved and extended IFC property sets. Automated cloning of fabrication drawings has also been enhanced, with a view to benefiting the creation of steel and precast cast unit drawings.

Structural design and analysis software Tekla Structural Designer 2024 has been enhanced with ‘Staged Construction Analysis’, which takes into account that there is a sequence in construction and loading. This ‘fully automated’ process can be applied to the design of both concrete and steel structures.

■ www.tekla.com

Allplan, Frilo & Scia join forces

The Nemetschek Group is to bring together three of its AEC software brands – Allplan, Scia and Frilo – under the Allplan umbrella.

Allplan, a German-headquartered provider of BIM solutions, will be joined by structural engineering software brands Scia, based in Hasselt, Belgium, and Frilo, based in Stuttgart, Germany.

According to Nemetschek, the merger will provide a unique solution for architects and engineers. Allplan offers a single BIM platform from first concept through detailed design and multidisciplinary planning to prefabrication and construction deliverables.

Scia and Frilo deliver software for structural analysis and design, including both the analysis and design of 3D multi-material structures and a ‘component orientated calculation approach’.

■ www.allplan.com

Scia Engineer gets new multicore solver

Scia Engineer 24, the latest release of the structural analysis software from Scia, part of the Nemetschek Group, has launched with a brand-new multi-core solver, which offers faster calculation times.

The new solver also allows for more control over the calculation process, enabling users to monitor ongoing tasks, review results, and interrupt processes as required.

Scia Engineer 24 is also released as a single, complete version, so users don’t need to navigate between different configurations.

■ www.scia.net


ROUND UP

Carbon Calculator

UK structural and civil engineering consultancy Perega has launched a CO2 accounting tool – The Carbon Calculator – to help its clients assess the whole carbon footprint of their projects, from inception to completion, and make greener material choices for a more sustainable built environment.

■ www.perega.co.uk

New CEO for Bentley

Bentley Systems has announced that, effective July 1, 2024, Greg Bentley will transition from CEO to executive chair of its board of directors. Nicholas Cumins, currently COO, will then be promoted to CEO.

■ www.bentley.com

RSK invests in GIS

RSK Group, a specialist in sustainable solutions, has extended the use of Esri geospatial technology across its business, including environmental impact and biodiversity net gain surveys for the water, renewable energy, property and construction industries.

■ www.esri.com

Remap launches

Remap has been launched by two former Hawkins\Brown associates. The built environment consultancy offers a range of services including digital transformation strategy, computational BIM & digital design, 2D / 3D application development, and design to construction solutions.

■ www.remap.works

Fastest desktop CPU

Intel has launched the 14th Gen Intel Core i9-14900KS, which is being billed as the world’s fastest desktop processor. It delivers up to 6.2 GHz max turbo frequency out of the box, which will help accelerate CAD and BIM software.

■ www.intel.com

MCAD exchange

BIMDeX has launched a suite of CAD interoperability products designed to help engineers exchange design data between leading mechanical CAD (MCAD) tools and Revit, AutoCAD and IFC. There are separate exporters for PTC Creo, Solidworks, Rhino, Autodesk Inventor and more.

■ www.bimdex.com

Alice uses AI to optimise Primavera P6 schedules

Alice Technologies has added the ability to import Primavera P6 schedules directly into Alice Core, a new, enhanced version of its AI-powered construction scheduling software.

With Alice Core, contractors and asset owners can upload their baseline P6 or Oracle Primavera Cloud schedule and the software will then simulate scenarios to look for ‘optimised schedules’. Once the best path forward has been identified, it can be exported back to Oracle’s scheduling products.

According to CEO Rene Morkos, it is the most significant change to the software since it was first released in 2015.

“By making it fast and easy to bring information from Oracle’s scheduling products to Alice and back again, we are enabling Primavera users to build on the investment they’ve made in their existing schedules,” said Morkos.

“We’re excited to see both contractors and asset owners maximise the impact that AI can have on their businesses with Alice.”

With the launch of Alice Core, Alice Technologies has also joined Oracle Partner Network (OPN).

Alice uses AI to analyse a project’s complex building requirements, generate efficient building schedules, and adjust those schedules as needed during construction. According to the company, users can simulate thousands of options in seconds with the software, then test different scenarios to find the optimum solution, leading to time and cost savings and reduced risk on projects.

■ www.alicetechnologies.com/alice-core

Revit add-in addresses sustainable design

Symetri has launched Naviate Zero, an Autodesk Revit add-on for sustainable building design, which aims to address the need to reduce embodied carbon and CO2 emissions within the building industry.

The software focuses on the early stages of a building’s life cycle by helping designers and engineers to calculate emissions, and make informed decisions directly within the Revit platform.

The software builds on Symetri’s strategic partnership with One Click LCA, a global platform for lifecycle assessment, environmental product declarations and sustainability. By using One Click LCA’s databases, Naviate Zero gives architects and designers direct access to global building material data.

■ www.naviate.com


Enscape to visualise energy efficiency of building designs

Chaos is working on a new Building Performance Module for its architectural visualisation software Enscape that will enable users to visualise the energy efficiency of buildings while designing in real time. Later this year the company will also launch a new ‘story telling’ solution codenamed Project Eclipse that allows users to ‘rapidly enhance’ their Enscape and V-Ray scenes.

The Enscape Building Performance Module is powered by technology from IES. According to the developers, it will drastically reduce the time architects need to achieve optimum energy efficiency for their designs and improve sustainability. Users will be able to visualise aspects such as daylight, energy usage and comfort analysis. The aim is to help architects see the impact of their early-stage design decisions and to help create more sustainable buildings.

“[The Building Performance Module for Enscape] will place the power of insightful decision making directly in your hands and democratise analysis,” said Phil Miller of Chaos. “Bringing this to Enscape connects the BIM model with performance analysis, and within the real time visualisation mode you already know. Your analysis will dynamically update with your changes, just as your visualisation updates within Enscape.”

■ www.enscape3d.com

Turn to page 30 for more on the Building Performance module and page 32 for more on Project Eclipse and collaborative workflows

Graphisoft shares Archicad benchmarks

Graphisoft has published Archicad benchmark scores comparing the new Apple MacBook Pro with Apple M3 Max silicon to older generation Apple computers, including a Mac Studio with an Apple M1 Max, a Mac mini with an Apple M1 and an iMac with an Intel Core i7. According to the company, compared to the Intel Core i7-based iMac, the new M3 Max chip is up to 2.5x faster at opening files, up to 3x faster in section generation and display, up to 3x faster in documentation layout update and display, and up to 2x faster at rendering with the Cineware and Redshift engines.

For the Archicad benchmark tests, the company used three different model sizes: a 343 MB residential project model with 1,014 3D elements, a 530 MB commercial project model with 19,965 3D elements and a 2.26 GB stadium model with 94,944 3D elements.

As one would expect, the performance leap from Apple M1 and Apple M1 Max silicon is not as large, but still significant. No comparison is made with Apple M2 silicon. The full benchmark results can be seen at the link below.
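Speed-up multiples are easier to weigh as time saved: a task that runs k times faster takes 1/k of the original time, so it saves 1 − 1/k of it. The minimal sketch below applies that arithmetic to the ‘up to’ factors Graphisoft quotes against the Intel Core i7 iMac; the task labels and function name are our own, and the results are upper bounds, just like the quoted figures.

```python
def time_saved_fraction(speedup: float) -> float:
    """Fraction of the original run time saved by a given speed-up factor.

    A task that is `speedup` times faster takes 1/speedup of the time,
    so the saving is 1 - 1/speedup.
    """
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return 1 - 1 / speedup


# 'Up to' factors for M3 Max versus the Intel Core i7 iMac, as reported above.
QUOTED_SPEEDUPS = {
    "opening files": 2.5,
    "section generation and display": 3.0,
    "documentation layout update and display": 3.0,
    "rendering (Cineware, Redshift)": 2.0,
}

if __name__ == "__main__":
    for task, factor in QUOTED_SPEEDUPS.items():
        print(f"{task}: up to {factor}x faster, "
              f"up to {time_saved_fraction(factor):.0%} less time")
```

So ‘up to 3x faster’ means a task takes up to roughly two-thirds less time, while ‘up to 2x faster’ halves it.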

■ www.tinyurl.com/archicad-mac

IES tracks building performance

IES has launched a new cloud-based platform designed to utilise energy models throughout a building’s lifecycle. With IES Live, an IES ‘Performance Digital Twin’ can be hosted online and connected with operational building data with a view to enabling continuous tracking and improvement of building performance.

According to the company, IES Live allows sustainability, energy and facilities teams to take control of building operation, reduce energy risk, increase resilience, unlock net-zero potential, and deliver healthy and comfortable spaces.

The platform can display near real-time energy and carbon emission performance data from utility meters, BMS systems and IoT sensors, against a predicted ‘ideal’ energy benchmark, and, if available, occupancy data.
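Comparing metered data against a predicted benchmark comes down to a per-period ‘performance gap’. The sketch below is our own illustration of that idea, not how IES Live works internally: the Reading type, the 10% tolerance and the kWh figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Reading:
    """One period of metered energy use alongside the model's prediction."""
    period: str
    actual_kwh: float
    predicted_kwh: float


def performance_gap(r: Reading) -> float:
    """Fractional deviation of metered use from the predicted benchmark.

    Positive values mean the building used more energy than predicted.
    """
    if r.predicted_kwh <= 0:
        raise ValueError("predicted_kwh must be positive")
    return (r.actual_kwh - r.predicted_kwh) / r.predicted_kwh


def flag_overruns(readings: Iterable[Reading], tolerance: float = 0.10) -> List[str]:
    """Periods where metered use exceeds the benchmark by more than `tolerance`."""
    return [r.period for r in readings if performance_gap(r) > tolerance]


if __name__ == "__main__":
    # Hypothetical monthly figures for illustration only.
    data = [
        Reading("Jan", actual_kwh=1200, predicted_kwh=1000),
        Reading("Feb", actual_kwh=1050, predicted_kwh=1000),
        Reading("Mar", actual_kwh=900, predicted_kwh=1000),
    ]
    print(flag_overruns(data))  # ['Jan']
```

Here January runs 20% over the benchmark and is flagged, February’s 5% overrun stays within the assumed tolerance, and March comes in under the prediction.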

■ www.iesve.com/ies-live

Laser scan navigation

Cintoo has improved the way in which users can navigate laser scans on its ‘Visual Twin’ platform using a ‘teleportation camera’.

Previously, scan-to-scan navigation was limited to the scan set-up locations. Now users can teleport by pointing, clicking and setting the desired height.

Cintoo explains that, combined with TurboMesh, its mesh streaming engine, this provides the user with ‘unlimited virtual vantage points’ at the same resolution as the source scan.

Teleportation can also be used to navigate imported BIM or CAD models, for comparison with as-built scans.

■ www.cintoo.com


ROUND UP

Going underground

Israeli startup Exodigo has secured $105m in Series A funding for its non-intrusive subsurface mapping solution for construction and utility firms. The Exodigo system combines advanced sensors, 3D imaging and AI to give a clear picture of underground conditions.

■ www.exodigo.com

Newforma Konekt

A bridge between the Newforma Konekt platform for Project Information Management (PIM) and Procore’s contract administration platform is designed to support the ‘efficient review of submittals and RFIs’ by design teams.

■ www.newforma.com

Bricklaying robots

Monumental, a startup that makes bricklaying robots, has raised $25m in funding. The money will help the company grow its team of engineers, scale the number of robots it can deploy on sites across Europe, and increase the types of blocks and tasks the robots can manage.

■ www.monumental.com

HVAC design

BlueGreen Engineering used FineHVAC BIM software from 4MCAD to design the HVAC system for a new breast cancer facility at Westmead Hospital in New South Wales, Australia. The 3D BIM model helped maximise the use of space within the building.

■ www.4msa.com/brands/finehvac

Wireless networks

Amrax has partnered with network planning solution provider Ranplan Wireless to help designers use 3D modelling to create efficient wireless networks. Ranplan’s customers can visualise and optimise the design of networks using 3D models captured with an iPhone Pro or iPad Pro.

■ www.amrax.ai

BuildData rebrands

BuildData Group is bringing its brands together to operate under Zutec. The BuildData Group name will no longer be used, and the Createmaster, Createmaster Information Management and Resi-Sense brands have been changed to Zutec.

■ www.zutec.com

Leading US firms extend investments in Revizto

AEC collaboration software provider Revizto has secured enterprise agreements with two major US firms: HED, an architecture and engineering firm headquartered in Royal Oak, Michigan, and Hoar Construction, a contractor headquartered in Birmingham, Alabama.

Since 2017, HED has used Revizto for design coordination, quality control and model clash detection. The new agreement will help expand its use of the software across its 400+ active projects in healthcare, education, housing, mixed-use, manufacturing, science, workplaces and community design, and enable its team to invite an unlimited number of cross-functional users into any project.

“Revizto provides our project teams with a single source of truth. If they need to review anything from the project details on the sheets to the coordination of the integrated disciplines in the model, they can turn to Revizto,” said Jason Rostar, CM-BIM, HED’s corporate practice technology leader. “We are very excited to start utilising the Revizto clash tools, as well as the new Phone App, which will give us access to critical information when out of the office and on the construction site.”

Hoar Construction has been using Revizto for six years to improve collaboration among its teams. The company will be using Revizto on various projects in the healthcare, higher education, office, entertainment and mixed-use development market sectors.

■ www.revizto.com

Hexagon joins forces with Nemetschek

Hexagon’s Geosystems division and the Nemetschek Group, which owns leading AEC brands including Graphisoft, Allplan and Vectorworks, have formed a strategic partnership with a view to ‘accelerating digital transformation within the AEC/O industry’.

As a first step, the partnership will look to drive the adoption of digital twins. The two companies aim to provide customers with tools, services and expertise for an ‘end-to-end digital twin workflow’ by joining up-to-date building data from Hexagon’s reality capture solutions with building operations powered by Nemetschek’s dTwin. Hexagon offers ‘end-to-end’ reality capture and Scan2BIM solutions to automatically capture accurate, real-time field data, as well as AI-powered solutions for building analytics and simulations to generate progress insights. dTwin, Nemetschek’s digital twin platform, delivers data-driven insights and helps customers to manage facilities from design to operations. It is claimed to be the first solution that fuses all data sources of a building in one overarching view.

■ www.hexagon.com

■ www.nemetschek.com


Autodesk to take over payments from customers, diminishing the role of the VAR

Throughout its history, Autodesk’s business model has been constantly evolving. Someone joked on LinkedIn recently that ‘Autodesk’s business model is to get you to the next business model.’

For end users, this continual change has been seen in the form of product bundles, Suites, Collections, the move from perpetual licences to subscription, tokens, the removal of network licensing, mandatory named user licensing and more. The rapid evolution has meant that many firms are running the same software under different licensing conditions.

Autodesk also regularly changes the business models of the value added resellers (VARs) that sell its software. Over the years it has altered product margins, MDF (co-op market development funds), targets, incentives, promotions or execution. With each new amendment, it’s then down to the VAR to funnel their resources to try and meet the new goals.

For more than thirty years this magazine has witnessed this familiar dance between resellers and Autodesk. However, over the last seven years, with the internet and digital fulfilment, it has become increasingly obvious that resellers have felt the squeeze like never before. Autodesk has been taking an increasing number of clients direct, while margins have been reduced.

Some VARs have responded by developing their own software, expanding their consultancy services, banding together or acquiring other resellers to benefit from economies of scale. Some sought venture capital to create huge resellers that spanned the globe.

Last year in Australia and New Zealand, a region where Autodesk likes to test out changes to its business model, we heard that Autodesk was taking over the order processing of all reseller deals. While the reseller would still actively manage the customer base and sell software (SaaS) and its own services, Autodesk would take payment directly.

In February 2024 this was rolled out to the USA, and we hear it will happen in the UK next year. This represents a substantial change to Autodesk’s role within customer sales and will significantly alter the cash flows of VARs, especially the very large ones. It gives Autodesk insight into every deal and every discount, and Autodesk benefits from the positive cash flow of all its software sales, paying commissions back to the reseller.

It’s hard to predict the outcome of all of this, but it suggests that the street prices of Autodesk products are set to move closer to the RRP and the web prices that are available.

For resellers this may actually be a good thing in terms of income, as Autodesk dealers competed mainly among themselves and discounted to the bone to win business. We’ve seen figures of $600 million quoted for the discounts given away by the channel.

Now that competition can’t be based on price, the large resellers that relied on that cash flow might struggle, as their economies of scale are somewhat neutered. It also seems one step closer to Autodesk inevitably going fully direct. However, Autodesk’s applications are complex and there will always be a market for training, consultancy services and implementation, which Autodesk will not have the local resources to fulfil.

Autodesk’s three-year Enterprise Business Agreements (EBAs) are for customers of a certain size, but the qualifying threshold, in seat count or company value, has been falling for a while. In the future, EBAs might become the best way to get discounts, but they come with the constraint that they are notoriously hard to get out of. On leaving, all the software used would need to be repurchased at street price.

Some AEC firms decided that buying through resellers at discount and avoiding EBAs gave better flexibility. We think that these alternative routes will make less business sense as things progress.

While this is being presented as a change in process, it feels like the beginning of a change in business model and route to market. It’s like someone taking over driving a car in stages, as they swap seats. One has to wonder just what this will do to the number of resellers in the long term. At the recent Autodesk ‘One Team’ VAR Conference we hear there were some rather heated conversations.

Customers in the USA have had emails which ask them to set up Autodesk as a vendor on their internal procurement systems before their next purchase. Quotes and services are still handled by the reseller.

Autodesk states the benefits to customers will be a more ‘Personalised Service’ (although, surely, this only applies to firms that never see their resellers in the flesh) and a streamlined process with self-service tools to set up payment, subscription terms and renewals.

The other benefit mentioned by Autodesk is ‘Predictable pricing: enjoy consistent pricing, ensuring the best value for your investment’.

If, as we predict, street prices go up with no real route to negotiate, some customers may consider this plain cynical.

13 www.AECmag.com March / April 2024

Autodesk will soon collect direct payment from customers for software like Revit

Intel Core Ultra laptop processors launch with integrated CAD optimised GPUs

Intel has launched its new vPro platform with Intel Core Ultra laptop processors that will form the foundation for mobile workstations from Dell, HP, Lenovo and others.

For users of CAD and BIM software, the big news is the built-in Intel Arc GPU, which is said to offer up to twice the graphics performance of the previous generation, plus advanced features such as AI and hardware ray tracing.

Mobile workstations from major OEMs will also ship with a new dedicated Intel Arc Pro workstation graphics driver, which comes with Independent Software Vendor (ISV) certifications and ‘enhanced performance optimisations’ for creative, design and engineering software. Intel is currently working on certifications for several CAD tools including Autodesk Fusion, Autodesk Inventor, Siemens Solid Edge, Siemens NX, Dassault Systèmes Solidworks, Nemetschek Vectorworks, Bentley MicroStation, PTC Creo and more. Certifications for the digital content creation tools Autodesk Maya and 3ds Max are also in progress.

Intel Core Ultra processors also feature a dedicated AI acceleration capability spread across the central processing unit (CPU), graphics processing unit (GPU) and the new neural processing unit (NPU). In some AI workflows, the GPU still delivers the best performance, but the NPU is more power efficient. According to Intel, the new Intel Core Ultra 7 165H uses up to 36% less power than the previous generation Intel Core i7-1365U in certain video conferencing operations, such as background blur in Zoom, leading to longer battery life.

Intel Core Ultra comprises two product families: the Core Ultra H-Series and Core Ultra U-Series. The H-Series features up to sixteen cores, comprising Performance cores (P-Cores), Efficient cores (E-Cores) and new Low Power Efficient (LPE) cores for very lightweight tasks.

The top-end Intel Core Ultra 9 185H processor features 6 P, 8 E and 2 LPE cores, and has a max P-Core frequency of 5.1 GHz. However, with a base power of 45W and a max turbo power of 115W, the chip looks best suited to high-end mobile workstations. For highly portable laptops, expect to see a more limited choice of processors, up to the Intel Core Ultra 7 165H. This CPU has the same number of cores, but a slightly lower max P-Core frequency of 5.0 GHz and a lower base power of 28W.

Meanwhile, the Intel Core Ultra U-Series uses less energy, with a base power of 15W and a max power of 57W. It achieves this by reducing the number of P-Cores to two, while retaining the same number of E and LPE cores. In single-threaded CAD workflows, performance shouldn’t be far off that of the H-Series. However, users can expect significantly lower multi-threaded performance. The U-Series also has less powerful Intel graphics. The top-end Intel Core Ultra 7 165U has a max P-Core frequency of 4.9 GHz.

■ www.intel.com

What AEC Magazine thinks

From the independent CPU benchmark scores we have seen, we don’t expect Intel Core Ultra to offer a significant performance benefit over Intel’s previous generation. Performance in single-threaded CAD software might be on par, but highly multi-threaded software, such as ray trace rendering, may get a boost thanks in part to the two additional LPE cores. However, it’s not in CPU performance that Intel’s new laptop processors are likely to garner most interest among architects and engineers. With the significantly more powerful Intel Arc GPU in the Intel Core Ultra H-Series and a new dedicated Intel Arc Pro workstation graphics driver, the new chip could deliver sufficient performance for some 3D CAD workflows, so users may not need to buy a mobile workstation with a discrete GPU, such as the new Nvidia RTX 500 Ada Generation.

Of course, for professional apps like 3D CAD, performance can mean very little without driver stability, and Intel’s investment in its discrete desktop and mobile Arc Pro graphics cards over the last 18 months should certainly help here. However, Intel also faces stiff competition from AMD and its Ryzen Pro 7000 / 8000 Series processors, which also feature an integrated GPU and a much more mature pro graphics driver.

The other notable benefit of Intel Core Ultra is the power efficient NPU, which can be used to offload AI processing tasks that previously would need to be done on the GPU or CPU. With a growing need for AI processing in laptops, including noise suppression and background blurring in video conferencing, the chip’s AI capabilities should become more important moving forward.


Workstation news

HP ZBook G11 mobile workstations unveiled

HP has unveiled the G11 editions of its ZBook mobile workstation family (Power, Fury, Studio and Firefly), featuring a broad range of processors including Intel Core Ultra 5, 7 & 9 or ‘next-generation’ AMD Ryzen Pro with dedicated NPUs. All the new HP ZBooks have 16-inch displays (the Firefly G11 also has a 14-inch option) and come with a choice of Nvidia RTX Ada Generation Laptop GPUs.

The HP ZBook Power G11 features a new premium 16-inch design that is slightly deeper than the G10 edition. It’s available with Intel Core Ultra 9 or AMD Ryzen 9 Pro processors and comes with a choice of laptop GPUs, including the new Nvidia RTX 500 (see page 17) and RTX 1000 Ada, up to the RTX 3000 Ada. The AMD version only goes up to the Nvidia RTX 2000, but can also rely on the AMD Radeon Graphics built into the CPU, which features a pro graphics driver. The laptop supports up to 64 GB SODIMM DDR5 memory and up to 2 TB NVMe PCIe 4 x 4 SSD. It starts at 2.04 kg and is 22.9mm thick.

The HP ZBook Fury G11 is billed as the most powerful ZBook, with a choice of ‘desktop class’ Intel Core HX processors, including 13th Gen Intel Core (with vPro) or 14th Gen Intel Core (non vPro). It can be configured with the top-end Nvidia RTX 5000 Ada Laptop GPU. It supports up to 128 GB SODIMM DDR5 memory and up to 16 TB NVMe PCIe 4 x 4 SSD. It starts at 2.4 kg and is 27.7mm thick.

The HP ZBook Studio G11 is HP’s premium thin and light mobile workstation, starting at 18.3mm and 1.73kg. It comes with a choice of Intel Core Ultra processors, up to Nvidia RTX 3000 Ada Laptop GPU, up to 64 GB SODIMM DDR5 memory and up to 4 TB PCIe 4 SSD.

The HP ZBook Firefly G11 is at the budget end of the range and comes in 16-inch and 14-inch form factors. It’s available with Intel Core Ultra 7 or AMD Ryzen 9 Pro 8945HS processors and up to Nvidia RTX A500 Laptop GPU. Like the HP ZBook Power G11, it can also rely on the AMD Radeon Graphics built into the CPU, which should be powerful enough for mainstream 3D CAD workflows.

The 14” HP Firefly G11 A (AMD) starts at 1.4kg, the 14” HP Firefly G11 starts at 1.45kg and the 16” HP Firefly G11 starts at 1.76kg. All three models come in at 19.8mm.

■ www.hp.com/z

Dell claims smallest footprint 16-inch mobile workstation

Dell has launched five mobile workstations built around the new Intel Core Ultra processor. This includes the 14-inch Precision 3490 and Precision 5490, the 15.6-inch Precision 3590 and Precision 3591, and the Precision 5690, which it claims to be the world’s smallest footprint 16-inch mobile workstation.

All five laptops feature the new Intel Core Ultra laptop processors, which combine Central Processing Unit (CPU), Graphics Processing Unit (GPU) and Neural Processing Unit (NPU) in a single package (see story left).

There’s a choice of 15W, 28W or 45W models depending on the machine. Some models can be configured without a discrete GPU and still be adequate for 3D CAD workflows, taking advantage of the much-improved Intel Arc graphics and Arc Pro driver with certifications for many leading CAD tools.

The Precision 3490 and Precision 3590 look best suited to entry-level 3D CAD, with a choice of processors up to the 28W Intel Core Ultra 7 165H and up to Nvidia RTX 500 Ada (4 GB) graphics. The 3490 starts at 1.40 kg and the 3590 at 1.62 kg.

The Precision 3591 offers more powerful processors up to the 45W Intel Core Ultra 9 185H vPro and Nvidia RTX 2000 Ada (8 GB) graphics, which takes it into the realms of entry-level visualisation. It starts at 1.79 kg.

All three Precision 3000 Series laptops feature displays with a maximum resolution of 1,920 x 1,080 (FHD).

The Precision 5490, which Dell claims to be the world’s smallest and most powerful 14-inch mobile workstation, offers more powerful graphics options up to the Nvidia RTX 3000 Ada (8 GB). It comes with a choice of displays up to a QHD+ (2,560 x 1,600), 60Hz, anti-reflection, touch, 100% sRGB, 500 nits, wide viewing angle PremierColor panel with active pen support. It starts at 1.48 kg.

Meanwhile, the Precision 5690 goes all the way up to the Nvidia RTX 5000 Ada (16 GB) graphics for more demanding visualisation workflows. The Precision 5690 is the only new model to offer a 4K display, with an OLED touch, 3,840 x 2,400, 60Hz, 400 nits WLED, Adobe 100% min and DCI-P3 100%.


Nvidia RTX 2000 Ada GPU for compact desktop workstations launches

Nvidia has added the Nvidia RTX 2000 Ada Generation (16 GB) to its family of desktop workstation GPUs based on the Ada Lovelace architecture. The $625 add-in board launched last month at Dassault Systèmes 3D Experience World in Dallas, Texas – a significant location given that product designers and engineers are a key target audience (along with architects).

The Nvidia RTX 2000 Ada Generation is a low-profile dual slot board, designed to fit in small form factor and ultra-compact workstations like the HP Z2 SFF / Mini and Lenovo ThinkStation P3 Ultra. It comes with both a half-height and full-height ATX bracket, so it can be used in full sized towers as well.

The Nvidia RTX 2000 Ada Generation comprises 2,816 CUDA cores delivering 12 TFLOPs of peak single-precision performance, 88 Tensor (AI) cores delivering 192 TFLOPs of peak performance and 22 RT (ray tracing) cores delivering 28 TFLOPs of peak performance.
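As a rough sanity check on the single-precision figure, peak FP32 throughput is conventionally estimated as CUDA cores × 2 floating-point operations per clock (one fused multiply-add) × clock speed. Working backwards from the quoted 12 TFLOPs and 2,816 CUDA cores gives the implied boost clock. The two-operations-per-core-per-clock convention is our assumption here, not a figure from Nvidia’s announcement.

```latex
f_{\text{boost}} \approx \frac{\text{FP32 peak}}{2 \times N_{\text{CUDA}}}
  = \frac{12 \times 10^{12}\ \text{FLOP/s}}{2 \times 2{,}816}
  \approx 2.13 \times 10^{9}\ \text{Hz} \approx 2.13\ \text{GHz}
```

Peak figures of this kind assume the GPU holds that clock; sustained throughput within a 70W board power may well be lower in practice.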

The Nvidia RTX 2000 Ada Generation (16 GB) will replace the Ampere-based Nvidia RTX A2000 (12GB) and provide a much more price competitive option than the $1,250 Nvidia RTX 4000 SFF Ada Generation (20 GB), which launched last year.

The Nvidia RTX 2000 Ada looks identical to both of these GPUs and shares many of the same characteristics: four mini DisplayPort 1.4a connectors and a 70W max board power (so it doesn’t need an external 6-pin connector). However, unlike the Nvidia RTX 4000 SFF Ada, it does not support Nvidia Quadro Sync II or 3D stereo.

Target workflows for the Nvidia RTX 2000 Ada Generation include 3D CAD and BIM (Solidworks, Siemens NX, Revit, etc.) and, in particular, design-centric visualisation and VR. Compared to the Nvidia RTX A2000, Nvidia reports superior performance in rendering (1.5 times better in KeyShot, 1.5 times better in V-Ray and 1.18 times better in Solidworks Visualize) and in VR (1.4 times better in Enscape and 1.3 times better in KeyShot).

Nvidia also highlights much bigger rendering performance gains over 2019’s Nvidia Quadro P2200, a card that many users will likely be upgrading from. The Nvidia RTX 2000 Ada Generation is up to four times faster in Solidworks Visualize. This big leap is probably because the 5 GB Quadro P2200 does not have dedicated ray tracing cores, and possibly also because it’s under-resourced in terms of memory.

As the race for AI continues, Nvidia is also keen to highlight the Nvidia RTX 2000 Ada Generation’s AI credentials. According to Nvidia, the GPU doubles the AI throughput compared to the previous generation, thanks to fourth generation Tensor cores. With text-to-image software Stable Diffusion, which can be used for architectural concept design, Nvidia reports 1.6 times better performance compared to the Nvidia RTX A2000.

Nvidia says a lot of work has been done to ensure that all of its RTX Ada Generation GPUs are able to run AI inferencing on the desktop, but admits that the RTX 2000 Ada won’t be a ‘powerhouse for AI training’.

■ www.nvidia.com

What AEC Magazine thinks

The Nvidia RTX 2000 Ada Generation is an important release for Nvidia as it hits the price/performance sweet spot for architects, engineers and product designers who want to take their design workflows beyond CAD/BIM and into the realms of visualisation.

Prior to the launch, users had to pay a significant premium to get a low profile Ada Generation board (Nvidia RTX 4000 SFF Ada), or go for the older Nvidia RTX A2000, which has less memory and does not support DLSS 3.0, which can make a big difference to real time performance in tools such as D5 Render, Chaos Vantage, Autodesk VRED and Nvidia Omniverse.

But what about the low end of the pro GPU market? For now, CAD users must still rely on the Nvidia T400 or T1000, which lack the dedicated Tensor cores for AI or RT cores for ray tracing. As CAD applications start to introduce ray tracing into the viewport and, especially, as AI becomes more important, we would not be surprised if Nvidia introduces entry-level Ada Generation RTX desktop cards soon.



Dell unveils 14th Gen Intel Core workstations

Dell has updated its entry-level desktop workstation line-up with the launch of the Precision 3280 CFF (compact form factor) and Precision 3680 Tower. Both workstations feature 14th Gen Intel Core Processors and come with a choice of Nvidia RTX GPUs.

The Dell Precision 3280 CFF is billed as the world’s smallest workstation that supports Tensor core GPUs, referring to the optional Nvidia RTX 2000 Ada Generation or Nvidia RTX 4000 SFF Ada Generation. Both GPUs are well suited to CAD and visualisation workflows in applications including Enscape, Twinmotion and V-Ray. The machine can also be configured with CAD-focused GPUs, including the Nvidia T1000 and AMD Radeon Pro W6400.

The compact chassis is designed for flexibility. It can be set on a desk, mounted on the back of a monitor, or kept in the datacentre or server room, for access over a 1:1 connection. Seven units can be stored in a 5U rack space.

The Dell Precision 3280 CFF can support a range of 14th Gen Intel Core CPUs up to 65W (which can be driven at 80W), but not the top-end 125W models.

The Dell Precision 3680 Tower is a more traditional desktop workstation offering more expandability, and higher spec CPUs. It comes in at 373 x 173 x 420mm. It supports a wide range of 14th Gen Intel Core CPUs, up to the 125W Intel Core i9-14900K vPro. According to Dell, this can be run up to 253W for extended periods and, rather than having a limited Turbo experience of 15 to 30 seconds, the Turbo is now unlimited. This sustained performance is in part due to a new ‘Premium Cooling Solution’ featuring an air shroud and enhanced side panel venting, which is designed to keep the system running cool and quiet.

■ www.dell.com

The compact Dell Precision 3280 CFF can be mounted behind a display

Ray tracing and AI come to entry-level Nvidia Ada laptop GPUs

Nvidia has added new entry-level models to its line-up of Ada Lovelace pro laptop GPUs. The Nvidia RTX 500 and 1000 Ada Generation will be available soon in highly portable mobile workstations from Dell, HP, Lenovo and MSI. They will join the Nvidia RTX 2000, 3000, 3500, 4000 and 5000 Ada Generation laptop GPUs which launched last year.

The Nvidia RTX 500 and 1000 Ada will replace the Ampere-based RTX A500 and A1000. On paper, there’s a significant generation-on-generation improvement in single precision floating-point performance (TFLOPs), although the new GPUs have a higher TGP (total graphics power) so some of this may be because the GPUs can draw more power. There’s a much bigger leap in AI performance thanks to fourth generation Tensor cores.

AI is central to Nvidia’s messaging around the new chips. The company points out that next generation mobile workstations with Ada Generation GPUs will include both a neural processing unit (NPU), a component of the CPU [think Intel Core Ultra], and an Nvidia RTX GPU, which includes Tensor Cores for AI processing. The company states that the NPU helps offload light AI tasks, while the GPU provides additional AI performance for more demanding day-to-day AI workflows.

Nvidia claims the higher level of AI acceleration delivered by the GPU is essential for tackling a wide range of AI-based tasks, such as video conferencing with high-quality AI effects, streaming videos with AI upscaling or working faster with generative AI and content creation.

According to the company, the new Nvidia RTX 500 GPU delivers up to 14x the generative AI performance for models like Stable Diffusion, up to 3x faster photo editing with AI and up to 10x the graphics performance for 3D rendering compared with a CPU-only configuration.

■ www.nvidia.com

What AEC Magazine thinks

It’s an interesting time for entry-level mobile workstations. Nvidia is facing increased competition from AMD and Intel, which are introducing CPUs with increasingly powerful integrated GPUs that can deliver good performance for mainstream 3D CAD. It means there is less need for a discrete Nvidia GPU in certain workflows, potentially saving money and power.

For example, the AMD Ryzen Pro 7000 Series, at the heart of the HP ZBook Firefly G10 A and Lenovo ThinkPad P14s, can deliver good performance in Solidworks, Revit and other CAD/BIM tools. New Intel Core Ultra laptop processors with Arc Pro graphics offer a similar single processor value proposition (see page 48 for more on this).

Of course, with a discrete GPU, Nvidia can scale up performance, and not just in terms of core 3D graphics or hardware ray tracing. Nvidia’s Tensor Cores can significantly boost AI processing. At the moment, most of the benefits for AEC firms are either generic (text-to-image or background blur on video calls) or focus on photo editing or visualisation. Many viz tools include AI de-noising or Nvidia DLSS 3, which boosts 3D performance by generating additional high-quality frames.

As more AEC software firms embrace AI, applications are likely to grow, although with a growing trend for browser-based BIM tools and centralisation of data, much of this processing could be handled by GPUs in the cloud or in servers.


The productivity promise of auto-drawings

In a world swimming in AI-related hype, several CAD software firms are making serious progress in the race to eliminate workflow bottlenecks using new technologies. Martyn Day looks at the field of drawing automation and identifies some of its frontrunners

Throughout their history, desktop CAD systems have been sold on the promise of delivering one overriding benefit: improved productivity when compared to manual drawing.

That makes sense, since productivity improvements are always sought by firms looking to save money and to increase their competitive edge. In these highly digitised times, the deployment of technology has become a key differentiator in the AEC world.

While it took twenty years for most AEC firms to move from drawing boards to 2D CAD, many like to think that the adoption of 3D CAD and BIM has been faster. In fact, that move has also taken the best part of two decades. And many of those firms using BIM tools have still not progressed as far as they might, remaining resolutely 2D-centric. On the other hand, who can blame them, when drawings remain the primary deliverable of most contracts – and the most frequently cited target in legal wrangles?

BIM was sold to 2D CAD users as a way to model their buildings and get automatic sections and elevations as a base for drawings, with the added benefit of coordinated documentation updates when model designs change.

In fact, what has happened is that the number of drawings produced has mushroomed. Many BIM seats are not involved in design at all, but instead focus on documenting the relatively poor automated output of 3D models.

A cynic might applaud the CAD software firms for increasing the costs associated with a documentation seat and driving appetite for complex document management systems to handle greater volumes of drawings. After all, many firms take the output from BIM tools such as Revit and perform refinements to drawing output in AutoCAD - the king of the 2D drawing world. However, this undermines the benefit of keeping both models and drawings in the BIM environment, breaking the links needed to coordinate updates to sections and elevations.

As documentation mushrooms, and clients increasingly demand BIM deliverables (even if they only do so because that’s what their consultant advises), the result is a heftier workload that consumes available resources and delivers a serious hit to the bottom line.

Many design IT managers have told me in confidence that they wish that drawings would simply ‘go away’. They would prefer the model to be the deliverable from which all data is extracted by contractors and project managers.

But here, they are at odds with the industry’s foremost institutions, such as the Royal Institute of British Architects (RIBA) and the American Institute of Architects (AIA), not to mention complex legal frameworks that are still largely predicated on drawings.

Here to stay

Another question I hear regularly is, “Why can’t AEC be more like manufacturing?” The misconception here is that once manufacturing designs move into CAD, the importance of drawings is greatly diminished.

But it might shock readers to hear that many manufacturing sectors – and in particular, automotive and aerospace – are just as burdened by the need to produce manufacturing drawings to accompany their 3D models, typically for reasons relating to fabrication and legal compliance. And this is the case even where parts are 3D printed directly from a 3D CAD model.

With that in mind, it’s safe to say that drawings are simply not going to ‘go away’. They will continue to be essential deliverables, although uses may change and their relevance in some areas may decline.

This is not to say, however, that there is nothing to be done about this situation. In fact, modern mechanical CAD (MCAD) tools do a much better job of automatically creating drawings and reducing repetitive tasks than today’s BIM tools, even for very complex parts and assemblies. That said, even with this progress, it’s estimated that engineers spend between 25% and 40% of their time refining drawings.

This is frustrating, because in modelling a building, a car or an aircraft, most design and engineering decisions have already been made. That makes it hard to justify excessive amounts of time spent creating 2D drawings from a model, a process that adds no real value to a project.

And as complexity increases, the case for drawings starts to fall apart. Take, for example, Frank Gehry, who couldn’t get any of his buildings made until he began using Dassault Systèmes Catia and McNeel Rhino. The drawings were so hard to understand that fabricators would bump up costs in order to accommodate the impact of inevitable misunderstandings. When Gehry’s practice switched to modelling buildings in 3D CAD and started sending Catia models to fabricators, he saw a corresponding drop in quotes, and bids were suddenly aligned to within 1% of each other. As a result, his buildings became viable – but Gehry’s practice still produces 2D section drawings in AutoCAD to detail the more standard aspects of its designs. In other words, models offer clarity in situations of complexity, but for most buildings, simple rectangles are hardly likely to bust budgets.

Auto-drawings on demand

When I first met Greg Schleusner, HOK’s director of design technology, at a Bricsys (BricsCAD) meeting in Stockholm, Sweden, in 2019, we quickly got talking about automated drawings. He explained to me that this was something the industry desperately needed, but that nobody seemed interested in developing the technology.

The case he put forward was clear. At that time, HOK was spending around $18 million per year on creating and managing drawings and construction docs (inclusive of staff costs, software and so on). Schleusner reckoned that, if the firm could automate 60% of the work associated with construction documents, or maybe 50% of the work associated with drawings, it would cut those costs in half. Even partial automation had the potential to deliver significant cost benefits.

While researching the topic, Schleusner discovered a Korean open-source project conducted for a building authority that focused on taking in IFC data and creating floor plan drawings for fire code review (www.tinyurl.com/KBim-D-Generator). Labels, text, drawing grids and dimensions were all added by the programme when the automated 2D drawings were viewed. He was impressed by the quality of the results. Everything displayed was dynamically created on the fly. It didn’t exist in the dataset.

“I think, at least from my perspective, the problem is we import all this other stuff into Revit. That is so much of our design effort, just to waste time to make drawings that we must make. So not only are the drawings themselves costly, but we also have this interoperability problem that needs to be solved. We have to send model data between design tools to make drawings. An open auto-drawing tool would allow design teams to stay in Rhino,” said Schleusner.

“What do you need to make drawings? Do you need an accurate model? If we solve drawing automation, we are going to have to make better models. If we make better models – hey, we have better models! We are going to have to improve what we model to get a better drawing. Right now, we have so much drawing work, that we all end up ‘faking stuff’ in drawings, just to get them out the door. I actually think auto-drawings is a very complementary idea to making better models.”

‘‘ BIM 2.0’s killer feature is not going to be cloud; it’s going to be automation. Auto-drawings won’t just improve the speed of drawing production. They will ultimately mean fewer skilled people being tied up in documentation ’’

Since 2019, Schleusner has been bending the ears of leaders at many of the biggest CAD vendors, trying to spark their interest in auto-drawings. Now, with the advent of automation and AI, those vendors are scrambling to identify where best to apply new technologies. Since drawings represent ‘low-hanging fruit’, interest in auto-drawings has exploded.

Most of the early auto-drawing solutions will begin by offering configuration-based output (drawing templates). But Schleusner is keen to see work begin further upstream, at the project level. This would involve understanding what drawing sets are required by project managers and project architects, not just automating ‘the drawing’ but also automating project document sets. This would require the equivalent of HTML and CSS for drawing content, settings and how it is all put together.

“If the industry can solve this auto-drawing problem as a service, it could shatter the market into one thousand solutions, which is perfect. I don’t think you’ll need monolithic applications, because drawings are external and it’s an assembly process.”

In passing, Schleusner has also lamented that PDF is the ultimate industry deliverable, as the historic constraints of standard drawing sizes and scales (1:100, 1:200 and so on) limit the digital canvas. He explains that one of the reasons the industry makes so many drawings is the physical limitation of displaying text legibly within paper documents. “Couldn’t we just send one bloody big PDF drawing, which contractors never print out? If you can’t see something, then zoom in! While certain trades really liked this idea, it’s true that some want to print things out, which is a limitation. But if we can’t get the digital delivery of drawings right, how are we going to ever do the digital delivery of models?”

Greg Schleusner will be speaking at AEC Magazine’s NXT BLD and NXT DEV conferences on 25 / 26 June 2024 at London’s Queen Elizabeth II Centre (www.nxtbld.com)
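Schleusner’s call for ‘the equivalent of HTML and CSS for drawing content’ can be illustrated with a short Python sketch. It is purely illustrative and assumes nothing about any vendor’s product: the sheet numbers, the `SheetSpec` class, the `STYLE_RULES` table and both functions are hypothetical. Content (which sheets and views a project needs) is declared separately from style (scale, text height), and a CSS-like cascade lets a rule for one view type override only the defaults it mentions.

```python
from dataclasses import dataclass, field


@dataclass
class SheetSpec:
    """Content layer (the 'HTML'): what a sheet must show, with no styling."""
    number: str
    view_type: str                 # e.g. "plan" or "section"
    level: str
    annotations: list[str] = field(default_factory=list)


# Style layer (the 'CSS'): how each kind of view is drawn. Rules cascade, so a
# view-type rule overrides only the keys it mentions. All values are hypothetical.
STYLE_RULES: dict[str, dict] = {
    "default": {"scale": "1:100", "text_height_mm": 2.5, "line_weight_mm": 0.25},
    "section": {"scale": "1:50"},
}


def resolve_style(view_type: str) -> dict:
    """Start from a copy of the default rule, then apply any override for this view type."""
    style = dict(STYLE_RULES["default"])
    style.update(STYLE_RULES.get(view_type, {}))
    return style


def build_drawing_set(levels: list[str]) -> list[tuple[SheetSpec, dict]]:
    """Declare one plan sheet per level plus one building section,
    and pair each sheet with its resolved style."""
    sheets = [
        SheetSpec(f"A-1{i:02d}", "plan", level, ["room_tags", "grid_dimensions"])
        for i, level in enumerate(levels, start=1)
    ]
    sheets.append(SheetSpec("A-301", "section", "all", ["level_markers"]))
    return [(sheet, resolve_style(sheet.view_type)) for sheet in sheets]


for sheet, style in build_drawing_set(["Ground", "First"]):
    print(sheet.number, sheet.view_type, sheet.level, style["scale"])
```

For a two-storey project this declares sheets A-101 and A-102 as 1:100 plans and A-301 as a 1:50 section. Because the style table is separate from the sheet list, a practice could in principle restyle a whole set by editing one table, which is the appeal of the HTML/CSS analogy.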

The Gräbert view

Gräbert is based in Berlin and has been a key independent DWG software developer since 1994. It has armed many of the best-known CAD players with OEM DWG capabilities but is also a solutions developer in its own right. DWG tools that fall under its ARES Trinity brand (www.tinyurl.com/ares-trinity) work on desktop, mobile and cloud, where they compete head on with Autodesk AutoCAD and LT. You will find Gräbert tech in Snaptrude, Draftsight (Dassault Systèmes), Trimble, Solidworks, Onshape – all serious players in the AEC and MCAD worlds, which require good DWG capabilities and want to leverage Gräbert’s cloud-based DWG Editor in particular.

Gräbert has been developing automated drawing technology for a number of years. The company is now at the stage where it has put code into the hands of some clients to test out. Thanks to the company’s broad reach in the CAD community, you can expect to see automated drawing features appear from competing CAD firms, aiming at Autodesk AutoCAD / LT and Revit. Some, such as Draftsight, will choose to embed these capabilities in their own CAD programmes. Others may opt to make auto-drawings a cloud service, where users just drag and drop their IFC or RVT into the service and get back drawings for all the floors of their projects.

Gräbert’s technology does not currently use AI, but is procedural and based on template configurations. In my dealings with Gräbert, I have to say that the company is unusual, in that it doesn’t oversell its products. In fact, it’s more likely to downplay its capabilities and is not all about pushing the Gräbert brand. In many ways, this reminds me of McNeel, the developer of Rhino.

I recently caught up with CTO Dr Robert Gräbert to discuss the company’s auto-drawing development. He explained that with templates and rules, it’s possible to get quite far in auto-drawing creation without AI. AI approaches look good for investors, he said, but template views offering a variety of layout styles are already available in MCAD applications such as Autodesk Fusion.

And, on the subject of Autodesk, he foresees Autodesk adding similar template-based drawing layout technology, either in Revit or on the cloud as a service.

“We think drawings are not going to go away. You’re not going to get to a future where you’re just going to exchange models, but you don’t want to spend more time working on drawings,” explained Dr Gräbert. “The fact is that BIM modellers have done a poor job of producing documentation. Users are frustrated that the drawings themselves don’t contain a lot of information. They’re just ‘the output’, they don’t capture anything.”

He continues: “There was this hope that if we moved to exchange 3D models, plus metadata, we were going to get better collaboration within industry. It’s a big vision and it’s attractive, but I think what we’ve seen is the reality that it’s very difficult to manipulate these models outside of dedicated authoring tools like Revit or others.”

Most software vendors have no interest in exchanging models in a neutral format, he believes. In other words, they want users to adopt their own format. “And legally we’ve never got to the point where the model is accepted as the deliverable. It’s an additional deliverable, potentially,” he adds.

“The biggest proponents of BIM are large owners, because they can reap the benefits of all their constituent subcontractors doing this together. Clients now say, ‘I want to see a 3D BIM model’, but what hasn’t happened is that this model is taken from design, through construction and into operation. That never really materialised in a meaningful way.”

According to Dr Gräbert, every BIM tool today gives the user the ability to create content, view it and deliver section views, plan views and vertical sections. It gives them the geometry that they’re going to use to create that view from the model, and that’s already 90% of drawing automation.

“All we are talking about today is, ‘We know you need fifteen sheets, and you need a view per floor. And we know that you’re probably going to want to tag your rooms. And probably, you want to have some dimensions aligned to it.’ We’re really talking about automating annotation and view orchestration.”

Gräbert has decided to differentiate between what it can automate and what it should automate. This has been a big part of its work in this area over the past year – and the focus is very much on continual improvement. “It’s still not perfect, but we’re working very closely with Snaptrude and others. We can ingest an IFC or Revit file, and we can output the PDFs. Now we have put that behind a web service. It’s still usable as a desktop product: if you are an ARES user, you can bring in IFC or RVT, and we will auto-generate floor plans with sections, as to what we think is appropriate. We have solved simple problems, like, how do you figure out where to put all the labels and everything else? We just implemented an approach that makes sure that labels never overlap and then you can tweak and modify after.”
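Gräbert hasn’t said how its labelling works. A common way to guarantee non-overlapping labels is a greedy heuristic: try a few candidate positions around each anchor point and accept the first one that doesn’t collide with anything already placed, moving further out if all of them are taken. The sketch below assumes rectangular labels of equal size; `Box`, `place_labels()`, the candidate order and the retry distances are illustrative assumptions, not Gräbert’s method.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass(frozen=True)
class Box:
    x: float  # lower-left corner
    y: float
    w: float
    h: float

    def overlaps(self, other: Box) -> bool:
        # Two axis-aligned boxes intersect only if they overlap on both axes
        return (self.x < other.x + other.w and other.x < self.x + self.w
                and self.y < other.y + other.h and other.y < self.y + self.h)


def candidates(px: float, py: float, w: float, h: float, gap: float) -> Iterator[Box]:
    # Try above-right, above-left, below-right, below-left of the anchor point
    yield Box(px + gap, py + gap, w, h)
    yield Box(px - gap - w, py + gap, w, h)
    yield Box(px + gap, py - gap - h, w, h)
    yield Box(px - gap - w, py - gap - h, w, h)


def place_labels(anchors: dict[str, tuple[float, float]], w: float, h: float,
                 obstacles: Optional[list[Box]] = None, gap: float = 0.5,
                 max_rings: int = 5) -> dict[str, Optional[Box]]:
    """Greedily place each label in the first free candidate slot.
    If all four slots collide, retry further from the anchor."""
    used = list(obstacles or [])  # drawing geometry that labels must avoid
    placed: dict[str, Optional[Box]] = {}
    for name, (px, py) in anchors.items():
        choice = None
        for ring in range(1, max_rings + 1):
            choice = next((box for box in candidates(px, py, w, h, gap * ring)
                           if not any(box.overlaps(u) for u in used)), None)
            if choice is not None:
                used.append(choice)
                break
        placed[name] = choice  # None means "left for the user to fix"
    return placed


# Three labels competing for the same anchor point
labels = place_labels({"Kitchen": (0, 0), "Store": (0, 0), "WC": (0, 0)}, w=4, h=1)
for name, box in labels.items():
    print(name, box)
```

Labels that can’t be placed come back as `None` rather than being forced into an overlap, which fits the “tweak and modify after” workflow Dr Gräbert describes.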

The web service is a kind of design automation API, he explained. It will create a job transform order for any template, request the model be processed and the results placed in a project Slack channel. “So, you get an updated PDF output. That’s probably not a real use case, as somebody would download and want to do tweaks. But the output is already very good. It’s just a long road to address the fundamental building blocks to get full automation,” he said.
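The job-based flow Dr Gräbert describes can be sketched as a simple queue: submit a model and a template, process the job, then notify a channel with the resulting PDFs. This is a hypothetical, in-process illustration. `DrawingService`, `submit()`, `run_pending()` and the `render` / `notify` callbacks are invented names; a real service would sit behind HTTP with persistent storage and the monitoring Gräbert says it still needs. No real Slack API is called here: notification is just a callback.

```python
from __future__ import annotations

import itertools
from collections import deque
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable


class Status(Enum):
    QUEUED = "queued"
    DONE = "done"
    FAILED = "failed"


@dataclass
class Job:
    job_id: int
    model_file: str  # e.g. an uploaded IFC or RVT file
    template: str    # name of the drawing template to apply
    status: Status = Status.QUEUED
    outputs: list[str] = field(default_factory=list)


class DrawingService:
    """Hypothetical stand-in for a cloud design-automation endpoint."""

    def __init__(self, render: Callable[[str, str], list[str]],
                 notify: Callable[[Job], None]) -> None:
        self._render = render  # (model, template) -> list of PDF file names
        self._notify = notify  # e.g. post a link to a project chat channel
        self._ids = itertools.count(1)
        self._queue: deque[Job] = deque()

    def submit(self, model_file: str, template: str) -> Job:
        job = Job(next(self._ids), model_file, template)
        self._queue.append(job)
        return job

    def run_pending(self) -> None:
        # Process jobs in submission order; a failure marks the job, not the service
        while self._queue:
            job = self._queue.popleft()
            try:
                job.outputs = self._render(job.model_file, job.template)
                job.status = Status.DONE
            except Exception:
                job.status = Status.FAILED
            self._notify(job)


messages: list[str] = []
service = DrawingService(
    render=lambda model, template: [f"{model}.{template}.level{i}.pdf" for i in range(2)],
    notify=lambda job: messages.append(f"job {job.job_id}: {job.status.value}"),
)
job = service.submit("office.ifc", "floor-plans")
service.run_pending()
print(job.outputs, messages)
```

Keeping rendering and notification as injected callbacks means the same queue could drive a desktop ARES-style batch run or a cloud worker; only the callbacks change.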

Gräbert is now experimenting with how to make the process more customisable. Users would configure a local job, and the cloud service would process it. For now, the cloud features are still in the early days of development and need a monitoring system before they can become a commercial-grade offering. Gräbert also doesn’t have a price tag for this service yet. This is currently under evaluation, along with scoping out the kind of firms that might find it useful, such as AutoCAD, Revit and ARES users.

Pricing in the auto-drawings market could become a very interesting battleground. If drawings become 50% automated, firms like HOK will not pay half their budget for this kind of automation. Software firms that see per-seat subscription revenue fall might push for value-based billing, perhaps a percentage of project value, but this is unpopular as hell.

As Dr Gräbert mused during our conversation: “There’s going to be some resistance in the market to a ‘value’ based fee here, because customers want to pay a fixed price for this. Maybe a price per project could be something that software vendors could offer, but this is a reason why big groups of CAD users are meeting up, because they don’t want to pay vendors a portion of their revenue. They want to pay a fixed fee, ideally, once and never again – and if they have to pay annually, so be it, but that’s all.”

Powered by Gräbert

I also talked with Jonathan Asher, Catia global sales director at Dassault Systèmes. He confirmed that the Gräbert technology is being used in his company’s MCAD software Solidworks and that its DraftSight programme is built on top of Gräbert’s ARES technology.

Auto-drawings were announced at Dassault Systèmes 3D Experience World in February 2024, a technology that will be available to Solidworks users through the Dassault Systèmes 3D Experience cloud platform. The focus is very much on MCAD users, but DraftSight (www.draftsight.com) will obviously bring the technology into the AutoCAD and AEC markets. Nobody yet knows how much it will cost.

‘‘ We are going to have to improve what we model to get a better drawing. Right now, we have so much drawing work, that we all end up ‘faking stuff’ in drawings, just to get them out the door

Greg Schleusner, director of design technology, HOK ’’

Unlike the many startups arriving fresh to the AEC market, Gräbert does not rely on venture funding, thanks to its long-time stability and its income from licensing its DWG technology and selling ARES. As such, it completely lacks the ‘big story’ pitch that attracts investors. Nor does it face shareholder pressure to deliver huge returns. It works with firms it likes and solves the problems its customers raise. So, if all this takes some time, Gräbert can just keep going.

Dr Robert Gräbert will be speaking at AEC Magazine’s NXT DEV conference on 26 June 2024 at London’s Queen Elizabeth II Centre (www.nxtdev.build)

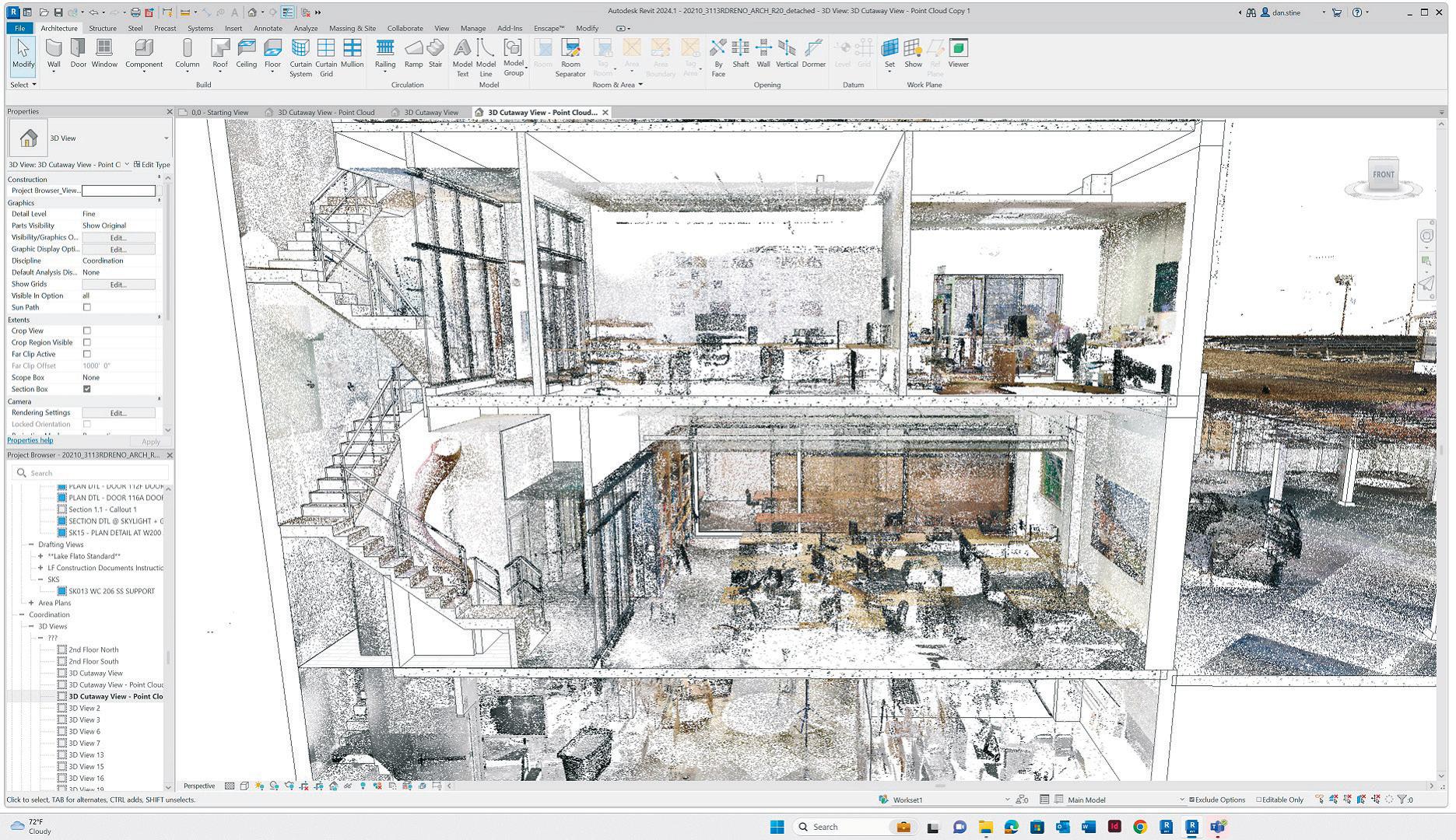

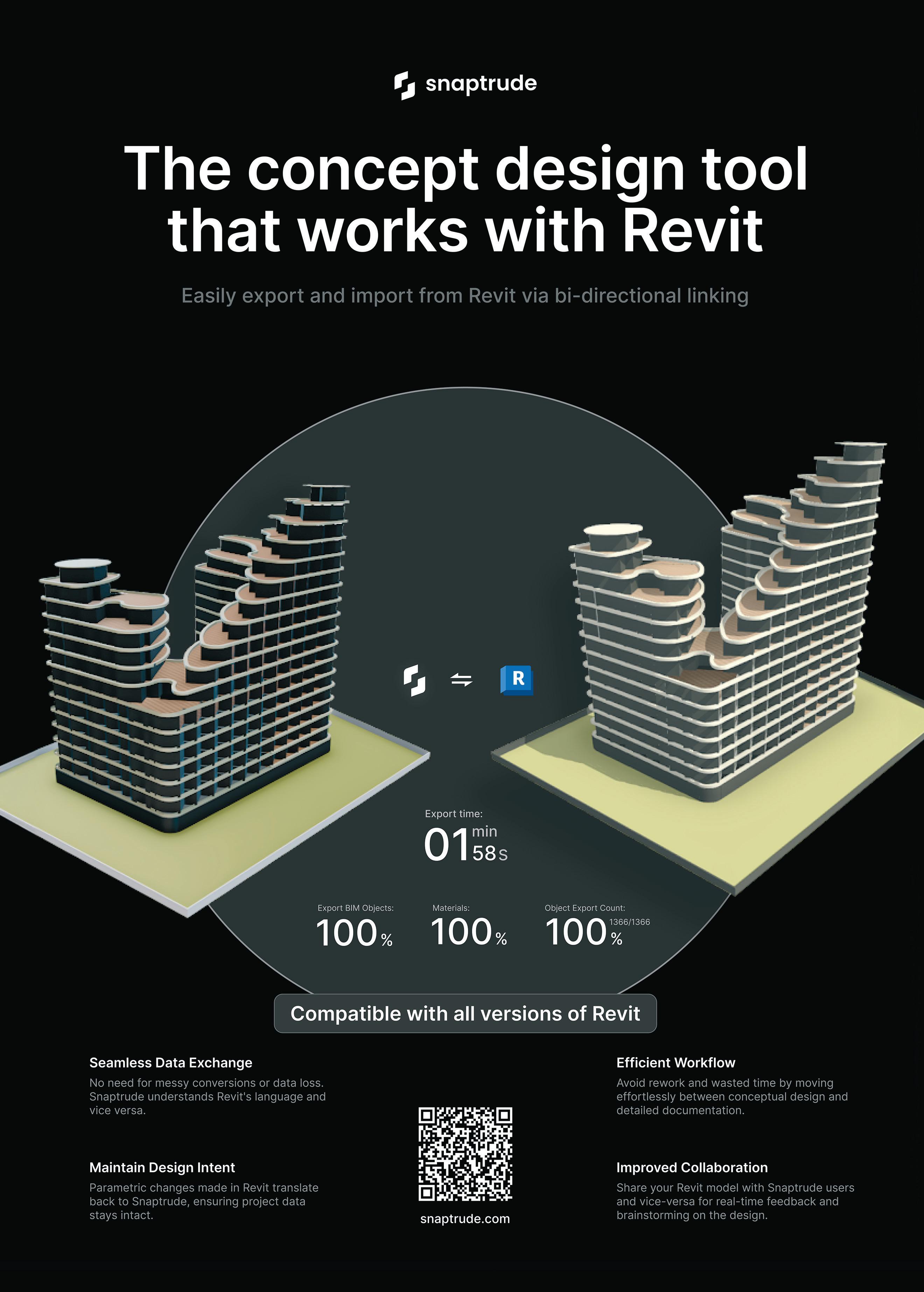

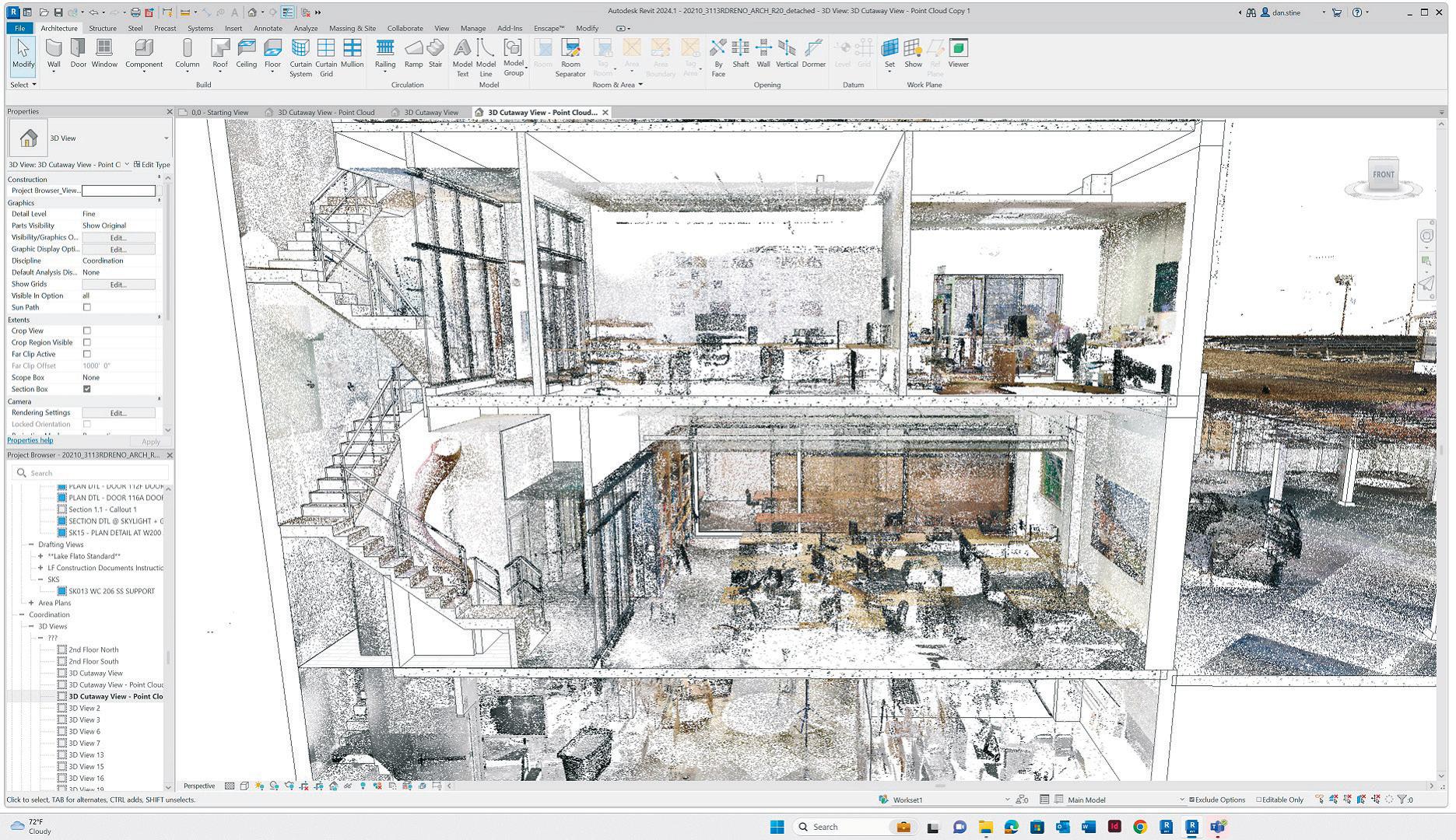

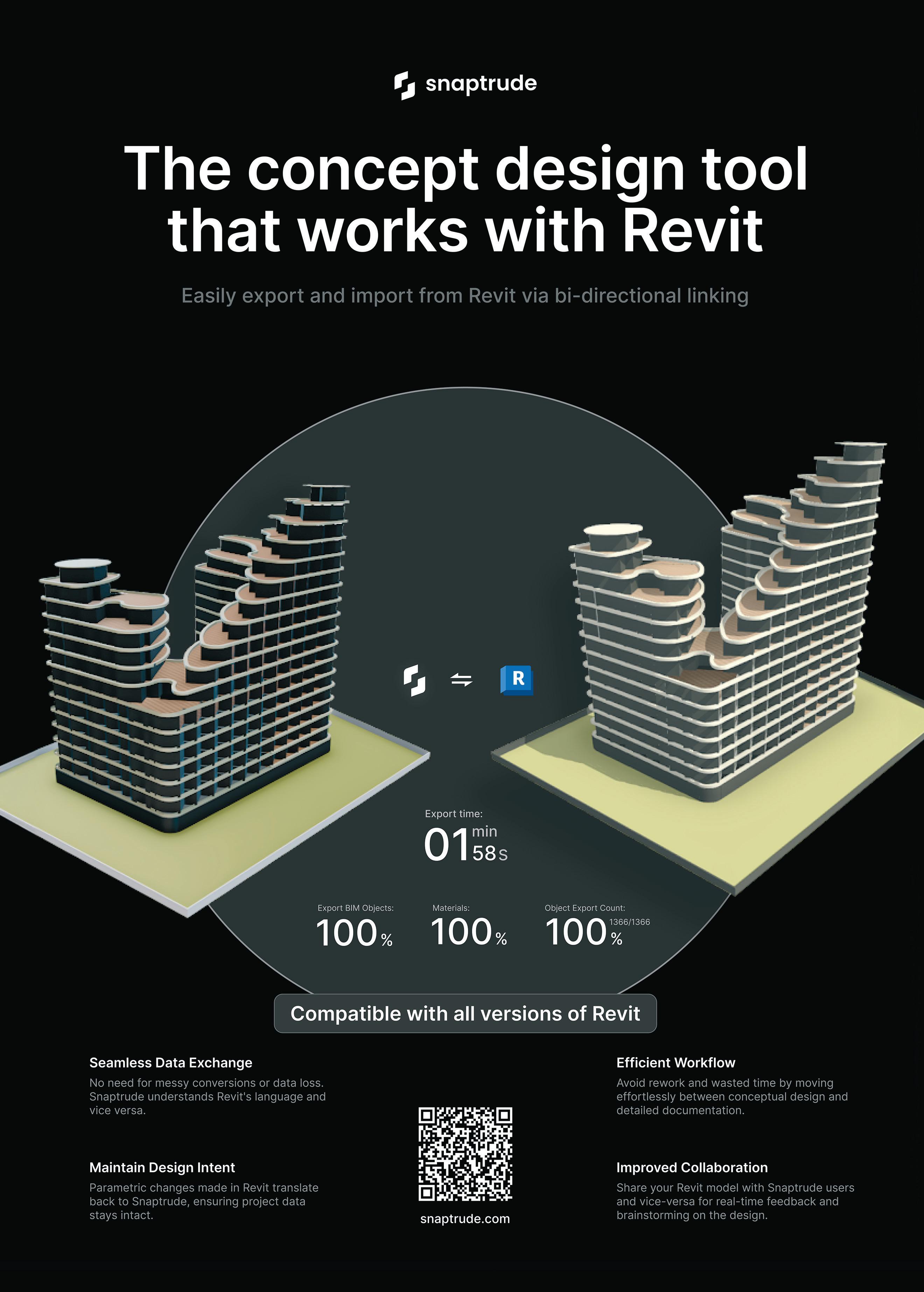

Meanwhile, Snaptrude (www.snaptrude.com) represents the next generation of cloud-based BIM tools and, while the company has been mostly working on the 3D part of the RVT file format, by licensing Gräbert’s DWG technology, it will get a cloud-capable 2D DWG engine, without having to reinvent the wheel.

Snaptrude has been experimenting with the auto-drawing capability, which I saw running auto-dimensioning in January 2024. It’s still early days, but once Snaptrude has figured out its play, it’s likely the company will be able to enable it quickly for every user.

Trimble Drawing is also based on Gräbert ARES. As the company is part of this OEM family, I am pretty sure that Trimble will also be offering auto-drawings soon.

Powered by AI

Eventually, I suspect AI will feature in most auto-drawing solutions. To start with, there will be procedural AI, providing configurations and so on. From there, we will see more companies attempting to use AI to learn drawing layouts and perform automation functions, such as auto dimension, auto label, auto title block, auto table, auto grid and so on.

To figure out where all this might lead, it’s useful to first look at Swapp. Based in Israel, Swapp’s initial application of AI to Revit promised us a miracle. What it proposed was to take users from 2D sketches to fully detailed BIM, with all the drawings involved, in the time it took them to go and have lunch.

Along the way, Swapp would learn from past projects and then automate Revit detail models – a proposition that favours standard, rectangular, predictable buildings, such as student blocks, hospitals and offices.

That was the message delivered by Swapp’s chief science officer Adi Shavit at AEC Magazine’s NXT BLD 2023 (www.nxtdev.build/speakers/adi-shavit). More recently, I caught up with Shavit to discuss auto-drawings. As he explained, the company has pivoted its software engineering focus to deliver the auto-drawing component as a bespoke solution, as well as expanding to Canada and the UK.

Shavit explained how, in talking with clients over the past year, the number one feature that they wanted to know more about was auto-drawings, and not so much about the magic at work in turning 2D sketches into fully detailed Revit models.

However, to understand how to make a drawing, Swapp needs the model data. “All of our processing is done on our system in the cloud. We have a plug-in for Revit, which does the export and the interoperability part, but nothing really happens on the desktop. It talks to our system and sends us the model, then we generate the construction documents from that data and bring it back into Revit drawings,” Shavit explained.

“We are working with architecture companies, and we essentially say, ‘You can think of Swapp like you outsource drawing production to a team in Belarus or in India, but our delivery times for drawings are constant.’ Meanwhile, our customers are saying that they are invoicing their customers sooner, by one month or two months. And for us, that’s the name of the game. We’re charging based on project size.”

When it comes to rules/template-based automation, Shavit told me that Swapp tackles the output standards problem from the opposite direction, because every office has its own standards, and even within firms, teams have different standards. Swapp treats output standardisation more broadly and at a much finer level of detail. There is no uniform Swapp standard. Instead, the standard is derived from past projects, so that Swapp generates drawings that comply with the specific customer’s way of doing things. This can start with Swapp looking at the customer’s past projects (both the BIM and the drawings), or it can be trained on projects currently in the works.
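Swapp uses AI to learn a customer’s drawing conventions, and its methods aren’t public. As a much simpler, purely statistical stand-in, the sketch below derives a style profile by taking the most common value of each attribute across past drawings, optionally per team, reflecting the point that teams within one firm differ. The attribute names, the `team` key and the `style_profile()` function are hypothetical.

```python
from collections import Counter
from typing import Optional


def style_profile(past_drawings: list[dict], team: Optional[str] = None) -> dict:
    """Most common value of each style attribute across past drawings,
    optionally restricted to one team's drawings."""
    counts: dict[str, Counter] = {}
    for drawing in past_drawings:
        if team is not None and drawing.get("team") != team:
            continue
        for key, value in drawing.items():
            if key == "team":
                continue  # grouping key, not a style attribute
            counts.setdefault(key, Counter())[value] += 1
    # most_common(1) returns [(value, count)] for the top value
    return {key: c.most_common(1)[0][0] for key, c in counts.items()}


past = [
    {"team": "housing", "text_height_mm": 2.5, "dim_style": "tick"},
    {"team": "housing", "text_height_mm": 2.5, "dim_style": "tick"},
    {"team": "health", "text_height_mm": 3.5, "dim_style": "arrow"},
    {"team": "health", "text_height_mm": 3.5, "dim_style": "arrow"},
    {"team": "health", "text_height_mm": 3.5, "dim_style": "arrow"},
]
print(style_profile(past))
print(style_profile(past, team="housing"))
```

A production system would weigh recency, project type and far richer features than a simple vote; the point is only that “the standard” is learned from the customer’s own output rather than imposed.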

Shavit readily admitted that the documentation that is generated is not 100% complete. There are always special cases, special fire requirements for certain parts of the building and other external requirements. Documents will still need to be put past a senior architect for liability reasons. But he claimed the company aims to automate between 80% and 95% of the most tedious, time-consuming work, enabling skilled staff to focus their efforts on other aspects of projects.

At its heart, Swapp is a consultative, bespoke auto-drawing solution that uses AI. Company executives are keen to stress that it keeps every client’s data and AI models siloed, so that they only inform that client’s own results. From this, it sounds as if Swapp already has clients using its technology, even though it’s hard to understand what the product does from the company’s website.

It will be interesting to see how Swapp’s output quality, speed and ‘completeness’ compares to Autodesk’s own in-Revit auto-drawing features (more on this ahead) when they eventually ship.

Adi Shavit will be speaking at AEC Magazine’s NXT DEV conference on 26 June 2024 at London’s Queen Elizabeth II Centre (www.nxtdev.build)

Established runners and riders

At Bentley Systems’ Year in Infrastructure 2023 event, chief technology officer Julien Moutte talked at some length about the possibilities of combining AI and digital twin technologies in the infrastructure sector. But in a side meeting, he also discussed Bentley’s interest in applying AI to design, introducing the concept of an AI ‘co-pilot’ and applying the technology to create automated drawings.

“What we’re looking at right now is how can we automate the production of drawings for site engineering first, because there’s a lot to be done there? And how can AI models be trained to look at past designs and past drawings and try to understand style, requirements, layout etcetera, and try to automate some of those drawings?” he said.

As has been previously mentioned and will likely come up again in this article, the core issue is having all information upfront to help the auto-drawing system.

If you have good, accurate models, it makes the process much easier. Where the input is low-quality models, the output will be low-quality drawings – and that represents a threat to the whole idea of automated drawings.

Said Moutte: “Producing drawings for any kind of design is a challenging task, because you need to have a lot more data first to learn each kind of drawing that you will need to produce for all the different disciplines. You need to understand each domain’s requirements, because a structural engineer, an architect, a mechanical engineer, an electrician have different needs from drawings. Also, to start with, you need to have a model with the necessary level of detail to produce those drawings.”
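Moutte’s point, echoed throughout this article, is that drawings can only be as good as the model behind them. A pre-flight check before any drawing generation is one way to catch gaps early. The sketch below is hypothetical: the `REQUIREMENTS` table of properties per discipline, the element dictionaries and `readiness_report()` are assumptions for illustration, not Bentley’s data model.

```python
# Hypothetical minimum properties that each discipline's drawings rely on
REQUIREMENTS: dict[str, dict[str, set[str]]] = {
    "architecture": {"Room": {"name", "level", "area"}},
    "structure": {
        "Beam": {"level", "section", "material"},
        "Column": {"level", "section", "material"},
    },
    "mechanical": {"Duct": {"level", "system", "size"}},
}


def readiness_report(elements: list[dict], discipline: str) -> list[tuple[str, list[str]]]:
    """Return (element id, missing properties) for every element whose
    data is too thin to drive this discipline's drawings."""
    needed = REQUIREMENTS[discipline]
    problems = []
    for element in elements:
        required = needed.get(element["category"])
        if required is None:
            continue  # this discipline doesn't draw this category
        props = element.get("props", {})
        # Treat absent and empty values alike
        missing = sorted(p for p in required if props.get(p) in (None, ""))
        if missing:
            problems.append((element["id"], missing))
    return problems


model = [
    {"id": "R1", "category": "Room", "props": {"name": "Office", "level": "L1", "area": 24.0}},
    {"id": "R2", "category": "Room", "props": {"name": "", "level": "L1"}},
    {"id": "B1", "category": "Beam", "props": {"level": "L2"}},
]
print(readiness_report(model, "architecture"))
print(readiness_report(model, "structure"))
```

The same model can pass for one discipline and fail for another, which is exactly the per-domain problem Moutte describes: an architect’s plans and a structural engineer’s drawings draw on different data.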

At the time of writing, we have no known delivery date for Bentley’s AI auto-drawings capabilities, but the company seems to have been working on it for quite a while. Bentley is watching emerging BIM and AI start-ups closely and is once more actively investigating the broader AEC market, looking beyond its infrastructure flagship products and its fascination with digital twins.

Julien Moutte will be speaking at AEC Magazine’s NXT BLD and NXT DEV conferences on 25 / 26 June 2024 at London’s Queen Elizabeth II Centre (www.nxtbld.com)