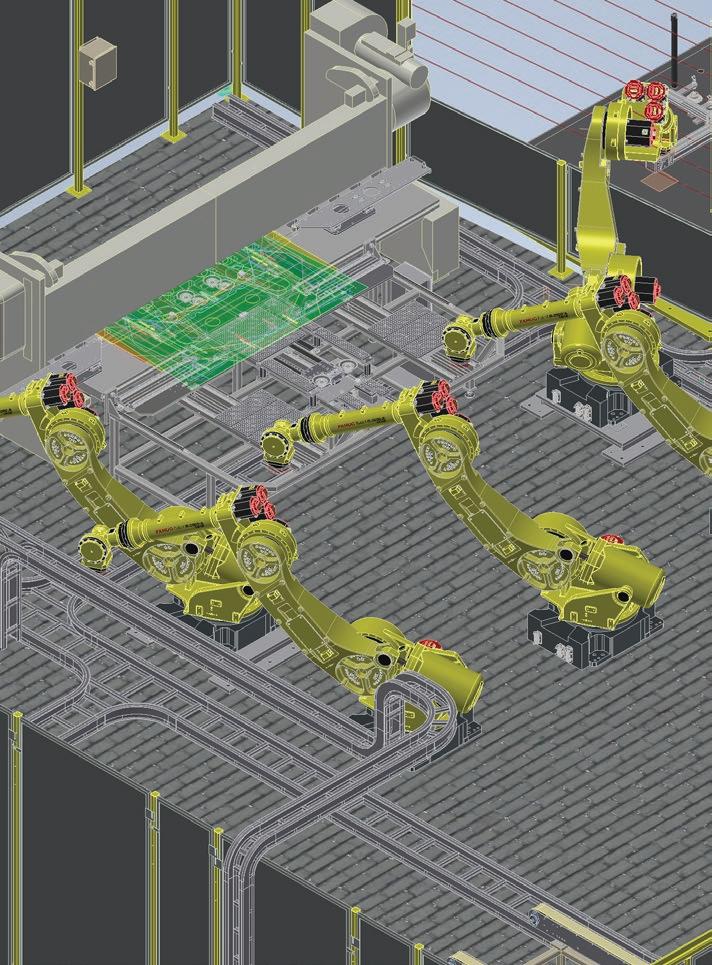


It feels a little hypocritical to kick off this Workstation Special Report with some advice on how to save energy. After all, I’ve spent the last two months testing workstations with some of the most demanding design and visualisation tools. The fastest processors get lauded, but they are almost always the biggest consumers of electricity.

We all know we need to use less energy to protect the environment, but there’s nothing like a global energy crisis to focus the mind. The fact is that the energy consumption of processors has been rising steadily. Today we have mainstream CPUs that draw over 250W at peak and high-end GPUs that hit 450W. And even though we’ve had the coldest snowy December in years, you can still warm a home office with a few high-performance machines. Thank goodness we didn’t do the Workstation Special Report this summer!

Performance per watt has always been a metric for workstations, but how many of us have really purchased a machine with that in mind? We buy our light bulbs and appliances based on energy efficiency, and run our washing machines in eco mode. Some of us even drive our cars at 56 mph, if the authorities have cleared the snow.

To learn how to save energy, we first need to understand how much our workstation consumes. The easiest way to do this is with a power meter. Simply plug it into a mains socket, then plug your workstation directly into that. It measures how much energy your entire workstation consumes in real time, in watts and kWh. Fancier ‘smart’ devices can track usage through an app. While memory, storage, motherboard, PSU, and fans all use energy, the CPU and GPU are by far the biggest consumers in your workstation. To understand how these work in relation to your workflows, you can get a pretty good idea with Windows Task Manager > Performance.

For the CPU, expect to see 100% usage when rendering. In CAD, it’s much lower, as fewer cores are used. For the GPU, real-time visualisation and GPU rendering will also use 100% of GPU resources – again, this is usually much less for CAD.

All processors have a sweet spot where they run most efficiently. After that point there are diminishing returns, and you’ll need to pump a lot more power into the chip to increase frequency and therefore performance. As a result, running CPUs at slightly lower frequencies can save power without slowing things down too much.

Windows has built-in power settings to control how much the CPU boosts. To explore the impact of this we rendered an 8K scene in KeyShot. With a high-performance power plan on a Dell Precision 5470 mobile workstation with the 45W Intel Core i7-12800H CPU (see page WS24) it consumed 0.025 kWh and took 18 mins 18 secs. The same scene rendered with an energy saving power plan used 0.022 kWh and took 24 mins 32 secs. Halving the number of cores had the opposite effect, increasing energy usage to 0.033 kWh and taking 36 mins 31 secs.
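To put those readings in context, the average power draw of each run can be worked out from the energy used and the time taken. The snippet below is a minimal sketch using only the three KeyShot figures quoted above; the function name and run labels are ours, for illustration.

```python
def average_power_watts(energy_kwh: float, minutes: int, seconds: int) -> float:
    """Average power (W) implied by an energy reading (kWh) and a render time."""
    duration_hours = (minutes * 60 + seconds) / 3600
    return energy_kwh * 1000 / duration_hours


# (energy in kWh, minutes, seconds) for each KeyShot run quoted in the text
runs = {
    "high-performance plan": (0.025, 18, 18),
    "energy saving plan": (0.022, 24, 32),
    "half the cores": (0.033, 36, 31),
}

for label, (kwh, mins, secs) in runs.items():
    watts = average_power_watts(kwh, mins, secs)
    print(f"{label}: {kwh} kWh in {mins}m {secs}s = about {watts:.0f} W on average")
```

On these numbers the energy saving plan averages roughly 54W against 82W for the high-performance plan. It runs for about a third longer, but still uses 12% less energy overall.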

Of course, some processors are more power hungry than others and the new 125W+ Intel Core i9-13900K (see page WS04) is one of the hungriest. The BOXX Apexx 4, for example (see page WS17), used 0.042 kWh to render the same scene, although it did this in 6 mins 09 secs. But wouldn’t it be nice if we could have our cake and eat it too? Save energy and still get the same (or very similar) performance out of your workstation. Well, you might just be able to do that by undervolting your processor. Modern CPUs allow you to lower the voltage (and therefore the power) that they receive, without necessarily slowing them down. It’s generally reserved for people who know what they’re doing, so proceed with caution, or ask your custom workstation provider if that’s an option.

The temptation when speccing out a workstation is to go for the most powerful components you can afford. With ray tracing, more performance generally means lower render times, so there’s a tangible benefit. However, for real time 3D there is a danger of paying for performance you simply don’t need. If your viz datasets aren’t that complex and you can smoothly navigate your scene with a 70W GPU like the Nvidia RTX A2000, do you really need the 200W Nvidia RTX A4500? Your workstation will consume more power and you probably won’t notice a difference.

Another tip is don’t waste compute resources. Some viz tools will continue to render the viewport when idle, so switch them off when not required. This includes Unreal Engine (GPU), Twinmotion (GPU), KeyShot (CPU / GPU), Solidworks Visualize (CPU / GPU) and others.

Also ask yourself some questions: does your render really need that many passes? Do you really need it back so quickly? Could you render at a lower resolution? Could you use AI denoising?

Finally, hybrid renderers can use both GPU and CPU resources, but because the code is generally optimised for the GPU, the contribution of the CPU can be quite small. It can still use lots of energy though, so disable it in the settings.

With new technology, pushing components to their limits seems a natural thing to do. But this not only increases energy usage of the workstation but can also have a knock-on effect on our working environment. This winter, the heat given out by the powerful machines tested for this report was certainly welcome. In summer, less so. It’s not only your workstation fans that need to work harder, it’s your office air con as well.

Of course, we all have jobs to do and deadlines to meet. But some small adjustments to your workstation config, workflows, habits, or purchasing decisions can make a big difference.

Driving at 56 mph down the M1 motorway might not feel that exciting, but if it helps save you money and lessen your environmental impact, there’s much to be said for slow and steady wins the race.

No one wants their workstation to go slower. But in a world obsessed with bigger, better, faster, should we be paying more attention to how much energy they consume? asks Greg Corke


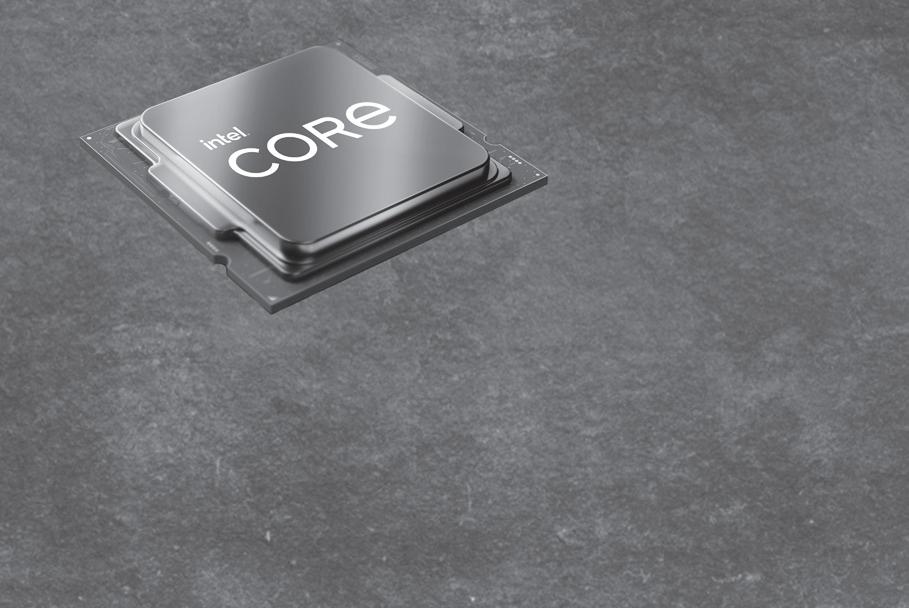

Over the last few years, competition between AMD and Intel has been intense. This is particularly true with AMD Ryzen and Intel Core, popular processors for mainstream desktop workstations.

AMD has maintained a performance lead in extremely multi-threaded workflows like rendering, but the battle for supremacy in single-threaded applications like CAD and BIM has been hard fought. To a certain extent, AMD and Intel have been playing leapfrog with each new generation since late 2020.

Autumn 2022 saw the launch schedules of both chip manufacturers align. The AMD Ryzen 7000 Series was announced in August while 13th Gen Intel Core got its first public airing in September. High on frequency, these mainstream workstation processors are ideal for CAD and BIM, but still offer plenty for multithreaded workloads including rendering, simulation, point cloud processing, photogrammetry, CAM and more.

Both processor families are now available in workstations from specialist manufacturers like Armari, Workstation Specialists, BOXX and Scan (who provided the test machines for this article, see page WS14). We’ve yet to see machines from the major workstation manufacturers. However, this is standard from HP, Lenovo, Dell and Fujitsu – there is always a bit of a lag between launch and support of new technologies.

The Ryzen 7000 Series of desktop processors is based on AMD’s 5nm ‘Zen 4’ architecture. There are four models, ranging in frequency and number of cores.

The top-end AMD Ryzen 9 7950X has 16 cores and a max boost frequency of up to 5.7 GHz. The lower end processors have slightly lower clock speeds and fewer cores but are considerably cheaper. The AMD Ryzen 5 7600X, for example, has six cores and a max boost of 5.3 GHz, but is less than half the price of the Ryzen 9 7950X. The full line up can be seen in the table below.

Compared to the previous generation Ryzen 5000 Series, the number of cores for each class of processor remains the same. All models support simultaneous multithreading (AMD’s equivalent to Intel Hyper-Threading). This runs two threads on each physical core to help boost performance in certain multi-threaded workflows, such as ray trace rendering.

Ryzen 7000 gets its performance uplift from a significant rise in base and boost frequency, a claimed 13% increase in Instructions Per Clock (IPC), double the Level 2 cache and support for DDR5 memory (up to 128 GB).

One trade-off with the new chips is a significant increase in Thermal Design Power (TDP), a measure of power consumption under the maximum theoretical load. The top two Ryzen 7000 Series models feature a TDP of 170W and a peak power of 230W, compared to 105W and 142W in the previous generation. However, the CPUs are only likely to draw such power when using multiple cores in combination with Precision Boost Overdrive (PBO), a feature of Ryzen CPUs that increases voltages to allow the CPU to clock higher.

With 13th Gen Intel Core and AMD Ryzen 7000, competition in workstation CPUs has never been so strong. Greg Corke explores the best CPUs for design-centric workflows from CAD and BIM to reality modelling and rendering

The 13th Gen Intel Core processor family (codenamed ‘Raptor Lake’) picks up where 12th Gen Intel Core ‘Alder Lake’ left off, with a hybrid architecture that features two different types of cores: Performance-cores (P-cores) for primary tasks and slower Efficient-cores (E-cores).

The P-cores support Hyper-Threading, Intel’s virtual core technology, so every P-core can run two threads. E-cores do not support Hyper-Threading. Workloads are split ‘intelligently’ using Intel’s Thread Director.

The big difference with 13th Gen Intel Core is that it has more E-cores than its predecessor. The flagship Intel Core i9-13900K, for example, has 16, double that of the Core i9-12900K. This can deliver a big performance boost in highly multithreaded workflows like rendering.

Of course, the P-cores have also been enhanced and while their number remains the same (a total of eight with the Core i9-13900K), Intel says users can expect up to 15% better single-threaded performance compared to the previous generation with an IPC increase and a Max Turbo frequency of 5.8 GHz.

13th Gen Intel Core is even more power hungry than AMD Ryzen 7000. The top two models feature a TDP of 125W and a max turbo power of 253W. Power consumption of the Core i9-13900K is on par with the AMD Ryzen 9 7950X in single threaded workflows but significantly higher in multi-threaded workflows (more on this later).

Other features include up to two times the L2 cache and increased L3 cache, plus support for up to 128 GB of memory (DDR5 or DDR4).

While the spotlight is on the flagship Core i9-13900K, Intel has launched a total of six 13th Gen Intel Core processors – three with integrated graphics and three without.

As with AMD, the other models trade off frequency and cores for a lower price. However, the drop in clock speed is more dramatic. The Intel Core i5-13600K, for example, has 6 P-cores, 8 E-cores, and a Max Turbo frequency of 5.1 GHz.

The idea behind Intel’s hybrid architecture is that critical software, especially current active applications, runs on the P-cores, while tasks that are not so urgent run on the E-cores. This could be background operations such as Windows updates, anti-virus scans, hidden tabs on a web browser, or applications that have been minimised or placed in the background (more on this later).

To help assign tasks to the appropriate cores, 13th Gen Intel Core CPUs include a hardware-based “Thread Director”. Intel states that this works best with Windows 11. We have not done any real testing across operating systems but have heard reports that some applications will only run on the E-cores on Windows 10. It may also be helpful to use the latest software releases or service packs.

Splitting out the CPU into P-cores and E-cores doesn’t mean that highly multi-threaded processes simply run on the P-cores, leaving the E-cores idle. In ray trace rendering software like V-Ray or KeyShot, for example, the Intel Core i9-13900K will max out all 24 cores and 32 threads.
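The core and thread totals follow directly from the hybrid layout: each P-core runs two threads with Hyper-Threading and each E-core runs one. As a minimal sketch (the `HybridCpu` class is our own illustration, fed with the core counts quoted in this article):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HybridCpu:
    name: str
    p_cores: int
    e_cores: int
    threads_per_p_core: int = 2  # P-cores support Hyper-Threading
    threads_per_e_core: int = 1  # E-cores do not

    @property
    def cores(self) -> int:
        return self.p_cores + self.e_cores

    @property
    def threads(self) -> int:
        return (self.p_cores * self.threads_per_p_core
                + self.e_cores * self.threads_per_e_core)


cpus = [
    HybridCpu("Intel Core i9-13900K", p_cores=8, e_cores=16),
    HybridCpu("Intel Core i5-13600K", p_cores=6, e_cores=8),
    # A conventional design can be modelled as all 'P-cores' with two threads each
    HybridCpu("AMD Ryzen 9 7950X", p_cores=16, e_cores=0),
]

for cpu in cpus:
    print(f"{cpu.name}: {cpu.cores} cores, {cpu.threads} threads")
```

This reproduces the 24 cores and 32 threads of the Core i9-13900K, and shows why the Ryzen 9 7950X matches it on thread count with fewer physical cores.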

Of course, in modern design workflows, multi-tasking is common, and architects and engineers often use multiple applications at the same time. Thread Director allows you to deprioritise certain processes by simply minimising an application or moving it to the background by maximising another. This forces the calculations for the inactive application to run on the E-cores only, leaving the more powerful P-cores free for the active application.

Having this level of control can be useful if you often run concurrent compute intensive applications, such as rendering, point cloud processing, CAM, photogrammetry and others. It allows you to prioritise tasks quickly and easily, on the fly.

The downside of this approach is when the foreground application is less demanding. If, for example, you’re modelling in Solidworks or Revit, and rendering in the background with V-Ray or KeyShot, you don’t really need to dedicate eight superfast P-cores to a CAD or BIM application which, in many parts, can only take advantage of a single core.

One way round this is to keep both applications in the foreground, perhaps each on their own monitor, or even leaving a tiny bit of the application visible.

While our testing went no further, there may be other solutions, such as assigning applications to specific cores using Processor Affinity in Task Manager. However, this would only be a temporary fix until reboot. Maybe a tool like Process Lasso or Prio could give applications permanent priority.
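Processor affinity is expressed as a bitmask, with one bit per logical processor. The sketch below, which we have not tested on these machines, builds such masks and prints them in the hexadecimal form accepted by the Windows `start /affinity` command. It assumes the Core i9-13900K exposes its 16 P-core threads as logical processors 0 to 15 and its 16 E-cores as 16 to 31; check this in Task Manager before relying on it. The executable names are placeholders.

```python
def affinity_mask(logical_cpus) -> int:
    """Build an affinity bitmask with one bit set per logical processor index."""
    mask = 0
    for cpu in logical_cpus:
        if cpu < 0:
            raise ValueError("logical processor indices must be non-negative")
        mask |= 1 << cpu
    return mask


# ASSUMPTION: logical processors 0-15 are P-core threads, 16-31 are E-cores.
P_CORE_THREADS = range(0, 16)
E_CORE_THREADS = range(16, 32)

p_mask = affinity_mask(P_CORE_THREADS)
e_mask = affinity_mask(E_CORE_THREADS)

# Placeholder executable names; 'start /affinity' takes the mask in hexadecimal.
print(f"start /affinity {p_mask:X} cad_app.exe")
print(f"start /affinity {e_mask:X} renderer.exe")
```

As with Task Manager, an affinity set this way only applies to the process that is launched, so it would need to be scripted to survive a reboot.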

Comparing AMD Ryzen 7000 with 13th Gen Intel Core isn’t just about specs, performance, and features – it’s also about the machines you can buy. When it comes to workstations, not all customers have a free choice of supplier, with many large AEC firms only allowed to procure IT from a major global manufacturer like Dell, HP, Lenovo or Fujitsu.

While both 13th Gen Intel Core and AMD Ryzen 7000 Series workstations are available now from specialist manufacturers like Scan, BOXX, Armari and Workstation Specialists, we expect Intel to continue to dominate in mainstream workstations from the major global manufacturers.

Lenovo is currently the only one of the four (alongside HP, Dell and Fujitsu) to offer an AMD Ryzen desktop processor in a mainstream workstation, and that is with the AMD Ryzen Pro 5000 in the ThinkStation P358, which launched in August 2022. Whether Ryzen 7000 (and the Ryzen Pro 7000 that will presumably follow) becomes the catalyst for others to follow suit remains to be seen.

For our testing we focused on the top end models from both processor families – the AMD Ryzen 9 7950X (16 cores, 32 threads, and a max boost clock of up to 5.7 GHz) and the Intel Core i9-13900K (8 P-cores, 16 E-cores, 32 threads and a max turbo frequency of 5.8 GHz).

Our CPUs were housed in very similar workstations — the AMD Ryzen 9 7950X in the Scan 3XS GWP-ME A132R and the Intel Core i9-13900K in the Scan 3XS GWP-ME A132C.

Apart from the CPUs and motherboards, the other specifications were almost identical. This includes the 240mm Corsair H100i Pro XT hydrocooler. The other specs can be seen below. Windows Power plan was set to high-performance.

Scan 3XS GWP-ME A132R (AMD Ryzen 7000 Series workstation)

• CPU – AMD Ryzen 9 7950X

• Motherboard – Asus TUF B650-Plus WiFi


Scan 3XS GWP-ME A132C (13th Gen Intel Core workstation)

• CPU – Intel Core i9-13900K

• Motherboard – Asus Z790-P WiFi

Common components

• Memory – 64GB (2x 32GB) Corsair Vengeance DDR5 5,600MHz

• System Drive – 2TB Samsung 980 Pro NVMe PCIe 4.0 SSD

• Cooling – Corsair H100i Pro XT

• PSU – 750W Corsair RMX, 80PLUS Gold

• GPU – Nvidia RTX A4500 (20 GB)

• Networking – 2.5GbE NIC, WiFi

• Operating System – Microsoft Windows 11 Professional 64-bit

Turn to page WS14 for a full review of both workstations.

We tested both workstations with a range of real-world applications used in AEC and product development. We also compared performance figures from previous generation workstations, including 11th Gen Intel Core (Core i9-11900), 12th Gen Intel Core (Core i9-12900K) and AMD Ryzen 5000 (Ryzen 9 5950X). The comparisons aren’t perfect; the older machines all ran Windows 10 with different memory, storage, and cooling configs, but should still offer a pretty good approximation of relative performance.

CAD, BIM and beyond

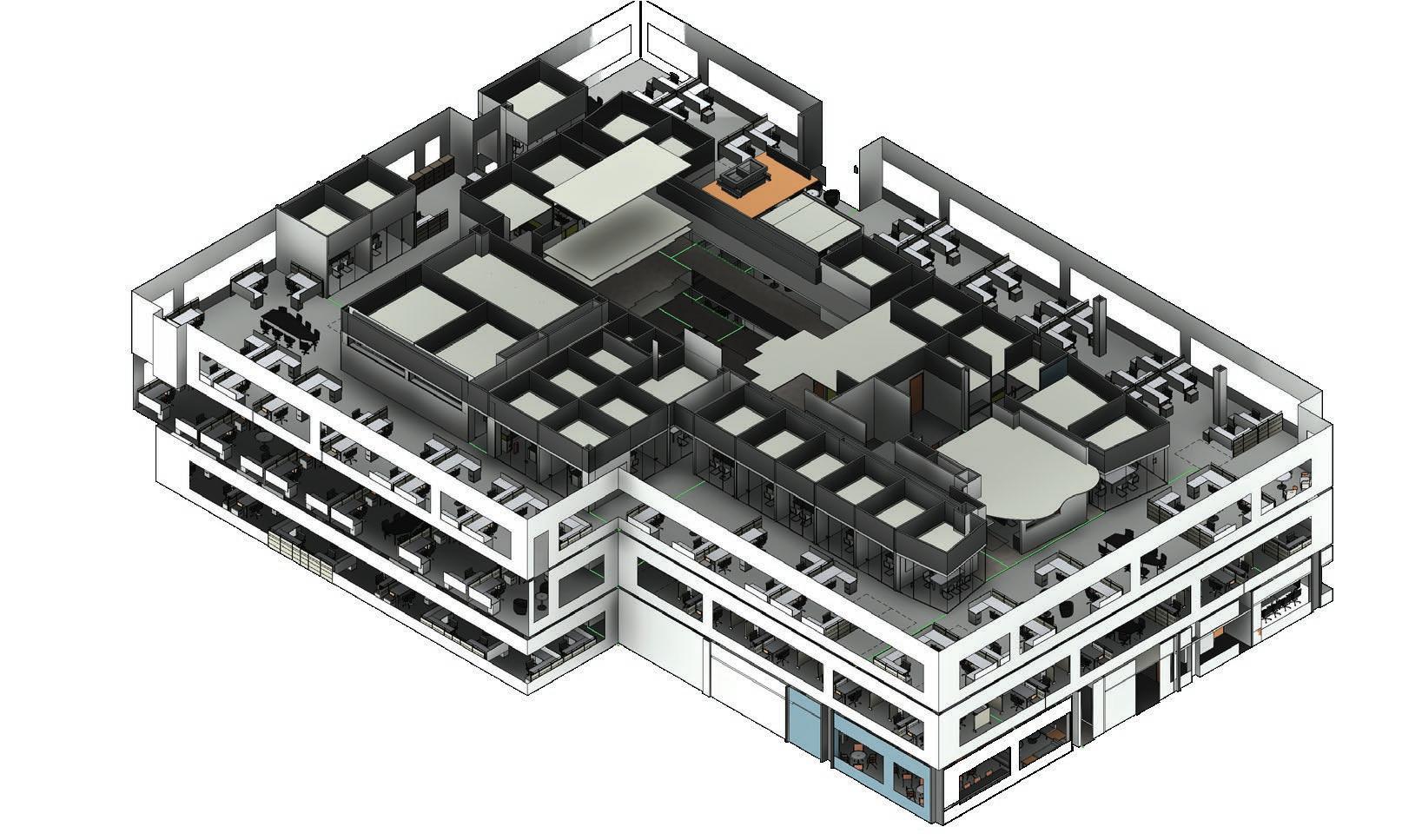

CAD applications like DS Solidworks and Autodesk Inventor, and BIM authoring tools like Autodesk Revit, are bread and butter for product designers, engineers, and architects. In the main they are single threaded and while some processes can use a few CPU cores, it’s only usually ray trace rendering that can take full advantage of all the processor cores, all the time.

In Autodesk Revit 2021 using the RFO v3 benchmark the Intel workstation was around 10% faster in model creation and export, and, surprisingly, around 5% faster when rendering. We saw similar results in Solidworks 2021 using the SPECapc 2021 benchmark, although Intel’s lead was extended considerably in ‘model rebuild’.

With the InvMark for Inventor benchmark by Cadac Group and TFI, Intel had a small lead in most sub tests, which are either single threaded, only use a few cores (threads) concurrently, or use a lot of cores, but only in short bursts. AMD fared better in the rendering sub test, which uses all available cores, and when opening files and saving to disk.

Both the Solidworks and Inventor benchmarks also test CAD-native simulation capabilities through Solidworks Simulate and Autodesk Inventor dynamic simulation / FEA. These tests use a few CPU cores, whereas some of the more advanced simulation tools, such as Ansys Mechanical, can take advantage of more.

Here, it’s worth pointing out the chart above where you can see how frequency drops as more cores (threads) are enabled.

Between 1 and 8 threads, Intel maintains a higher frequency than AMD, so this helps give Intel a lead in workloads that use a similar number of threads.

Of course, the core code in many CAD tools is quite old, and new generation tools, including nTopology for design for additive manufacturing, are built from the ground up for multi-core processors (and, more recently, GPU computation). For our nTopology geometry optimisation test we solely focused on the CPU and, with all cores in use, the Intel workstation had the edge over AMD.

Reality modelling is becoming much more prevalent in the AEC sector. Agisoft Metashape is a photogrammetry tool that generates a mesh from multiple high-res photos. It is multi-threaded, but uses CPU cores in fits and starts, and uses a combination of CPU and GPU processing.

We tested using a benchmark from specialist US workstation manufacturer Puget Systems. In most of our tests the Ryzen 9 7950X performed poorly and was even slower than the Ryzen 5950X. Intel had a clear lead – between 25% and 39% faster.

In point cloud processing software Leica Cyclone Register 360, which can run on up to five CPU threads on machines with 64 GB of memory, Intel had a 7-10% lead when registering both of our point cloud datasets. In practice, the lead over the 11th / 12th Gen Intel Core and Ryzen 5000 would be even higher, as those machines had 128 GB memory, so the software used 6 CPU cores.

The Ryzen 9 7950X starts to show real benefits when rendering, a process that can harness every single core, all the time. In V-Ray and KeyShot, two of the most popular tools for design visualisation, the Ryzen 9 7950X showed around a 10% lead over the Core i9-13900K.

In Unreal Engine, the lead was smaller when recompiling shaders, a process that uses every CPU core, along with the GPU.

In Cinebench R23, a benchmark based on Cinema 4D, there was virtually nothing between the two processors.

We also did stress tests to see how CPU frequency dropped over time. When rendering in KeyShot, the Core i9-13900K started at 4.89 GHz, dropped to 4.63 GHz after a few minutes, but maintained that frequency for well over an hour. The Ryzen 9 7950X fared better here, starting at 5.5 GHz but then maintaining a solid 5.0 GHz.

Much of this is down to the relative power consumption of the two CPUs, which you can read more about later.

These days, very few architects, engineers or product designers use single applications and, with compute intensive workflows on the rise, such as reality modelling, rendering and simulation, it’s very important to consider a CPU’s ability to multi-task.

Even with the lower-end 13th Gen Intel Core and AMD Ryzen 7000 Series processors, which have fewer cores, it will be possible to leave one or more multi-threaded tasks running in the background, and still leave resources free for bread-and-butter 3D modelling.

To explore multi-tasking potential, we pushed both machines to their limits, with a demanding AEC workflow, consisting of point cloud processing and photogrammetry.

We registered a 24 GB point cloud dataset in Leica Cyclone Register 360 while, at the same time, processing a series of high-res photographs in Agisoft Metashape.

If done sequentially it would have taken the AMD machine 1,039 secs and the Intel 826 secs. However, running both jobs in parallel the AMD finished in 625 secs and the Intel in 646 secs. With more threads trying to run concurrently, clock speeds dropping, and some processes being pushed to slower E-cores, the Intel workstation starts to slow down.
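The benefit of running both jobs together can be expressed as time saved and as a speed-up factor over the sequential run. This is a minimal sketch using only the four timings quoted above; the function name is ours.

```python
def parallel_gain(sequential_secs: float, parallel_secs: float) -> tuple[float, float]:
    """Return (seconds saved, speed-up factor) from running jobs concurrently."""
    saved = sequential_secs - parallel_secs
    speedup = sequential_secs / parallel_secs
    return saved, speedup


# (sequential secs, parallel secs) for Cyclone Register 360 + Metashape
timings = {
    "AMD Ryzen 9 7950X": (1039, 625),
    "Intel Core i9-13900K": (826, 646),
}

for cpu, (sequential, parallel) in timings.items():
    saved, speedup = parallel_gain(sequential, parallel)
    print(f"{cpu}: {saved:.0f} secs saved, {speedup:.2f}x faster than sequential")
```

AMD saves 414 secs (about 1.66x) against 180 secs (about 1.28x) for Intel, which is how it overturns Intel's sequential lead.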

To push the machines even harder we added ray tracing into the mix, rendering an 8K scene in KeyShot using 8 cores and 16 threads. Here, AMD’s 16 high-performance cores showed a real benefit, completing all three tasks in 729 secs compared to 779 secs on Intel.

CPU speed has some influence over graphics performance, but the extent to which it does depends on the software. In Revit, an application renowned for being CPU limited, the Intel Core i9-13900K showed around a 7% performance lead over the AMD Ryzen 9 7950X when using the same Nvidia RTX A4500 GPU. This lead was smaller in Solidworks, which has a more modern graphics API that better harnesses the power of the GPU.

In Unreal Engine 4.26, an application renowned for being GPU limited, rather than CPU limited, the difference between Intel and AMD was negligible when testing with the Audi Car Configurator model.


Compared to the previous generations, both processors use a lot of power. The Intel Core i9-13900K has a Thermal Design Power (TDP) of 125W and a max turbo power of 253W. The AMD Ryzen 9 7950X has a TDP of 170W and a peak power of 230W. But specs only tell part of the story.

In reality, the Intel chip draws noticeably more power in multi-threaded workflows than AMD. This was observed at the plug socket, when measuring power draw of the overall systems – taking into account CPU, motherboard, memory, storage, and fans.

When rendering in Cinebench using all cores, for example, the AMD workstation draws 341W at the plug, but the Intel workstation trumps this considerably with a whopping 451W. In KeyShot it’s even more, with Intel at 509W and AMD at 382W.

Power consumption in single threaded workflows is significantly lower and much more equal, with the AMD and Intel workstations drawing 127W and 122W respectively.

However, as you can see in the chart on page WS07, as core utilisation increases Intel soon starts drawing more power.

Of course, more power means more heat, which means the workstation’s fans must work harder. This has a knock-on effect on acoustics, and the Intel machine was noticeably louder when rendering, especially over long periods. This could potentially be mitigated with a higher-end all-in-one cooler.

But what you really want to know is how increased power usage might impact your electricity bill. Based on the current UK electricity rate of £0.34 per kWh for households (and £0.211 per kWh for businesses), rendering for eight hours a day, five days a week, would cost you £241 (£150) per year with the AMD Ryzen 9 7950X workstation and £319 (£198) per year with the Intel Core i9-13900K workstation.
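The arithmetic behind these figures is simple enough to adapt to your own machine and tariff. The sketch below assumes the costs are based on the Cinebench wall readings quoted earlier (341W for AMD, 451W for Intel) over 52 working weeks; that is our inference, as those inputs reproduce the published numbers.

```python
# ASSUMPTION: 8 hours a day, 5 days a week, 52 weeks a year
HOURS_PER_YEAR = 8 * 5 * 52

HOUSEHOLD_RATE = 0.34   # GBP per kWh, as quoted in the text
BUSINESS_RATE = 0.211   # GBP per kWh, as quoted in the text


def annual_cost_gbp(watts: float, rate_per_kwh: float,
                    hours: float = HOURS_PER_YEAR) -> float:
    """Yearly electricity cost for a constant power draw measured at the plug."""
    return watts / 1000 * hours * rate_per_kwh


# Whole-system draw when rendering in Cinebench, in watts
systems = {"AMD Ryzen 9 7950X": 341, "Intel Core i9-13900K": 451}

for name, watts in systems.items():
    home = annual_cost_gbp(watts, HOUSEHOLD_RATE)
    business = annual_cost_gbp(watts, BUSINESS_RATE)
    print(f"{name}: £{home:.0f} household, £{business:.0f} business per year")
```

Swap in a reading from your own power meter and your own rate to estimate what a given workload costs you.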

While rendering all day long is an extreme use case, it should give some food for thought, especially if your firm uses multiple workstations. Energy prices are also set to rise in April 2023 (see page WS03 for more on energy use in workstations).

The verdict

For years, Intel was the only serious option for workstation processors. But now with AMD Ryzen 7000 and 13th Gen Intel Core, designers, engineers and architects have real choice.

From our tests, the Intel Core i9-13900K demonstrates a clear lead over the AMD Ryzen 9 7950X in single threaded and lightly threaded workflows. And with more E-cores than before, it has also closed the gap considerably in ray trace rendering.

To provide some context, 18 months ago, AMD Ryzen offered nearly double the rendering performance of Intel Core (Ryzen 5000 vs 11th Gen). That lead has now shrunk to around 10% in some apps.

And while AMD still comes out top when multi-tasking, Intel now competes much more strongly, even in scenarios where before it failed.

But Intel’s good all-round performance does come at a cost. While power demands of the two CPUs are similar in single threaded workflows, the Core i9-13900K soon starts piling on the watts when more CPU cores come into play. AMD’s superior power efficiency is a big win, both in terms of energy usage and acoustics.

Of course, Ryzen 7000 and 13th Gen Intel Core aren’t just about the top-end models. For CAD users on a tight budget, the Intel Core i5-13600K and AMD Ryzen 5 7600X look like great value CPUs. And with the 7600X having a slightly higher boost frequency, we expect there to be little between both processors in single threaded CAD or BIM workflows.


Modern graphics cards (or GPUs) are complex pieces of hardware that should improve not only performance within the viewport, but also visual clarity and accuracy. There is no reason, however, for them to be daunting. This article aims to offer some simple guidance on how GPUs work and considerations to help increase software performance. Let’s begin with the most common question:

Do I choose a gaming GPU or a professional GPU?

Both are designed for different tasks and software needs. If you want to mostly play games, then choose a purpose-built gaming GPU. If you use business critical software requiring hardware validation or certifications, then a professional GPU is right for you. These are optimized for 24x7 usage with warranties that typically last three years. Professional GPUs can carry more memory than their gaming counterparts, although the underlying graphics architecture can be similar. The other main differences are in physical card design and layout. Pro GPUs are built for workstation cases, which means different power connector positions, different fan designs, and different thermal considerations to their gaming counterparts, particularly how hot air is exhausted out of the GPU and the overall system. The next consideration is overall host system portability.

Do I choose a mobile or deskside system?

Recent mobile laptops (or mobile workstations) are now as powerful as many tower or deskside equivalents. There are benefits to a larger system, such as component upgradeability and multiple GPU support, but for typical CAD workflows a mobile system can be adequate. When comparing equivalent GPUs across the two, you won’t see much difference in performance or features, although a mobile GPU can come with less dedicated graphics memory.

Windows can require more RAM, so GPU requirements are increasing.

6GB is a great sweet spot for traditional CAD.

Scott Jackson, Intel, Director, Product Management.

High-end GPUs: great for real time experiences, simulations and GPU rendering tasks. They often draw the most power.

Medium workload GPUs: great for 2D and 3D CAD/BIM projects, along with typical image and video editing.

Light workload GPUs: great options for modern office productivity tasks.

Integrated GPUs (iGPUs): great for many software uses, for example those that don’t require much memory, combined with long battery life.

Tip: Look to your existing workflow and see how much of the GPU it’s using. It’s unlikely you are using just one application, so explore a few of your common software tools.

Does expensive graphics mean better software performance?

No, not always. More expensive discrete GPUs normally have extra dedicated memory and cores, which can speed up some software. But, as with a traditional processor, the real questions are: what software do you use, and will it directly benefit? For some the answer is a firm no. So always start by looking at your software’s hardware requirements before selecting a GPU, as you may not need to spend so much.

What does ‘discrete’ GPU mean?

This is normally a way of distinguishing that the GPU has dedicated memory on board. This can offer enhanced performance, as it does not need to share it with other system resources. The GPU (or dGPU) will also be separate to the processor.

Generally, there are three essential components to all discrete graphics cards:

1 The GPU chip: The brains of the card, processing various complex tasks.

2 The memory: Where things like viewport textures are stored.

3 The fan and heat sink: Helping regulate thermals, keeping the card running at its optimum.

Resizable BAR (Base Address Register) is a new consideration for many. It is recommended to enable this for Intel® Arc™ GPUs, as it optimizes how information is transferred between the CPU and the GPU over PCIe® and can result in performance improvements. Many modern system vendors already enable this by default within the VBIOS. If unsure, check with your system provider.

1 Dual slot vs single slot card

Graphics cards are available in different form factors to suit the range of professional workstations that exist. The term refers to how many slots the card takes up on the motherboard and workstation case. Dual slot cards typically offer higher performance and require more power to run.

2 End bracket

These help to hold the GPU in place with their fixing ‘ears’.

3 Graphics cores

GPUs are made up of huge numbers of calculation cores, with each GPU vendor giving them different names and using different architectures. For example, 8x NVIDIA CUDA cores do not directly equal 8x Intel Xe Cores. More cores do, however, mean more performance when comparing GPUs from the same vendor.

4 Display connectors

For workstation GPUs, look for connectors that match the inputs on your displays. HDMI is used for home entertainment, with most professional GPUs standardizing on DisplayPort®. Mini-DisplayPort (mDP) offers the same functionality in a smaller connector.

5 Gold Fingers

Gold-plated vertical rows (or lanes) on the graphics (PCB) board for connecting to the system’s motherboard expansion slots. The ‘fingers’ are used to communicate with other system components, with gold alloy used for superior strength and conductivity.

6 PCIe® connector

Graphics cards are typically x16 ‘lane’ devices, and the more lanes used, the more bandwidth is available. Note that PCIe gen 3.0 is half the speed of PCIe gen 4.0, meaning PCIe 4 x8 and PCIe 3 x16 offer similar speeds.

7 Cable locking mechanisms

Look for a small slot above each output on the GPU for a display cable to lock into, preventing the compatible cable being accidentally removed.

8 Memory

For professional graphics, GDDR6 is today’s common high-performance memory type, but the amount can vary. Memory (RAM) can be expensive, and more RAM can increase the price of your GPU. However, you don’t always need lots of RAM for your software. Generally, 6-8GB is a great choice for modern CAD and design tasks.

9 Fan

The fan on your GPU has the important task of cooling your card. If too hot, thermal throttling occurs, slowing the GPU.

10 Multiple outputs

Numerous third-party reports have shown the tangible efficiency benefits of adding at least a second monitor to your setup. By adding more screen space, you save time hunting for menus, or finding content hidden behind multiple windows.

11 Vents

These help to efficiently expel air directly out of the GPU and overall system. As with a GPU, if the host system gets too hot the complete system will start to slow down or, worse, critical components may start to fail.

12 Teraflop

One teraflop is one trillion (floating-point) calculations completed each second, with tera equating to one trillion. While teraflops (TFLOPs) are not the sole indication of final GPU performance, they’re generally seen as key to faster viewports. Some CAD and design software will not benefit from huge TFLOP values though.
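The numbers behind callouts 6 and 12 can be checked with a little arithmetic. The sketch below is a rough illustration only: the per-lane transfer rates and 128b/130b encoding are the published PCIe 3.0 and 4.0 figures, the assumption that each core completes one fused multiply-add (two floating-point operations) per clock is the usual convention for quoting peak TFLOPs, and the core count and clock speed are hypothetical values, not those of any particular card.

```python
# Back-of-envelope figures for callouts 6 (PCIe) and 12 (Teraflop).
# The GPU core count and clock used below are hypothetical examples.

PCIE_GT_PER_S = {3: 8.0, 4: 16.0}   # giga-transfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130     # 128b/130b line encoding (gen 3 and 4)


def pcie_bandwidth_gbs(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s."""
    gbits_per_second = PCIE_GT_PER_S[gen] * ENCODING_EFFICIENCY * lanes
    return gbits_per_second / 8  # 8 bits per byte


def peak_tflops(cores: int, clock_ghz: float, flops_per_clock: int = 2) -> float:
    """Theoretical peak TFLOPs, assuming one fused multiply-add
    (counted as 2 floating-point operations) per core per clock."""
    return cores * flops_per_clock * clock_ghz / 1000


gen3_x16 = pcie_bandwidth_gbs(3, 16)
gen4_x8 = pcie_bandwidth_gbs(4, 8)
gen4_x16 = pcie_bandwidth_gbs(4, 16)
example_tflops = peak_tflops(cores=3000, clock_ghz=1.5)  # hypothetical GPU

print(f"PCIe 3.0 x16: {gen3_x16:.2f} GB/s")
print(f"PCIe 4.0 x8:  {gen4_x8:.2f} GB/s")
print(f"PCIe 4.0 x16: {gen4_x16:.2f} GB/s")
print(f"Hypothetical 3,000-core GPU at 1.5 GHz: {example_tflops:.1f} TFLOPs")
```

Real-world throughput will be lower than these theoretical peaks, which is one reason TFLOPs alone are a poor predictor of viewport performance.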

If the total board power (TBP) requirements of the graphics card are 75W or below, then the card can be powered directly by the PCIe® slot. Not needing a dedicated power connector can mean a much smaller workstation. The industry standard for high power consumption GPUs is 6-pin, 8-pin or a combination.
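To see how slot power and auxiliary connectors add up, here is a minimal sketch. It assumes the standard PCIe ratings of 75W for a 6-pin connector and 150W for an 8-pin connector, on top of the 75W from the slot. The function names and the sample TBP values are hypothetical, and real cards may ship with more connector capacity than the minimum shown here.

```python
# Rough power budget check for a graphics card.
# Connector ratings are the standard PCIe values; TBP inputs are examples.

SLOT_POWER_W = 75
CONNECTOR_POWER_W = {"6-pin": 75, "8-pin": 150}

# Candidate connector combinations, ordered from least to most power.
COMBINATIONS = [
    (),
    ("6-pin",),
    ("8-pin",),
    ("6-pin", "8-pin"),
    ("8-pin", "8-pin"),
]


def power_budget_w(connectors=()) -> int:
    """Slot power plus the rating of every auxiliary connector."""
    return SLOT_POWER_W + sum(CONNECTOR_POWER_W[c] for c in connectors)


def smallest_connector_set(tbp_w: float):
    """Return the first combination whose budget covers the TBP,
    or None if none of the listed combinations is enough."""
    for combo in COMBINATIONS:
        if power_budget_w(combo) >= tbp_w:
            return combo
    return None


for tbp in (70, 140, 200, 300):
    combo = smallest_connector_set(tbp)
    label = " + ".join(combo) if combo else "slot only"
    print(f"{tbp}W TBP -> {label} ({power_budget_w(combo)}W available)")
```

An empty result for a sub-75W card is what allows the very small workstations mentioned above: there is no extra cable to route and no need for a larger power supply.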

When you purchase a GPU, you are also investing in robust drivers for your software needs. Each new driver typically brings performance improvements, and a regular driver release cycle can bring frequent performance uplifts and new software support. Look for a 3-month release cycle from the vendor.

A workstation graphics card is designed to meet the requirements of professional workstation cases. In particular, look for the power connector block on the edge, a high-performance fan, and a robust aluminium shroud (or protective casing).

More pixels mean greater line detail displayed, although with ultrawide (UWD) monitors growing in popularity it’s important to check your GPU specifications can drive one. (Remember application interfaces rarely run above 4K resolution.)

HDR (High Dynamic Range) technology allows the representation of more colors on your compatible display. In some CAD circumstances this isn’t needed, but for image production or renders this means greater depth and sharper contrast.

To learn more about Intel graphics visit intel.com/ArcProGraphics

Between these two new desktop workstations, Scan has most bases covered in product development, from CAD and simulation to rendering and beyond, writes Greg Corke

Scan is always one of the first out of the blocks with new technologies. And with the 3XS GWP-ME A132C and 3XS GWP-ME A132R it has delivered two powerful desktop workstations with the very latest processors from Intel and AMD.

The ‘C’ and ‘R’ suffixes refer to ‘Core’ and ‘Ryzen’, specifically the brand-new 13th Gen Intel Core and AMD Ryzen 7000 Series processors. As you will see from our in-depth review on page WS4, these powerful new chips are extremely well suited to a range of professional workflows, from CAD and BIM to reality modelling and rendering.

Both of our test machines look identical and include several common components, but they differ in three main areas — CPU, motherboard, and GPU. So how do they fare in the typical workflows of designers?

Our Scan 3XS GWP-ME A132C test unit arrived with a top-end Intel Core i9-13900K CPU, paired with Nvidia’s entry-level pro ray tracing and AI-accelerated GPU, the Nvidia RTX A2000. This combination of processors is well suited to CAD, BIM and entry-level visualisation, as well as more CPU-intensive workflows such as point cloud registration, photogrammetry, and simulation. Together with 64 GB of Corsair Vengeance DDR5 5,600MHz memory, a 2TB Samsung 980 Pro SSD and an Asus Z790-P WiFi motherboard, the unit will set you back £2,583 + VAT.

Everything is housed inside a 542 x 240 x 474 mm Fractal Design Meshify 2 chassis, adorned with Scan’s trademark 3XS custom front panel. It’s a solid, well-built case, with a ready supply of ports. Up front and top, there are two USB 3.2 Type A and one USB 3.2 Type C, with plenty more at the rear (four USB 2.0 Type A, three USB 3.2 Type A and one USB 3.2 Type C). Access is easy. Simply lift off the top panel and pull off the side to get to the parts inside. With the SSD mounted directly on the motherboard, a compact GPU, no hard disk drive (HDD), and all the cabling tucked neatly away, everything feels a little lost inside the spacious interior. But modern-day workstations are as much about keeping components cool as they are about expansion, and with the power-hungry Intel Core i9-13900K CPU, Scan certainly has its work cut out here.

When rendering in KeyShot we recorded over 450W of power draw at the plug, pushing 530W in Solidworks Visualize, which can use both CPU and GPU. The Corsair H100i Pro XT liquid CPU cooler is well regarded as a thermal solution, but fan noise in this system is very noticeable under these heavy loads.

■ Intel Core i9-13900K processor (3.0 GHz, 5.8 GHz turbo) (8 P-cores, 16 E-cores, 32 threads)

■ Nvidia RTX A2000 GPU (12 GB GDDR6)

■ 64 GB (2 x 32 GB) Corsair Vengeance DDR5 5,600 memory

■ 2 TB Samsung 980 Pro NVMe SSD

■ Asus Z790-P WiFi motherboard

■ Corsair H100i Pro XT hydrocooler

■ 750W Corsair RMX, 80PLUS Gold PSU

■ Fractal Design Meshify 2 chassis, adorned with 3XS custom front panel (542 x 240 x 474mm)

■ Microsoft Windows 11 Pro 64-bit

■ 3 Years warranty –1st Year Onsite, 2nd and 3rd Year RTB (Parts and Labour)

■ £2,583 (Ex VAT)

■ scan.co.uk/3xs

Performance is top notch, especially in CAD and BIM software. It delivered very impressive single threaded and lightly threaded CPU benchmark scores in Revit, Inventor, and Solidworks. The machine also set a new record when processing point clouds in Leica Cyclone. All four tests run entirely on the Core i9-13900K’s eight superfast P-Cores.

The processor’s 16 E-cores come into play when rendering. While performance in V-Ray and KeyShot is impressive, it doesn’t hit the heights of the AMD-based Scan workstation, propelled by its 16-core Ryzen 9 7950X CPU. Performance in Cinebench is much closer.

The processor is helped along by its 64 GB of DDR5 memory, which takes up two of the four slots on the Asus Z790-P WiFi motherboard. It is supported by a single 2TB Samsung 980 Pro SSD. Additional storage can

is well equipped to handle some fairly sizeable datasets for GPU rendering and real-time viz. But it has its limitations in real-time workflows. While it can comfortably handle complex models at FHD resolution, 4K is a bit of a stretch.

With our 9.5 GB Enscape office model, it dropped from 39 FPS @ FHD to 17 FPS @ 4K. And the slowdown is more dramatic as demands on the GPU grow – going from 43.3 FPS to 12.6 FPS with the Audi car configurator in Unreal Engine, and from 38.2 FPS to 16.2 FPS in our Autodesk VRED Professional car model with medium anti-aliasing.

As you might expect, GPU rendering performance is significantly lower than pro viz-focused GPUs like the RTX A4500. In the KeyShot benchmark 11.3.1 it recorded a score of 32 compared to 67 for the RTX A4500. In the V-Ray benchmark 5.02 it was 1,012 versus 2,119.

With the top-of-the-range AMD Ryzen 9 7950X CPU and powerful Nvidia RTX A4500 GPU, our Scan GWP-ME A132R test workstation is tuned for all types of demanding visualisation workflows. But this balance does come at a premium. At £3,583 Ex VAT, it’s precisely £1,000 more than the Intel machine.

The 16-core processor makes light work of ray trace rendering, second only to the 32-core and 64-core AMD

V-Ray and KeyShot, it demonstrates a 10% lead over its Intel-based sibling.

But with the powerful Nvidia RTX A4500 GPU with 20 GB of memory, users have choice. Both KeyShot and V-Ray, plus many other rendering tools, can take advantage of the dedicated ray-tracing and AI hardware within the dual slot card. And with careful juggling of CPU and GPU resources, this can bring new efficiencies to day-to-day workflows.

For real time viz, expect a silky-smooth viewport at 4K in all but the most demanding of workflows. It’s only when enabling real time ray tracing in Unreal Engine that frame rates drop below the golden 30 FPS, but 17.37 FPS is still acceptable.

Of course, a GPU this powerful is overkill for CAD and BIM. And with Revit and Inventor in particular, users will do just as well with an Nvidia RTX A2000, if not an Nvidia T1000. In fact, because these applications are so CPU limited, the Intel machine with its RTX A2000 actually wins out in viewport performance, as it does in core application workflows from model creation to data translation.

The workstation shares

machines, extremely well built with a 3-year warranty (1st year onsite) as standard. The big question on everyone’s lips is which one is best? That very much depends on what you do, day in day out.

■ AMD Ryzen 9 7950X processor (4.5 GHz, 5.7 GHz boost) (16 cores, 32 threads)

■ Nvidia RTX A4500 GPU (20 GB GDDR6)

■ 64 GB (2 x 32 GB) Corsair Vengeance DDR5 5,600 memory

■ 2 TB Samsung 980 Pro NVMe SSD

■ Asus TUF B650-Plus WiFi mainboard

■ Corsair H100i Pro XT hydrocooler

■ 750W Corsair RMX, 80PLUS Gold PSU

■ Fractal Design Meshify 2 chassis, adorned with 3XS custom front panel (542 x 240 x 474mm)

■ Microsoft Windows 11 Pro 64-bit

■ 3 Years warranty –1st Year Onsite, 2nd and 3rd Year RTB (Parts and Labour)

■ £3,583 (Ex VAT)

■ scan.co.uk/3xs

If your workflows centre on CAD or BIM, with a little bit of visualisation, then the 3XS GWP-ME A132C is a great choice. It has single-threaded performance in abundance, it’s no slouch in multi-threaded workflows either, and the GPU is a great entry point to real-time viz. However, fan noise really kicks in when it hits top gear. This is certainly not a quiet machine.

Meanwhile, the 3XS GWP-ME A132R delivers in all areas of visualisation, with the best-in-class CPU for rendering, a powerful pro GPU and good acoustics. But having this level of all-round performance does come at a premium, with our test machine costing a full £1,000 more than the Intel.

Of course, both machines are fully customisable, so you can pick and choose components to match your specific workflows. And there’s plenty of room to grow with an additional 64 GB of memory and buckets of storage.

This powerful desktop workstation not only offers impressive performance for CAD, thanks to its enhanced Intel Core i9-13900K CPU, but it can be rackmounted for an easy-to-deploy resource for flexible working, writes Greg Corke

Boxx has a long history of building high-performance custom workstations. The Texas-based company not only matches components to specific 3D workflows, but designs and manufactures its own chassis in ‘aircraft-grade’ aluminium. These are solid machines with a strength and rigidity beyond that of most off-the-shelf cases used by other custom manufacturers.

The Boxx Apexx S4.04 also stands out because it is a desktop workstation that can be rack mounted. While the ability to rack mount is not uncommon in workstations from major players like HP and Lenovo, it’s not something we often see done by custom manufacturers.

Boxx has been doing this for years, but it has never been more relevant. With increased demand for flexible working, it makes it easier for firms to support staff working from office or home. Simply set up the workstations in a centralised rack and remote into the machines from anywhere.

Of course, this can be done with dedicated rack workstations / servers, but rack-mounting desktop workstations has two major benefits: first you get the absolute best performance and second you don’t necessarily need a dedicated air-conditioned datacenter or server room.

With the Apexx S4, the rack mount kit is included with the machine – you simply bolt it on to the chassis. It is designed to fit a 19” rack with 3/8” square holes.

Form certainly follows function here. The chassis is very angular, essentially a 4U box. There are no bevelled edges, a trademark of the Apexx S3, which offers the same core specs in a smaller chassis with less expandability. One gripe is there are no USB Type C ports on the front, and only two Type A (one of which is USB 2.0).

But there’s plenty at the rear: two USB Type C and eight USB Type A. Our test machine was maxed out in CPU and memory, with the top-end Intel Core i9-13900K processor supported by 128 GB of DDR5-4800 MHz memory.

Boxx has ‘performance enhanced’ the CPU beyond its standard settings, which gives it an edge. It hit 5.97 GHz in single threaded workflows and 4.89 GHz on all cores, which it managed to maintain when rendering in KeyShot for over an hour. This translates to real performance benefits in core workflows. Compared to the other Core i9-13900K workstation we’ve reviewed, the Scan 3XS GWP-ME A132C (see page WS14), it was around 3-4% faster in single or lightly threaded tests in Autodesk Revit, and around 6% faster in the single threaded and multi-threaded Cinebench R23 benchmark. It was marginally slower in V-Ray and KeyShot, but these benchmarks take less than a minute to run and, as we found previously, all core frequencies on the Scan machine drop after a couple of minutes.

■ Intel Core i9-13900K processor (3.0 GHz, 5.8 GHz turbo) (8 P-cores, 16 E-cores, 32 threads)

■ Nvidia RTX A4000 GPU (16 GB GDDR6)

■ 128 GB (4 x 32 GB) DDR5-4800 MHz memory

■ 1 TB Seagate 530 Firecuda SSD + 2 TB 7,200rpm Hard Disk Drive (HDD)

■ ASRock Z790 Taichi motherboard

■ Asetek 240mm 670LT closed loop liquid cooler

■ 1300W Seasonic Prime GX-1300 PSU

■ Custom chassis (174 x 457 x 513mm) + rack mount kit

■ Microsoft Windows 11 Pro 64-bit

■ 3 year return to base warranty

■ £5,400 (Ex VAT)

■ boxx-tech.co.uk

But Boxx doesn’t have the lead everywhere. Its choice of slower DDR5 4,800 MHz memory looks to have a significant impact on performance in some workflows. The Apexx S4 took 32% longer than the Scan workstation (DDR5 5,600 memory) to recompile shaders in Unreal Engine and 25% longer to process point clouds in Leica Cyclone 360. Both processes also use the GPU a little, so to check the GPU was not the major contributor to the drop in performance we swapped out the Nvidia RTX A4000 for the same GPU as the Scan machine, but that only made a very small difference.

One might presume that running all CPU cores at higher frequencies would have a negative impact on power draw and acoustics. However, this was not the case. Compared to the Scan machine, power draw with all cores was only slightly higher (458W vs 452W) and the Apexx S4 was also quieter. This is testament to Boxx’s CPU tuning and chosen cooling system, which comprises an Asetek 240mm 670LT closed loop liquid cooler and three 120mm fans at the front of the machine.

While CPU performance is top notch, Boxx has been more restrained with graphics. However, the Nvidia RTX A4000 is still an excellent GPU for CAD and mainstream visualisation, and with 16 GB of GDDR6 memory it should cover many bases from real-time 3D to GPU rendering.

Indeed, at 4K resolution it delivers a perfectly adequate 25.89 Frames Per Second (FPS) with the Unreal Engine Audi Car Configurator model, and 32.15 FPS in VRED Professional with our automotive scene.

But the single slot card doesn’t reach the same heights as Nvidia’s dual slot RTX cards. And, if you really want to boost your GPU horsepower, then the Apexx S4, with its 1300W power supply, can handle up to dual Nvidia RTX A6000 GPUs, giving you a whopping 96 GB of GPU memory in supported applications.

For storage, there’s the classic combination of 1 TB NVMe SSD and 2 TB 7,200rpm Hard Disk Drive (HDD). Unlike most custom workstation manufacturers, which go with the Samsung 980 Pro, Boxx has chosen the Seagate 530 Firecuda. According to the company, this is down to the longevity of the SSD, with Seagate claiming an endurance of 1,275 Terabytes Written (TBW), more than double that of the Samsung 980 Pro. Rounding out the specifications there’s 2.5G LAN, 802.11ax Wi-Fi 6E + Bluetooth.
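To put an endurance rating into context, it helps to convert TBW into years of use. The sketch below is only an illustration: the 1,275 TBW figure is the one quoted above, while the 600 TBW figure for the comparison drive and the 100 GB of writes per day are assumptions chosen for the example, not measurements from our testing.

```python
# Convert an SSD endurance rating (terabytes written) into years of use.
# daily_writes_gb is an assumed workload, not a measured one.

def years_of_endurance(tbw_rating_tb: float, daily_writes_gb: float) -> float:
    """Years until the rated TBW is reached at a constant daily write volume."""
    total_gb = tbw_rating_tb * 1000          # 1 TB = 1,000 GB (decimal units)
    return total_gb / daily_writes_gb / 365


DAILY_WRITES_GB = 100                         # assumed heavy daily workload

firecuda_years = years_of_endurance(1275, DAILY_WRITES_GB)
comparison_years = years_of_endurance(600, DAILY_WRITES_GB)  # assumed rating

print(f"1,275 TBW drive: about {firecuda_years:.1f} years")
print(f"600 TBW drive:   about {comparison_years:.1f} years")
```

Under this assumed workload both drives would comfortably outlast a typical workstation refresh cycle, so the higher rating matters most for unusually write-heavy workflows.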

If you’re looking for an incredibly powerful, well-built rack mountable workstation for CAD and mainstream visualisation you’ll be hard pushed to find one better than this. However, there’s no escaping the fact that you’ll pay a premium for the pleasure.

There are ways to shed some pounds off the sizeable £5,400 (Ex VAT) price tag. Going down to 64 GB of DDR5 memory will save you £200 and not negatively impact performance, unless you work with very large datasets.

A downgrade to the Nvidia RTX A2000 (12 GB) will also save you £400 and while you’ll take a performance hit in design viz tools like Enscape, V-Ray and KeyShot, you should hardly notice any difference in Revit, Inventor, and Solidworks.

Finally, there’s the Boxx Apexx S3 to consider. If you don’t need to rack mount or expand into multiple HDDs or super high-end dual GPUs, then this more compact sibling should give you the exact same performance without making such a big dent in your precious workstation budget.

After six years in service, HP has finally redesigned the chassis for its iconic tiny workstation. With better acoustics and significantly enhanced performance, there’s much to like about this diminutive desktop, writes Greg Corke

In late 2016, HP broke the mould with a desktop workstation that was significantly smaller than any other from a major OEM. Since then the HP Z2 Mini has established itself as a firm favourite at DEVELOP3D.

But the original HP Z2 Mini design is now end of life. The G9 edition features a new all-metal chassis. Simple in form, it’s essentially a rectangular prism with rounded corners and a distinctive front mesh, through which air is drawn in, then expelled at the rear.

The beauty of the HP Z2 Mini G9 is its size — a mere 211 x 218 x 69mm. It can sit horizontally or vertically on the desk, kept stable with the included stand. And to keep on brand, the Z logo on the front can be rotated 90 degrees. It can even be VESA mounted under a desk or behind a display and paired with a wireless keyboard and mouse for a clutter free working environment. With integrated Intel WiFi 6E AX211 there’s no need for Ethernet. It’s an ideal machine for space constrained home offices — and there have been plenty of those over the last two years.

It’s 10mm thicker than the previous design, but that means significantly more performance. ‘Alder Lake’ 12th Gen Intel Core CPUs replace ‘Comet Lake’ 10th Gen and, with improved thermal management, the G9 can also support the 125W models, up to the Core i9-12900K. However, it’s important to note that these aren’t the latest ‘Raptor Lake’ 13th Gen Intel Core CPUs, as seen elsewhere in this special report, so are a little slower.

There’s also a wider choice of GPUs,

In single or lightly threaded workflows, the machine was only around 14-20% slower than the fastest 13th Gen Intel Core desktop. For a machine of this size, that’s impressive.

But in highly multi-threaded workflows like rendering things start to slow down. It not only has fewer E-cores than the top-end Core i9 processors, but due to the smaller chassis the CPU can’t clock as high, particularly under sustained loads. Rendering in KeyShot, for example, it only took 30 seconds for it to drop from 4.10 GHz to 3.48 GHz, although it then maintained that frequency for hours. Notably, there was hardly any fan noise throughout. Considering the thermal challenges of packing standard desktop components into a small chassis, this is quite an achievement, especially as some of the earlier HP Z2 Mini workstations could be quite noisy at times.

■ Intel Core i7-12700K CPU (3.6 GHz base, 5.0 GHz Turbo, 8 P-cores, 4 E-cores, 20 threads)

■ Nvidia T1000 GPU (4 GB)

■ 32 GB DDR5-4800 memory (2 x 16 GB)

■ 1 TB, M.2 PCIe 4.0 NVMe SSD

■ 211 x 218 x 69mm

■ From 2.4 kg

■ Microsoft Windows 11 Pro

■ 3 year (3/3/3) limited warranty includes 3 years of parts, labour and on-site repair

■ Exact price as reviewed not available, but with Intel Core i7-12700 and Nvidia T1000 (8 GB) £1,499 (Ex VAT)

■ www.hp.com/zworkstations

The Nvidia T1000 hits the sweet spot for CAD, and the 50W GPU is available in 4 GB and 8 GB models. Our test machine’s 4 GB card performed well in Inventor, Revit and Solidworks, but with some of our larger Solidworks models that need more memory things slowed down, particularly at 4K resolution. Here, the 8 GB card would be a much better fit. An upgrade to the Nvidia RTX A2000 (12 GB) would take the machine into entry-level visualisation territory.

Opening the case takes seconds (simply press the rear release button and slide off the top), but the small chassis does make servicing a bit tricky. To get to the storage, you’ll need to remove the GPU. To get to the memory, unscrew the CPU fan. In our test machine both SODIMM sockets were already taken but there’s a spare M.2 slot for a second SSD.

There’s no PSU inside. Instead the HP Z2 Mini comes with a sizeable 280W external power adapter. Speaking of power, it drew a mere 160W at the plug when rendering.

Considering its size, the HP Z2 Mini G9 is well equipped with ports. There are two USB Type C and one USB Type A (charging) on the side, and three USB Type A at the rear, along with 1GbE LAN. A Flex I/O port allows you to add ports of your choosing, from more USB and 2.5GbE LAN to Thunderbolt and HDMI.

HP has done an excellent job in updating this impressive micro workstation. The industrial design is excellent, the acoustics are improved, and it’s taken a big step forward in terms of performance.

But there are compromises to be made for a machine this small. While the HP Z2 Mini G9 does very well in single threaded and lightly threaded workflows, it falls off the pace with all cores in play. It simply can’t compete with larger towers when it comes to power and cooling. So if your workflows lean that way, there’s a tough choice to make: either maximise performance or minimise the impact on your desk.

At 190 x 72 x 178mm, Dell’s micro workstation is a little smaller than the HP Z2 Mini G9, but that narrows down your choice of CPUs to 65W ‘Alder Lake’ 12th Gen Intel Core.

This shouldn’t matter if you only use CAD — the difference in single threaded performance between the 65W Core i9-12900 and 125W Core i9-12900K will be negligible, but you’ll probably get less multi-threaded performance when rendering.

There’s no compromise on graphics with a choice of GPUs up to the Nvidia RTX A2000 (12GB), covering CAD and entry-level viz.

The machine benefits from a wider choice of storage (2.5-inch HDDs and SSDs) and there are front USB ports for easy access.

It can be VESA-mounted and Dell’s compact all-in-one monitor stand (pictured right) helps keep your desk clutter-free.

■ dell.com/precision

Tiny by name, tiny by nature, the ThinkStation P360 Tiny is by far the smallest workstation from a major OEM — coming in at 179 x 37 x 183mm. To give you an idea of just how small this machine is, simply look at the USB ports in the picture to the left. To keep thermals in check, one might presume the ThinkStation P360 Tiny can only have low power processors, but it can be configured with 35W or 65W ‘Alder Lake’ 12th Gen Intel Core CPUs (up to the Core i9-12900), plus a range of entry-level pro GPUs up to the Nvidia T1000 8GB - making it ideal for 3D CAD. The machine can be placed in desktop or tower mode (with the help of a stand) or secured under a desk or behind a VESA display with custom mounting brackets. It’s so small and light (1.4 kg) that you can easily throw it in a bag to move between office and home.

■ lenovo.com/workstations

This is the most powerful of all the super compact workstations, with the option of a high-performance GPU that opens it up to new workflows. The top-end Nvidia RTX A5000 GPU (16 GB) makes it well suited to real-time viz and GPU ray tracing. However, don’t expect to get the exact same level of performance as you would from a standard tower. This is a mobile GPU on a custom desktop board, so draws a little less power.

The ThinkStation P360 Ultra measures 87 x 223 x 202mm and features a unique design where the motherboard runs down the middle of the chassis to aid cooling and serviceability. Elsewhere, the system supports up to 128 GB of DDR5 SODIMM memory, which is double that of the slightly smaller HP Z2 Mini G9 and Precision 3260 Compact, and 12th Gen Intel Core processors up to 125W.

■ lenovo.com/workstations

For this high-end workstation, Armari is getting the very best out of the powerful 64-core AMD Ryzen Threadripper Pro CPU, writes Greg Corke

When it comes to tuning workstations and squeezing every last bit of performance out of high-end components, few can compete with Armari. The UK firm blew our minds in February 2020 with the Magnetar X64T-G3, which tore up the workstation rule book completely. It delivered never-before-seen benchmark scores in multi-threaded workflows without compromising on single threaded performance. Ray trace rendering and 3D modelling had never been such good bedfellows.

The processor at the heart of the machine was the AMD Ryzen Threadripper 3990X, a 64-core chip for ‘consumers’ or ‘enthusiasts’ but not for workstations per se. An amazing CPU in its own right, Armari took it to the next level, tuning it with AMD Precision Boost Overdrive (PBO), a technology that allows processor core frequency to rise as long as the workstation can cool it adequately.

And the Magnetar X64T-G3 certainly could. Its Full Water Loop (FWL) cooling system came with a giant radiator with nearly three times the surface area of those used in its other workstations. It meant that the CPU could sustain 550 – 650 watts of power in real world applications, with momentary boosts in excess of 800 watts.

Fast-forward nearly three years and Armari is taking a more conservative approach for its new 64-core desktop workstation. Built around the workstation-class AMD Ryzen Threadripper Pro 5995WX, the Armari Magnetar M64TPRW1300G3 is designed to work within a more moderate CPU power budget of 400 watts, 120 watts above the processor’s standard Thermal Design Power (TDP) of 280 watts. However, it still has a sprinkling of Armari magic. According to the company, it can hit all core frequencies that are 0.4 GHz to 0.5 GHz higher than the Dell Precision 7865 Tower and Lenovo ThinkStation P620. This, says Armari, is because it implements PBO, and the workstation giants do not.

■ AMD Ryzen Threadripper Pro 5995WX processor (2.7 GHz, 4.5 GHz boost) (64-cores, 128 threads)

■ AMD Radeon Pro W6800 GPU (32 GB GDDR6)

■ 128 GB (8 x 16 GB) DDR4-3200 ECC Registered memory

■ 2 x 2 TB Western Digital SN850 NVMe 4.0 M.2 2280 SSD

■ ASRock WRX80 Creator Motherboard

■ Armari Threadripper AIO CPU cooler

■ 1,300W PSU

■ Magnetar M60 Gen3 chassis (470 x 220 x 570mm)

■ Microsoft Windows 11 Pro 64-bit

■ 3 Years RTB Parts & Labour, 1st year collect and return is included. Optional 7yr warranty available

■ £10,799 (Ex VAT)

■ www.armari.com

At this point it’s worth pointing out some differences between the workstation-class Ryzen Threadripper Pro processor and the now discontinued ‘consumer’ Threadripper processor at the heart of Armari’s 2020 machine. ‘Pro’ includes more memory channels (8 vs 4), higher memory capacity (2 TB vs 256 GB), support for ECC memory, more PCIe lanes and several enterprise-class security and management features.

Considering few ‘consumers’ would ever need 64-cores, everything pointed towards AMD focusing all of its efforts on a workstation-specific Ryzen Threadripper processor, rather than a consumer Ryzen Threadripper processor used for workstation applications. Plus, AMD had most ‘consumer’ workflows well covered with AMD Ryzen with up to 16-cores.

As you might expect, Armari’s workstation excels in multithreaded workflows where the power of all 64-cores can be fully harnessed. This, of course, includes ray trace rendering, where the machine was between 1.95 and 2.29 times faster than a 16-core AMD Ryzen 9 7950X workstation.

With four times as many cores as AMD’s ‘consumer’ chip, one might expect a bigger lead, but the Ryzen 9 7950X is based on AMD’s newer Zen 4 architecture, so benefits from a higher Instructions Per Clock (IPC). In the Scan GWP-ME A132R (see page WS14) it can also maintain a solid 5.0 GHz across its 16-cores. In contrast, the Threadripper Pro 5995WX peaked at 3.38 GHz in Cinebench and 3.45 GHz in KeyShot, though this is still around 0.7 GHz higher than the processor’s 2.70 GHz base frequency.
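A crude way to see why the lead is not fourfold is to multiply cores by all-core frequency for each chip. The sketch below uses the clock speeds and the 1.95 to 2.29 times rendering lead quoted in this review. Treating throughput as cores times clock is a deliberate simplification (it ignores memory, cache and scaling losses), so the leftover gap is only a rough stand-in for the newer chip’s per-clock advantage.

```python
# Naive throughput model: cores x all-core clock, using figures from the review.
# The "per-clock gap" lumps together IPC, memory and scaling effects.

def naive_throughput(cores: int, all_core_ghz: float) -> float:
    """Cores multiplied by sustained clock, assuming equal work per clock."""
    return cores * all_core_ghz


threadripper = naive_throughput(64, 3.45)   # Threadripper Pro 5995WX in KeyShot
ryzen = naive_throughput(16, 5.0)           # Ryzen 9 7950X in the Scan machine

naive_ratio = threadripper / ryzen
observed_low, observed_high = 1.95, 2.29    # measured rendering lead

# How much more work per core per clock the 7950X would need to do
# for the naive model to match the measured results.
per_clock_gap_low = naive_ratio / observed_high
per_clock_gap_high = naive_ratio / observed_low

print(f"Expected from cores x clock: {naive_ratio:.2f}x")
print(f"Measured: {observed_low}x to {observed_high}x")
print(f"Implied per-clock gap: {per_clock_gap_low:.2f}x to {per_clock_gap_high:.2f}x")
```

So clock speed alone shrinks a fourfold core advantage to under threefold, and the remainder is consistent with the architectural gains described above.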

We saw similar results when recompiling shaders in Unreal Engine. We had expected the uplift in Unreal might be bigger because of Threadripper Pro’s 8-channel memory offering superior memory bandwidth, but this didn’t appear to be the case.

One area where we would expect to see a benefit from the machine’s 128 GB of DDR4-3200 ECC Registered memory (8 x 16 GB modules) is in simulation. Computational Fluid Dynamics (CFD) software including Ansys Fluent and Finite Element Analysis (FEA) software Ansys Mechanical are well known to thrive on high memory bandwidth in certain workflows.
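The bandwidth advantage of eight memory channels can be estimated with simple arithmetic. This sketch assumes a 64-bit data path per channel and compares the Armari’s 8-channel DDR4-3200 with a dual-channel DDR5-5600 setup like the one in the Ryzen 7000 machine reviewed earlier. These are theoretical peaks, and sustained bandwidth in real applications will be lower.

```python
# Theoretical peak memory bandwidth from transfer rate and channel count.
# Assumes a 64-bit (8-byte) data path per memory channel.

def peak_bandwidth_gbs(mega_transfers_per_s: float, channels: int,
                       bus_width_bits: int = 64) -> float:
    """Peak bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer * channels / 1e9


threadripper_pro = peak_bandwidth_gbs(3200, channels=8)   # DDR4-3200, 8-channel
ryzen_7000 = peak_bandwidth_gbs(5600, channels=2)         # DDR5-5600, dual-channel

print(f"Threadripper Pro: {threadripper_pro:.1f} GB/s")
print(f"Ryzen 7000:       {ryzen_7000:.1f} GB/s")
print(f"Ratio:            {threadripper_pro / ryzen_7000:.2f}x")
```

Even with slower individual modules, the extra channels give the Threadripper Pro platform more than double the theoretical bandwidth, which is why bandwidth-hungry solvers are where it should pull ahead.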

While the machine excels in rendering, it doesn’t win out in more lightly multithreaded workflows, where fewer cores are used. In our Leica Cyclone Register 360 point cloud processing benchmark, for example, which can use up to 6-cores, it was significantly slower than the Ryzen 9 7950X. AMD’s 16-core consumer processor also wins out in single-threaded workflows like CAD.

The Armari workstation is also no slouch when it comes to graphics workflows. With 32 GB of on-board memory, the AMD Radeon Pro W6800 is well equipped to handle colossal viz datasets. You’ll get the most out of this card in demanding applications like Unreal Engine and in VR, but it’s overkill for CAD.

Compared to the rest of the machine, storage is quite pedestrian, courtesy of two 2 TB Western Digital SN850 NVMe M.2 2280 SSDs, one for OS and applications and one for data. But there’s plenty of scope for expansion. For I/O intensive workflows, RAID 0 SSDs are an option, and there are four bays for a range of drives including 3.5-inch HDDs.

The ASRock WRX80 Creator Motherboard also supports up to 2 TB of memory, so the machine can be configured to handle colossal datasets, way above the 128 GB limit of Ryzen 7000. There are a whopping seven PCIe 4.0 x16 slots that can accommodate up to four double-height graphics cards, so if GPU rendering is your thing, this has you covered. There are plenty of ports for peripherals including two USB Type A and one USB Type C, front / top. Dual 10Gb/s LAN and Intel 802.11ax (WiFi 6E) + Bluetooth are standard.

As we’ve come to expect from Armari, the machine is solid and well-built, with a steel frame and lightweight aluminium side panels. It’s also extremely quiet when rendering on all 64 cores.

Considering the CPU is so powerful, this is quite a remarkable achievement. This is thanks to Armari’s use of low-noise Noctua fans with a custom All-in-One (AIO) cooler designed to handle up to 500W on the CPU. Unlike the Full Water Loop (FWL) cooling system that Armari used in its ‘consumer’ Threadripper machine, the AIO is sealed for life so never requires servicing.

Fan noise only really became a minor annoyance when pushing the machine to its absolute limits, maxing out both processors at the same time: CPU rendering in KeyShot and GPU ray tracing in Unreal Engine. Doing so also drew a colossal 700W of power at the socket, so be warned!

Armari’s Threadripper Pro workstation is the type of machine you feel you can throw anything at, and it will just keep on going. Even with extreme multi-tasking everything feels responsive.

If your workflows demand lots of CPU cores, Threadripper Pro delivers everything you would want in a high-end workstation processor. And Armari certainly knows how to get the most out of it.

At the moment, Intel has nothing that can compete. But doesn’t AMD just know that. The price of its flagship workstation processor has risen considerably since first gen Threadripper Pro and the 64-core Threadripper Pro 5995WX processor alone is just shy of an eye-watering £6,000 + VAT. This is a huge jump up from the previous generation, where £6,755 + VAT would have got you an entire 64-core Threadripper Pro 3995WX workstation (a Lenovo ThinkStation P620) with 128 GB RAM, 1 TB SSD and an Nvidia Quadro RTX 5000 GPU.

What you’ve probably guessed from all of this is that the Armari Magnetar M64TP-RW1300G3 is not exactly cheap. As reviewed, it comes in at an eye-watering £10,799 + VAT. But for a machine that can completely transform certain workflows, delivering more iterations in shorter timeframes, many will feel this is a price worth paying.

Armari might have made its name in high-performance desktop workstations, but the company is now expanding into cloud, but with a difference.

Unlike most cloud workstations, which are virtual machines, Armari’s cloud workstations offer the exact same specs as the company’s desktop Threadripper Pro machines. And each user gets their own dedicated workstation which they can access remotely over a 1:1 connection. This means higher CPU frequencies for both single threaded and multi-threaded workflows.

To connect, Armari uses an ‘extensively modified’ Microsoft RDP service, which runs on the cloud workstations and is said to improve the experience and performance.

The so-called Armari ‘ripper-rentals’ service is designed to help firms either dynamically expand their workstation capacity without upfront capital expense, or to give customers access to a workstation while they are waiting for their physical machine to arrive. Those who order a qualifying workstation within 30 days from the last day of their rental can claim up to 50% of the rental cost back against the new system.

Armari also offers VPN and LAN-to-LAN bridges to allow firms to dynamically add machines to their existing network.

Armari says that, once the order is placed, the workstation will be ready to use within 15 minutes.

We gave the service a quick test drive. It’s certainly very easy to get started - simply punch the supplied credentials into the Microsoft Remote Desktop app and away you go.

Within minutes we had Enscape running in a smooth responsive environment, using a standard laptop over WiFi. However, latency is on our side as we are just down the road from Armari’s HQ in Watford, where the workstations are located.

The service is currently recommended for users across the UK, but Armari is in the process of testing in Europe. Coverage may be extended by colocating machines in other datacentres.

Prices start at £350 per week (£50 per day). Minimum rental period is seven days. Armari also offers a free trial.
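To put the rental pricing into numbers, here is a minimal Python sketch. The £50 daily rate, seven-day minimum and 50% maximum claim-back come from the figures above. The function names are hypothetical, and it assumes that days beyond the first week are billed pro rata at the daily rate, which Armari has not confirmed.

```python
DAILY_RATE_GBP = 50        # quoted: £350 per week, i.e. £50 per day
MIN_RENTAL_DAYS = 7        # quoted minimum rental period
MAX_CLAIM_BACK = 0.5       # quoted: up to 50% of rental cost against a new system


def rental_cost(days: int) -> int:
    """Rental cost in GBP, assuming simple pro rata daily billing (an assumption)."""
    if days < MIN_RENTAL_DAYS:
        raise ValueError(f"minimum rental period is {MIN_RENTAL_DAYS} days")
    return days * DAILY_RATE_GBP


def max_credit(days: int) -> float:
    """Upper bound on the credit that could be claimed against a qualifying order."""
    return rental_cost(days) * MAX_CLAIM_BACK


if __name__ == "__main__":
    for days in (7, 14, 30):
        print(f"{days} days: £{rental_cost(days)} rental, up to £{max_credit(days):.0f} credit")
```

On these assumptions, a firm renting for a month while waiting for a physical machine would pay £1,500 and could offset up to £750 of that against the new system.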

This highly portable 14-inch mobile workstation impresses. Equipped with an Nvidia RTX A1000 GPU, it opens up possibilities beyond those of mainstream 3D CAD for this historically less popular form factor, writes Greg Corke

The 14-inch mobile workstation form factor has come and gone over the years. They’ve never been great sellers, partly because performance has significantly lagged behind their 15-inch counterparts. But with the Precision 5470, Dell is looking to change that.

With a choice of 45-watt 12th Gen Intel Core H-Series CPUs and the Nvidia RTX A1000 GPU, this workstation-class 14-inch laptop certainly stands out from the competition. The HP ZBook Firefly comes with the less powerful Nvidia RTX A500 GPU while the Lenovo ThinkPad P14s has the Nvidia T550, which does not have RTX acceleration. Both laptops are also limited to 28-watt 12th Gen Intel Core P-Series CPUs.

Having a higher wattage CPU shouldn’t

12800H. When rendering in KeyShot it maintained 2.5 GHz across all P-cores. And it managed to do this without making too much noise, even in Dell’s ‘Ultra Performance mode’ where processor and cooling fan speed is increased for more performance. However, in Solidworks Visualize, when CPU and GPU can be hammered at the same time, CPU frequency dropped to 1.5 GHz and fan noise increased considerably. For a better balance of performance and noise choose the ‘optimised’ setting in Dell’s Power Manager where frequency drops to 1.3 GHz. The ‘Quiet’ setting takes this even lower, to 0.9 GHz. Tuning down the CPU in this way should also extend battery life when not plugged in.

■ Intel Core i7-12800H processor (2.40 GHz, 4.80 GHz Turbo) (6 P-cores, 8 E-cores, 20 threads)

■ Nvidia RTX A1000 GPU (4 GB)

■ 32 GB, DDR5, 5,200 MHz, integrated memory

■ 1 TB, M.2 PCIe 4.0 NVMe SSD

■ 14-inch FHD+ (1,920 x 1,200), 100% sRGB, 500 nits display

■ 311mm x 210mm x 18.95mm (w/d/h)

■ From 1.48 kg

■ Microsoft Windows 11 Pro

■ ProSupport and Next Business Day Onsite Service, 36 Month

For graphics, the Nvidia RTX A1000 (4 GB) GPU easily delivers for 3D CAD and BIM, in applications like Revit and Inventor, but it also offers enough performance for entry-level visualisation at FHD+ (1,920 x 1,200) resolution. With a small to medium sized BIM model in Enscape, the 50 Frames Per

■ £3,119 (Ex VAT)

■ www.dell.com/precision

responsive, although a little small compared to larger machines. The Pro 2.5 keyboard is excellent with a fingerprint reader built into the power button. Alternatively, gain access to the machine through facial recognition with the Windows Hello compliant HD IR camera.

The 100% sRGB, 500 nits IPS display is perfectly respectable, but lacks the impact of brighter and more vibrant OLED displays available on larger pro laptops. Our test machine’s FHD+ (1,920 x 1,200) display resolution is well matched to a machine of this size, adding a few more pixels to the standard FHD (1,920 x 1,080). For higher pixel density, there’s a QHD+ (2,560 x 1,600) touchscreen option, though this will increase load on the GPU, probably reducing frame rates a little in graphics-hungry viz applications. The InfinityEdge bezel contributes to the compact 311mm x 210mm footprint. Weight starts at 1.48kg, plus 0.47kg for the 130W AC adapter including UK plug.

The laptop is slim, maxing out at 18.95mm, which means it’s USB-C only (two Thunderbolt 4 ports left, two right). There’s no HDMI, DisplayPort, or Ethernet, but Dell includes a USB-C to HDMI / USB-A adapter in the box. Of course, to increase the connectivity options, there’s an optional Dell Thunderbolt 4 Dock. Superfast wireless is standard, courtesy of the Intel AX211 Wi-

There’s much to like about this highly portable 14-inch mobile workstation. Historically, machines of this size have been limited in what they can be used for, mostly because their GPUs have struggled with anything more demanding than small to medium sized 3D CAD models. However, the Dell Precision 5470’s Nvidia RTX A1000 GPU expands the possibilities considerably, making it a viable option for entry-level visualisation.

This impressive balance of performance and portability doesn’t come cheap, however. Our test machine comes in at £3,119 (Ex VAT). Still, considering the flexibility a lightweight laptop like this can give you, some will certainly find it a price worth paying.

The 15.6-inch Dell Precision 5570 is the latest incarnation of one of our favourite workstation laptops. It’s very portable, considerably smaller than the 16-inch Lenovo ThinkPad P1 G5 and HP ZBook Studio G9. It’s a mere 7.7mm at the front, 11.64mm at the rear (although this is slightly misleading, as Dell doesn’t appear to include the display in this measurement). It starts at 1.84kg.

Despite its sleek aesthetic, it still includes perfectly good processors for CAD, including up to the Intel Core i9-12900HK CPU and Nvidia RTX A2000 (8 GB) GPU, which includes hardware ray-tracing to make your CAD models pop.

One trade-off of the slender chassis is no USB Type A ports (only USB Type C / Thunderbolt 4), although it ships with a USB Type A and HDMI dongle.

17-inch mobile workstations used to be all about performance and expandability with high-end GPUs and loads of storage, but the Dell Precision 5770 is a far cry from these heavyweights of old. Trading off some GPU power for more portability, you get a stunning 17-inch UHD+ (3,840 x 2,400) InfinityEdge display for detailed design work in a 2.17kg, sub 20mm chassis.

The Nvidia RTX A3000 GPU might not be top notch, but it’s a step up from the 15.6-inch Precision 5570’s Nvidia RTX A2000 and has 12GB of GPU memory to raise the ceiling on the size of datasets it can handle.

It has four Thunderbolt 4 ports (USB Type C with DisplayPort) and relies on the included dongle for USB Type A and HDMI.

■ www.dell.com/precision

For the G9 edition of this slimline pro laptop, HP made the switch from a 15.6-inch to a 16-inch panel. This made it a tiny bit bigger than the G8 edition, but with a 16:10 aspect ratio 4K (3,840 x 2,400) display (with DreamColor and OLED options) you get 11% more usable screen area than the previous 16:9. Memory has also been improved with 64 GB, double that of the G8, which was a little lacking. Compared to the beefier HP ZBook Fury G9, the HP ZBook Studio G9 offers slightly less powerful 12th Gen Intel Core H-Series processors with up to 6 P-cores and 8 E-cores. Graphics options are similar, up to the Nvidia RTX A5500 (16 GB), but with a lower power draw, expect slightly lower performance. ■ www.hp.com/zworkstations
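The 11% screen area figure for the ZBook Studio G9 can be sanity-checked with a few lines of Python. This is a rough sketch: the panel sizes and aspect ratios are from the text above, but the 3,840 x 2,160 resolution for the older 16:9 4K panel is an assumption, and `panel_area` is a hypothetical helper.

```python
import math


def panel_area(diagonal_in: float, aspect_w: int, aspect_h: int) -> float:
    """Physical panel area in square inches from diagonal size and aspect ratio."""
    unit = diagonal_in / math.hypot(aspect_w, aspect_h)
    return (aspect_w * unit) * (aspect_h * unit)


# Pixel count: 16:10 4K versus an assumed 3,840 x 2,160 (16:9) panel on the G8
pixel_gain = (3840 * 2400) / (3840 * 2160) - 1

# Physical area: 16-inch 16:10 versus 15.6-inch 16:9
area_gain = panel_area(16.0, 16, 10) / panel_area(15.6, 16, 9) - 1

print(f"extra pixels: {pixel_gain:.1%}, extra physical area: {area_gain:.1%}")
```

Both measures land at roughly 11%, so the claim holds whether you count pixels or measure the glass.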

The HP ZBook Firefly G9 comes in two sizes - 14-inch and 16-inch. With a chassis depth of 19.9mm, the budget mobile workstation just about makes it into our sub 20mm roundup. Users get a choice of low power 12th Generation Intel Core i7 Processors. These should still deliver good performance in single threaded CAD-centric workflows, and some of the super power efficient 15W ‘U’ models will save you money. However, with fewer cores and a lower power budget, multi-threaded renders will take longer.

The GPU choices are limited, with only 4 GB options available. Both machines offer the Nvidia T550 GPU, while the 16-inch model can also be configured with the Nvidia RTX A500. However, despite having hardware enabled ray tracing, it’s still really just suited to mainstream CAD. ■ www.hp.com/zworkstations

The CAD-focused 14-inch ThinkPad P14s G3 comes in two variants - an ‘Intel’ edition with 28W 12th Generation P series Intel Core processors and an Nvidia T550 (4GB) GPU and an ‘AMD’ edition with AMD Ryzen 5 / 7 Pro 6000 U Series processors and integrated AMD Radeon 680M graphics with Pro APU driver.

Both share the same 17.9mm chassis, but by combining CPU and GPU onto a single chip the ‘AMD’ edition is slightly lighter, starting at 1.28kg. The ‘Intel’ edition has a slight edge in memory capacity (48 GB vs 32 GB).

The laptop stands out for its optional 4K IPS display, which is higher res than others, an FHD camera, HDMI, two USB Type A ports and two USB Type C. ■ www.lenovo.com/workstations

Intel’s long awaited discrete pro graphics cards have finally arrived, targeting CAD and BIM workflows. But do these entry-level workstation GPUs have enough to upset the apple cart?

Greg Corke explores

www.intel.com/arcprographics

For years, AMD and Nvidia have been the only manufacturers of discrete workstation GPUs - by which we mean graphics cards that plug into a workstation motherboard and are not built into the CPU. But with the launch of the Intel Arc Pro A-series two players have now become three.

Competition is always good, and with Intel’s first-generation pro GPUs — the Intel Arc Pro A40 (6 GB) and Intel Arc Pro A50 (6 GB) — the battle is at the entry-level. And as Intel eases its way into discrete graphics with the first products from its ‘Alchemist’ GPU architecture it makes perfect sense to address this volume market.

A huge part of the entry-level pro graphics market is for workstations that run 3D CAD and Building Information Modelling (BIM) software. Applications including Autodesk Revit, Autodesk Inventor, Graphisoft Archicad and others are largely CPU limited. This happens when the CPU becomes the bottleneck and is not able to keep up with the graphics card. As a result, no matter how much graphics processing power you throw at a 3D CAD model, frame rates will only increase by a relatively small amount. In some cases, they will not increase at all.
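A toy model helps show why a faster GPU does little for a CPU-limited application. The Python sketch below is a deliberate simplification, not how any particular CAD tool schedules its work. It assumes each frame takes as long as the slower of the two processors, and the millisecond figures are hypothetical.

```python
def frames_per_second(cpu_ms: float, gpu_ms: float) -> float:
    """Frame rate when each frame is gated by the slower of CPU and GPU (simplified)."""
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical CAD model: the CPU needs 25 ms per frame to prepare the scene
entry_gpu = frames_per_second(cpu_ms=25, gpu_ms=10)   # entry-level GPU
faster_gpu = frames_per_second(cpu_ms=25, gpu_ms=5)   # GPU twice as fast
faster_cpu = frames_per_second(cpu_ms=20, gpu_ms=10)  # modestly faster CPU instead

print(entry_gpu, faster_gpu, faster_cpu)
```

In this model, doubling GPU performance leaves the frame rate unchanged at 40 FPS, while a modest CPU improvement lifts it to 50 FPS. Real applications overlap CPU and GPU work to some degree, which is why gains from a bigger GPU tend to be small rather than strictly zero.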