Adopting the Power of AI to Drug Development Projects


Vaccines and antivirals have been the fundamental pillars in the battle against infectious diseases. Nonetheless, their development is fraught with substantial hurdles, necessitating a protracted and costly journey, frequently marred by a notable rate of failure. The onset of the COVID-19 pandemic brought Human Challenge Trials (HCTs) to the fore and showcased the significant potential of this research approach in advancing our understanding and assessment of infectious diseases and expediting vaccine development.

“With small biotechs, these human challenge trials offer clear proof-of-concept data, much better than data from animal studies, which can be plagued with translation issues on how it would perform in humans. As for big pharma, human challenge trial data can be used to argue their case for internal funding over other assets in the pipeline,” said Open Orphan’s chief scientific officer Andrew Catchpole.

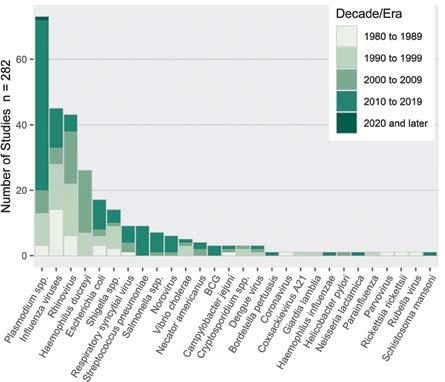

Over the past few decades, human challenge studies have made a remarkable contribution to speeding up the progress of treatments for various diseases, such as malaria, typhoid, cholera, norovirus, and influenza. These trials have also been instrumental in helping researchers determine which candidate vaccines are most promising for advancement to phase 3 clinical trials, a crucial step that typically entails the involvement of thousands of volunteers.

In the realm of medical research, where science and ethics intersect, HCTs stand as a powerful symbol of collective pursuit of knowledge and commitment to improving public health.

All risks in human challenge studies ought to be minimised to the greatest extent possible, while focusing on not excessively compromising the potential research benefits. This requires consultation with scientific experts, prospective participants, and the broader community. Through these consultations, it becomes possible to assess and determine the acceptable level of residual risks, ensuring that they are justified by the expected research benefits. This collaborative and ethical approach helps uphold the principles of transparency, informed consent, and the responsible advancement of scientific knowledge in the context of human challenge studies.

Despite the significant inroads made by human challenge trials (HCTs) in infectious disease research, however, we do not yet have codified regulations pertaining to HCTs. Furthermore, there is a visible lack of regulatory guidance related to standardising approaches to HCTs among different regulatory bodies.

As indicated by a study published in The Lancet Infectious Diseases journal, recent assessments of the ethical frameworks concerning human challenge studies have emphasised striking a balance between the acceptable risk associated with exposing individuals to experimental interventions or pathogens for vaccine development and the social value or public health benefits. This delicate equilibrium ensures that the ethical conduct of these studies prioritises the wellbeing of participants while advancing vital research with the potential to benefit public health and combat infectious diseases effectively.

The article in this issue on “Human Challenge Trials: Establishing early risk-benefit in development of vaccines and therapies for infectious diseases” by Bruno Speder, VP Regulatory Affairs, Poolbeg Pharma explains the role of HCTs in the testing and development of novel antivirals and vaccines.

Keep reading!

Prasanthi Sadhu

Editor

06 The Glocalisation Classroom

Astute glocalisers learn from their successes and failures

Brian Smith, Principal Advisor, PragMedic

14 Accelerating Cell and Gene Therapy Treatments to Patients with the Right CDMO Outsourcing Model

Andy Whitmoyer, VP Supply Chain, Center for Breakthrough Medicines

Emily Moran, SVP, Vector Manufacturing, Center for Breakthrough Medicines

18 Securing Long-term Success

What to look for in a cell culture media supplier

Chad Schwartz, Senior Manager, Global Product Management, Thermo Fisher Scientific

27 Emerging Tools Shaping Drug Discovery and Development Landscape

Qasem Ramadan, Alfaisal University

54 Human Challenge Trials

Establishing early risk-benefit in development of vaccines and therapies for infectious diseases

Bruno Speder, VP, Regulatory Affairs, hVIVO plc

36 An introduction to FDA’s Human Factors Engineering Requirements for Drug-Device Combination Products

Allison Strochlic, Global Leader – Human Factors, Emergo by UL

Yvonne Limpens, Managing Human Factors Specialist, Emergo by UL

Katelynn Larson, Technical Writer, Emergo by UL

42 A Step-By-Step Strategy for Designing A Meta-Analysis

Ramaiah M, Manager, Freyr solutions

Balaji M, Deputy Manager, Freyr solutions

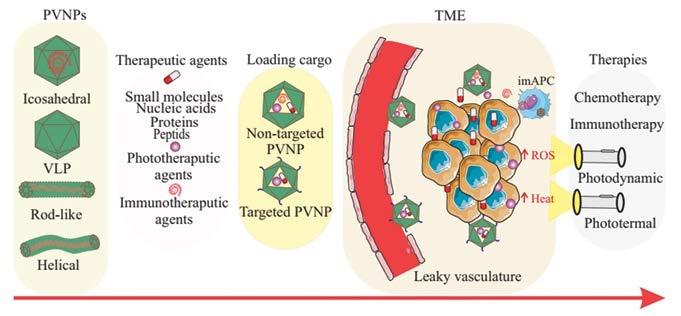

49 A Plant Virus Nanoparticle Toolbox to Combat Cancer

Mehdi Shahgolzari, Department of Medical Nanotechnology, Faculty of Advanced Medical Sciences, Tabriz University of Medical Sciences

Afagh Yavari, Department of Biology, Payame Noor University

Kathleen Hefferon, Virology Laboratory, Department of Cell & Systems Biology, University of Toronto

58 Respirable Engineered Spray Dried Dry Powder as a Platform Technology

Aditya R Das, Founder and Principal, Pharmaceutical Consulting LLC

61 Just in Time Manufacturing

Creating more sustainable supply chains

Lyn McNeill, JTM Supply Chains Solutions Manager, Almac Clinical Services

Alessio Piccoli

Lead

Sales and Business Development Activities for Europe Aragen Life Science

Andri Kusandri

Market Access and Government & Public Affairs Director

Merck Indonesia

Brian D Smith

Principal Advisor PragMedic

Gervasius Samosir

Partner, YCP Solidiance, Indonesia

Hassan Mostafa Mohamed Chairman & Chief Executive Officer ReyadaPro

Imelda Leslie Vargas Regional Quality Assurance Director Zuellig Pharma

Neil J Campbell Chairman, CEO and Founder Celios Corporation, USA

Nicoleta Grecu Director

Pharmacovigilance Clinical Quality Assurance Clover Biopharmaceuticals

Nigel Cryer FRSC

Global Corporate Quality Audit Head, Sanofi Pasteur

Pramod Kashid

Senior Director, Clinical Trial Management Medpace

Quang Bui

Deputy Director at ANDA Vietnam Group, Vietnam

Tamara Miller

Senior Vice President, Product Development, Actinogen Medical Limited

EDITOR

Prasanthi Sadhu

EDITORIAL TEAM

Grace Jones

Harry Callum

Rohith Nuguri

Swetha M

ART DIRECTOR

M Abdul Hannan

PRODUCT MANAGER

Jeff Kenney

SENIOR PRODUCT ASSOCIATES

Ben Johnson

David Nelson

John Milton

Peter Thomas

Sussane Vincent

PRODUCT ASSOCIATE

Veronica Wilson

CIRCULATION TEAM

Sam Smith

SUBSCRIPTIONS IN-CHARGE

Vijay Kumar Gaddam

HEAD-OPERATIONS

S V Nageswara Rao

Ochre Media Private Limited Media Resource Centre,#9-1-129/1,201, 2nd Floor, Oxford Plaza, S.D Road, Secunderabad - 500003, Telangana, INDIA, Phone: +91 40 4961 4567, Fax: +91 40 4961 4555 Email: info@ochre-media.com

www.pharmafocusasia.com | www.ochre-media.com

© Ochre Media Private Limited. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, photocopying or otherwise, without prior permission of the publisher and copyright owner. Whilst every effort has been made to ensure the accuracy of the information in this publication, the publisher accepts no responsibility for errors or omissions. The products and services advertised are not endorsed by or connected with the publisher or its associates. The editorial opinions expressed in this publication are those of individual authors and not necessarily those of the publisher or of its associates.

Copies of Pharma Focus Asia can be purchased at the indicated cover prices. For bulk order reprints minimum order required is 500 copies, POA.


Done well, this process avoids the three dangers of isomorphic targeting.

Brian D Smith, Principal Advisor, PragMedic

In the first article in this series (“The Glocalisation Challenge”), I differentiated between astute and naïve glocalisers and, in this fourth and final article, I describe the most undervalued difference between them. For astute glocalisers, local execution of global strategies goes further than allocating effort between national affiliates (see article two, “The Glocalisation Choice”). It also goes beyond how those affiliates adapt global strategy to local market conditions (article three, “The Glocalisation Change”). They also sustain and expand their success by using glocalisation as a classroom. They deliberately learn lessons from their affiliates and spread them around the firm. This article describes how they do that in practice.

Let’s assume that you have assimilated and acted on the lessons from the first three articles in this series. You have understood that there is a capability chasm between those life science companies that are astute and those that are naïve. You have learned not to target countries naively, by market size, but astutely, according to the winnable segment size. And you have learned not to adapt to local markets by naively making only the essential and easy changes but by astutely building augmented value propositions around contextual segments. If you have done all that, then your results will be more like those of the astute companies I studied and less like the naïve ones. But you will still have an important question to answer: how do we sustain our glocalisation success?

Sustaining success is difficult because market change is a constant and what worked yesterday may not work tomorrow. That’s why the respected academics Sparrow and Hodgkinson said that, in a changing market, a firm’s only sustainable competitive advantage is its ability to learn. But how do firms learn and, in particular, how do pharma, medtech and other life science firms learn from and about glocalisation? Let’s begin with how naïve glocalisers try and fail to learn.

You might be familiar with the following scenario from your present or past firm. Key people from each affiliate in a region, or globally, are invited to a meeting. Most of the agenda is HQ communicating the global strategy to the affiliates. But there’s also a session about sharing best practice. In that session, the affiliates are asked to share their successes and failures, although naturally they focus on the former. These successes are held up as “best practice” and the other affiliates are encouraged to copy them. It has the obvious credibility of being a proven, real-world case study in your own company, analogous to learning from a sibling. For naïve glocalisers, this imitation of perceived best practice of friends and colleagues is the default approach to learning and adapting to market changes.

But this kind of best practice is illusory and it hinders learning and adaptation as much as it helps. It is illusory because it is built on the assumption that what works in one country will work with only minor adaptation in another because the company and its products are the same. This is often a false assumption because even affiliates in the same region can be working in markets that differ in important ways. I mentioned some of those important differences between markets, such as competitive activity and segmentation patterns, in article two, “The Glocalisation Choice”. And this kind of best practice imitation can hinder adaptation because mimicry, instead of market needs, drives value proposition design.

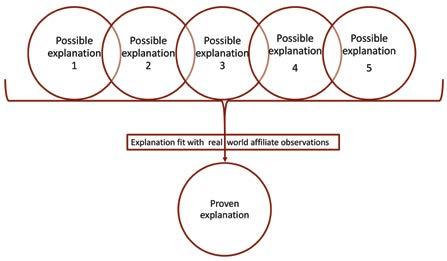

So if sharing and copying illusory best practice is the default of naïve glocalisers, how do astute glocalisers learn from and about glocalisation? In my research, I observe three approaches to learning. The most astute glocalisers use all three in a complementary manner, as summarised in figure 1.

The most prevalent approach to learning among astute glocalisers is the inductive approach. This is a form of “pattern seeking”, as shown in figure 2. It involves searching across a range of national affiliates for similarities and differences in what works and what does not and then drawing a conclusion about the lessons to be learned. In companies I’ve worked with, inductively-drawn lessons have included the heterogeneity of payer behaviours and the role of communities of practice in influencing new product adoption. Inductive learning works best when there is someone, typically with regional responsibility, who has an open mind and looks at affiliates objectively. That objectivity allows them to be receptive to the successes and failures of the affiliates and to find the patterns in their activity and outcomes. Induction fails when there is no one in that role or when that person comes with fixed opinions. In that case, the process is thwarted by the cognitive bias that psychologists call “confirmation bias” and inductive learning becomes no more effective than the best practice illusion. Typically, inductive learning is used most when a firm is unclear about both what it needs to learn and how to learn.

Deductive learning is more methodical and less prevalent than inductive learning. It parallels the scientific method that evaluates a theory, via a pair of hypotheses, to learn something new, as shown in figure 3. Deductive learning is best explained by one real world example I observed. The company believed, but did not know, that uptake of a women’s health product in Asia Pacific was hindered by cultural attitudes towards this therapy area. From that putative explanation of why uptake varied, they created a pair of hypotheses to test:

• H1 = If our putative explanation is correct, uptake will vary between affiliates with different cultural beliefs

• H0 = If our putative explanation is incorrect, uptake will not vary between affiliates with different cultural beliefs.

By comparing uptake across a number of affiliates, they found H0 was better supported by the real world data. Although this did not give them a new explanation of uptake, it did remove an old, incorrect explanation, which is just as useful. Both inductive and deductive learning need a thoughtful person in a regional role to own the learning process. Unlike inductive learning, deductive learning needs a working theory, testable hypotheses and sufficient data for evaluating them. Deductive learning fails when firms cannot make their working theories explicit, or if hypotheses are poorly designed or if they misinterpret data. As you can imagine, deductive learning processes are most effective when the firm has a scientific, data-driven culture.
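To make the H1/H0 comparison concrete, here is a minimal sketch of how such a test might be run, assuming uptake is recorded as a single percentage per affiliate and affiliates can be sorted into two groups by cultural belief. The figures, the group names and the function `permutation_p_value` are hypothetical illustrations and are not taken from the company described above. The sketch uses an exact permutation test on the difference in mean uptake, which is only one of several reasonable ways to evaluate the pair of hypotheses.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test on the difference in mean uptake.

    Returns the share of all possible regroupings of the affiliates whose
    difference in means is at least as large as the one observed.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = 0
    total = 0
    for idx in combinations(range(len(pooled)), n_a):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Hypothetical uptake (% of eligible patients) for eight affiliates,
# grouped by an assumed classification of local cultural beliefs.
more_conservative = [12.0, 15.0, 11.0, 14.0]
less_conservative = [13.0, 12.0, 16.0, 13.0]

p = permutation_p_value(more_conservative, less_conservative)
if p > 0.05:
    print(f"p = {p:.2f}: no evidence that uptake varies with cultural beliefs (H0 retained)")
else:
    print(f"p = {p:.2f}: uptake appears to vary with cultural beliefs (supports H1)")
```

A large p-value, as in this invented data set, is consistent with H0 rather than proof of it, which is why the article stresses having sufficient data before drawing the conclusion.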

The least common but often most effective way that glocalisers learn is by abduction. This combines elements of induction and deduction, although it is a distinct approach in its own right. Abductive learning works by finding which of several explanations best explains the real world, as shown in figure 4. In my research, I found the Asia-Pacific women’s health company, mentioned above, used abduction to build on their deductive process. They had five putative explanations of why uptake varied between their affiliates in the Asia-Pacific region:

• Cultural beliefs regarding women’s health

• Level of economic development

• Attitudes toward traditional medicine

• Education level of women in the target age group

They then evaluated the five possible explanations against the quantitative data, looking for correlations. They complemented this with a qualitative research programme of focus groups, which looked for causal mechanisms. They found that the level of economic development was the dominant influence on uptake but it was significantly moderated by women’s education level, with the other three factors being relatively unimportant. This practical, useful finding strongly shaped their strategy.
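The quantitative step described above can be sketched as a simple ranking of candidate explanations by how strongly each correlates with uptake. This is only an illustration under stated assumptions: every number below is invented, the factor names are shorthand for the four explanations listed, and the helper functions `pearson` and `rank_explanations` are hypothetical. Correlation alone does not show cause, which is why the company paired this step with qualitative research.

```python
from math import sqrt
from statistics import mean


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def rank_explanations(uptake, factors):
    """Return (factor name, correlation) pairs, strongest absolute correlation first."""
    scored = [(name, pearson(values, uptake)) for name, values in factors.items()]
    return sorted(scored, key=lambda item: abs(item[1]), reverse=True)


# Hypothetical data for six affiliates: uptake plus one score per candidate explanation.
uptake = [5, 8, 12, 15, 20, 24]
factors = {
    "cultural_beliefs": [3, 1, 4, 2, 5, 2],
    "economic_development": [10, 14, 22, 25, 35, 40],
    "traditional_medicine_attitudes": [4, 4, 2, 5, 3, 3],
    "education_level": [30, 45, 40, 60, 55, 70],
}

ranked = rank_explanations(uptake, factors)
for name, r in ranked:
    print(f"{name}: r = {r:+.2f}")
```

In this made-up data set the economic development score ranks first and education level second, mirroring the shape of the finding reported in the article. Testing whether one factor moderates another would need a further step, such as comparing the correlation within high-education and low-education affiliates.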

As well as needing someone in a regional role who is skilled and committed to drive it, abductive learning works best when there are multiple competing explanations for the business issue you are interested in, such as uptake, market share or customer attitudes. It fails when a single reason is taken for granted or when firms cannot identify alternative possible explanations for the issue. Abductive learning works best in firms with a pragmatic culture that are not too obsessed with numerical analysis.

As always, the inspiration for my glocalisation research was curiosity. Global life science companies are often very similar in their size, structure and strategy. Yet they differ greatly in how well they translate core, global strategies into local ones and how much value their national affiliates create. As I studied these companies, the difference between astute glocalisers and their naïve peers emerged. As I described in “The Glocalisation Challenge”, they differed in how they targeted, tailored and learned. Astute targeting is the result of clever competitive analysis, super segmentation and prioritising differently between countries, as I described in “The Glocalisation Choice”. Then, in “The Glocalisation Change”, I looked closely into astute tailoring and saw the building of layers of value around contextual market segments. And finally, in this article, I unpicked the ways that astute companies learn from glocalisation. They use a mixture of induction, deduction and abduction, making the global operations a classroom in which they learn to sustain their success.

Despite the complexity of my research and the richness of its findings, there is one salient difference between astute, successful glocalisers and their naïve, less successful rivals. It is their attitude towards strategy in general and glocalisation in particular. The naïve think it is unimportant and treat local affiliates as little more than local concierges. Astute, exemplary companies see glocalisation of strategy as critical and view effective affiliates as vital to it.


International and Regional Bio/Pharma meets online / in-person at Suntec Singapore this month.

Following its biggest gathering post-pandemic, ISPE Singapore Affiliate’s highly anticipated annual meeting reflects the energy and positive growth for the industry in Asia Pacific. Each year, the show provides an extraordinary opportunity for professionals in the bio/pharmaceutical and related industries to gather, learn, and exchange insights on the latest trends and advancements. The Conference runs alongside the sold-out Exhibition, packed with over 90 leading companies offering the spectrum of expertise from aseptic to digital solutions!

Now into its 23rd edition, the 3 day event kicks off with an online pre-Conference Symposium on 23 August. This is followed by the Main Conference, held in-person from 24-25 August at Suntec Singapore. True to its mission of ‘Connecting Pharmaceutical Knowledge’, the conference will also be livestreamed and available on-demand for 6 months afterwards.

Under the theme of "Shaping the Future of Pharmaceutical Manufacturing: Trends, Technologies and Beyond," the conference features over 90 distinguished speakers including 12 Keynotes.

Spanning global to regional biologics, vaccines and pharmaceutical manufacturers, CDMOs, regulatory authorities and industry leaders, highlights include:

• Latest updates from 8 Regulatory agencies: EMA, PIC/s, WHO, HSA, ANSM, Philippine FDA, DRAP Pakistan & Malaysia’s NPRA

• Case study insights from Bio/pharma manufacturers including Pfizer, Amgen, Merck & Co, MSD, Lonza Biologics, Bayer, LOTTE BIOLOGICS, AstraZeneca, Genentech, WuXi Biologics, Moderna, Takeda, Thermo Fisher, GSK and more.

23-25 August | Online & In-person @ Suntec Singapore

Full programme & registration details: www.ispesingapore.org

Attendance is by registration and open to ISPE Members and non-Members alike. This year, non-Members benefit from 1 year’s ISPE membership included when they register!

This year’s symposium & programme tracks comprise extensive in-depth coverage on digital transformation / pharma 4.0, digital CQV, regulatory & compliance, quality, advanced manufacturing and ATMPs, Annex 1, CCS, GAMP5, sustainability and supply chain amongst others. Extended panel discussions revolve around hot topics, including innovating for speed-to-market, tackling drug shortages, challenges and opportunities working with CDMOs, mRNA vaccines development and beyond.

5, yes 5 Keynote speakers open the online Pre-conference Symposium; from both regulatory agencies and industry. Hear from Pfizer, Amgen & Merck and regulators from ANSM/PIC/s & EMA. Coming together in a live Global Panel discussion, they will address ‘Regulatory, Policy & Industry Approach to Combat Drug Shortages’. Diane L Hustead, Executive Director, Regulatory Affairs, Merck & Co, Inc, & Drug Shortage Initiative Team Chair, ISPE, USA will moderate this online session.

Following the plenary, two concurrent tracks focus on A: Industry Best Practice, sponsored by Fedegari and B: Digital Transformation, sponsored by Lachman Consultants.

Keynote speaker, Dr Joerg Block, GMP Compliance Engineering, Bayer AG Pharmaceuticals, Germany will present on ‘Good Engineering Practice: Accelerating Project Delivery’. Case studies range from HPAPI biomanufacturing, CIP Operation and single-use for mRNA to good engineering practice. A discussion on ‘Applying Good Practices for the Project Life Cycle’ concludes this track.

Visionary Panel: CDMOs, Regulatory & Compliance

ISPE President & CEO, Thomas B Hartman will grace ISPE Singapore Affiliate’s annual conference for the first time this month. He will moderate the Opening VISIONARY PANEL: Biotechnology Industry Challenges and Opportunities Working with Contract Development & Manufacturing Organizations (CDMO’s). Global and regional regulators are well represented, culminating with the in-person ASEAN REGULATORY ROUNDTABLE: How Regional Regulatory Agencies are Adopting Global Standards.

Global & Regional Regulatory Agencies:

Keynote Speakers:

Jacques Morenas, Chair, Training sub-committee, PIC/s & Technical Advisor, Inspection Division, ANSM, France

Andrei Spinei, Manufacturing Team Lead, Inspections Office, Quality and Safety of Medicines Dept, European Medicines Agency, Netherlands

Chong Hock Sia, Director (Quality Assurance) & Senior Consultant (Audit & Licensing), Health Products Regulation Group, Health Sciences Authority, Singapore

Vimal Sachdeva, Senior GxP Inspector, WHO, Switzerland

Jesusa Joyce N. Cirunay, Director IV, FDA Center for Drug Regulation and Research (CDRR), Philippines

Abdul Mughees Muddassir, Assistant Director to CEO, Drug Regulatory Authority of Pakistan (DRAP)

Dr Noraida Mohamad Zainoor, Deputy Director, Centre of Compliance and Quality Control, National Pharmaceutical Regulatory Agency (NPRA), Malaysia

On 24th August, Keynote speaker Takashi Kaminagayoshi, Head, Biotherapeutics Process Development, Pharmaceutical Sciences, Takeda Pharmaceutical Co Ltd, Japan will expand on ‘Global Engineering Standards for Biopharma Manufacturing’. Additional highlights include: CDMOs outlook, aseptic filling platform technology and a discussion on ‘Innovating to Gain Speed to Market’.

Held in-person, this track in the Main Conference is sponsored by Yokogawa, which hosts a deep-dive ‘Lunch n’ Learn’ workshop on ‘Digital Transformation Toward Smart Manufacturing’ earlier in the day.

This year’s call for presentations yielded a majority under Digital Transformation. This is unsurprising, given the prevalence and influence of technology in both our work and personal lives. Undoubtedly spurred by necessity during the pandemic, technology is significantly impacting this industry and expediting its move into digitalisation and virtual work practices.

Fundamental to this evolution, ISPE’s GAMP® CoP2 (Community of Practice) has been prolific in disseminating pragmatic guidance on the use of computerised systems in regulated industries without which DT could not happen. Instrumental in this arena is Charlie Wakeham, Global Head of Quality and Compliance, Magentus & Co-Chair, ISPE GAMP Global Steering Committee and Lead, ISPE GAMP CSA Special Interest Group. Her online discussion entitled ‘How does GAMP5 Revision Impact the Industry?’ appears in the ‘Digital Transformation’ track. Held online, presentations such as ‘No-Code GMP Apps: Has Paper Finally Met its Match?’, ‘The Nexus: Data Integrity & Pharma

2 https://cop.ispe.org/forums/community-home?CommunityKey=ed72d220-cd9e4ba9-87c2-a4b3623e5ca5

Charlie will be onsite in Singapore for the launch of the GAMP® South Asia CoP and will lead an interactive workshop on ‘Applying Critical Thinking in Computerised Systems Projects’. Key supporting companies, CAI, NNIT, No deviation and PQE will host an onsite GAMP® Quiz in conjunction with recruitment of members to this new Community of Practice.

The importance of Digital CQV is reflected in a dedicated track, sponsored by No deviation, featuring presentations by experts on ‘Transition & Implementation Strategies for Digitising C&Q’ and Digital CPV, and a discussion on ‘C&Q Digitalisation’.

Hosted by NNIT on 25 August, the Digital Transformation/Pharma 4.0 theme continues with sessions encompassing innovation, advanced therapies, smart manufacturing, digital twins and sustainability. Keynote speakers Richard Lee, Chief Executive Officer and Member of the Board, LOTTE BIOLOGICS, Korea & Meli Gallup, Director, Global MSAT Commercial Product, Genentech, USA precede a Digital Pharma Discussion tackling ‘Challenges to Digital 'Emancipation' (Freedom)’.

Rounding off the Main Conference, the Advanced Manufacturing/ATMPs track opens with a Keynote by Dr Jerry Yang, CTO & Head of TechOps, Fosun Kite Biotechnology Co Ltd, China. This is complemented by talks on QbD and multimodal ATMP facilities, among others, and by discussions on ‘Reliability in Continuous Manufacturing’ and ‘Key Strategies in Supply Chain Resiliency’.

View all Speakers & the full Symposium/Conference Programme on www.ispesingapore.org

Over the 3 days, spanning from aseptic / sterile, single-use, multi-modal, flexible or continuous manufacturing and more, speakers will share their experience alongside the latest updates and developments.

Whether in-person or virtually, the ISPE Singapore Annual Meeting offers a great opportunity to network with peers, engage in meaningful discussions, and explore innovative solutions in the pharmaceutical landscape.

Taking place alongside the main conference and exclusively for all who join in-person, 22 open-access satellite workshops will further facilitate knowledge sharing among attendees. Each hour-long session provides in-depth coverage on a wide range of topics, complementing and adding to the conference. Each led by industry subject matter experts, workshops are open to both conference attendees and visitors.

Concurrently, this year’s expanded exhibition showcases the largest gathering of leading product, technology and service providers. Visitors may also attend the ‘Power Hour’ programme of 32 talks held daily at participating exhibitors’ stands. The schedule of workshops, Power Hour talks and associated onsite events can be viewed on the event site www.ispesingapore.org.

ISPE’s community of volunteers reaches across manufacturing industry professionals, from student engagement to executive mentoring programmes. The Singapore affiliate encourages students and Emerging Leaders locally and from the region, with the Hackathon competition held online and culminating onsite with final presentations. Results are announced during Pharmanite on 24th August.

Women in Pharma are well represented, gathering for a Roundtable Lunch discussion on multiple topics, each table featuring an inspiring host. This takes place on 25th August, with the Emerging Leaders Networking reception providing an informal celebration to conclude the event.

ISPE Commissioning and Qualification Training Course – 22 & 23 August 2023

For the first time in this region, ISPE Global training offers participants an accessible and affordable onsite course on:

Science and Risk-based Commissioning and Qualification - Applying the ISPE Baseline Guide, Volume-5, Second Edition: Commissioning and Qualification (T40)

Worldwide Regulatory expectations and guidance as led by FDA and the EU have stated that all Pharmaceutical Quality Systems should apply a QRM (Quality Risk Management) approach. Through interactive workshops, this course will explain and apply the science and risk-based approach to integrated lifecycle Commissioning & Qualification by conducting verification of systems, equipment and facilities in accordance with the recently issued 2nd Edition Guide, ICH documents Q8 (R2), Q9, and Q10, current Regulatory Guidance, industry best practices, and ASTM E2500.

Registration & details can be found at www.ispesingapore.org.

Visits to Schneider Electric Singapore’s Innovation Hub & Merck’s M Lab™ Collaboration Center provide a great opportunity to view state-of-the-art facilities and experience technology hands-on.

Last year’s ISPE Singapore affiliate Conference & Exhibition drew a record 1,300+ participants, with returning regional delegates and visitors expected to boost this year’s attendance.

With a packed schedule of events lined up, the stage is set to maximise learning and collaboration among regulatory agencies, manufacturers, and technology and service providers. The committee appreciates the support from the community and looks forward to welcoming the industry to Singapore this August!

Exclusive Offer for readers: Register for the Symposium/Conference with discount code PHARM10 to save an extra 10% off prevailing rates.

The International Society for Pharmaceutical Engineering is the world’s largest not-for-profit association serving its Members by leading scientific, technical and regulatory advancement throughout the entire pharmaceutical lifecycle. www.ispe.org

ISPE Singapore Affiliate since 2000 has over 300 Active Members and over 3,000 supporters predominantly in Singapore and the region.

As global supply chains continue to restabilise, innovator companies must carefully manage resources to reach key milestones. Traditional CDMO models with booking, reservation, and other fees devour critical development runway. The new generation of CDMO offers flexible terms, transparent access, and collaboration to ensure success without compromising timelines or budgets.

Approximately 400 million people worldwide are living with a rare disease, and about half of those affected by rare diseases are children. Cell and gene therapies have the potential to revolutionise the treatment of many diseases, including rare genetic disorders and cancer.

In July of last year, PTC Therapeutics achieved a significant milestone when the European Commission (EC) granted authorisation for Upstaza. This treatment marks the first-ever approved therapy for aromatic L-amino acid decarboxylase (AADC) deficiency, a rare genetic disorder affecting the nervous system. Following this, BioMarin received marketing authorisation from the EC in August for Roctavian, the world's first gene therapy developed to treat Hemophilia A, a hereditary bleeding disorder. In the same month, the FDA approved betibeglogene autotemcel (beti-cel; Zynteglo) by bluebird bio for the treatment of beta thalassemia in both adults and children. This therapy had previously obtained approval in the EU back in 2019. Another notable approval came in September when the FDA granted its authorisation for elivaldogene autotemcel (eli-cel; Lenti-D), also developed by bluebird bio. This therapy offers a solution for early and active cerebral adrenoleukodystrophy (CALD) patients without an HLA-matched donor. Lastly, in November, the FDA approved a Hemophilia B treatment developed through the collaboration of uniQure and CSL Behring.

In the realm of chimeric antigen receptor (CAR) T-cell therapies, two notable approvals took place in 2022. In February, Carvykti (ciltacabtagene autoleucel) by Legend Biotech and Janssen received approval from the FDA, marking a significant milestone in CAR-T therapies. In April, Breyanzi (lisocabtagene maraleucel) by Juno Therapeutics, Inc., a Bristol-Myers Squibb Company, obtained approval in the EU. Novartis's Kymriah and Breyanzi also achieved approvals for additional indications, further expanding the reach and potential of these therapies.

Despite the clinical promise, getting these treatments to patients quickly and efficiently is a significant challenge. Bringing cell and gene therapies to market is a complex process that requires a significant investment of time and resources from both a developmental and manufacturing perspective.

The clinical development process for these treatments involves several phases of testing, including preclinical studies, phase 1 safety studies, and larger phase 2 and phase 3 studies to evaluate efficacy and safety in larger patient populations. The process of clinical development can take many years and require significant investment. For diseases with small patient populations and no other treatment options (which require production of small batches), incentives are often not aligned for large pharmaceutical companies and medium-sized biotech companies to invest.

Developing cell and gene therapies also requires manufacturing processes that can be scaled up to meet the needs of patients. This can be a challenge, particularly for therapies that involve complex manufacturing processes or require specialised facilities.

Additionally, the global supply chain for cell and gene therapies can be complex, as starting materials and other components may need to be sourced from multiple locations around the world. While bottlenecks from pandemic lockdowns have improved since 2021, certain equipment and consumables used in cell and gene therapies continue to have long lead times and unreliable availability.

Contract development and manufacturing organisations (CDMOs) play a crucial role in accelerating the availability of cell and gene therapies to patients by absorbing and mitigating risk, simplifying supply chains with starting material on-hand, and accelerating development with processes and platforms.

However, traditional CDMO models charge fees that can consume critical development runway and strain the financial resources of innovator companies. While these fees vary widely, common charges have included suite reservations and holding, booking, project initiation, tech transfer, onsite accommodation, and programme management. Together they can be a significant financial burden and can delay the development of cell and gene therapies.

Another common occurrence as the cell and gene industry evolved was the need to work with multiple providers of steps within the process. For example, an innovator company may need to work with one CDMO for plasmid manufacturing, another for vector manufacturing, and yet another for release testing and analytics. This has led to common challenges in:

Coordination and communication: One of the main challenges of working with multiple CDMOs is coordinating and communicating effectively between the different organisations, in addition to overall vendor management. It can be challenging to ensure that each CDMO is aware of the project’s overall goals and timelines, and that all parties are working together to achieve these goals. This can be particularly challenging when multiple CDMOs are located in different countries or time zones, making it difficult to coordinate schedules and communication.

Quality control: Another challenge with using multiple CDMOs is ensuring consistent quality control across all aspects of the development and manufacturing process. Different CDMOs may have different quality control standards and procedures, making it challenging to maintain consistent quality throughout the process. This can be particularly problematic when there are handoffs between different CDMOs during the manufacturing process.

Intellectual property & licensing: A further challenge with using multiple CDMOs is protecting intellectual property. Developing cell and gene therapies often involves proprietary technology and processes, and it can be challenging to protect this information when working with multiple CDMOs. There may be concerns around confidentiality and the risk of intellectual property theft, which can hamper coordinating work with multiple CDMOs. Furthermore, an innovator company developing with standard materials (e.g., an off-the-shelf plasmid or gene-editing nuclease) may be caught by surprise if the CDMO providing them becomes the subject of litigation.

To address these challenges, a new generation of CDMOs has emerged. These CDMOs offer flexible solutions, both in terms of contracting and in facilities, equipment, and platforms. As early-stage clinical and scale-up clients face capital headwinds, models being tried include:

Modular contracting: Allows innovator companies to select specific services from a menu. For example, a CDMO might offer separate modules for process development, analytical development, and manufacturing, and innovator companies can choose some or all of the modules they need based on their in-house talent.

Pay-for-performance: Under this approach, payment is contingent on specific performance metrics, such as meeting project milestones (e.g., vial thaw, successful batch) or achieving specific quality standards such as yield. This approach can help incentivise the CDMO to perform at the highest level possible and can help mitigate risk for the innovator company.

Flexible pricing: Some CDMOs are offering more flexible pricing models to meet the changing needs of innovator companies. For example, a CDMO might offer a fixed-price contract for a specific scope of work, or a cost-plus pricing model where the CDMO is paid for the actual costs incurred plus a percentage markup. Increasingly, as programs are rationalised and at the mercy of clinical data readouts, recent contracts have offered parking lots and offramps for innovators to manage their pipeline.

In terms of facility design, fit-for-purpose cell therapy CDMOs have re-engineered and decoupled unit operations to eliminate process and operator bottlenecks. As an example, early-generation autologous cell therapy suites manufacture a patient dose in a singular Grade B environment with operators responsible for the entire process. This requires both time-intensive gowning for current staff as well as lengthy training and focused retention efforts. These headwinds lead to both lower throughput due to non-process time and space utilisation, as well as risk to output from attrition.

In next-generation suites, process steps that can be contained (cell selection, activation, transduction, expansion, and harvest) are performed in closed Grade C space, while those that require open access (formulation, filling) are performed in stricter Grade B space. This enables quick movement of personnel and materials across the GMP facility, as operators in Grade C do not have to gown and de-gown as often as they would in a Grade B environment. The result is seamless processing that can handle higher throughput.

For vector manufacturing, process steps are broken down into seed, upstream processing, and downstream processing. The seed and upstream processing steps are performed in a contained area to ensure high levels of purity, while downstream processing steps that require access to the open environment are performed in a less strict environment. Additionally, this approach enables the manufacturing team to become highly specialised in their respective roles, making it more conducive to larger batches and pooling strategies with multiple upstream bulks to one downstream run. Efficiency and batch success metrics are optimised, leading to lower COGS per dose of vector-based therapy.

In light of increasingly complex therapy development, the pace at which cell and gene therapies on accelerated approval pathways speed through clinical trials, and patients with no other choice waiting for therapies, tighter collaboration between innovators and CDMOs is key. A more collaborative relationship enables speed and increases chances of first-time success as sponsor companies share more information and expertise with the CDMO, and the CDMO takes a more active role in risk mitigation and resolution beyond simply executing an initial scope of work.

At the Center for Breakthrough Medicines (CBM), we enable tighter collaboration through programmes such as Partner-in-Plant (PIP) and digitally enabled suites and infrastructure. Unlike audit-only models, PIP allows a sponsor company access to their product with office space and access to manufacturing suites (with appropriate GMP training) at CBM. Sponsor company scientists can work hand-in-hand in our process development labs to work through parameters that influence batch success.

For partners that are unable or choose not to be onsite, CBM’s digitally native facilities enable access globally 24/7. Live 360-degree cameras are thoughtfully located in suites to capture a complete view of the production environment. The cameras capture video footage of the manufacturing process from all angles, providing a comprehensive view of the production line. This technology can be used for process improvement and employee training. By having a real-time view of the manufacturing process, operators can identify bottlenecks, optimise workflows, and improve production efficiency.

Sensor-enabled equipment can also provide real-time visibility into a manufacturing process. These sensors collect data on temperature, pressure, flow rates, and other critical parameters. The data collected by these sensors can be analysed to predict and mitigate risk and manage deviations, improving overall product quality and reducing waste.
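As a rough illustration of how sensor readings might be screened for deviations, the sketch below flags readings that fall outside control limits derived from a baseline run. The three-sigma rule, the function name, and the temperature values are illustrative assumptions, not a description of CBM's systems.

```python
from statistics import mean, stdev


def flag_deviations(baseline, readings, n_sigma=3.0):
    """Return indices of readings outside mean +/- n_sigma * stdev of the baseline."""
    centre = mean(baseline)
    spread = stdev(baseline)  # sample standard deviation of the baseline run
    lower = centre - n_sigma * spread
    upper = centre + n_sigma * spread
    return [i for i, value in enumerate(readings) if not lower <= value <= upper]


# Hypothetical incubator temperatures in degrees Celsius.
baseline_temps = [37.0, 37.1, 36.9, 37.0, 37.0]
new_temps = [37.0, 38.5, 36.95]
print(flag_deviations(baseline_temps, new_temps))  # the 38.5 reading (index 1) is flagged
```

In practice the limits would more likely come from validated process parameters than from a short baseline, but the screening step has the same shape.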

As cell and gene therapies continue to evolve and mature in an uncertain global macroeconomic environment, the new CDMO model of flexible collaboration and access is critical to managing scarce resources and constrained timelines to deliver products globally to the patients who need them.

CBM provides faster turnaround times for client projects by having all development, testing, and manufacturing capabilities connected digitally on shared platforms and physically on one campus, and by having invested working capital in consumable materials and critical supplies for cell and gene therapies. This approach reduces the risk of supply chain disruptions and improves overall supply chain resilience. Our proactive inventory strategy has enabled us to establish strong relationships with strategic suppliers and secure favourable pricing agreements. By anticipating client needs for consumable materials and supplies and investing our working capital accordingly, we can quickly respond to client requests, thereby accelerating the path to product manufacturing. This approach has helped clients focus their time and energy on value-added activities aimed at delivering advanced therapies to patients rather than being consumed with the complexities associated with building bespoke supply chains in today’s highly complex global sourcing environment.

To discuss how to collaborate with CBM on an ideal outsourcing model or supply chain strategy, reach out to expert@cbm.com

Andy has over 20 years of experience in industries such as Pharma / Consumer Healthcare, Fast-Moving Consumer Goods, and Agricultural Chemicals across North America, South America, and Europe. His professional background includes progressive leadership roles within Supply Chain Management, Production Operations, and General Operations Management of strategic cGMP supply centers. Prior to joining The Center for Breakthrough Medicines, Andy led a 500 FTE supply center with annual net sales over 300 million Euro as the Head of Consumer Health and Pharma Product Supply Argentina within Bayer AG. Andy’s educational background includes a B.S. in Supply Chain Management from the Pennsylvania State University and an MBA from the University of North Carolina at Chapel Hill.

Emily Moran is Senior Vice President of Vector Manufacturing at Center for Breakthrough Medicines. She is an experienced leader in cell and gene therapy and biologics manufacturing with a focus on commercial readiness, industrialisation, and manufacturing stabilisation. She most recently served as Head of Viral Vector Manufacturing at Lonza in Houston, Texas.

Minimising costs and lead times, while also maximising supply security, is essential when choosing a cell culture media supplier; however, this must be balanced with robust quality standards, optimal consistency, and experienced support. This article outlines the key capabilities that developers should look for in a supplier to help achieve long-term manufacturing success.

Cell culture media is a significant driver of productivity within biopharmaceutical manufacturing processes. As it provides the essential components for cell growth and function, finding a suitable media formulation is a critical step during process development, and fundamental to achieving high titres and optimal product quality.

During media selection, there are two main choices for developers to consider: an off-the-shelf, catalogue product or a custom solution (either a customised catalogue product or a fully proprietary formulation). Although many media manufacturing considerations will be similar, if a custom solution is chosen, developers need to pay specific attention to how it will be manufactured at a commercial scale.

This can require finding a suitable external media manufacturer, as well as possibly qualifying a secondary or even tertiary supplier to proactively prevent any potential disruptions in supply. There are several options that developers utilising a custom solution can choose from—ranging from smaller, local suppliers to larger, global vendors with multiple manufacturing sites around the world.

When making this decision there are several factors that need to be considered, in particular cost and lead times. While it is desirable to minimise these factors where possible, it is essential to do so without compromising on quality and consistency standards, supply chain reliability, and even provisions for the protection of intellectual property (IP). Choosing a supplier that has the experience and capabilities to efficiently resolve any media-related challenges can also help facilitate long-term manufacturing success.

To determine if a supplier can provide the required support and help minimise risks, there are several key areas to assess, including specific capabilities, infrastructure, and processes. By choosing the right supplier, developers can establish a dependable media supply, supporting them to scale up with confidence and mitigate the risk of costly delays arising in the future.

While there are many areas to evaluate during the selection process, there are a few that are pivotal to long-term success. In particular, ensuring regulatory compliance and safeguarding IP should be primary considerations for all developers.

Adhering to regulatory requirements is not only crucial to protect patient safety, but also to avoid incurring costly fines for non-compliance. Consequently, developers should prioritise choosing a supplier that has accredited manufacturing facilities and can provide documentation to validate compliance. When choosing a global supplier, it is particularly important for developers to verify that the supplier can meet the relevant regulatory requirements of the countries they are operating in.

IP protection should be another fundamental consideration. Proprietary formulations and other key process and product details are valuable information and keeping them confidential is essential for developers to protect their innovations and achieve their commercial goals. As a result, a supplier’s data security infrastructure should be a key topic of discussion during the selection and qualification process. This should include a dedicated cybersecurity programme and associated policies designed to mitigate data risks and respond to any threats.

Given the foundational role of cell culture media in biopharmaceutical production, maintaining quality and consistency is vital—particularly as production volumes increase.

To support seamless scale-up, it is paramount that a supplier has the necessary infrastructure, facilities, and procedures in place to help ensure quality and consistency are prioritised at all scales. Without these systems, inconsistency can lead to several challenges for developers, including reduced productivity, wasted resources, and manufacturing delays. Although many different factors can give rise to media and process variability, by carefully evaluating four key areas, the risks can be greatly reduced, and challenges more quickly resolved.

Cell culture media formulations consist of a wide range of components that are sourced from suppliers around the world. As a result, maintaining the overall quality and consistency of media requires ensuring the quality of every raw material used. In particular, identifying the presence of trace element impurities should be prioritised. As key drivers of cell metabolism, even small amounts of trace elements, such as copper and manganese, can have detrimental effects on overall process productivity by impacting critical protein quality attributes. To minimise this risk, developers should look for a manufacturer that has an in-depth procurement programme and only sources raw materials from reputable and strictly qualified suppliers.

Once a supply of high-quality raw materials is secured, media suppliers should also have rigorous internal testing processes in place to help maintain quality standards. This includes measures such as accurately assessing all incoming raw materials. The use of techniques such as inductively coupled plasma mass spectrometry (ICP-MS) can help vendors identify and quantify any potential raw material impurities. In tandem with this, digital inventory management systems can be used to quickly highlight any variability. This can help enable the supplier’s quality teams to rapidly respond and prevent out-of-specification raw material lots from being introduced into the media manufacturing process.
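The incoming-material check described above can be sketched as a simple comparison of measured trace element concentrations against specification limits. The limits, lot identifiers, and measured values below are hypothetical placeholders; real limits would come from the supplier's raw material specifications.

```python
# Hypothetical specification limits in micrograms per gram (ug/g).
SPEC_LIMITS = {"copper": (0.0, 0.5), "manganese": (0.0, 0.2)}


def out_of_spec(lot_results, limits=SPEC_LIMITS):
    """Return {lot_id: [elements outside their limits]} for lots that fail screening."""
    failures = {}
    for lot_id, measured in lot_results.items():
        failed = [
            element
            for element, (low, high) in limits.items()
            if element in measured and not low <= measured[element] <= high
        ]
        if failed:
            failures[lot_id] = failed
    return failures


# Hypothetical ICP-MS results for two raw material lots.
lots = {
    "LOT-001": {"copper": 0.12, "manganese": 0.05},
    "LOT-002": {"copper": 0.73, "manganese": 0.04},
}
print(out_of_spec(lots))  # only LOT-002 is reported, for copper
```

A lot flagged this way would be held for review by the quality team rather than released into media manufacturing.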

Alongside routine raw material testing, analytical technology can also be leveraged to help optimise the media manufacturing process. Specifically, some suppliers will be able to offer services to help developers understand which trace elements their process or cell line is most sensitive to, and the optimal concentrations of these elements. Using these insights, developers can work with the supplier to implement proactive steps, such as dedicated raw material screening protocols, to control variability, lowering the risk of inconsistency and optimising productivity.

In addition to raw material impurities, contaminants can also be introduced into media during the manufacturing process itself. These unexpected contaminants are particularly critical to monitor as, in addition to causing process variability, they can also present potential safety concerns for the final biopharmaceutical product.


One potential source that should be considered is the contamination of animal origin-free (AOF) products with animal origin (AO) material. To reduce this risk, any sites that are manufacturing both AO and AOF products should have dedicated mitigation protocols in place. These should include measures such as maintaining a unidirectional flow of raw materials, material airlocks, integrated operating systems for real-time monitoring, and dedicated QC labs with separate material and waste flows.

Outside of AO/AOF cross-contamination, microbial testing is also central to controlling cell culture media quality—in particular, bioburden and endotoxin management. This is an area where choosing an established supplier can be advantageous, as it can leverage its expertise to detect and minimise endotoxin and bioburden risks. Developers should look for a supplier that can demonstrate that all raw materials are validated to their appropriate specifications and that the equipment utilised for production has been subjected to the necessary procedures. Diligent testing and sterilisation are especially crucial process steps that can help reduce the risk of variability-causing contamination being introduced.

3) Site-to-site equivalency

Alongside carefully evaluating a supplier’s quality systems and manufacturing protocols, considering the level of supply assurance it can provide is also essential. To reduce the risk of supply chain challenges and any associated costly production delays, it can be beneficial to choose a supplier with a multi-site network that has built-in manufacturing redundancy. However, it is key that all processes across its locations are harmonised to enable equivalent products to be manufactured at each site. Site harmonisation encompasses the entire production workflow, so there are several areas where equivalency should be implemented.

Within a harmonised site network, raw material management is key. As previously mentioned, inconsistent raw materials can have significant effects on media performance. To mitigate this risk, suppliers should implement equivalency in their raw material processes, harmonising both sourcing specifications and supplier review protocols.

Process and equipment equivalency is another area developers should verify with the supplier. In particular, suppliers should be able to demonstrate that manufacturing steps are mirrored, and that all manufacturing equipment can deliver comparable performance and is validated to the same standards. Quality assurance, control frameworks, and operating infrastructure should also be harmonised to help verify the same standards are achieved across locations. This includes utilising identical testing methods, equipment, cell lines, and specifications at each site.

Outside of media manufacturing, considering the overall cell culture knowledge and analytical capabilities of the supplier can also be advantageous. When issues such as batch-to-batch inconsistency arise during production it can often be difficult to identify the exact causes. However, an experienced vendor will often have the knowledge and the tools to carry out detailed investigations to help resolve any media-related challenges as efficiently as possible.

Support through manufacturing stability studies can also help give developers confidence in their formulation. Real-time stability testing of cGMP-manufactured media in the desired packaging configuration should be offered as a customisable service conducted under conditions and time points determined by the developer.

In addition to stability studies, an experienced media manufacturer may also be able to offer in-depth investigative analytical services to help developers resolve more complex challenges such as precipitates, extractables, and leachables. Using the outcomes of these investigations, developers can take steps to reduce the risk of similar challenges arising in the future, for example by modifying their formulation or optimising media storage.

From regulatory compliance and IP protection to raw material sourcing and site-to-site harmonisation, there are many considerations developers need to take into account when selecting a media manufacturer. By working with the supplier to carefully evaluate and validate each of these areas, developers can determine if the supplier can meet their needs and begin the process of building a long-term collaborative relationship.

Although the evaluation process can require an initial investment of time and money, this can often be offset by the considerable benefits of working with a dependable media manufacturer. Through supplying high-quality and consistent media, alongside dedicated and responsive support, an experienced supplier can help developers streamline scale-up and accelerate their speed to market. Moreover, using its knowledge and capabilities, the supplier can also help quickly resolve any challenges that arise during manufacturing, helping developers maintain commercial success into the future.

After completion of his PhD at the University of Kentucky and a postdoctoral appointment at UC Davis, Chad has had professional stints across the bioprocessing industry. As a Senior Product Manager at Thermo Fisher Scientific, Chad is responsible for the Gibco™ Efficient-Pro™ medium and feed system and the customer-owned formulation product line.

The top challenge today for life sciences and healthcare organisations is effectively extracting and operationalising information from complex sources for decision-making. Certara’s deep-learning platform and model-based meta-analysis services offer integrated solutions for researchers seeking to enhance their R&D programs by leveraging all available clinical trial data sources.

Matt Zierhut, Vice President, Integrated Drug Development, Certara

Nick Brown, Associate Director of Marketing, Certara

1. How can artificial intelligence (AI) and deep learning help in integrating and analysing data in clinical trials? Can you share any examples?

NICK: A tremendous amount of documentation is created throughout the clinical trial lifecycle. These documents include recruitment forms, trial summaries, scientific publications, clinical study reports, drug labels and post-marketing materials; all of which hold valuable data points that can influence future trials.

The challenge with these documents is that it’s incredibly time consuming to review and extract relevant data points from this content. That’s where AI comes in.

AI models such as generative pre-trained transformers (GPTs) and large language models (LLMs) are uniquely adept at searching and “understanding” complex content.

By applying these AI models to clinical trial documents, researchers can accelerate screening and data extraction workflows enabling them to collect highly-relevant information in a format that complements their analytics needs. For example, at Certara.AI we have a dataset creation tool that researchers use to take large corpuses of clinical trial documents and leverage AI to extract the relevant data points they need directly into a structured format. These newly structured datasets can then be used for expanding clinical outcomes databases or fed into visualisation tools to run a variety of different analyses.

2. How does deep learning benefit small compound analysis and generating new drugs? Can you highlight any advancements or breakthroughs in this area?

NICK: Just as LLMs and GPTs can be trained to “understand” language and then leveraged to analyse documents, they can also be trained on SMILES and SELFIES strings. SMILES and SELFIES are text strings of letters, numbers, and symbols that represent molecules and in simple terms can be considered the “language of compounds.”

Models trained on SMILES or SELFIES can perform exciting tasks such as property prediction and de novo compound generation, which complement the existing analytics workflows of chemists and discovery scientists. For example, the Certara.AI team is collaborating closely with our colleagues in medicinal chemistry to develop these models. To date we’ve successfully deployed models to help users predict the toxicity, blood-brain barrier permeability, and lipophilicity of structures, which can help them decide which structures to prioritise. In addition, we have made tremendous progress in models for de novo compound generation, which will deliver highly relevant structures to complement existing discovery workflows.
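To make the “language of compounds” idea concrete, the sketch below splits a SMILES string into tokens and maps them to integer IDs, which is the kind of input a language model is trained on. It is a simplified illustration using only the Python standard library: the token pattern covers common organic atoms, bonds, branches, and ring closures but not every feature of SMILES, and it is not a description of how Certara.AI's models are built.

```python
import re

# Simplified token pattern: bracket atoms, two-letter halogens, two-digit ring
# closures, single-letter atoms, ring-closure digits, then bond/branch symbols.
SMILES_TOKEN = re.compile(
    r"\[[^\]]+\]|Br|Cl|%\d{2}|[BCNOSPFIbcnosp]|[0-9]|[()=#\-+\\/.:@*~$]"
)


def tokenize(smiles):
    """Split a SMILES string into tokens, rejecting unrecognised characters."""
    tokens = SMILES_TOKEN.findall(smiles)
    if "".join(tokens) != smiles:
        raise ValueError(f"unrecognised characters in {smiles!r}")
    return tokens


def build_vocab(smiles_list):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for smiles in smiles_list:
        for token in tokenize(smiles):
            vocab.setdefault(token, len(vocab))
    return vocab


aspirin = "CC(=O)Oc1ccccc1C(=O)O"
vocab = build_vocab([aspirin])
print(tokenize(aspirin))
print([vocab[token] for token in tokenize(aspirin)])
```

Once molecules are encoded as ID sequences like this, the same sequence-modelling machinery used for text can be applied to tasks such as property prediction.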

NICK: Flexibility, data access and model training are all factors organisations should consider when selecting a deep learning platform for R&D in life sciences. The AI landscape is rapidly evolving, so having a platform with the flexibility to handle multiple types of models will enable your team to shift as new innovative models hit the market. Secondly, data access will always be a challenge in AI. A platform that allows you to securely connect your internal data and apply AI models to those assets enables you to create an expansive environment for leveraging AI across multiple data types and teams within your organisation. Last, but certainly not least, is the base training of the AI models. In many cases, AI models are trained on broad datasets that enable an expansive, but top-level, understanding of concepts. At Certara.AI, we focus on developing models that are trained specifically on life sciences content. This enables our customers to leverage these models with confidence, knowing that they understand the unique complexities of the life sciences industry.

MATT: Model-based meta-analysis utilises study results at the summary-level (aggregate data) to gain a deeper understanding of the landscape of both available treatments and treatments currently in development for any given indication or therapeutic area. MBMA is a broad term that can encompass almost all types of meta-analyses. These meta-analyses can range from simple pairwise meta-analyses of two treatments based on multiple similarly-designed studies (testing the same compounds), to network meta-analyses that compare multiple treatments using connected networks of studies (allowing for indirect comparisons of treatments without direct comparison data), and to full model-based meta-analyses that can add complex model structures to account for variability from dose-ranging data, longitudinal data, and data from studies with different populations or designs. MBMA can provide detailed context around any drug development program by enabling an appropriate comparison across all relevant trials, thus providing insight into the likelihood any drug in development would be able to successfully compete with other treatment options. This insight can be appreciated by all parties involved: sponsors, regulatory bodies, patients, and others.
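The simplest case mentioned above, a pairwise meta-analysis of summary-level results, can be sketched with fixed-effect inverse-variance pooling. This is a textbook illustration rather than Certara's methodology, and the study effects and standard errors are made-up numbers; a full MBMA would add model structure for dose, time, and population differences.

```python
import math


def pooled_effect(effects, standard_errors):
    """Fixed-effect inverse-variance pooling of study-level treatment effects."""
    weights = [1.0 / se**2 for se in standard_errors]  # more precise studies weigh more
    estimate = sum(w * e for w, e in zip(weights, effects)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return estimate, pooled_se


# Hypothetical treatment-versus-control differences from three studies.
effects = [-1.2, -0.8, -1.0]
standard_errors = [0.4, 0.5, 0.3]
estimate, se = pooled_effect(effects, standard_errors)
low, high = estimate - 1.96 * se, estimate + 1.96 * se
print(f"pooled effect {estimate:.2f} (95% CI {low:.2f} to {high:.2f})")
```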

5. How does MBMA help understand covariates in clinical trials? What methodologies are employed to unravel these factors and their implications for decision-making?

MATT: MBMA uses modeling techniques that are common to the fields of pharmacometrics and biostatistics to help explain variability in trial results that may be due to a variety of sources and factors. Usually, covariate effects are explored and described using additive, proportional, and power/exponential terms, but more complex functions can also be used. For example, to capture the influence of dose on outcome, a sigmoidal or Emax function can be applied. Exponential decay is also commonly used to explain changes in outcomes over time. Additionally, MBMA can capture the influence of both prognostic and predictive covariates, a distinction that is critical to development decisions. Prognostic covariates are factors that influence trial outcomes at the general treatment arm level, not relative to any other treatments. Prognostic covariates can be thought of as covariates that affect populations receiving placebo or control treatment, or they can help explain differences in disease progression. Predictive covariates, on the other hand, help to explain differences in relative treatment effects, and relative effects are ultimately what involved parties are interested in. The key question being: does this drug elicit a larger benefit than the control or competitor treatment?
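The dose and time functions named above can be written down in a few lines. The sketch below uses the standard sigmoidal Emax form and a simple exponential approach to a plateau; the parameter names and values are illustrative assumptions, not taken from any specific MBMA.

```python
import math


def emax_effect(dose, e0, emax, ed50, hill=1.0):
    """Sigmoidal Emax model: effect rises from e0 towards e0 + emax as dose increases."""
    if dose <= 0:
        return e0
    return e0 + emax * dose**hill / (ed50**hill + dose**hill)


def time_course(t, plateau, rate):
    """Effect at time t when the gap to the plateau decays exponentially."""
    return plateau * (1.0 - math.exp(-rate * t))


# At the ED50 dose, the drug effect is half of emax above baseline.
print(emax_effect(dose=10.0, e0=0.0, emax=100.0, ed50=10.0))  # 50.0
print(round(time_course(t=12.0, plateau=5.0, rate=0.3), 2))
```

In a model fit, parameters such as `ed50` or `rate` would be estimated from the trial data, and covariates could enter as additive or proportional adjustments to them.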

6. What limitations and considerations should researchers and stakeholders keep in mind when applying MBMA and AI-powered analytics? Are there potential biases, challenges in data interpretation, or regulatory considerations?

NICK: Data quality is one of the top considerations to keep in mind. MBMA requires highly specific datasets to ensure the accuracy of any statistical analyses taking place. As discussed earlier, AI can accelerate the creation of these datasets. However, it’s critical that validation workflows are in place to ensure AI predictions are accurate. This is a key focus for us at Certara AI. We’ve developed human-in-the-loop validation capabilities that enable users to review AI predictions and adjudicate their accuracy and relevance before the dataset is finalised and used by MBMA experts.

7. What are the common challenges in applying MBMA to gain insights into disease, trial characteristics, and investigational drugs? How does Certara overcome these challenges and develop innovative solutions?

MATT: Each MBMA project presents its own unique challenges; however, the most common challenges typically involve data availability. This could be high-level data availability like the number of trials that have published results in the indication of interest. MBMA in rare diseases is not frequently done due to the typically low number of drugs in development for a specific rare disease, although MBMA has been utilised in multiple rare disease cases; again, it depends on the unique case and questions being asked. Other data challenges can also exist in more popular indications that have many published trial results to work with. Trials in these indications may not always publish the same details around population or trial characteristics. If these missing characteristics are potentially influential to trial outcomes, analysts would have to choose between excluding these trials or imputing the missing data. The most common solution is data imputation, thus keeping trials with other potentially relevant information. However, sensitivity analyses are always conducted to ascertain the potential influence of data imputation techniques. Other data challenges can exist in how published results define important variables and even endpoints. If a common definition is not used across all studies, the definition may be a significant covariate itself. Finally, there are general challenges in transferring data from external (and internal) sources to an analysis-ready database, typically due to human error. These variables make it incredibly challenging to effectively collect data through manual efforts. Fortunately, advances in AI are a key solution. At Certara, we leverage advanced large language models trained on life science concepts to help our teams extract data from unstructured documents, identify semantically relevant content that is at risk of being overlooked, and deliver results in a structured spreadsheet. Automating this first step with AI allows our team of expert curators to focus on data quality. With a low-code AI validation tool, the team can more efficiently conduct QC workflows, enabling them to be more productive while mitigating some of the tedious tasks that often lead to human error.
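The choice between excluding trials and imputing missing characteristics, together with a sensitivity analysis, can be illustrated with a toy example. This is a minimal sketch and not Certara's workflow. The helper names and data are hypothetical, and a plain least-squares slope stands in for a full covariate model.

```python
from statistics import mean


def slope(xs, ys):
    """Ordinary least-squares slope of ys on xs."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx


def sensitivity(covariate, outcome):
    """Re-estimate the covariate slope under several missing-data strategies."""
    complete = [(x, y) for x, y in zip(covariate, outcome) if x is not None]
    observed = [x for x, _ in complete]
    # Strategy 1: exclude trials that did not report the covariate.
    results = {"exclude": slope(observed, [y for _, y in complete])}
    # Strategies 2-4: impute a single value, varied to probe its influence.
    fills = {"mean": mean(observed), "min": min(observed), "max": max(observed)}
    for name, fill in fills.items():
        imputed = [fill if x is None else x for x in covariate]
        results[name] = slope(imputed, outcome)
    return results


# Hypothetical trials: the third did not publish its baseline characteristic.
baseline = [20.0, 30.0, None, 40.0]
effect = [1.0, 2.0, 2.5, 3.0]
result = sensitivity(baseline, effect)
for strategy, value in result.items():
    print(f"{strategy}: slope = {value:.3f}")
```

If the estimate moves materially between strategies, the conclusion depends on the imputation and should be reported with that caveat.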

8. What are the benefits of conducting a meta-analysis by systematically gathering data from maintained databases? How does this approach enhance reliability and aid evidence-based decision-making in drug development?

MATT: As any modeller would (or should) tell you: garbage in, garbage out. The benefits of using systematically gathered data can be directly observed in the quality of the model output. Additionally, by maintaining these systematically developed databases, one can quickly jump into any new analysis within an indication that already has an existing database. If you can trust that existing databases accurately portray all the relevant and available information, you can not only quickly initiate work to inform critical decisions, but you can also be confident that those decisions were properly informed. The highest priority of the Data Science team at Certara is to develop and maintain accurate databases using a systematic review and quality control process.

9. How does Certara's advanced deep-learning platform benefit R&D programmes in life sciences and healthcare? Can you share success stories or use cases?

NICK: Certara’s AI analytics platform helps life science R&D teams solve two key challenges: applying LLMs and GPTs to structured and unstructured files, and making those multiple data sources searchable and accessible in a single platform. This combination improves collaboration and accelerates insight discovery that can inform a number of go/no-go decisions across the drug discovery pipeline.

As previously mentioned, so much of the data needed for R&D efforts resides in literature-based documents. The Certara AI platform's strength in analysing and bringing new value to this content enables it to be leveraged in a number of use cases. Examples include using LLMs to accelerate large-scale systematic literature reviews that inform trial design and development, using GPTs to assist in the creation of regulatory documents, and applying concept tagging to improve insight discovery and data standardisation.

10. How have AI-powered analytics improved decision-making in drug development through model-based meta-analysis?

MATT: Effective MBMA starts with high-quality data. The area where we see the greatest promise for AI to complement MBMA is the identification and collection of the relevant data needed to conduct analyses effectively. As mentioned earlier in this interview, much of the data we need to understand a clinical landscape comes from unstructured documents. AI’s ability to “comprehend” this content enables us to effectively identify relevant insights and curate more accurate datasets that can improve our understanding of the given area we’re studying.

11. What motivated Certara to incorporate deep learning expertise? How has this collaboration benefited Certara's clients and drug development initiatives? Can you share specific success stories?

NICK: AI holds tremendous promise in life sciences and drug discovery. Certara AI's focus area of AI development complements Certara’s product portfolio. By integrating this technology into the portfolio, we’re able to add new predictive and generative AI capabilities to drug discovery workflows, giving our clients easy access to insights that can improve go/no-go decisions.

12. What tools and services does Certara offer to help life science companies analyse and interpret data for drug development decisions? Can you provide examples of their impact on decision-making?

MATT: Certara offers many more tools and services than what we have discussed today. We are a full-service company that can improve drug development at all stages. To demonstrate our size and success, in each of the last nine years, 90 percent of new drug approvals by the U.S. Food and Drug Administration’s (FDA) Center for Drug Evaluation and Research (CDER) were received by Certara’s customers.

Focusing on Certara AI, Data Sciences, and MBMA consulting services, we offer essential tools to identify, curate, and analyse both public and proprietary data sources. Our recently developed and newly improved software is available to provide all available relevant information to the decision maker. This information could come in the form of a comprehensive database curated from multiple sources, or it could come in the form of simulations based on a model that was developed using the comprehensive database. Certara provides the tools and the recommendations to make better decisions.

MATT: Successful drug development depends on making wise decisions about portfolios, clinical trials, marketing, etc. We are continuously faced with the challenge of deciding whether to continue development or stop it. To support those decisions, we gather data, typically through clinical trials. We analyse the data from those clinical trials, and then we use these analyses to build models that we then use to predict what may happen in the next trial. The data collected from these in-house trials are “internal data” or “proprietary data.” Companies rarely share individual-level data, but they all publish most of their aggregate-level trial results.

Before publishing results from an internal study, sponsors are in a unique position where they have access to all published competitor data, and are the only ones with access to their own proprietary data. Certara utilises MBMA to put this proprietary data into the proper context of the published summary-level trial results, thus enabling the sponsor to make critical decisions before others have seen their new trial results. By fully understanding the landscape, now including their drug, they are able to make fully-informed decisions about the best next steps for their compound, whether it’s advancing to the next stage or shifting resources to a different compound, with a better predicted probability of success.

14. How does the integration of AI in Certara's CODEx platform enhance MBMA capabilities? In what ways does AI empower the analysis of complex datasets and enable informed decision-making in drug development?

NICK & MATT: High-quality clinical outcomes data is at the core of the CODEX platform. By adding AI into CODEX, we’re able to provide our customers with an intuitive solution for custom dataset curation that mixes our CODEX indication databases with other relevant data sources, including customers’ proprietary data. As a result, our customers can dynamically expand the datasets they’re analysing and quickly gain greater insights and results that impact their most critical drug development decisions.

Dr. Matt Zierhut advances the integration of external aggregate clinical trial data into development decisions and commercial and regulatory strategy via model-based meta-analysis (MBMA) at Certara. Matt works closely with clinical development teams to ensure MBMA is leveraged for optimal impact when making the most critical decisions. Previously, Matt was at Janssen (J&J), where for more than five years he led the development of their global MBMA capability and worked with many clinical development teams to ensure optimal impact of MBMA on decision-making in their programs.

https://www.certara.com/teams/matthew-zierhut/

Nick Brown is associate director of marketing at Certara AI. He manages customer and partner communication and engagement for the Layar platform and AI integrations across the Certara product portfolio. Nick has over 10 years of experience in AI marketing, where he focuses on educating a variety of audiences on complex technology across deep learning, AI and big data.

https://www.certara.com/teams/nick-brown/

leverage internal data to enhance research and development capabilities?

Current advancements in bioengineering, AI, supercomputing, and ML are reshaping the landscape of the pharmaceutical industry. They are anticipated to make an even greater impact and draw a new vision to guide pharmaceutical research and innovation in the coming years.

Qasem Ramadan, Alfaisal University

Rapid scientific and technological innovations have enabled us to manipulate complex living systems and expanded our understanding of human biology. Additionally, unprecedented investment in pharmaceutical research and development has enormously increased the identification of novel targets and their associated modalities. However, drug development is still a costly undertaking that involves a high risk of failure during clinical trials. The number of new molecular entities approved annually by the Food and Drug Administration (FDA) remains low, the likelihood of success continues to fall, and the number of drug withdrawals has shown a historic increase. Therefore, it is time to transform the traditional approaches to drug development to reduce development costs and allow medicines to reach patients faster. In addition, pharmaceutical companies are facing increasing public and political pressure to reduce the prices of drugs, which may imply lower returns. In response, large investments are directed towards translational research to find innovative tools that accelerate the drug discovery and development processes. The success of one drug would stimulate reinvestment of drug sales into the development of new drugs. This innovation cycle is therefore affected, positively or negatively, by the success or failure of the initial development of a drug [Saadi and White, 2014]. In fact, the impact of innovation on drug development within the pharmaceutical industry has not been fully realised in the same way as in other industries [https://druginnovation.eiu.com/, The Economist]. This can be attributed to three limiting factors: 1) technical constraints associated with a lack of understanding of the underlying science; 2) financial constraints caused by technical risk, which leads drug makers to favour safer investments and hence less innovation; 3) regulatory constraints, which make it difficult to approve new drugs due to uncertainty about new ways to combat disease.